Flink in Practice: Four Case Studies

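Part 1: Count each user's file create, update, and delete log entries within a 5-second window (the code uses Time.seconds(5); switch to Time.minutes(5) for a 5-minute window)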

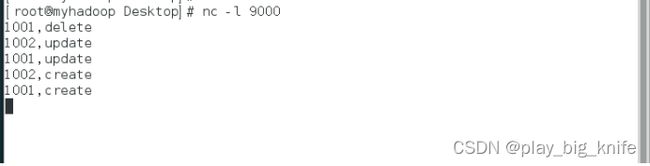

Input

1001,delete

1002,update

1001,create

1002,delete

Output

1001,2

1002,2

The code is as follows.

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.util.Collector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

public class TestRiZhiSum {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env=StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> lineds=env.socketTextStream("192.168.110.156",9000);

//Input is a String such as "1001,delete"; output is a tuple of its two string fields

lineds.flatMap((String line,Collector<Tuple2<String,String>> out)->{

String[] words=line.split(",");

out.collect(Tuple2.of(words[0],words[1]));

}).returns(Types.TUPLE(Types.STRING,Types.STRING))

.keyBy(ds->ds.f0)

.window(TumblingProcessingTimeWindows.of(Time.seconds(5)))

.process(new MyProcessWindow())

.print();

env.execute();

}

}

The code uses the window function MyProcessWindow, which counts each user's log entries in the window. It must extend ProcessWindowFunction and is implemented as follows.

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.util.Collector;

public class MyProcessWindow extends ProcessWindowFunction<Tuple2<String,String>,

Tuple2<String,Long>,String,TimeWindow>{

@Override

public void process(String s, Context context, Iterable<Tuple2<String,String>> iterable,

Collector<Tuple2<String,Long>> collector) throws Exception {

long count=0;

for(Tuple2<String,String> iter:iterable){

count+=1;

}

collector.collect(Tuple2.of(s,count));

}

}

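Note that ProcessWindowFunction takes four type parameters. The first is the input type, a Tuple2 whose two fields are String and String. The second is the output type, a Tuple2 of String and Long, where the String is the user and the Long is the resulting count. The third is the key type, and the last is the window type, here TimeWindow.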
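
To make the roles of the input, output, and key types more concrete, the following is a minimal plain-Java sketch, without any Flink dependency, of what the keyed window function computes for the records of one window. The class name `WindowCountSketch`, its method names, and the sample timestamp are hypothetical and exist only for illustration; in a real job Flink assigns records to windows and calls `process` once per key and window.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class WindowCountSketch {

    // Start of the tumbling window containing the timestamp (non-negative timestamps assumed).
    public static long windowStart(long timestampMillis, long sizeMillis) {
        return timestampMillis - (timestampMillis % sizeMillis);
    }

    // Counts "userId,action" lines per user, which is what iterating the
    // window's Iterable and incrementing a counter does for each key.
    public static Map<String, Long> countPerUser(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            counts.merge(fields[0], 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // The four sample records, assumed to fall into the same window.
        List<String> window = Arrays.asList(
                "1001,delete", "1002,update", "1001,create", "1002,delete");
        System.out.println(countPerUser(window));
        System.out.println(windowStart(7300L, 5000L));
    }
}
```

The sketch only reproduces the per-key count; it does not model triggers, state, or parallelism.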

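After starting the program, enter the records on the Linux host, as shown in the figure below.

The program then produces the following result.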

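Part 2: Compute the average order amount per user within a 5-second window (the code uses Time.seconds(5)). This requires the sum of each user's order amounts and the number of orders in the window; the average is the sum divided by the count.

Input:

1001,102.5

1002,98.4

1001,56.4

1002,101.2

Output

1001,79.45

1002,99.8

The code is as follows.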

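import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.util.Collector;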

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

public class AmWordCount {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> lineds = env.socketTextStream("192.168.110.156",

9000);

lineds.flatMap((String line, Collector<Tuple2<String, Double>> out) -> {

String[] words = line.split(",");

out.collect(Tuple2.of(words[0], Double.parseDouble(words[1])));

}).returns(Types.TUPLE(Types.STRING, Types.DOUBLE))

.keyBy(ds->ds.f0)

.window(TumblingProcessingTimeWindows.of(Time.seconds(5)))

.process(new MyAvgProcessFunction())

.print();

env.execute();

}

}

In the code, MyAvgProcessFunction computes the average. Its code is as follows.

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

public class MyAvgProcessFunction extends ProcessWindowFunction<Tuple2<String,Double>,

Tuple2<String,Double>,String,TimeWindow> {

@Override

public void process(String s, Context context, Iterable<Tuple2<String,Double>>

iterable, Collector<Tuple2<String,Double>> collector) throws Exception {

double sum=0;

double count=0;

for(Tuple2<String,Double> iter:iterable){

//The second field of the Tuple2 is the order amount as a double, so it can be summed

sum+=iter.f1;

count++;

}

collector.collect(Tuple2.of(s,sum/count));

}

}

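Note that ProcessWindowFunction takes four type parameters. The first is the input type, a Tuple2 whose two fields are String and Double; Double is used because order amounts are decimal values and their sum can be large. The second is the output type, a Tuple2 of String and Double, where the String is the user and the Double is the computed average. The third is the key type, and the last is the window type, here TimeWindow.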
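
As a cross-check of the arithmetic, here is a small plain-Java sketch (no Flink dependency) that applies the same sum-and-count logic to the four sample records, assuming they all land in one window. The class name `WindowAverageSketch` and its method are hypothetical. For user 1001 the mean is (102.5 + 56.4) / 2 = 79.45, and for user 1002 it is (98.4 + 101.2) / 2 = 99.8, up to floating-point rounding.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class WindowAverageSketch {

    // Returns the mean amount per user for the "userId,amount" lines of one window.
    public static Map<String, Double> averagePerUser(List<String> lines) {
        // Per-user accumulator: index 0 holds the running sum, index 1 the record count.
        Map<String, double[]> acc = new LinkedHashMap<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            double[] a = acc.computeIfAbsent(fields[0].trim(), k -> new double[2]);
            a[0] += Double.parseDouble(fields[1].trim());
            a[1] += 1;
        }
        Map<String, Double> result = new LinkedHashMap<>();
        for (Map.Entry<String, double[]> e : acc.entrySet()) {
            result.put(e.getKey(), e.getValue()[0] / e.getValue()[1]);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> window = Arrays.asList(
                "1001,102.5", "1002,98.4", "1001,56.4", "1002,101.2");
        averagePerUser(window).forEach(
                (user, avg) -> System.out.println(user + "," + avg));
    }
}
```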

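Part 3: Count the cars passing each traffic light within 5 seconds.

Counting the cars captured at each traffic light gives the number of cars that pass each light every 5 seconds, which supports traffic planning and control. Because of network delay, records can arrive out of order, so watermarks are used to reason about event order, and a side output collects the records that arrive too late.

The first column of the stream is the traffic light ID, the second is the number of cars, and the third is the event timestamp embedded in the record. The data format is as follows.

traffic light ID,car count,event timestamp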

1001,3,1000

1002,2,2000

A Java bean is needed to describe one traffic light reading.

The CarData class is that bean.

import lombok.Data;

import lombok.NoArgsConstructor;

import lombok.AllArgsConstructor;

@Data

@NoArgsConstructor

@AllArgsConstructor

public class CarData {

private String id;

private Integer count;

private long eventTime;

}

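This requires the Lombok library.

The traffic-light job also needs a window. The input is a String, and the map step turns it into CarData. The resulting CarData stream is then given timestamps and watermarks through assignTimestampsAndWatermarks. The watermark is deliberately held back by a fixed delay, which addresses out-of-order data: records that arrive within the delay can still be included in their window, so the result is more complete. When the watermark passes the end of a window, the window computation fires. Records that arrive after that are dropped by default, unless allowed lateness or a side output is configured, as is done later in this part.

forBoundedOutOfOrderness(Duration.ofSeconds(5))

does exactly this, with a delay of 5 seconds.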
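
The effect of a bounded out-of-orderness delay can be sketched in plain Java. The class below is a hypothetical illustration, not Flink's implementation: it tracks the largest event timestamp seen so far and reports a watermark of that maximum minus the delay minus 1 millisecond, which is my understanding of how Flink's bounded out-of-orderness generator behaves. The sample timestamps are made up.

```java
public class BoundedWatermarkSketch {

    private final long maxOutOfOrdernessMillis;
    private long maxTimestampSeen;

    public BoundedWatermarkSketch(long maxOutOfOrdernessMillis) {
        this.maxOutOfOrdernessMillis = maxOutOfOrdernessMillis;
        // Start low enough that the first watermark computation cannot underflow.
        this.maxTimestampSeen = Long.MIN_VALUE + maxOutOfOrdernessMillis + 1;
    }

    // Called for every record; an out-of-order (older) record never lowers the maximum.
    public void onEvent(long eventTimestampMillis) {
        maxTimestampSeen = Math.max(maxTimestampSeen, eventTimestampMillis);
    }

    // The watermark asserts that no record with a timestamp at or below it is still expected.
    public long currentWatermark() {
        return maxTimestampSeen - maxOutOfOrdernessMillis - 1;
    }

    public static void main(String[] args) {
        BoundedWatermarkSketch wm = new BoundedWatermarkSketch(5000L);
        long[] timestamps = {1000L, 7000L, 3000L, 12000L};
        for (long ts : timestamps) {
            wm.onEvent(ts);
            System.out.println("event=" + ts + " watermark=" + wm.currentWatermark());
        }
    }
}
```

With a 5-second delay, the record with timestamp 3000 arrives after the record with timestamp 7000 but is still ahead of the watermark (1999), so its window has not fired yet.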

The watermark is assigned as follows.

DataStream<CarData> watermarkDs=cardataStream.assignTimestampsAndWatermarks(

WatermarkStrategy.<CarData>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<CarData>(){

@Override

public long extractTimestamp(CarData carData, long l) {

return carData.getEventTime();

}

})

);

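A side output is also needed.

OutputTag<CarData> lateOutputTag=new OutputTag<CarData>("late-data"){};

Next, set up the window and the reduce function that processes it. A tumbling event-time window is used, so that the watermarks and the allowed lateness take effect; with a processing-time window they would be ignored.

The code is as follows.

SingleOutputStreamOperator<String> result=watermarkDs.keyBy(CarData::getId)

.window(TumblingEventTimeWindows.of(Time.seconds(5)))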

.allowedLateness(Time.seconds(3))

.sideOutputLateData(lateOutputTag)

.reduce(new MyReduceFunction(),new MyProcessOldFunction());

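The window's allowed lateness is set here with allowedLateness(Time.seconds(3)).

The reduce call takes two arguments. The first defines how the readings of one traffic light are aggregated, and the second defines how the aggregated result is output.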
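
How the watermark, the window end, and the allowed lateness interact can be sketched in plain Java. The example assumes event-time tumbling windows of 5 seconds and an allowed lateness of 3 seconds, and follows my understanding of Flink's rule that a window fires once the watermark reaches the window's last timestamp (end minus 1 ms) and is discarded once the watermark reaches that timestamp plus the allowed lateness. The class, enum, and method names are hypothetical.

```java
public class LatenessSketch {

    public enum Fate { ON_TIME, LATE_BUT_ACCEPTED, SIDE_OUTPUT }

    // Exclusive end of the tumbling window containing the timestamp (non-negative timestamps assumed).
    public static long windowEnd(long timestampMillis, long sizeMillis) {
        return timestampMillis - (timestampMillis % sizeMillis) + sizeMillis;
    }

    // Decides what happens to a record that arrives while the watermark has the given value.
    public static Fate classify(long eventTs, long watermark, long windowSize, long allowedLateness) {
        long maxTimestamp = windowEnd(eventTs, windowSize) - 1; // last timestamp inside the window
        if (watermark < maxTimestamp) {
            return Fate.ON_TIME;            // window has not fired yet
        }
        if (watermark < maxTimestamp + allowedLateness) {
            return Fate.LATE_BUT_ACCEPTED;  // window fired, but its state is still kept
        }
        return Fate.SIDE_OUTPUT;            // window state is gone; record goes to the late tag
    }

    public static void main(String[] args) {
        long size = 5000L;
        long lateness = 3000L;
        // A record with timestamp 4000 belongs to window [0, 5000).
        System.out.println(classify(4000L, 3000L, size, lateness));
        System.out.println(classify(4000L, 6000L, size, lateness));
        System.out.println(classify(4000L, 8000L, size, lateness));
    }
}
```

A record classified as late but accepted causes the window to fire again with an updated result, so downstream consumers may see more than one result for the same window.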

MyReduceFunction is as follows.

import org.apache.flink.api.common.functions.ReduceFunction;

public class MyReduceFunction implements ReduceFunction<CarData>{

@Override

public CarData reduce(CarData carData, CarData t1) throws Exception {

return new CarData(carData.getId(),carData.getCount()+t1.getCount(),carData.getEventTime());

}

}

MyProcessOldFunction is as follows.

import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

public class MyProcessOldFunction extends ProcessWindowFunction<CarData,String,String,TimeWindow>{

@Override

public void process(String s, Context context, Iterable<CarData> iterable, Collector<String> collector) throws Exception {

//After the reduce step the Iterable holds exactly one pre-aggregated element

CarData cardata=iterable.iterator().next();

collector.collect("Window("+context.window().getStart()+"~"+context.window().getEnd()+") result: "+s+":"+cardata.getCount());

}

}
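
The division of labour between the two functions can be sketched in plain Java: the reduce step folds records pairwise into a single running value, and the window step only formats that one value together with the window bounds. The `Reading` class is a hypothetical stand-in for CarData with just an ID and a count, and the window bounds are passed in by hand; none of this is Flink API.

```java
import java.util.Arrays;
import java.util.List;

public class ReduceThenProcessSketch {

    // Hypothetical, simplified stand-in for CarData.
    public static final class Reading {
        final String id;
        final int count;

        public Reading(String id, int count) {
            this.id = id;
            this.count = count;
        }
    }

    // Plays the role of the reduce function: merge two readings of the same light.
    public static Reading reduce(Reading a, Reading b) {
        return new Reading(a.id, a.count + b.count);
    }

    // Plays the role of the window function: it sees only the single reduced value.
    public static String format(long start, long end, Reading reduced) {
        return "Window(" + start + "~" + end + ") result: " + reduced.id + ":" + reduced.count;
    }

    // Folds a non-empty list of readings for one key and window, then formats the result.
    public static String aggregate(long start, long end, List<Reading> readings) {
        Reading acc = null;
        for (Reading r : readings) {
            acc = (acc == null) ? r : reduce(acc, r);
        }
        return format(start, end, acc);
    }

    public static void main(String[] args) {
        List<Reading> window = Arrays.asList(new Reading("1001", 3), new Reading("1001", 2));
        System.out.println(aggregate(0L, 5000L, window));
    }
}
```

Because only one running value is kept per key and window, this style avoids buffering every record until the window fires.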

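The complete main program for the traffic-light job is as follows.

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.OutputTag;

import java.time.Duration;

public class JiaoTongWordCount {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env=StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> lineds=env.socketTextStream("192.168.110.156",9000);

DataStream<CarData> cardataStream=lineds.map(new MapFunction<String,CarData>(){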

public CarData map(String s) throws Exception {

String[] words=s.split(",");

return new CarData(words[0],Integer.parseInt(words[1]),Long.parseLong(words[2]));

}

});

DataStream<CarData> watermarkDs=cardataStream.assignTimestampsAndWatermarks(

WatermarkStrategy.<CarData>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<CarData>(){

@Override

public long extractTimestamp(CarData carData, long l) {

return carData.getEventTime();

}

})

);

OutputTag<CarData> lateOutputTag=new OutputTag<CarData>("late-data"){};

SingleOutputStreamOperator<String> result=watermarkDs.keyBy(CarData::getId)

//Event-time window, so that watermarks and allowed lateness take effect

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.allowedLateness(Time.seconds(3))

.sideOutputLateData(lateOutputTag)

.reduce(new MyReduceFunction(),new MyProcessOldFunction());

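result.print();

//Print the records that arrived too late and were routed to the side output

result.getSideOutput(lateOutputTag).print("late");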

env.execute();

}

}

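Part 4: Use Flink SQL to compute each user's order total within 5 seconds

The result includes each user's order count and total order amount. Data may arrive late, so watermarks are used to handle the delay. An order has the fields order ID, user ID, order amount, and order time. The corresponding Java bean is as follows.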

import lombok.Data;

import lombok.NoArgsConstructor;

import lombok.AllArgsConstructor;

@Data

@NoArgsConstructor

@AllArgsConstructor

public class OrderA {

private String id;

private Integer userId;

private Integer money;

private Long createTime;

}

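At startup, the main program creates a StreamTableEnvironment on top of the StreamExecutionEnvironment so that the DataStream and Table APIs can work together. Note that useBlinkPlanner() belongs to older Flink releases; in later releases the Blink planner is the default and this call is deprecated or no longer available. The code is as follows.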

StreamExecutionEnvironment env=StreamExecutionEnvironment.getExecutionEnvironment();

EnvironmentSettings settings=EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

StreamTableEnvironment tableEnv=StreamTableEnvironment.create(env,settings);

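The example needs a data source. The addSource method registers a custom source that generates orders with the fields above. The code is as follows.

DataStreamSource<OrderA> wordStream=env.addSource(new RichSourceFunction<OrderA>(){

//volatile, because cancel() is called from a different thread than run()

private volatile boolean isRunning=true;

@Override

public void run(SourceContext<OrderA> sourceContext) throws Exception {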

Random random=new Random();

while(isRunning){

OrderA ordera=new OrderA(

UUID.randomUUID().toString(),

random.nextInt(5),

random.nextInt(200),

System.currentTimeMillis()

);

TimeUnit.SECONDS.sleep(1);

sourceContext.collect(ordera);

}

}

@Override

public void cancel() {

isRunning=false;

}

});

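The source sleeps for 1 second between orders, so it emits about one order per second.

Watermarks are assigned based on each order's creation time. The code is as follows.

DataStream<OrderA> watermarkds=wordStream.assignTimestampsAndWatermarks(

WatermarkStrategy.<OrderA>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<OrderA>(){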

@Override

public long extractTimestamp(OrderA ordera, long l) {

return ordera.getCreateTime();

}

})

);

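The stream is registered as a temporary view named t_order with createTemporaryView. The createTime field is declared as the event-time (rowtime) attribute so that it can be used in the window of the SQL query. The code is as follows.

tableEnv.createTemporaryView("t_order",watermarkds,

$("id"),

$("userId"),

$("money"),

$("createTime").rowtime()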

);

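Next, a SQL query computes the number of orders and the total amount for each user in every 5-second tumbling window.

The code is as follows.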

String sql="select userId,count(*) as totalCount,sum(money) as sumMoney from t_order group by userId,tumble(createTime,interval '5' second)";

Table table=tableEnv.sqlQuery(sql);

tableEnv.toRetractStream(table,Row.class).print();

env.execute();
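
What the query groups and aggregates can be sketched in plain Java, without Flink: every order is mapped to a group made of its user ID and the start of its 5-second tumbling window, and a count and a sum are kept per group. The class name `TumbleGroupBySketch`, the array layout of an order, and the sample values are assumptions made for this illustration only.

```java
import java.util.Map;
import java.util.TreeMap;

public class TumbleGroupBySketch {

    // Each order row is {userId, money, createTimeMillis} (layout assumed for this sketch).
    // Returns "userId@windowStart" -> {totalCount, sumMoney}.
    public static Map<String, long[]> aggregate(long[][] orders, long windowSizeMillis) {
        Map<String, long[]> result = new TreeMap<>();
        for (long[] order : orders) {
            // Same bucketing idea as tumble(createTime, interval '5' second).
            long windowStart = order[2] - (order[2] % windowSizeMillis);
            String groupKey = order[0] + "@" + windowStart;
            long[] acc = result.computeIfAbsent(groupKey, k -> new long[2]);
            acc[0] += 1;        // count(*)
            acc[1] += order[1]; // sum(money)
        }
        return result;
    }

    public static void main(String[] args) {
        long[][] orders = {
                {1, 50, 1000}, {1, 70, 4000}, {2, 30, 4500}, {1, 20, 6000}
        };
        aggregate(orders, 5000L).forEach((key, acc) ->
                System.out.println(key + " totalCount=" + acc[0] + " sumMoney=" + acc[1]));
    }
}
```

Unlike this batch-style loop, the streaming query emits each group's row only when the watermark passes the end of that window.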

The complete code is as follows.

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

import java.util.Random;

import java.util.UUID;

import java.util.concurrent.TimeUnit;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import java.time.Duration;

import org.apache.flink.table.api.Table;

import static org.apache.flink.table.api.Expressions.$;

import org.apache.flink.types.Row;

public class MyOrderWordCount {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env=StreamExecutionEnvironment.getExecutionEnvironment();

EnvironmentSettings settings=EnvironmentSettings.newInstance().useBlinkPlanner().inStreamingMode().build();

StreamTableEnvironment tableEnv=StreamTableEnvironment.create(env,settings);

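DataStreamSource<OrderA> wordStream=env.addSource(new RichSourceFunction<OrderA>(){

//volatile, because cancel() is called from a different thread than run()

private volatile boolean isRunning=true;

@Override

public void run(SourceContext<OrderA> sourceContext) throws Exception {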

Random random=new Random();

while(isRunning){

OrderA ordera=new OrderA(

UUID.randomUUID().toString(),

random.nextInt(5),

random.nextInt(200),

System.currentTimeMillis()

);

TimeUnit.SECONDS.sleep(1);

sourceContext.collect(ordera);

}

}

@Override

public void cancel() {

isRunning=false;

}

});

DataStream<OrderA> watermarkds=wordStream.assignTimestampsAndWatermarks(

WatermarkStrategy.<OrderA>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<OrderA>(){

@Override

public long extractTimestamp(OrderA ordera, long l) {

return ordera.getCreateTime();

}

})

);

tableEnv.createTemporaryView("t_order",watermarkds,

$("id"),

$("userId"),

$("money"),

//Declare createTime as the event-time attribute so that tumble() can use it

$("createTime").rowtime()

);

String sql="select userId,count(*) as totalCount,sum(money) " +

"as sumMoney from t_order group by userId,tumble(createTime,interval '5' second)";

Table table=tableEnv.sqlQuery(sql);

tableEnv.toRetractStream(table,Row.class).print();

env.execute();

}

}