Reproducing ResNet with PaddlePaddle

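Contents

- I. Introduction
- II. Overview of the Network
  - 1. Network Highlights
  - 2. Network Composition
  - 3. Network Architecture
- III. Reproducing the Paper
  - 3.1 Importing the Libraries
  - 3.2 Building the Basicblock
  - 3.3 Building the Bottleneckblock
  - 3.4 Building the ResNet Backbone
  - 3.5 Instantiating the Networks
- IV. Inspecting the Network Structure
- V. Testing Whether the Network Works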

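I. Introduction

ResNet (Residual Neural Network) was proposed by Kaiming He and three other Chinese researchers at Microsoft Research. Using residual units, they successfully trained a 152-layer neural network. It won ILSVRC 2015 with a top-5 error rate of 3.57% while having fewer parameters than VGGNet. The residual structure makes very deep networks considerably faster and easier to train, and it also improves accuracy substantially.

Paper: “Deep Residual Learning for Image Recognition”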

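II. Overview of the Network

1. Network Highlights

The network introduces the residual structure. This structure effectively alleviates the vanishing and exploding gradients that appear as networks get deeper. As a result, extremely deep networks become trainable; the paper even trains one with more than 1,000 layers on CIFAR-10.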
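The following is a toy scalar model for intuition, not a derivation from the paper. A residual block computes y = F(x) + x, so its local derivative is F'(x) + 1 instead of F'(x). The sketch multiplies 50 such local derivatives and compares how much gradient survives in a plain stack and in a residual stack. It assumes every layer's F'(x) equals the same small constant, 0.01.

```python
def plain_chain_grad(local_grad, depth):
    # Plain stack y = F_L(...F_1(x)): the chain rule multiplies F'(x) per layer
    g = 1.0
    for _ in range(depth):
        g *= local_grad
    return g


def residual_chain_grad(local_grad, depth):
    # Residual stack: each layer is y = F(x) + x, so its derivative is F'(x) + 1
    g = 1.0
    for _ in range(depth):
        g *= local_grad + 1.0
    return g


plain = plain_chain_grad(0.01, 50)
residual = residual_chain_grad(0.01, 50)
print(f"plain: {plain:.3e}  residual: {residual:.3f}")
```

The identity term stops the product from collapsing toward zero. Real networks are not scalar chains, so treat this only as intuition.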

2、网络构成

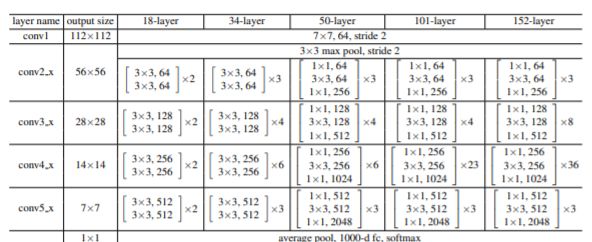

网络大体上由以下两种block构成:

Basicblock(用于resnet18,resnet34)

Bottleneckblock(用于resnet50,resnet101,resnet152)
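The paper motivates the bottleneck design by cost. A 1×1 → 3×3 → 1×1 block on 256-channel features costs about as much as a basic block on 64-channel features. The sketch counts convolution weights with the formula k × k × C_in × C_out. It assumes no bias terms and ignores BN parameters.

```python
def conv_weights(k, c_in, c_out):
    # Number of weights in a k x k convolution (bias ignored)
    return k * k * c_in * c_out


# Basic block on 64 channels: two 3x3 convs, 64 -> 64 -> 64
basic = conv_weights(3, 64, 64) + conv_weights(3, 64, 64)

# Bottleneck block on 256 channels: 1x1 reduce to 64, 3x3 at 64, 1x1 expand to 256
bottleneck = (conv_weights(1, 256, 64)
              + conv_weights(3, 64, 64)
              + conv_weights(1, 64, 256))

print(basic, bottleneck)
```

The bottleneck block handles features 4× wider with slightly fewer weights. This is why the deeper variants (50/101/152) use it.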

3. Network Architecture

III. Reproducing the Paper

3.1 Importing the Libraries

```python
import paddle
import paddle.nn as nn
from paddle.nn import Conv2D, MaxPool2D, AdaptiveAvgPool2D, Linear, ReLU, BatchNorm2D
import paddle.nn.functional as F
```

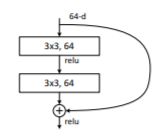

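3.2 Building the Basicblock

Details worth noting

A block may change the spatial size or the number of channels of the feature map. When it does, the shortcut must also transform its input, here with a 1×1 convolution followed by BN. The shortcut output then has exactly the same shape as the main branch, and the two can be added element-wise.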
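A 1×1 convolution with the same stride is enough to match the main branch. The standard output-size formula shows why: out = ⌊(H + 2p − k) / s⌋ + 1. The sketch compares two convolutions over a range of input sizes:

- the main branch's first 3×3 conv (stride 2, padding 1)
- the 1×1 shortcut conv (stride 2, padding 0)

Both always produce the same spatial size. The channel count is matched by giving the 1×1 conv out_channel filters.

```python
def conv_out(h, k, s, p):
    # Standard output size of a convolution / pooling window along one axis
    return (h + 2 * p - k) // s + 1


def sizes_match(h):
    main = conv_out(h, k=3, s=2, p=1)      # first 3x3 conv of the main branch
    shortcut = conv_out(h, k=1, s=2, p=0)  # 1x1 projection on the shortcut
    return main, shortcut


for h in (56, 28, 14, 7):
    print(h, sizes_match(h))
```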

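```python
class Basicblock(paddle.nn.Layer):
    """Two 3x3 convolutions plus a shortcut (used by ResNet-18/34)."""

    def __init__(self, in_channel, out_channel, stride=1):
        super(Basicblock, self).__init__()
        self.stride = stride
        # Main branch: 3x3 conv (may downsample) -> BN -> ReLU -> 3x3 conv -> BN
        self.conv0 = Conv2D(in_channel, out_channel, 3, stride=stride, padding=1)
        self.conv1 = Conv2D(out_channel, out_channel, 3, stride=1, padding=1)
        self.bn0 = BatchNorm2D(out_channel)
        self.bn1 = BatchNorm2D(out_channel)
        # Projection shortcut (1x1 conv + BN), only needed when the spatial
        # size or the channel count changes
        self.use_projection = stride != 1 or in_channel != out_channel
        if self.use_projection:
            self.conv2 = Conv2D(in_channel, out_channel, 1, stride=stride)
            self.bn2 = BatchNorm2D(out_channel)

    def forward(self, inputs):
        y = inputs
        x = F.relu(self.bn0(self.conv0(inputs)))
        x = self.bn1(self.conv1(x))
        if self.use_projection:
            y = self.bn2(self.conv2(y))
        # Add the shortcut, then apply the final ReLU
        z = F.relu(x + y)
        return z
```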

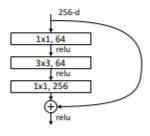

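3.3 Building the Bottleneckblock

Details worth noting

The same rule as for Basicblock applies. Whenever the shape changes, the shortcut uses a 1×1 convolution plus BN to match the main branch. In this implementation, start=True enables that projection for the first block of each stage.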
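One difference from Basicblock is worth spelling out. In a bottleneck network, even the first stage needs a projection shortcut. The stride there is 1, but the block's output has 4× the inner width (64 → 256 channels). The sketch applies the general rule "project when the stride is not 1 or the channel count changes" to the first block of each stage. The helper name `needs_projection` is hypothetical; it only mirrors the channel arithmetic used in this article.

```python
def needs_projection(in_ch, out_ch, stride):
    # The identity shortcut only works if the shapes already match
    return stride != 1 or in_ch != out_ch


# First block of each stage: (in_channels, out_channels, stride)
basic_first = [(64, 64, 1), (64, 128, 2), (128, 256, 2), (256, 512, 2)]
bottleneck_first = [(64, 256, 1), (256, 512, 2), (512, 1024, 2), (1024, 2048, 2)]

print([needs_projection(*cfg) for cfg in basic_first])
print([needs_projection(*cfg) for cfg in bottleneck_first])
```

This is why add_bottleneck_layer passes start=True for the first block of every stage, including the first one. The first basic stage, by contrast, can keep the identity shortcut.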

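```python
class Bottleneckblock(paddle.nn.Layer):
    """1x1 -> 3x3 -> 1x1 bottleneck block (used by ResNet-50/101/152).

    inplane: width of the inner (bottleneck) convolutions
    in_channel / out_channel: block input / output channels (out = 4 * inplane)
    start: True for the first block of a stage, which needs a projection shortcut
    """

    def __init__(self, inplane, in_channel, out_channel, stride=1, start=False):
        super(Bottleneckblock, self).__init__()
        self.stride = stride
        self.start = start
        # Main branch; the downsampling stride is applied by the first 1x1 conv
        self.conv0 = Conv2D(in_channel, inplane, 1, stride=stride)
        self.conv1 = Conv2D(inplane, inplane, 3, stride=1, padding=1)
        self.conv2 = Conv2D(inplane, out_channel, 1, stride=1)
        self.bn0 = BatchNorm2D(inplane)
        self.bn1 = BatchNorm2D(inplane)
        self.bn2 = BatchNorm2D(out_channel)
        # Projection shortcut, only created for the first block of each stage
        if start:
            self.conv3 = Conv2D(in_channel, out_channel, 1, stride=stride)
            self.bn3 = BatchNorm2D(out_channel)

    def forward(self, inputs):
        y = inputs
        x = F.relu(self.bn0(self.conv0(inputs)))
        x = F.relu(self.bn1(self.conv1(x)))
        x = self.bn2(self.conv2(x))
        if self.start:
            y = self.bn3(self.conv3(y))
        # Add the shortcut, then apply the final ReLU
        z = F.relu(x + y)
        return z
```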

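3.4 Building the ResNet Backbone

Details worth noting

1. Stages built from Basicblock:
   - The first stage keeps the channel count unchanged (64 in, 64 out).
   - In each later stage, the first block receives the previous stage's output. Its input therefore has half as many channels as its output (inplane // 2 → inplane), and it downsamples with stride 2.

2. Stages built from Bottleneckblock:
   - Every block outputs 4× its inner width (inplane * 4).
   - In the first stage, the first block's input and inner width are both 64, because the stem (the first convolution and max pooling) outputs 64 channels.
   - In each later stage, the first block's input is the previous stage's output, i.e. inplane * 2 channels.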
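The two rules above amount to a per-stage channel plan. The sketch uses a hypothetical helper, `stage_channels`, which is not part of the article's code. For each stage it returns the first block's input channels, the inner width, and the output channels, using the same arithmetic as add_basic_layer and add_bottleneck_layer.

```python
def stage_channels(bottleneck):
    widths = [64, 128, 256, 512]  # the `inplane` value passed to each stage
    plan = []
    in_ch = 64                    # channels after the stem (conv + max pool)
    for w in widths:
        out_ch = w * 4 if bottleneck else w
        plan.append((in_ch, w, out_ch))
        in_ch = out_ch            # the next stage consumes this stage's output
    return plan


print(stage_channels(bottleneck=False))
print(stage_channels(bottleneck=True))
```

The final feature size is 512 for ResNet-18/34 and 2048 for ResNet-50/101/152.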

```python
class Resnet(paddle.nn.Layer):
    def __init__(self, num, bottlenet):
        super(Resnet, self).__init__()
        # Stem: 7x7/2 conv -> BN -> ReLU -> 3x3/2 max pool (224x224 -> 56x56)
        self.conv0 = Conv2D(3, 64, 7, stride=2, padding=3)
        self.bn = BatchNorm2D(64)
        self.pool1 = MaxPool2D(3, stride=2, padding=1)
        if bottlenet:
            self.layer0 = self.add_bottleneck_layer(num[0], 64, start=True)
            self.layer1 = self.add_bottleneck_layer(num[1], 128)
            self.layer2 = self.add_bottleneck_layer(num[2], 256)
            self.layer3 = self.add_bottleneck_layer(num[3], 512)
        else:
            self.layer0 = self.add_basic_layer(num[0], 64, start=True)
            self.layer1 = self.add_basic_layer(num[1], 128)
            self.layer2 = self.add_basic_layer(num[2], 256)
            self.layer3 = self.add_basic_layer(num[3], 512)
        self.pool2 = AdaptiveAvgPool2D(output_size=(1, 1))

    def add_basic_layer(self, num, inplane, start=False):
        layer = []
        if start:
            # First stage: the input already has 64 channels, no downsampling
            layer.append(Basicblock(inplane, inplane))
        else:
            # Later stages: halve H and W, double the channels
            layer.append(Basicblock(inplane // 2, inplane, stride=2))
        for _ in range(num - 1):
            layer.append(Basicblock(inplane, inplane))
        return nn.Sequential(*layer)

    def add_bottleneck_layer(self, num, inplane, start=False):
        layer = []
        if start:
            # First stage: 64 -> 256 channels, no downsampling
            layer.append(Bottleneckblock(inplane, inplane, inplane * 4, start=True))
        else:
            # Later stages: the input is the previous stage's output (inplane * 2)
            layer.append(Bottleneckblock(inplane, inplane * 2, inplane * 4, stride=2, start=True))
        for _ in range(num - 1):
            layer.append(Bottleneckblock(inplane, inplane * 4, inplane * 4))
        return nn.Sequential(*layer)

    def forward(self, inputs):
        x = F.relu(self.bn(self.conv0(inputs)))
        x = self.pool1(x)
        x = self.layer0(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.pool2(x)
        # [N, C, 1, 1] -> [N, C]; keeps the batch axis even when N == 1
        x = paddle.flatten(x, start_axis=1)
        return x
```
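To check the spatial sizes, the sketch traces an input through the stem and the four stages using the output-size formula. It assumes the standard stem settings from the paper: a 7×7 conv with stride 2 and padding 3, then 3×3 max pooling with stride 2 and padding 1. Each of stages 2 to 4 downsamples once. A 3×3 stride-2 conv with padding 1 and a 1×1 stride-2 conv with padding 0 shrink the size identically, so the trace holds for both block types.

```python
def conv_out(h, k, s, p):
    # Standard output size of a convolution / pooling window along one axis
    return (h + 2 * p - k) // s + 1


def trace(h):
    sizes = []
    h = conv_out(h, k=7, s=2, p=3)   # stem conv
    sizes.append(h)
    h = conv_out(h, k=3, s=2, p=1)   # stem max pool
    sizes.append(h)
    sizes.append(h)                  # stage 1 keeps the size (stride 1)
    for _ in range(3):               # stages 2-4 each downsample once
        h = conv_out(h, k=3, s=2, p=1)
        sizes.append(h)
    return sizes


print(trace(224))
print(trace(28))
```

With a 224×224 input, the last stage outputs 7×7 maps, which AdaptiveAvgPool2D reduces to 1×1. A 28×28 input reaches 1×1 before the pooling, so it still runs but keeps little spatial detail.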

3.5 Instantiating the Networks

```python
# Number of blocks per stage for each standard depth
def resnet18():
    return Resnet([2, 2, 2, 2], bottlenet=False)


def resnet34():
    return Resnet([3, 4, 6, 3], bottlenet=False)


def resnet50():
    return Resnet([3, 4, 6, 3], bottlenet=True)


def resnet101():
    return Resnet([3, 4, 23, 3], bottlenet=True)


def resnet152():
    return Resnet([3, 8, 36, 3], bottlenet=True)
```
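The number in each model's name counts its weighted layers, excluding shortcut projections:

- the stem convolution
- the convolutions in every block (2 per Basicblock, 3 per Bottleneckblock)
- the final fully connected classifier, which the paper's models have and this article's backbone omits

The sketch checks the block lists above against that rule. `layer_count` and `CONFIGS` are hypothetical names used only for illustration.

```python
# depth -> (blocks per stage, convolutions per block)
CONFIGS = {
    18: ([2, 2, 2, 2], 2),
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}


def layer_count(blocks, convs_per_block):
    # 1 stem conv + convs inside the blocks + 1 fully connected layer
    return 1 + convs_per_block * sum(blocks) + 1


for depth, (blocks, convs) in CONFIGS.items():
    print(depth, layer_count(blocks, convs))
```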

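IV. Inspecting the Network Structure

```python
model = resnet18()
# Print a layer-by-layer summary for one 224x224 RGB image
paddle.summary(model, (1, 3, 224, 224))
```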

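V. Testing Whether the Network Works

```python
import paddle

# Random input: batch of 1, 3 channels, 28x28 pixels, values uniform in [-1, 1)
d = paddle.uniform(shape=[1, 3, 28, 28])
print(type(d))

# `model` is the resnet18() instance created in section IV
d = model(d)
print(d.shape)
print(d)
```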