Handwritten Digit Recognition on a Custom Dataset (PyTorch, training dataset available for download)

The code depends on the following libraries:

```python
# Libraries for building the network
import torch
import numpy as np
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

# Libraries for data processing
from torchvision import transforms, utils, datasets
from torch.utils.data import DataLoader
```

Dataset

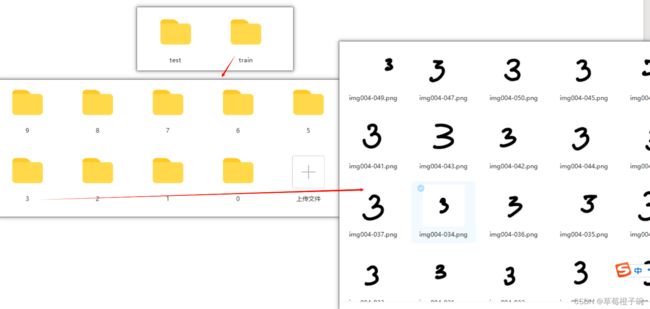

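The model is trained on a custom dataset. Each image is 1200×900 pixels (width × height) and shows a handwritten digit from 0 to 9, with 55 images per class. For each class, 50 images are used for training and 5 for testing, stored in the train and test folders respectively. Inside each of these folders, the name of every subfolder is the class label of the images it contains, so the data can be read directly with torchvision's ImageFolder class.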
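The post does not show how the 55 images per class were divided. A minimal sketch, assuming a hypothetical source folder `raw/` with one subfolder per digit, copies the first 50 files of each class (in sorted order) into `train/<digit>/` and the remaining 5 into `test/<digit>/`. It then mimics how ImageFolder labels classes: subfolder names are sorted and numbered from 0. The helpers `split_dataset` and `class_to_idx` and the demo file names are illustrative, not from the original.

```python
import os
import shutil
import tempfile


def split_dataset(src_root, dst_root, n_train=50):
    """Hypothetical helper: copy the first n_train files of each class folder
    into dst_root/train/<label>/ and the rest into dst_root/test/<label>/."""
    for label in sorted(os.listdir(src_root)):
        files = sorted(os.listdir(os.path.join(src_root, label)))
        for split, chunk in (("train", files[:n_train]), ("test", files[n_train:])):
            out_dir = os.path.join(dst_root, split, label)
            os.makedirs(out_dir, exist_ok=True)
            for name in chunk:
                shutil.copy(os.path.join(src_root, label, name), out_dir)


def class_to_idx(root):
    """Mimic ImageFolder's labelling: sorted subfolder names mapped to 0..N-1."""
    classes = sorted(d.name for d in os.scandir(root) if d.is_dir())
    return {name: i for i, name in enumerate(classes)}


# Demo: empty placeholder files stand in for the 55 images of each digit
tmp = tempfile.mkdtemp()
raw = os.path.join(tmp, "raw")
for digit in range(10):
    os.makedirs(os.path.join(raw, str(digit)))
    for k in range(55):
        open(os.path.join(raw, str(digit), f"{k:02d}.png"), "w").close()

split_dataset(raw, tmp)
print(class_to_idx(os.path.join(tmp, "train")))
```

Because the folder names are the single characters "0" to "9", sorting them yields the same order as the digits, so each label index equals the digit it represents. With folder names such as "10", string sorting would no longer match numeric order.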


Dataset on Baidu Netdisk:

Link: https://pan.baidu.com/s/1XU9QFB1VhkPIVydECO0CoQ

Extraction code: czr0

Network Model

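The model is a fairly conventional CNN with three convolutional layers, three pooling layers, and three fully connected layers (the first two followed by batch normalization and dropout). The model code is shown below.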

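```python
# Define the network model
class CNNnet(nn.Module):
    def __init__(self):
        super(CNNnet, self).__init__()
        # Three conv + max-pool stages without padding; each stage shrinks the feature map
        self.layer1 = nn.Sequential(nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=2), nn.MaxPool2d(kernel_size=5, stride=3))
        self.layer2 = nn.Sequential(nn.Conv2d(in_channels=16, out_channels=36, kernel_size=5, stride=2), nn.MaxPool2d(kernel_size=3, stride=2))
        self.layer3 = nn.Sequential(nn.Conv2d(in_channels=36, out_channels=64, kernel_size=3, stride=2), nn.MaxPool2d(kernel_size=3, stride=2))
        # For a 900x1200 (H x W) input, layer3 outputs 64 channels of size 8x11
        self.fc1 = nn.Linear(64*8*11, 1024)
        self.bn1 = nn.BatchNorm1d(1024)
        self.fc2 = nn.Linear(1024, 128)
        self.bn2 = nn.BatchNorm1d(128)
        self.out = nn.Linear(128, 10)

    def forward(self, x):
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        x = F.relu(self.layer3(x))
        x = x.view(x.size(0), -1)  # flatten to [B, 64*8*11]
        x = F.relu(self.bn1(self.fc1(x)))
        # training=self.training switches dropout off after model.eval()
        x = F.dropout(x, p=0.5, training=self.training)
        x = F.relu(self.bn2(self.fc2(x)))
        x = F.dropout(x, p=0.5, training=self.training)
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally
        return self.out(x)
```

The network structure as displayed by TensorBoard is shown below. Here input is an image tensor in PyTorch's standard [B, C, H, W] layout: B is the batch size of each training (or test) step, C is the number of channels (all images are standard 3-channel RGB), and H and W are the image height and width in pixels. The figure expands layer2 to show its convolution and pooling layers.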
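The input size of fc1, `64*8*11`, follows from the standard output-size formula for `Conv2d` and `MaxPool2d` with no padding and dilation 1: out = floor((n − kernel_size) / stride) + 1, applied along each spatial axis. The pure-Python sketch below assumes a 900×1200 (height × width) input, as implied by the batch shapes given in the preprocessing section, and replays the six layers; the names `out_size`, `LAYERS` and `feature_map_size` are illustrative.

```python
def out_size(n, kernel, stride, padding=0):
    """Length of one spatial axis after Conv2d/MaxPool2d (dilation=1, ceil_mode=False)."""
    return (n + 2 * padding - kernel) // stride + 1


# (kernel_size, stride) of each conv and pool layer in CNNnet, in forward order
LAYERS = [
    (5, 2), (5, 3),  # layer1: Conv2d, MaxPool2d
    (5, 2), (3, 2),  # layer2: Conv2d, MaxPool2d
    (3, 2), (3, 2),  # layer3: Conv2d, MaxPool2d
]


def feature_map_size(height, width):
    """Spatial size of layer3's output (ReLU does not change the size)."""
    for kernel, stride in LAYERS:
        height = out_size(height, kernel, stride)
        width = out_size(width, kernel, stride)
    return height, width


h, w = feature_map_size(900, 1200)
print(h, w, 64 * h * w)  # 8 11 5632
```

If the images are resized (for example with `transforms.Resize`), the same function gives the new flattened size, and fc1's first argument has to change with it; otherwise fc1 fails with a matrix-shape mismatch.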


Data Preprocessing

Before the images can be fed to the GPU for training, they must be converted to tensors. torchvision provides convenient, ready-made transforms for this.

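```python
# Define the transforms: convert to tensor, then normalize each channel
my_trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])

# Load the datasets; each subfolder name becomes a class label
train_dataset = datasets.ImageFolder(train_path, transform=my_trans)
test_dataset = datasets.ImageFolder(test_path, transform=my_trans)

# Wrap them in DataLoaders, which yield batches of (images, labels)
train_boarder = DataLoader(train_dataset, batch_size=5, shuffle=True)
test_boarder = DataLoader(test_dataset, batch_size=2)
```

transforms.Compose() chains several processing steps into one; transforms.ToTensor() converts an image into a float tensor with values in [0, 1], and transforms.Normalize() standardizes each channel with the given mean and standard deviation;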

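train_path and test_path are the absolute paths of the train and test folders mentioned above;

the training batch size is set to 5 and the test batch size to 2;

each batch from train_boarder has shape [5, 3, 900, 1200];

each batch from test_boarder has shape [2, 3, 900, 1200].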
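ToTensor scales 8-bit pixel values from 0..255 to [0, 1], and Normalize then computes (x − mean) / std for each channel, so mean = std = 0.5 maps every channel to [−1, 1]. The plain-Python sketch below reproduces that arithmetic for single pixel values and also counts the batches per epoch, assuming 500 training and 50 test images (50 and 5 per class, as described above); the helper names are illustrative.

```python
import math


def to_tensor_value(pixel):
    """ToTensor's scaling of an 8-bit pixel value to [0, 1]."""
    return pixel / 255.0


def normalize(x, mean=0.5, std=0.5):
    """Normalize's per-channel formula: (x - mean) / std."""
    return (x - mean) / std


for p in (0, 255):
    print(p, normalize(to_tensor_value(p)))  # 0 maps to -1.0, 255 maps to 1.0

# A DataLoader with drop_last=False yields ceil(N / batch_size) batches per epoch
train_batches = math.ceil(500 / 5)
test_batches = math.ceil(50 / 2)
print(train_batches, test_batches)
```

Both splits divide evenly by their batch sizes, so every batch has the full size listed above and no smaller final batch appears.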

Selecting the Training Device

The variable device holds the device used for training: the GPU if the machine has one, otherwise the CPU.

```python
# Use the GPU if one is available
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print(device)
```

Initializing the Network Model

Instantiate the model class defined above (a subclass of nn.Module) and move it to the device.

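```python
# Build the network model (the class name must match the definition: CNNnet)
model = CNNnet()
model.to(device)
```

Defining the Loss Function and Optimizer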

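Cross-entropy is used as the loss function and stochastic gradient descent (SGD) as the optimizer, with a learning rate of 0.01 and a momentum of 0.7.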
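To make these two choices concrete, here is a framework-free sketch of what they compute. nn.CrossEntropyLoss combines log-softmax and negative log-likelihood, so it expects raw scores (logits); if softmax is already applied inside forward, the scores are effectively softmaxed twice, which puts a floor under the loss (about 1.46 for 10 classes, even for a perfect one-hot prediction). torch.optim.SGD with momentum keeps a velocity buffer, v ← μ·v + g, and updates p ← p − lr·v. The toy loss f(p) = p² and all helper names are illustrative assumptions, not part of the original code.

```python
import math


def cross_entropy(logits, target):
    """-log(softmax(logits)[target]), using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def sgd_momentum(grad_fn, p, lr=0.01, momentum=0.7, steps=100):
    """SGD with momentum in torch.optim.SGD's form (dampening=0, nesterov=False)."""
    v = 0.0
    for _ in range(steps):
        g = grad_fn(p)
        v = momentum * v + g  # velocity buffer
        p = p - lr * v        # parameter update
    return p


# Loss on raw logits vs. on softmax outputs (softmax applied twice)
logits = [4.0, 0.0, 0.0]
loss_logits = cross_entropy(logits, 0)
loss_double = cross_entropy(softmax(logits), 0)
print(round(loss_logits, 3), round(loss_double, 3))

# Lowest reachable loss when a one-hot "probability" vector over 10 classes is fed in
floor = cross_entropy([1.0] + [0.0] * 9, 0)
print(round(floor, 3))

# Minimise the toy loss f(p) = p**2 (gradient 2p) from p = 5 with the post's lr
p_momentum = sgd_momentum(lambda p: 2 * p, 5.0)
p_plain = sgd_momentum(lambda p: 2 * p, 5.0, momentum=0.0)
print(p_momentum, p_plain)
```

On this toy problem momentum 0.7 brings the parameter much closer to the minimum in 100 steps than plain SGD with the same learning rate.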

```python
# Define the loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.7)
```

Training the Model

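The number of epochs, epoch_num, is set to 50;

train_loss_all and train_acc_all hold the average training loss and accuracy of each epoch;

test_loss_all and test_acc_all hold the average test loss and accuracy of each epoch;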
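The loop below reports accuracy as the mean of per-batch accuracies, which equals the overall accuracy only when all batches have the same size (true here, since 500/5 and 50/2 divide evenly). A plain-Python sketch of the argmax-and-compare step, mirroring what `out.max(1)` and `(pred == label).sum()` do, on two made-up batches; the scores, labels and helper names are hypothetical.

```python
def argmax(row):
    return max(range(len(row)), key=lambda i: row[i])


def batch_accuracy(scores, labels):
    """Fraction of rows whose highest score sits at the true label."""
    preds = [argmax(r) for r in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Two hypothetical batches of size 2 over 3 classes
batches = [
    ([[2.0, 0.1, 0.3], [0.2, 1.5, 0.1]], [0, 1]),  # both predictions correct
    ([[0.9, 0.8, 0.1], [0.3, 0.2, 0.4]], [1, 2]),  # one prediction correct
]
per_batch = [batch_accuracy(s, y) for s, y in batches]
mean_of_batches = sum(per_batch) / len(per_batch)  # what the training loop reports
total_correct = sum(acc * len(y) for acc, (_, y) in zip(per_batch, batches))
overall = total_correct / sum(len(y) for _, y in batches)
print(per_batch, mean_of_batches, overall)
```

With unequal batch sizes (for example a smaller last batch), the two numbers differ, and counting correct predictions over the whole set is the more reliable metric.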

```python
# Start training
epoch_num = 50
for epoch in range(epoch_num):
    # Training mode (affects layers such as BatchNorm and Dropout)
    model.train()
    train_loss = 0
    train_acc = 0
    for img, label in train_boarder:
        img = img.to(device)
        label = label.to(device)
        # Forward pass
        out = model(img)
        loss = criterion(out, label)
        # Backward pass and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        # Record training loss and accuracy
        train_loss += loss.item()
        _, pred = out.max(1)
        correct_num = (pred == label).sum().item()
        train_acc += correct_num / img.size(0)
    train_acc_all = train_acc / len(train_boarder)
    train_loss_all = train_loss / len(train_boarder)

    # Evaluation mode: BatchNorm uses its running statistics
    model.eval()
    test_loss = 0
    test_acc = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for img, label in test_boarder:
            img = img.to(device)
            label = label.to(device)
            # Forward prediction
            out = model(img)
            loss = criterion(out, label)
            # Record test loss and accuracy
            test_loss += loss.item()
            _, pred = out.max(1)
            correct_num = (pred == label).sum().item()
            test_acc += correct_num / img.size(0)
    test_loss_all = test_loss / len(test_boarder)
    test_acc_all = test_acc / len(test_boarder)

    print("epoch:{}, train_loss:{:.4f}, train_acc:{:.4f}, test_loss:{:.4f}, test_acc:{:.4f}".format(
        epoch, train_loss_all, train_acc_all, test_loss_all, test_acc_all))
```

Results

The printed output after 50 epochs is as follows:

After the 50th epoch the model reaches an accuracy of 0.84 on the test set, which is a fairly good result.


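However, the accuracy on the training set reaches 0.9180, noticeably higher than on the test set. This gap indicates that the model overfits, which is where further optimization of the network could start.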

Complete Code

```python
# Libraries for building the network
import torch
import numpy as np
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

# Libraries for data processing
from torchvision import transforms, utils, datasets
from torch.utils.data import DataLoader

# Visualization
import matplotlib.pyplot as plt

# Absolute paths of the training and test sets
train_path = r"D:\deep_learning\12_13_pre_data\train"
test_path = r"D:\deep_learning\12_13_pre_data\test"

# Define the transforms
my_trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])

# Load the datasets
train_dataset = datasets.ImageFolder(train_path, transform=my_trans)
test_dataset = datasets.ImageFolder(test_path, transform=my_trans)

# Wrap them in DataLoaders
train_boarder = DataLoader(train_dataset, batch_size=5, shuffle=True)
test_boarder = DataLoader(test_dataset, batch_size=2)

# Define the network model
class CNNnet(nn.Module):
    def __init__(self):
        super(CNNnet, self).__init__()
        self.layer1 = nn.Sequential(nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=2), nn.MaxPool2d(kernel_size=5, stride=3))
        self.layer2 = nn.Sequential(nn.Conv2d(in_channels=16, out_channels=36, kernel_size=5, stride=2), nn.MaxPool2d(kernel_size=3, stride=2))
        self.layer3 = nn.Sequential(nn.Conv2d(in_channels=36, out_channels=64, kernel_size=3, stride=2), nn.MaxPool2d(kernel_size=3, stride=2))
        self.fc1 = nn.Linear(64*8*11, 1024)
        self.bn1 = nn.BatchNorm1d(1024)
        self.fc2 = nn.Linear(1024, 128)
        self.bn2 = nn.BatchNorm1d(128)
        self.out = nn.Linear(128, 10)

    def forward(self, x):
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        x = F.relu(self.layer3(x))
        x = x.view(x.size(0), -1)
        x = F.relu(self.bn1(self.fc1(x)))
        x = F.dropout(x, p=0.5, training=self.training)
        x = F.relu(self.bn2(self.fc2(x)))
        x = F.dropout(x, p=0.5, training=self.training)
        return self.out(x)  # raw logits for nn.CrossEntropyLoss

# Use the GPU if one is available
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print(device)

# Build the network model
model = CNNnet()
model.to(device)

# Define the loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.7)

# Start training
epoch_num = 50
for epoch in range(epoch_num):
    # Training mode
    model.train()
    train_loss = 0
    train_acc = 0
    for img, label in train_boarder:
        img = img.to(device)
        label = label.to(device)
        out = model(img)  # forward pass
        loss = criterion(out, label)
        optimizer.zero_grad()  # backward pass and update
        loss.backward()
        optimizer.step()
        train_loss += loss.item()
        _, pred = out.max(1)
        train_acc += (pred == label).sum().item() / img.size(0)
    train_acc_all = train_acc / len(train_boarder)
    train_loss_all = train_loss / len(train_boarder)

    # Evaluation mode
    model.eval()
    test_loss = 0
    test_acc = 0
    with torch.no_grad():
        for img, label in test_boarder:
            img = img.to(device)
            label = label.to(device)
            out = model(img)
            loss = criterion(out, label)
            test_loss += loss.item()
            _, pred = out.max(1)
            test_acc += (pred == label).sum().item() / img.size(0)
    test_loss_all = test_loss / len(test_boarder)
    test_acc_all = test_acc / len(test_boarder)

    print("epoch:{}, train_loss:{:.4f}, train_acc:{:.4f}, test_loss:{:.4f}, test_acc:{:.4f}".format(
        epoch, train_loss_all, train_acc_all, test_loss_all, test_acc_all))
```

Extension: Visualizing the Training Process

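For visualizing the network model and the data recorded during training, TensorBoard offers many options: you write logs during training and then view them as charts in a browser. For reasons of space this post does not cover it; see the PyTorch tutorial at https://pytorch.org/tutorials/recipes/recipes/tensorboard_with_pytorch.html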

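If you would like to know how to apply TensorBoard to the training data in this post, send me a private message; I may write a follow-up post on visualizing models with TensorBoard.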

This post instead uses pyplot from matplotlib to plot the recorded data directly, which requires the following imports:

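```python
# Data visualization
import matplotlib.pyplot as plt
# NumPy, used here for np.arange
import numpy as np
```

Before the training loop, initialize lists that store the values recorded at each epoch: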

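```python
# Record training loss and accuracy
train_acc_list = []
train_loss_list = []
# Record test loss and accuracy
test_acc_list = []
test_loss_list = []
```

At the end of each epoch (inside the for epoch loop, after the test metrics are computed), append the computed values to the corresponding lists: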

```python
# Record training values
train_acc_list.append(train_acc_all)
train_loss_list.append(train_loss_all)
# Record test values
test_acc_list.append(test_acc_all)
test_loss_list.append(test_loss_all)
```

After the training loop ends, add the following code:

```python
plt.plot(np.arange(len(train_loss_list)), train_loss_list, label='train_loss', color='gray')
plt.plot(np.arange(len(train_acc_list)), train_acc_list, label='train_acc', color='red')
plt.plot(np.arange(len(test_loss_list)), test_loss_list, label='test_loss', color='yellow')
plt.plot(np.arange(len(test_acc_list)), test_acc_list, label='test_acc', color='c')
plt.legend()
plt.show()
```

The result is shown below:

Comments and discussion are welcome!