2024-01-04 用llama.cpp部署本地llama2-7b大模型

点击

用llama.cpp部署本地llama2-7b大模型

- 前言

- 一、下载`llama.cpp`以及`llama2-7B`模型文件

- 二、具体调用

- 总结

使用协议: License to use Creative Commons Zero - CC0

该图片个人及商用免费,无需显示归属,但如果您能提供一个链接指向 Hippopx 的话,我们将不胜感激

前言

要解决问题: 使用一个准工业级大模型, 进行部署, 测试, 了解基本使用方法.

想到的思路: llama.cpp, 不必依赖显卡硬件平台. 目前最亲民的大模型基本就是llama2了, 并且开源配套的部署方案已经比较成熟了.

其它的补充: 干就行了.

一、下载llama.cpp以及llama2-7B模型文件

llama.cpp开源社区, 目前只有一个问题, 就是网络, 如果你不能连接github, 那么就不用往下看了.

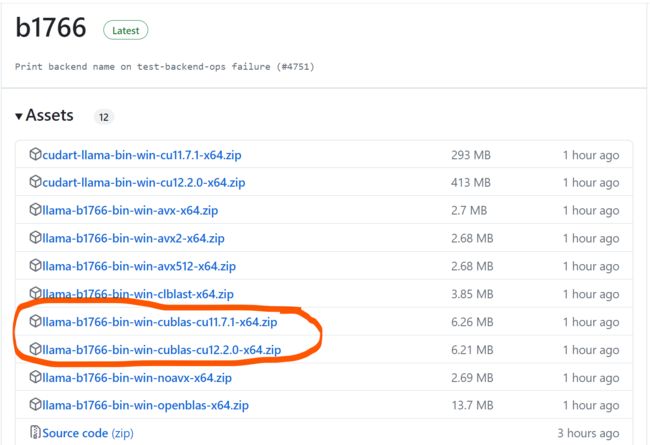

从网站下载最新的Releases包, 解压即可.

我是用比较笨的方法, 下载源代码编译的, 这个比较抽象, 如果运气好, CMAKE可以很快构建,

如果运气不好, 那没什么办法, 玩C++不是请客吃饭, 有时候就要经受一些debug折磨,

通常没事不要挑战自己, 有现成编译好的, 就用现成的, 我是想看看它怎么实现, 其实也是徒劳, 但有点好处, 就是有问题, 可以尝试搞一下, 比如模型格式转换,

能上梯子的, 可以去官方https://huggingface.co/meta-llama/Llama-2-7b下载, 不能登梯子的, 去阿里https://www.modelscope.cn/home魔塔社区, 搜一下llama2-7B, 注意模型格式务必是gguf, ggml将陆续不再被支持.

二、具体调用

因为只是单机运行, 所以部署这个大词儿, 我下面就直接换成调用了.

llama.cpp的官方文档中说:

Plain C/C++ implementation without dependencies

Apple silicon first-class citizen - optimized via ARM NEON, Accelerate and Metal frameworks

AVX, AVX2 and AVX512 support for x86 architectures

Mixed F16 / F32 precision

2-bit, 3-bit, 4-bit, 5-bit, 6-bit and 8-bit integer quantization support

CUDA, Metal and OpenCL GPU backend support

纯C++实现, 无需其它依赖, 要知道, 当初我为了调用whisper可是足足下了6个多G的依赖, 并且被Windows平台整放弃了, 不得不转投Linux才整好, 国内的网络环境, 搞这么多东西, 你知道我是用了多少时间.

苹果系统不熟, 就不吹了, X86还是可以的, 不依赖显卡, 但像AVX这样的CPU加速指令集基本都支持, 效果并不慢, 尤其对于不那么大的大模型.

支持量化模型, 也就是说, 你可以省硬盘和内存, 不至于跑不起来, 但是效果稍微差那么一丁点, 又不是不能用对吧.

另外, 其实还是支持CUDA的, 这个在你确定自己的机器符合要求的情况, 可以下载对应的版本,

至于cuda的环境建立, 那是比本文难上一个量级的东西, 自己去搞吧.

现在假定你已经完成了下载, 并且已经跃跃欲试了, 请执行如下命令

main.exe -m models\7B\ggml-model.gguf --prompt "Once upon a time"

main是llama.cpp的执行程序, 你如果自编译大概是这个名, 用社区提供的可执行文件可能是llama.cpp.exe, 不重要, 你知道的.

-m选项是引入模型, 不要有中文路径, 如果不清楚相对路径, 就使用绝对路径.

--prompt 是提示词, 这个就不用我多说了, 就是给大模型开个头, 然后它给你编故事.

类似:

system_info: n_threads = 8 / 16 | AVX = 1 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS =

1 | SSE3 = 1 | VSX = 0 |

sampling: repeat_last_n = 64, repeat_penalty = 1.100000, presence_penalty = 0.000000, frequency_penalty = 0.000000, top_k = 40, tfs_z = 1.000000, top_p = 0.950000, typical_p = 1.000000, temp = 0.800000, mirostat = 0, mirostat_lr = 0.100000, mirostat_ent = 5.000000

generate: n_ctx = 512, n_batch = 512, n_predict = -1, n_keep = 0

Once upon a time, I was sitting in my living room when the thought struck me:

“I’m going to make a list of 100 books everyone should read. références, and put them up here.”

Then it occurred to me that there were other lists out there already, so I decided I needed to come up with something more original.

Thus was born my 100 Best Novels list, which you can find on my old blog.

That list was a lot of fun but I eventually realized the problem with having a best-of list: it presumes you’re only going to read one book by any given author or that any particular novel is universally regarded as a masterpiece in every culture.

This doesn’t even take into account the fact that there are many authors who have written a lot of books, and I wasn’t interested in recommending only a single work by each of them.

下一步就是研究如何优化prompt了, 如果你有源码, 会发现, 官方提供了十分友好的prompt示例, 比如:

chat-with-bob.txt

Transcript of a dialog, where the User interacts with an Assistant named Bob. Bob is helpful, kind, honest, good at writing, and never fails to answer the User's requests immediately and with precision.

User: Hello, Bob.

Bob: Hello. How may I help you today?

User: Please tell me the largest city in Europe.

Bob: Sure. The largest city in Europe is Moscow, the capital of Russia.

User:

配合如下命令:

E:\clangC++\llama\llama-b1715-bin-win-avx-x64\llama.cpp.exe -m D:\bigModel\llama-2-7b.ggmlv3.q4_0.gguf -c 512 -b 1024 -n 256 --keep 48 --repeat_penalty 1.0 --color -i -r "User:" -f E:\clangC++\llama\llama.cpp-master\prompts\chat-with-bob.txt

你将获得chat版对话模型:

system_info: n_threads = 8 / 16 | AVX = 1 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 0 | ARM_FMA = 0 | F16C = 0 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 0 | SSSE3 = 0 | VSX = 0 |

main: interactive mode on.

Reverse prompt: 'User:'

sampling:

repeat_last_n = 64, repeat_penalty = 1.000, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 40, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.800

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

sampling order:

CFG -> Penalties -> top_k -> tfs_z -> typical_p -> top_p -> min_p -> temp

generate: n_ctx = 512, n_batch = 1024, n_predict = 256, n_keep = 48

== Running in interactive mode. ==

- Press Ctrl+C to interject at any time.

- Press Return to return control to LLaMa.

- To return control without starting a new line, end your input with '/'.

- If you want to submit another line, end your input with '\'.

Transcript of a dialog, where the User interacts with an Assistant named Bob. Bob is helpful, kind, honest, good at writing, and never fails to answer the User's requests immediately and with precision.

User: Hello, Bob.

Bob: Hello. How may I help you today?

User: Please tell me the largest city in Europe.

Bob: Sure. The largest city in Europe is Moscow, the capital of Russia.

User: please sing a song.

Bob: I am sorry. I am not a singing Assistant, but I can write you a song.

User:

注意, 模型根据prompt设定, 是一个助理, 善于写作, 友善而诚实, 会耐心的回答你的问题.

这个还是满重要的, 我有一回没有使用这些约束, 结果就出了点少儿不宜的东西, 当然, 只是擦边文字, 不过, 如果你在给领导或给学生演示, 就尴尬了.

当然, 这个模型真的不大, 基本也只能限于普通的短对话, 至于辅助编程, 辅助编故事, 还是差点意思.

毕竟如果自己搞两天就能媲美chatGPT, 那谷歌微软就要哭晕在厕所了.

当然, 除了7b的还有13b的以及70b的, 关键是就算知道大的好, 问题是真的跑不动, 硬件确实差点意思, 有这钱, 直接GPT4不好么.

总结

现在AI是如火如荼, 傻子都知道这是风口, 但不用多少智商, 也应该知道, 自己烧大模型, 纯属扯淡, 还是让一线公司开源, 咱们跟着玩玩吧, 如果对这方面足够了解, 可以试试用自己的数据进行微调, 但这个话题, 本文作者并不会, 就不瞎唠叨了.

点击