Adding a Custom FFmpeg Protocol to Read an Android InputStream

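Preface

Background:

1. FFmpeg commands cannot read files in an Android app's assets directory, because assets are packaged inside the APK and have no ordinary file path;

2. On Android Q (Android 10) and above, scoped storage means FFmpeg has no permission to read files outside the app directly by path.

Possible solutions:

1. Copy the file into the app's own directory first (the drawback is the extra copy, which takes a long time for large files);

2. Implement a custom FFmpeg protocol that calls back into the Android layer and reads the data through an InputStream. The InputStream can come from assets or from a Uri.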
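Option 1 boils down to a plain stream copy. A minimal sketch, assuming the caller already has an InputStream (for example from AssetManager.open() or ContentResolver.openInputStream()) and a destination File inside the app's own directory. CopyToLocalFile and copy() are hypothetical names, not part of the original project:

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper for option 1: materialise a stream as a local file FFmpeg can open by path.
final class CopyToLocalFile {
    private CopyToLocalFile() {}

    /** Copies the whole stream into dest, closes both streams and returns the number of bytes written. */
    static long copy(InputStream in, File dest) throws IOException {
        byte[] buf = new byte[64 * 1024];
        long total = 0;
        try (InputStream src = in; OutputStream out = new FileOutputStream(dest)) {
            int n;
            while ((n = src.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
            }
        }
        return total;
    }
}
```

Every byte has to be written to storage before FFmpeg can even start, which is exactly the cost option 2 avoids by letting FFmpeg pull data on demand.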

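I. Implementing the custom protocol in FFmpeg

In practice this means implementing FFmpeg's URLProtocol (defined in ffmpeg/libavformat/url.h). FFmpeg already implements many protocols internally, for example (see ffmpeg/libavformat/protocols.c):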

extern const URLProtocol ff_file_protocol;
extern const URLProtocol ff_ftp_protocol;
...
extern const URLProtocol ff_hls_protocol;
extern const URLProtocol ff_http_protocol;
extern const URLProtocol ff_https_protocol;
...
extern const URLProtocol ff_rtmp_protocol;
extern const URLProtocol ff_rtp_protocol;

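Using the implementation of ff_file_protocol (in ffmpeg/libavformat/file.c) as a reference, we implement a custom protocol called asp (android_stream_protocol). Since only reading is required, only the following callbacks are needed: open, read, seek (which also answers AVSEEK_SIZE queries for the total size) and close: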

const URLProtocol ff_asp_protocol = {
    .name            = "asp",
    .url_open        = asp_open,   /* asp_open() has the 3-argument url_open signature, not url_open2 */
    .url_read        = asp_read,
    .url_seek        = asp_seek,
    .url_close       = asp_close,
    .priv_data_size  = sizeof(ASPContext),
    .priv_data_class = &asp_context_class
};

Create android_stream_protocol.c in the ffmpeg/libavformat directory with the following implementation:

#include ...

...

/* StreamProtocol methods called from native code */
static const struct FFJniField jni_stream_protocol_mapping[] = {
    ...
    { ASP_CLASS_PATH, "<init>", "()V", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_init), 1 },
    { ASP_CLASS_PATH, "open", "(Ljava/lang/String;)I", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_open), 1 },
    { ASP_CLASS_PATH, "getSize", "()J", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_getSize), 1 },
    { ASP_CLASS_PATH, "read", "(Ljava/nio/ByteBuffer;II)I", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_read), 1 },
    { ASP_CLASS_PATH, "seek", "(JI)I", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_seek), 1 },
    { ASP_CLASS_PATH, "close", "()V", FF_JNI_METHOD, offsetof(struct JNIStreamProtocolFields, jmd_close), 1 },
    { NULL }
};

/* ByteBuffer.allocateDirect(), used to allocate the buffer shared with Java in asp_read() */
static const struct FFJniField jni_byte_buffer_mapping[] = {
    { "java/nio/ByteBuffer", NULL, NULL, FF_JNI_CLASS, offsetof(struct JNIByteBufferFields, class_byte_buffer), 1 },
    { "java/nio/ByteBuffer", "allocateDirect", "(I)Ljava/nio/ByteBuffer;", FF_JNI_STATIC_METHOD, offsetof(struct JNIByteBufferFields, jmd_s_allocate_direct), 1 },
    { NULL }
};

static int asp_open(URLContext *h, const char *filename, int flags)
{
    ASPContext *context = h->priv_data;
    int ret = AVERROR_EXTERNAL;
    JNIEnv *env = NULL;
    jobject object = NULL;
    jstring file_uri = NULL;

    /* strip the protocol prefix: "asp:assets://test.mp4" -> "assets://test.mp4" */
    av_strstart(filename, "asp:", &filename);

    env = ff_jni_get_env(context);
    if (!env) {
        goto exit;
    }

    if (ff_jni_init_jfields(env, &context->jfields, jni_stream_protocol_mapping, 1, context) < 0) {
        goto exit;
    }
    if (ff_jni_init_jfields(env, &context->j_buff_fields, jni_byte_buffer_mapping, 1, context) < 0) {
        goto exit;
    }

    /* new StreamProtocol(), kept as a global reference until asp_close() */
    object = (*env)->NewObject(env, context->jfields.class_streamprotocol, context->jfields.jmd_init);
    if (!object) {
        goto exit;
    }
    context->obj_stream_protocol = (*env)->NewGlobalRef(env, object);
    if (!context->obj_stream_protocol) {
        goto exit;
    }

    file_uri = ff_jni_utf_chars_to_jstring(env, filename, context);
    if (!file_uri) {
        goto exit;
    }

    ret = (*env)->CallIntMethod(env, context->obj_stream_protocol, context->jfields.jmd_open, file_uri);
    if (ret != 0) {
        ret = AVERROR(EIO);
        goto exit;
    }

    /* a negative size means "unknown"; asp_seek() reports it for AVSEEK_SIZE */
    context->media_size = (*env)->CallLongMethod(env, context->obj_stream_protocol, context->jfields.jmd_getSize);
    if (context->media_size < 0) {
        context->media_size = -1;
    }

    ret = 0;

exit:
    if (object) {
        (*env)->DeleteLocalRef(env, object);
    }
    if (file_uri) {
        (*env)->DeleteLocalRef(env, file_uri);
    }
    if (ret < 0 && env) {
        /* url_close is not called when the open fails, so release everything here */
        if (context->obj_stream_protocol) {
            (*env)->DeleteGlobalRef(env, context->obj_stream_protocol);
            context->obj_stream_protocol = NULL;
        }
        ff_jni_reset_jfields(env, &context->jfields, jni_stream_protocol_mapping, 1, context);
        ff_jni_reset_jfields(env, &context->j_buff_fields, jni_byte_buffer_mapping, 1, context);
    }
    return ret;
}

/* Returns a direct ByteBuffer holding at least new_capacity bytes. The cached buffer is reused
 * while it is large enough; otherwise it is replaced by one at least twice as large. */
static jobject get_jbuffer_with_check_capacity(URLContext *h, int new_capacity)
{
    JNIEnv *env = NULL;
    ASPContext *context = h->priv_data;
    jobject local_obj;

    if (context->obj_direct_buf && context->jbuffer_capacity >= new_capacity) {
        return context->obj_direct_buf;
    }

    new_capacity = FFMAX(new_capacity, context->jbuffer_capacity * 2);

    env = ff_jni_get_env(context);
    if (!env) {
        return NULL;
    }

    if (context->obj_direct_buf) {
        (*env)->DeleteGlobalRef(env, context->obj_direct_buf);
        /* clear the stale reference so a failed allocation below cannot cause a double delete */
        context->obj_direct_buf = NULL;
        context->jbuffer_capacity = 0;
    }

    local_obj = (*env)->CallStaticObjectMethod(env, context->j_buff_fields.class_byte_buffer,
                                               context->j_buff_fields.jmd_s_allocate_direct, new_capacity);
    if (!local_obj) {
        return NULL;
    }

    context->obj_direct_buf = (*env)->NewGlobalRef(env, local_obj);
    context->jbuffer_capacity = context->obj_direct_buf ? new_capacity : 0;
    (*env)->DeleteLocalRef(env, local_obj);

    return context->obj_direct_buf;
}

static int asp_read(URLContext *h, unsigned char *buf, int size)
{
    ASPContext *context = h->priv_data;
    int ret = AVERROR_EXTERNAL;
    JNIEnv *env = NULL;
    jobject jbuffer = NULL;
    void *p_buf_data;

    env = ff_jni_get_env(context);
    if (!env) {
        goto exit;
    }

    jbuffer = get_jbuffer_with_check_capacity(h, size);
    if (!jbuffer) {
        ret = AVERROR(ENOMEM);
        goto exit;
    }

    /* Java returns the number of bytes read, 0 at end of stream, or a negative error */
    ret = (*env)->CallIntMethod(env, context->obj_stream_protocol, context->jfields.jmd_read, jbuffer, 0, size);
    if (ret < 0 || ret > size) {
        ret = AVERROR(EIO);
        goto exit;
    }
    if (ret == 0) {
        ret = AVERROR_EOF;
        goto exit;
    }

    p_buf_data = (*env)->GetDirectBufferAddress(env, jbuffer);
    if (!p_buf_data) {
        ret = AVERROR(EIO);
        goto exit;
    }
    memcpy(buf, p_buf_data, ret);

exit:
    return ret;
}

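static int64_t asp_seek(URLContext *h, int64_t pos, int whence)
{
    ASPContext *context = h->priv_data;
    JNIEnv *env = NULL;
    int ret;

    /* AVSEEK_SIZE only asks for the total size; nothing is moved */
    if (whence == AVSEEK_SIZE) {
        return context->media_size;
    }
    /* SEEK_END cannot be resolved while the size is unknown */
    if (whence == SEEK_END && context->media_size < 0) {
        return AVERROR(ENOSYS);
    }

    env = ff_jni_get_env(context);
    if (!env) {
        return AVERROR_EXTERNAL;
    }

    ret = (*env)->CallIntMethod(env, context->obj_stream_protocol, context->jfields.jmd_seek, pos, whence);
    if (ret != 0) {
        return AVERROR(EIO);
    }

    /* url_seek must return the new absolute offset; the Java side only reports success */
    switch (whence) {
    case SEEK_SET: return pos;
    case SEEK_END: return context->media_size + pos;
    default:       return 0; /* SEEK_CUR: the offset is not tracked on the native side */
    }
}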

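static int asp_close(URLContext *h)
{
    ASPContext *context = h->priv_data;
    JNIEnv *env = NULL;

    env = ff_jni_get_env(context);
    if (!env) {
        return AVERROR_EXTERNAL;
    }

    if (context->obj_stream_protocol) {
        (*env)->CallVoidMethod(env, context->obj_stream_protocol, context->jfields.jmd_close);
        (*env)->DeleteGlobalRef(env, context->obj_stream_protocol);
        context->obj_stream_protocol = NULL;
    }
    if (context->obj_direct_buf) {
        (*env)->DeleteGlobalRef(env, context->obj_direct_buf);
        context->obj_direct_buf = NULL;
        context->jbuffer_capacity = 0;
    }

    /* release the global class references taken by ff_jni_init_jfields() in asp_open() */
    ff_jni_reset_jfields(env, &context->jfields, jni_stream_protocol_mapping, 1, context);
    ff_jni_reset_jfields(env, &context->j_buff_fields, jni_byte_buffer_mapping, 1, context);

    return 0;
}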

const URLProtocol ff_asp_protocol = {
    .name            = "asp",
    .url_open        = asp_open,   /* 3-argument open callback, so url_open rather than url_open2 */
    .url_read        = asp_read,
    .url_seek        = asp_seek,
    .url_close       = asp_close,
    .priv_data_size  = sizeof(ASPContext),
    .priv_data_class = &asp_context_class
};

#endif /* CONFIG_ASP_PROTOCOL */

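asp_open, asp_read, asp_seek and asp_close use JNI to call the corresponding methods of the Android-side implementation, which performs the actual InputStream operations. The upper-layer interface IStreamProtocol.java is defined as follows: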

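package com.alan.ffmpegjni4android.protocols;

import java.nio.ByteBuffer;

import androidx.annotation.Keep;

/**
 * Author: AlanWang4523.
 * Date: 2020/11/3 17:36.
 */
// @Keep stops R8/ProGuard from renaming or removing members that are only called from native code
@Keep
public interface IStreamProtocol {
    int SUCCESS = 0;
    int ERROR_OPEN = -1;
    int ERROR_GET_SIZE = -2;
    int ERROR_READ = -3;
    int ERROR_SEEK = -4;

    // opens the stream behind uriString (the URL without the "asp:" prefix)
    @Keep
    int open(String uriString);

    // total size in bytes, or a negative value if unknown
    @Keep
    long getSize();

    // reads up to size bytes into buffer starting at offset and returns the number of bytes read
    @Keep
    int read(ByteBuffer buffer, int offset, int size);

    // moves to a new position; whence uses the SEEK_SET / SEEK_CUR / SEEK_END values
    @Keep
    int seek(long position, int whence);

    @Keep
    void close();
}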
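Since native code only ever talks to this interface, any class that honours the contract can serve as an asp: source. As an illustration (a sketch, not part of the original project), here is a hypothetical ByteArrayStreamProtocol that serves a byte array and can be unit-tested on a plain JVM. The interface is re-declared without the androidx @Keep annotations so the snippet compiles off-device. read() returns -1 at end of stream, like InputStream.read(); in the article it is the StreamProtocol proxy that turns that -1 into the 0 expected by asp_read().

```java
import java.nio.ByteBuffer;

// Trimmed copy of IStreamProtocol without the androidx @Keep annotations, so this compiles on a plain JVM.
interface IStreamProtocol {
    int SUCCESS = 0;
    int ERROR_OPEN = -1;
    int ERROR_GET_SIZE = -2;
    int ERROR_READ = -3;
    int ERROR_SEEK = -4;

    int open(String uriString);
    long getSize();
    int read(ByteBuffer buffer, int offset, int size);
    int seek(long position, int whence);
    void close();
}

// Hypothetical in-memory implementation that serves a byte[] through the same contract.
final class ByteArrayStreamProtocol implements IStreamProtocol {
    private static final int SEEK_SET = 0;
    private static final int SEEK_CUR = 1;
    private static final int SEEK_END = 2;

    private final byte[] data;
    private long pos = -1; // -1 means "not open"

    ByteArrayStreamProtocol(byte[] data) {
        this.data = data;
    }

    @Override
    public int open(String uriString) {
        pos = 0;
        return SUCCESS;
    }

    @Override
    public long getSize() {
        return data.length;
    }

    @Override
    public int read(ByteBuffer buffer, int offset, int size) {
        if (pos < 0) {
            return ERROR_READ;
        }
        if (pos >= data.length) {
            return -1; // end of stream, same convention as InputStream.read()
        }
        int n = (int) Math.min(size, data.length - pos);
        buffer.clear();
        buffer.position(offset);
        buffer.put(data, (int) pos, n);
        pos += n;
        return n;
    }

    @Override
    public int seek(long position, int whence) {
        if (pos < 0) {
            return ERROR_SEEK;
        }
        long target;
        switch (whence) {
            case SEEK_SET: target = position; break;
            case SEEK_CUR: target = pos + position; break;
            case SEEK_END: target = data.length + position; break; // lseek() semantics
            default: return ERROR_SEEK;
        }
        if (target < 0 || target > data.length) {
            return ERROR_SEEK;
        }
        pos = target;
        return SUCCESS;
    }

    @Override
    public void close() {
        pos = -1;
    }
}
```

With this contract, seek(-1, 2) on a five-byte source positions the stream on its last byte, and the following read() returns 1.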

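II. Build configuration

- Declare the custom protocol in ffmpeg/libavformat/protocols.c (configure scans the extern declarations in this file to build its protocol list, which is what makes --enable-protocol=asp and CONFIG_ASP_PROTOCOL available):

extern const URLProtocol ff_asp_protocol;

- Add android_stream_protocol.c to the build in ffmpeg/libavformat/Makefile (I use FFmpeg n4.0.2, where this is around line 618):

OBJS-$(CONFIG_ASP_PROTOCOL) += android_stream_protocol.o

- Enable the custom protocol when configuring the build (in the configure options of build_ffmpeg.sh). The protocol also relies on FFmpeg's JNI helpers (ff_jni_* in libavcodec/ffjni.c), which are only compiled with --enable-jni:

./configure \
    ... \
    --disable-protocols \
    --enable-protocol=file \
    --enable-protocol=asp \
    ...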

III. Android-side implementation

StreamProtocol.java is the protocol proxy; it is the class that the custom protocol implemented in FFmpeg calls into:

package com.alan.ffmpegjni4android.protocols;

import android.util.Log;

import java.nio.ByteBuffer;

import androidx.annotation.Keep;

/**
 * Author: AlanWang4523.
 * Date: 2020/11/3 17:34.
 */
@Keep
public class StreamProtocol implements IStreamProtocol {
    private static final String TAG = StreamProtocol.class.getSimpleName();

    // concrete protocol chosen from the URI in open(): asset, content Uri or plain file
    private IStreamProtocol streamProtocol;

    @Keep
    @Override
    public int open(String uriString) {
        Log.d(TAG, "open()---->>" + uriString);
        streamProtocol = StreamProtocolFactory.create(uriString);
        if (streamProtocol == null) {
            return ERROR_OPEN;
        }
        return streamProtocol.open(uriString);
    }

    @Keep
    @Override
    public long getSize() {
        long size;
        if (streamProtocol != null) {
            size = streamProtocol.getSize();
        } else {
            size = ERROR_GET_SIZE;
        }
        Log.d(TAG, "getSize()---->>" + size);
        return size;
    }

    @Keep
    @Override
    public int read(ByteBuffer buffer, int offset, int size) {
        int result;
        if (streamProtocol != null) {
            result = streamProtocol.read(buffer, offset, size);
            // InputStream reports end of stream as -1; the native asp_read() expects 0
            // and turns it into AVERROR_EOF
            if (result == -1) {
                result = 0;
            }
        } else {
            result = ERROR_READ;
        }
        return result;
    }

    @Keep
    @Override
    public int seek(long position, int whence) {
        int result;
        if (streamProtocol != null) {
            result = streamProtocol.seek(position, whence);
        } else {
            result = ERROR_SEEK;
        }
        Log.d(TAG, "seek()---->>position = " + position + ", whence = " + whence);
        return result;
    }

    @Keep
    @Override
    public void close() {
        Log.d(TAG, "close()---->>");
        if (streamProtocol != null) {
            streamProtocol.close();
            streamProtocol = null;
        }
    }
}

In open(), StreamProtocolFactory selects a second-level protocol, such as asset or content Uri, based on the URI. StreamProtocolFactory is implemented as follows:

package com.alan.ffmpegjni4android.protocols;

import android.content.Context;
import android.text.TextUtils;

/**
 * Author: AlanWang4523.
 * Date: 2020/11/5 19:10.
 */
public class StreamProtocolFactory {
    public final static String SCHEME_ASSET = "assets://";
    public final static String SCHEME_CONTENT = "content://";

    private static Context sAppContext;

    public static Context getAppContext() {
        return sAppContext;
    }

    // must be called (e.g. from Application.onCreate()) before opening assets:// or content:// inputs
    public static void setAppContext(Context appContext) {
        StreamProtocolFactory.sAppContext = appContext.getApplicationContext();
    }

    // picks the second-level protocol from the URI scheme; anything else is treated as a file path
    public static IStreamProtocol create(String uriString) {
        if (TextUtils.isEmpty(uriString)) {
            return null;
        }
        if (uriString.startsWith(SCHEME_ASSET)) {
            return new AssetStreamProtocol(sAppContext);
        } else if (uriString.startsWith(SCHEME_CONTENT)) {
            return new ContentStreamProtocol(sAppContext);
        } else {
            return new FileStreamProtocol();
        }
    }
}
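Taken together, a URL is resolved in two steps: asp_open() strips the "asp:" prefix with av_strstart(), then StreamProtocolFactory.create() picks a handler by scheme. A small pure-Java sketch of that dispatch, handy for unit-testing URL handling off-device. AspUrlRouting and its methods are hypothetical, and the handler is returned as a class name instead of constructing the Android classes:

```java
// Hypothetical mirror of the two-level URL dispatch, for tests that run without Android.
final class AspUrlRouting {
    static final String ASP_PREFIX = "asp:";
    static final String SCHEME_ASSET = "assets://";
    static final String SCHEME_CONTENT = "content://";

    private AspUrlRouting() {}

    /** Same effect as av_strstart(filename, "asp:", &filename) in asp_open(): drop the prefix if present. */
    static String stripAspPrefix(String url) {
        return url.startsWith(ASP_PREFIX) ? url.substring(ASP_PREFIX.length()) : url;
    }

    /** Name of the handler StreamProtocolFactory.create() would choose, or null for an empty URI. */
    static String handlerFor(String uriString) {
        if (uriString == null || uriString.isEmpty()) {
            return null;
        }
        if (uriString.startsWith(SCHEME_ASSET)) {
            return "AssetStreamProtocol";
        }
        if (uriString.startsWith(SCHEME_CONTENT)) {
            return "ContentStreamProtocol";
        }
        return "FileStreamProtocol";
    }
}
```

For example, "asp:assets://test.mp4" becomes "assets://test.mp4" and is routed to the asset handler, while "asp:/sdcard/Alan/ffmpeg/test.mp4" falls through to the file handler.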

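The actual InputStream work happens in InputStreamProtocol.java. The tricky part is seek: FFmpeg uses three seek modes, with the same meaning as the whence argument of lseek():

int SEEK_SET = 0; // offset is an absolute position from the start of the stream
int SEEK_CUR = 1; // offset is relative to the current position
int SEEK_END = 2; // offset is relative to the end of the stream (usually <= 0)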
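Turning these modes into an absolute target is plain arithmetic. A minimal sketch following lseek() semantics, where SEEK_END adds the offset to the total size; SeekWhence and absolutePosition() are hypothetical names, and treating a negative size as "unknown" is an assumption that matches how the native side stores media_size:

```java
// Hypothetical helper: resolve (offset, whence) to an absolute stream offset.
final class SeekWhence {
    static final int SEEK_SET = 0;
    static final int SEEK_CUR = 1;
    static final int SEEK_END = 2;

    private SeekWhence() {}

    /**
     * Absolute target offset with lseek() semantics. size < 0 means the total size is unknown.
     * Returns -1 when the target cannot be computed or would be negative.
     */
    static long absolutePosition(long offset, int whence, long current, long size) {
        long target;
        switch (whence) {
            case SEEK_SET:
                target = offset;
                break;
            case SEEK_CUR:
                target = current + offset;
                break;
            case SEEK_END:
                if (size < 0) {
                    return -1;
                }
                target = size + offset; // offset is normally <= 0 here
                break;
            default:
                return -1;
        }
        return target < 0 ? -1 : target;
    }
}
```

For example, an offset of -1 with SEEK_END on a 1000-byte stream lands on offset 999, the last byte.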

InputStreamProtocol.java is implemented as follows:

package com.alan.ffmpegjni4android.protocols;

import java.io.InputStream;
import java.nio.ByteBuffer;

/**
 * Author: AlanWang4523.
 * Date: 2020/11/5 19:46.
 */
abstract class InputStreamProtocol implements IStreamProtocol {
    private static final int SEEK_SET = 0;
    private static final int SEEK_CUR = 1;
    private static final int SEEK_END = 2;

    private InputStream mInputStream;
    private long mStreamSize = -1;
    private long mCurPosition = 0;
    private String mUriString;

    // subclasses supply the stream (asset, content Uri, file); it must be a fresh stream at offset 0
    protected abstract InputStream getInputStream(String uriString);

    @Override
    public int open(String uriString) {
        mUriString = uriString;
        mInputStream = getInputStream(uriString);
        if (mInputStream == null) {
            return ERROR_OPEN;
        }
        try {
            // available() serves as the total size. Asset streams report their remaining length,
            // but the InputStream contract in general only promises an estimate.
            mStreamSize = mInputStream.available();
            // mark the start so that a backward seek can reset() instead of reopening the stream
            if (mInputStream.markSupported()) {
                mInputStream.mark((int) mStreamSize);
            }
            return SUCCESS;
        } catch (Exception ignored) {
        }
        return ERROR_OPEN;
    }

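    @Override
    public long getSize() {
        return mStreamSize;
    }

    @Override
    public int read(ByteBuffer buffer, int offset, int size) {
        if (mInputStream != null) {
            try {
                buffer.clear();
                int readLen;
                if (buffer.hasArray()) {
                    // on Android, buffers from ByteBuffer.allocateDirect() are array-backed,
                    // so the stream can write straight into them
                    readLen = mInputStream.read(buffer.array(), buffer.arrayOffset() + offset, size);
                } else {
                    // direct buffers without an accessible array: go through a temporary array
                    byte[] tmp = new byte[size];
                    readLen = mInputStream.read(tmp, 0, size);
                    if (readLen > 0) {
                        buffer.position(offset);
                        buffer.put(tmp, 0, readLen);
                    }
                }
                // read() returns -1 at end of stream; only real data advances the position
                if (readLen > 0) {
                    mCurPosition += readLen;
                }
                return readLen;
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
        return ERROR_READ;
    }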

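    @Override
    public int seek(long position, int whence) {
        if (mInputStream != null) {
            try {
                long posNeedSeekTo = getSeekPosition(position, whence);
                if (posNeedSeekTo < 0) {
                    return ERROR_SEEK;
                }
                long needSkipLen = posNeedSeekTo - mCurPosition;
                if (needSkipLen < 0) {
                    // Seeking backwards: an InputStream only moves forward, so go back to the
                    // start (reset() to the mark set in open(), or reopen) and skip from there
                    if (mInputStream.markSupported()) {
                        mInputStream.reset();
                    } else {
                        mInputStream.close();
                        mInputStream = getInputStream(mUriString);
                        if (mInputStream == null) {
                            return ERROR_SEEK;
                        }
                    }
                    mCurPosition = 0;
                    needSkipLen = posNeedSeekTo;
                }
                while (needSkipLen > 0) {
                    long skipLen = mInputStream.skip(needSkipLen);
                    if (skipLen <= 0) {
                        // skip() may return 0 before the end; read() guarantees progress
                        // and tells us when the stream is exhausted
                        if (mInputStream.read() < 0) {
                            return ERROR_SEEK;
                        }
                        skipLen = 1;
                    }
                    mCurPosition += skipLen;
                    needSkipLen -= skipLen;
                }
                return SUCCESS;
            } catch (Exception ignored) {
            }
        }
        return ERROR_SEEK;
    }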

    @Override
    public void close() {
        if (mInputStream != null) {
            try {
                mInputStream.close();
            } catch (Exception ignored) {
            }
            mInputStream = null;
        }
    }

    /**
     * Computes the absolute position to seek to.
     *
     * @param position seek offset
     * @param whence   seek mode: SEEK_SET, SEEK_CUR or SEEK_END
     * @return the absolute position to seek to
     */
    private long getSeekPosition(long position, int whence) {
        long posNeedSeekTo;
        if (whence == SEEK_SET) {
            posNeedSeekTo = position;
        } else if (whence == SEEK_CUR) {
            posNeedSeekTo = mCurPosition + position;
        } else if (whence == SEEK_END) {
            // as with lseek(), the offset is added to the size (it is usually <= 0)
            posNeedSeekTo = mStreamSize + position;
        } else {
            posNeedSeekTo = position;
        }
        return posNeedSeekTo;
    }
}

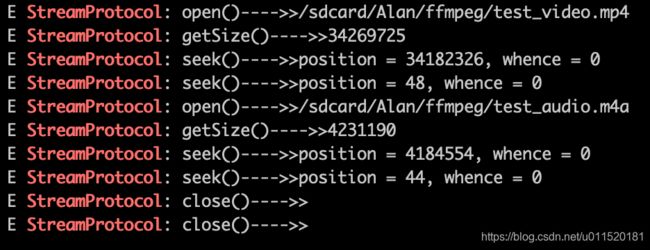
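The seek logic above rests on two ideas: a backward jump restarts the stream from offset 0, and a forward jump skips bytes until the target is reached, falling back to read() when skip() makes no progress. The same idea in a small, self-contained form that runs on a plain JVM; ForwardOnlySeeker and its Supplier-based reopening are hypothetical and stand in for getInputStream() in the article's class:

```java
import java.io.IOException;
import java.io.InputStream;
import java.util.function.Supplier;

// Hypothetical helper: seek a forward-only InputStream by reopening it for backward jumps.
final class ForwardOnlySeeker {
    private final Supplier<InputStream> opener;
    private InputStream in;
    private long pos;
    int opens; // how many times the stream was (re)opened, exposed for illustration

    ForwardOnlySeeker(Supplier<InputStream> opener) {
        this.opener = opener;
        this.in = opener.get();
        this.opens = 1;
    }

    long position() {
        return pos;
    }

    /** Moves to the absolute offset target; returns false if the stream ends first. */
    boolean seekTo(long target) throws IOException {
        if (target < 0) {
            return false;
        }
        if (target < pos) {
            // an InputStream cannot move backwards: start again from offset 0
            in.close();
            in = opener.get();
            opens++;
            pos = 0;
        }
        while (pos < target) {
            long k = in.skip(target - pos);
            if (k <= 0) {
                // skip() may return 0 before the end; read() shows whether data remains
                if (in.read() < 0) {
                    return false;
                }
                k = 1;
            }
            pos += k;
        }
        return true;
    }

    /** Reads one byte (or -1 at end of stream) and keeps the position in sync. */
    int read() throws IOException {
        int b = in.read();
        if (b >= 0) {
            pos++;
        }
        return b;
    }
}
```

Reopening costs a fresh stream plus a skip from the start, which is why the article prefers reset() when the stream supports mark().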

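IV. Testing the custom protocol

Note that the custom Android stream protocol depends on JNI, so the JVM must be handed to FFmpeg before any FFmpeg command runs; FFmpeg stores it, and ff_jni_get_env() later uses it to obtain a JNIEnv. StreamProtocolFactory.setAppContext() must also have been called, because the assets:// and content:// protocols need a Context.

#include <jni.h>
#include <libavcodec/jni.h>

JavaVM *jvm;
(*env)->GetJavaVM(env, &jvm);
av_jni_set_java_vm(jvm, NULL);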

Then call the wrapped FFmpeg JNI method to execute an FFmpeg command (the three commands below are alternatives):

// Prefix the input file with "asp:"
String TEST_CMD_STR = "ffmpeg -i asp:/sdcard/Alan/ffmpeg/test.mp4";

// Process a file in assets through the custom protocol
String TEST_CMD_STR = "ffmpeg -y -i asp:assets://test.mp4 -vcodec copy /sdcard/Alan/ffmpeg/test_out.mp4";

// Mux separate video and audio files through the custom protocol
String TEST_CMD_STR = String.format(" -y -i %s -i %s -c copy %s",
        "asp:/sdcard/Alan/ffmpeg/test_video.mp4", "asp:/sdcard/Alan/ffmpeg/test_audio.m4a", "/sdcard/Alan/ffmpeg/muxer_out.mp4");

The complete code and a test demo are available on GitHub.