Kubernetes installation: multi-master deployment

| Host | IP | Components |
| --- | --- | --- |
| master1 | 10.10.10.10 | docker, kubelet, kubeadm, kubectl, containerd |
| master2 | 10.10.10.11 | docker, kubelet, kubeadm, kubectl, containerd |
| master3 | 10.10.10.12 | docker, kubelet, kubeadm, kubectl, containerd |
| node1 | 10.10.10.13 | docker, kubelet, kubeadm, kubectl, containerd |

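1. Disable the firewall and SELinux (run on all nodes)

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

2. Disable the swap partition (run on all nodes)

sed -ri 's/.*swap.*/#&/' /etc/fstab

3. Add node names (run on all nodes)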

cat >> /etc/hosts << EOF

10.10.10.10 master1

10.10.10.11 master2

10.10.10.12 master3

10.10.10.13 node1

EOF
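Because the heredoc above appends with `>>`, running it a second time duplicates every entry. A minimal sketch of an idempotent variant, assuming bash; the helper name `add_host_entry` is hypothetical, and it takes the target file as a parameter so it can be tried on a scratch file before touching /etc/hosts:

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper):
# append "IP NAME" to FILE only when NAME is not already listed there.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w matches NAME as a whole word, so master1 does not match master10
  if ! grep -qw -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (as root, on every node):
#   add_host_entry /etc/hosts 10.10.10.10 master1
#   add_host_entry /etc/hosts 10.10.10.11 master2
#   add_host_entry /etc/hosts 10.10.10.12 master3
#   add_host_entry /etc/hosts 10.10.10.13 node1
```

The check is by host name only, so an existing entry with a different IP is left untouched and would need to be corrected by hand.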

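After that, reboot the machines:

reboot

Set up passwordless SSH login

Generate a key pair on master1:

ssh-keygen -t rsa

cd ~/.ssh

ssh-copy-id 10.10.10.11 # IP of each machine that should accept passwordless login

ssh-copy-id 10.10.10.12

ssh-copy-id 10.10.10.13

4. Install Docker on all nodes (run on all nodes)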

yum -y install docker

5. Configure the Aliyun registry mirror on all nodes (run on all nodes)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xxxxxxxx.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

6. Install kubeadm, kubectl, kubelet, and containerd on the master and worker nodes

6.1 Configure the yum repository on both master and worker nodes

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

6.2 Install the packages on both master and worker nodes

yum install -y kubelet kubeadm kubectl

6.3 Enable start on boot on both master and worker nodes

systemctl enable kubelet

systemctl start kubelet

6.4 Install containerd

yum -y install containerd

7. Run the kubeadm initialization on master1

kubeadm init --image-repository=registry.aliyuncs.com/google_containers

Error 1:

[root@master1 ~]# kubeadm init --image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.26.2

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: E0317 15:44:38.904235 46657 remote_runtime.go:616] "Status from runtime service failed" err="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\""

time="2023-03-17T15:44:38+08:00" level=fatal msg="getting status of runtime: rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\""

, error: exit status 1解决:

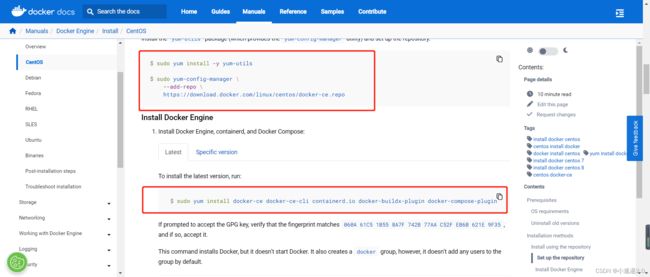

先配置一下阿里源 ,然后再更新一下系统

[root@master1 ~]# yum update[root@master1 ~]# yum -y install containerd如果还是不行,建议按照docker官方文档重新安装一下docker

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Reinstall Docker (optional)

[root@master1 yum.repos.d]# sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin --skip-broken

[root@master1 yum.repos.d]# yum -y install containerd

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* epel: mirrors.bfsu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

Resolving Dependencies

--> Running transaction check

---> Package containerd.io.x86_64 0:1.6.19-3.1.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

========================================================================================================================================================

Package Arch Version Repository Size

========================================================================================================================================================

Installing:

containerd.io x86_64 1.6.19-3.1.el7 docker-ce-stable 34 M

Transaction Summary

========================================================================================================================================================

Install 1 Package

Total download size: 34 M

Installed size: 114 M

Downloading packages:

warning: /var/cache/yum/x86_64/7/docker-ce-stable/packages/containerd.io-1.6.19-3.1.el7.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID 621e9f35: NOKEY

Public key for containerd.io-1.6.19-3.1.el7.x86_64.rpm is not installed

containerd.io-1.6.19-3.1.el7.x86_64.rpm | 34 MB 00:00:01

Retrieving key from https://download.docker.com/linux/centos/gpg

Importing GPG key 0x621E9F35:

Userid : "Docker Release (CE rpm) "

Fingerprint: 060a 61c5 1b55 8a7f 742b 77aa c52f eb6b 621e 9f35

From : https://download.docker.com/linux/centos/gpg

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : containerd.io-1.6.19-3.1.el7.x86_64 1/1

Verifying : containerd.io-1.6.19-3.1.el7.x86_64 1/1

Installed:

containerd.io.x86_64 0:1.6.19-3.1.el7

Complete!

[root@master1 ~]# systemctl start containerd

[root@master1 ~]# systemctl status containerd

[root@master1 ~]# containerd config default > /etc/containerd/config.toml

[root@master1 ~]# systemctl enable containerd

[root@master1 ~]# vim /etc/containerd/config.toml

In config.toml, point the sandbox image at the Aliyun mirror:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

[root@master1 ~]# systemctl restart containerd.service

Error 2:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

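To see the stack trace of this error execute with --v=5 or higher

Solution:

[root@master1 ~]# echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

If this file does not exist, load the kernel module first with modprobe br_netfilter. A value written with echo does not persist across reboots.

Error 3: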

[root@master1 ~]# kubeadm init --image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.26.3

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-6443]: Port 6443 is in use

[ERROR Port-10259]: Port 10259 is in use

[ERROR Port-10257]: Port 10257 is in use

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR Port-2379]: Port 2379 is in use

[ERROR Port-2380]: Port 2380 is in use

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Solution:

[root@master1 ~]# kubeadm reset

Normal initialization output:

[root@master1 ~]# kubeadm init --image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.26.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost.localdomain] and IPs [10.96.0.1 10.10.10.10]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [10.10.10.10 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [10.10.10.10 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.002772 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: iarelq.4xv3k2uinhpy43gl

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.10.10:6443 --token iarelq.4xv3k2uinhpy43gl \

--discovery-token-ca-cert-hash sha256:31fa6d668197e7652f69b422d5af53888ba5f667fdb87a3eea9fd4c04b34f352

Next, run on master1:

[root@master1 ~]# mkdir -p $HOME/.kube

[root@master1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Note: save the token printed at the end of the output.

If you lose it, generate a new join command with: kubeadm token create --print-join-command

8. Join master2 to the master1 cluster

Run on master1:

[root@master1 ~]# kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

8ac7964d0808f102629488bb6fc2fbc31db76836245728f03d9303fa1fd7b0bb

[root@master1 ~]# kubeadm token create --print-join-command

kubeadm join 10.10.10.10:6443 --token ze93cl.wmlal7givxmbsre1 --discovery-token-ca-cert-hash sha256:31fa6d668197e7652f69b422d5af53888ba5f667fdb87a3eea9fd4c04b34f352
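The two outputs above (the certificate key and the join command) are what a control-plane join needs. A minimal sketch of stitching them together, assuming bash; `control_plane_join_cmd` is a hypothetical helper that only prints the resulting command and does not run it:

```shell
#!/usr/bin/env bash
# control_plane_join_cmd JOIN_CMD CERT_KEY (hypothetical helper):
# print a worker join command extended with the control-plane flags.
control_plane_join_cmd() {
  local join_cmd="$1" cert_key="$2"
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Example on master1. Taking the last line of the upload-certs output as the
# key is an assumption based on the output shown above:
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   join=$(kubeadm token create --print-join-command)
#   control_plane_join_cmd "$join" "$key"
```

Printing instead of executing leaves room to review the command before pasting it on master2 or master3.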

Combine the two outputs into one command and run it on master2:

kubeadm join 10.10.10.10:6443 --token iarelq.4xv3k2uinhpy43gl \
--discovery-token-ca-cert-hash sha256:31fa6d668197e7652f69b422d5af53888ba5f667fdb87a3eea9fd4c04b34f352 \
--control-plane --certificate-key 8ac7964d0808f102629488bb6fc2fbc31db76836245728f03d9303fa1fd7b0bb

Joining the cluster from master2 fails:

Error 1:

[root@master2 ~]# kubeadm join 10.10.10.10:6443 --token m4kmor.y5fbvpf2fsd8fglc --discovery-token-ca-cert-hash sha256:ba9bc69326dd5c53b6772de3eaa5dc5152dd285b2ed16d86e565ef843516386c --certificate-key 5fcaa1755f6ebf73b87439ffb2ae583e2f16b0a7c58329d8ac381f32af090fab --control-plane

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

error execution phase preflight:

One or more conditions for hosting a new control plane instance is not satisfied.

unable to add a new control plane instance to a cluster that doesn't have a stable controlPlaneEndpoint address

Please ensure that:

* The cluster has a stable controlPlaneEndpoint address.

* The certificates that must be shared among control plane instances are provided.

To see the stack trace of this error execute with --v=5 or higher
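This error usually means the cluster was initialized without a control-plane endpoint. As a hedged sketch, the init command could set it up front, together with the pod CIDR that flannel expects. `kubeadm_init_cmd` is a hypothetical helper that only prints the command. The flags `--control-plane-endpoint`, `--pod-network-cidr` and `--upload-certs` are standard `kubeadm init` flags. Using master1's own address as the endpoint mirrors this walkthrough, whereas a load balancer or virtual IP is the more common choice:

```shell
#!/usr/bin/env bash
# kubeadm_init_cmd ENDPOINT POD_CIDR (hypothetical helper):
# print (not run) a kubeadm init command suited to a multi-master cluster.
kubeadm_init_cmd() {
  local endpoint="$1" pod_cidr="$2"
  printf 'kubeadm init %s %s %s %s\n' \
    "--image-repository=registry.aliyuncs.com/google_containers" \
    "--control-plane-endpoint=${endpoint}" \
    "--pod-network-cidr=${pod_cidr}" \
    "--upload-certs"
}

# Example:
#   kubeadm_init_cmd 10.10.10.10:6443 10.244.0.0/16
```

With these flags set at init time, the manual certificate copy and the ConfigMap edit described next should not be needed on a fresh cluster.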

Solution:

Run on master2 and master3:

mkdir -p /etc/kubernetes/pki/etcd/

Run on master1 to copy its certificates to master2 and master3 (the commands below target master2; repeat them with 10.10.10.12 for master3):

scp /etc/kubernetes/pki/ca.* 10.10.10.11:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.* 10.10.10.11:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.* 10.10.10.11:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.* 10.10.10.11:/etc/kubernetes/pki/etcd/

Then, on master1, edit the kubeadm-config ConfigMap and add controlPlaneEndpoint:

[root@master1 kubernetes]# kubectl -n kube-system edit cm kubeadm-config

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  ClusterConfiguration: |
    apiServer:
      extraArgs:
        authorization-mode: Node,RBAC
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.27.2
    controlPlaneEndpoint: "10.10.10.10:6443" # add the master1 IP and port here
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
kind: ConfigMap
metadata:
  creationTimestamp: "2023-06-07T03:41:55Z"
  name: kubeadm-config
  namespace: kube-system
  resourceVersion: "18081"
  uid: 7fd4d04a-90b9-4913-966f-1a5e2e1c535b

Error 2: master3 fails to join the cluster

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[kubelet-check] Initial timeout of 40s passed.

error execution phase kubelet-start: error uploading crisocket: Unauthorized

Solution:

Run on master3:

[root@master3 kubernetes]# swapoff -a

[root@master3 kubernetes]# kubeadm reset

[root@master3 kubernetes]# rm -rf /etc/cni/net.d/*

[root@master3 kubernetes]# systemctl daemon-reload

[root@master3 kubernetes]# systemctl restart kubelet

[root@master3 kubernetes]# iptables -F

Normal output when master2 and master3 join the master1 cluster:

[root@master2 kubernetes]# kubeadm join 10.10.10.10:6443 --token s28dj9.waznokdl70cnqmh1 --discovery-token-ca-cert-hash sha256:7779a342df1bfc2b568ebf9284ce74014c59e6a0debc38c02651840c6d42dc6b --control-plane --certificate-key eb6cca78d76d76fd268a7eb0580ebdff093b6bf8fac75fa7c526271b5c45a3c8

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W0607 15:27:38.100627 3060 images.go:80] could not find officially supported version of etcd for Kubernetes v1.27.2, falling back to the nearest etcd version (3.5.7-0)

W0607 15:27:53.652093 3060 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master2] and IPs [10.100.208.22 127.0.0.1 ::1]

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master2] and IPs [10.100.208.22 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master2] and IPs [10.96.0.1 10.10.10.12 10.10.10.10]

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

W0607 15:28:13.529819 3060 images.go:80] could not find officially supported version of etcd for Kubernetes v1.27.2, falling back to the nearest etcd version (3.5.7-0)

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node master2 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

9. Run on node1 to join it to the master1 cluster

kubeadm join 10.10.10.10:6443 --token iarelq.4xv3k2uinhpy43gl \
--discovery-token-ca-cert-hash sha256:31fa6d668197e7652f69b422d5af53888ba5f667fdb87a3eea9fd4c04b34f352

Normal output:

[root@localhost ~]# kubeadm join 10.10.10.10:6443 --token 3cjcra.akfpvh70hmhb3u7v \

> --discovery-token-ca-cert-hash sha256:faa3ff1629387afc41774da2e5a15a7bbf314ecbb05ca190b50a56dbed2f1dc3

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Verify: run kubectl get nodes on master1

[root@master1 ~]# kubectl get nodes -owide

NAME      STATUS     ROLES           AGE     VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
master1   Ready      control-plane   5h16m   v1.27.2   10.10.10.10   <none>        CentOS Linux 7 (Core)   3.10.0-1160.76.1.el7.x86_64   containerd://1.6.18
master2   NotReady   control-plane   90m     v1.27.2   10.10.10.11   <none>        CentOS Linux 7 (Core)   6.2.10-1.el7.elrepo.x86_64    containerd://1.6.19
master3   NotReady   control-plane   71m     v1.27.2   10.10.10.12   <none>        CentOS Linux 7 (Core)   6.2.6-1.el7.elrepo.x86_64     containerd://1.6.21
node1     NotReady   <none>          42m     v1.27.2   10.10.10.13   <none>        CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64        containerd://1.6.21
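Nodes typically stay NotReady until a pod network add-on is running, which is what step 10 installs. A minimal sketch for listing the nodes that still need attention, assuming bash and awk; `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes` output on stdin:

```shell
#!/usr/bin/env bash
# not_ready_nodes (hypothetical helper):
# read `kubectl get nodes` output on stdin and print the name of every node
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME, $2 is STATUS
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example on master1:
#   kubectl get nodes | not_ready_nodes
```

A cordoned node reports a status such as `Ready,SchedulingDisabled` and is therefore listed as well, which is usually what one wants when checking cluster health.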

Error 1:

[root@node1 kubernetes]# kubectl get pod

E0505 11:14:00.067452 30511 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0505 11:14:00.068912 30511 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0505 11:14:00.069896 30511 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0505 11:14:00.071297 30511 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0505 11:14:00.071929 30511 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Solution:

[root@node1 kubernetes]# echo "export KUBECONFIG=/etc/kubernetes/kubelet.conf" >> /etc/profile

[root@node1 kubernetes]# source /etc/profile

[root@node1 kubernetes]# kubectl get pod

No resources found in default namespace.

[root@node1 kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane 44d v1.27.2

10. Install the pod network add-on flannel (run on master1)

vim kube-flannel.yml

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

kubectl apply -f kube-flannel.yml

Verify:

[root@master1 ~]# kubectl get nodes

NAME      STATUS   ROLES           AGE     VERSION
master1   Ready    control-plane   5h20m   v1.27.2
master2   Ready    control-plane   94m     v1.27.2
master3   Ready    control-plane   75m     v1.27.2
node1     Ready    <none>          46m     v1.27.2

Error 1:

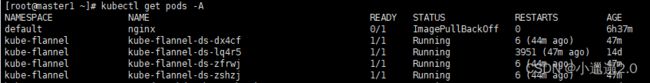

[root@master1 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx 0/1 ContainerCreating 0 5h8m

kube-flannel kube-flannel-ds-4tjq4 0/1 CrashLoopBackOff 11 (21s ago) 18m

kube-flannel kube-flannel-ds-lq4r5 0/1 CrashLoopBackOff 3931 (15s ago) 13d

kube-flannel kube-flannel-ds-mdvt5 0/1 CrashLoopBackOff 3932 (30s ago) 13d

kube-flannel kube-flannel-ds-xq5dt 0/1 CrashLoopBackOff 3923 (30s ago) 13d

kube-system coredns-7bdc4cb885-2k99d 0/1 ContainerCreating 0 14d

kube-system coredns-7bdc4cb885-6zp9z 0/1 ContainerCreating 0 14d

kube-system etcd-master1 1/1 Running 0 14d

kube-system etcd-master2 1/1 Running 0 14d

kube-system etcd-master3 1/1 Running 0 14d

kube-system kube-apiserver-master1 1/1 Running 0 14d

kube-system kube-apiserver-master2 1/1 Running 0 14d

kube-system kube-apiserver-master3 1/1 Running 11 14d

kube-system kube-controller-manager-master1 1/1 Running 0 14d

kube-system kube-controller-manager-master2 1/1 Running 0 14d

kube-system kube-controller-manager-master3 1/1 Running 2 14d

kube-system kube-proxy-j4ndt 1/1 Running 0 13d

kube-system kube-proxy-v2ld8 1/1 Running 0 14d

kube-system kube-proxy-vxljj 1/1 Running 0 14d

kube-system kube-proxy-wbzbk 1/1 Running 0 14d

kube-system kube-scheduler-master1 1/1 Running 0 14d

kube-system kube-scheduler-master2 1/1 Running 0 14d

kube-system kube-scheduler-master3 1/1 Running 2 14d

kubernetes-dashboard dashboard-metrics-scraper-5cb4f4bb9c-qdrm8 0/1 ContainerCreating 0 6d5h

kubernetes-dashboard kubernetes-dashboard-6967859bff-mnnzx 0/1 ContainerCreating 0 6d5h

[root@master1 ~]# kubectl logs -f kube-flannel-ds-xq5dt -n kube-flannel

Defaulted container "kube-flannel" out of: kube-flannel, install-cni-plugin (init), install-cni (init)

I0621 07:55:39.797882 1 main.go:207] CLI flags config: {etcdEndpoints:http://127.0.0.1:4001,http://127.0.0.1:2379 etcdPrefix:/coreos.com/network etcdKeyfile: etcdCertfile: etcdCAFile: etcdUsername: etcdPassword: version:false kubeSubnetMgr:true kubeApiUrl: kubeAnnotationPrefix:flannel.alpha.coreos.com kubeConfigFile: iface:[] ifaceRegex:[] ipMasq:true ifaceCanReach: subnetFile:/run/flannel/subnet.env publicIP: publicIPv6: subnetLeaseRenewMargin:60 healthzIP:0.0.0.0 healthzPort:0 iptablesResyncSeconds:5 iptablesForwardRules:true netConfPath:/etc/kube-flannel/net-conf.json setNodeNetworkUnavailable:true}

W0621 07:55:39.798120 1 client_config.go:614] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0621 07:55:40.194288 1 kube.go:121] Waiting 10m0s for node controller to sync

I0621 07:55:40.194484 1 kube.go:402] Starting kube subnet manager

I0621 07:55:41.195327 1 kube.go:128] Node controller sync successful

I0621 07:55:41.195455 1 main.go:227] Created subnet manager: Kubernetes Subnet Manager - master1

I0621 07:55:41.195472 1 main.go:230] Installing signal handlers

I0621 07:55:41.195736 1 main.go:467] Found network config - Backend type: vxlan

I0621 07:55:41.195795 1 match.go:206] Determining IP address of default interface

I0621 07:55:41.197387 1 match.go:259] Using interface with name ens32 and address 10.10.10.10

I0621 07:55:41.197519 1 match.go:281] Defaulting external address to interface address (10.10.10.10)

I0621 07:55:41.197668 1 vxlan.go:138] VXLAN config: VNI=1 Port=0 GBP=false Learning=false DirectRouting=false

E0621 07:55:41.198362 1 main.go:330] Error registering network: failed to acquire lease: node "master1" pod cidr not assigned

I0621 07:55:41.198602 1 main.go:447] Stopping shutdownHandler...

W0621 07:55:41.198663 1 reflector.go:436] github.com/flannel-io/flannel/subnet/kube/kube.go:403: watch of *v1.Node ended with: an error on the server ("unable to decode an event from the watch stream: context canceled") has prevented the request from succeeding

Solution: apply the change on every master node.

Add these flags to the kube-controller-manager command:

--allocate-node-cidrs=true

--cluster-cidr=10.244.0.0/16
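
Before opening the file on each master, a quick check can show whether both flags are already present. This is a minimal sketch: the helper name `has_cidr_flags` is invented for illustration, and it only greps the manifest text; it does not validate the CIDR value.

```shell
#!/usr/bin/env bash
# Return 0 if the manifest already carries both flags, non-zero otherwise.
# has_cidr_flags is a hypothetical helper name, not part of kubeadm or kubectl.
has_cidr_flags() {
  local manifest="$1"
  # "--" stops grep from treating the pattern as an option.
  grep -q -- '--allocate-node-cidrs=true' "$manifest" &&
    grep -q -- '--cluster-cidr=' "$manifest"
}

# Usage on a master node:
#   has_cidr_flags /etc/kubernetes/manifests/kube-controller-manager.yaml \
#     && echo "flags present" || echo "flags missing"
```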

[root@master1 ~]# vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Full kube-controller-manager.yaml file:

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-controller-manager
    tier: control-plane
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-controller-manager
    - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --bind-address=127.0.0.1
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --cluster-name=kubernetes
    - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
    - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
    - --controllers=*,bootstrapsigner,tokencleaner
    - --kubeconfig=/etc/kubernetes/controller-manager.conf
    - --leader-elect=true
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --root-ca-file=/etc/kubernetes/pki/ca.crt
    - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
    - --use-service-account-credentials=true
    - --allocate-node-cidrs=true # add this line <<<-----
    - --cluster-cidr=10.244.0.0/16 # add this line <<<-----
    image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.27.2
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10257
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-controller-manager
    resources:
      requests:
        cpu: 200m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 127.0.0.1
        path: /healthz
        port: 10257
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15

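......

Because this is a static pod manifest, kubelet picks up the change and restarts kube-controller-manager on its own. Then restart flannel by deleting its pods so the DaemonSet recreates them: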

[root@master1 ~]# kubectl delete pod kube-flannel-ds-7jg2s -n kube-flannel

pod "kube-flannel-ds-7jg2s" deleted

[root@master1 ~]# kubectl delete pod kkube-flannel-ds-lq4r5 -n kube-flannel

Error from server (NotFound): pods "kkube-flannel-ds-lq4r5" not found

(The NotFound error comes from the mistyped pod name; the correct name is kube-flannel-ds-lq4r5.)

[root@master1 ~]# kubectl delete pod kube-flannel-ds-mdvt5 -n kube-flannel

pod "kube-flannel-ds-mdvt5" deleted

[root@master1 ~]# kubectl delete pod kube-flannel-ds-xq5dt -n kube-flannel

pod "kube-flannel-ds-xq5dt" deleted

The flannel pods now start successfully.
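
Deleting the pods one by one works, but it is easy to mistype a generated name. A minimal sketch of an alternative is to restart the whole DaemonSet in one command. The DaemonSet name `kube-flannel-ds` is inferred from the pod names above; the wrapper function `restart_flannel` and the `DRY_RUN` variable are made up for this example.

```shell
#!/usr/bin/env bash
# Restart every flannel pod by rolling the DaemonSet.
# restart_flannel and DRY_RUN are hypothetical names used only in this sketch.
restart_flannel() {
  local cmd="kubectl -n kube-flannel rollout restart daemonset/kube-flannel-ds"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Print the command instead of running it.
    echo "$cmd"
  else
    $cmd
  fi
}

# Usage on master1:
#   DRY_RUN=1 restart_flannel   # show what would run
#   restart_flannel             # actually restart the pods
```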

Using kubectl top to view CPU and memory usage fails with: error: Metrics API not available

[root@master1 ~]# kubectl top nodes

error: Metrics API not available

Fix: install metrics-server.

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

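This manifest references the official Kubernetes image, which cannot be pulled from inside mainland China, so download the file and change the image to a domestic mirror address: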

components.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      hostNetwork: true
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
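
The image swap can also be done with sed instead of by hand. This is a sketch under one assumption: that the downloaded manifest uses `registry.k8s.io/metrics-server/` as the image prefix (check the file first, since the upstream prefix may differ between releases). The function name `use_mirror_image` is invented for this example, and it only rewrites the image line; any other edits to the manifest still have to be made separately.

```shell
#!/usr/bin/env bash
# Point the metrics-server image at the Aliyun mirror, editing the file in place.
# Assumption: the upstream prefix is registry.k8s.io/metrics-server/ (verify in your copy).
# use_mirror_image is a hypothetical helper name.
use_mirror_image() {
  local manifest="$1"
  # "#" is used as the sed delimiter because the pattern contains slashes.
  sed -i 's#registry\.k8s\.io/metrics-server/#registry.cn-hangzhou.aliyuncs.com/google_containers/#' "$manifest"
}

# Usage:
#   use_mirror_image components.yaml
#   grep 'image:' components.yaml   # confirm the new registry
```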

kubectl apply -f components.yaml

Installing the web UI

Deploy the Dashboard web UI

Official releases on GitHub:

https://github.com/kubernetes/dashboard/releases

Or install it directly (usually unreachable from mainland China because of the firewall):

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

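Or open the URL in a browser, then copy or download the file:

https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

I opened it in a browser myself and pasted the contents into a file on the server:

vim recommended.yaml

Change the kubernetes-dashboard Service to type NodePort so it is reachable from outside the cluster: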

spec:
  type: NodePort # added <<<-----
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

Full recommended.yaml file:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # added <<<-----
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.7.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.8
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

kubectl apply -f recommended.yaml
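
The hand edit that adds `type: NodePort` could also be scripted against a freshly downloaded copy of the manifest. The sketch below is one possible approach, not the author's method: it uses awk to find the Service named `kubernetes-dashboard` and prints the extra line right after its top-level `spec:`. The function name `add_nodeport` is invented, and the script assumes the file keeps the upstream layout (documents separated by `---`, `name:` indented by two spaces under `metadata:`).

```shell
#!/usr/bin/env bash
# Print the manifest with "type: NodePort" added to the kubernetes-dashboard Service.
# add_nodeport is a hypothetical helper; it writes to stdout and leaves the input file unchanged.
add_nodeport() {
  awk '
    /^---/     { kind = ""; name = "" }   # new YAML document: forget the previous one
    /^kind:/   { kind = $2 }              # top-level kind of the current document
    /^  name:/ { name = $2 }              # metadata.name (two-space indent)
    { print }
    /^spec:/ && kind == "Service" && name == "kubernetes-dashboard" {
      print "  type: NodePort"
    }
  ' "$1"
}

# Usage:
#   add_nodeport recommended.yaml > recommended-nodeport.yaml
#   kubectl apply -f recommended-nodeport.yaml
```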

创建访问Dashbord的用户

vim dashboard-adminuser.yamlapiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

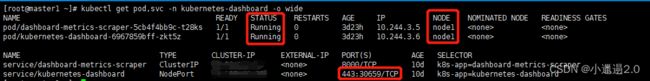

namespace: kubernetes-dashboardkubectl apply -f dashboard-adminuser.yaml查看dashboard状态:

kubectl get pod,svc -n kubernetes-dashboard -o wide

Get the login token:

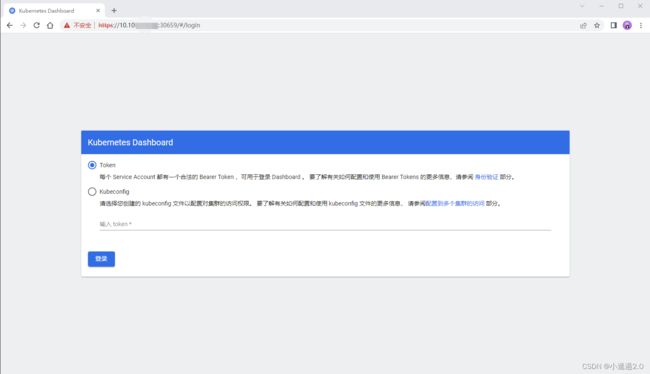

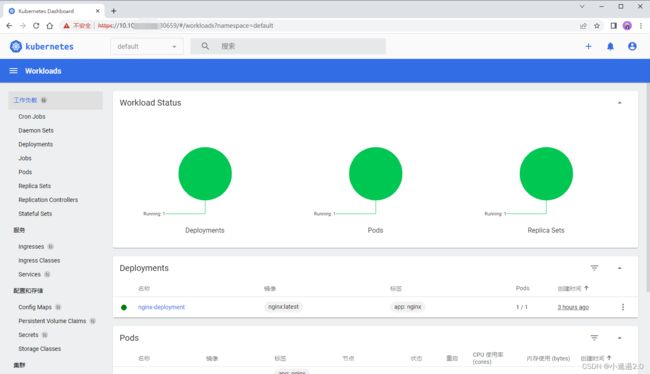

kubectl -n kubernetes-dashboard create token admin-user

Then open the Dashboard in a browser: https://10.10.10.13:30659 (the IP of node1)
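
The port in that URL is the NodePort that Kubernetes assigned to the kubernetes-dashboard Service, so it will differ from cluster to cluster; it appears in the PORT(S) column of the `kubectl get svc` output as something like `443:30659/TCP`. As a rough sketch, the URL can be assembled from a node IP and that column value. The helper name `dashboard_url` is invented for this example.

```shell
#!/usr/bin/env bash
# Build the Dashboard URL from a node IP and a PORT(S) value such as 443:30659/TCP.
# dashboard_url is a hypothetical helper name.
dashboard_url() {
  local node_ip="$1" ports="$2" node_port
  # Keep only the number between ":" and "/", which is the NodePort.
  node_port=$(printf '%s\n' "$ports" | sed -E 's#^[0-9]+:([0-9]+)/.*#\1#')
  echo "https://${node_ip}:${node_port}"
}

# Usage, taking the PORT(S) column (5th field) from kubectl:
#   ports=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard --no-headers | awk '{print $5}')
#   dashboard_url 10.10.10.13 "$ports"
```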

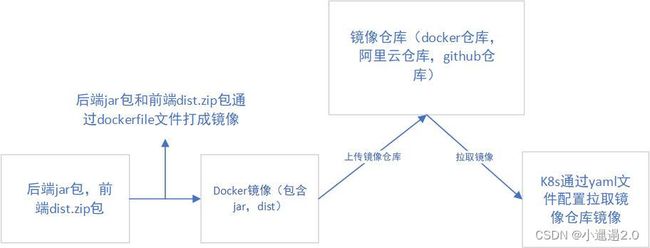

K8S code deployment <<<<<