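Big Data Learning 6: Installing and Using Sqoop

Setting up Sqoop is fairly simple, provided Hadoop and Hive are already installed.

Upload and extract

First download Sqoop. The version used here is 1.4.7: sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz (extraction code: jpzn).

Upload it to the master host (any node will do).

[root@master ~]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz   # extract

[root@master ~]# mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoop   # rename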

Configure environment variables

[root@master ~]# vi /etc/profile

export SQOOP_HOME=/root/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

[root@master ~]# source /etc/profile
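
If you would rather not edit /etc/profile by hand, the same two lines can be appended from a small helper. This is only a sketch: add_sqoop_env is a name I made up for illustration, and it assumes Sqoop was extracted to /root/sqoop as above. It skips the append when SQOOP_HOME is already set in the file, so running it twice does not duplicate the entries.

```shell
# add_sqoop_env PROFILE SQOOP_HOME
# Hypothetical helper: appends the Sqoop variables to PROFILE unless already present.
add_sqoop_env() {
    profile="$1"
    sqoop_home="$2"
    if grep -q '^export SQOOP_HOME=' "$profile" 2>/dev/null; then
        echo "SQOOP_HOME already set in $profile"
        return 0
    fi
    {
        echo "export SQOOP_HOME=$sqoop_home"
        # Single quotes keep $PATH and $SQOOP_HOME unexpanded in the file.
        echo 'export PATH=$PATH:$SQOOP_HOME/bin'
    } >> "$profile"
    echo "added SQOOP_HOME to $profile"
}

# Example (run as root):
#   add_sqoop_env /etc/profile /root/sqoop && . /etc/profile
```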

Add the drivers

Three jars need to be added to /root/sqoop/lib/: mysql-connector-java-5.1.46-bin.jar, hive-common-1.1.0.jar and hive-exec-1.1.0.jar. The two Hive jars are already in hive/lib; the MySQL driver has to be downloaded separately: mysql-connector-java-5.1.46-bin.jar (extraction code: 8d5j).

[root@master ~]# cp mysql-connector-java-5.1.46-bin.jar /root/sqoop/lib/

[root@master ~]# cd hive/lib/

[root@master lib]# cp hive-common-1.1.0.jar ~/sqoop/lib/

[root@master lib]# cp hive-exec-1.1.0.jar ~/sqoop/lib/
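
A missing jar usually only shows up later as a ClassNotFoundException during the import, so it can be worth checking the lib directory right after copying. A minimal sketch, where check_jars is a hypothetical helper and the jar names are the ones used in this post:

```shell
# check_jars DIR JAR...
# Hypothetical helper: reports whether each named jar exists in DIR.
# Returns non-zero if at least one jar is missing.
check_jars() {
    lib_dir="$1"
    shift
    missing=0
    for jar in "$@"; do
        if [ -f "$lib_dir/$jar" ]; then
            echo "found   $jar"
        else
            echo "missing $jar"
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]
}

# Example:
#   check_jars /root/sqoop/lib \
#       mysql-connector-java-5.1.46-bin.jar \
#       hive-common-1.1.0.jar \
#       hive-exec-1.1.0.jar
```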

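Configure sqoop-env.sh

[root@master lib]# cd /root/sqoop/conf/   # go to the configuration directory

[root@master conf]# cp sqoop-env-template.sh sqoop-env.sh   # copy the template

Uncomment the following lines and fill in the paths; in my setup everything lives under /root.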

[root@master conf]# vi sqoop-env.sh

export HADOOP_COMMON_HOME=/root/hadoop

export HADOOP_MAPRED_HOME=/root/hadoop

export HBASE_HOME=/root/hbase

export HIVE_HOME=/root/hive
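
Since these paths depend on where each component was installed, a quick check that every exported path is a real directory can catch typos early. The following is a sketch under that assumption; check_env_paths is a made-up helper that only understands plain lines of the form export NAME=/path and ignores commented-out lines.

```shell
# check_env_paths FILE
# Hypothetical helper: for each "export NAME=/path" line in FILE,
# report whether /path is an existing directory.
check_env_paths() {
    env_file="$1"
    status=0
    while IFS='=' read -r name path; do
        case "$name" in
            "export "*) name=${name#export } ;;   # strip the leading "export "
            *) continue ;;                        # skip comments and other lines
        esac
        if [ -d "$path" ]; then
            echo "ok      $name=$path"
        else
            echo "missing $name=$path"
            status=1
        fi
    done < "$env_file"
    return "$status"
}

# Example:
#   check_env_paths /root/sqoop/conf/sqoop-env.sh
```

If HBase is not installed, HBASE_HOME will show up as missing here; that only matters when you actually import into HBase.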

Basic Sqoop usage

Check the version to verify that Sqoop is installed correctly.

[root@master conf]# sqoop-version

Warning: /root/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /root/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

20/10/16 00:56:19 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

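The output shows version 1.4.7, so the installation succeeded. The HCatalog and Accumulo warnings can be ignored if you do not use those components.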
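
If you want to check the version from a script instead of reading the output by eye, the line that starts with "Sqoop " can be picked out with sed. A small sketch; parse_sqoop_version is a name I chose for illustration, and it assumes the output format shown above.

```shell
# parse_sqoop_version
# Hypothetical helper: reads `sqoop-version` output on stdin and prints
# the version number from the first line of the form "Sqoop X.Y.Z".
parse_sqoop_version() {
    sed -n 's/^Sqoop \([0-9][0-9.]*\).*$/\1/p' | head -n 1
}

# Example, on a machine with Sqoop on the PATH:
#   sqoop-version 2>&1 | parse_sqoop_version
```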

Preparing for the data import

Start MySQL and prepare the data to import.

[root@master sqoop]# service mysql start

[root@master sqoop]# mysql -uroot -p

MySQL [(none)]> create database stus;

MySQL [(none)]> use stus;

MySQL [stus]> create table stu(id int, name char(10));

MySQL [stus]> insert into stu values(1001,'xiaoming');

MySQL [stus]> insert into stu values(1002,'xiaohong');

# This is the data we want to import into Hive

MySQL [stus]> select * from stu;

+------+----------+

| id   | name     |

+------+----------+

| 1001 | xiaoming |

| 1002 | xiaohong |

+------+----------+

Before starting Hive, start HDFS and YARN first.

[root@master sqoop]# start-dfs.sh

[root@slave2 ~]# start-yarn.sh

[root@master sqoop]# hive

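In Hive, create the database that will receive the data. The table does not need to exist beforehand; Sqoop creates it automatically.

hive> create database stus;

Create the shell script

[root@master conf]# cd ..

[root@master sqoop]# vi sqoop_import.sh

Add the following content: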

-------------------------------------------------------

# MySQL login credentials
username=root

password=123

# stus is the MySQL database name
conn=jdbc:mysql://master:3306/stus

# --table is the source table in MySQL.
# --hive-table is the target in Hive, written as database.table.
# Comments must not follow a trailing backslash, or the command is cut off there.
sqoop import \
--connect "${conn}" \
--username "${username}" \
--password "${password}" \
--table stu \
-m 1 \
--hive-table stus.stu \
--hive-drop-import-delims \
--null-non-string '\\N' \
--null-string '\\N' \
--fetch-size 1000 \
--target-dir /tmp/test1 \
--hive-import

-------------------------------------------------------
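
Passing --password on the command line leaves the password in the shell history and the process list, and Sqoop itself prints a warning about it. As a hedged variant, the sketch below reads the password from a file via --password-file and adds a dry-run switch so the command can be reviewed before it runs. DRY_RUN, PASSWORD_FILE, run and the path /root/.mysql.pwd are names I introduced for this example, not part of the original script.

```shell
#!/bin/sh
# Sketch only: DRY_RUN, PASSWORD_FILE and run() are made up for this example.
# Create the password file without a trailing newline first, e.g.:
#   printf '%s' '123' > /root/.mysql.pwd && chmod 400 /root/.mysql.pwd

DRY_RUN=${DRY_RUN:-1}                                    # 1 = only print the command
PASSWORD_FILE=${PASSWORD_FILE:-file:///root/.mysql.pwd}  # assumed local file location
username=root
conn=jdbc:mysql://master:3306/stus

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

run sqoop import \
    --connect "$conn" \
    --username "$username" \
    --password-file "$PASSWORD_FILE" \
    --table stu \
    -m 1 \
    --hive-import \
    --hive-table stus.stu \
    --hive-drop-import-delims \
    --null-string '\\N' \
    --null-non-string '\\N' \
    --fetch-size 1000 \
    --target-dir /tmp/test1
```

By default this only prints the command; run it with DRY_RUN=0 to perform the import. For a one-off interactive run, -P (prompt for the password) is a simpler alternative to the password file.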

Run the shell script

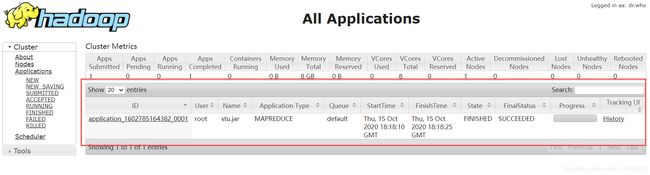

[root@master sqoop]# sh sqoop_import.sh

Then go back into Hive and switch to the stus database (use stus;) to see the imported data.

hive> show tables;

OK

tab_name

stu # the imported table

Time taken: 0.066 seconds, Fetched: 1 row(s)

hive> select * from stu;

OK

stu.id stu.name # the data in the table

1001 xiaoming

1002 xiaohong

Time taken: 0.491 seconds, Fetched: 2 row(s)
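
For larger tables, eyeballing the rows is not practical, so a row-count comparison between MySQL and Hive is a common sanity check. A minimal sketch: compare_counts is a hypothetical helper, and the commented commands assume the mysql and hive clients are on the PATH with the same database and table names as above.

```shell
# compare_counts SRC DST
# Hypothetical helper: compares the source (MySQL) and target (Hive) row counts.
compare_counts() {
    src="$1"
    dst="$2"
    if [ "$src" -eq "$dst" ]; then
        echo "OK: $dst rows in both tables"
    else
        echo "MISMATCH: MySQL has $src rows, Hive has $dst rows"
        return 1
    fi
}

# Example (mysql prompts for the password; -N drops the column header,
# hive -S suppresses the log output):
#   src=$(mysql -uroot -p -N -e 'select count(*) from stus.stu')
#   dst=$(hive -S -e 'select count(*) from stus.stu')
#   compare_counts "$src" "$dst"
```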