3D reconstruction with 3D Gaussian Splatting and NeRF (creating the dataset with an iPhone)

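3D reconstruction with Spectacular AI and NeRF: creating the dataset with an iPhone

- Preface
- Project overview
- Creating the dataset
  - Scanning
- Processing the dataset
  - Parsing the dataset
    - Python environment
    - Windows FFmpeg setup
  - Processing the recording
- Installing Nerfstudio
  - CUDA is required
  - Install the dependencies in order
    - pip install nerfstudio
- Nerfstudio results
  - Starting training
    - Parameters
    - Live training viewer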

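Preface

This project follows the work that Spectacular AI has published on YouTube.

For details, see the Spectacular AI website.

The project can be built on both Windows and Ubuntu. The two do not differ in any material way for this project, so the environment and parameter settings carry over from one to the other. Everything in this article was done on Windows 11 with CUDA already configured.

For configuring CUDA on Windows, see "TensorFlow-GPU-2.4.1 and CUDA installation tutorial".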

Project overview

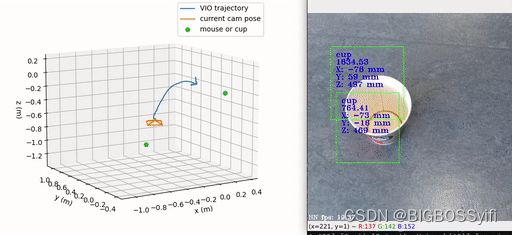

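The Spectacular AI SDK fuses data from cameras and IMU sensors (accelerometer and gyroscope) and outputs an accurate 6-degree-of-freedom (6-DoF) pose of the device. This is called visual-inertial SLAM (VISLAM). It can be used to track (autonomous) robots and vehicles, as well as for augmented, mixed and virtual reality. The SDK also includes a Mapping API that gives access to the full SLAM map for both real-time and offline 3D reconstruction use cases.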

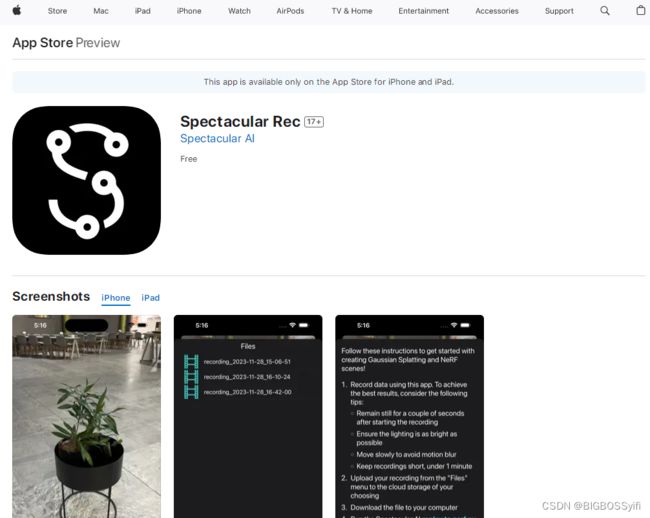
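
A 6-DoF pose combines three translational and three rotational degrees of freedom. As a rough illustration (this is not the SDK's own API), the sketch below packs a position and a unit quaternion into a 4x4 camera-to-world matrix, the same kind of matrix the conversion script later in this article writes out as `transform_matrix`. The helper name `pose_to_matrix` and the `(w, x, y, z)` quaternion order are assumptions made for this example.

```python
import math


def pose_to_matrix(position, quaternion_wxyz):
    """Build a 4x4 homogeneous transform (nested lists) from a pose.

    Hypothetical helper for illustration only. The quaternion is assumed
    to be given in (w, x, y, z) order and is normalised before use.
    """
    w, x, y, z = quaternion_wxyz
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    px, py, pz = position
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), px],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), py],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), pz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# A device one metre along x, rotated 90 degrees about the z axis.
half_angle = math.pi / 4
pose = pose_to_matrix(
    (1.0, 0.0, 0.0),
    (math.cos(half_angle), 0.0, 0.0, math.sin(half_angle)),
)
for row in pose:
    print([round(value, 3) for value in row])
```

The upper-left 3x3 block holds the rotation and the last column holds the position, which is why the script below reads the camera position from column 3 and the viewing direction from column 2.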

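Creating the dataset

This article uses the LiDAR of an iPhone 15 Pro Max to capture the scene dataset.

The official SDK supports other ways of scanning a scene as well:

1. iPhone (with or without LiDAR)

2. Android (with or without a ToF sensor), for example the Samsung Note/S series

3. OAK-D camera

4. Intel RealSense D455/D435i

5. Microsoft Azure Kinect DK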

扫描

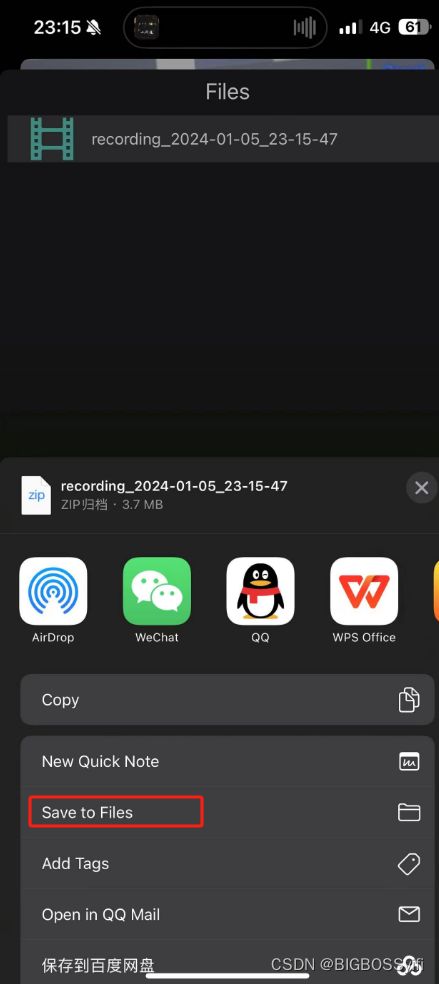

开始拍摄,结束后将扫描完成的视频导入到电脑中(注意:文件可能较大)

Processing the dataset

Parsing the dataset

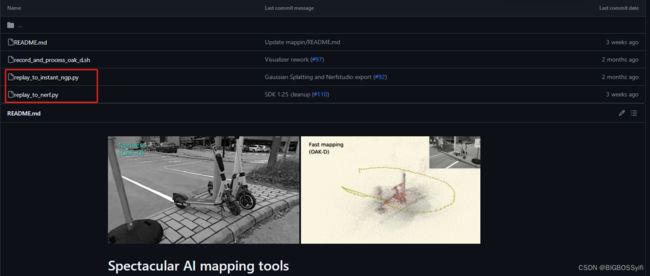

Download the SDK examples: https://github.com/SpectacularAI/sdk-examples.git

For mapping in Python, only two files are involved:

replay_to_instant_ngp.py

    import argparse
    import spectacularAI
    import cv2
    import json
    import os
    import shutil
    import math
    import numpy as np

    parser = argparse.ArgumentParser()
    parser.add_argument("input", help="Path to folder with session to process")
    parser.add_argument("output", help="Path to output folder")
    parser.add_argument("--scale", help="Scene scale, exponent of 2", type=int, default=128)
    parser.add_argument("--preview", help="Show latest primary image as a preview", action="store_true")
    args = parser.parse_args()

    # Globals
    savedKeyFrames = {}
    frameWidth = -1
    frameHeight = -1
    intrinsics = None

    TRANSFORM_CAM = np.array([
        [1,0,0,0],
        [0,-1,0,0],
        [0,0,-1,0],
        [0,0,0,1],
    ])

    TRANSFORM_WORLD = np.array([
        [0,1,0,0],
        [-1,0,0,0],
        [0,0,1,0],
        [0,0,0,1],
    ])

    def closestPointBetweenTwoLines(oa, da, ob, db):
        normal = np.cross(da, db)
        denom = np.linalg.norm(normal)**2
        t = ob - oa
        ta = np.linalg.det([t, db, normal]) / (denom + 1e-10)
        tb = np.linalg.det([t, da, normal]) / (denom + 1e-10)
        if ta > 0: ta = 0
        if tb > 0: tb = 0
        return ((oa + ta * da + ob + tb * db) * 0.5, denom)

    def resizeToUnitCube(frames):
        weight = 0.0
        centerPos = np.array([0.0, 0.0, 0.0])
        for f in frames:
            mf = f["transform_matrix"][0:3,:]
            for g in frames:
                mg = g["transform_matrix"][0:3,:]
                p, w = closestPointBetweenTwoLines(mf[:,3], mf[:,2], mg[:,3], mg[:,2])
                if w > 0.00001:
                    centerPos += p * w
                    weight += w
        if weight > 0.0: centerPos /= weight

        scale = 0.
        for f in frames:
            f["transform_matrix"][0:3,3] -= centerPos
            scale += np.linalg.norm(f["transform_matrix"][0:3,3])

        scale = 4.0 / (scale / len(frames))
        for f in frames: f["transform_matrix"][0:3,3] *= scale

    def sharpness(path):
        img = cv2.imread(path)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        return cv2.Laplacian(img, cv2.CV_64F).var()

    def onMappingOutput(output):
        global savedKeyFrames
        global frameWidth
        global frameHeight
        global intrinsics

        if not output.finalMap:
            # New frames, let's save the images to disk
            for frameId in output.updatedKeyFrames:
                keyFrame = output.map.keyFrames.get(frameId)
                if not keyFrame or savedKeyFrames.get(keyFrame):
                    continue
                savedKeyFrames[keyFrame] = True
                frameSet = keyFrame.frameSet
                if not frameSet.rgbFrame or not frameSet.rgbFrame.image:
                    continue

                if frameWidth < 0:
                    frameWidth = frameSet.rgbFrame.image.getWidth()
                    frameHeight = frameSet.rgbFrame.image.getHeight()

                undistortedFrame = frameSet.getUndistortedFrame(frameSet.rgbFrame)
                if intrinsics is None: intrinsics = undistortedFrame.cameraPose.camera.getIntrinsicMatrix()
                img = undistortedFrame.image.toArray()
                bgrImage = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
                fileName = args.output + "/tmp/frame_" + f'{frameId:05}' + ".png"
                cv2.imwrite(fileName, bgrImage)
                if args.preview:
                    cv2.imshow("Frame", bgrImage)
                    cv2.setWindowTitle("Frame", "Frame #{}".format(frameId))
                    cv2.waitKey(1)
        else:
            # Final optimized poses
            frames = []
            index = 0

            up = np.zeros(3)
            for frameId in output.map.keyFrames:
                keyFrame = output.map.keyFrames.get(frameId)
                oldImgName = args.output + "/tmp/frame_" + f'{frameId:05}' + ".png"
                newImgName = args.output + "/images/frame_" + f'{index:05}' + ".png"
                os.rename(oldImgName, newImgName)
                cameraPose = keyFrame.frameSet.rgbFrame.cameraPose

                # Converts Spectacular AI camera to coordinate system used by instant-ngp
                cameraToWorld = np.matmul(TRANSFORM_WORLD, np.matmul(cameraPose.getCameraToWorldMatrix(), TRANSFORM_CAM))
                up += cameraToWorld[0:3,1]
                frame = {
                    "file_path": "images/frame_" + f'{index:05}' + ".png",
                    "sharpness": sharpness(newImgName),
                    "transform_matrix": cameraToWorld
                }
                frames.append(frame)
                index += 1

            resizeToUnitCube(frames)

            for f in frames: f["transform_matrix"] = f["transform_matrix"].tolist()

            if frameWidth < 0 or frameHeight < 0: raise Exception("Unable get image dimensions, zero images received?")

            fl_x = intrinsics[0][0]
            fl_y = intrinsics[1][1]
            cx = intrinsics[0][2]
            cy = intrinsics[1][2]
            angle_x = math.atan(frameWidth / (fl_x * 2)) * 2
            angle_y = math.atan(frameHeight / (fl_y * 2)) * 2

            transformationsJson = {
                "camera_angle_x": angle_x,
                "camera_angle_y": angle_y,
                "fl_x": fl_x,
                "fl_y": fl_y,
                "k1": 0.0,
                "k2": 0.0,
                "p1": 0.0,
                "p2": 0.0,
                "cx": cx,
                "cy": cy,
                "w": frameWidth,
                "h": frameHeight,
                "aabb_scale": args.scale,
                "frames": frames
            }

            with open(args.output + "/transformations.json", "w") as outFile:
                json.dump(transformationsJson, outFile, indent=2)

    def main():
        os.makedirs(args.output + "/images", exist_ok=True)
        os.makedirs(args.output + "/tmp", exist_ok=True)

        print("Processing")
        replay = spectacularAI.Replay(args.input, mapperCallback = onMappingOutput, configuration = {
            "globalBABeforeSave": True,                # Refine final map poses using bundle adjustment
            "maxMapSize": 0,                           # Unlimited map size
            "keyframeDecisionDistanceThreshold": 0.1   # Minimum distance between keyframes
        })

        replay.runReplay()

        shutil.rmtree(args.output + "/tmp")

        print("Done!")
        print("")
        print("You can now run instant-ngp nerfs using following command:")
        print("")
        print(" ./build/testbed --mode nerf --scene {}/transformations.json".format(args.output))

    if __name__ == '__main__':
        main()
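
The script derives the `camera_angle_x` and `camera_angle_y` values written to `transformations.json` from the focal lengths in the intrinsic matrix, using angle = 2 * atan(size / (2 * f)). The sketch below isolates that formula so it can be sanity-checked on its own. The function name `field_of_view` and the example numbers are made up for illustration; they are not part of the script or the SDK.

```python
import math


def field_of_view(size_px, focal_px):
    """Full field of view in radians along an image side of size_px pixels.

    Mirrors the expression used in the script above:
    math.atan(size / (focal * 2)) * 2
    """
    return 2.0 * math.atan(size_px / (2.0 * focal_px))


# Hypothetical example values: a 1920x1440 frame, focal length 1500 px.
angle_x = field_of_view(1920, 1500.0)
angle_y = field_of_view(1440, 1500.0)
print(f"horizontal FOV: {math.degrees(angle_x):.1f} deg")
print(f"vertical FOV:   {math.degrees(angle_y):.1f} deg")
```

A quick plausibility check: when the focal length equals half the image width, the horizontal field of view is exactly 90 degrees.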

replay_to_nerf.py

    #!/usr/bin/env python
    """
    Post-process data in Spectacular AI format and convert it to input
    for NeRF or Gaussian Splatting methods.
    """

    DEPRECATION_NOTE = """
    Note: the replay_to_nerf.py script has been replaced by the sai-cli
    tool in Spectacular AI Python package v1.25. Prefer

        sai-cli process [args]

    as a drop-in replacement of

        python replay_to_nerf.py [args]
    .
    """

    # The code is still available and usable as a stand-alone script, see:
    # https://github.com/SpectacularAI/sdk/blob/main/python/cli/process/process.py

    import_success = False
    try:
        from spectacularAI.cli.process.process import process, define_args
        import_success = True
    except ImportError as e:
        print(e)

    if not import_success:
        msg = """

        Unable to import new Spectacular AI CLI, please update to SDK version >= 1.25"

        """
        raise RuntimeError(msg)

    if __name__ == '__main__':
        import argparse
        parser = argparse.ArgumentParser(
            description=__doc__,
            epilog=DEPRECATION_NOTE,
            formatter_class=argparse.RawDescriptionHelpFormatter)
        define_args(parser)
        print(DEPRECATION_NOTE)
        process(parser.parse_args())

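Python environment

The environment used for this article contains the following packages (name and version):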

Babel 2.14.0

ConfigArgParse 1.7

GitPython 3.1.40

Jinja2 3.1.2

Markdown 3.4.1

MarkupSafe 2.1.2

PyAudio 0.2.13

PyOpenGL 3.1.7

PySocks 1.7.1

PyWavelets 1.4.1

Rtree 1.1.0

Send2Trash 1.8.2

Shapely 1.8.5.post1

absl-py 1.4.0

accelerate 0.16.0

addict 2.4.0

ansi2html 1.9.1

anyio 4.2.0

appdirs 1.4.4

argon2-cffi 23.1.0

argon2-cffi-bindings 21.2.0

arrow 1.3.0

asttokens 2.4.1

async-lru 2.0.4

attrs 23.2.0

av 11.0.0

beautifulsoup4 4.11.2

bidict 0.22.1

bleach 6.1.0

blinker 1.7.0

boltons 23.1.1

cachetools 5.3.0

certifi 2022.12.7

cffi 1.16.0

chardet 5.2.0

charset-normalizer 3.0.1

clean-fid 0.1.35

click 8.1.7

clip 1.0

clip-anytorch 2.5.0

colorama 0.4.6

colorlog 6.8.0

comet-ml 3.35.5

comm 0.2.1

configobj 5.0.8

contourpy 1.2.0

cryptography 41.0.7

cycler 0.12.1

dash 2.14.2

dash-core-components 2.0.0

dash-html-components 2.0.0

dash-table 5.0.0

debugpy 1.8.0

decorator 5.1.1

defusedxml 0.7.1

depthai 2.24.0.0

descartes 1.1.0

docker-pycreds 0.4.0

docstring-parser 0.15

dulwich 0.21.7

einops 0.6.0

embreex 2.17.7.post4

everett 3.1.0

exceptiongroup 1.2.0

executing 2.0.1

fastjsonschema 2.19.1

ffmpeg 1.4

filelock 3.9.0

fire 0.5.0

flask 3.0.0

fonttools 4.47.0

fqdn 1.5.1

ftfy 6.1.1

future 0.18.3

gdown 4.7.1

gitdb 4.0.11

google-auth 2.16.1

google-auth-oauthlib 1.2.0

grpcio 1.51.3

gsplat 0.1.0

h11 0.14.0

h5py 3.10.0

httpcore 1.0.2

httpx 0.26.0

idna 3.4

imageio 2.25.1

importlib-metadata 7.0.1

ipykernel 6.28.0

ipython 8.19.0

ipywidgets 8.1.1

isoduration 20.11.0

itsdangerous 2.1.2

jaxtyping 0.2.25

jedi 0.19.1

joblib 1.3.2

json5 0.9.14

jsonmerge 1.9.0

jsonpointer 2.4

jsonschema 4.20.0

jsonschema-specifications 2023.12.1

jupyter-client 8.6.0

jupyter-core 5.7.0

jupyter-events 0.9.0

jupyter-lsp 2.2.1

jupyter-server 2.12.2

jupyter-server-terminals 0.5.1

jupyterlab 4.0.10

jupyterlab-pygments 0.3.0

jupyterlab-server 2.25.2

jupyterlab-widgets 3.0.9

k-diffusion 0.0.14

kiwisolver 1.4.5

kornia 0.6.10

lazy-loader 0.1

lightning-utilities 0.10.0

lmdb 1.4.0

lpips 0.1.4

lxml 5.0.0

mapbox-earcut 1.0.1

markdown-it-py 3.0.0

matplotlib 3.5.3

matplotlib-inline 0.1.6

mdurl 0.1.2

mediapy 1.2.0

mistune 3.0.2

mpmath 1.3.0

msgpack 1.0.7

msgpack-numpy 0.4.8

msvc-runtime 14.34.31931

nbclient 0.9.0

nbconvert 7.14.0

nbformat 5.9.2

nerfacc 0.5.2

nerfstudio 0.3.4

nest-asyncio 1.5.8

networkx 3.0

ninja 1.11.1.1

nodeenv 1.8.0

notebook-shim 0.2.3

numpy 1.24.2

nuscenes-devkit 1.1.11

oauthlib 3.2.2

open3d 0.18.0

opencv-python 4.6.0.66

overrides 7.4.0

packaging 23.0

pandas 2.1.4

pandocfilters 1.5.0

parso 0.8.3

pathtools 0.1.2

pillow 9.4.0

pip 23.3.2

platformdirs 4.1.0

plotly 5.18.0

prometheus-client 0.19.0

prompt-toolkit 3.0.43

protobuf 3.20.3

psutil 5.9.7

pure-eval 0.2.2

pyarrow 14.0.2

pyasn1 0.4.8

pyasn1-modules 0.2.8

pycocotools 2.0.7

pycollada 0.7.2

pycparser 2.21

pygame 2.5.2

pygments 2.17.2

pyliblzfse 0.4.1

pymeshlab 2023.12

pyngrok 7.0.5

pyparsing 3.1.1

pyquaternion 0.9.9

python-box 6.1.0

python-dateutil 2.8.2

python-engineio 4.8.1

python-json-logger 2.0.7

python-socketio 5.10.0

pytorch-msssim 1.0.0

pytz 2023.3.post1

pywin32 306

pywinpty 2.0.12

pyyaml 6.0

pyzmq 25.1.2

rawpy 0.19.0

referencing 0.32.0

regex 2022.10.31

requests 2.31.0

requests-oauthlib 1.3.1

requests-toolbelt 1.0.0

resize-right 0.0.2

retrying 1.3.4

rfc3339-validator 0.1.4

rfc3986-validator 0.1.1

rich 13.7.0

rpds-py 0.16.2

rsa 4.9

scikit-image 0.20.0rc8

scikit-learn 1.3.2

scipy 1.10.1

semantic-version 2.10.0

sentry-sdk 1.39.1

setproctitle 1.3.2

setuptools 69.0.3

shtab 1.6.5

simple-websocket 1.0.0

simplejson 3.19.2

six 1.16.0

smmap 5.0.1

sniffio 1.3.0

soupsieve 2.4

spectacularAI 1.26.2

splines 0.3.0

stack-data 0.6.3

svg.path 6.3

sympy 1.12

tb-nightly 2.13.0a20230221

tenacity 8.2.3

tensorboard 2.15.1

tensorboard-data-server 0.7.0

tensorboard-plugin-wit 1.8.1

termcolor 2.4.0

terminado 0.18.0

threadpoolctl 3.2.0

tifffile 2023.2.3

timm 0.6.7

tinycss2 1.2.1

tomli 2.0.1

torch 1.13.1+cu116

torch-fidelity 0.3.0

torchdiffeq 0.2.3

torchmetrics 1.2.1

torchsde 0.2.5

torchvision 0.14.1+cu116

tornado 6.4

tqdm 4.64.1

traitlets 5.14.1

trampoline 0.1.2

trimesh 4.0.8

typeguard 2.13.3

types-python-dateutil 2.8.19.14

typing-extensions 4.5.0

tyro 0.6.3

tzdata 2023.4

uri-template 1.3.0

urllib3 1.26.14

viser 0.1.17

wandb 0.13.10

wcwidth 0.2.6

webcolors 1.13

webencodings 0.5.1

websocket-client 1.3.3

websockets 12.0

werkzeug 3.0.1

wheel 0.38.4

widgetsnbextension 4.0.9

wrapt 1.16.0

wsproto 1.2.0

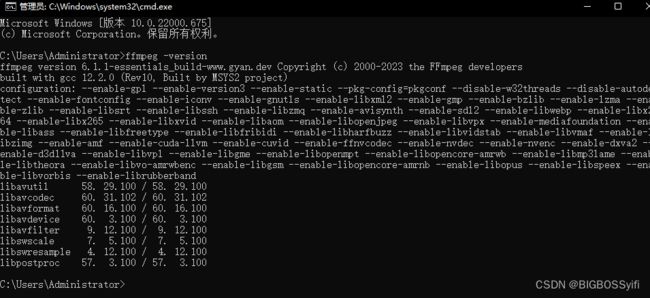

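Windows FFmpeg setup

The project requires FFmpeg.

Here I installed it with pip directly in the project's Python environment:

    pip install ffmpeg

Note that, as far as I know, this pip package is only a Python wrapper and does not ship the FFmpeg executable itself. If the `ffmpeg` command is not found later, install a Windows build of FFmpeg and add its bin directory to PATH. There are plenty of tutorials for this online, so I will not go into detail here.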
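
Before processing a recording it can save time to confirm that an `ffmpeg` executable is actually reachable from the Python environment. The following is a minimal sketch using only the standard library; the helper names `find_ffmpeg` and `ffmpeg_version_line` are made up for this example.

```python
import shutil
import subprocess


def find_ffmpeg():
    """Return the full path of the ffmpeg executable on PATH, or None."""
    return shutil.which("ffmpeg")


def ffmpeg_version_line(executable):
    """Return the first line printed by `ffmpeg -version` (empty if none)."""
    result = subprocess.run(
        [executable, "-version"],
        capture_output=True, text=True, check=True,
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


if __name__ == "__main__":
    path = find_ffmpeg()
    if path is None:
        print("ffmpeg was not found on PATH")
    else:
        print("found:", path)
        print(ffmpeg_version_line(path))
```

If the script reports that nothing was found, the PATH setting is the first thing to check.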

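Processing the recording

The example below uses a dataset recorded with an iPhone.

Run the script on the extracted recording, replacing the input and output paths with those of your own dataset:

    python replay_to_nerf.py E:\SpectacleMapping\sdk-examples-main\python\mapping\recording_2024-01-05_12-24-00 E:\SpectacleMapping\sdk-examples-main\python\mapping\room --preview3d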

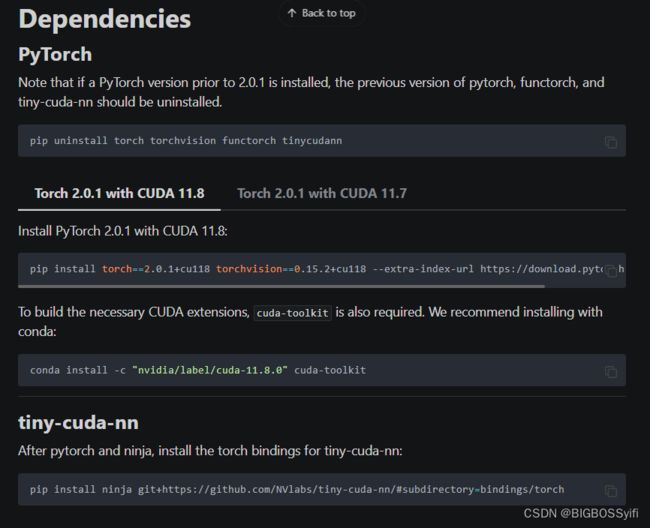

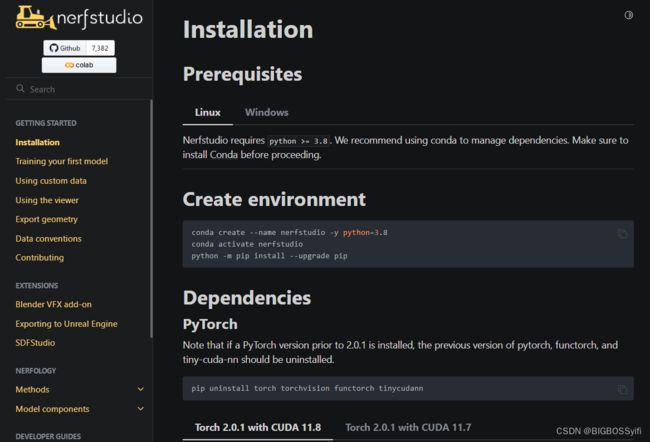
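
The deprecation note inside `replay_to_nerf.py` says that `sai-cli process [args]` is a drop-in replacement for the script. Assuming that holds for the installed SDK version, the same step can be driven from Python as sketched below. The helper names and the placeholder paths are hypothetical; only the `--preview3d` flag is taken from the command shown above.

```python
import subprocess
from pathlib import Path


def build_process_command(recording_dir, output_dir, preview3d=False):
    """Assemble the sai-cli call as an argument list (no shell quoting needed)."""
    command = ["sai-cli", "process", str(recording_dir), str(output_dir)]
    if preview3d:
        command.append("--preview3d")
    return command


def run_process(recording_dir, output_dir, preview3d=False):
    """Run the conversion, failing early if the recording folder is missing."""
    recording = Path(recording_dir)
    if not recording.is_dir():
        raise FileNotFoundError(f"recording folder not found: {recording}")
    subprocess.run(
        build_process_command(recording, output_dir, preview3d),
        check=True,  # raise CalledProcessError on a non-zero exit code
    )


if __name__ == "__main__":
    # Placeholder paths: replace them with your own recording and output folder.
    print(" ".join(build_process_command("my_recording", "my_output", preview3d=True)))
```

Passing the arguments as a list avoids quoting problems with Windows paths that contain spaces or backslashes.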

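Installing Nerfstudio

CUDA is required

Nerfstudio installation guide: https://docs.nerf.studio/quickstart/installation.html

Install the dependencies in order

pip install nerfstudio

    pip install nerfstudio

The Nerfstudio documentation also describes installing from source, which gives an editable checkout:

    git clone https://github.com/nerfstudio-project/nerfstudio.git
    cd nerfstudio
    pip install --upgrade pip setuptools
    pip install -e .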

Nerfstudio results

Note: the GPU needs at least 6 GB of VRAM. Training is very demanding on GPU memory!
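
To check a machine against the CUDA and memory requirements mentioned in this article, something like the following sketch can be used. It relies on PyTorch's `torch.cuda.is_available()` and `torch.cuda.get_device_properties()`. The helper names and the decision to count 6 GB as 6 * 10^9 bytes are assumptions made for this example.

```python
import importlib.util

MIN_VRAM_GB = 6  # guideline quoted in this article


def has_enough_vram(total_bytes, min_gb=MIN_VRAM_GB):
    """True if total_bytes reaches min_gb, counted in decimal gigabytes."""
    return total_bytes >= min_gb * 10 ** 9


def describe_gpu():
    """Summarise the first CUDA device visible to PyTorch, if any."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this environment"
    import torch  # imported lazily so the script also runs without PyTorch

    if not torch.cuda.is_available():
        return "PyTorch cannot see a CUDA device"
    props = torch.cuda.get_device_properties(0)
    verdict = "meets" if has_enough_vram(props.total_memory) else "is below"
    size_gb = props.total_memory / 10 ** 9
    return f"{props.name}: {size_gb:.1f} GB, {verdict} the {MIN_VRAM_GB} GB guideline"


if __name__ == "__main__":
    print(describe_gpu())
```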

Starting training

Parameters

    ns-train nerfacto --data <absolute path to the dataset> --vis viewer --max-num-iterations 50000
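
When training several scenes it can be convenient to assemble this command in a script. The sketch below builds exactly the flags used above (`--data`, `--vis viewer`, `--max-num-iterations`). The function name `build_train_command` and the placeholder dataset path are hypothetical.

```python
import subprocess


def build_train_command(data_dir, max_iterations=50000, method="nerfacto"):
    """Assemble the ns-train call shown in this article as an argument list."""
    return [
        "ns-train", method,
        "--data", str(data_dir),
        "--vis", "viewer",
        "--max-num-iterations", str(max_iterations),
    ]


if __name__ == "__main__":
    # Placeholder path: point this at the output folder of the processing step.
    command = build_train_command("path/to/dataset")
    print(" ".join(command))
    # To actually start training, uncomment the next line:
    # subprocess.run(command, check=True)
```

Lowering `max_iterations` is a simple way to get a quick first look before committing to a full run.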

Live training viewer

To watch training live, open the following URL in a browser: https://viewer.nerf.studio/versions/23-05-15-1/?websocket_url=ws://localhost:7007
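
If the page stays empty, a first thing to check is whether anything is listening on the websocket port from the URL. The sketch below rebuilds the URL from its parts and probes the port with a plain TCP connection. The helper names are made up for this example, and the default version string and port are simply copied from the URL above.

```python
import socket


def viewer_url(host="localhost", port=7007, version="23-05-15-1"):
    """Rebuild the viewer URL used in this article from its parts."""
    return (
        f"https://viewer.nerf.studio/versions/{version}/"
        f"?websocket_url=ws://{host}:{port}"
    )


def is_port_open(host="localhost", port=7007, timeout=1.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print(viewer_url())
    print("training server reachable:", is_port_open())
```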