Struggling to sync MySQL data to Elasticsearch? Give Canal a try!

Syncing MySQL Data to Elasticsearch with Canal

This chapter describes how to use the Canal middleware to sync MySQL data to Elasticsearch.

Spring Boot 2.x practice examples (code repository)

Preface

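I recently received a new requirement: implement "nearby" and same-city search, plus search over massive amounts of data. The project uses MySQL as its business database, and implementing these features in MySQL turns out to be inefficient, with no good support for sorting results by distance. After some research, I found that Elasticsearch, a distributed search engine, handles these features efficiently. Elasticsearch can therefore serve as the query database, separating reads from writes: this relieves query pressure on MySQL and copes with complex queries over large data sets. The remaining problem is how to sync MySQL changes (inserts, updates, and deletes) to Elasticsearch in real time. The industry currently has two mainstream solutions: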

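- Logstash: Logstash is an open-source data collection engine that consolidates data from different sources into a central location. With the JDBC input plugin for MySQL and the Elasticsearch output plugin, MySQL data can be synced to ES with little effort.

- Canal: Canal is an open-source tool from Alibaba for syncing incremental MySQL data. It parses the MySQL binlog to obtain incremental changes and delivers them to a target such as ES.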

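This article uses Canal for the sync, mainly because the tutorials available online vary widely in quality and leave many traps for readers. I have already stepped into those traps for you. A Logstash sync tutorial will follow, so stay tuned! Now let's get hands-on together!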

Environment Preparation

Tip: Canal does not currently support Elasticsearch 8, and Canal 1.1.6 is not compatible with JDK 8.

| 工具 | 版本 | Linux(ARM) | Windows | 备注 |

|---|---|---|---|---|

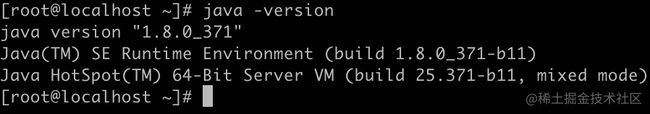

| JDK | 1.8 | jdk-8u371-linux-aarch64.tar.gz | jdk-8u371-windows-x64.exe | |

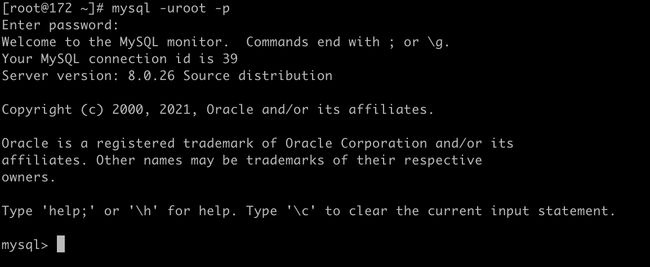

| MySQL | 8.0.26 | mysql-community-server-8.0.26-1.el7.aarch64.rpm | mysql-installer-community-8.0.26.0.msi | 支持MySQL5.7 |

| Elasticsearch | 7.17.11 | elasticsearch-7.17.11-linux-aarch64.tar.gz | elasticsearch-7.17.11-windows-x86_64 | 不支持ES8 |

| Canal | canal-1.1.7-alpha-2 | canal-1.1.7-alpha-2 | canal-1.1.7-alpha-2 |

JDK

MySQL

Enable Binlog Writing

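Note: MySQL 8 and Alibaba Cloud RDS for MySQL have the binlog enabled by default, and their accounts have the binlog dump permission by default, so you can skip this step.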

For a self-managed MySQL, first enable binlog writing and set binlog-format to ROW:

```shell
vi /etc/my.cnf
```

```ini
[mysqld]
# Enable the binlog
log-bin=mysql-bin
# Use ROW format
binlog-format=ROW
# Required for MySQL replication; must not collide with Canal's slaveId
server_id=1
```

Restart MySQL

```shell
systemctl restart mysqld
```

Verify that the binlog is enabled

```text
mysql> show variables like 'log_bin%';
+---------------------------------+-----------------------------+
| Variable_name                   | Value                       |
+---------------------------------+-----------------------------+
| log_bin                         | ON                          |
| log_bin_basename                | /var/lib/mysql/binlog       |
| log_bin_index                   | /var/lib/mysql/binlog.index |
| log_bin_trust_function_creators | OFF                         |
| log_bin_use_v1_row_events       | OFF                         |
+---------------------------------+-----------------------------+

mysql> show variables like 'binlog_format%';
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| binlog_format | ROW   |
+---------------+-------+
```
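If you would rather script this check (for example in a provisioning job), the sketch below applies the same two conditions to the variable rows. It assumes you have already fetched the rows of the two SHOW VARIABLES statements with a MySQL client library of your choice; the function name `check_binlog_settings` is hypothetical.

```python
from typing import Dict, List


def check_binlog_settings(variables: Dict[str, str]) -> List[str]:
    """Return the problems found in SHOW VARIABLES output (empty list = OK).

    `variables` maps Variable_name to Value, e.g. dict(cursor.fetchall())
    after running the two SHOW VARIABLES statements above.
    """
    problems = []
    # Canal reads the binlog, so it must be switched on.
    if variables.get("log_bin", "").upper() != "ON":
        problems.append("log_bin is not ON: the binlog is disabled")
    # Canal needs row-based events, which carry the changed column values.
    if variables.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog_format is not ROW")
    return problems


if __name__ == "__main__":
    rows = [("log_bin", "ON"), ("binlog_format", "ROW")]
    print(check_binlog_settings(dict(rows)))  # prints []
```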

Elasticsearch

Windows users can simply extract the archive and run elasticsearch.bat in the bin folder.

Extract

```shell
tar -zxvf elasticsearch-7.17.11-linux-aarch64.tar.gz -C /usr/software
```

Create the data directory

```shell
cd /usr/software/elasticsearch-7.17.11/
mkdir data
```

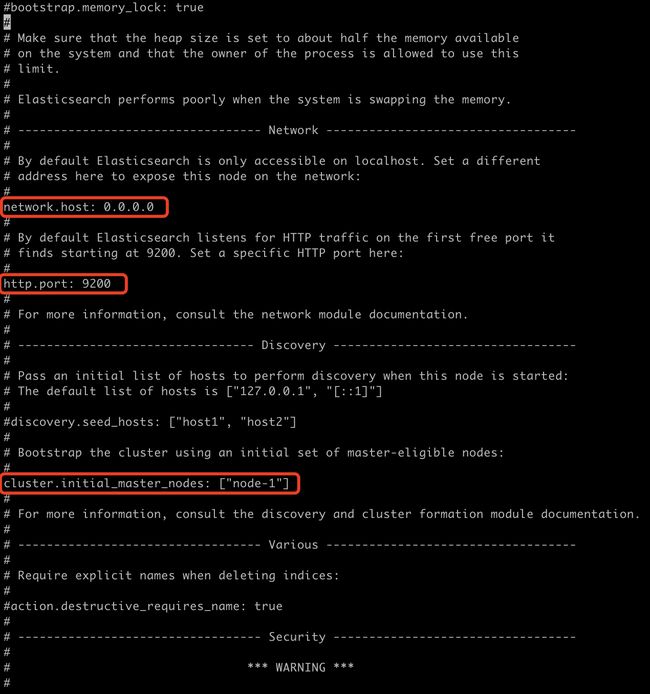

Edit the configuration file

```shell
cd /usr/software/elasticsearch-7.17.11/
vi config/elasticsearch.yml
```

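In the configuration file, uncomment the relevant settings. The main changes are:

```yaml
# Cluster name
cluster.name: xxx
# Node name
node.name: node-1
# Data and log directories
path.data: /usr/software/elasticsearch-7.17.11/data
path.logs: /usr/software/elasticsearch-7.17.11/logs
# Accept connections from any host
network.host: 0.0.0.0
# Default HTTP port
http.port: 9200
# Initial master node(s)
cluster.initial_master_nodes: ["node-1"]
```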

Note: remember the cluster.name value; you will need it later when configuring Canal!

Open ports 9200 (HTTP) and 9300 (transport)

```shell
firewall-cmd --add-port=9300/tcp --permanent
firewall-cmd --add-port=9200/tcp --permanent
firewall-cmd --reload
systemctl restart firewalld
```

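User permissions

Note: on Linux, Elasticsearch refuses to start as root, so create a dedicated user to run it.

```shell
# Create the user
useradd elastic
# Give it ownership of the installation directory
chown -R elastic /usr/software/elasticsearch-7.17.11/
```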

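Insufficient memory

Note: if the server has plenty of memory, you can skip this step; otherwise Elasticsearch reports an insufficient-memory error at startup!

```shell
cd /usr/software/elasticsearch-7.17.11/
vi config/jvm.options
```

```text
# Set the heap size
-Xms256m
-Xmx256m
```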
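Keep -Xms and -Xmx equal, as above: because network.host is set to 0.0.0.0, Elasticsearch runs its production bootstrap checks, and one of them fails when the initial and maximum heap sizes differ. The sketch below reads jvm.options-style lines and checks this. It deliberately ignores the version-prefixed lines (such as `8-13:...`) that jvm.options also allows, and the function names are hypothetical.

```python
import re

# Multipliers for the size suffixes the JVM accepts on -Xms/-Xmx.
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
_HEAP_FLAG = re.compile(r"-Xm([sx])(\d+)([kKmMgG]?)")


def parse_heap_flags(lines):
    """Return {'Xms': bytes, 'Xmx': bytes} for the heap flags found in `lines`."""
    heap = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments carry no settings
        match = _HEAP_FLAG.fullmatch(line)
        if match:
            which, number, unit = match.groups()
            heap["Xm" + which] = int(number) * _UNITS[unit.lower()]
    return heap


def heap_sizes_equal(lines):
    """True when both flags are present and set to the same size."""
    heap = parse_heap_flags(lines)
    return "Xms" in heap and "Xmx" in heap and heap["Xms"] == heap["Xmx"]


if __name__ == "__main__":
    options = ["# Set the heap size", "-Xms256m", "-Xmx256m"]
    print(parse_heap_flags(options))  # {'Xms': 268435456, 'Xmx': 268435456}
    print(heap_sizes_equal(options))  # True
```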

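Other issues

- max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

```shell
vi /etc/sysctl.conf
```

Append the following line at the end of the file, then apply it:

```text
vm.max_map_count=655360
```

```shell
sysctl -p
```

- max file descriptors [4096] for elasticsearch process is too low

```shell
vi /etc/security/limits.conf
```

Append the following lines at the end of the file. Elasticsearch also needs at least 4096 threads, so the nproc limits must not be lower; the new limits apply to new login sessions of the elastic user.

```text
* soft nofile 65536
* hard nofile 131072
* soft nproc 4096
* hard nproc 4096
```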
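These errors come from Elasticsearch's bootstrap checks. The thresholds used below are the minimums Elasticsearch 7 asks for in these messages: vm.max_map_count of at least 262144, at least 65535 open file descriptors, and at least 4096 threads. A sketch to compare the current values before starting the node; reading them is Linux-only (it reads /proc and uses the Unix-only resource module), and the function names are hypothetical.

```python
# Minimums enforced by Elasticsearch's bootstrap checks.
MIN_MAX_MAP_COUNT = 262144
MIN_OPEN_FILES = 65535
MIN_THREADS = 4096


def check_es_limits(max_map_count, open_files, threads):
    """Return the limits that are too low; unlimited values may be float('inf')."""
    problems = []
    if max_map_count < MIN_MAX_MAP_COUNT:
        problems.append(f"vm.max_map_count {max_map_count} < {MIN_MAX_MAP_COUNT}")
    if open_files < MIN_OPEN_FILES:
        problems.append(f"open files {open_files} < {MIN_OPEN_FILES}")
    if threads < MIN_THREADS:
        problems.append(f"max threads {threads} < {MIN_THREADS}")
    return problems


def read_current_limits():
    """Read the current soft limits; run it as the elastic user in a new session."""
    import resource  # Unix-only, so imported lazily

    def soft(limit):
        value, _hard = resource.getrlimit(limit)
        return float("inf") if value == resource.RLIM_INFINITY else value

    with open("/proc/sys/vm/max_map_count") as f:
        max_map_count = int(f.read().strip())
    return max_map_count, soft(resource.RLIMIT_NOFILE), soft(resource.RLIMIT_NPROC)
```

On the server, `print(check_es_limits(*read_current_limits()))` should print an empty list once both files have been updated.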

Start in the background

```shell
su elastic
cd /usr/software/elasticsearch-7.17.11/bin/
./elasticsearch -d
```
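Once the node is up, GET http://172.16.138.130:9200/ returns a small JSON document with the cluster name and version. Since Canal does not support Elasticsearch 8, a scripted check of that response can catch a wrong version before you configure the adapter. A standard-library sketch; the function names are hypothetical, and the sample response is trimmed to the fields the code reads.

```python
import json
import urllib.request


def fetch_root_info(es_url="http://172.16.138.130:9200", timeout=5):
    """GET the Elasticsearch root endpoint and return its body as text."""
    with urllib.request.urlopen(es_url.rstrip("/") + "/", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def summarize(root_body):
    """Return (cluster_name, major_version) from the root endpoint's JSON."""
    info = json.loads(root_body)
    major = int(info["version"]["number"].split(".")[0])
    return info["cluster_name"], major


def usable_with_canal(root_body):
    # Canal ships es6 and es7 adapters; Elasticsearch 8 is not supported.
    return summarize(root_body)[1] in (6, 7)


if __name__ == "__main__":
    sample = '{"cluster_name": "my-application", "version": {"number": "7.17.11"}}'
    print(summarize(sample))          # ('my-application', 7)
    print(usable_with_canal(sample))  # True
```

Against a live node, pass `fetch_root_info()` instead of the sample string.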

Visualization plugin

Install Multi Elasticsearch Head from the Chrome Web Store.

Click the extension icon in the toolbar of your web browser.

Note that you don't need to enable CORS with this method.

Basic Configuration

Note: this tutorial uses the IP address 172.16.138.130; replace it with your own!

canal-deployer

Download

```shell
wget https://github.com/alibaba/canal/releases/download/canal-1.1.7-alpha-2/canal.deployer-1.1.7-SNAPSHOT.tar.gz
```

Extract

```shell
mkdir -p /usr/software/canal-deployer/
tar zxvf canal.deployer-1.1.7-SNAPSHOT.tar.gz -C /usr/software/canal-deployer/
```

Edit the configuration files

instance.properties

```shell
cd /usr/software/canal-deployer/
vi conf/example/instance.properties
```

```properties
#################################################
## mysql serverId , v1.0.26+ will autoGen
# canal.instance.mysql.slaveId=0

# enable gtid use true/false
canal.instance.gtidon=false

# position info
canal.instance.master.address=172.16.138.130:3306
canal.instance.master.journal.name=
canal.instance.master.position=
canal.instance.master.timestamp=
canal.instance.master.gtid=

# rds oss binlog
canal.instance.rds.accesskey=
canal.instance.rds.secretkey=
canal.instance.rds.instanceId=

# table meta tsdb info
canal.instance.tsdb.enable=true
#canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb
#canal.instance.tsdb.dbUsername=canal
#canal.instance.tsdb.dbPassword=canal

#canal.instance.standby.address =
#canal.instance.standby.journal.name =
#canal.instance.standby.position =
#canal.instance.standby.timestamp =
#canal.instance.standby.gtid=

# username/password
canal.instance.dbUsername=root
canal.instance.dbPassword=123456
canal.instance.connectionCharset = UTF-8
# enable druid Decrypt database password
canal.instance.enableDruid=false
#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

# table regex
canal.instance.filter.regex=.*\\..*
# table black regex
canal.instance.filter.black.regex=mysql\\.slave_.*
# table field filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
#canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch
# table field black filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
#canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch

# mq config
canal.mq.topic=example
# dynamic topic route by schema or table regex
#canal.mq.dynamicTopic=mytest1.user,topic2:mytest2\\..*,.*\\..*
canal.mq.partition=0
# hash partition config
#canal.mq.enableDynamicQueuePartition=false
#canal.mq.partitionsNum=3
#canal.mq.dynamicTopicPartitionNum=test.*:4,mycanal:6
#canal.mq.partitionHash=test.table:id^name,.*\\..*
#################################################
```

The main items to change are:

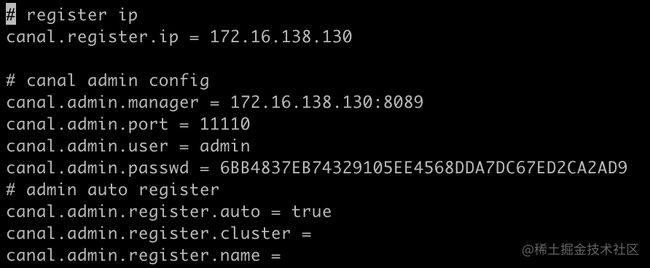
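canal.instance.filter.regex and its black-list counterpart are comma-separated lists of regular expressions matched against schema.table names; the doubled backslash is there because the properties loader turns `\\` into `\`. The sketch below approximates that behaviour with Python's re module to show which tables the settings above capture. It is not Canal's own Java matcher, the case-insensitive matching is an assumption, and the function names are hypothetical.

```python
import re


def unescape_properties_value(value):
    # java.util.Properties turns "\\" into "\"; only that escape is handled here.
    return value.replace("\\\\", "\\")


def compile_filter(value):
    """Compile a comma-separated filter value as written in instance.properties."""
    parts = [p.strip() for p in unescape_properties_value(value).split(",")]
    return [re.compile(p, re.IGNORECASE) for p in parts if p]


def is_captured(table, include, exclude):
    """A schema.table is synced if a white-list pattern matches and no black-list one does."""
    included = any(p.fullmatch(table) for p in include)
    excluded = any(p.fullmatch(table) for p in exclude)
    return included and not excluded


if __name__ == "__main__":
    include = compile_filter(r".*\\..*")           # canal.instance.filter.regex
    exclude = compile_filter(r"mysql\\.slave_.*")  # canal.instance.filter.black.regex
    print(is_captured("test.user", include, exclude))                # True
    print(is_captured("mysql.slave_master_info", include, exclude))  # False
```

Narrowing the white list to `test\\.user`, for instance, would make Canal skip every other table in the instance.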

canal_local.properties

```shell
cd /usr/software/canal-deployer/
vi conf/canal_local.properties
```

```properties
# register ip
canal.register.ip = 172.16.138.130

# canal admin config
canal.admin.manager = 172.16.138.130:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9
# admin auto register
canal.admin.register.auto = true
canal.admin.register.cluster =
```

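The main items to change here are canal.register.ip and canal.admin.manager, which must point at your own host. canal.admin.user and canal.admin.passwd must correspond to the adminUser and adminPasswd configured for canal-admin below.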
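The canal.admin.passwd value above is not the plaintext password. It appears to be MySQL's pre-8.0 PASSWORD() hash (SHA1 applied twice, upper-case hex, without the leading `*`) of 123456, which is also the plaintext adminPasswd used for canal-admin and the hash inserted into the canal_user table. If you pick a different admin password, a sketch like this computes the matching value; the function name is hypothetical.

```python
import hashlib


def mysql_native_password_hash(plaintext):
    """SHA1(SHA1(password)) as upper-case hex, i.e. MySQL's PASSWORD() minus the '*'."""
    stage1 = hashlib.sha1(plaintext.encode("utf-8")).digest()
    return hashlib.sha1(stage1).hexdigest().upper()


if __name__ == "__main__":
    print(mysql_native_password_hash("123456"))
    # 6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9
```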

Start

```shell
cd /usr/software/canal-deployer/
sh bin/startup.sh
```

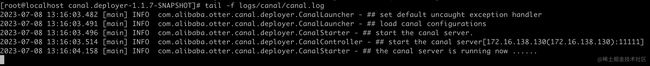

Check that it started successfully

```shell
cd /usr/software/canal-deployer/
tail -f logs/canal/canal.log
```

canal-adapter

Download

```shell
wget https://github.com/alibaba/canal/releases/download/canal-1.1.7-alpha-2/canal.adapter-1.1.7-SNAPSHOT.tar.gz
```

Extract

```shell
mkdir -p /usr/software/canal-adapter/
tar zxvf canal.adapter-1.1.7-SNAPSHOT.tar.gz -C /usr/software/canal-adapter/
```

Create the database and initialize the data

```sql
CREATE DATABASE /*!32312 IF NOT EXISTS*/ `canal_manager` /*!40100 DEFAULT CHARACTER SET utf8 COLLATE utf8_bin */;

USE `canal_manager`;

SET NAMES utf8;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for canal_adapter_config
-- ----------------------------
DROP TABLE IF EXISTS `canal_adapter_config`;
CREATE TABLE `canal_adapter_config` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `category` varchar(45) NOT NULL,
  `name` varchar(45) NOT NULL,
  `status` varchar(45) DEFAULT NULL,
  `content` text NOT NULL,
  `modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------
-- Table structure for canal_cluster
-- ----------------------------
DROP TABLE IF EXISTS `canal_cluster`;
CREATE TABLE `canal_cluster` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `name` varchar(63) NOT NULL,
  `zk_hosts` varchar(255) NOT NULL,
  `modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------
-- Table structure for canal_config
-- ----------------------------
DROP TABLE IF EXISTS `canal_config`;
CREATE TABLE `canal_config` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `cluster_id` bigint(20) DEFAULT NULL,
  `server_id` bigint(20) DEFAULT NULL,
  `name` varchar(45) NOT NULL,
  `status` varchar(45) DEFAULT NULL,
  `content` text NOT NULL,
  `content_md5` varchar(128) NOT NULL,
  `modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`),
  UNIQUE KEY `sid_UNIQUE` (`server_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------
-- Table structure for canal_instance_config
-- ----------------------------
DROP TABLE IF EXISTS `canal_instance_config`;
CREATE TABLE `canal_instance_config` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `cluster_id` bigint(20) DEFAULT NULL,
  `server_id` bigint(20) DEFAULT NULL,
  `name` varchar(45) NOT NULL,
  `status` varchar(45) DEFAULT NULL,
  `content` text NOT NULL,
  `content_md5` varchar(128) DEFAULT NULL,
  `modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`),
  UNIQUE KEY `name_UNIQUE` (`name`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------
-- Table structure for canal_node_server
-- ----------------------------
DROP TABLE IF EXISTS `canal_node_server`;
CREATE TABLE `canal_node_server` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `cluster_id` bigint(20) DEFAULT NULL,
  `name` varchar(63) NOT NULL,
  `ip` varchar(63) NOT NULL,
  `admin_port` int(11) DEFAULT NULL,
  `tcp_port` int(11) DEFAULT NULL,
  `metric_port` int(11) DEFAULT NULL,
  `status` varchar(45) DEFAULT NULL,
  `modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------
-- Table structure for canal_user
-- ----------------------------
DROP TABLE IF EXISTS `canal_user`;
CREATE TABLE `canal_user` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `username` varchar(31) NOT NULL,
  `password` varchar(128) NOT NULL,
  `name` varchar(31) NOT NULL,
  `roles` varchar(31) NOT NULL,
  `introduction` varchar(255) DEFAULT NULL,
  `avatar` varchar(255) DEFAULT NULL,
  `creation_date` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

SET FOREIGN_KEY_CHECKS = 1;

-- ----------------------------
-- Records of canal_user
-- ----------------------------
BEGIN;
INSERT INTO `canal_user` VALUES (1, 'admin', '6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9', 'Canal Manager', 'admin', NULL, NULL, '2019-07-14 00:05:28');
COMMIT;

SET FOREIGN_KEY_CHECKS = 1;
```

Edit the configuration files

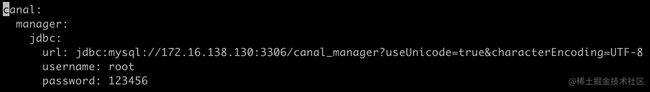

bootstrap.yml

```shell
cd /usr/software/canal-adapter/
vi conf/bootstrap.yml
```

```yaml
canal:
  manager:
    jdbc:
      url: jdbc:mysql://172.16.138.130:3306/canal_manager?useUnicode=true&characterEncoding=UTF-8
      username: root
      password: 123456
```

application.yml

```shell
cd /usr/software/canal-adapter/
vi conf/application.yml
```

```yaml
server:
  port: 8081
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8
    default-property-inclusion: non_null

canal.conf:
  mode: tcp #tcp kafka rocketMQ rabbitMQ
  flatMessage: true
  zookeeperHosts:
  syncBatchSize: 1000
  retries: -1
  timeout:
  accessKey:
  secretKey:
  consumerProperties:
    # canal tcp consumer
    canal.tcp.server.host: 172.16.138.130:11111
    canal.tcp.zookeeper.hosts:
    canal.tcp.batch.size: 500
    canal.tcp.username:
    canal.tcp.password:
    # kafka consumer
    kafka.bootstrap.servers: 127.0.0.1:9092
    kafka.enable.auto.commit: false
    kafka.auto.commit.interval.ms: 1000
    kafka.auto.offset.reset: latest
    kafka.request.timeout.ms: 40000
    kafka.session.timeout.ms: 30000
    kafka.isolation.level: read_committed
    kafka.max.poll.records: 1000
    # rocketMQ consumer
    rocketmq.namespace:
    rocketmq.namesrv.addr: 127.0.0.1:9876
    rocketmq.batch.size: 1000
    rocketmq.enable.message.trace: false
    rocketmq.customized.trace.topic:
    rocketmq.access.channel:
    rocketmq.subscribe.filter:
    # rabbitMQ consumer
    rabbitmq.host:
    rabbitmq.virtual.host:
    rabbitmq.username:
    rabbitmq.password:
    rabbitmq.resource.ownerId:

  srcDataSources:
    defaultDS:
      url: jdbc:mysql://172.16.138.130:3306/test?useUnicode=true
      username: root
      password: 123456
  canalAdapters:
  - instance: example # canal instance Name or mq topic name
    groups:
    - groupId: g1
      outerAdapters:
      - name: logger
      - name: es7
        hosts: http://172.16.138.130:9200 # 127.0.0.1:9200 for rest mode
        properties:
          mode: rest # or transport
          # security.auth: test:123456 # only used for rest mode
          cluster.name: my-application
```

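The main items to change are canal.tcp.server.host (the canal-deployer address), the source database under srcDataSources, and the es7 adapter's hosts and cluster.name.

Note: cluster.name must match the value in the Elasticsearch configuration file!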
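A mismatched cluster.name is easy to miss, so here is a small sketch that compares the value in elasticsearch.yml with the one in the adapter's application.yml. It is a naive line scan for flat key: value lines, not a YAML parser; it skips commented-out lines, which matters because elasticsearch.yml ships with a commented default cluster.name. The function names are hypothetical.

```python
def find_setting(text, key):
    """Return the value of the first 'key: value' line, ignoring '#' comments.

    A naive line scan, good enough for flat keys such as cluster.name;
    it is not a YAML parser.
    """
    prefix = key + ":"
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments, then indentation
        if line.startswith(prefix):
            return line[len(prefix):].strip().strip("\"'")
    return None


def cluster_names_match(es_yml_text, adapter_yml_text):
    """True when both files set cluster.name to the same non-empty value."""
    es_name = find_setting(es_yml_text, "cluster.name")
    return es_name is not None and es_name == find_setting(adapter_yml_text, "cluster.name")
```

Usage: read config/elasticsearch.yml and conf/application.yml into strings and pass them to `cluster_names_match`; a False result means one of the two values needs editing.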

canal-admin

Download

```shell
wget https://github.com/alibaba/canal/releases/download/canal-1.1.7-alpha-2/canal.admin-1.1.7-SNAPSHOT.tar.gz
```

Extract

```shell
mkdir -p /usr/software/canal-admin
tar zxvf canal.admin-1.1.7-SNAPSHOT.tar.gz -C /usr/software/canal-admin
```

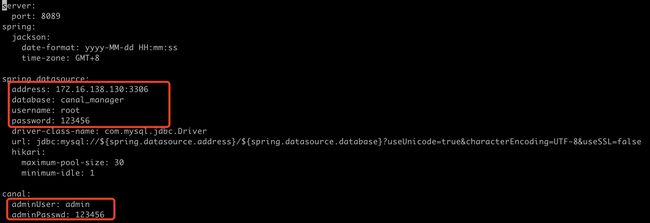

Edit the configuration file

```shell
cd /usr/software/canal-admin
vi conf/application.yml
```

```yaml
server:
  port: 8089
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8

spring.datasource:
  address: 172.16.138.130:3306
  database: canal_manager
  username: root
  password: 123456
  driver-class-name: com.mysql.jdbc.Driver
  url: jdbc:mysql://${spring.datasource.address}/${spring.datasource.database}?useUnicode=true&characterEncoding=UTF-8&useSSL=false
  hikari:
    maximum-pool-size: 30
    minimum-idle: 1

canal:
  adminUser: admin
  adminPasswd: 123456
```

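The main items to change are the spring.datasource address, username, and password for the canal_manager database, and canal.adminUser/canal.adminPasswd, whose password must match canal.admin.passwd in canal_local.properties (which stores its hashed form).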

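Start

```shell
cd /usr/software/canal-admin
sh bin/startup.sh
```

Note: Windows users must append the local argument at the end of the startup command; otherwise automatic service registration does not work (#3484).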

Check that it started successfully

```shell
cd /usr/software/canal-admin
tail -f logs/admin.log
```

Data Synchronization

We have finally reached the sync step. The requirement: sync the data in MySQL's user table to the user index in ES. Let's do it together!

Create the database and table (the test database referenced by srcDataSources in the adapter's application.yml)

```sql
CREATE DATABASE IF NOT EXISTS `test` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;
USE `test`;

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for user
-- ----------------------------
DROP TABLE IF EXISTS `user`;
CREATE TABLE `user` (
  `id` int NOT NULL AUTO_INCREMENT,
  `username` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT 'username',
  `age` int DEFAULT NULL COMMENT 'age',
  `gender` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT 'gender',
  `create_time` datetime(3) NOT NULL DEFAULT CURRENT_TIMESTAMP(3) COMMENT 'creation time',
  `update_time` datetime(3) NOT NULL DEFAULT CURRENT_TIMESTAMP(3) ON UPDATE CURRENT_TIMESTAMP(3) COMMENT 'update time',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci;

SET FOREIGN_KEY_CHECKS = 1;
```

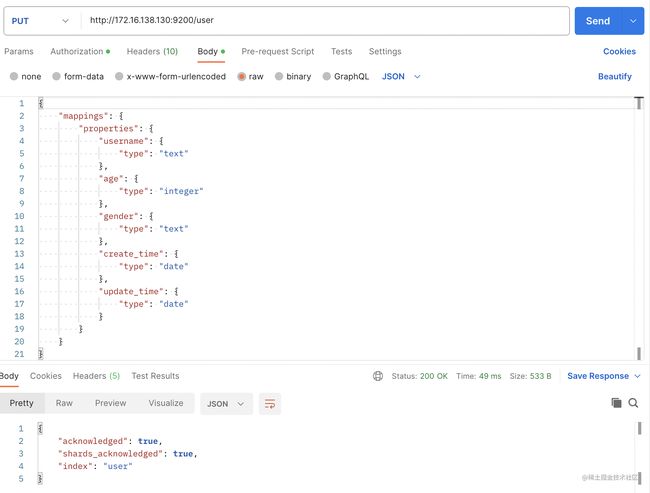

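Create the index and mapping

In Postman, send a PUT request to the ES server at http://172.16.138.130:9200/user with the following JSON body. When the index is created this way, the field definitions must sit under mappings:

```json
{
  "mappings": {
    "properties": {
      "username": { "type": "text" },
      "age": { "type": "integer" },
      "gender": { "type": "text" },
      "create_time": { "type": "date" },
      "update_time": { "type": "date" }
    }
  }
}
```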

The server responds with:

```json
{
  "acknowledged": true,
  "shards_acknowledged": true,
  "index": "user"
}
```
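In Elasticsearch 7, PUT /user creates the index, and the create-index API expects the field definitions under a mappings key (a bare properties object is what the separate PUT /user/_mapping API takes). If you prefer scripting over Postman, the sketch below builds the equivalent request with the standard library; the helper name is hypothetical. Sending it a second time fails with HTTP 400, because the index then already exists.

```python
import json
import urllib.request

# The user index mapping; for index creation it must sit under "mappings".
USER_INDEX_BODY = {
    "mappings": {
        "properties": {
            "username": {"type": "text"},
            "age": {"type": "integer"},
            "gender": {"type": "text"},
            "create_time": {"type": "date"},
            "update_time": {"type": "date"},
        }
    }
}


def create_index_request(es_url, index, body):
    """Build the PUT <es_url>/<index> request that creates the index with its mapping."""
    return urllib.request.Request(
        f"{es_url.rstrip('/')}/{index}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = create_index_request("http://172.16.138.130:9200", "user", USER_INDEX_BODY)
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req, timeout=10).read()
```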

Data types

- Strings: text, keyword

- Integers: byte, short, integer, long

- Floating point: float, double

- Boolean: boolean

- Date: date

- Object: object
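When designing a mapping you typically pick one of these types per MySQL column. The lookup below encodes one reasonable set of defaults, chosen here for illustration (they are not rules from Canal or Elasticsearch), and applies them to column types like those in the user table. For example, gender could arguably be keyword rather than text, since it is usually filtered on exactly rather than searched as free text. The names are hypothetical.

```python
# Hypothetical default choices for common MySQL column types; not an official mapping.
_MYSQL_TO_ES = {
    "tinyint": "byte",  # UNSIGNED columns may need the next larger type
    "smallint": "short",
    "mediumint": "integer",
    "int": "integer",
    "integer": "integer",
    "bigint": "long",
    "float": "float",
    "double": "double",
    "date": "date",
    "datetime": "date",
    "timestamp": "date",
}
_STRING_TYPES = {"char", "varchar", "tinytext", "text", "mediumtext", "longtext"}


def suggest_es_type(mysql_type, full_text=True):
    """Suggest an ES field type; strings become text (searchable) or keyword (exact match)."""
    declared = mysql_type.strip().lower()
    if declared.startswith("tinyint(1)"):
        return "boolean"  # the usual MySQL idiom for a boolean column
    base = declared.split("(")[0].split()[0]
    if base in _STRING_TYPES:
        return "text" if full_text else "keyword"
    return _MYSQL_TO_ES.get(base)  # None means: decide by hand


if __name__ == "__main__":
    for column, sql_type in [("username", "varchar(255)"), ("age", "int"),
                             ("create_time", "datetime(3)")]:
        print(column, suggest_es_type(sql_type))
```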

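Pitfalls

Configuration property name '-index' is not valid (#4354)

This pitfall arises because the configuration binding used by the latest Canal (Spring Boot's Binder) does not accept keys that begin with an underscore. In the adapter's table-mapping configuration file, the _index and _id keys under esMapping both start with an underscore, which triggers this error.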

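There are two solutions:

- Modify the ESSyncConfig.java configuration class (remove the underscores)

- Upgrade Spring Boot (newer versions accept keys that begin with an underscore)

This tutorial modifies the configuration class, because upgrading Spring Boot may introduce further compatibility problems.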

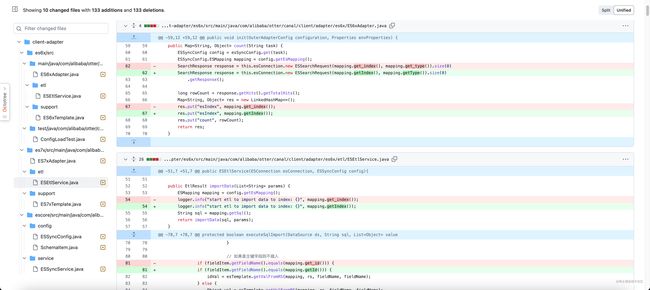

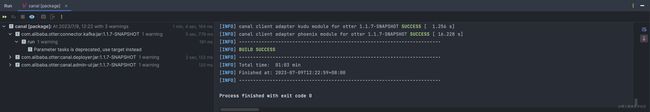

See this commit for the exact changes. After making them, package the source code:

```shell
mvn clean package
```

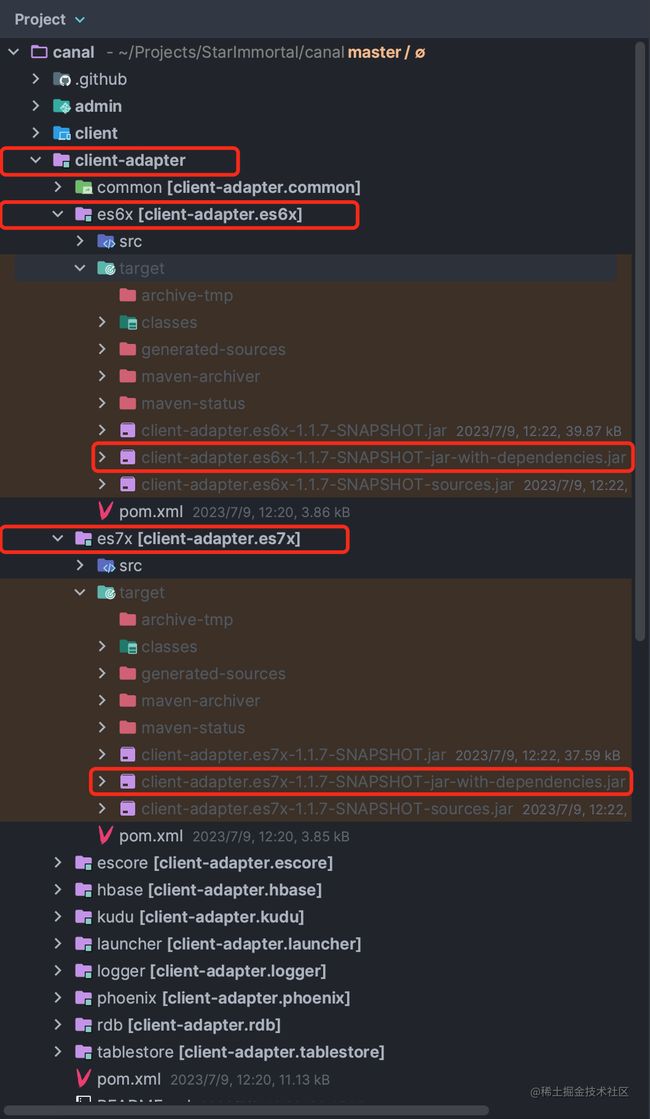

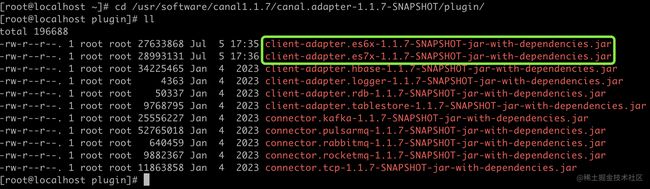

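After packaging, replace the jar: from the target folder of the es6x or es7x module under client-adapter (depending on your Elasticsearch version), copy client-adapter.es6x-1.1.7-SNAPSHOT-jar-with-dependencies.jar or client-adapter.es7x-1.1.7-SNAPSHOT-jar-with-dependencies.jar into the /usr/software/canal-adapter/plugin directory.

If you would rather not modify and package the source yourself, you can download the corresponding jar from this link and swap it in directly.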

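Adapter table mapping files

The adapter automatically loads every configuration file ending in .yml under conf/es6 or conf/es7 (depending on the ES version), and each file defines one table mapping. Before writing your own, delete the default mapping files so they do not interfere with the program:

```shell
cd /usr/software/canal-adapter
rm -rf conf/es7/*
```

Then create a user.yml mapping file dedicated to syncing the user table:

```shell
vi conf/es7/user.yml
```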

The configuration file is as follows:

```yaml
# Source data source key; matches a key under srcDataSources in the adapter's application.yml
dataSourceKey: defaultDS
# Canal instance name or MQ topic
destination: example
# groupId in MQ mode; only data for this groupId is synced
groupId: g1
esMapping:
  # Index name
  index: user
  # Document _id
  id: _id
  # Mapping SQL
  sql: "select t1.id as _id, t1.username, t1.age, t1.gender from user t1"
  # Condition parameters (uses the same t1 alias as the SQL)
  etlCondition: "where t1.update_time>='{0}'"
  # Commit batch size
  commitBatch: 3000
```

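The important settings are:

- index: the index name

- id: if this is not configured, pk must be configured instead to designate one attribute as the primary key (the _id is then assigned automatically by ES)

- sql: the SQL statement that maps rows for syncing (select * is not supported)

Note: when writing the SQL, give every table an alias, even for a single table; otherwise a NullPointerException may occur (see #4789)!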
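To make the esMapping semantics concrete: each row returned by the mapping SQL becomes one document, the column aliased as _id supplies the document ID, and the remaining columns become the document source. The sketch below turns such rows into an Elasticsearch bulk-API request body. It only illustrates the mapping and is not how the Canal adapter is implemented internally; rows_to_bulk is a hypothetical name.

```python
import json


def rows_to_bulk(index, rows, id_column="_id"):
    """Build an Elasticsearch _bulk body (NDJSON) from rows shaped like the mapping SQL's output.

    The id_column value becomes the document _id; every other column goes into the source.
    """
    lines = []
    for row in rows:
        source = {col: val for col, val in row.items() if col != id_column}
        lines.append(json.dumps({"index": {"_index": index, "_id": str(row[id_column])}}))
        lines.append(json.dumps(source, ensure_ascii=False))  # keep non-ASCII text readable
    return "\n".join(lines) + "\n"  # the bulk API requires a trailing newline


if __name__ == "__main__":
    rows = [{"_id": 1, "username": "tom", "age": 18, "gender": "male"}]
    print(rows_to_bulk("user", rows), end="")
```

Such a body would be POSTed to http://172.16.138.130:9200/_bulk with the Content-Type application/x-ndjson.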

Start

```shell
cd /usr/software/canal-adapter/
sh bin/startup.sh
```

Check that it started successfully

```shell
cd /usr/software/canal-adapter/
tail -f logs/adapter/adapter.log
```

Management REST API

List the sync tasks

```shell
curl http://127.0.0.1:8081/destinations
```

Turn syncing off or on

```shell
curl http://127.0.0.1:8081/syncSwitch/example/off -X PUT
curl http://127.0.0.1:8081/syncSwitch/example/on -X PUT
```

Check the sync switch status

```shell
curl http://127.0.0.1:8081/syncSwitch/example
```

Trigger a manual sync

```shell
curl http://127.0.0.1:8081/etl/es7/user.yml -X POST
```

Count the total rows of the related table

```shell
curl http://127.0.0.1:8081/count/es7/user.yml
```
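The same calls can be scripted. The sketch below only builds urllib.request.Request objects for the endpoints listed above, so you can inspect them or pass them to urllib.request.urlopen. The adapter address and the destination name example match this tutorial's configuration. The optional params form field for ETL (the value meant to fill the etlCondition placeholder) is an assumption based on the curl examples in Canal's adapter documentation, so check it against your version; the function names are hypothetical.

```python
import urllib.parse
import urllib.request

ADAPTER_URL = "http://127.0.0.1:8081"  # server.port from the adapter's application.yml


def destinations_request():
    return urllib.request.Request(f"{ADAPTER_URL}/destinations", method="GET")


def sync_switch_request(destination, turn_on=None):
    """turn_on=None reads the switch state; True/False switches it with PUT."""
    url = f"{ADAPTER_URL}/syncSwitch/{destination}"
    if turn_on is None:
        return urllib.request.Request(url, method="GET")
    return urllib.request.Request(f"{url}/{'on' if turn_on else 'off'}", method="PUT")


def etl_request(adapter_type, mapping_file, params=None):
    """POST /etl/<type>/<file>; `params` is an assumed form field for etlCondition."""
    data = urllib.parse.urlencode({"params": params}).encode() if params else None
    return urllib.request.Request(
        f"{ADAPTER_URL}/etl/{adapter_type}/{mapping_file}", data=data, method="POST"
    )


def count_request(adapter_type, mapping_file):
    return urllib.request.Request(f"{ADAPTER_URL}/count/{adapter_type}/{mapping_file}", method="GET")


if __name__ == "__main__":
    for req in (destinations_request(), sync_switch_request("example", turn_on=False),
                etl_request("es7", "user.yml"), count_request("es7", "user.yml")):
        print(req.get_method(), req.full_url)
```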

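Sync test

Insert a record into the user table in MySQL, then check in the Elasticsearch visualization plugin whether it was synced.

Note: if you have synced before and want to redo a full sync, delete the conf/example/meta.dat file, because this file records the last sync time and binlog position.

```shell
cd /usr/software/canal-deployer/
rm -rf conf/example/meta.dat
```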
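Besides the visualization plugin, you can check a single document over HTTP: GET /user/_doc/<id> returns a found flag plus the stored _source. The sketch compares that response with the values of the MySQL row you just inserted; the sample id 1 and the field values are hypothetical. Note that urllib raises urllib.error.HTTPError for the 404 Elasticsearch returns when the document does not exist yet; the error object can still be read to get the JSON body.

```python
import json
import urllib.request


def get_doc_request(es_url, index, doc_id):
    """Build GET <es_url>/<index>/_doc/<id>."""
    return urllib.request.Request(f"{es_url.rstrip('/')}/{index}/_doc/{doc_id}", method="GET")


def is_synced(get_body, expected):
    """True if the document exists and every expected field has the expected value."""
    doc = json.loads(get_body)
    if not doc.get("found"):
        return False
    source = doc.get("_source", {})
    return all(source.get(field) == value for field, value in expected.items())


if __name__ == "__main__":
    body = ('{"_index": "user", "_id": "1", "found": true,'
            ' "_source": {"username": "tom", "age": 18, "gender": "male"}}')
    print(is_synced(body, {"username": "tom", "age": 18}))  # True
```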
