OpenStack Deployment


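1. Preliminary Stage: Environment Preparation

This lab follows the official documentation for the OpenStack (Train release) cloud platform and walks the reader through building the platform step by step.

Special thanks to two bloggers for their technical corrections: CSDN@尼古拉斯程序员 and CSDN@m0_60155284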

1.1 Plan the Topology

| Virtual machine | Control node | Compute node | Storage node | Notes |
|---|---|---|---|---|
| Hostname | controller | compute | storage | - |
| CPU | 2 cores | 2 cores | - | - |
| Disk | 100 GB | 100 GB | 20 GB | Manual disk partitioning is recommended |
| Memory | 8 GB | 4 GB | - | - |
| NIC 1 | ens33, NAT, 192.168.182.136/24 | ens33, NAT, 192.168.182.137/24 | - | NAT mode, for external network access |
| NIC 2 | ens34, host-only, 192.168.223.130/24 | ens34, host-only, 192.168.223.131/24 | - | Host-only mode, for internal communication |

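An OpenStack cloud platform normally needs at least three servers for its nodes. Because the author's resources are limited, this lab reuses the compute node as the storage node and builds a two-node platform. Readers can adjust the configuration to a multi-node setup as their own resources allow.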

1.2 Install the Virtual Machine and Configure Two NICs (Control Node)

1.3 Install CentOS 7

Set up the disk partitions manually

> /boot 1G

> / 90G

> swap 8G

2. Host Configuration

2.1 Change the Hostname (Control Node)

[root@localhost ~]# hostnamectl set-hostname controller

2.2 Configure Local Name Resolution (Control Node)

[root@controller ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 # IPv4 loopback address

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 # IPv6 loopback address

# Add the internal-network address and hostname

192.168.223.130 controller

# Test connectivity to the host

[root@controller ~]# ping controller

PING controller (192.168.223.130) 56(84) bytes of data.

64 bytes from controller (192.168.223.130): icmp_seq=1 ttl=64 time=0.033 ms

64 bytes from controller (192.168.223.130): icmp_seq=2 ttl=64 time=0.080 ms

64 bytes from controller (192.168.223.130): icmp_seq=3 ttl=64 time=0.082 ms

# The output above shows that the hostname now resolves to the configured IP address
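The manual edit of /etc/hosts above can also be scripted so that running it twice does not duplicate the entry. The following is a minimal sketch, not part of the original tutorial: `add_host_entry` is a hypothetical helper name, and the file path is a parameter so the function can be tried on a scratch copy first.

```shell
# Hypothetical helper (not from the tutorial): append "IP hostname" to a
# hosts file only when the hostname is not already mapped there.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for the hostname as a whole field on a non-comment line,
    # ignoring anything after an inline "#".
    if awk -v n="$name" '
        !/^[ \t]*#/ {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break
                if ($i == n) found = 1
            }
        }
        END { exit !found }' "$file"
    then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On the control node this would be called as `add_host_entry 192.168.223.130 controller`, assuming the file already ends with a newline.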

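2.3 Firewall Management (Control Node)

# Check the firewall status

[root@controller ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon ## currently stopped

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled) ## "disabled" means the service does not start at boot ("enabled" would mean it does)

Active: inactive (dead) ## inactive (dead) means the service is not running; a running service shows active (running)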

Docs: man:firewalld(1)

7月 12 17:24:45 controller systemd[1]: Starting firewalld - dynamic firewa....

7月 12 17:24:55 controller systemd[1]: Started firewalld - dynamic firewal....

7月 12 17:24:56 controller firewalld[866]: WARNING: AllowZoneDrifting is e....

7月 12 19:54:13 controller systemd[1]: Stopping firewalld - dynamic firewa....

7月 12 19:54:14 controller systemd[1]: Stopped firewalld - dynamic firewal....

Hint: Some lines were ellipsized, use -l to show in full.

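# Stop the firewall for the current session

[root@controller ~]# systemctl stop firewalld

# Disable start at boot

[root@controller ~]# systemctl disable firewalld

# Disable SELinux

[root@controller ~]# vi /etc/selinux/config # keep SELinux disabled after reboot

SELINUX=disabled

[root@controller ~]# setenforce 0 # stop enforcing immediately (permissive mode)

[root@controller ~]# sestatus # check the status

[root@controller ~]# reboot # reboot (if setenforce 0 does not take effect, reboot after editing the config file)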
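Editing /etc/selinux/config by hand is where a typo in the key name can slip in. As a hedged alternative, the sketch below rewrites the `SELINUX=` line with sed. The function name `set_selinux_disabled` is an assumption made for illustration, and the path is a parameter so the change can be rehearsed on a copy.

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file.
# Only the SELINUX= line is touched; SELINUXTYPE= is left alone.
set_selinux_disabled() {
    local file="${1:-/etc/selinux/config}"
    if grep -q '^SELINUX=' "$file"; then
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
    else
        echo 'SELINUX=disabled' >> "$file"
    fi
}
```

The change in the file applies at the next boot; `setenforce 0` is still needed to stop enforcement in the running system.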

2.4 Install Base Support Services (Control Node)

1. Chrony time synchronization service

[root@controller ~]# vi /etc/chrony.conf

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

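# Four NTP pool servers are preconfigured; with internet access, the host synchronizes its time with one of them

# Allow Chrony clients on the given subnet to use this host as their NTP server

allow 192.168.223.0/24

# Restart the service and enable it at boot

[root@controller ~]# systemctl restart chronyd

[root@controller ~]# systemctl enable chronyd

# Test: show the client's current connections to the NTP servers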

[root@controller ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* time.cloudflare.com 3 10 273 992 -3600us[-1688us] +/- 101ms

^+ tick.ntp.infomaniak.ch 1 10 227 545 -4913us[-4913us] +/- 119ms

^+ makaki.miuku.net 3 10 237 155 +39ms[ +39ms] +/- 126ms

^+ electrode.felixc.at 3 10 363 346 -37ms[ -37ms] +/- 170ms
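In the `chronyc sources` output, the line starting with `^*` is the source the host is currently synchronized to. A small sketch (the function name `synced_source` is hypothetical) reads that output on standard input, prints the selected source, and fails when none is selected, which makes the check usable in scripts:

```shell
# Hypothetical helper: read `chronyc sources` output on stdin, print the
# name of the source marked "^*" and return non-zero if there is none.
synced_source() {
    awk '/^\^\*/ { print $2; found = 1 } END { exit !found }'
}
```

Typical use would be `chronyc sources | synced_source || echo "not synchronized yet"`.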

2. OpenStack cloud platform framework

# Install the OpenStack (Train) release package

[root@controller ~]# yum install centos-release-openstack-train

# Upgrade the installed packages

[root@controller ~]# yum upgrade -y

# Install the OpenStack command-line client

[root@controller ~]# yum -y install python-openstackclient

# Test: check the version

[root@controller ~]# openstack --version

openstack 4.0.2

# Install the OpenStack SELinux policy package

[root@controller ~]# yum install openstack-selinux

# If SELinux was not disabled manually beforehand, the "openstack-selinux" package supplies the SELinux policies for the OpenStack services so that they are managed automatically

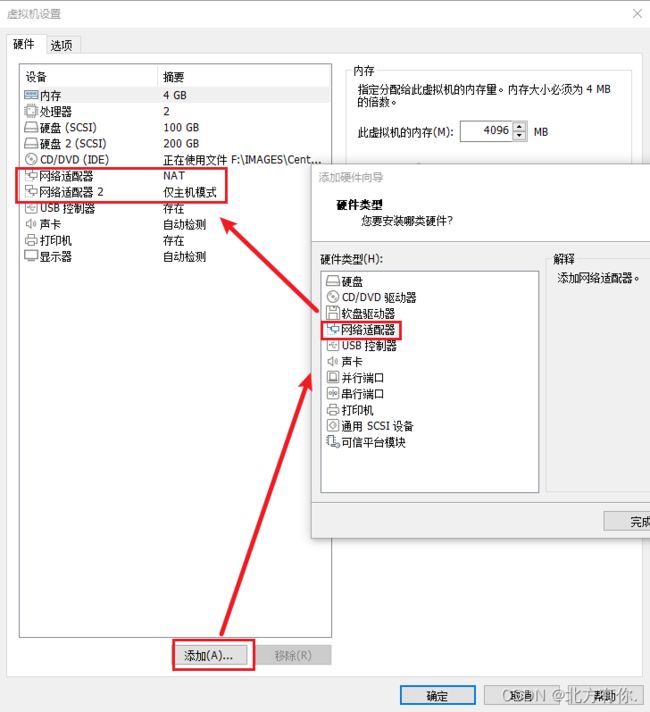

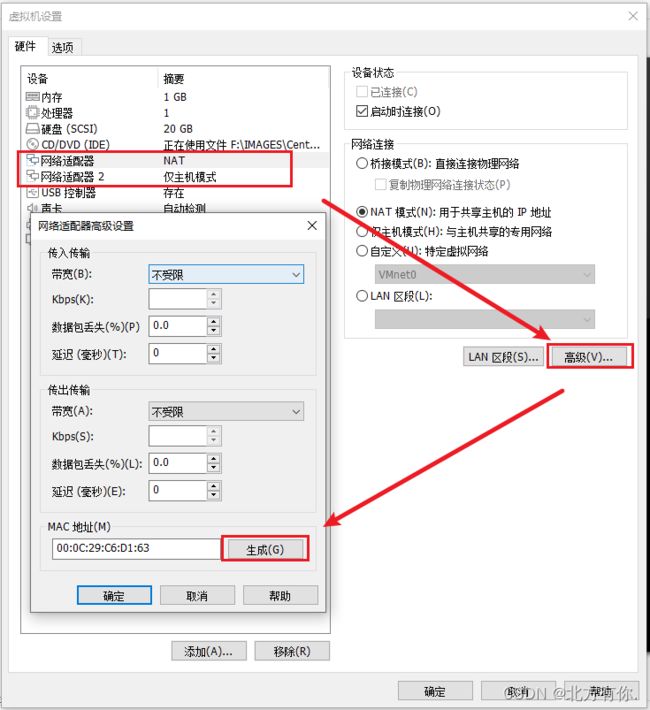

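2.5 Clone the Virtual Machine

After cloning and before the first boot, regenerate the MAC addresses of the clone's network adapters. This gives the clone new NIC identifiers and prevents address conflicts with the original machine.

2.6 Install Base Support Services (Control Node, continued)

3. MariaDB database service

# Install the MariaDB database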

[root@controller ~]# yum install -y mariadb-server python2-PyMySQL

——————————-————————————————————————————

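mariadb-server: the database server

python2-PyMySQL: the Python module that OpenStack uses to connect to the database

# Create the database configuration file

[root@controller ~]# vi /etc/my.cnf.d/openstack.cnf

[mysqld] # the settings below apply to the database server

bind-address=192.168.223.130 # address the server listens on; clients reach the database through this internal address

default-storage-engine=innodb # default storage engine (InnoDB is a widely used engine that supports transactions)

innodb_file_per_table=on # store the data of each InnoDB table in its own tablespace file

max_connections=4096 # maximum number of connections

collation-server=utf8_general_ci # collation (the sort rules of a character set; each character set has one or more collations)

character-set-server=utf8 # character set

# Enable the database at boot and start it

[root@controller ~]# systemctl enable mariadb

[root@controller ~]# systemctl start mariadb

# Initialize the database

[root@controller ~]# mysql_secure_installation

Enter current password for root (enter for none) : # enter the current password; press Enter if none is set

Set root password?[Y/n]Y # set a new root password

New password:000000 # enter the new password

Re-enter new password:000000 # confirm the new password

Remove anonymous users?[Y/n]Y # remove anonymous users

Disallow root login remotely?[Y/n]Y # forbid remote login as root

Remove test database and access to it?[Y/n]Y # remove the test database

Reload privilege tables now?[Y/n]Y # reload the privilege tables

# Test: use the database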

[root@controller ~]# mysql -u root -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 9

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

+--------------------+

3 rows in set (0.510 sec)

4. RabbitMQ message queue service

# Install the message queue service

[root@controller ~]# yum install rabbitmq-server

# Enable the service at boot and start it

[root@controller ~]# systemctl enable rabbitmq-server

[root@controller ~]# systemctl start rabbitmq-server

# Test: check that the service is running (RabbitMQ listens on ports 5672 and 25672)

[root@controller ~]# netstat -tnlup | grep -E "(:5672|25672)"

tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 1335/beam.smp

tcp6 0 0 :::5672 :::* LISTEN 1335/beam.smp

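# The ports are in the LISTEN state, so the service is running normally

If the shell reports "-bash: netstat: command not found", the net-tools package that provides netstat is not installed; a minimal CentOS 7 installation does not include it. Either install it with "yum -y install net-tools" or use the ss command, which reads socket state from the kernel and has replaced netstat on newer distributions, for example "ss -tnlp".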
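Because both `netstat -tnl` and `ss -tnl` print the local address as `address:port`, a port check can be written against either tool's output. The sketch below is an illustration under that assumption; `port_is_listening` is a hypothetical name, and the function only parses text from standard input.

```shell
# Hypothetical helper: read `netstat -tnl` or `ss -tnl` output on stdin and
# succeed when some LISTEN line has a field ending in ":<port>".
port_is_listening() {
    local port="$1"
    awk -v p=":$port" '
        /LISTEN/ {
            for (i = 1; i <= NF; i++) {
                n = length($i) - length(p)
                # Compare the tail of the field with ":<port>" so that
                # port 5672 does not match 25672.
                if (n >= 0 && substr($i, n + 1) == p) found = 1
            }
        }
        END { exit !found }'
}
```

For example, `ss -tnl | port_is_listening 5672 && echo "RabbitMQ is listening"`.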

# RabbitMQ user management examples:

# Example 1: create a user named "openstack" with the password "RABBIT_PASSWD"

[root@controller ~]# rabbitmqctl add_user openstack RABBIT_PASSWD

Creating user "openstack"

# Example 2: change the password of the RabbitMQ user "openstack" to "000000"

[root@controller ~]# rabbitmqctl change_password openstack 000000

Changing password for user "openstack"

# Example 3: grant the RabbitMQ user "openstack" configure, write, and read permissions

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

# Example 4: list the permissions of the RabbitMQ user "openstack"

[root@controller ~]# rabbitmqctl list_user_permissions openstack

Listing permissions for user "openstack"

/ .* .* .* # every RabbitMQ server defines a default virtual host "/"; the openstack user has configure, write, and read permissions on all resources of that virtual host

# Example 5: delete the RabbitMQ user "openstack"

[root@controller ~]# rabbitmqctl delete_user openstack

Deleting user "openstack"

[Note] Do not actually delete this user. Example 5 only shows how message queue users can be managed. If you did delete the user, recreate it by hand (Examples 1 to 3), otherwise later services will report errors!

5. Memcached in-memory cache service

# Install the cache service packages

[root@controller ~]# yum -y install memcached python-memcached

——————————-————————————————————————————

memcached: the in-memory cache service

python-memcached: the Python interface for working with the service

# Installation automatically creates a user named "memcached"

[root@controller ~]# cat /etc/passwd | grep memcached

memcached:x:985:979:Memcached daemon:/run/memcached:/sbin/nologin

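# Configure the cache service

[root@controller ~]# vi /etc/sysconfig/memcached

PORT="11211" # service port

USER="memcached" # user name (defaults to the automatically created memcached user)

MAXCONN="1024" # maximum number of connections

CACHESIZE="64" # maximum cache size (MB)

OPTIONS="-l 127.0.0.1,::1" # other options; by default the service listens only on the local addresses (add further listen addresses here)

# Add the internal-network listen address to the options

OPTIONS="-l 127.0.0.1,::1,192.168.223.130"

# Enable the service at boot and start it

[root@controller ~]# systemctl enable memcached

[root@controller ~]# systemctl start memcached

# Test: check that the service is running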

[root@controller ~]# netstat -tnlup | grep memcached

tcp 0 0 192.168.223.130:11211 0.0.0.0:* LISTEN 10259/memcached

tcp 0 0 127.0.0.1:11211 0.0.0.0:* LISTEN 10259/memcached

tcp6 0 0 ::1:11211 :::* LISTEN 10259/memcached

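6. etcd distributed key-value store

# Install etcd

[root@controller ~]# yum install etcd

# Configure the server

[root@controller ~]# vi /etc/etcd/etcd.conf

ETCD_LISTEN_PEER_URLS="http://192.168.223.130:2380" # URL to listen on for traffic from other etcd members (must be an IP address, not a hostname)

ETCD_LISTEN_CLIENT_URLS="http://192.168.223.130:2379,http://127.0.0.1:2379" # URLs on which the service is offered to clients

ETCD_NAME="controller" # name of this etcd member (must be unique; the hostname is recommended)

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.223.130:2380" # this member's peer URLs, advertised to the other members of the cluster

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.223.130:2379" # this member's client URLs, advertised to the other members of the cluster

ETCD_INITIAL_CLUSTER="controller=http://192.168.223.130:2380" # initial cluster configuration, in the form "member name=peer URL of that member"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01" # token identifying this etcd cluster, so that multiple clusters can tell each other apart

ETCD_INITIAL_CLUSTER_STATE="new" # initial cluster state ("new" for a new cluster, "existing" for an existing one; with "existing", etcd tries to join the existing cluster)

# Enable the service at boot and start it

[root@controller ~]# systemctl enable etcd

[root@controller ~]# systemctl start etcd

# Test: check that the service is running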

[root@controller ~]# netstat -tnlup | grep etcd

tcp 0 0 192.168.223.130:2379 0.0.0.0:* LISTEN 11248/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 11248/etcd

tcp 0 0 192.168.223.130:2380 0.0.0.0:* LISTEN 11248/etcd

# etcd usage examples

# Example 1: store a key-value pair in etcd, with key "testkey" and value "001"

[root@controller ~]# etcdctl set testkey 001

001

# Example 2: read the value stored under testkey

[root@controller ~]# etcdctl get testkey

001

2.5 Download the Installation Packages from the Network (Control Node)

# Install the tools for building a local repository

[root@controller ~]# yum -y install yum-utils createrepo yum-plugin-priorities

————————————————————————————————————

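yum-utils: a collection of YUM tools; its reposync program synchronizes remote YUM repository data to the local disk

createrepo: generates repository metadata for the data that was synchronized locally

yum-plugin-priorities: a plugin that manages the priority of packages across YUM repositories

# Create the YUM repository file

[root@controller ~]# cd /etc/yum.repos.d/ # enter the YUM repository configuration directory

[root@controller yum.repos.d]# mkdir bak # create a backup directory

[root@controller yum.repos.d]# mv *.repo bak # move all "repo" files into the backup directory

[root@controller yum.repos.d]# vi OpenStack.repo # create a new configuration file, OpenStack.repo

# Write the following configuration into OpenStack.repo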

[base]

name=base

baseurl=http://repo.huaweicloud.com/centos/7/os/x86_64/

enabled=1

gpgcheck=0

[extras]

name=extras

baseurl=http://repo.huaweicloud.com/centos/7/extras/x86_64/

enabled=1

gpgcheck=0

[updates]

name=updates

baseurl=http://repo.huaweicloud.com/centos/7/updates/x86_64/

enabled=1

gpgcheck=0

[train]

name=train

baseurl=http://repo.huaweicloud.com/centos/7/cloud/x86_64/openstack-train/

enabled=1

gpgcheck=0

[virt]

name=virt

baseurl=http://repo.huaweicloud.com/centos/7/virt/x86_64/kvm-common/

enabled=1

gpgcheck=0

# This uses the Huawei Cloud mirror of CentOS Linux and defines 5 repositories: "base", "extras", "updates", "train", and "virt"
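Since the five repository stanzas differ only in their name and path, the file can be generated instead of typed. This is a minimal sketch, not the author's method: `write_repo_file` is a hypothetical function, and it writes `enabled=1`, which is the option name yum documents for switching a repository on.

```shell
# Hypothetical generator for OpenStack.repo: prints one stanza per
# repository, given the mirror's CentOS 7 base URL.
write_repo_file() {
    local base_url="${1%/}"    # drop a trailing slash, if any
    local name path
    while read -r name path; do
        printf '[%s]\nname=%s\nbaseurl=%s/%s/\nenabled=1\ngpgcheck=0\n\n' \
            "$name" "$name" "$base_url" "$path"
    done <<'EOF'
base os/x86_64
extras extras/x86_64
updates updates/x86_64
train cloud/x86_64/openstack-train
virt virt/x86_64/kvm-common
EOF
}
```

With the mirror used in this tutorial, the call would be `write_repo_file http://repo.huaweicloud.com/centos/7 > /etc/yum.repos.d/OpenStack.repo`.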

# Check that the YUM repositories are usable

[root@controller ~]# yum clean all # clear the cache

[root@controller ~]# yum makecache # rebuild the cache

[root@controller ~]# yum repolist # list the enabled repositories

已加载插件:fastestmirror, langpacks, priorities

Loading mirror speeds from cached hostfile

源标识 源名称 状态

base base 10,072

extras extras 518

train train 3,168

updates updates 5,061

virt virt 63

repolist: 18,882

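# Synchronize the remote packages to the local disk

[root@controller ~]# mkdir /opt/openstack # create an empty directory under /opt

[root@controller ~]# cd /opt/openstack # change to the new directory

[root@controller openstack]# reposync # download all repository files (this fetches nearly 30 GB from the network and takes a long time)

# Create the YUM repositories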

[root@controller openstack]# createrepo -v base

[root@controller openstack]# createrepo -v updates

[root@controller openstack]# createrepo -v extras

[root@controller openstack]# createrepo -v train

[root@controller openstack]# createrepo -v virt

2.7 Change the Hostname (Compute Node)

[root@localhost ~]# hostnamectl set-hostname compute

2.8 Configure Local Name Resolution (Compute Node and Control Node)

[root@controller ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 # IPv4 loopback address

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 # IPv6 loopback address

192.168.223.130 controller # add the internal-network addresses and hostnames

192.168.223.131 compute

[root@compute ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 # IPv4 loopback address

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 # IPv6 loopback address

192.168.223.130 controller # add the internal-network addresses and hostnames

192.168.223.131 compute

# Test: check connectivity to both hosts

[root@compute ~]# ping compute

PING compute (192.168.223.131) 56(84) bytes of data.

64 bytes from compute (192.168.223.131): icmp_seq=1 ttl=64 time=0.044 ms

64 bytes from compute (192.168.223.131): icmp_seq=2 ttl=64 time=0.077 ms

64 bytes from compute (192.168.223.131): icmp_seq=3 ttl=64 time=0.043 ms

[root@compute ~]# ping controller

PING controller (192.168.223.130) 56(84) bytes of data.

64 bytes from controller (192.168.223.130): icmp_seq=1 ttl=64 time=1.72 ms

64 bytes from controller (192.168.223.130): icmp_seq=2 ttl=64 time=1.82 ms

64 bytes from controller (192.168.223.130): icmp_seq=3 ttl=64 time=0.373 ms

# The output above shows that both hostnames resolve to the configured IP addresses

2.9 Firewall Management (Compute Node)

# 1. Check the SELinux status (should be disabled)

[root@compute ~]# sestatus

SELinux status: disabled

# 2. Check the firewall status (should be stopped)

[root@compute ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

2.10 Build the Local Software Repository

2.10.1 Configure the YUM Repositories (Control Node)

- Edit the YUM repository file so that it points to the local files

[root@controller ~]# cd /etc/yum.repos.d/

[root@controller yum.repos.d]# vi OpenStack.repo

[base]

name=base

baseurl=file:///opt/openstack/base/

enabled=1

gpgcheck=0

[extras]

name=extras

baseurl=file:///opt/openstack/extras/

enabled=1

gpgcheck=0

[updates]

name=updates

baseurl=file:///opt/openstack/updates/

enabled=1

gpgcheck=0

[train]

name=train

baseurl=file:///opt/openstack/train/

enabled=1

gpgcheck=0

[virt]

name=virt

baseurl=file:///opt/openstack/virt/

enabled=1

gpgcheck=0

- Clear and rebuild the YUM cache

[root@controller yum.repos.d]# yum clean all

[root@controller yum.repos.d]# yum makecache

- Test: check that the YUM repositories are usable

[root@controller yum.repos.d]# yum repolist

已加载插件:fastestmirror, langpacks, priorities

Loading mirror speeds from cached hostfile

源标识 源名称 状态

base base 10,072

extras extras 518

train train 3,168

updates updates 5,061

virt virt 63

repolist: 18,882

# If all 5 repositories are listed, the configuration is correct

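2.10.2 Configure the FTP Server (Control Node)

- Install the FTP package

[root@controller ~]# yum install -y vsftpd

- Set the FTP root directory to the directory that holds the software repository

[root@controller ~]# vim /etc/vsftpd/vsftpd.conf

anon_root=/opt # root directory for anonymous users; the repository is then reachable as /openstack

- Start the FTP service

[root@controller ~]# systemctl start vsftpd

[root@controller ~]# systemctl enable vsftpd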

2.10.3 Configure the YUM Repositories (Compute Node)

# Back up the existing repository files

[root@compute ~]# cd /etc/yum.repos.d/

[root@compute yum.repos.d]# mkdir bak

[root@compute yum.repos.d]# mv *.repo bak

[root@compute yum.repos.d]# ls

bak

# Copy OpenStack.repo from the control node to the compute node

[root@compute yum.repos.d]# scp root@controller:/etc/yum.repos.d/OpenStack.repo /etc/yum.repos.d/

The authenticity of host 'controller (192.168.223.130)' can't be established.

ECDSA key fingerprint is SHA256:eISfhBqeL5CkKnn5As40KmbML214dO/UMnkr3kOPLA4.

ECDSA key fingerprint is MD5:25:40:9e:0f:fe:cc:8a:bc:bc:25:03:d4:6e:8c:4b:f1.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'controller,192.168.223.130' (ECDSA) to the list of known hosts.

root@controller's password:

OpenStack.repo 100% 383 168.8KB/s 00:00

[root@compute yum.repos.d]# ls

bak OpenStack.repo

# Edit the YUM repository file so that it uses the repository served by the control node's FTP server

[root@compute yum.repos.d]# vim OpenStack.repo

[base]

name=base

baseurl=ftp://controller/openstack/base/

enabled=1

gpgcheck=0

[extras]

name=extras

baseurl=ftp://controller/openstack/extras/

enabled=1

gpgcheck=0

[updates]

name=updates

baseurl=ftp://controller/openstack/updates/

enabled=1

gpgcheck=0

[train]

name=train

baseurl=ftp://controller/openstack/train/

enabled=1

gpgcheck=0

[virt]

name=virt

baseurl=ftp://controller/openstack/virt/

enabled=1

gpgcheck=0

# Clear and rebuild the YUM cache

[root@compute ~]# yum clean all

[root@compute ~]# yum makecache

2.11 Install Tools (Compute Node and Control Node)

[root@compute ~]# yum -y install net-tools

[root@controller ~]# yum -y install net-tools

2.12 Change the Chrony Time Synchronization Configuration

2.13 Set Up Time Synchronization on the Local Network

- Configure the control node as the NTP time server

[root@controller ~]# vim /etc/chrony.conf

# Delete the default servers

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# Add the Alibaba Cloud NTP server (effective only with internet access)

server ntp.aliyun.com iburst

# When the external NTP server is unavailable, use the local clock as the time reference

local stratum 1

# Allow hosts on the same subnet to use this host's NTP service

allow 192.168.223.0/24

# Restart the service

[root@controller ~]# systemctl restart chronyd

- Configure the compute node to synchronize its time with the control node

[root@compute ~]# vim /etc/chrony.conf

# Delete the default servers

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# Add the control node as the time server (the compute node then keeps time with the control node)

server controller iburst

# Restart the service

[root@compute ~]# systemctl restart chronyd

# Test: check the time synchronization status

[root@compute ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* controller 3 6 17 10 +56us[ +120us] +/- 40ms # the asterisk marks the source the host is synchronized to

2.14 Save a Snapshot

2.15 Host Configuration Self-Check Worksheet

3. Install the Identity Service (Keystone) (Control Node)

3.1 Install and Configure Keystone

3.1.1 Install the Keystone Packages

[root@controller ~]# yum -y install openstack-keystone httpd mod_wsgi

——————————————————————————————————————————

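openstack-keystone: the Keystone package (installation creates the Linux user and group "keystone")

httpd: the Apache web server

mod_wsgi: the module that adds WSGI support to the web server

# Keystone is a web application that runs on a web server and supports the Web Server Gateway Interface (WSGI)

# Test: check that the keystone user and the group of the same name were created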

[root@controller ~]# cat /etc/passwd | grep keystone

keystone:x:163:163:OpenStack Keystone Daemons:/var/lib/keystone:/sbin/nologin

[root@controller ~]# cat /etc/group | grep keystone

keystone:x:163:

3.1.2 Create the Keystone Database and Grant Access

- Log in to the database server

[root@controller ~]# mysql -u root -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 11

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

- Create a database named "keystone"

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.054 sec)

- Grant the user access to the database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.254 sec)

# Grants all privileges (ALL PRIVILEGES) on every table of the "keystone" database (keystone.*) to the user "keystone" connecting from the local host ('localhost'), with the password "000000"

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.001 sec)

# Grants all privileges (ALL PRIVILEGES) on every table of the "keystone" database (keystone.*) to the user "keystone" connecting from any remote host ('%'), with the password "000000"

- Leave the database

MariaDB [(none)]> quit

Bye
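The same three statements are needed again for every later service (Glance uses them in section 4.1.2), so they can be produced from a template. The sketch below only prints SQL; `service_db_sql` is a hypothetical name, and it assumes, as this tutorial does, that the database and the database user share the service's name. Passwords containing a single quote would need escaping, which this sketch does not do.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT statements for a
# service whose database and database user share one name.
service_db_sql() {
    local svc="$1" pass="$2"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${svc};
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'%' IDENTIFIED BY '${pass}';
EOF
}
```

After reviewing the output, it could be piped into the client, for example `service_db_sql keystone 000000 | mysql -u root -p`.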

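3.1.3 Edit the Keystone Configuration File

[root@controller ~]# vim /etc/keystone/keystone.conf

# Find the [database] section (line 600), which configures the database connection, and add the following

connection = mysql+pymysql://keystone:000000@controller/keystone

# Database connection string: connect as user "keystone" with password "000000" to the database named "keystone" on host "controller"

# In the [token] section (line 2476), which configures the token format, uncomment the following line

provider = fernet

# Fernet tokens are the currently recommended token format; Fernet is a lightweight message format.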
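Searching a configuration file of several thousand lines for the right section is error-prone, so the edit can be scripted. The following is a sketch under stated assumptions, not a replacement for a real INI tool: `set_ini_option` is a hypothetical helper that writes `key = value` directly under the section header, drops any older uncommented assignment of that key in the same section, and appends the section if it is missing. Keys and values are assumed to contain no regex metacharacters or backslashes.

```shell
# Hypothetical helper: set "key = value" inside [section] of an INI file.
set_ini_option() {
    local file="$1" section="$2" key="$3" value="$4" tmp
    tmp=$(mktemp) || return 1
    awk -v s="[$section]" -v k="$key" -v v="$value" '
        # Section header found: emit it, then the new assignment.
        $0 == s { print; print k " = " v; in_s = 1; found = 1; next }
        # Any other section header ends the target section.
        /^\[/   { in_s = 0 }
        # Drop an older active assignment of the same key.
        in_s && $0 ~ ("^" k "[ \t]*=") { next }
        { print }
        END { if (!found) { print s; print k " = " v } }
    ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    cat "$tmp" > "$file"    # overwrite in place, keeping owner and mode
    rm -f "$tmp"
}
```

For this step the calls would be `set_ini_option /etc/keystone/keystone.conf database connection 'mysql+pymysql://keystone:000000@controller/keystone'` and `set_ini_option /etc/keystone/keystone.conf token provider fernet`.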

3.1.4 Initialize the Keystone Database

- Synchronize the database

[root@controller ~]# su keystone -s /bin/sh -c "keystone-manage db_sync"

su keystone: switch to the keystone user (this user has management rights on the keystone database)

-s /bin/sh: su option that specifies the shell to run

-c: su option that runs the given command and then returns to the original user

The keystone-manage tool

Syntax: keystone-manage [OPTION]

| Option | Description |
|---|---|
| db_sync | Synchronize the database |
| fernet_setup | Create a Fernet key repository for token encryption |
| credential_setup | Create a Fernet key repository for credential encryption |
| bootstrap | Initialize the identity information and store it in the database |
| token_flush | Remove expired tokens |

- Test: check the database

[root@controller ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 14

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

MariaDB [(none)]> USE keystone;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [keystone]> SHOW TABLES;

+------------------------------------+

| Tables_in_keystone                 |

+------------------------------------+

| access_rule                        |

| access_token                       |

| application_credential             |

| application_credential_access_rule |

| application_credential_role        |

| assignment                         |

| config_register                    |

# 48 rows in total

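3.2 Initialize the Keystone Components

3.2.1 Initialize the Fernet Key Repositories

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

# This command creates the directory "/etc/keystone/fernet-keys/" and generates two Fernet keys in it, which are used to encrypt and decrypt tokens.

# Verify as follows:

[root@controller ~]# ls /etc/keystone/fernet-keys/

0 1

[root@controller ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# This command creates the directory "/etc/keystone/credential-keys/" and generates two Fernet keys in it, which are used to encrypt and decrypt user credentials

# Verify as follows

[root@controller ~]# ls /etc/keystone/credential-keys/

0 1

3.2.2 Initialize the User Identity Information

OpenStack has a default user named "admin", but it has no login information yet. The "keystone-manage bootstrap" command initializes the login credentials of the "admin" user; at later logins, the credentials presented are compared against these to authenticate the user.

[root@controller ~]# keystone-manage bootstrap --bootstrap-password 000000 --bootstrap-admin-url http://controller:5000/v3 --bootstrap-internal-url http://controller:5000/v3 --bootstrap-public-url http://controller:5000/v3 --bootstrap-region-id RegionOne

# Sets the initial password of the admin user to 000000

Parameters of the keystone-manage bootstrap command

Syntax: keystone-manage bootstrap

| Option | Description |
|---|---|
| --bootstrap-username | Login user name; defaults to "admin" when the option is omitted |
| --bootstrap-password | Password of the "admin" user |
| --bootstrap-admin-url | Service endpoint for the "admin" user |
| --bootstrap-internal-url | Service endpoint for internal users |
| --bootstrap-public-url | Service endpoint for public users |
| --bootstrap-region-id | Region ID, used when configuring clustered services |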

3.2.3 Configure the Web Service

- Add WSGI support to the Apache server

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d # symlink "wsgi-keystone.conf" into the "/etc/httpd/conf.d/" directory

- Edit the Apache configuration and start the Apache service

[root@controller ~]# vim /etc/httpd/conf/httpd.conf

# Set ServerName to the domain name or IP address of the web server

ServerName controller # (line 95)

# Enable the service at boot and start it

[root@controller ~]# systemctl enable httpd

[root@controller ~]# systemctl start httpd

3.2.4 Verify with a Simulated Login

- Create the environment variable file

[root@controller ~]# vim admin-login # this file stores the identity credentials

export OS_USERNAME=admin # user name for logging in to the OpenStack platform

export OS_PASSWORD=000000 # login password

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default # domain the user belongs to

export OS_PROJECT_DOMAIN_NAME=Default # domain the project belongs to

export OS_AUTH_URL=HTTP://controller:5000/v3 # authentication URL

export OS_IDENTITY_API_VERSION=3 # Keystone API version

export OS_IMAGE_API_VERSION=2 # image service API version

- Load the environment variables and verify them

# Load the credentials into the environment

[root@controller ~]# source admin-login

# Test: show the current environment variables

[root@controller ~]# export -p

declare -x OS_AUTH_URL="HTTP://controller:5000/v3"

declare -x OS_IDENTITY_API_VERSION="3"

declare -x OS_IMAGE_API_VERSION="2"

declare -x OS_PASSWORD="000000"

declare -x OS_PROJECT_DOMAIN_NAME="Default"

declare -x OS_PROJECT_NAME="admin"

declare -x OS_USERNAME="admin"

declare -x OS_USER_DOMAIN_NAME="Default"

# The environment variables were loaded successfully
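Reading through the full `export -p` listing works, but a script usually wants a yes/no answer. A minimal sketch follows; the function name `check_os_env` is an assumption, and the variable list is taken from the admin-login file above. It reports which credentials are still unset.

```shell
# Hypothetical helper: verify that the credentials from admin-login are
# present in the environment; names of missing variables go to stderr.
check_os_env() {
    local var val missing=0
    for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
               OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        eval "val=\${$var:-}"    # indirect lookup of the variable named in $var
        if [ -z "$val" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

It could be run right after loading the file: `source admin-login && check_os_env && openstack token issue`.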

3.2.5 Test the Keystone Service

- Create a project and list the projects

# 1. Create a project named "project"

[root@controller ~]# openstack project create --domain default project

+-------------+----------------------------------+

| Field       | Value                            |

+-------------+----------------------------------+

| description |                                  |

| domain_id   | default                          |

| enabled     | True                             |

| id          | 0e284b9459e14b40801ce2bffb2f5e0a |

| is_domain   | False                            |

| name        | project                          |

| options     | {}                               |

| parent_id   | default                          |

| tags        | []                               |

+-------------+----------------------------------+

# openstack project create: create a project

# --domain default: the project belongs to the default domain

# project: the project name

# 2. List the existing projects

[root@controller ~]# openstack project list

+----------------------------------+---------+

| ID                               | Name    |

+----------------------------------+---------+

| 0e284b9459e14b40801ce2bffb2f5e0a | project | # the project created in the previous step

| 75697606e21045f188036410b6e5ac90 | admin   |

+----------------------------------+---------+

- Create a role and list the roles

# 1. Create a role named "user"

[root@controller ~]# openstack role create user

+-------------+----------------------------------+

| Field       | Value                            |

+-------------+----------------------------------+

| description | None                             |

| domain_id   | None                             |

| id          | 2ea89bb8766c48fd8167194be6f087d0 |

| name        | user                             |

| options     | {}                               |

+-------------+----------------------------------+

# 2. List the existing roles

[root@controller ~]# openstack role list

+----------------------------------+--------+

| ID                               | Name   |

+----------------------------------+--------+

| 1fa5a3a626db402aa833f9d22e69a23e | member |

| 2ea89bb8766c48fd8167194be6f087d0 | user   | # the role created in the previous step

| 78d64a433e8647379b290a9d02f5cc2a | admin  |

| a33d50fbc7834985975f231f494093d8 | reader |

+----------------------------------+--------+

- List the domains and users

# 1. List the existing domains

[root@controller ~]# openstack domain list

+---------+---------+---------+--------------------+

| ID      | Name    | Enabled | Description        |

+---------+---------+---------+--------------------+

| default | Default | True    | The default domain |

+---------+---------+---------+--------------------+

# 2. List the existing users

[root@controller ~]# openstack user list

+----------------------------------+-------+

| ID                               | Name  |

+----------------------------------+-------+

| 157c2fe27dc54c8baa467a035274ec00 | admin |

+----------------------------------+-------+

3.2.6 Keystone Self-Check Worksheet

Once everything checks out, save control node snapshot 2: Keystone installation complete

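4. Install the Image Service (Glance) (Control Node)

4.1 Install and Configure the Glance Image Service

4.1.1 Install the Glance Package

[root@controller ~]# yum -y install openstack-glance

# Installing "openstack-glance" automatically creates a CentOS user named "glance" and a group of the same name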

[root@controller ~]# cat /etc/passwd | grep glance

glance:x:161:161:OpenStack Glance Daemons:/var/lib/glance:/sbin/nologin

[root@controller ~]# cat /etc/group | grep glance

glance:x:161:

4.1.2 Create the Glance Database and Grant Access

- Log in to the database server

[root@controller ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 22

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

- Create the "glance" database

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.003 sec)

- Grant the user access to the database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.019 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.000 sec)

4.1.3 Edit the Glance Configuration File

- Back up the configuration file

[root@controller ~]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.bak

- Strip all comments and blank lines and write the result back to the original file

[root@controller ~]# grep -Ev '^$|#' /etc/glance/glance-api.bak > /etc/glance/glance-api.conf

[root@controller ~]# vim /etc/glance/glance-api.conf

[DEFAULT]

[cinder]

[cors]

[database]

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

[image_format]

[keystone_authtoken]

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

- Edit the configuration file

# Database connection

[database]

connection = mysql+pymysql://glance:000000@controller/glance

# Interaction with Keystone

[keystone_authtoken]

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

username = glance

password = 000000

project_name = project

user_domain_name = Default

project_domain_name = Default

[paste_deploy]

flavor = keystone

# Back-end storage

[glance_store]

stores = file

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

# [Note] The "/var/lib/glance" directory is created automatically when Glance is installed, and the "glance" user has full permissions on it

4.1.4 Initialize the Glance Database

- Synchronize the database

[root@controller ~]# su glance -s /bin/sh -c "glance-manage db_sync"

Database is synced successfully.

- Check the database

[root@controller ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 25

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

MariaDB [(none)]> use glance;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [glance]> show tables;

+----------------------------------+

| Tables_in_glance                 |

+----------------------------------+

| alembic_version                  |

| image_locations                  |

| image_members                    |

| image_properties                 |

| image_tags                       |

| images                           |

| metadef_namespace_resource_types |

| metadef_namespaces               |

| metadef_objects                  |

| metadef_properties               |

| metadef_resource_types           |

| metadef_tags                     |

| migrate_version                  |

| task_info                        |

| tasks                            |

+----------------------------------+

15 rows in set (0.002 sec)

# If the glance database contains the tables above, the synchronization succeeded

4.2 Initialize the Glance Components

4.2.1 Create the Glance User and Assign a Role

- Load the environment variables to simulate a login

[root@controller ~]# . admin-login # "source" can be used instead of "." to load the environment variables

- Create the user "glance" in the OpenStack platform

[root@controller ~]# openstack user create --domain default --password 000000 glance

+---------------------+----------------------------------+

| Field               | Value                            |

+---------------------+----------------------------------+

| domain_id           | default                          |

| enabled             | True                             |

| id                  | bee66d0f8d7b4ff2ad16400cdc0f7138 |

| name                | glance                           |

| options             | {}                               |

| password_expires_at | None                             |

+---------------------+----------------------------------+

# Creates a user named "glance" with the password "000000" in the "default" domain

The user name and password set here must match those in the "[keystone_authtoken]" section of "/etc/glance/glance-api.conf".

- Assign the "admin" role to the user "glance"

[root@controller ~]# openstack role add --project project --user glance admin # gives the "glance" user "admin" rights on the "project" project

4.2.2 Create the Glance Service and Service Endpoints

- Create the service

[root@controller ~]# openstack service create --name glance image # create a service named "glance" of type "image"

+---------+----------------------------------+

| Field   | Value                            |

+---------+----------------------------------+

| enabled | True                             |

| id      | 6f106feeb8ec40838aa189ef94aafc4c |

| name    | glance                           |

| type    | image                            |

+---------+----------------------------------+

- Create the image service endpoints

An OpenStack component has 3 kinds of service endpoints, which are the service addresses for public users (public), internal components (internal), and the admin user (admin).

# 1. Create the endpoint for public users

[root@controller ~]# openstack endpoint create --region RegionOne glance public http://controller:9292

+--------------+----------------------------------+

| Field        | Value                            |

+--------------+----------------------------------+

| enabled      | True                             |

| id           | 85af1b039d954f24b9bd1d1ed7cc1564 |

| interface    | public                           |

| region       | RegionOne                        |

| region_id    | RegionOne                        |

| service_id   | 6f106feeb8ec40838aa189ef94aafc4c |

| service_name | glance                           |

| service_type | image                            |

| url          | http://controller:9292           |

+--------------+----------------------------------+

# 2. Create the endpoint for internal components

[root@controller ~]# openstack endpoint create --region RegionOne glance internal http://controller:9292

+--------------+----------------------------------+

| Field        | Value                            |

+--------------+----------------------------------+

| enabled      | True                             |

| id           | 7f0a84e96c79440eb570c8f10c96e779 |

| interface    | internal                         |

| region       | RegionOne                        |

| region_id    | RegionOne                        |

| service_id   | 6f106feeb8ec40838aa189ef94aafc4c |

| service_name | glance                           |

| service_type | image                            |

| url          | http://controller:9292           |

+--------------+----------------------------------+

# 3. Create the endpoint for the admin user

[root@controller ~]# openstack endpoint create --region RegionOne glance admin http://controller:9292

+--------------+----------------------------------+

| Field        | Value                            |

+--------------+----------------------------------+

| enabled      | True                             |

| id           | 984d6855f1c64a4cac0d3ecc25a7432d |

| interface    | admin                            |

| region       | RegionOne                        |

| region_id    | RegionOne                        |

| service_id   | 6f106feeb8ec40838aa189ef94aafc4c |

| service_name | glance                           |

| service_type | image                            |

| url          | http://controller:9292           |

+--------------+----------------------------------+
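The three endpoint commands differ only in the interface name, so they can be produced by a loop. The sketch below deliberately prints the commands instead of running them, so they can be reviewed first; `endpoint_commands` is a hypothetical helper, and the region defaults to the RegionOne value used in this tutorial.

```shell
# Hypothetical helper: print the `openstack endpoint create` command for
# each of the three interfaces of a service (dry run, nothing is executed).
endpoint_commands() {
    local service="$1" url="$2" region="${3:-RegionOne}" iface
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$region" "$service" "$iface" "$url"
    done
}
```

Once the output looks right, it can be executed with `endpoint_commands glance http://controller:9292 | sh` in a shell where admin-login has been loaded.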

4.2.3 Start the Glance Service

[root@controller ~]# systemctl enable openstack-glance-api

[root@controller ~]# systemctl start openstack-glance-api

4.3 Verify the Glance service

# 1. Check that the port is in use

[root@controller ~]# netstat -tnlup | grep 9292

tcp 0 0 0.0.0.0:9292 0.0.0.0:* LISTEN 6650/python2

# 2. Check the service status

[root@controller ~]# systemctl status openstack-glance-api

● openstack-glance-api.service - OpenStack Image Service (code-named Glance) API server

Loaded: loaded (/usr/lib/systemd/system/openstack-glance-api.service; enabled; vendor preset: disabled)

Active: active (running) since 一 2023-07-17 09:59:24 CST; 3min 29s ago

4.4 Create an image with Glance

Copy the CirrOS image downloaded from the official site (cirros-0.5.1-x86_64-disk.img) to the "/root" directory.

C:\Users\admin>scp F:\openstack环境\cirros-0.5.1-x86_64-disk.img [email protected]:/root/
cirros-0.5.1-x86_64-disk.img    100%   16MB  33.8MB/s   00:00

[root@controller ~]# ls
cirros-0.5.1-x86_64-disk.img

# 1. Create the image with Glance

[root@controller ~]# openstack image create --file cirros-0.5.1-x86_64-disk.img --disk-format qcow2 --container-format bare --public cirros

_________________________________________

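The "openstack image create" command performs the create operation on an image. Here it creates a public image named "cirros" from the file "cirros-0.5.1-x86_64-disk.img" in the current directory, with disk format "qcow2" and container format "bare".

# 2. List the images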

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 08a58e13-dab2-4378-87c4-24dc6bd99b75 | cirros | active |

+--------------------------------------+--------+--------+

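Image status reference

| Status | Description |
|---|---|
| queued | The image identifier has been reserved in the Glance registry, but no image data has been uploaded to Glance yet |
| saving | The raw image data is being uploaded to Glance |
| active | The image is fully available in Glance |
| deactivated | Access to the image data is denied to all non-admin users |
| killed | An error occurred while uploading the image data, and the image is unreadable |
| deleted | Glance has kept information about the image, but the image is no longer available (images in this state are removed automatically later) |
| pending_delete | Glance has not yet deleted the image data (an image in this state cannot be recovered) |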
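The status table can be turned into a small lookup used when scripting around `openstack image show`. This is an illustrative sketch only: `describe_image_status` is a hypothetical helper name, and the descriptions are shortened from the table above.

```shell
# describe_image_status STATUS
# Hypothetical helper: prints a short description of a Glance image status
# and returns non-zero for a status that is not in the table above.
describe_image_status() {
    case "$1" in
        queued)         echo "identifier reserved, no data uploaded yet" ;;
        saving)         echo "image data is being uploaded" ;;
        active)         echo "fully available in Glance" ;;
        deactivated)    echo "data not accessible to non-admin users" ;;
        killed)         echo "upload failed, image unreadable" ;;
        deleted)        echo "no longer available, metadata retained" ;;
        pending_delete) echo "data not yet deleted, image unrecoverable" ;;
        *)              echo "unknown status: $1"; return 1 ;;
    esac
}

# Example use on the controller (requires admin credentials to be loaded):
#   describe_image_status "$(openstack image show cirros -f value -c status)"
```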

# 3. Check the physical file (glance-api.conf sets the image storage location to /var/lib/glance/images)

[root@controller ~]# ll /var/lib/glance/images/

总用量 15956

-rw-r----- 1 glance glance 16338944 7月 20 16:17 08a58e13-dab2-4378-87c4-24dc6bd99b75
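Beyond checking that the file exists, the upload can be checked by comparing the MD5 of the local file with the `checksum` field that Glance records for the image. The sketch below assumes `md5sum` is available, as it is on CentOS; `file_md5` and `checksum_matches` are hypothetical helper names.

```shell
# file_md5 FILE: print only the MD5 digest of FILE
file_md5() {
    md5sum "$1" | awk '{ print $1 }'
}

# checksum_matches FILE EXPECTED_MD5: succeed when the digests are equal
checksum_matches() {
    [ "$(file_md5 "$1")" = "$2" ]
}

# Example use on the controller (requires admin credentials to be loaded):
#   checksum_matches cirros-0.5.1-x86_64-disk.img \
#       "$(openstack image show cirros -f value -c checksum)" && echo "upload intact"
```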

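4.5 Glance installation checklist

After verifying that everything is correct, save controller-node snapshot 3: Glance installation complete

5. Installing the Placement service (controller node)

5.1 Install and configure the Placement service

5.1.1 Install the placement package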

[root@controller ~]# yum install openstack-placement-api

# Installing the package above automatically creates a user named "placement" and a group of the same name

[root@controller ~]# cat /etc/passwd | grep placement

placement:x:983:977:OpenStack Placement:/:/bin/bash

[root@controller ~]# cat /etc/group | grep placement

placement:x:977:

5.1.2 Create the Placement database and grant privileges

- Log in to the database

[root@controller ~]# mysql -uroot -p000000
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 38
Server version: 10.3.20-MariaDB MariaDB Server
Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]>

- Create the "placement" database

MariaDB [(none)]> CREATE DATABASE placement;
Query OK, 1 row affected (0.000 sec)

- Grant privileges on the database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.008 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.001 sec)

5.1.3 Edit the Placement configuration file

- Strip comments and blank lines from the configuration file

# 1. Back up the configuration file
[root@controller ~]# cp /etc/placement/placement.conf /etc/placement/placement.bak
[root@controller ~]# ls /etc/placement/
placement.bak  placement.conf  policy.json

# 2. Strip comments and blank lines to generate a new configuration file
[root@controller ~]# grep -Ev '^$|#' /etc/placement/placement.bak > /etc/placement/placement.conf
[root@controller ~]# cat /etc/placement/placement.conf
[DEFAULT]
[api]
[cors]
[keystone_authtoken]
[oslo_policy]
[placement]
[placement_database]
[profiler]
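The pattern `'^$|#'` removes every line that contains a `#` anywhere, which is fine for the freshly installed sample file but would also drop a setting whose value contains `#`. A slightly stricter variant, sketched below, removes only blank lines and lines whose first non-blank character is `#`. `strip_conf` is a hypothetical helper name.

```shell
# strip_conf FILE
# Print FILE without blank (or whitespace-only) lines and without lines
# whose first non-blank character is '#'. Lines that merely contain a '#'
# somewhere in the value are kept.
strip_conf() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Example use, mirroring the step above:
#   strip_conf /etc/placement/placement.bak > /etc/placement/placement.conf
```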

- Edit the configuration

[root@controller ~]# vim /etc/placement/placement.conf

# Database connection
[placement_database]
connection = mysql+pymysql://placement:000000@controller/placement

# Keystone integration
[api]
auth_strategy = keystone

[keystone_authtoken]
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = placement
password = 000000

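5.1.4 Edit the Apache configuration file

[root@controller ~]# vim /etc/httpd/conf.d/00-placement-api.conf

# Add the following inside the "VirtualHost" block (it tells the web server that, if the Apache version is 2.4 or later, all requests are granted access to the "/usr/bin" directory)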

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

</Directory>

# Check the current web server version

[root@controller ~]# httpd -v

Server version: Apache/2.4.6 (CentOS)

5.1.5 Initialize the Placement database

- Synchronize the database

[root@controller ~]# su placement -s /bin/sh -c "placement-manage db sync"

- Check that the database was synchronized successfully

[root@controller ~]# mysql -uroot -p000000
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 41
Server version: 10.3.20-MariaDB MariaDB Server
Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]>

MariaDB [(none)]> use placement;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A
Database changed

MariaDB [placement]> show tables;
+------------------------------+
| Tables_in_placement          |
+------------------------------+
| alembic_version              |
| allocations                  |
| consumers                    |
| inventories                  |
| placement_aggregates         |
| projects                     |
| resource_classes             |
| resource_provider_aggregates |
| resource_provider_traits     |
| resource_providers           |
| traits                       |
| users                        |
+------------------------------+
12 rows in set (0.001 sec)

5.2 Initialize the Placement component

5.2.1 Create the Placement user and assign a role

- Load the environment variables to simulate a login

[root@controller ~]# source admin-login

- Create the user "placement" in the OpenStack cloud platform

[root@controller ~]# openstack user create --domain default --password 000000 placement
+---------------------+----------------------------------+
| Field               | Value                            |
+---------------------+----------------------------------+
| domain_id           | default                          |
| enabled             | True                             |
| id                  | 5b7a7b4f7e9144888ba23857e5cb828d |
| name                | placement                        |
| options             | {}                               |
| password_expires_at | None                             |
+---------------------+----------------------------------+
# This creates a user named "placement" with password "000000" in the "default" domain.
# The user name and password set here must match those in the "[keystone_authtoken]" section of "/etc/placement/placement.conf".

- Assign the "admin" role to the user "placement"

# Grant the "placement" user the "admin" role on the "project" project
[root@controller ~]# openstack role add --project project --user placement admin

5.2.2 Create the Placement service and its endpoints

- Create the service

[root@controller ~]# openstack service create --name placement placement
+---------+----------------------------------+
| Field   | Value                            |
+---------+----------------------------------+
| enabled | True                             |
| id      | aed5079ceead4dae80b084d59e0b71d6 |
| name    | placement                        |
| type    | placement                        |
+---------+----------------------------------+

- Create the service endpoints

# 1. Create the endpoint for public users
[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | e1e889616b2d47cc82f072ad5cfa08f4 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | aed5079ceead4dae80b084d59e0b71d6 |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

# 2. Create the endpoint for internal components
[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 18bab6d33adf4a0586f7e74dd5f1078a |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | aed5079ceead4dae80b084d59e0b71d6 |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

# 3. Create the endpoint for the admin user
[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 47dc11279c3e42feaa8abcf0c19cc517 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | aed5079ceead4dae80b084d59e0b71d6 |
| service_name | placement                        |
| service_type | placement                        |
| url          | http://controller:8778           |
+--------------+----------------------------------+

5.2.3 Start the Placement service

# Like Keystone, Placement is served through Apache, so restarting the Apache service is enough for the configuration to take effect

[root@controller ~]# systemctl restart httpd

5.3 Check the Placement service

- Check that the port is in use

[root@controller ~]# netstat -tnlup | grep 8778
tcp6       0      0 :::8778                 :::*                    LISTEN      21474/httpd

- Check the service endpoint

[root@controller ~]# curl http://controller:8778
{"versions": [{"status": "CURRENT", "min_version": "1.0", "max_version": "1.36", "id": "v1.0", "links": [{"href": "", "rel": "self"}]}]}
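The JSON returned by the endpoint can also be checked in a script instead of by eye. The sketch below relies only on `grep` and `sed` and on the response layout shown above. `placement_is_current` and `placement_max_version` are hypothetical helper names, and the text matching is deliberately naive rather than a real JSON parser.

```shell
# placement_is_current: read the version document on stdin and succeed
# if it advertises a version with status CURRENT.
placement_is_current() {
    grep -q '"status": *"CURRENT"'
}

# placement_max_version: read the version document on stdin and print
# the value of "max_version".
placement_max_version() {
    sed -n 's/.*"max_version": *"\([^"]*\)".*/\1/p'
}

# Example use on the controller:
#   curl -s http://controller:8778 | placement_is_current && echo "Placement API is up"
```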

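5.4 Placement installation checklist

After verifying that everything is correct, save controller-node snapshot 4: Placement installation complete

6. Installing the Compute service (Nova)

6.1 Install and configure the Nova service on the controller node

6.1.1 Install the Nova packages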

[root@controller ~]# yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-scheduler openstack-nova-novncproxy

___________________________________________

openstack-nova-api:Nova与外部的接口模块

openstack-nova-conductor:Nova传导服务模块,提供数据库访问

nova-scheduler:Nova调度服务模块,用以选择某台主机进行云主机创建

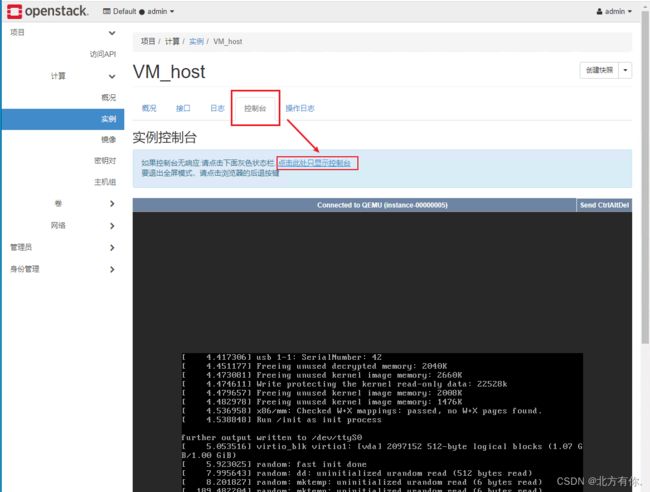

openstack-nova-novncproxy:Nova的虚拟网络控制台(Virtual Network Console,VNC)代理模块,支持用户提供VNC访问云主机

# 安装nova时,会自动创建名为nova的系统用户和用户组

[root@controller ~]# cat /etc/passwd | grep nova

nova:x:162:162:OpenStack Nova Daemons:/var/lib/nova:/sbin/nologin

[root@controller ~]# cat /etc/group | grep nova

nobody:x:99:nova

nova:x:162:nova

6.1.2 Create the Nova databases and grant privileges

- Log in to the database

[root@controller ~]# mysql -uroot -p000000
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 54
Server version: 10.3.20-MariaDB MariaDB Server
Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]>

- Create the "nova_api", "nova_cell0", and "nova" databases

MariaDB [(none)]> CREATE DATABASE nova_api;
Query OK, 1 row affected (0.006 sec)

MariaDB [(none)]> CREATE DATABASE nova_cell0;
Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> CREATE DATABASE nova;
Query OK, 1 row affected (0.000 sec)

- Grant privileges on the databases

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.004 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.001 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.001 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.006 sec)
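Every service in this guide repeats the same create-and-grant statements, so the SQL can be generated rather than typed. The sketch below only prints SQL and leaves piping it into `mysql` to the reader. `gen_db_sql` is a hypothetical helper name, and `IF NOT EXISTS` is an addition not used in the original steps, included so the output is safe to re-run.

```shell
# gen_db_sql USER PASSWORD DB [DB...]
# Print CREATE DATABASE and GRANT statements for each database, granting
# USER access from both 'localhost' and '%' as in the steps above.
gen_db_sql() {
    local user="$1" pass="$2"
    shift 2
    local db host
    for db in "$@"; do
        echo "CREATE DATABASE IF NOT EXISTS ${db};"
        for host in 'localhost' '%'; do
            echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
        done
    done
}

# Example use on the controller:
#   gen_db_sql nova 000000 nova_api nova_cell0 nova | mysql -uroot -p000000
```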

6.1.3 Edit the Nova configuration file

- Strip comments and blank lines from the configuration file

# 1. Back up the configuration file
[root@controller ~]# cp /etc/nova/nova.conf /etc/nova/nova.bak
[root@controller ~]# ls /etc/nova/nova*
/etc/nova/nova.bak  /etc/nova/nova.conf

# 2. Strip comments and blank lines to generate a new file
[root@controller ~]# grep -Ev '^$|#' /etc/nova/nova.bak > /etc/nova/nova.conf
[root@controller ~]# cat /etc/nova/nova.conf
[DEFAULT]
[api]
[api_database]
[barbican]
[cache]
[cinder]
[compute]
[conductor]
[console]
[consoleauth]
[cors]
[database]
[devices]
[ephemeral_storage_encryption]
[filter_scheduler]
[glance]
[guestfs]
[healthcheck]
[hyperv]
[ironic]

- Write the configuration

[root@controller ~]# vim /etc/nova/nova.conf

# 1. Connections to the "nova_api" and "nova" databases
[api_database]
connection = mysql+pymysql://nova:000000@controller/nova_api

[database]
connection = mysql+pymysql://nova:000000@controller/nova

# 2. Keystone integration
[api]
auth_strategy = keystone

[keystone_authtoken]
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = nova
password = 000000

# 3. Placement integration
[placement]
auth_url = http://controller:5000
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = placement
password = 000000
region_name = RegionOne

# 4. Glance integration
[glance]
api_servers = http://controller:9292

# 5. Lock path
[oslo_concurrency]
lock_path = /var/lib/nova/tmp

# 6. Message queue and firewall settings
[DEFAULT]
# APIs to enable; separate multiple APIs with commas
enabled_apis = osapi_compute,metadata
# Format: rabbit://rabbitmq_username:password@node_address_or_hostname:5672
transport_url = rabbit://openstack:000000@controller:5672
my_ip = 192.168.223.130
use_neutron = true
firewall_driver = nova.virt.firewall.NoopFirewallDriver

# 7. VNC connection settings
[vnc]
enabled = true
server_listen = $my_ip
server_proxyclient_address = $my_ip

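6.1.4 Initialize the Nova databases

- Initialize the "nova_api" database

# The command printing nothing when it finishes means the initialization succeeded
[root@controller ~]# su nova -s /bin/sh -c "nova-manage api_db sync"

- Create the "cell1" cell, which uses the "nova" database

[root@controller ~]# su nova -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1"

- Map "nova" to the "cell0" database so that the table structure of "cell0" stays consistent with that of "nova"

[root@controller ~]# su nova -s /bin/sh -c "nova-manage cell_v2 map_cell0"

- Initialize the "nova" database; because of the mapping, the same tables are created in "cell0" as well

[root@controller ~]# su nova -s /bin/sh -c "nova-manage db sync"

6.1.5 Verify that the cells are registered correctly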

[root@controller ~]# nova-manage cell_v2 list_cells

6.1.6 Initialize the Nova component

6.1.6-1 Create the Nova user and assign a role

- Load the environment variables to simulate a login

[root@controller ~]# source admin-login

- Create the user "nova" in the OpenStack cloud platform

[root@controller ~]# openstack user create --domain default --password 000000 nova
+---------------------+----------------------------------+
| Field               | Value                            |
+---------------------+----------------------------------+
| domain_id           | default                          |
| enabled             | True                             |
| id                  | 23acdca9cd1244bcb8aeb8d117a93db1 |
| name                | nova                             |
| options             | {}                               |
| password_expires_at | None                             |
+---------------------+----------------------------------+
# The user name and password here must match those in the "[keystone_authtoken]" section of "nova.conf"

- Assign the "admin" role to the user "nova"

[root@controller ~]# openstack role add --project project --user nova admin

6.1.6-2 Create the Nova service and its endpoints

- Create the service

[root@controller ~]# openstack service create --name nova compute
+---------+----------------------------------+
| Field   | Value                            |
+---------+----------------------------------+
| enabled | True                             |
| id      | d7cb10067a04401e95e8144fd5f8a3f3 |
| name    | nova                             |
| type    | compute                          |
+---------+----------------------------------+

- Create the service endpoints

# 1. Create the endpoint for public users
[root@controller ~]# openstack endpoint create --region RegionOne nova public http://controller:8774/v2.1
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 61bb957c9e714f7db1856af5c61c115f |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | d7cb10067a04401e95e8144fd5f8a3f3 |
| service_name | nova                             |
| service_type | compute                          |
| url          | http://controller:8774/v2.1      |
+--------------+----------------------------------+

# 2. Create the endpoint for internal components
[root@controller ~]# openstack endpoint create --region RegionOne nova internal http://controller:8774/v2.1
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | da3afa1b7b01486cad2109a4bce58077 |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | d7cb10067a04401e95e8144fd5f8a3f3 |
| service_name | nova                             |
| service_type | compute                          |
| url          | http://controller:8774/v2.1      |
+--------------+----------------------------------+

# 3. Create the endpoint for the admin user
[root@controller ~]# openstack endpoint create --region RegionOne nova admin http://controller:8774/v2.1
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 4c67400ca28c4dba9aebb364f030bb57 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | d7cb10067a04401e95e8144fd5f8a3f3 |
| service_name | nova                             |
| service_type | compute                          |
| url          | http://controller:8774/v2.1      |
+--------------+----------------------------------+

6.1.6-3 Start the Nova services on the controller node

[root@controller ~]# systemctl enable openstack-nova-api openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

[root@controller ~]# systemctl start openstack-nova-api openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

6.1.7 Check the Nova services on the controller node

- Check that the ports are in use

[root@controller ~]# netstat -nutpl | grep 877
tcp        0      0 0.0.0.0:8774            0.0.0.0:*               LISTEN      10861/python2
tcp        0      0 0.0.0.0:8775            0.0.0.0:*               LISTEN      10861/python2
tcp6       0      0 :::8778                 :::*                    LISTEN      8975/httpd

- List the compute services

[root@controller ~]# openstack compute service list
+----+----------------+------------+----------+---------+-------+----------------------------+
| ID | Binary         | Host       | Zone     | Status  | State | Updated At                 |
+----+----------------+------------+----------+---------+-------+----------------------------+
|  4 | nova-conductor | controller | internal | enabled | up    | 2023-07-24T05:22:04.000000 |
|  5 | nova-scheduler | controller | internal | enabled | up    | 2023-07-24T05:22:01.000000 |
+----+----------------+------------+----------+---------+-------+----------------------------+
# The service is healthy when both "nova-conductor" and "nova-scheduler" are in the "up" state on the controller node and show a current update time.
# If either module is "down", check the corresponding module log under "/var/log/nova/".

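For example, /var/log/nova/nova-scheduler.log may contain the following:

ERROR oslo_service.service AccessRefused: (0, 0): (403) ACCESS_REFUSED - Login was refused using authentication mechanism AMQPLAIN. For details see the broker logfile.

This means that RabbitMQ refused the login attempted with the AMQPLAIN authentication mechanism.

Fix: in the Nova configuration file /etc/nova/nova.conf, check that

the value of transport_url has the form rabbit://rabbitmq_username:password@node_address_or_hostname:5672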
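A quick format check can catch a malformed `transport_url` before the services are restarted. The sketch below validates only the single-host form used in this guide (user, password, host, port). It does not check whether the credentials are correct, and it would reject the multi-host or virtual-host forms that oslo.messaging also accepts. `check_transport_url` is a hypothetical helper name.

```shell
# check_transport_url URL
# Succeed if URL looks like rabbit://user:password@host:port (single host).
check_transport_url() {
    printf '%s\n' "$1" | grep -Eq '^rabbit://[^:@/]+:[^@/]+@[^:@/]+:[0-9]+/?$'
}

# Example use on a node, reading the value from nova.conf:
#   url="$(awk -F' *= *' '$1 == "transport_url" { print $2 }' /etc/nova/nova.conf)"
#   check_transport_url "$url" || echo "transport_url looks malformed: $url"
```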

6.2 Install and configure the Nova service on the compute node

6.2.1 Install the Nova package

- Install

[root@compute ~]# yum -y install openstack-nova-compute

- Check

[root@compute ~]# cat /etc/passwd | grep nova
nova:x:162:162:OpenStack Nova Daemons:/var/lib/nova:/sbin/nologin

[root@compute ~]# cat /etc/group | grep nova
nobody:x:99:nova
qemu:x:107:nova
libvirt:x:985:nova
nova:x:162:nova

6.2.2 Edit the configuration file

- Back up the configuration file

[root@compute ~]# cp /etc/nova/nova.conf /etc/nova/nova.bak

- Strip comments and blank lines to generate a new file

[root@compute ~]# grep -Ev '^$|#' /etc/nova/nova.bak > /etc/nova/nova.conf

- Edit the configuration file

[root@compute ~]# vim /etc/nova/nova.conf

# 1. Keystone integration
[api]
auth_strategy = keystone

[keystone_authtoken]
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = nova
password = 000000

# 2. Placement integration
[placement]
auth_url = http://controller:5000
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = placement
password = 000000
region_name = RegionOne

# 3. Glance integration
[glance]
api_servers = http://controller:9292

# 4. Lock path
[oslo_concurrency]
lock_path = /var/lib/nova/tmp

# 5. Message queue and firewall settings
[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:000000@controller:5672
my_ip = 192.168.223.131
use_neutron = true
firewall_driver = nova.virt.firewall.NoopFirewallDriver

# 6. VNC connection settings
[vnc]
enabled = true
server_listen = 0.0.0.0
server_proxyclient_address = $my_ip
# Only the compute node needs the following option
novncproxy_base_url = http://controller:6080/vnc_auto.html

# 7. Set the virtualization type to QEMU
[libvirt]
virt_type = qemu

6.2.3 Start the Nova service on the compute node

[root@compute ~]# systemctl enable libvirtd openstack-nova-compute

[root@compute ~]# systemctl start libvirtd openstack-nova-compute

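# libvirtd is an open-source management interface for virtualization platforms; it provides a unified management interface for KVM, Xen, VMware ESX, QEMU, and other hypervisors

6.3 Discover the compute node and verify the services

6.3.1 Discover the compute node

- Load the environment variables to simulate a login

[root@controller ~]# . admin-login

- Discover new compute nodes

# Run the command that discovers unregistered compute nodes as the nova user
[root@controller ~]# su nova -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose"
Found 2 cell mappings.
Getting computes from cell 'cell1': 30253634-2832-4b44-93d1-88e6f02cbe8a
Checking host mapping for compute host 'compute': c5869d42-3625-47a7-8a61-5f476f38b3c4
Creating host mapping for compute host 'compute': c5869d42-3625-47a7-8a61-5f476f38b3c4
Found 1 unmapped computes in cell: 30253634-2832-4b44-93d1-88e6f02cbe8a
Skipping cell0 since it does not contain hosts.
# Once discovered, the compute node is automatically associated with the "cell1" cell and can then be managed through it

- Enable automatic discovery

OpenStack can have many compute nodes, and the discovery command has to be run once for every node that is added. To reduce this work, the configuration file can be set to run discovery automatically at a fixed interval.

[root@controller ~]# vim /etc/nova/nova.conf

[scheduler]
# Run discovery automatically every 60 seconds
discover_hosts_in_cells_interval = 60

# Restart the scheduler, which runs the periodic discovery, so that the setting takes effect
[root@controller ~]# systemctl restart openstack-nova-scheduler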

6.3.2 Verify the Nova service

# Method 1: check the state of each module of the compute service

[root@controller ~]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------------+----------+---------+-------+----------------------------+

| 4 | nova-conductor | controller | internal | enabled | up | 2023-07-24T07:45:40.000000 |

| 5 | nova-scheduler | controller | internal | enabled | up | 2023-07-24T07:45:47.000000 |

| 7 | nova-compute | compute | nova | enabled | up | 2023-07-24T07:45:41.000000 |

+----+----------------+------------+----------+---------+-------+----------------------------+

# The service is healthy when every module (Binary) shows the state (State) "up"
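The same check can be scripted by asking the client for plain values instead of a table. The sketch below assumes the input is the output of `openstack compute service list -f value -c Binary -c Host -c State`, that is, one line per service with three space-separated fields. `down_services` is a hypothetical helper name.

```shell
# down_services: read "binary host state" lines on stdin and print
# "binary@host" for every service whose state is not "up".
down_services() {
    awk '$3 != "up" { print $1 "@" $2 }'
}

# Example use on the controller (requires admin credentials to be loaded):
#   openstack compute service list -f value -c Binary -c Host -c State | down_services
```

No output means every service reported "up".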

# Method 2: list the existing OpenStack services and their endpoints

[root@controller ~]# openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| glance | image | RegionOne |

| | | internal: http://controller:9292 |

| | | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | |

| keystone | identity | RegionOne |

| | | admin: http://controller:5000/v3 |

| | | RegionOne |

| | | public: http://controller:5000/v3 |

| | | RegionOne |

| | | internal: http://controller:5000/v3 |

| | | |

| placement | placement | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | |

+-----------+-----------+-----------------------------------------+

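# The output shows the name (Name), service type (Type), and service endpoints (Endpoints) of the 4 services currently in the OpenStack cloud platform

# Method 3: use the Nova status checking tool "nova-status"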

[root@controller ~]# nova-status upgrade check

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------+

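# Everything is running normally when every check shows the result (Result) "Success"

6.4 Nova installation checklist

Create controller-node snapshot 5: Nova installation complete

Create compute-node snapshot 2: Nova installation complete

7. Installing the Networking service (Neutron)

7.1 Prepare the initial network environment (controller node, compute node)

7.1.1 Set the NIC to promiscuous mode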

- Set the external-network NIC to promiscuous mode

[root@controller ~]# ifconfig ens33 promisc
[root@compute ~]# ifconfig ens33 promisc

- Check the NIC information

Once promiscuous mode is set, "PROMISC" appears in the NIC flags, and the NIC accepts all traffic that passes through it, whether or not the NIC is the intended recipient.

[root@controller ~]# ip a
2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:4c:30:55 brd ff:ff:ff:ff:ff:ff
    inet 192.168.182.136/24 brd 192.168.182.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::8b37:6ff4:79ac:9a35/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

[root@compute ~]# ip a
2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:50:56:28:ba:e8 brd ff:ff:ff:ff:ff:ff
    inet 192.168.182.137/24 brd 192.168.182.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::8b37:6ff4:79ac:9a35/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::2a6c:ca:3ac3:fa4/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

- Make the setting take effect again after a reboot

# Append the command to the end of the environment configuration file /etc/profile; it is then re-run whenever a login shell starts after boot
[root@controller ~]# vim /etc/profile
ifconfig ens33 promisc

[root@compute ~]# vim /etc/profile
ifconfig ens33 promisc

7.1.2 Load the bridge firewall module

- Edit the configuration file

[root@controller ~]# vim /etc/sysctl.conf
[root@compute ~]# vim /etc/sysctl.conf

# Append the following to the end of the file
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1

- Load the "br_netfilter" module

[root@controller ~]# modprobe br_netfilter
[root@compute ~]# modprobe br_netfilter

- Check that the module is loaded and the settings apply

[root@controller ~]# sysctl -p
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1

[root@compute ~]# sysctl -p
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
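A module loaded with `modprobe` does not survive a reboot, and the `net.bridge.*` keys exist only while `br_netfilter` is loaded. On systemd-based systems such as CentOS 7, modules listed in files under /etc/modules-load.d/ are loaded at boot. The sketch below writes such a file. `persist_module` is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a scratch directory.

```shell
# persist_module MODULE [CONF_DIR]
# Write CONF_DIR/MODULE.conf containing the module name so that
# systemd-modules-load loads it at boot. CONF_DIR defaults to
# /etc/modules-load.d and must be writable (run as root on a real node).
persist_module() {
    local module="$1" conf_dir="${2:-/etc/modules-load.d}"
    mkdir -p "$conf_dir" && printf '%s\n' "$module" > "$conf_dir/$module.conf"
}

# Example use on both nodes:
#   persist_module br_netfilter
```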

7.2 Install and configure the Neutron service on the controller node

7.2.1 Install the Neutron packages

- Install

[root@controller ~]# yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge

- Check the user and group

[root@controller ~]# cat /etc/passwd | grep neutron
neutron:x:981:975:OpenStack Neutron Daemons:/var/lib/neutron:/sbin/nologin

[root@controller ~]# cat /etc/group | grep neutron
neutron:x:975:

7.2.2 Create the Neutron database and grant privileges

- Log in to the database

[root@controller ~]# mysql -uroot -p000000
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 115
Server version: 10.3.20-MariaDB MariaDB Server
Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]>

- Create the neutron database

MariaDB [(none)]> CREATE DATABASE neutron;
Query OK, 1 row affected (0.001 sec)

- Grant privileges on the database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.013 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '000000';
Query OK, 0 rows affected (0.001 sec)

7.2.3 Edit the configuration files

- Configure the Neutron component

# 1. Back up the configuration file
[root@controller ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.bak

# 2. Strip blank lines and comments to generate a new file
[root@controller ~]# grep -Ev '^$|#' /etc/neutron/neutron.bak > /etc/neutron/neutron.conf
[root@controller ~]# cat /etc/neutron/neutron.conf
[DEFAULT]
[cors]
[database]
[keystone_authtoken]
[oslo_concurrency]
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_middleware]
[oslo_policy]
[privsep]
[ssl]

# 3. Edit the configuration
[root@controller ~]# vim /etc/neutron/neutron.conf

[DEFAULT]
core_plugin = ml2
service_plugins =
transport_url = rabbit://openstack:000000@controller:5672
auth_strategy = keystone
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true

[database]
connection = mysql+pymysql://neutron:000000@controller/neutron

[keystone_authtoken]
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = project
username = neutron
password = 000000

[oslo_concurrency]
lock_path = /var/lib/neutron/tmp

[nova]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = project
username = nova
password = 000000
region_name = RegionOne
server_proxyclient_address = 192.168.223.130

# Additional configuration for interaction with Nova
[neutron]
auth_url = http://192.168.223.130:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = project
username = neutron
password = 000000
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET

- Edit the configuration file of the Modular Layer 2 plugin (ML2 plugin)

# 1. Back up the configuration file
[root@controller ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.bak

# 2. Strip blank lines and comments to generate a new file
[root@controller ~]# grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.bak > /etc/neutron/plugins/ml2/ml2_conf.ini
[root@controller ~]# cat /etc/neutron/plugins/ml2/ml2_conf.ini
[DEFAULT]

# 3. Edit the configuration file
[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]
type_drivers = flat
tenant_network_types =
mechanism_drivers = linuxbridge
extension_drivers = port_security

[ml2_type_flat]
flat_networks = provider

[securitygroup]
enable_ipset = true

# 4. Enable the ML2 plugin
# Only plugin files under "/etc/neutron/" take effect, so "ml2_conf.ini" is linked as "plugin.ini" under "/etc/neutron/" to enable the ML2 plugin
[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

- Edit the configuration file of the Linux bridge agent (linuxbridge_agent)

# 1. Back up the configuration file
[root@controller ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.bak

# 2. Strip blank lines and comments to generate a new file
[root@controller ~]# grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[root@controller ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[DEFAULT]

# 3. Edit the configuration file
[root@controller ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]
# "provider" here is the value of "flat_networks" in the ML2 plugin configuration; it is mapped to the external-network NIC
physical_interface_mappings = provider:ens33

[vxlan]
enable_vxlan = false

[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Edit the configuration file of the DHCP agent (dhcp-agent)

# 1. Back up the configuration file
[root@controller ~]# cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.bak

# 2. Strip comments and blank lines from the configuration file
[root@controller ~]# grep -Ev '^$|#' /etc/neutron/dhcp_agent.bak > /etc/neutron/dhcp_agent.ini
[root@controller ~]# cat /etc/neutron/dhcp_agent.ini
[DEFAULT]

# 3. Edit the configuration file
[root@controller ~]# vim /etc/neutron/dhcp_agent.ini

[DEFAULT]
interface_driver = linuxbridge
dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
enable_isolated_metadata = true

- Edit the configuration file of the metadata agent (neutron-metadata-agent)

# Configure the Nova metadata host and the shared secret used by the metadata proxy
[root@controller ~]# vim /etc/neutron/metadata_agent.ini

[DEFAULT]
nova_metadata_host = controller
metadata_proxy_shared_secret = METADATA_SECRET

- Edit the Nova configuration file

[root@controller ~]# vim /etc/nova/nova.conf

[neutron]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = project
username = neutron
password = 000000
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET
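The metadata proxy works only when the shared secret in the `[neutron]` section of nova.conf matches the one in metadata_agent.ini, and a misspelled key name simply leaves the option unset. The sketch below reads one key from one section of an INI-style file so the two values can be compared. `ini_get` is a hypothetical helper name, and the parser is deliberately simple: one `key = value` pair per line, no continuation lines.

```shell
# ini_get FILE SECTION KEY
# Print the value of KEY inside [SECTION] of FILE (nothing if absent).
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[.*\]$/ { in_section = ($0 == "[" section "]"); next }
        in_section {
            split($0, kv, "=")
            k = kv[1]
            gsub(/^[ \t]+|[ \t]+$/, "", k)          # trim the key
            if (k == key) {
                v = substr($0, index($0, "=") + 1)  # everything after the first "="
                gsub(/^[ \t]+|[ \t]+$/, "", v)      # trim the value
                print v
            }
        }
    ' "$1"
}

# Example use on the controller:
#   a="$(ini_get /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret)"
#   b="$(ini_get /etc/nova/nova.conf neutron metadata_proxy_shared_secret)"
#   [ -n "$a" ] && [ "$a" = "$b" ] && echo "shared secrets match"
```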

7.2.4 Synchronize the database

[root@controller ~]# su neutron -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head"

# Verify that the synchronization succeeded

MariaDB [neutron]> show tables;

+-----------------------------------------+

| Tables_in_neutron |

+-----------------------------------------+

| address_scopes |

| agents |

| alembic_version |

| allowedaddresspairs |

| arista_provisioned_nets |

| arista_provisioned_tenants |

| arista_provisioned_vms |

| auto_allocated_topologies |

| bgp_peers |

| bgp_speaker_dragent_bindings |

| bgp_speaker_network_bindings |

| bgp_speaker_peer_bindings |

| bgp_speakers |

| brocadenetworks |

| brocadeports |

| cisco_csr_identifier_map |

| cisco_hosting_devices |

| cisco_ml2_apic_contracts |

| cisco_ml2_apic_host_links |

7.2.5 Initialize the Neutron component

7.2.5.1 Create the Neutron user and assign a role

- Load the environment variables to simulate a login

[root@controller ~]# . admin-login

- Create the user "neutron" in the OpenStack cloud platform

[root@controller ~]# openstack user create --domain default --password 000000 neutron
+---------------------+----------------------------------+
| Field               | Value                            |
+---------------------+----------------------------------+
| domain_id           | default                          |
| enabled             | True                             |
| id                  | d263edcf60b4441c9d47354ec2384147 |
| name                | neutron                          |
| options             | {}                               |
| password_expires_at | None                             |
+---------------------+----------------------------------+
# The user name and password here must match those in the "[keystone_authtoken]" section of neutron.conf

- Assign the "admin" role to the user "neutron"

[root@controller ~]# openstack role add --project project --user neutron admin

7.2.5.2 Create the Neutron service and endpoints

- Create the service

[root@controller ~]# openstack service create --name neutron network
+---------+----------------------------------+
| Field   | Value                            |
+---------+----------------------------------+
| enabled | True                             |
| id      | e5405504e48440469bbe448e3eb710d1 |
| name    | neutron                          |
| type    | network                          |
+---------+----------------------------------+

- Create the service endpoints

# 1. Create the public endpoint
[root@controller ~]# openstack endpoint create --region RegionOne neutron public http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | ebc4c55594aa47f1881180d56fc93f89 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | e5405504e48440469bbe448e3eb710d1 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
# 2. Create the internal endpoint
[root@controller ~]# openstack endpoint create --region RegionOne neutron internal http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 15f15d559c254fdb929442fef81ecb85 |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | e5405504e48440469bbe448e3eb710d1 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
# 3. Create the admin endpoint
[root@controller ~]# openstack endpoint create --region RegionOne neutron admin http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 3473e4bd3190471e891bea2f156739f2 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | e5405504e48440469bbe448e3eb710d1 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
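
The three endpoint commands differ only in the interface name, so they can be expressed as a loop. The sketch below is an alternative to typing the three commands, not an additional step, because running it after the commands above would create duplicate endpoints. The function name and the OPENSTACK_CMD override variable are hypothetical; the override exists only so the commands can be previewed with echo before they are run for real.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the public, internal and admin endpoints for the
# "neutron" service. Set OPENSTACK_CMD=echo to preview the commands (dry run).
create_network_endpoints() {
    local url="${1:-http://controller:9696}"
    local region="${2:-RegionOne}"
    local cli="${OPENSTACK_CMD:-openstack}"
    local iface
    for iface in public internal admin; do
        $cli endpoint create --region "$region" neutron "$iface" "$url" || return 1
    done
}
```

For a preview, "OPENSTACK_CMD=echo create_network_endpoints" prints the three argument lists without contacting Keystone.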

7.2.6 Start the Neutron services on the controller node

- Restart the Nova service

[root@controller ~]# systemctl restart openstack-nova-api

- Start the Neutron services

Enable and start, in turn, the Neutron server, the Linux bridge agent, the DHCP agent, and the metadata agent.

[root@controller ~]# systemctl enable neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent
[root@controller ~]# systemctl start neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent
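
When several units are started in one command, it is not obvious which one failed. A minimal sketch that handles the four units one at a time and stops at the first failure is shown below. The function name and the SYSTEMCTL_CMD override variable are hypothetical, and the override is there only for dry runs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable and start each controller-side Neutron unit,
# then print its state with "systemctl is-active".
start_neutron_services() {
    local ctl="${SYSTEMCTL_CMD:-systemctl}"
    local svc
    for svc in neutron-server neutron-linuxbridge-agent \
               neutron-dhcp-agent neutron-metadata-agent; do
        if ! { $ctl enable "$svc" && $ctl start "$svc"; }; then
            echo "failed to enable or start: $svc" >&2
            return 1
        fi
        $ctl is-active "$svc"
    done
}
```

With the real systemctl, each unit should print "active"; the journal for a failing unit can then be inspected with "journalctl -u" followed by the unit name.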

7.2.7 Check the Neutron services on the controller node

- Check that the port is listening

[root@controller ~]# netstat -tnlup | grep 9696
tcp 0 0 0.0.0.0:9696 0.0.0.0:* LISTEN 40342/server.log

- Test the service endpoint

[root@controller ~]# curl http://controller:9696
{"versions": [{"status": "CURRENT", "id": "v2.0", "links": [{"href": "http://controller:9696/v2.0/", "rel": "self"}]}]}

- Check that the service is running

[root@controller ~]# systemctl status neutron-server
● neutron-server.service - OpenStack Neutron Server
   Loaded: loaded (/usr/lib/systemd/system/neutron-server.service; enabled; vendor preset: disabled)
   Active: active (running) since 二 2023-07-25 10:32:56 CST; 7min ago
 Main PID: 40342 (/usr/bin/python)
    Tasks: 6
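
The endpoint test can be turned into a pass/fail check by looking for the v2.0 entry with status CURRENT in the response shown above. This is a rough sketch that matches text with grep instead of parsing JSON, and the function name neutron_api_current is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the version document from stdin and succeed only
# if it mentions API v2.0 and a CURRENT status.
neutron_api_current() {
    local body
    body="$(cat)"
    printf '%s' "$body" | grep -q '"id": *"v2\.0"' &&
        printf '%s' "$body" | grep -q '"status": *"CURRENT"'
}
```

On the controller node this could be used as "curl -s http://controller:9696 | neutron_api_current && echo API is up".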

7.3 Install and configure the Neutron service on the compute node

7.3.1 Install the Neutron package

- Install the package

[root@compute ~]# yum install openstack-neutron-linuxbridge

- Check the user and group information

[root@compute ~]# cat /etc/passwd | grep neutron
neutron:x:986:980:OpenStack Neutron Daemons:/var/lib/neutron:/sbin/nologin
[root@compute ~]# cat /etc/group | grep neutron
neutron:x:980:

7.3.2 Edit the Neutron configuration files

- Configure the Neutron component

# 1. Back up the configuration file
[root@compute ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.bak
# 2. Strip comments and blank lines to produce a new file
[root@compute ~]# grep -Ev '^$|#' /etc/neutron/neutron.bak > /etc/neutron/neutron.conf
[root@compute ~]# cat /etc/neutron/neutron.conf
[DEFAULT]
[cors]
[database]
[keystone_authtoken]
[oslo_concurrency]
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_middleware]
[oslo_policy]
[privsep]
[ssl]
# 3. Edit the configuration
[root@compute ~]# vim /etc/neutron/neutron.conf
[DEFAULT]
transport_url = rabbit://openstack:000000@controller:5672
auth_strategy = keystone
[keystone_authtoken]
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = project
username = neutron
password = 000000
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp

- Edit the Linux bridge agent (linuxbridge_agent) configuration file

# 1. Back up the configuration file
[root@compute ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.bak
# 2. Strip blank lines and comments to produce a new file
[root@compute ~]# grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[root@compute ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[DEFAULT]
# 3. Edit the configuration
[root@compute ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[DEFAULT]
[linux_bridge]
# Map the provider network to the external-network NIC (keep this comment on its own line, not after the value)
physical_interface_mappings = provider:ens33
[vxlan]
enable_vxlan = false
[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Edit the Nova configuration file

[root@compute ~]# vim /etc/nova/nova.conf
[DEFAULT]
vif_plugging_is_fatal = false
vif_plugging_timeout = 0
[neutron]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = project
username = neutron
password = 000000
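
The pattern '^$|#' used in the steps above discards every line that contains "#" anywhere, not only comment lines. That is harmless for the freshly installed files, but it would silently drop an active setting whose value happens to contain "#". A slightly stricter variant is sketched below: it removes only blank lines and lines whose first non-blank character is "#". The function name strip_comments is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print FILE without blank lines and without lines whose
# first non-blank character is '#'. Lines with '#' inside a value are kept.
strip_comments() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

It would be used in the same way as the original command, for example "strip_comments /etc/neutron/neutron.bak > /etc/neutron/neutron.conf".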

7.3.3 Start the Neutron service on the compute node

- Restart the Nova service on the compute node

[root@compute ~]# systemctl restart openstack-nova-compute

- Start the Neutron Linux bridge agent and enable it at boot

[root@compute ~]# systemctl enable neutron-linuxbridge-agent
[root@compute ~]# systemctl start neutron-linuxbridge-agent

7.4 Verify the Neutron service

- List the network agents

[root@controller ~]# openstack network agent list
+-----------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ID        | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
+-----------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ---       | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
| ---       | Linux bridge agent | controller | None              | :-)   | UP    | neutron-linuxbridge-agent |
| ---       | Linux bridge agent | compute    | None              | :-)   | UP    | neutron-linuxbridge-agent |
| ---       | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
+-----------+--------------------+------------+-------------------+-------+-------+---------------------------+
# If all four agents show the smiley ":-)" in the Alive column and UP in the State column, the Neutron agents are running normally

- Check with the Neutron status tool

[root@controller ~]# neutron-status upgrade check
+---------------------------------------------------------------------+
| Upgrade Check Results                                               |
+---------------------------------------------------------------------+
| Check: Gateway external network                                     |
| Result: Success                                                     |
| Details: L3 agents can use multiple networks as external gateways.  |
+---------------------------------------------------------------------+
| Check: External network bridge                                      |
| Result: Success                                                     |
| Details: L3 agents are using integration bridge to connect external |
|   gateways                                                          |
+---------------------------------------------------------------------+
| Check: Worker counts configured                                     |
| Result: Warning                                                     |
| Details: The default number of workers has changed. Please see      |
|   release notes for the new values, but it is strongly              |
|   encouraged for deployers to manually set the values for           |
|   api_workers and rpc_workers.                                      |
+---------------------------------------------------------------------+
# Neutron is running normally if both the "Gateway external network" and "External network bridge" checks report Success
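
The rule for reading the agent list (Alive is ":-)" and State is UP for every row) can be applied mechanically. The sketch below parses the bordered table exactly as it is printed above, so it depends on that column order; the function name check_agents is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the table printed by "openstack network agent list"
# from stdin and fail if any agent is not alive or not UP.
# Assumed column order: ID, Agent Type, Host, Availability Zone, Alive, State, Binary.
check_agents() {
    awk -F'|' '
        NF >= 8 && $3 !~ /Agent Type/ {          # data rows only, skip header
            host = $4; alive = $6; state = $7; binary = $8
            gsub(/^ +| +$/, "", host);  gsub(/^ +| +$/, "", alive)
            gsub(/^ +| +$/, "", state); gsub(/^ +| +$/, "", binary)
            total++
            if (alive != ":-)" || state != "UP") {
                printf "NOT READY: %s on %s (alive=%s, state=%s)\n", binary, host, alive, state
                bad++
            }
        }
        END {
            printf "%d agents checked, %d not ready\n", total, bad
            exit (bad > 0 || total == 0)
        }
    '
}
```

Typical use would be "openstack network agent list | check_agents". For the deployment described here, a healthy result reports four agents checked and none not ready.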

7.5 Neutron service self-check worksheet

Controller node snapshot 6: Neutron configuration complete

Compute node snapshot 3: Neutron configuration complete

8. Install the Dashboard service

8.1 Install and configure the Dashboard service (compute node)

8.1.1 Install the package

[root@compute ~]# yum install openstack-dashboard

8.1.2 Configure the Dashboard service

- Open the configuration file

vi /etc/openstack-dashboard/local_settings

- Configure the basic web server settings

# 1. Allow access to the web service from any host (line 39)
ALLOWED_HOSTS = ['*']
# 2. Point to the controller node (line 119)
OPENSTACK_HOST = "controller"
# 3. Set the time zone to Asia/Shanghai (line 148)
TIME_ZONE = "Asia/Shanghai"

- Configure the cache service (line 105)

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

- Enable multi-domain support (new entry)

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True  # allow multiple domains

- Specify the OpenStack API versions (new entry)

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 3,
}

- Set the default domain for users created through the Dashboard (new entry)

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

- Set the default role for users created through the Dashboard to "user" (new entry)

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

- Configure how Neutron networking is used (line 132)

OPENSTACK_NEUTRON_NETWORK = {
    'enable_auto_allocated_network': False,
    'enable_distributed_router': False,
    'enable_fip_topology_check': False,  # modified
    'enable_ha_router': False,
    'enable_ipv6': True,  # modified
    # TODO(amotoki): Drop OPENSTACK_NEUTRON_NETWORK completely from here.
    # enable_quotas has the different default value here.
    'enable_quotas': True,  # modified
    'enable_rbac_policy': True,  # modified
    'enable_router': True,  # modified

    'default_dns_nameservers': [],
    'supported_provider_types': ['*'],
    'segmentation_id_range': {},
    'extra_provider_types': {},
    'supported_vnic_types': ['*'],
    'physical_networks': [],

}
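
Because local_settings is an ordinary Python module, a misspelled setting name does not raise an error; the Dashboard simply falls back to its default. A quick way to catch that is to confirm that each name edited in this section appears as a top-level assignment. The sketch below does only that and does not validate the values; the function name check_local_settings is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that every setting edited in section 8.1.2 is
# assigned at the start of a line in the given settings file.
check_local_settings() {
    local file="${1:-/etc/openstack-dashboard/local_settings}"
    local key missing=0
    for key in ALLOWED_HOSTS OPENSTACK_HOST TIME_ZONE SESSION_ENGINE CACHES \
               OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT OPENSTACK_API_VERSIONS \
               OPENSTACK_KEYSTONE_DEFAULT_DOMAIN OPENSTACK_KEYSTONE_DEFAULT_ROLE \
               OPENSTACK_NEUTRON_NETWORK; do
        if ! grep -Eq "^${key}[[:space:]]*=" "$file"; then
            echo "missing or misspelled: $key" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Calling check_local_settings with no argument checks the default path on the node where the Dashboard is installed.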

8.2 Publish the Dashboard service

8.2.1 Rebuild the Dashboard web application configuration file

- Change to the Dashboard site directory

[root@compute openstack-dashboard]# cd /usr/share/openstack-dashboard
[root@compute openstack-dashboard]# ll
总用量 16
-rwxr-xr-x 1 root root 831 5月 17 2021 manage.py
-rw-r--r-- 2 root root 435 5月 17 2021 manage.pyc
-rw-r--r-- 2 root root 435 5月 17 2021 manage.pyo
drwxr-xr-x 18 root root 4096 7月 25 14:31 openstack_dashboard
drwxr-xr-x 10 root root 114 7月 25 14:31 static

- Generate the Dashboard web service configuration file

[root@compute openstack-dashboard]# python manage.py make_web_conf --apache > /etc/httpd/conf.d/openstack-dashboard.conf
[root@compute openstack-dashboard]# cat /etc/httpd/conf.d/openstack-dashboard.conf
<VirtualHost *:80>
    ServerAdmin [email protected]
    ServerName openstack_dashboard
    # Note: DocumentRoot is the site root directory; it already points to the Dashboard site directory
    DocumentRoot /usr/share/openstack-dashboard/
    LogLevel warn
    ErrorLog /var/log/httpd/openstack_dashboard-error.log
    CustomLog /var/log/httpd/openstack_dashboard-access.log combined
    WSGIScriptReloading On
    WSGIDaemonProcess openstack_dashboard_website processes=3
    WSGIProcessGroup openstack_dashboard_website
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    WSGIScriptAlias / /usr/share/openstack-dashboard/openstack_dashboard/wsgi.py
    <Location "/">
        Require all granted
    </Location>
    Alias /static /usr/share/openstack-dashboard/static
    <Location "/static">
        SetHandler None
    </Location>
</Virtualhost>

- View the component API information