数仓学习笔记(5)——数仓搭建(DWD层)

目录

一、数仓搭建——DWD层

1、DWD层(用户行为日志)

1.1 日志解析思路

1.2 get_json_object函数使用

1.3 启动日志表

1.4 页面日志表

1.5 动作日志表

1.6 曝光日志表

1.7 错误日志表

1.8 DWD层用户行为数据加载脚本

2、DWD层(业务数据)

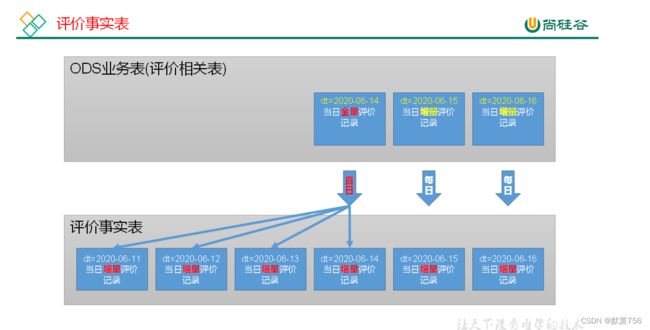

2.1 评价事实表(事务型事实表)

2.2 订单明细事实表(事务型事实表)

2.3 退单事实表(事务型事实表)

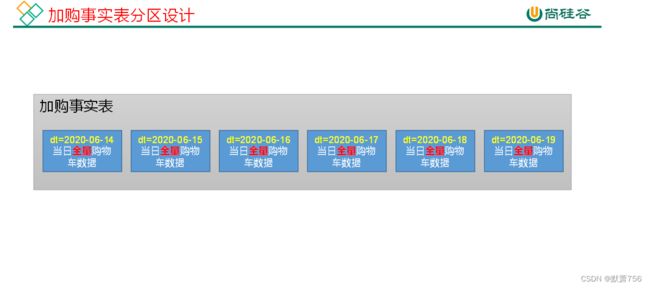

2.4 加购事实表(周期型快照事实表,每日快照)

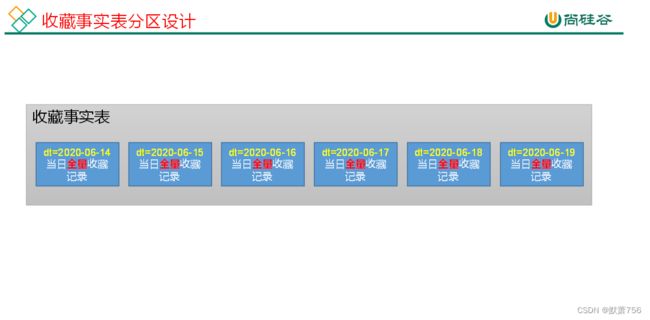

2.5 收藏事实表(周期型快照事实表,每日快照)

2.6 优惠券领用事实表(累积型快照事实表)

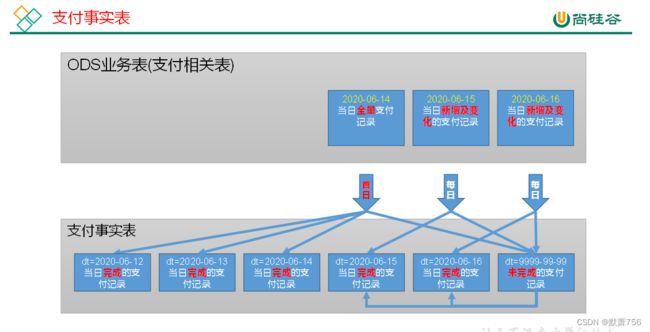

2.7 支付事实表(累积型快照事实表)

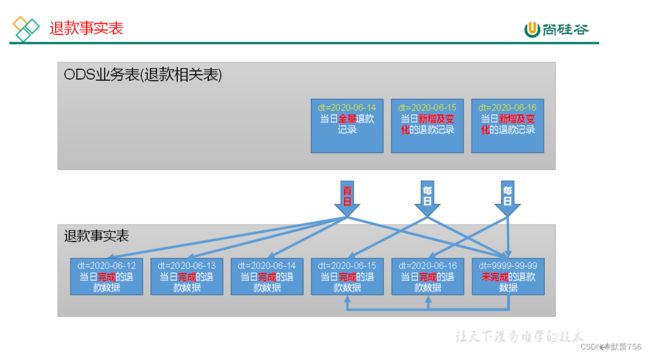

2.8 退款事实表(累积型快照事实表)

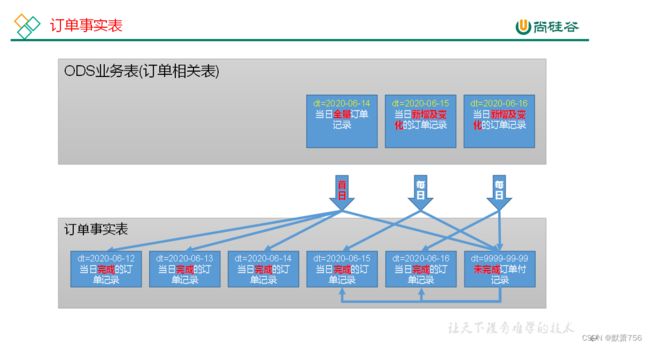

2.9 订单事实表(累积型快照事实表)

2.10 DWD层业务数据首日装载脚本

2.11 DWD层业务数据每日装载脚本

一、数仓搭建——DWD层

1)对用户行为数据解析。

2)对业务数据采用维度模型重新建模。

DWD是明细数据层

1、DWD层(用户行为日志)

1.1 日志解析思路

1)日志结构回顾:

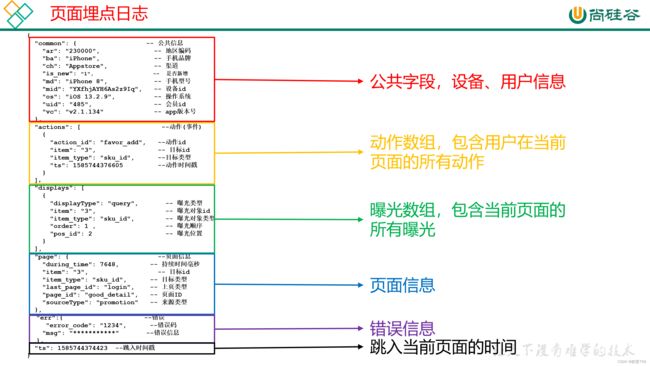

(1):页面买点日志:

ODS的唯一一张日志表:每条数据是一条日志,字段只有一个String的字段,而以一条字符串形式存储不方便统计分析,因此需要对该日志进行解析,把不同类型的日志放在不同的表中 ,把JSON类型中的每个字段解析出来

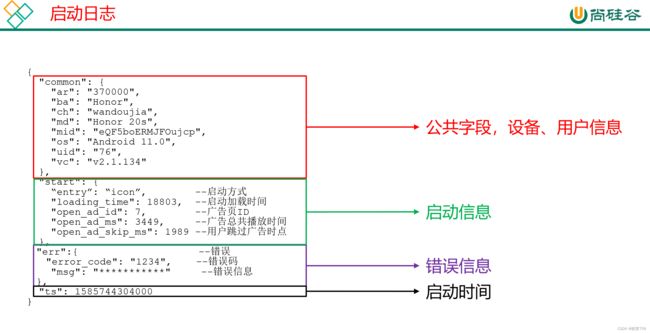

(2):启动日志:

1.2 get_json_object函数使用

该函数第一个参数是数据,第二个参数$代表的就是整个JSON数据,再使用[下标]就可以查到对应的json对象了,要找对应的值就.xxx即可

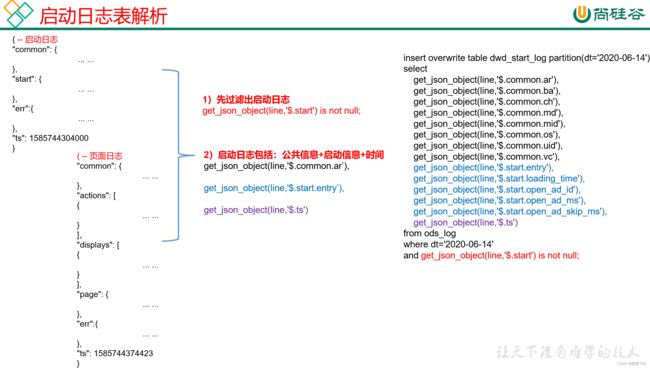

1.3 启动日志表

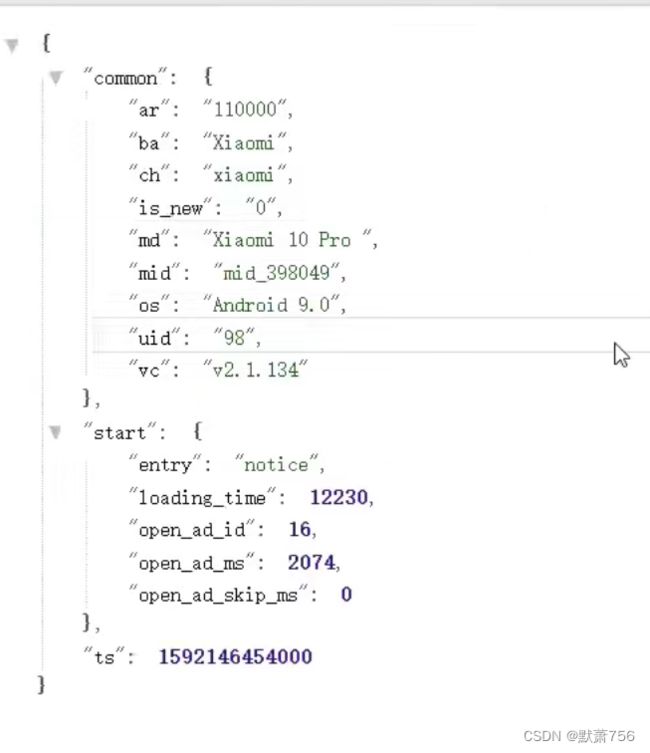

启动日志解析思路:启动日志表中每行数据对应一个启动记录,一个启动记录应该包含日志中的公共信息和启动信息。先将所有包含start字段的日志过滤出来,然后使用get_json_object函数解析每个字段。

1)建表语句:

DROP TABLE IF EXISTS dwd_start_log;

CREATE EXTERNAL TABLE dwd_start_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`entry` STRING COMMENT 'icon手机图标 notice 通知 install 安装后启动',

`loading_time` BIGINT COMMENT '启动加载时间',

`open_ad_id` STRING COMMENT '广告页ID ',

`open_ad_ms` BIGINT COMMENT '广告总共播放时间',

`open_ad_skip_ms` BIGINT COMMENT '用户跳过广告时点',

`ts` BIGINT COMMENT '时间'

) COMMENT '启动日志表'

PARTITIONED BY (`dt` STRING) -- 按照时间创建分区

STORED AS PARQUET -- 采用parquet列式存储

LOCATION '/warehouse/gmall/dwd/dwd_start_log' -- 指定在HDFS上存储位置

TBLPROPERTIES('parquet.compression'='lzo') -- 采用LZO压缩

;

2)数据导入:

hive (gmall)>

insert overwrite table dwd_start_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.ts')

from ods_log

where dt='2020-06-14'

and get_json_object(line,'$.start') is not null;

3)查看数据:

hive (gmall)>

select * from dwd_start_log where dt='2020-06-14' limit 2;

1.4 页面日志表

页面日志解析思路:页面日志表中每行数据对应一个页面访问记录,一个页面访问记录应该包含日志中的公共信息和页面信息。先将所有包含page字段的日志过滤出来,然后使用get_json_object函数解析每个字段。

1)建表语句:

DROP TABLE IF EXISTS dwd_page_log;

CREATE EXTERNAL TABLE dwd_page_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`during_time` BIGINT COMMENT '持续时间毫秒',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面ID ',

`source_type` STRING COMMENT '来源类型',

`ts` bigint

) COMMENT '页面日志表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_page_log'

TBLPROPERTIES('parquet.compression'='lzo');

2)数据导入:

insert overwrite table dwd_page_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts')

from ods_log

where dt='2020-06-14'

and get_json_object(line,'$.page') is not null;

3)查看数据:

hive (gmall)>

select * from dwd_page_log where dt='2020-06-14' limit 2;

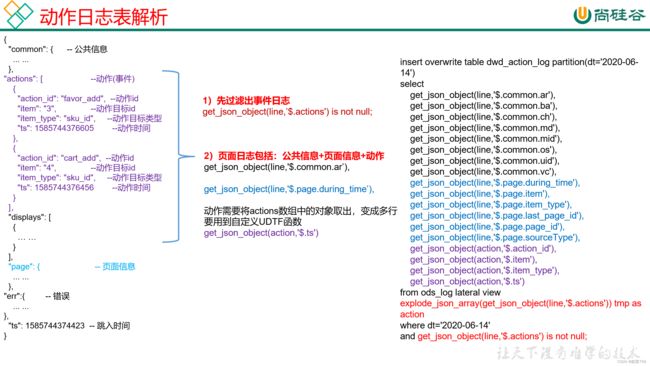

1.5 动作日志表

动作日志解析思路:动作日志表中每行数据对应用户的一个动作记录,一个动作记录应当包含公共信息、页面信息以及动作信息。先将包含action字段的日志过滤出来,然后通过UDTF函数,将action数组“炸开”(类似于explode函数的效果),然后使用get_json_object函数解析每个字段。

1)建表语句:

DROP TABLE IF EXISTS dwd_action_log;

CREATE EXTERNAL TABLE dwd_action_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`during_time` BIGINT COMMENT '持续时间毫秒',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面id ',

`source_type` STRING COMMENT '来源类型',

`action_id` STRING COMMENT '动作id',

`item` STRING COMMENT '目标id ',

`item_type` STRING COMMENT '目标类型',

`ts` BIGINT COMMENT '时间'

) COMMENT '动作日志表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_action_log'

TBLPROPERTIES('parquet.compression'='lzo');

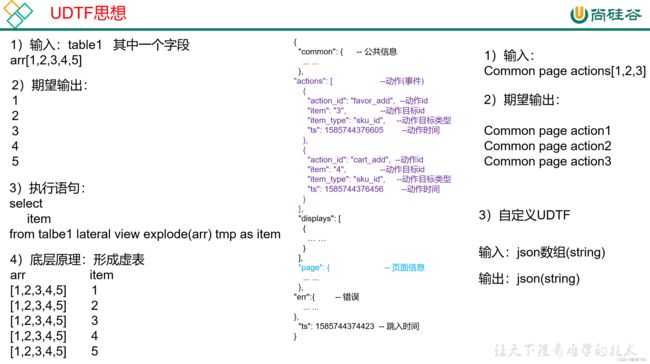

由于页面埋点日志中的动作数组是储存用户在当前页面的所有动作,而动作日志表里的每一行数据只有一条动作日志,因此需要用一个“一进多出的操作”,就是进来一条日志,返回多行,每一行都是一个动作。hive当中有UDTF的一进多出函数,但提供的explode()不能解析JSON对象,因此需要自定义一个UDTF函数

2)创建UDTF函数——设计思路:

3)创建UDTF函数——编写代码:

(1)创建一个maven工程:hivefunction

(2)创建包名:com.atguigu.hive.udtf

(3)引入如下依赖

org.apache.hive

hive-exec

3.1.2

(4)编码

package com.atguigu.hive.udtf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import org.json.JSONArray;

import java.util.ArrayList;

import java.util.List;

public class ExplodeJSONArray extends GenericUDTF {

@Override

public StructObjectInspector initialize(ObjectInspector[] argOIs) throws UDFArgumentException {

// 1 参数合法性检查

if (argOIs.length != 1) {

throw new UDFArgumentException("explode_json_array 只需要一个参数");

}

// 2 第一个参数必须为string

//判断参数是否为基础数据类型

if (argOIs[0].getCategory() != ObjectInspector.Category.PRIMITIVE) {

throw new UDFArgumentException("explode_json_array 只接受基础类型参数");

}

//将参数对象检查器强转为基础类型对象检查器

PrimitiveObjectInspector argumentOI = (PrimitiveObjectInspector) argOIs[0];

//判断参数是否为String类型

if (argumentOI.getPrimitiveCategory() != PrimitiveObjectInspector.PrimitiveCategory.STRING) {

throw new UDFArgumentException("explode_json_array 只接受string类型的参数");

}

// 3 定义返回值名称和类型

List fieldNames = new ArrayList();

List fieldOIs = new ArrayList();

fieldNames.add("items");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);

}

public void process(Object[] objects) throws HiveException {

// 1 获取传入的数据

String jsonArray = objects[0].toString();

// 2 将string转换为json数组

JSONArray actions = new JSONArray(jsonArray);

// 3 循环一次,取出数组中的一个json,并写出

for (int i = 0; i < actions.length(); i++) {

String[] result = new String[1];

result[0] = actions.getString(i);

forward(result);

}

}

public void close() throws HiveException {

}

}

4)创建函数

(1)打包

(2)将hivefunction-1.0-SNAPSHOT.jar上传到hadoop102的/opt/module,然后再将该jar包上传到HDFS的/user/hive/jars路径下

[atguigu@hadoop102 module]$ hadoop fs -mkdir -p /user/hive/jars

[atguigu@hadoop102 module]$ hadoop fs -put hivefunction-1.0-SNAPSHOT.jar /user/hive/jars

(3)创建永久函数与开发好的java class关联

create function explode_json_array as 'com.atguigu.hive.udtf.ExplodeJSONArray' using jar 'hdfs://hadoop102:8020/user/hive/jars/hivefunction-1.0-SNAPSHOT.jar';(4)注意:如果修改了自定义函数重新生成jar包怎么处理?只需要替换HDFS路径上的旧jar包,然后重启Hive客户端即可。

5)数据导入

insert overwrite table dwd_action_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(action,'$.action_id'),

get_json_object(action,'$.item'),

get_json_object(action,'$.item_type'),

get_json_object(action,'$.ts')

from ods_log lateral view explode_json_array(get_json_object(line,'$.actions')) tmp as action

where dt='2020-06-14'

and get_json_object(line,'$.actions') is not null;

3)查看数据

select * from dwd_action_log where dt='2020-06-14' limit 2;1.6 曝光日志表

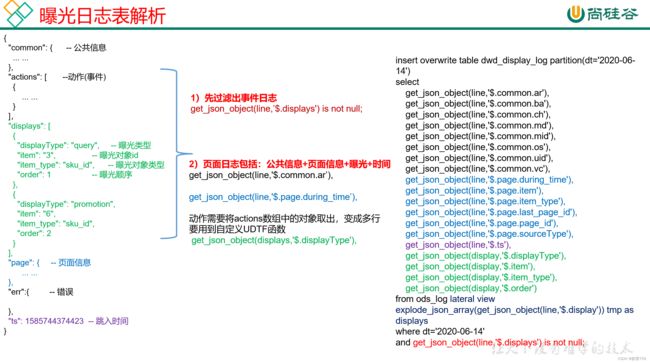

曝光日志解析思路:曝光日志表中每行数据对应一个曝光记录,一个曝光记录应当包含公共信息、页面信息以及曝光信息。先将包含display字段的日志过滤出来,然后通过UDTF函数,将display数组“炸开”(类似于explode函数的效果),然后使用get_json_object函数解析每个字段。

1)建表语句

DROP TABLE IF EXISTS dwd_display_log;

CREATE EXTERNAL TABLE dwd_display_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`during_time` BIGINT COMMENT 'app版本号',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面ID ',

`source_type` STRING COMMENT '来源类型',

`ts` BIGINT COMMENT 'app版本号',

`display_type` STRING COMMENT '曝光类型',

`item` STRING COMMENT '曝光对象id ',

`item_type` STRING COMMENT 'app版本号',

`order` BIGINT COMMENT '曝光顺序',

`pos_id` BIGINT COMMENT '曝光位置'

) COMMENT '曝光日志表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_display_log'

TBLPROPERTIES('parquet.compression'='lzo');

2)数据导入

insert overwrite table dwd_display_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts'),

get_json_object(display,'$.display_type'),

get_json_object(display,'$.item'),

get_json_object(display,'$.item_type'),

get_json_object(display,'$.order'),

get_json_object(display,'$.pos_id')

from ods_log lateral view explode_json_array(get_json_object(line,'$.displays')) tmp as display

where dt='2020-06-14'

and get_json_object(line,'$.displays') is not null;

3)查看数据

select * from dwd_display_log where dt='2020-06-14' limit 2;1.7 错误日志表

错误日志解析思路:错误日志表中每行数据对应一个错误记录,为方便定位错误,一个错误记录应当包含与之对应的公共信息、页面信息、曝光信息、动作信息、启动信息以及错误信息。先将包含err字段的日志过滤出来,然后使用get_json_object函数解析所有字段。

1)建表语句

DROP TABLE IF EXISTS dwd_error_log;

CREATE EXTERNAL TABLE dwd_error_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面ID ',

`source_type` STRING COMMENT '来源类型',

`entry` STRING COMMENT ' icon手机图标 notice 通知 install 安装后启动',

`loading_time` STRING COMMENT '启动加载时间',

`open_ad_id` STRING COMMENT '广告页ID ',

`open_ad_ms` STRING COMMENT '广告总共播放时间',

`open_ad_skip_ms` STRING COMMENT '用户跳过广告时点',

`actions` STRING COMMENT '动作',

`displays` STRING COMMENT '曝光',

`ts` STRING COMMENT '时间',

`error_code` STRING COMMENT '错误码',

`msg` STRING COMMENT '错误信息'

) COMMENT '错误日志表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_error_log'

TBLPROPERTIES('parquet.compression'='lzo');

说明:此处为对动作数组和曝光数组做处理,如需分析错误与单个动作或曝光的关联,可先使用explode_json_array函数将数组“炸开”,再使用get_json_object函数获取具体字段。

2)数据导入

insert overwrite table dwd_error_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.actions'),

get_json_object(line,'$.displays'),

get_json_object(line,'$.ts'),

get_json_object(line,'$.err.error_code'),

get_json_object(line,'$.err.msg')

from ods_log

where dt='2020-06-14'

and get_json_object(line,'$.err') is not null;

3)查看数据

hive (gmall)>

select * from dwd_error_log where dt='2020-06-14' limit 2;

1.8 DWD层用户行为数据加载脚本

#!/bin/bash

APP=gmall

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

dwd_start_log="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_start_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.start') is not null;"

dwd_page_log="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_page_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.page') is not null;"

dwd_action_log="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_action_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(action,'$.action_id'),

get_json_object(action,'$.item'),

get_json_object(action,'$.item_type'),

get_json_object(action,'$.ts')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.actions')) tmp as action

where dt='$do_date'

and get_json_object(line,'$.actions') is not null;"

dwd_display_log="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_display_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts'),

get_json_object(display,'$.display_type'),

get_json_object(display,'$.item'),

get_json_object(display,'$.item_type'),

get_json_object(display,'$.order'),

get_json_object(display,'$.pos_id')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.displays')) tmp as display

where dt='$do_date'

and get_json_object(line,'$.displays') is not null;"

dwd_error_log="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_error_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.actions'),

get_json_object(line,'$.displays'),

get_json_object(line,'$.ts'),

get_json_object(line,'$.err.error_code'),

get_json_object(line,'$.err.msg')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.err') is not null;"

case $1 in

dwd_start_log )

hive -e "$dwd_start_log"

;;

dwd_page_log )

hive -e "$dwd_page_log"

;;

dwd_action_log )

hive -e "$dwd_action_log"

;;

dwd_display_log )

hive -e "$dwd_display_log"

;;

dwd_error_log )

hive -e "$dwd_error_log"

;;

all )

hive -e "$dwd_start_log$dwd_page_log$dwd_action_log$dwd_display_log$dwd_error_log"

;;

esac

2、DWD层(业务数据)

业务数据方面DWD层的搭建主要注意点在于维度建模

2.1 评价事实表(事务型事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_comment_info;

CREATE EXTERNAL TABLE dwd_comment_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`sku_id` STRING COMMENT '商品sku',

`spu_id` STRING COMMENT '商品spu',

`order_id` STRING COMMENT '订单ID',

`appraise` STRING COMMENT '评价(好评、中评、差评、默认评价)',

`create_time` STRING COMMENT '评价时间'

) COMMENT '评价事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_comment_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_comment_info partition (dt)

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time,

date_format(create_time,'yyyy-MM-dd')

from ods_comment_info

where dt='2020-06-14';

(2)每日装载

insert overwrite table dwd_comment_info partition(dt='2020-06-15')

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from ods_comment_info where dt='2020-06-15';

2.2 订单明细事实表(事务型事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_order_detail;

CREATE EXTERNAL TABLE dwd_order_detail (

`id` STRING COMMENT '订单编号',

`order_id` STRING COMMENT '订单号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'sku商品id',

`province_id` STRING COMMENT '省份ID',

`activity_id` STRING COMMENT '活动ID',

`activity_rule_id` STRING COMMENT '活动规则ID',

`coupon_id` STRING COMMENT '优惠券ID',

`create_time` STRING COMMENT '创建时间',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号',

`sku_num` BIGINT COMMENT '商品数量',

`original_amount` DECIMAL(16,2) COMMENT '原始价格',

`split_activity_amount` DECIMAL(16,2) COMMENT '活动优惠分摊',

`split_coupon_amount` DECIMAL(16,2) COMMENT '优惠券优惠分摊',

`split_final_amount` DECIMAL(16,2) COMMENT '最终价格分摊'

) COMMENT '订单明细事实表表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_order_detail/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_order_detail partition(dt)

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount,

date_format(create_time,'yyyy-MM-dd')

from

(

select

*

from ods_order_detail

where dt='2020-06-14'

)od

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-14'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ods_order_detail_activity

where dt='2020-06-14'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ods_order_detail_coupon

where dt='2020-06-14'

)odc

on od.id=odc.order_detail_id;

(2)每日装载

insert overwrite table dwd_order_detail partition(dt='2020-06-15')

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount

from

(

select

*

from ods_order_detail

where dt='2020-06-15'

)od

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-15'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ods_order_detail_activity

where dt='2020-06-15'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ods_order_detail_coupon

where dt='2020-06-15'

)odc

on od.id=odc.order_detail_id;

2.3 退单事实表(事务型事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_order_refund_info;

CREATE EXTERNAL TABLE dwd_order_refund_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`order_id` STRING COMMENT '订单ID',

`sku_id` STRING COMMENT '商品ID',

`province_id` STRING COMMENT '地区ID',

`refund_type` STRING COMMENT '退单类型',

`refund_num` BIGINT COMMENT '退单件数',

`refund_amount` DECIMAL(16,2) COMMENT '退单金额',

`refund_reason_type` STRING COMMENT '退单原因类型',

`create_time` STRING COMMENT '退单时间'

) COMMENT '退单事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_order_refund_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_order_refund_info partition(dt)

select

ri.id,

ri.user_id,

ri.order_id,

ri.sku_id,

oi.province_id,

ri.refund_type,

ri.refund_num,

ri.refund_amount,

ri.refund_reason_type,

ri.create_time,

date_format(ri.create_time,'yyyy-MM-dd')

from

(

select * from ods_order_refund_info where dt='2020-06-14'

)ri

left join

(

select id,province_id from ods_order_info where dt='2020-06-14'

)oi

on ri.order_id=oi.id;

(2)每日装载

insert overwrite table dwd_order_refund_info partition(dt='2020-06-15')

select

ri.id,

ri.user_id,

ri.order_id,

ri.sku_id,

oi.province_id,

ri.refund_type,

ri.refund_num,

ri.refund_amount,

ri.refund_reason_type,

ri.create_time

from

(

select * from ods_order_refund_info where dt='2020-06-15'

)ri

left join

(

select id,province_id from ods_order_info where dt='2020-06-15'

)oi

on ri.order_id=oi.id;

2.4 加购事实表(周期型快照事实表,每日快照)

1)建表语句

DROP TABLE IF EXISTS dwd_cart_info;

CREATE EXTERNAL TABLE dwd_cart_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`sku_id` STRING COMMENT '商品ID',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号',

`cart_price` DECIMAL(16,2) COMMENT '加入购物车时的价格',

`is_ordered` STRING COMMENT '是否已下单',

`create_time` STRING COMMENT '创建时间',

`operate_time` STRING COMMENT '修改时间',

`order_time` STRING COMMENT '下单时间',

`sku_num` BIGINT COMMENT '加购数量'

) COMMENT '加购事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_cart_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_cart_info partition(dt='2020-06-14')

select

id,

user_id,

sku_id,

source_type,

source_id,

cart_price,

is_ordered,

create_time,

operate_time,

order_time,

sku_num

from ods_cart_info

where dt='2020-06-14';

(2)每日装载

insert overwrite table dwd_cart_info partition(dt='2020-06-15')

select

id,

user_id,

sku_id,

source_type,

source_id,

cart_price,

is_ordered,

create_time,

operate_time,

order_time,

sku_num

from ods_cart_info

where dt='2020-06-15';

2.5 收藏事实表(周期型快照事实表,每日快照)

1)建表语句

DROP TABLE IF EXISTS dwd_favor_info;

CREATE EXTERNAL TABLE dwd_favor_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'skuid',

`spu_id` STRING COMMENT 'spuid',

`is_cancel` STRING COMMENT '是否取消',

`create_time` STRING COMMENT '收藏时间',

`cancel_time` STRING COMMENT '取消时间'

) COMMENT '收藏事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_favor_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_favor_info partition(dt='2020-06-14')

select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from ods_favor_info

where dt='2020-06-14';

(2)每日装载

insert overwrite table dwd_favor_info partition(dt='2020-06-15')

select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from ods_favor_info

where dt='2020-06-15';

2.6 优惠券领用事实表(累积型快照事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_coupon_use;

CREATE EXTERNAL TABLE dwd_coupon_use(

`id` STRING COMMENT '编号',

`coupon_id` STRING COMMENT '优惠券ID',

`user_id` STRING COMMENT 'userid',

`order_id` STRING COMMENT '订单id',

`coupon_status` STRING COMMENT '优惠券状态',

`get_time` STRING COMMENT '领取时间',

`using_time` STRING COMMENT '使用时间(下单)',

`used_time` STRING COMMENT '使用时间(支付)',

`expire_time` STRING COMMENT '过期时间'

) COMMENT '优惠券领用事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_coupon_use/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_coupon_use partition(dt)

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time,

coalesce(date_format(used_time,'yyyy-MM-dd'),date_format(expire_time,'yyyy-MM-dd'),'9999-99-99')

from ods_coupon_use

where dt='2020-06-14';

(2)每日装载

a.装载逻辑

b.装载语句

insert overwrite table dwd_coupon_use partition(dt)

select

nvl(new.id,old.id),

nvl(new.coupon_id,old.coupon_id),

nvl(new.user_id,old.user_id),

nvl(new.order_id,old.order_id),

nvl(new.coupon_status,old.coupon_status),

nvl(new.get_time,old.get_time),

nvl(new.using_time,old.using_time),

nvl(new.used_time,old.used_time),

nvl(new.expire_time,old.expire_time),

coalesce(date_format(nvl(new.used_time,old.used_time),'yyyy-MM-dd'),date_format(nvl(new.expire_time,old.expire_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from dwd_coupon_use

where dt='9999-99-99'

)old

full outer join

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from ods_coupon_use

where dt='2020-06-15'

)new

on old.id=new.id;

2.7 支付事实表(累积型快照事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_payment_info;

CREATE EXTERNAL TABLE dwd_payment_info (

`id` STRING COMMENT '编号',

`order_id` STRING COMMENT '订单编号',

`user_id` STRING COMMENT '用户编号',

`province_id` STRING COMMENT '地区ID',

`trade_no` STRING COMMENT '交易编号',

`out_trade_no` STRING COMMENT '对外交易编号',

`payment_type` STRING COMMENT '支付类型',

`payment_amount` DECIMAL(16,2) COMMENT '支付金额',

`payment_status` STRING COMMENT '支付状态',

`create_time` STRING COMMENT '创建时间',--调用第三方支付接口的时间

`callback_time` STRING COMMENT '完成时间'--支付完成时间,即支付成功回调时间

) COMMENT '支付事实表表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_payment_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_payment_info partition(dt)

select

pi.id,

pi.order_id,

pi.user_id,

oi.province_id,

pi.trade_no,

pi.out_trade_no,

pi.payment_type,

pi.payment_amount,

pi.payment_status,

pi.create_time,

pi.callback_time,

nvl(date_format(pi.callback_time,'yyyy-MM-dd'),'9999-99-99')

from

(

select * from ods_payment_info where dt='2020-06-14'

)pi

left join

(

select id,province_id from ods_order_info where dt='2020-06-14'

)oi

on pi.order_id=oi.id;

(2)每日装载

insert overwrite table dwd_payment_info partition(dt)

select

nvl(new.id,old.id),

nvl(new.order_id,old.order_id),

nvl(new.user_id,old.user_id),

nvl(new.province_id,old.province_id),

nvl(new.trade_no,old.trade_no),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.payment_type,old.payment_type),

nvl(new.payment_amount,old.payment_amount),

nvl(new.payment_status,old.payment_status),

nvl(new.create_time,old.create_time),

nvl(new.callback_time,old.callback_time),

nvl(date_format(nvl(new.callback_time,old.callback_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select id,

order_id,

user_id,

province_id,

trade_no,

out_trade_no,

payment_type,

payment_amount,

payment_status,

create_time,

callback_time

from dwd_payment_info

where dt = '9999-99-99'

)old

full outer join

(

select

pi.id,

pi.out_trade_no,

pi.order_id,

pi.user_id,

oi.province_id,

pi.payment_type,

pi.trade_no,

pi.payment_amount,

pi.payment_status,

pi.create_time,

pi.callback_time

from

(

select * from ods_payment_info where dt='2020-06-15'

)pi

left join

(

select id,province_id from ods_order_info where dt='2020-06-15'

)oi

on pi.order_id=oi.id

)new

on old.id=new.id;

2.8 退款事实表(累积型快照事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_refund_payment;

CREATE EXTERNAL TABLE dwd_refund_payment (

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`order_id` STRING COMMENT '订单编号',

`sku_id` STRING COMMENT 'SKU编号',

`province_id` STRING COMMENT '地区ID',

`trade_no` STRING COMMENT '交易编号',

`out_trade_no` STRING COMMENT '对外交易编号',

`payment_type` STRING COMMENT '支付类型',

`refund_amount` DECIMAL(16,2) COMMENT '退款金额',

`refund_status` STRING COMMENT '退款状态',

`create_time` STRING COMMENT '创建时间',--调用第三方支付接口的时间

`callback_time` STRING COMMENT '回调时间'--支付接口回调时间,即支付成功时间

) COMMENT '退款事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_refund_payment/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_refund_payment partition(dt)

select

rp.id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time,

nvl(date_format(callback_time,'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

refund_amount,

refund_status,

create_time,

callback_time

from ods_refund_payment

where dt='2020-06-14'

)rp

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-14'

)oi

on rp.order_id=oi.id;

(2)每日装载

insert overwrite table dwd_refund_payment partition(dt)

select

nvl(new.id,old.id),

nvl(new.user_id,old.user_id),

nvl(new.order_id,old.order_id),

nvl(new.sku_id,old.sku_id),

nvl(new.province_id,old.province_id),

nvl(new.trade_no,old.trade_no),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.payment_type,old.payment_type),

nvl(new.refund_amount,old.refund_amount),

nvl(new.refund_status,old.refund_status),

nvl(new.create_time,old.create_time),

nvl(new.callback_time,old.callback_time),

nvl(date_format(nvl(new.callback_time,old.callback_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time

from dwd_refund_payment

where dt='9999-99-99'

)old

full outer join

(

select

rp.id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time

from

(

select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

refund_amount,

refund_status,

create_time,

callback_time

from ods_refund_payment

where dt='2020-06-15'

)rp

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-15'

)oi

on rp.order_id=oi.id

)new

on old.id=new.id;

2.9 订单事实表(累积型快照事实表)

1)建表语句

DROP TABLE IF EXISTS dwd_order_info;

CREATE EXTERNAL TABLE dwd_order_info(

`id` STRING COMMENT '编号',

`order_status` STRING COMMENT '订单状态',

`user_id` STRING COMMENT '用户ID',

`province_id` STRING COMMENT '地区ID',

`payment_way` STRING COMMENT '支付方式',

`delivery_address` STRING COMMENT '邮寄地址',

`out_trade_no` STRING COMMENT '对外交易编号',

`tracking_no` STRING COMMENT '物流单号',

`create_time` STRING COMMENT '创建时间(未支付状态)',

`payment_time` STRING COMMENT '支付时间(已支付状态)',

`cancel_time` STRING COMMENT '取消时间(已取消状态)',

`finish_time` STRING COMMENT '完成时间(已完成状态)',

`refund_time` STRING COMMENT '退款时间(退款中状态)',

`refund_finish_time` STRING COMMENT '退款完成时间(退款完成状态)',

`expire_time` STRING COMMENT '过期时间',

`feight_fee` DECIMAL(16,2) COMMENT '运费',

`feight_fee_reduce` DECIMAL(16,2) COMMENT '运费减免',

`activity_reduce_amount` DECIMAL(16,2) COMMENT '活动减免',

`coupon_reduce_amount` DECIMAL(16,2) COMMENT '优惠券减免',

`original_amount` DECIMAL(16,2) COMMENT '订单原始价格',

`final_amount` DECIMAL(16,2) COMMENT '订单最终价格'

) COMMENT '订单事实表'

PARTITIONED BY (`dt` STRING)

STORED AS PARQUET

LOCATION '/warehouse/gmall/dwd/dwd_order_info/'

TBLPROPERTIES ("parquet.compression"="lzo");

2)分区规划

3)数据装载

(1)首日装载

insert overwrite table dwd_order_info partition(dt)

select

oi.id,

oi.order_status,

oi.user_id,

oi.province_id,

oi.payment_way,

oi.delivery_address,

oi.out_trade_no,

oi.tracking_no,

oi.create_time,

times.ts['1002'] payment_time,

times.ts['1003'] cancel_time,

times.ts['1004'] finish_time,

times.ts['1005'] refund_time,

times.ts['1006'] refund_finish_time,

oi.expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount,

case

when times.ts['1003'] is not null then date_format(times.ts['1003'],'yyyy-MM-dd')

when times.ts['1004'] is not null and date_add(date_format(times.ts['1004'],'yyyy-MM-dd'),7)<='2020-06-14' and times.ts['1005'] is null then date_add(date_format(times.ts['1004'],'yyyy-MM-dd'),7)

when times.ts['1006'] is not null then date_format(times.ts['1006'],'yyyy-MM-dd')

when oi.expire_time is not null then date_format(oi.expire_time,'yyyy-MM-dd')

else '9999-99-99'

end

from

(

select

*

from ods_order_info

where dt='2020-06-14'

)oi

left join

(

select

order_id,

str_to_map(concat_ws(',',collect_set(concat(order_status,'=',operate_time))),',','=') ts

from ods_order_status_log

where dt='2020-06-14'

group by order_id

)times

on oi.id=times.order_id;

(2)每日装载

insert overwrite table dwd_order_info partition(dt)

select

nvl(new.id,old.id),

nvl(new.order_status,old.order_status),

nvl(new.user_id,old.user_id),

nvl(new.province_id,old.province_id),

nvl(new.payment_way,old.payment_way),

nvl(new.delivery_address,old.delivery_address),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.tracking_no,old.tracking_no),

nvl(new.create_time,old.create_time),

nvl(new.payment_time,old.payment_time),

nvl(new.cancel_time,old.cancel_time),

nvl(new.finish_time,old.finish_time),

nvl(new.refund_time,old.refund_time),

nvl(new.refund_finish_time,old.refund_finish_time),

nvl(new.expire_time,old.expire_time),

nvl(new.feight_fee,old.feight_fee),

nvl(new.feight_fee_reduce,old.feight_fee_reduce),

nvl(new.activity_reduce_amount,old.activity_reduce_amount),

nvl(new.coupon_reduce_amount,old.coupon_reduce_amount),

nvl(new.original_amount,old.original_amount),

nvl(new.final_amount,old.final_amount),

case

when new.cancel_time is not null then date_format(new.cancel_time,'yyyy-MM-dd')

when new.finish_time is not null and date_add(date_format(new.finish_time,'yyyy-MM-dd'),7)='2020-06-15' and new.refund_time is null then '2020-06-15'

when new.refund_finish_time is not null then date_format(new.refund_finish_time,'yyyy-MM-dd')

when new.expire_time is not null then date_format(new.expire_time,'yyyy-MM-dd')

else '9999-99-99'

end

from

(

select

id,

order_status,

user_id,

province_id,

payment_way,

delivery_address,

out_trade_no,

tracking_no,

create_time,

payment_time,

cancel_time,

finish_time,

refund_time,

refund_finish_time,

expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount

from dwd_order_info

where dt='9999-99-99'

)old

full outer join

(

select

oi.id,

oi.order_status,

oi.user_id,

oi.province_id,

oi.payment_way,

oi.delivery_address,

oi.out_trade_no,

oi.tracking_no,

oi.create_time,

times.ts['1002'] payment_time,

times.ts['1003'] cancel_time,

times.ts['1004'] finish_time,

times.ts['1005'] refund_time,

times.ts['1006'] refund_finish_time,

oi.expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount

from

(

select

*

from ods_order_info

where dt='2020-06-15'

)oi

left join

(

select

order_id,

str_to_map(concat_ws(',',collect_set(concat(order_status,'=',operate_time))),',','=') ts

from ods_order_status_log

where dt='2020-06-15'

group by order_id

)times

on oi.id=times.order_id

)new

on old.id=new.id;

2.10 DWD层业务数据首日装载脚本

执行脚本:

#!/bin/bash

APP=gmall

if [ -n "$2" ] ;then

do_date=$2

else

echo "请传入日期参数"

exit

fi

dwd_order_info="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_order_info partition(dt)

select

oi.id,

oi.order_status,

oi.user_id,

oi.province_id,

oi.payment_way,

oi.delivery_address,

oi.out_trade_no,

oi.tracking_no,

oi.create_time,

times.ts['1002'] payment_time,

times.ts['1003'] cancel_time,

times.ts['1004'] finish_time,

times.ts['1005'] refund_time,

times.ts['1006'] refund_finish_time,

oi.expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount,

case

when times.ts['1003'] is not null then date_format(times.ts['1003'],'yyyy-MM-dd')

when times.ts['1004'] is not null and date_add(date_format(times.ts['1004'],'yyyy-MM-dd'),7)<='$do_date' and times.ts['1005'] is null then date_add(date_format(times.ts['1004'],'yyyy-MM-dd'),7)

when times.ts['1006'] is not null then date_format(times.ts['1006'],'yyyy-MM-dd')

when oi.expire_time is not null then date_format(oi.expire_time,'yyyy-MM-dd')

else '9999-99-99'

end

from

(

select

*

from ${APP}.ods_order_info

where dt='$do_date'

)oi

left join

(

select

order_id,

str_to_map(concat_ws(',',collect_set(concat(order_status,'=',operate_time))),',','=') ts

from ${APP}.ods_order_status_log

where dt='$do_date'

group by order_id

)times

on oi.id=times.order_id;"

dwd_order_detail="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_order_detail partition(dt)

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount,

date_format(create_time,'yyyy-MM-dd')

from

(

select

*

from ${APP}.ods_order_detail

where dt='$do_date'

)od

left join

(

select

id,

user_id,

province_id

from ${APP}.ods_order_info

where dt='$do_date'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ${APP}.ods_order_detail_activity

where dt='$do_date'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ${APP}.ods_order_detail_coupon

where dt='$do_date'

)odc

on od.id=odc.order_detail_id;"

dwd_payment_info="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_payment_info partition(dt)

select

pi.id,

pi.order_id,

pi.user_id,

oi.province_id,

pi.trade_no,

pi.out_trade_no,

pi.payment_type,

pi.payment_amount,

pi.payment_status,

pi.create_time,

pi.callback_time,

nvl(date_format(pi.callback_time,'yyyy-MM-dd'),'9999-99-99')

from

(

select * from ${APP}.ods_payment_info where dt='$do_date'

)pi

left join

(

select id,province_id from ${APP}.ods_order_info where dt='$do_date'

)oi

on pi.order_id=oi.id;"

dwd_cart_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_cart_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

source_type,

source_id,

cart_price,

is_ordered,

create_time,

operate_time,

order_time,

sku_num

from ${APP}.ods_cart_info

where dt='$do_date';"

dwd_comment_info="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_comment_info partition(dt)

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time,

date_format(create_time,'yyyy-MM-dd')

from ${APP}.ods_comment_info

where dt='$do_date';

"

dwd_favor_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_favor_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from ${APP}.ods_favor_info

where dt='$do_date';"

dwd_coupon_use="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_coupon_use partition(dt)

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time,

coalesce(date_format(used_time,'yyyy-MM-dd'),date_format(expire_time,'yyyy-MM-dd'),'9999-99-99')

from ${APP}.ods_coupon_use

where dt='$do_date';"

dwd_order_refund_info="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_order_refund_info partition(dt)

select

ri.id,

ri.user_id,

ri.order_id,

ri.sku_id,

oi.province_id,

ri.refund_type,

ri.refund_num,

ri.refund_amount,

ri.refund_reason_type,

ri.create_time,

date_format(ri.create_time,'yyyy-MM-dd')

from

(

select * from ${APP}.ods_order_refund_info where dt='$do_date'

)ri

left join

(

select id,province_id from ${APP}.ods_order_info where dt='$do_date'

)oi

on ri.order_id=oi.id;"

dwd_refund_payment="

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_refund_payment partition(dt)

select

rp.id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time,

nvl(date_format(callback_time,'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

refund_amount,

refund_status,

create_time,

callback_time

from ${APP}.ods_refund_payment

where dt='$do_date'

)rp

left join

(

select

id,

user_id,

province_id

from ${APP}.ods_order_info

where dt='$do_date'

)oi

on rp.order_id=oi.id;"

case $1 in

dwd_order_info )

hive -e "$dwd_order_info"

;;

dwd_order_detail )

hive -e "$dwd_order_detail"

;;

dwd_payment_info )

hive -e "$dwd_payment_info"

;;

dwd_cart_info )

hive -e "$dwd_cart_info"

;;

dwd_comment_info )

hive -e "$dwd_comment_info"

;;

dwd_favor_info )

hive -e "$dwd_favor_info"

;;

dwd_coupon_use )

hive -e "$dwd_coupon_use"

;;

dwd_order_refund_info )

hive -e "$dwd_order_refund_info"

;;

dwd_refund_payment )

hive -e "$dwd_refund_payment"

;;

all )

hive -e "$dwd_order_info$dwd_order_detail$dwd_payment_info$dwd_cart_info$dwd_comment_info$dwd_favor_info$dwd_coupon_use$dwd_order_refund_info$dwd_refund_payment"

;;

esac

2.11 DWD层业务数据每日装载脚本

#!/bin/bash

APP=gmall

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

# 假设某累积型快照事实表,某天所有的业务记录全部完成,则会导致9999-99-99分区的数据未被覆盖,从而导致数据重复,该函数根据9999-99-99分区的数据的末次修改时间判断其是否被覆盖了,如果未被覆盖,就手动清理

clear_data(){

current_date=`date +%F`

current_date_timestamp=`date -d "$current_date" +%s`

last_modified_date=`hadoop fs -ls /warehouse/gmall/dwd/$1 | grep '9999-99-99' | awk '{print $6}'`

last_modified_date_timestamp=`date -d "$last_modified_date" +%s`

if [[ $last_modified_date_timestamp -lt $current_date_timestamp ]]; then

echo "clear table $1 partition(dt=9999-99-99)"

hadoop fs -rm -r -f /warehouse/gmall/dwd/$1/dt=9999-99-99/*

fi

}

dwd_order_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table ${APP}.dwd_order_info partition(dt)

select

nvl(new.id,old.id),

nvl(new.order_status,old.order_status),

nvl(new.user_id,old.user_id),

nvl(new.province_id,old.province_id),

nvl(new.payment_way,old.payment_way),

nvl(new.delivery_address,old.delivery_address),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.tracking_no,old.tracking_no),

nvl(new.create_time,old.create_time),

nvl(new.payment_time,old.payment_time),

nvl(new.cancel_time,old.cancel_time),

nvl(new.finish_time,old.finish_time),

nvl(new.refund_time,old.refund_time),

nvl(new.refund_finish_time,old.refund_finish_time),

nvl(new.expire_time,old.expire_time),

nvl(new.feight_fee,old.feight_fee),

nvl(new.feight_fee_reduce,old.feight_fee_reduce),

nvl(new.activity_reduce_amount,old.activity_reduce_amount),

nvl(new.coupon_reduce_amount,old.coupon_reduce_amount),

nvl(new.original_amount,old.original_amount),

nvl(new.final_amount,old.final_amount),

case

when new.cancel_time is not null then date_format(new.cancel_time,'yyyy-MM-dd')

when new.finish_time is not null and date_add(date_format(new.finish_time,'yyyy-MM-dd'),7)='$do_date' and new.refund_time is null then '$do_date'

when new.refund_finish_time is not null then date_format(new.refund_finish_time,'yyyy-MM-dd')

when new.expire_time is not null then date_format(new.expire_time,'yyyy-MM-dd')

else '9999-99-99'

end

from

(

select

id,

order_status,

user_id,

province_id,

payment_way,

delivery_address,

out_trade_no,

tracking_no,

create_time,

payment_time,

cancel_time,

finish_time,

refund_time,

refund_finish_time,

expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount

from ${APP}.dwd_order_info

where dt='9999-99-99'

)old

full outer join

(

select

oi.id,

oi.order_status,

oi.user_id,

oi.province_id,

oi.payment_way,

oi.delivery_address,

oi.out_trade_no,

oi.tracking_no,

oi.create_time,

times.ts['1002'] payment_time,

times.ts['1003'] cancel_time,

times.ts['1004'] finish_time,

times.ts['1005'] refund_time,

times.ts['1006'] refund_finish_time,

oi.expire_time,

feight_fee,

feight_fee_reduce,

activity_reduce_amount,

coupon_reduce_amount,

original_amount,

final_amount

from

(

select

*

from ${APP}.ods_order_info

where dt='$do_date'

)oi

left join

(

select

order_id,

str_to_map(concat_ws(',',collect_set(concat(order_status,'=',operate_time))),',','=') ts

from ${APP}.ods_order_status_log

where dt='$do_date'

group by order_id

)times

on oi.id=times.order_id

)new

on old.id=new.id;"

dwd_order_detail="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_order_detail partition(dt='$do_date')

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount

from

(

select

*

from ${APP}.ods_order_detail

where dt='$do_date'

)od

left join

(

select

id,

user_id,

province_id

from ${APP}.ods_order_info

where dt='$do_date'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ${APP}.ods_order_detail_activity

where dt='$do_date'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ${APP}.ods_order_detail_coupon

where dt='$do_date'

)odc

on od.id=odc.order_detail_id;"

dwd_payment_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table ${APP}.dwd_payment_info partition(dt)

select

nvl(new.id,old.id),

nvl(new.order_id,old.order_id),

nvl(new.user_id,old.user_id),

nvl(new.province_id,old.province_id),

nvl(new.trade_no,old.trade_no),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.payment_type,old.payment_type),

nvl(new.payment_amount,old.payment_amount),

nvl(new.payment_status,old.payment_status),

nvl(new.create_time,old.create_time),

nvl(new.callback_time,old.callback_time),

nvl(date_format(nvl(new.callback_time,old.callback_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select id,

order_id,

user_id,

province_id,

trade_no,

out_trade_no,

payment_type,

payment_amount,

payment_status,

create_time,

callback_time

from ${APP}.dwd_payment_info

where dt = '9999-99-99'

)old

full outer join

(

select

pi.id,

pi.out_trade_no,

pi.order_id,

pi.user_id,

oi.province_id,

pi.payment_type,

pi.trade_no,

pi.payment_amount,

pi.payment_status,

pi.create_time,

pi.callback_time

from

(

select * from ${APP}.ods_payment_info where dt='$do_date'

)pi

left join

(

select id,province_id from ${APP}.ods_order_info where dt='$do_date'

)oi

on pi.order_id=oi.id

)new

on old.id=new.id;"

dwd_cart_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_cart_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

source_type,

source_id,

cart_price,

is_ordered,

create_time,

operate_time,

order_time,

sku_num

from ${APP}.ods_cart_info

where dt='$do_date';"

dwd_comment_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_comment_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from ${APP}.ods_comment_info where dt='$do_date';"

dwd_favor_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_favor_info partition(dt='$do_date')

select

id,

user_id,

sku_id,

spu_id,

is_cancel,

create_time,

cancel_time

from ${APP}.ods_favor_info

where dt='$do_date';"

dwd_coupon_use="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table ${APP}.dwd_coupon_use partition(dt)

select

nvl(new.id,old.id),

nvl(new.coupon_id,old.coupon_id),

nvl(new.user_id,old.user_id),

nvl(new.order_id,old.order_id),

nvl(new.coupon_status,old.coupon_status),

nvl(new.get_time,old.get_time),

nvl(new.using_time,old.using_time),

nvl(new.used_time,old.used_time),

nvl(new.expire_time,old.expire_time),

coalesce(date_format(nvl(new.used_time,old.used_time),'yyyy-MM-dd'),date_format(nvl(new.expire_time,old.expire_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from ${APP}.dwd_coupon_use

where dt='9999-99-99'

)old

full outer join

(

select

id,

coupon_id,

user_id,

order_id,

coupon_status,

get_time,

using_time,

used_time,

expire_time

from ${APP}.ods_coupon_use

where dt='$do_date'

)new

on old.id=new.id;"

dwd_order_refund_info="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

insert overwrite table ${APP}.dwd_order_refund_info partition(dt='$do_date')

select

ri.id,

ri.user_id,

ri.order_id,

ri.sku_id,

oi.province_id,

ri.refund_type,

ri.refund_num,

ri.refund_amount,

ri.refund_reason_type,

ri.create_time

from

(

select * from ${APP}.ods_order_refund_info where dt='$do_date'

)ri

left join

(

select id,province_id from ${APP}.ods_order_info where dt='$do_date'

)oi

on ri.order_id=oi.id;"

dwd_refund_payment="

set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat;

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table ${APP}.dwd_refund_payment partition(dt)

select

nvl(new.id,old.id),

nvl(new.user_id,old.user_id),

nvl(new.order_id,old.order_id),

nvl(new.sku_id,old.sku_id),

nvl(new.province_id,old.province_id),

nvl(new.trade_no,old.trade_no),

nvl(new.out_trade_no,old.out_trade_no),

nvl(new.payment_type,old.payment_type),

nvl(new.refund_amount,old.refund_amount),

nvl(new.refund_status,old.refund_status),

nvl(new.create_time,old.create_time),

nvl(new.callback_time,old.callback_time),

nvl(date_format(nvl(new.callback_time,old.callback_time),'yyyy-MM-dd'),'9999-99-99')

from

(

select

id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time

from ${APP}.dwd_refund_payment

where dt='9999-99-99'

)old

full outer join

(

select

rp.id,

user_id,

order_id,

sku_id,

province_id,

trade_no,

out_trade_no,

payment_type,

refund_amount,

refund_status,

create_time,

callback_time

from

(

select

id,

out_trade_no,

order_id,

sku_id,

payment_type,

trade_no,

refund_amount,

refund_status,

create_time,

callback_time

from ${APP}.ods_refund_payment

where dt='$do_date'

)rp

left join

(

select

id,

user_id,

province_id

from ${APP}.ods_order_info

where dt='$do_date'

)oi

on rp.order_id=oi.id

)new

on old.id=new.id;"

case $1 in

dwd_order_info )

hive -e "$dwd_order_info"

clear_data dwd_order_info

;;

dwd_order_detail )

hive -e "$dwd_order_detail"

;;

dwd_payment_info )

hive -e "$dwd_payment_info"

clear_data dwd_payment_info

;;

dwd_cart_info )

hive -e "$dwd_cart_info"

;;

dwd_comment_info )

hive -e "$dwd_comment_info"

;;

dwd_favor_info )

hive -e "$dwd_favor_info"

;;

dwd_coupon_use )

hive -e "$dwd_coupon_use"

clear_data dwd_coupon_use

;;

dwd_order_refund_info )

hive -e "$dwd_order_refund_info"

;;

dwd_refund_payment )

hive -e "$dwd_refund_payment"

clear_data dwd_refund_payment

;;

all )

hive -e "$dwd_order_info$dwd_order_detail$dwd_payment_info$dwd_cart_info$dwd_comment_info$dwd_favor_info$dwd_coupon_use$dwd_order_refund_info$dwd_refund_payment"

clear_data dwd_order_info

clear_data dwd_payment_info

clear_data dwd_coupon_use

clear_data dwd_refund_payment

;;

esac

注意:需要开始的clear_data方法是因为累计型快照的9999_99_99表里本身就是有数据的,当每日进行装载的时候,完成的数据表会加入该天的分区,而没有完成的数据就会覆盖9999_99_99表中原有的数据。但是如果碰到某些特殊情况,当该天的表数据全部被完成,那么所有的数据都会写入到该天的对应分区中,那么就不会有数据覆盖到9999_99_99的原有数据中,此时应该把9999_99_99中的数据进行手动清空,这样就不会有数据多余。

综上,所有的累积型快照事实表都需要在最后多加上clear_data操作