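Data Warehouse: Offline Data Warehouse (Based on a Logistics Data Warehouse)

1. Data Warehouse Overview

1.1 Data Warehouse Concepts

A data warehouse is an enterprise-level data management system designed for data analysis. It centralizes and integrates large volumes of data from multiple sources. Using its analytical capabilities, an enterprise can extract valuable information from the data and improve its decisions. Over time, the large body of historical data accumulated in the warehouse also becomes very valuable to data scientists and business analysts.

1.2 Core Architecture of a Data Warehouse

2. Data Warehouse Modeling Overview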

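2.1 Why Data Warehouse Modeling Matters

If we think of data as books in a library, we want them shelved by category. If we think of data as the buildings of a city, we want a sensible city plan. If we think of data as files and folders on a computer, we want a folder structure that suits our habits, not a cluttered desktop where finding a single file is a struggle.

A data model is a method for organizing and storing data. It emphasizes storing data sensibly from the perspectives of the business, data access, and usage. Only when data is organized and stored in an orderly way can it be used with high performance, low cost, high efficiency, and high quality.

High performance: a good data model helps us quickly query the data we need.

Low cost: a good data model reduces repeated computation, lets results be reused, and lowers computing cost.

High efficiency: a good data model greatly improves the experience of working with data and makes data use more efficient.

High quality: a good data model reduces inconsistency in statistical definitions and lowers the likelihood of calculation errors.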

2.2、数据仓库建模方法论

2.2.1、ER模型

数据仓库之父Bill Inmon提出的建模方法是从全企业的高度,用实体关系(Entity Relationship,ER)模型来描述企业业务,并用规范化的方式表示出来,在范式理论上符合3NF。

1)实体关系模型

实体关系模型将复杂的数据抽象为两个概念——实体和关系。实体表示一个对象,例如学生、班级,关系是指两个实体之间的关系,例如学生和班级之间的从属关系。

2)数据库规范化

数据库规范化是使用一系列范式设计数据库(通常是关系型数据库)的过程,其目的是减少数据冗余,增强数据的一致性。

这一系列范式就是指在设计关系型数据库时,需要遵从的不同的规范。关系型数据库的范式一共有六种,分别是第一范式(1NF)、第二范式(2NF)、第三范式(3NF)、巴斯-科德范式(BCNF)、第四范式(4NF)和第五范式(5NF)。遵循的范式级别越高,数据冗余性就越低。

3)三范式

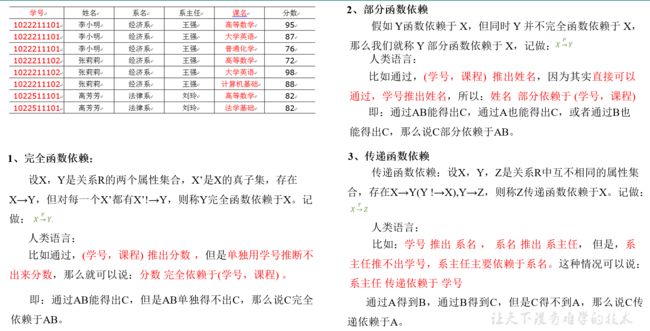

(1)函数依赖

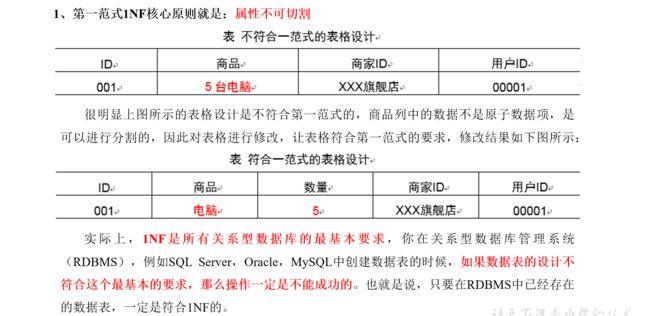

(2)第一范式

(3)第二范式

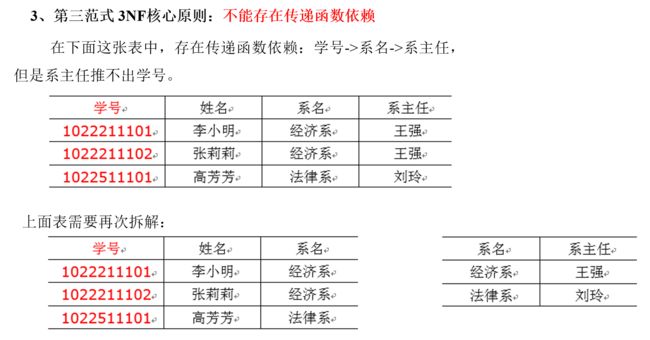

(4)第三范式

下图为一个采用Bill Inmon倡导的建模方法构建的模型,从图中可以看出,较为松散、零碎,物理表数量多。

这种建模方法的出发点是整合数据,其目的是将整个企业的数据进行组合和合并,并进行规范处理,减少数据冗余性,保证数据的一致性。这种模型并不适合直接用于分析统计。

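2.2.2 Dimensional Model

Ralph Kimball, another leading figure in data warehousing, advocates dimensional modeling. A dimensional model presents complex business through two concepts: facts and dimensions. A fact usually corresponds to a business process, and a dimension usually corresponds to the context in which the business process occurs.

Note: a business process can be summarized as an indivisible behavioral event. For example, placing an order, paying, and adding to the cart in online education transactions are all business processes.

The figure below shows a typical dimensional model. SalesOrder, at the center, is the fact table and holds all records of the order-placement business process. Each surrounding table is a dimension table, including Date, Customer, Product, and Location. These dimension tables make up the context in which each order occurs: who ordered which product, when, and where. As the figure shows, the model is relatively clear and concise.

Dimensional modeling takes data analysis as its starting point and serves data analysis. Its focus is therefore on how users can complete requirement analysis faster and on how to achieve good response times for large, complex queries.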

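3. Dimensional Modeling Theory: Fact Tables

3.1 Fact Table Overview

As the core of dimensional modeling, fact tables are designed tightly around business processes. A fact table contains the dimension references (foreign keys to dimension tables) related to a business process and the measures of that business process (usually additive numeric fields).

3.1.1 Characteristics of Fact Tables

Fact tables are usually "narrow and long": they have few columns but many rows, and the row count grows quickly.

3.1.2 Types of Fact Tables

There are three types of fact tables: transaction fact tables, periodic snapshot fact tables, and accumulating snapshot fact tables. Each has its own characteristics and use cases, described in turn below.

3.2 Transaction Fact Tables

3.2.1 Overview

A transaction fact table records business processes. It stores the atomic operation events of each business process, that is, events at the finest grain. Grain refers to the level of business detail expressed by one row of a fact table.

Transaction fact tables can be used to analyze the statistics related to each business process. Because they keep records at the finest grain, they offer maximum flexibility and can support unanticipated statistical requirements at any level of detail.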

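3.2.2 Design Process

Designing a transaction fact table generally follows four steps:

Select the business process → Declare the grain → Identify the dimensions → Identify the facts

1) Select the business process

From the business system, pick the business processes of interest. A business process can be summarized as an indivisible behavioral event. For example, placing an order, paying, and adding to the cart in online education transactions are all business processes. Normally, one business process corresponds to one transaction fact table.

2) Declare the grain

Once the business processes are chosen, declare the grain for each of them, that is, define precisely what each row of each transaction fact table represents. Choose the finest grain possible so that requirements at any level of detail can be met.

A typical grain declaration:

One row of the order fact table represents one course within one order.

3) Identify the dimensions

Identifying the dimensions means determining which dimensions are related to each transaction fact table.

Select as much context information related to the business process as possible, because the richness of the dimensions determines the range of metrics the dimensional model can support.

4) Identify the facts

Here "fact" refers to the measures of each business process (usually additive numeric values such as counts, quantities, and amounts).

After these four steps, the transaction fact table design is essentially complete. Step 1 determines which transaction fact tables exist, step 2 determines what each row of each table represents, step 3 determines the dimension foreign keys of each table, and step 4 determines the measure fields of each table.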

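3.2.3 Limitations

Transaction fact tables keep the finest-grain operation events of every business process, so in theory they can support requirements at any statistical grain for those business processes. For certain kinds of requirements, however, the logic can become complicated or the computation inefficient. For example:

1) Stock-type metrics

Examples are cart stock and account balance. Take the add-to-cart business in online education. It mainly consists of two business processes, adding to the cart and removing from the cart, each with its own transaction fact table: one stores all atomic add-to-cart events, the other stores all atomic remove-from-cart events.

Suppose we need the cart stock per user and per subject as of today. Both adding and removing affect the cart stock, so the two transaction fact tables must be aggregated together, the direction of each event (add or subtract) must be distinguished, and the full history of both tables must be scanned to produce the result.

In terms of both logic and efficiency, this is not a good approach.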
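To make the cost concrete, here is a minimal sketch of that computation outside Hive. The file layout (tab-separated `user_id`, `subject`, `qty`) and the function name `cart_stock` are assumptions for illustration only, not part of the project.

```bash
#!/usr/bin/env bash
# Hypothetical inputs (tab-separated): user_id, subject, qty
#   $1 = all add-to-cart events, $2 = all remove-from-cart events
# Both files must be scanned in full to obtain the current stock.
cart_stock() {
  awk -F'\t' -v OFS='\t' '
    FILENAME == ARGV[1] { stock[$1 OFS $2] += $3; next }  # add events increase stock
    { stock[$1 OFS $2] -= $3 }                            # remove events decrease stock
    END { for (k in stock) print k, stock[k] }
  ' "$1" "$2" | LC_ALL=C sort
}
```

Calling `cart_stock adds.tsv removes.tsv` prints one line per user and subject. Every run rereads the whole event history, which is the inefficiency described above.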

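2) Statistics spanning multiple transactions

Suppose we need the average interval between order placement and payment over the last 30 days. The approach is to take the order transaction fact table and the payment transaction fact table, filter both to the last 30 days, join them on order id, subtract the order time from the payment time, and average the result.

The logic is not complicated, but it is inefficient: both fact tables are large, and joining one large table to another should be avoided where possible.

In both scenarios, transaction fact tables perform poorly. The other two types of fact tables, introduced next, exist to make up for these limitations.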

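3.3 Periodic Snapshot Fact Tables

3.3.1 Overview

A periodic snapshot fact table records facts at regular, predictable intervals. It is mainly used to analyze stock-type metrics (such as cart stock and account balance) and state-type metrics (such as air temperature and driving speed).

For stock-type metrics such as cart stock and account balance, the business system usually computes and stores the latest value already. Periodically syncing a full copy into the warehouse and building a periodic snapshot fact table therefore handles such requirements easily, with no need to aggregate the long history in the transaction fact tables.

For state-type metrics such as air temperature and driving speed, the values are usually continuous, so the atomic events that change them cannot be captured and a transaction fact table cannot be used. The only option is to sample them periodically and build a periodic snapshot fact table.

3.3.2 Design Process

1) Determine the grain

The grain of a periodic snapshot fact table is described by the sampling period and the dimensions, so fixing these two fixes the grain.

The sampling period is usually daily.

The dimensions follow from the metric. For example, if the metric is the cart stock per user and per subject, the dimensions are user and subject.

With the sampling period and dimensions fixed, the grain of the table is day-user-subject.

2) Identify the facts

The facts also follow from the metric. For example, if the metric is the cart stock per user and per subject, the fact is the cart stock.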

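3.3.3 Fact Types

Fact type here means the type of the measure, not the type of the fact table. Facts (measures) fall into three categories: additive, semi-additive, and non-additive.

1) Additive facts

An additive fact can be summed across all dimensions related to the fact table. The facts in transaction fact tables are an example.

2) Semi-additive facts

A semi-additive fact can be summed across only some of the dimensions related to the fact table. The facts in periodic snapshot fact tables are an example. Take the daily snapshot of cart stock per user and per subject described above: the cart stock can be summed across the user or subject dimension, but not across the time dimension, because adding up the cart stock of different days has no meaning.

3) Non-additive facts

A non-additive fact cannot be summed at all. Ratios are an example. Non-additive facts usually need to be converted into additive facts; a ratio, for instance, can be stored as its numerator and denominator.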
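The following sketch illustrates the last two categories on made-up data. The snapshot rows, the column layout, and the function names `cart_total_on` and `overall_rate` are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical daily snapshot rows (tab-separated): dt, user_id, subject, cart_qty
snapshot=$'2023-01-09\tu1\tjava\t2\n2023-01-09\tu2\tjava\t1\n2023-01-10\tu1\tjava\t3\n2023-01-10\tu2\tjava\t1'

# Semi-additive: summing over users is valid, but only within one day.
cart_total_on() {
  awk -F'\t' -v d="$1" '$1 == d { s += $4 } END { print s + 0 }' <<< "$snapshot"
}

# Non-additive: a ratio is rebuilt from its additive parts.
# Input rows (tab-separated): numerator, denominator (e.g. paid orders, placed orders).
overall_rate() {
  awk -F'\t' '{ n += $1; d += $2 } END { if (d > 0) printf "%.2f\n", n / d }'
}

cart_total_on 2023-01-10
printf '9\t10\n1\t30\n' | overall_rate
```

Summing the numerators and denominators first and dividing once gives the overall rate; averaging the two per-group ratios would give a different and misleading number.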

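3.4 Accumulating Snapshot Fact Tables

3.4.1 Overview

An accumulating snapshot fact table is built by combining several key business processes of one business flow, such as trial listening, order placement, and payment in a transaction flow.

An accumulating snapshot fact table usually has multiple date fields, each corresponding to one key business process (milestone) of the flow.

| Course id | User id | Trial date | Order date | Payment date | Allocated order amount | Allocated payment amount |
| --- | --- | --- | --- | --- | --- | --- |
| 1001 | 1234 | 2022-02-19 | 2022-02-20 | 2022-02-21 | 1000 | 1000 |

Accumulating snapshot fact tables are mainly used for requirements such as the time interval between business processes (milestones). For the average interval between order placement and payment mentioned earlier, an accumulating snapshot fact table avoids joining two transaction fact tables, which makes the computation simple and efficient.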
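Because both milestone dates sit on the same row, the interval needs no join. A minimal sketch, assuming tab-separated rows of `order_date` and `pay_date` and GNU `date`; the function name `avg_days_between` is hypothetical.

```bash
#!/usr/bin/env bash
# Average number of days between two milestone dates on the same row.
# Input rows (tab-separated): order_date, pay_date. Requires GNU date.
avg_days_between() {
  local total=0 n=0 start end
  while IFS=$'\t' read -r start end; do
    # Skip rows whose second milestone has not been reached yet.
    if [ -z "$end" ]; then continue; fi
    total=$(( total + ( $(date -u -d "$end" +%s) - $(date -u -d "$start" +%s) ) / 86400 ))
    n=$(( n + 1 ))
  done
  if [ "$n" -gt 0 ]; then
    awk -v t="$total" -v n="$n" 'BEGIN { printf "%.1f\n", t / n }'
  fi
}
```

For example, `printf '2022-02-20\t2022-02-21\n' | avg_days_between` reads one row and reports the one-day gap between ordering and paying.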

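3.4.2 Design Process

The design process for an accumulating snapshot fact table is similar to that of a transaction fact table and uses the same four steps. The descriptions below focus on the differences.

Select the business processes → Declare the grain → Identify the dimensions → Identify the facts.

1) Select the business processes

Select the key business processes of one business flow that need to be analyzed together. Multiple business processes correspond to one accumulating snapshot fact table.

2) Declare the grain

Define precisely what each row represents, choosing the finest grain possible.

3) Identify the dimensions

Select the dimensions related to each business process. Note that every business process needs its own date dimension.

4) Identify the facts

Select the measures of each business process.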

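4. Dimensional Modeling Theory: Dimension Tables

4.1 Dimension Table Overview

Dimension tables are the foundation and soul of dimensional modeling. As noted earlier, fact tables are designed around business processes, while dimension tables are designed around the context in which those business processes occur. A dimension table mainly contains a primary key and a set of dimension fields, which are called dimension attributes.

4.2 Dimension Table Design Steps

1) Determine the dimensions (tables)

The dimensions related to each fact table were already determined when the fact tables were designed. In theory, each of those dimensions needs its own dimension table. Note that several fact tables may relate to the same dimension. In that case the dimension must stay unique, so only one dimension table is created. In addition, if a dimension has very few attributes, for example only a single name attribute, the dimension table can be skipped and its attributes added directly to the related fact tables. This is called dimension degeneration.

2) Determine the main dimension table and related dimension tables

Main and related dimension tables here both refer to tables in the business system that relate to a given dimension. For example, the business-system tables related to courses include course_info, chapter_info, base_subject_info, base_category_info, and video_info. Of these, course_info is the main dimension table of the course dimension and the rest are related dimension tables. The grain of a dimension table is usually the same as that of its main dimension table.

3) Determine the dimension attributes

Determining the dimension attributes means determining the fields of the dimension table. The attributes mainly come from the main and related dimension tables of that dimension in the business system. They can be taken directly from those tables or derived through further processing.

Follow these guidelines when determining dimension attributes:

(1) Generate as many useful dimension attributes as possible

Dimension attributes are the basic source of filter conditions and grouping fields in later analysis, and they are key to how usable the data is. Their richness directly affects the range of metrics the data model can support.

(2) Prefer explicit text descriptions over codes; in general, codes and text can be kept side by side.

(3) Consolidate commonly used dimension attributes

Some dimension attributes require fairly complex logic to obtain, for example concatenating several fields. To avoid repeating that processing on every use, store such attributes in the dimension table.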

4.3、维度设计要点

4.3.1、规范化与反规范化

规范化是指使用一系列范式设计数据库的过程,其目的是减少数据冗余,增强数据的一致性。通常情况下,规范化之后,一张表的字段会拆分到多张表。

反规范化是指将多张表的数据冗余到一张表,其目的是减少join操作,提高查询性能。

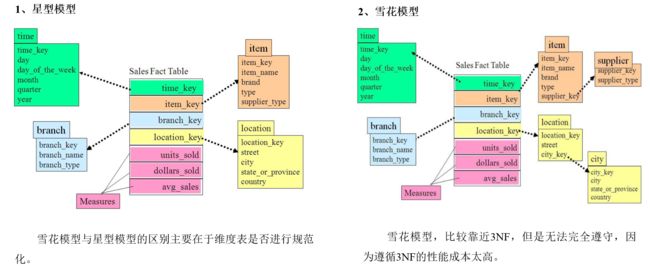

在设计维度表时,如果对其进行规范化,得到的维度模型称为雪花模型,如果对其进行反规范化,得到的模型称为星型模型。

数据仓库系统的主要目的是用于数据分析和统计,所以是否方便用户进行统计分析决定了模型的优劣。采用雪花模型,用户在统计分析的过程中需要大量的关联操作,使用复杂度高,同时查询性能很差,而采用星型模型,则方便、易用且性能好。所以出于易用性和性能的考虑,维度表一般是很不规范化的。

4.3.2、维度变化

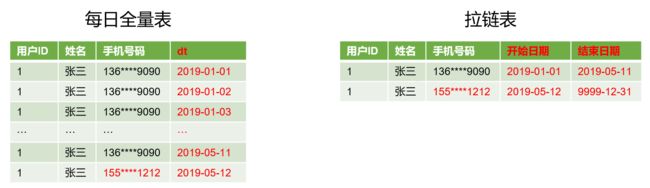

维度属性通常不是静态的,而是会随时间变化的,数据仓库的一个重要特点就是反映历史的变化,所以如何保存维度的历史状态是维度设计的重要工作之一。保存维度数据的历史状态,通常有以下两种做法,分别是全量快照表和拉链表。

1)全量快照表

离线数据仓库的计算周期通常为每天一次,所以可以每天保存一份全量的维度数据。这种方式的优点和缺点都很明显。

优点是简单而有效,开发和维护成本低,且方便理解和使用。

缺点是浪费存储空间,尤其是当数据的变化比例比较低时。

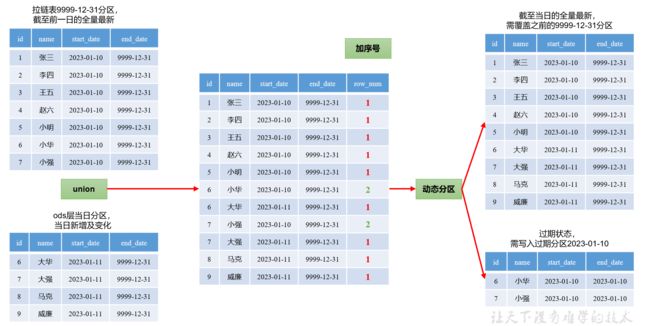

2)拉链表

拉链表的意义就在于能够更加高效的保存维度信息的历史状态。

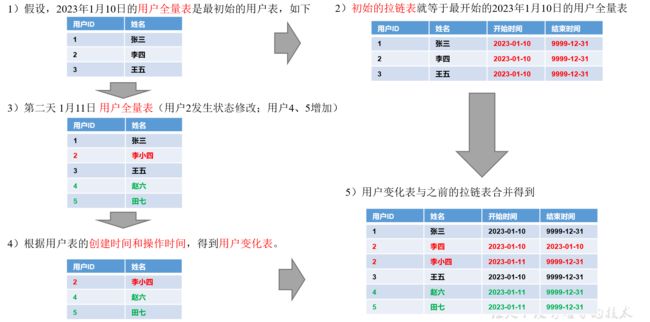

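(1) What is a zipper table

A zipper table records the life cycle of each piece of information. When the life cycle of a record ends, a new record is started, with the current date as its effective start date.

If a record is still valid today, its effective end date is set to a maximum value (such as 9999-12-31).

(2) Why use a zipper table

Zipper tables suit dimensions whose data does change, but not frequently (that is, slowly changing dimensions).

For example, user information changes, but only a small share changes each day. Once the data reaches a certain size, keeping a full daily copy is very inefficient: with 100 million users, 365 full copies of the user information would be stored each year.

(3) How to use a zipper table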
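One common use is to reconstruct the dimension as it was on a given date by keeping the rows whose validity interval covers that date. A minimal sketch on tab-separated rows; the column layout (`id`, `user_level`, `start_date`, `end_date`) and the function name `zip_as_of` are assumptions for illustration. In Hive, the same filter would be a `where` clause on `start_date` and `end_date`.

```bash
#!/usr/bin/env bash
# Rows of a zipper table that were valid on the date given as $1.
# Hypothetical columns (tab-separated): id, user_level, start_date, end_date.
# ISO dates (yyyy-MM-dd) compare correctly as plain strings.
zip_as_of() {
  awk -F'\t' -v d="$1" '$3 <= d && $4 >= d'
}
```

Passing `9999-12-31` as the date returns only the rows that are still open, which is the current state of the dimension.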

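4.3.3 Multi-Valued Dimensions

If one row of a fact table corresponds to multiple rows of a dimension table, the dimension is called multi-valued. For example, if one row of the order fact table is one order and an order can contain several courses, then several rows of the course dimension table may correspond to it.

Two solutions are commonly used.

First: lower the grain of the fact table, for example from one order to one course within one order.

Second: store the dimension values in multiple fields of the fact table, one dimension id per field. This only works when the number of values is fixed.

The first solution is recommended wherever possible.

4.3.4 Multi-Valued Attributes

If an attribute of a dimension table holds several values at once, it is called a multi-valued attribute. An example is the course category of the course dimension: each course can belong to several categories.

Two solutions are commonly used.

First: put the values in one field, in the form key1:value1,key2:value2 or value1,value2. For the course category attribute, for example, one course can belong to several categories such as programming technology and backend development.

Second: put the values in multiple fields, one per value. This only works when the number of values is fixed.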

5、数据仓库设计

5.1、数据仓库分层规划

优秀可靠的数仓体系,需要良好的数据分层结构。合理的分层,能够使数据体系更加清晰,使复杂问题得以简化。以下是该项目的分层规划。

5.2、数据仓库构建流程

以下是构建数据仓库的完整流程。

5.2.1、数据调研

数据调研重点要做两项工作,分别是业务调研和需求分析。这两项工作做的是否充分,直接影响着数据仓库的质量。

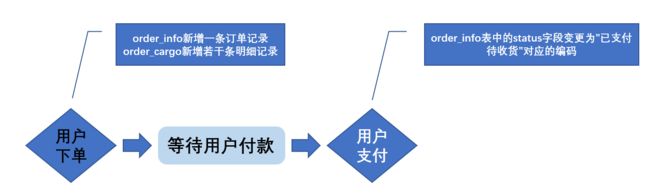

1)业务调研

业务调研的主要目标是熟悉业务流程、熟悉业务数据。

熟悉业务流程要求做到,明确每个业务的具体流程,需要将该业务所包含的每个业务过程一一列举出来。

熟悉业务数据要求做到,将数据(包括埋点日志和业务数据表)与业务过程对应起来,明确每个业务过程会对哪些表的数据产生影响,以及产生什么影响。产生的影响,需要具体到,是新增一条数据,还是修改一条数据,并且需要明确新增的内容或者是修改的逻辑。

下面以在线教育中的交易业务为例进行演示,交易业务涉及到的业务过程有用户试听、用户下单、用户支付,具体流程如下图。

2)需求分析

典型的需求指标如,最近一天各省份 Java 学科订单总额。

分析需求时,需要明确需求所需的业务过程及维度,例如该需求所需的业务过程就是用户下单,所需的维度有日期,省份,科目。

3)总结

做完业务分析和需求分析之后,要保证每个需求都能找到与之对应的业务过程及维度。若现有数据无法满足需求,则需要和业务方进行沟通,例如某个页面需要新增某个行为的埋点。

5.2.2、明确数据域

数据仓库模型设计除横向的分层外,通常也需要根据业务情况进行纵向划分数据域。划分数据域的意义是便于数据的管理和应用。

通常可以根据业务过程或者部门进行划分,本项目根据业务过程进行划分,需要注意的是一个业务过程只能属于一个数据域。

下面是本数仓项目所需的所有业务过程及数据域划分详情。

| 数据域 |

业务过程 |

| 交易域 |

下单、支付成功、取消运单 |

| 物流域 |

揽收(接单)、发单、转运完成、派送成功、签收、运输 |

| 中转域 |

入库、分拣、出库 |

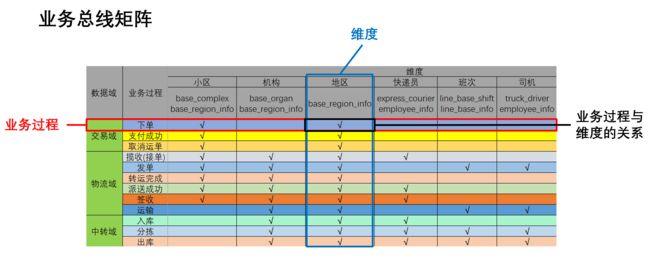

5.2.3、构建业务总线矩阵

业务总线矩阵中包含维度模型所需的所有事实(业务过程)以及维度,以及各业务过程与各维度的关系。矩阵的行是一个个业务过程,矩阵的列是一个个的维度,行列的交点表示业务过程与维度的关系。

一个业务过程对应维度模型中一张事务型事实表,一个维度则对应维度模型中的一张维度表。所以构建业务总线矩阵的过程就是设计维度模型的过程。但是需要注意的是,总线矩阵中通常只包含事务型事实表,另外两种类型的事实表需单独设计。

按照事务型事实表的设计流程,选择业务过程->声明粒度->确认维度->确认事实,得到的最终的业务总线矩阵见以下表格。

后续的DWD层以及DIM层的搭建需参考业务总线矩阵。

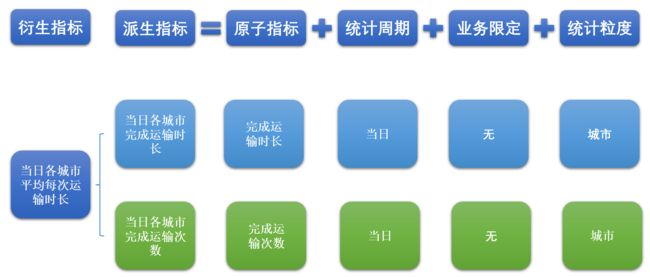

5.2.4、明确统计指标

明确统计指标具体的工作是,深入分析需求,构建指标体系。构建指标体系的主要意义就是指标定义标准化。所有指标的定义,都必须遵循同一套标准,这样能有效的避免指标定义存在歧义,指标定义重复等问题。

1)指标体系相关概念

(1)原子指标

原子指标基于某一业务过程的度量值,是业务定义中不可再拆解的指标,原子指标的核心功能就是对指标的聚合逻辑进行了定义。我们可以得出结论,原子指标包含三要素,分别是业务过程、度量值和聚合逻辑。

例如订单总额就是一个典型的原子指标,其中的业务过程为用户下单、度量值为订单金额,聚合逻辑为sum()求和。需要注意的是原子指标只是用来辅助定义指标一个概念,通常不会对应有实际统计需求与之对应。

(2)派生指标

派生指标基于原子指标,其与原子指标的关系如下图所示。

与原子指标不同,派生指标通常会对应实际的统计需求。请从图中的例子中,体会指标定义标准化的含义。

(3)衍生指标

衍生指标是在一个或多个派生指标的基础上,通过各种逻辑运算复合而成的。例如比率、比例等类型的指标。衍生指标也会对应实际的统计需求。

2)指标体系对于数仓建模的意义

通过上述两个具体的案例可以看出,绝大多数的统计需求,都可以使用原子指标、派生指标以及衍生指标这套标准去定义。同时能够发现这些统计需求都直接的或间接的对应一个或者是多个派生指标。

当统计需求足够多时,必然会出现部分统计需求对应的派生指标相同的情况。这种情况下,我们就可以考虑将这些公共的派生指标保存下来,这样做的主要目的就是减少重复计算,提高数据的复用性。

这些公共的派生指标统一保存在数据仓库的DWS层。因此DWS层设计,就可以参考我们根据现有的统计需求整理出的派生指标。

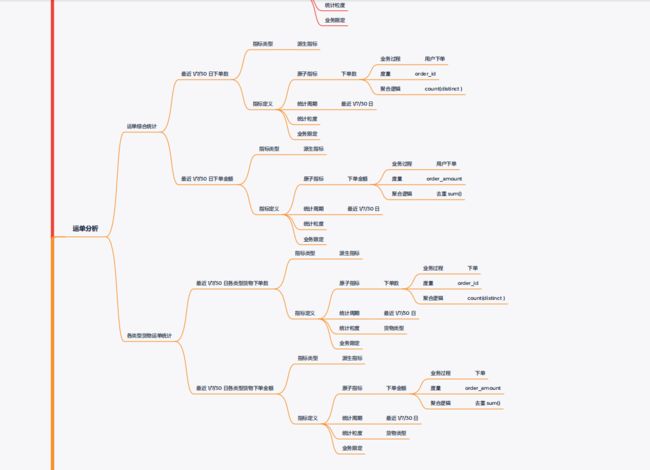

按照上述标准整理出的指标体系如下(部分):

从上述指标体系中抽取出来的所有派生指标见如下表格(部分)。

5.2.4、维度模型设计

维度模型的设计参照上述得到的业务总线矩阵即可。事实表存储在DWD层,维度表存储在DIM层。

5.2.5、汇总模型设计

汇总模型的设计参考上述整理出的指标体系(主要是派生指标)即可。汇总表与派生指标的对应关系是,一张汇总表通常包含业务过程相同、统计周期相同、统计粒度相同的多个派生指标。请思考:汇总表与事实表的对应关系是?

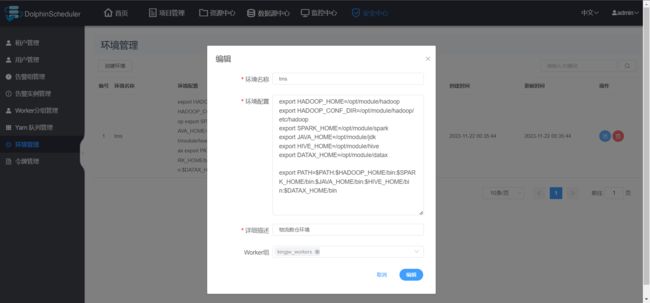

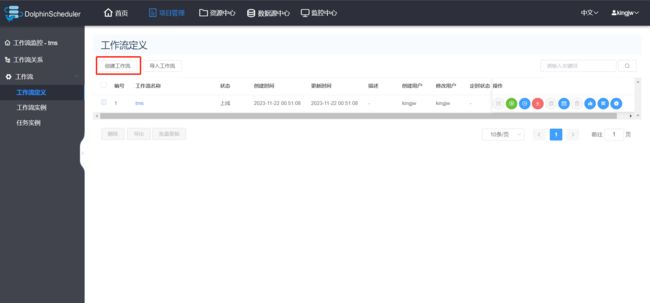

6、数据仓库环境准备

6.1、数据仓库运行环境

数据仓库运行环境-HiveOnSpark配置-CSDN博客

6.2、数据仓库开发环境

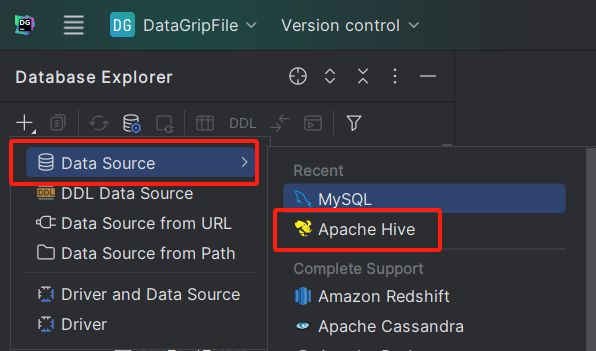

数仓开发工具可选用DBeaver或者DataGrip。两者都需要用到JDBC协议连接到Hive,故需要启动HiveServer2。

1)启动HiveServer2

[kingjw@hadoop102 hive]$ hiveserver22)配置DataGrip连接

(1)创建连接

(2)配置连接属性

所有属性配置,和Hive的beeline客户端配置一致即可。初次使用,配置过程会提示缺少JDBC驱动,按照提示下载即可。

如果驱动下载失败,参考

DataGrip下载驱动失败,手动配置http代理-CSDN博客

6.3、模拟数据准备

通常企业在开始搭建数仓时,业务系统中会存在历史数据,一般业务数据库存在历史数据,而用户行为日志无历史数据。假定数仓上线的日期为2023-01-10,为模拟真实场景,需准备以下数据。

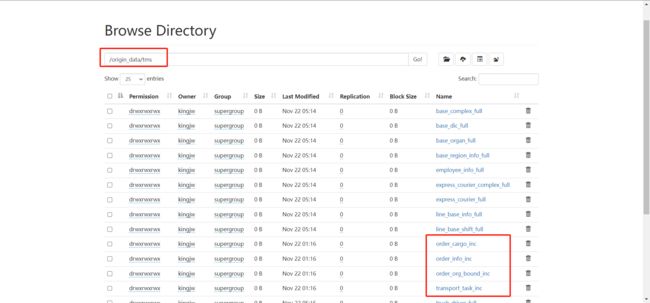

注:在执行以下操作之前,先将HDFS上/origin_data路径下之前的测试使用的数据删除。

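6.3.1 User Behavior Logs

This project has no log data.

6.3.2 Business Data

Business data usually includes history. Here, data from 2023-01-05 to 2023-01-10 is prepared.

1) Incremental table sync

(1) In application.yml, set mock.reset-all-dim and mock.clear.busi to 1 and mock.date to 2023-01-05, as follows.

# Business date

mock.date: "2023-01-05"

# Reset all dimension data

mock.reset-all-dim: 1

# Clear all business fact data

mock.clear.busi: 1

(2) Generate the data

[kingjw@hadoop102 tms]$ java -jar tms-mock-2023-01-06.jar

(3) In application.yml, set mock.reset-all-dim and mock.clear.busi to 0 and mock.date to 2023-01-06, as follows.

mock.date: "2023-01-06"

(4) Generate the data again

[kingjw@hadoop102 tms]$ java -jar tms-mock-2023-01-06.jar

(5) Change mock.date to 2023-01-07, 2023-01-08, 2023-01-09, and 2023-01-10 in turn and generate the data for each date in the same way.

(6) Run the flink-cdc.sh script, as follows.

[kingjw@hadoop102 tms]$ flink-cdc.sh initial 2023-01-10

(7) Check the corresponding HDFS directory, as follows.

2) Full table sync

(1) Run the full table sync script

[kingjw@hadoop102 bin]$ mysql_to_hdfs_full.sh all 2023-01-10

(2) Check the corresponding HDFS directory, as follows.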

6.4、Hive 常见问题及解决方式

6.4.1、DataGrip 中注释乱码问题

注释属于元数据的一部分,同样存储在mysql的metastore库中,如果metastore库的字符集不支持中文,就会导致中文显示乱码。

不建议修改Hive元数据库的编码,此处我们在metastore中找存储注释的表,找到表中存储注释的字段,只改对应表对应字段的编码。

如下两步修改,缺一不可

(1)修改mysql元数据库

我们用到的注释有两种:字段注释和整张表的注释。

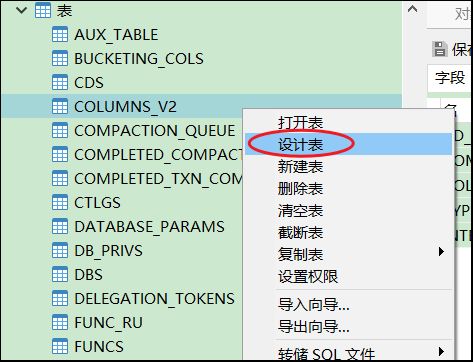

COLUMNS_V2 表中的 COMMENT 字段存储了 Hive 表所有字段的注释,TABLE_PARAMS 表中的 PARAM_VALUE 字段存储了所有表的注释。我们可以通过命令修改字段编码,也可以用 DataGrip 或 Navicat 等工具修改,此处仅对 Navicat 进行展示。

① 命令修改

alter table COLUMNS_V2 modify column COMMENT varchar(256) character set utf8;

alter table TABLE_PARAMS modify column PARAM_VALUE mediumtext character set utf8;② 使用工具

以 COLUMNS_V2 表中COMMENT 字段的修改为例

i)右键点击表名,选择设计表

ii)在右侧页面中选中表的字段

iii)在页面下方下拉列表中将字符集改为 utf8

修改字符集之后,已存在的中文注释能否正确显示?不能。为何?

数据库中的字符都是通过编码存储的,写入时编码,读取时解码。修改字段编码并不会改变此前数据的编码方式,依然为默认的latin1,此时读取之前的中文注释会用utf8解码,编解码方式不一致,依然乱码。

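(2) Modify the connection URL

In hive-site.xml, append the following to the end of the connection URL:

&amp;useUnicode=true&amp;characterEncoding=UTF-8

The & character has a special meaning in XML files, so each & must be written in its escaped form, &amp;.

The result is as follows:

<property>
<name>javax.jdo.option.ConnectionURL</name>
<value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>
</property>

Whenever hive-site.xml is modified, hiveserver2 must be restarted.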
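To avoid escaping the URL by hand, the raw JDBC URL can be converted before it is pasted into hive-site.xml. A minimal sketch; the function name `xml_escape` is hypothetical.

```bash
#!/usr/bin/env bash
# Escape a raw string for use inside an XML element body.
# "&" is replaced first so that the entities added afterwards are not escaped again.
xml_escape() {
  sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g' <<< "$1"
}

xml_escape 'jdbc:mysql://hadoop102:3306/metastore?useSSL=false&useUnicode=true&characterEncoding=UTF-8'
```

The printed line is the text to place between the value tags of the ConnectionURL property.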

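6.4.2 hiveserver2 Errors When DataGrip Refreshes the Connection

(1) The error message is as follows

FAILED: ParseException line 1:5 cannot recognize input near 'show' 'indexes' 'on' in ddl statement

Cause: this project uses Hive 3.1.3. Earlier versions of Hive had an index feature, which has been removed in this version. DataGrip scans for indexes when it refreshes the connection, Hive has none, and the error is reported.

(2) The error message is as follows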

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Current user : kingjw is not allowed to list roles. User has to belong to ADMIN role and have it as current role, for this action.

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Current user : kingjw is not allowed get principals in a role. User has to belong to ADMIN role and have it as current role, for this action. Otherwise, grantor need to have ADMIN OPTION on role being granted and have it as a current role for this action.

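DataGrip performs permission checks when it connects to hiveserver2, but Hive permission management is not configured in this project, hence the error.

Both problems are caused by DataGrip, not by the Hive environment, and do not affect usage.

6.4.3 OOM Errors

Hive's default heap size is only 256M. If hiveserver2 frequently reports OutOfMemoryError, increase the heap size.

In the conf directory under the Hive home directory, make a copy of the template file hive-env.sh.template:

[kingjw@hadoop102 conf]$ cd $HIVE_HOME/conf

[kingjw@hadoop102 conf]$ cp hive-env.sh.template hive-env.sh

Edit hive-env.sh and set the Hive heap size to 1024M, as follows:

export HADOOP_HEAPSIZE=1024

Adjust the heap size as needed for actual usage.

6.4.4 Columns of Some ODS Tables Not Displayed Correctly in DataGrip

The columns of tables whose DDL contains the following clause cannot be displayed:

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

This clause specifies the SERDE of the Hive table (short for serialization and deserialization), which is used to parse JSON files. The SERDE above is provided by a third party. Adding the following configuration to hive-site.xml solves the problem:

<property>
<name>metastore.storage.schema.reader.impl</name>
<value>org.apache.hadoop.hive.metastore.SerDeStorageSchemaReader</value>
</property>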

7. Warehouse Development: ODS Layer

The incremental data captured by Flink-cdc is stored as JSON, so reading it requires a matching serialization method.

JSON serialization and deserialization:

LanguageManual DDL - Apache Hive - Apache Software Foundation

Complex data types:

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF#LanguageManualUDF-OperatorsonComplexTypes

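Key design points of the ODS layer:

(1) The table structures of the ODS layer follow the structure of the data synced from the business systems.

(2) The ODS layer keeps all historical data, so a compression format with a high compression ratio should be chosen. gzip is used here.

(3) ODS table names follow the pattern ods_<table name>_<sync mode>, where the sync mode is inc (incremental) or full (full).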
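The naming rule makes it possible to derive both the sync mode and the HDFS landing path from a table name, which is what the load script later in this section relies on (`${i:4}` strips the `ods_` prefix). A minimal sketch; the function names `ods_sync_mode` and `ods_source_dir` are hypothetical helpers, while the `/origin_data/tms/...` layout is taken from that script.

```bash
#!/usr/bin/env bash
# Split an ODS table name of the form ods_<table name>_<inc|full>.

# Print the sync mode, i.e. the text after the last underscore.
ods_sync_mode() {
  echo "${1##*_}"
}

# Print the HDFS directory that holds one day of source data for the table.
ods_source_dir() {
  local table=$1 do_date=$2
  echo "/origin_data/tms/${table:4}/${do_date}"   # ${table:4} drops the "ods_" prefix
}

ods_sync_mode ods_order_info_inc
ods_source_dir ods_base_complex_full 2023-01-10
```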

7.1 Waybill Table (Incremental Table)

drop table if exists ods_order_info_inc;

create external table ods_order_info_inc(

`op` string comment '操作类型',

`after` struct<`id`:bigint,`order_no`:string,`status`:string,`collect_type`:string,`user_id`:bigint,`receiver_complex_id`:bigint,`receiver_province_id`:string,`receiver_city_id`:string,`receiver_district_id`:string,`receiver_address`:string,`receiver_name`:string,`sender_complex_id`:bigint,`sender_province_id`:string,`sender_city_id`:string,`sender_district_id`:string,`sender_name`:string,`payment_type`:string,`cargo_num`:bigint,`amount`:decimal(16,2),`estimate_arrive_time`:string,`distance`:decimal(16,2),`create_time`:string,`update_time`:string,`is_deleted`:string> comment '修改或插入后的数据',

`before` struct<`id`:bigint,`order_no`:string,`status`:string,`collect_type`:string,`user_id`:bigint,`receiver_complex_id`:bigint,`receiver_province_id`:string,`receiver_city_id`:string,`receiver_district_id`:string,`receiver_address`:string,`receiver_name`:string,`sender_complex_id`:bigint,`sender_province_id`:string,`sender_city_id`:string,`sender_district_id`:string,`sender_name`:string,`payment_type`:string,`cargo_num`:bigint,`amount`:decimal(16,2),`estimate_arrive_time`:string,`distance`:decimal(16,2),`create_time`:string,`update_time`:string,`is_deleted`:string> comment '修改前的数据',

`ts` bigint comment '时间戳'

) comment '运单表'

partitioned by (`dt` string comment '统计日期')

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

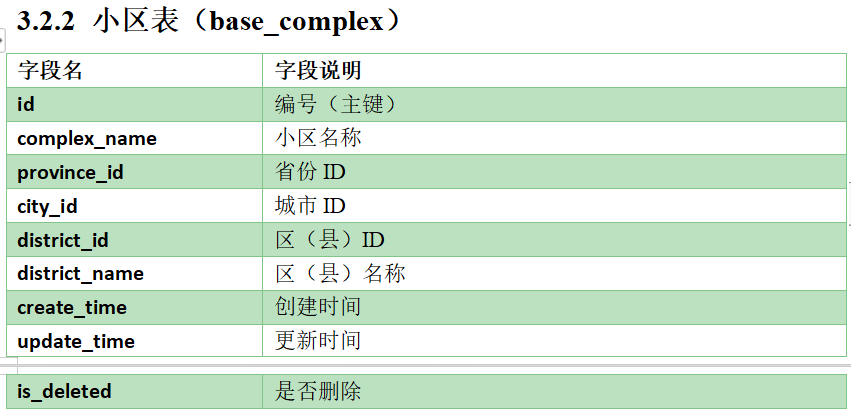

location '/warehouse/tms/ods/ods_order_info_inc';7.2、小区表(全量表)

drop table if exists ods_base_complex_full;

create external table ods_base_complex_full(

`id` bigint comment '小区ID',

`complex_name` string comment '小区名称',

`province_id` bigint comment '省份ID',

`city_id` bigint comment '城市ID',

`district_id` bigint comment '区(县)ID',

`district_name` string comment '区(县)名称',

`create_time` string comment '创建时间',

`update_time` string comment '更新时间',

`is_deleted` string comment '是否删除'

) comment '小区表'

partitioned by (`dt` string comment '统计日期')

row format delimited fields terminated by '\t'

null defined as ''

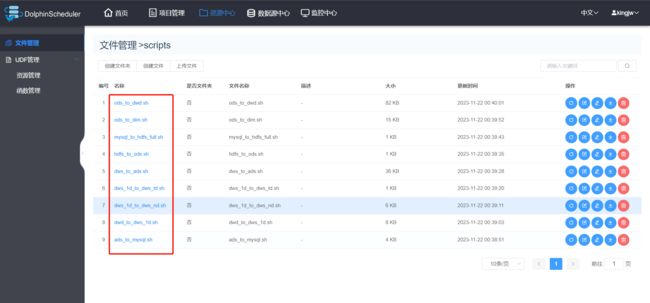

location '/warehouse/tms/ods/ods_base_complex_full';7.3、数据装载脚本

1) Create hdfs_to_ods.sh in the /home/kingjw/bin directory on hadoop102

[kingjw@hadoop102 bin]$ vim hdfs_to_ods.sh

2) Add the following content

#!/bin/bash

APP='tms'

# Use the second argument as the date if it is provided

if [ -n "$2" ] ;then

do_date=$2

else

# Otherwise default to yesterday; run `date --help` to see the available options

do_date=`date -d '-1 day' +%F`

fi

load_data(){

sql=""

for i in $*; do

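# Check whether the path exists; `hadoop fs -test -e` exits with 0 if it does

hadoop fs -test -e /origin_data/tms/${i:4}/$do_date

# Load data only if the path exists; $? holds the exit status of the previous command

if [[ $? = 0 ]]; then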

sql=$sql"load data inpath '/origin_data/tms/${i:4}/$do_date' OVERWRITE into table ${APP}.${i} partition(dt='$do_date');"

fi

done

hive -e "$sql"

}

case $1 in

ods_order_info_inc | ods_order_cargo_inc | ods_transport_task_inc | ods_order_org_bound_inc | ods_user_info_inc | ods_user_address_inc | ods_base_complex_full | ods_base_dic_full | ods_base_region_info_full | ods_base_organ_full | ods_express_courier_full | ods_express_courier_complex_full | ods_employee_info_full | ods_line_base_shift_full | ods_line_base_info_full | ods_truck_driver_full | ods_truck_info_full | ods_truck_model_full | ods_truck_team_full)

load_data $1

;;

"all")

load_data ods_order_info_inc ods_order_cargo_inc ods_transport_task_inc ods_order_org_bound_inc ods_user_info_inc ods_user_address_inc ods_base_complex_full ods_base_dic_full ods_base_region_info_full ods_base_organ_full ods_express_courier_full ods_express_courier_complex_full ods_employee_info_full ods_line_base_shift_full ods_line_base_info_full ods_truck_driver_full ods_truck_info_full ods_truck_model_full ods_truck_team_full

;;

esac

3) Make the script executable

[kingjw@hadoop102 bin]$ chmod +x hdfs_to_ods.sh

4) Script usage

[kingjw@hadoop102 bin]$ hdfs_to_ods.sh all 2023-01-10

8. Warehouse Development: DIM Layer

DIM层设计要点:

(1)DIM层的设计依据是维度建模理论,该层存储维度模型的维度表。

(2)DIM层的数据存储格式为orc列式存储+snappy压缩。

(3)DIM层表名的命名规范为dim_表名_全量表或者拉链表标识(full/zip)

8.1、小区维度表

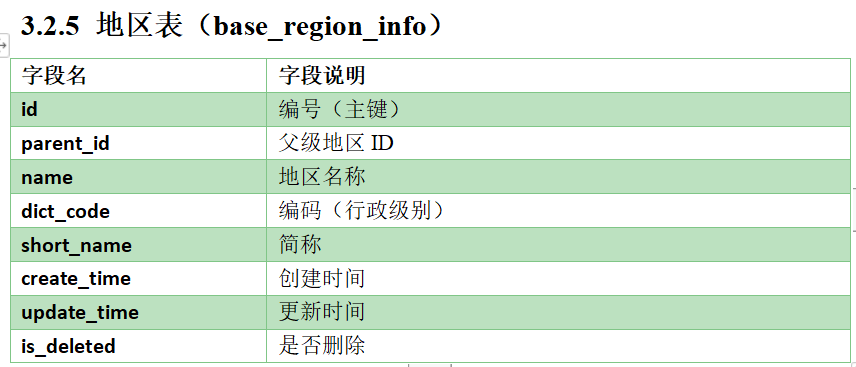

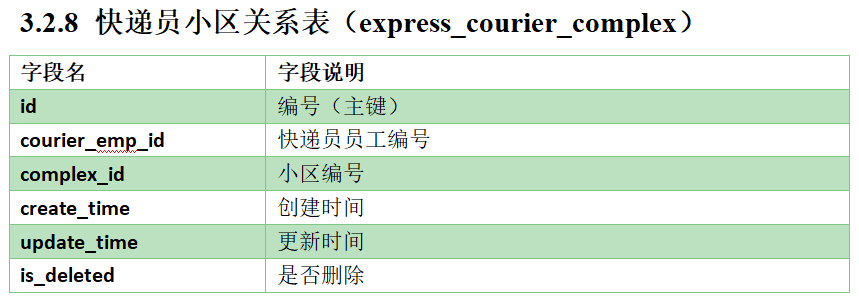

8.1.1、主维表和相关维表

主维表

相关维表1

相关维表2

8.1.2、建表语句

drop table if exists dim_complex_full;

create external table dim_complex_full(

`id` bigint comment '小区ID',

`complex_name` string comment '小区名称',

`courier_emp_ids` array<string> comment '负责快递员IDS',

`province_id` bigint comment '省份ID',

`province_name` string comment '省份名称',

`city_id` bigint comment '城市ID',

`city_name` string comment '城市名称',

`district_id` bigint comment '区(县)ID',

`district_name` string comment '区(县)名称'

) comment '小区维度表'

partitioned by (`dt` string comment '统计日期')

stored as orc

location '/warehouse/tms/dim/dim_complex_full'

tblproperties('orc.compress'='snappy');

8.1.3 Data Loading

insert overwrite table tms.dim_complex_full

partition (dt = '2023-01-10')

select complex_info.id id,

complex_name,

courier_emp_ids,

province_id,

dic_for_prov.name province_name,

city_id,

dic_for_city.name city_name,

district_id,

district_name

from (select id,

complex_name,

province_id,

city_id,

district_id,

district_name

from ods_base_complex_full

where dt = '2023-01-10'

and is_deleted = '0') complex_info

join

(select id,

name

from ods_base_region_info_full

where dt = '2023-01-10'

and is_deleted = '0') dic_for_prov

on complex_info.province_id = dic_for_prov.id

join

(select id,

name

from ods_base_region_info_full

where dt = '2023-01-10'

and is_deleted = '0') dic_for_city

on complex_info.city_id = dic_for_city.id

left join

(select

collect_set(cast(courier_emp_id as string)) courier_emp_ids,

complex_id

from ods_express_courier_complex_full where dt='2023-01-10' and is_deleted='0'

group by complex_id

) complex_courier

on complex_info.id = complex_courier.complex_id;另外的写法,可读性更好

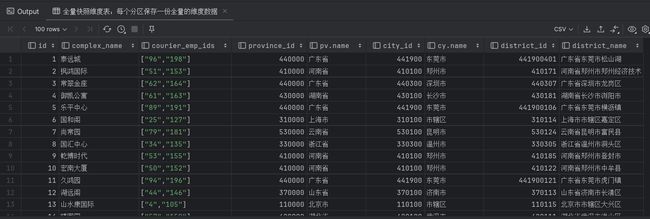

--全量快照维度表:每个分区保存一份全量的维度数据

with cx as (

select id,

complex_name,

province_id,

city_id,

district_id,

district_name

from ods_base_complex_full

where dt='2023-01-10' and is_deleted='0'

),pv as (

select id,

name

from ods_base_region_info_full

where dt='2023-01-10' and is_deleted='0'

),cy as (

select

id,

name

from ods_base_region_info_full

where dt='2023-01-10' and is_deleted='0'

),ex as (

select

collect_set((cast(courier_emp_id as string))) courier_emp_ids,

complex_id

from ods_express_courier_complex_full

where dt='2023-01-10' and is_deleted='0'

group by complex_id

)

insert overwrite table dim_complex_full partition (dt = '2023-01-10')

select

cx.id,

complex_name,

courier_emp_ids,

province_id,

pv.name,

city_id,

cy.name,

district_id,

district_name

from cx left join pv

on cx.province_id = pv.id

left join cy

on cx.city_id = cy.id

left join ex

on cx.id = ex.complex_id;

Result:

8.2 User Dimension Table (Zipper Table)

8.2.1 Table DDL

drop table if exists dim_user_zip;

create external table dim_user_zip(

`id` bigint COMMENT '用户ID',

`login_name` string COMMENT '用户名称',

`nick_name` string COMMENT '用户昵称',

`passwd` string COMMENT '用户密码',

`real_name` string COMMENT '用户姓名',

`phone_num` string COMMENT '手机号',

`email` string COMMENT '邮箱',

`user_level` string COMMENT '用户级别',

`birthday` string COMMENT '用户生日',

`gender` string COMMENT '性别 M男,F女',

`start_date` string COMMENT '起始日期',

`end_date` string COMMENT '结束日期'

) comment '用户拉链表'

partitioned by (`dt` string comment '统计日期')

stored as orc

location '/warehouse/tms/dim/dim_user_zip'

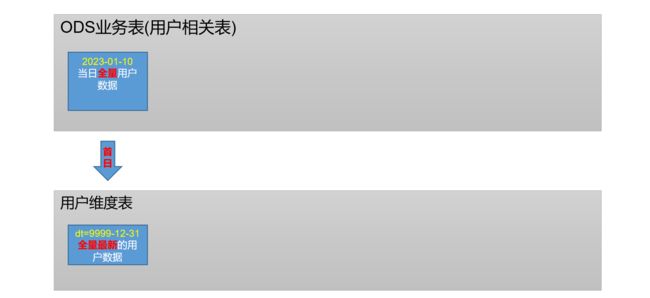

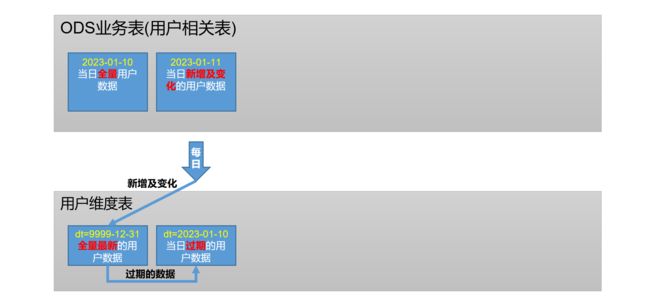

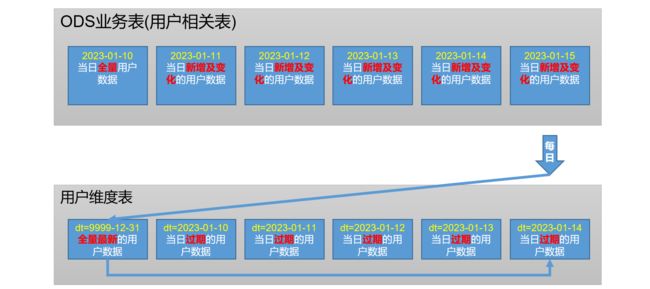

tblproperties('orc.compress'='snappy');8.2.2、分区规划

8.2.3、数据装载

1)数据装载过程

2)数据流向

首日装载

每日装载

3)首日装载

insert overwrite table dim_user_zip

partition (dt = '9999-12-31')

select after.id,

after.login_name,

after.nick_name,

md5(after.passwd) passwd,

md5(after.real_name) real_name,

md5(if(after.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',

after.phone_num, null)) phone_num,

md5(if(after.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', after.email, null)) email,

after.user_level,

date_add('1970-01-01', cast(after.birthday as int)) birthday,

after.gender,

date_format(from_utc_timestamp(

cast(after.create_time as bigint), 'UTC'),

'yyyy-MM-dd') start_date,

'9999-12-31' end_date

from ods_user_info_inc

where dt = '2023-01-10'

and after.is_deleted = '0';

4) Daily load

(1) Loading approach
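The daily load merges the rows that are still open (the 9999-12-31 partition) with the day's changes: a row superseded by a change is closed with an end date of the previous day, and the changed row becomes the new open row. The sketch below mimics that merge on small tab-separated files so the idea can be tried without Hive. The file layouts and the function name `zip_merge` are assumptions for illustration, and it requires GNU date.

```bash
#!/usr/bin/env bash
# Sketch of the daily zipper merge on tab-separated files (hypothetical layout):
#   $1 changes file: id, name                        (latest change per id for the day)
#   $2 open rows:    id, name, start_date, end_date  (the 9999-12-31 partition)
#   $3 load date, e.g. 2023-01-11
zip_merge() {
  local changes_file=$1 open_file=$2 today=$3
  local prev_day
  prev_day=$(date -d "$today 1 day ago" +%F)
  awk -F'\t' -v OFS='\t' -v prev="$prev_day" -v today="$today" '
    FILENAME == ARGV[1] { changed[$1] = $2; next }   # remember the ids that changed
    { if ($1 in changed) $4 = prev; print }          # close rows that were superseded
    END { for (id in changed) print id, changed[id], today, "9999-12-31" }
  ' "$changes_file" "$open_file" | LC_ALL=C sort
}
```

In the output, rows whose end date is still 9999-12-31 belong to the open partition, and rows closed with the previous day belong to that day's partition. This corresponds to the dynamic partition value computed in the load statement.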

(2) Load statement

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dim_user_zip

partition (dt)

select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

if(rk = 1, end_date, date_add('2023-01-11', -1)) end_date,

if(rk = 1, end_date, date_add('2023-01-11', -1)) dt

from (select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

end_date,

row_number() over (partition by id order by start_date desc) rk

from (select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

end_date

from dim_user_zip

where dt = '9999-12-31'

union

select id,

login_name,

nick_name,

md5(passwd) passwd,

md5(real_name) real_name,

md5(if(phone_num regexp

'^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',

phone_num, null)) phone_num,

md5(if(email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', email, null)) email,

user_level,

cast(date_add('1970-01-01', cast(birthday as int)) as string) birthday,

gender,

'2023-01-11' start_date,

'9999-12-31' end_date

from (select after.id,

after.login_name,

after.nick_name,

after.passwd,

after.real_name,

after.phone_num,

after.email,

after.user_level,

after.birthday,

after.gender,

row_number() over (partition by after.id order by ts desc) rn

from ods_user_info_inc

where dt = '2023-01-11'

and after.is_deleted = '0'

) inc

where rn = 1) full_info) final_info;

8.3 Data Load Scripts

8.3.1 First-Day Load Script

(1) Create ods_to_dim_init.sh in the /home/kingjw/bin directory on hadoop102

[kingjw@hadoop102 bin]$ vim ods_to_dim_init.sh

(2) Add the following content

#!/bin/bash

APP=tms

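# 1. Check that both arguments were passed

if [ $# -lt 2 ]

then

echo "Usage: pass all or a table name, followed by the warehouse go-live date"

exit 1

fi

# 2. SQL for the first-day load of each table, selected by table name below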

dim_complex_full_sql="

insert overwrite table tms.dim_complex_full

partition (dt = '$2')

select complex_info.id id,

complex_name,

courier_emp_ids,

province_id,

dic_for_prov.name province_name,

city_id,

dic_for_city.name city_name,

district_id,

district_name

from (select id,

complex_name,

province_id,

city_id,

district_id,

district_name

from ods_base_complex_full

where dt = '$2'

and is_deleted = '0') complex_info

join

(select id,

name

from ods_base_region_info_full

where dt = '$2'

and is_deleted = '0') dic_for_prov

on complex_info.province_id = dic_for_prov.id

join

(select id,

name

from ods_base_region_info_full

where dt = '$2'

and is_deleted = '0') dic_for_city

on complex_info.city_id = dic_for_city.id

left join

(select collect_set(cast(courier_emp_id as string)) courier_emp_ids,

complex_id

from ods_express_courier_complex_full

where dt = '$2'

and is_deleted = '0'

group by complex_id

) complex_courier

on complex_info.id = complex_courier.complex_id;

"

dim_organ_full_sql="

insert overwrite table tms.dim_organ_full

partition (dt = '$2')

select organ_info.id,

organ_info.org_name,

org_level,

region_id,

region_info.name region_name,

region_info.dict_code region_code,

org_parent_id,

org_for_parent.org_name org_parent_name

from (select id,

org_name,

org_level,

region_id,

org_parent_id

from ods_base_organ_full

where dt = '$2'

and is_deleted = '0') organ_info

left join (

select id,

name,

dict_code

from ods_base_region_info_full

where dt = '$2'

and is_deleted = '0'

) region_info

on organ_info.region_id = region_info.id

left join (

select id,

org_name

from ods_base_organ_full

where dt = '$2'

and is_deleted = '0'

) org_for_parent

on organ_info.org_parent_id = org_for_parent.id;

"

dim_region_full_sql="

insert overwrite table dim_region_full

partition (dt = '$2')

select id,

parent_id,

name,

dict_code,

short_name

from ods_base_region_info_full

where dt = '$2'

and is_deleted = '0';

"

dim_express_courier_full_sql="

insert overwrite table tms.dim_express_courier_full

partition (dt = '$2')

select express_cor_info.id,

emp_id,

org_id,

org_name,

working_phone,

express_type,

dic_info.name express_type_name

from (select id,

emp_id,

org_id,

md5(working_phone) working_phone,

express_type

from ods_express_courier_full

where dt = '$2'

and is_deleted = '0') express_cor_info

join (

select id,

org_name

from ods_base_organ_full

where dt = '$2'

and is_deleted = '0'

) organ_info

on express_cor_info.org_id = organ_info.id

join (

select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0'

) dic_info

on express_type = dic_info.id;

"

dim_shift_full_sql="

insert overwrite table tms.dim_shift_full

partition (dt = '$2')

select shift_info.id,

line_id,

line_info.name line_name,

line_no,

line_level,

org_id,

transport_line_type_id,

dic_info.name transport_line_type_name,

start_org_id,

start_org_name,

end_org_id,

end_org_name,

pair_line_id,

distance,

cost,

estimated_time,

start_time,

driver1_emp_id,

driver2_emp_id,

truck_id,

pair_shift_id

from (select id,

line_id,

start_time,

driver1_emp_id,

driver2_emp_id,

truck_id,

pair_shift_id

from ods_line_base_shift_full

where dt = '$2'

and is_deleted = '0') shift_info

join

(select id,

name,

line_no,

line_level,

org_id,

transport_line_type_id,

start_org_id,

start_org_name,

end_org_id,

end_org_name,

pair_line_id,

distance,

cost,

estimated_time

from ods_line_base_info_full

where dt = '$2'

and is_deleted = '0') line_info

on shift_info.line_id = line_info.id

join (

select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0'

) dic_info on line_info.transport_line_type_id = dic_info.id;

"

dim_truck_driver_full_sql="

insert overwrite table tms.dim_truck_driver_full

partition (dt = '$2')

select driver_info.id,

emp_id,

org_id,

organ_info.org_name,

team_id,

team_info.name team_name,

license_type,

init_license_date,

expire_date,

license_no,

is_enabled

from (select id,

emp_id,

org_id,

team_id,

license_type,

init_license_date,

expire_date,

license_no,

is_enabled

from ods_truck_driver_full

where dt = '$2'

and is_deleted = '0') driver_info

join (

select id,

org_name

from ods_base_organ_full

where dt = '$2'

and is_deleted = '0'

) organ_info

on driver_info.org_id = organ_info.id

join (

select id,

name

from ods_truck_team_full

where dt = '$2'

and is_deleted = '0'

) team_info

on driver_info.team_id = team_info.id;

"

dim_truck_full_sql="

insert overwrite table tms.dim_truck_full

partition (dt = '$2')

select truck_info.id,

team_id,

team_info.name team_name,

team_no,

org_id,

org_name,

manager_emp_id,

truck_no,

truck_model_id,

model_name truck_model_name,

model_type truck_model_type,

dic_for_type.name truck_model_type_name,

model_no truck_model_no,

brand truck_brand,

dic_for_brand.name truck_brand_name,

truck_weight,

load_weight,

total_weight,

eev,

boxcar_len,

boxcar_wd,

boxcar_hg,

max_speed,

oil_vol,

device_gps_id,

engine_no,

license_registration_date,

license_last_check_date,

license_expire_date,

is_enabled

from (select id,

team_id,

md5(truck_no) truck_no,

truck_model_id,

device_gps_id,

engine_no,

license_registration_date,

license_last_check_date,

license_expire_date,

is_enabled

from ods_truck_info_full

where dt = '$2'

and is_deleted = '0') truck_info

join

(select id,

name,

team_no,

org_id,

manager_emp_id

from ods_truck_team_full

where dt = '$2'

and is_deleted = '0') team_info

on truck_info.team_id = team_info.id

join

(select id,

model_name,

model_type,

model_no,

brand,

truck_weight,

load_weight,

total_weight,

eev,

boxcar_len,

boxcar_wd,

boxcar_hg,

max_speed,

oil_vol

from ods_truck_model_full

where dt = '$2'

and is_deleted = '0') model_info

on truck_info.truck_model_id = model_info.id

join

(select id,

org_name

from ods_base_organ_full

where dt = '$2'

and is_deleted = '0'

) organ_info

on org_id = organ_info.id

join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_type

on model_info.model_type = dic_for_type.id

join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_brand

on model_info.brand = dic_for_brand.id;

"

dim_user_zip_sql="

insert overwrite table dim_user_zip

partition (dt = '9999-12-31')

select after.id,

after.login_name,

after.nick_name,

md5(after.passwd) passwd,

md5(after.real_name) real_name,

md5(if(after.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',

after.phone_num, null)) phone_num,

md5(if(after.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', after.email, null)) email,

after.user_level,

date_add('1970-01-01', cast(after.birthday as int)) birthday,

after.gender,

date_format(from_utc_timestamp(

cast(after.create_time as bigint), 'UTC'),

'yyyy-MM-dd') start_date,

'9999-12-31' end_date

from ods_user_info_inc

where dt = '$2'

and after.is_deleted = '0';

"

dim_user_address_zip_sql="

insert overwrite table dim_user_address_zip

partition (dt = '9999-12-31')

select after.id,

after.user_id,

md5(if(after.phone regexp

'^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',

after.phone, null)) phone,

after.province_id,

after.city_id,

after.district_id,

after.complex_id,

after.address,

after.is_default,

concat(substr(after.create_time, 1, 10), ' ',

substr(after.create_time, 12, 8)) start_date,

'9999-12-31' end_date

from ods_user_address_inc

where dt = '$2'

and after.is_deleted = '0';

"

case $1 in

"all")

/opt/module/hive/bin/hive -e "use tms;${dim_complex_full_sql}${dim_express_courier_full_sql}${dim_organ_full_sql}${dim_region_full_sql}${dim_shift_full_sql}${dim_truck_driver_full_sql}${dim_truck_full_sql}${dim_user_address_zip_sql}${dim_user_zip_sql}"

;;

"dim_complex_full")

/opt/module/hive/bin/hive -e "use tms;${dim_complex_full_sql}"

;;

"dim_express_courier_full")

/opt/module/hive/bin/hive -e "use tms;${dim_express_courier_full_sql}"

;;

"dim_organ_full")

/opt/module/hive/bin/hive -e "use tms;${dim_organ_full_sql}"

;;

"dim_region_full")

/opt/module/hive/bin/hive -e "use tms;${dim_region_full_sql}"

;;

"dim_shift_full")

/opt/module/hive/bin/hive -e "use tms;${dim_shift_full_sql}"

;;

"dim_truck_driver_full")

/opt/module/hive/bin/hive -e "use tms;${dim_truck_driver_full_sql}"

;;

"dim_truck_full")

/opt/module/hive/bin/hive -e "use tms;${dim_truck_full_sql}"

;;

"dim_user_address_zip")

/opt/module/hive/bin/hive -e "use tms;${dim_user_address_zip_sql}"

;;

"dim_user_zip")

/opt/module/hive/bin/hive -e "use tms;${dim_user_zip_sql}"

;;

*)

echo "Invalid table name..."

;;

esac

(3) Grant the script execute permission

[kingjw@hadoop102 bin]$ chmod +x ods_to_dim_init.sh

(4) Script usage

[kingjw@hadoop102 bin]$ ods_to_dim_init.sh all 2023-01-10

8.3.2、Daily loading script
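The daily script takes the load date as an optional second argument and falls back to the previous day when it is omitted. A minimal sketch of that fallback, assuming GNU date is available; resolve_datestr is a hypothetical helper name used only for illustration and is not part of the script:

```shell
# Hypothetical helper: print the date to load.
# $1 is the optional date argument in yyyy-MM-dd form.
resolve_datestr() {
  if [ -n "$1" ]; then
    # A date was passed explicitly: use it as-is.
    printf '%s\n' "$1"
  else
    # No date passed: default to yesterday (GNU date syntax).
    date -d '-1 day' +%F
  fi
}

resolve_datestr 2023-01-11   # prints 2023-01-11
resolve_datestr              # prints yesterday's date
```

Wrapping the fallback in a function keeps the explicit-date and default-date paths easy to test separately.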

(1) On hadoop102, create ods_to_dim.sh in the /home/kingjw/bin directory

[kingjw@hadoop102 bin]$ vim ods_to_dim.sh

(2) Write the following content

#!/bin/bash

APP=tms

# 1. Check that at least one argument (all or a table name) was passed

if [ $# -lt 1 ]

then

echo "You must pass all or a table name..."

exit 1

fi

# 2. Use the date passed as the second argument if there is one; otherwise load the previous day's data

[ "$2" ] && datestr=$2 || datestr=$(date -d '-1 day' +%F)

# 3. Define the daily load statement for each table

dim_complex_full_sql="

insert overwrite table tms.dim_complex_full

partition (dt = '${datestr}')

select complex_info.id id,

complex_name,

courier_emp_ids,

province_id,

dic_for_prov.name province_name,

city_id,

dic_for_city.name city_name,

district_id,

district_name

from (select id,

complex_name,

province_id,

city_id,

district_id,

district_name

from ods_base_complex_full

where dt = '${datestr}'

and is_deleted = '0') complex_info

join

(select id,

name

from ods_base_region_info_full

where dt = '${datestr}'

and is_deleted = '0') dic_for_prov

on complex_info.province_id = dic_for_prov.id

join

(select id,

name

from ods_base_region_info_full

where dt = '${datestr}'

and is_deleted = '0') dic_for_city

on complex_info.city_id = dic_for_city.id

left join

(select collect_set(cast(courier_emp_id as string)) courier_emp_ids,

complex_id

from ods_express_courier_complex_full

where dt = '${datestr}'

and is_deleted = '0'

group by complex_id

) complex_courier

on complex_info.id = complex_courier.complex_id;

"

dim_organ_full_sql="

insert overwrite table tms.dim_organ_full

partition (dt = '${datestr}')

select organ_info.id,

organ_info.org_name,

org_level,

region_id,

region_info.name region_name,

region_info.dict_code region_code,

org_parent_id,

org_for_parent.org_name org_parent_name

from (select id,

org_name,

org_level,

region_id,

org_parent_id

from ods_base_organ_full

where dt = '${datestr}'

and is_deleted = '0') organ_info

left join (

select id,

name,

dict_code

from ods_base_region_info_full

where dt = '${datestr}'

and is_deleted = '0'

) region_info

on organ_info.region_id = region_info.id

left join (

select id,

org_name

from ods_base_organ_full

where dt = '${datestr}'

and is_deleted = '0'

) org_for_parent

on organ_info.org_parent_id = org_for_parent.id;

"

dim_region_full_sql="

insert overwrite table dim_region_full

partition (dt = '${datestr}')

select id,

parent_id,

name,

dict_code,

short_name

from ods_base_region_info_full

where dt = '${datestr}'

and is_deleted = '0';

"

dim_express_courier_full_sql="

insert overwrite table tms.dim_express_courier_full

partition (dt = '${datestr}')

select express_cor_info.id,

emp_id,

org_id,

org_name,

working_phone,

express_type,

dic_info.name express_type_name

from (select id,

emp_id,

org_id,

md5(working_phone) working_phone,

express_type

from ods_express_courier_full

where dt = '${datestr}'

and is_deleted = '0') express_cor_info

join (

select id,

org_name

from ods_base_organ_full

where dt = '${datestr}'

and is_deleted = '0'

) organ_info

on express_cor_info.org_id = organ_info.id

join (

select id,

name

from ods_base_dic_full

where dt = '${datestr}'

and is_deleted = '0'

) dic_info

on express_type = dic_info.id;

"

dim_shift_full_sql="

insert overwrite table tms.dim_shift_full

partition (dt = '${datestr}')

select shift_info.id,

line_id,

line_info.name line_name,

line_no,

line_level,

org_id,

transport_line_type_id,

dic_info.name transport_line_type_name,

start_org_id,

start_org_name,

end_org_id,

end_org_name,

pair_line_id,

distance,

cost,

estimated_time,

start_time,

driver1_emp_id,

driver2_emp_id,

truck_id,

pair_shift_id

from (select id,

line_id,

start_time,

driver1_emp_id,

driver2_emp_id,

truck_id,

pair_shift_id

from ods_line_base_shift_full

where dt = '${datestr}'

and is_deleted = '0') shift_info

join

(select id,

name,

line_no,

line_level,

org_id,

transport_line_type_id,

start_org_id,

start_org_name,

end_org_id,

end_org_name,

pair_line_id,

distance,

cost,

estimated_time

from ods_line_base_info_full

where dt = '${datestr}'

and is_deleted = '0') line_info

on shift_info.line_id = line_info.id

join (

select id,

name

from ods_base_dic_full

where dt = '${datestr}'

and is_deleted = '0'

) dic_info on line_info.transport_line_type_id = dic_info.id;

"

dim_truck_driver_full_sql="

insert overwrite table tms.dim_truck_driver_full

partition (dt = '${datestr}')

select driver_info.id,

emp_id,

org_id,

organ_info.org_name,

team_id,

team_info.name team_name,

license_type,

init_license_date,

expire_date,

license_no,

is_enabled

from (select id,

emp_id,

org_id,

team_id,

license_type,

init_license_date,

expire_date,

license_no,

is_enabled

from ods_truck_driver_full

where dt = '${datestr}'

and is_deleted = '0') driver_info

join (

select id,

org_name

from ods_base_organ_full

where dt = '${datestr}'

and is_deleted = '0'

) organ_info

on driver_info.org_id = organ_info.id

join (

select id,

name

from ods_truck_team_full

where dt = '${datestr}'

and is_deleted = '0'

) team_info

on driver_info.team_id = team_info.id;

"

dim_truck_full_sql="

insert overwrite table tms.dim_truck_full

partition (dt = '${datestr}')

select truck_info.id,

team_id,

team_info.name team_name,

team_no,

org_id,

org_name,

manager_emp_id,

truck_no,

truck_model_id,

model_name truck_model_name,

model_type truck_model_type,

dic_for_type.name truck_model_type_name,

model_no truck_model_no,

brand truck_brand,

dic_for_brand.name truck_brand_name,

truck_weight,

load_weight,

total_weight,

eev,

boxcar_len,

boxcar_wd,

boxcar_hg,

max_speed,

oil_vol,

device_gps_id,

engine_no,

license_registration_date,

license_last_check_date,

license_expire_date,

is_enabled

from (select id,

team_id,

md5(truck_no) truck_no,

truck_model_id,

device_gps_id,

engine_no,

license_registration_date,

license_last_check_date,

license_expire_date,

is_enabled

from ods_truck_info_full

where dt = '${datestr}'

and is_deleted = '0') truck_info

join

(select id,

name,

team_no,

org_id,

manager_emp_id

from ods_truck_team_full

where dt = '${datestr}'

and is_deleted = '0') team_info

on truck_info.team_id = team_info.id

join

(select id,

model_name,

model_type,

model_no,

brand,

truck_weight,

load_weight,

total_weight,

eev,

boxcar_len,

boxcar_wd,

boxcar_hg,

max_speed,

oil_vol

from ods_truck_model_full

where dt = '${datestr}'

and is_deleted = '0') model_info

on truck_info.truck_model_id = model_info.id

join

(select id,

org_name

from ods_base_organ_full

where dt = '${datestr}'

and is_deleted = '0'

) organ_info

on org_id = organ_info.id

join

(select id,

name

from ods_base_dic_full

where dt = '${datestr}'

and is_deleted = '0') dic_for_type

on model_info.model_type = dic_for_type.id

join

(select id,

name

from ods_base_dic_full

where dt = '${datestr}'

and is_deleted = '0') dic_for_brand

on model_info.brand = dic_for_brand.id;

"

dim_user_zip_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dim_user_zip

partition (dt)

select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

if(rk = 1, end_date, date_add('${datestr}', -1)) end_date,

if(rk = 1, end_date, date_add('${datestr}', -1)) dt

from (select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

end_date,

row_number() over (partition by id order by start_date desc) rk

from (select id,

login_name,

nick_name,

passwd,

real_name,

phone_num,

email,

user_level,

birthday,

gender,

start_date,

end_date

from dim_user_zip

where dt = '9999-12-31'

union

select id,

login_name,

nick_name,

md5(passwd) passwd,

md5(real_name) realname,

md5(if(phone_num regexp

'^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\\\d{8}$',

phone_num, null)) phone_num,

md5(if(email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\\\.[a-zA-Z0-9_-]+)+$', email, null)) email,

user_level,

cast(date_add('1970-01-01', cast(birthday as int)) as string) birthday,

gender,

'${datestr}' start_date,

'9999-12-31' end_date

from (select after.id,

after.login_name,

after.nick_name,

after.passwd,

after.real_name,

after.phone_num,

after.email,

after.user_level,

after.birthday,

after.gender,

row_number() over (partition by after.id order by ts desc) rn

from ods_user_info_inc

where dt = '${datestr}'

and after.is_deleted = '0'

) inc

where rn = 1) full_info) final_info;

"

dim_user_address_zip_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dim_user_address_zip

partition (dt)

select id,

user_id,

phone,

province_id,

city_id,

district_id,

complex_id,

address,

is_default,

start_date,

if(rk = 1, end_date, date_add('${datestr}', -1)) end_date,

if(rk = 1, end_date, date_add('${datestr}', -1)) dt

from (select id,

user_id,

phone,

province_id,

city_id,

district_id,

complex_id,

address,

is_default,

start_date,

end_date,

row_number() over (partition by id order by start_date desc) rk

from (select id,

user_id,

phone,

province_id,

city_id,

district_id,

complex_id,

address,

is_default,

start_date,

end_date

from dim_user_address_zip

where dt = '9999-12-31'

union

select id,

user_id,

phone,

province_id,

city_id,

district_id,

complex_id,

address,

is_default,

'${datestr}' start_date,

'9999-12-31' end_date

from (select after.id,

after.user_id,

after.phone,

after.province_id,

after.city_id,

after.district_id,

after.complex_id,

after.address,

cast(after.is_default as tinyint) is_default,

row_number() over (partition by after.id order by ts desc) rn

from ods_user_address_inc

where dt = '${datestr}'

and after.is_deleted = '0') inc

where rn = 1

) union_info

) with_rk;

"

case $1 in

"all")

/opt/module/hive/bin/hive -e "use tms;${dim_complex_full_sql}${dim_express_courier_full_sql}${dim_organ_full_sql}${dim_region_full_sql}${dim_shift_full_sql}${dim_truck_driver_full_sql}${dim_truck_full_sql}${dim_user_address_zip_sql}${dim_user_zip_sql}"

;;

"dim_complex_full")

/opt/module/hive/bin/hive -e "use tms;${dim_complex_full_sql}"

;;

"dim_express_courier_full")

/opt/module/hive/bin/hive -e "use tms;${dim_express_courier_full_sql}"

;;

"dim_organ_full")

/opt/module/hive/bin/hive -e "use tms;${dim_organ_full_sql}"

;;

"dim_region_full")

/opt/module/hive/bin/hive -e "use tms;${dim_region_full_sql}"

;;

"dim_shift_full")

/opt/module/hive/bin/hive -e "use tms;${dim_shift_full_sql}"

;;

"dim_truck_driver_full")

/opt/module/hive/bin/hive -e "use tms;${dim_truck_driver_full_sql}"

;;

"dim_truck_full")

/opt/module/hive/bin/hive -e "use tms;${dim_truck_full_sql}"

;;

"dim_user_address_zip")

/opt/module/hive/bin/hive -e "use tms;${dim_user_address_zip_sql}"

;;

"dim_user_zip")

/opt/module/hive/bin/hive -e "use tms;${dim_user_zip_sql}"

;;

*)

echo "Invalid table name..."

;;

esac

(3) Grant the script execute permission

[kingjw@hadoop102 bin]$ chmod +x ods_to_dim.sh

(4) Script usage

[kingjw@hadoop102 bin]$ ods_to_dim.sh all 2023-01-11

9、Data warehouse development: the DWD layer

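Key design points of the DWD layer:

(1) The DWD layer is designed according to dimensional modeling theory; it stores the fact tables of the dimensional model.

(2) DWD data is stored in the ORC columnar format with snappy compression.

(3) DWD tables are named dwd_<data domain>_<table name>_<single-partition incremental/full marker>, where the marker is one of inc/full/acc.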
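The naming rule in point (3) can be checked mechanically. The following is a minimal sketch, not part of the original project: is_valid_dwd_name is a hypothetical helper, and the assumption that domain and table names consist only of lowercase letters, digits, and underscores is mine.

```shell
# Hypothetical helper: succeed (exit status 0) when the table name
# follows dwd_<domain>_<table name>_<inc|full|acc>.
is_valid_dwd_name() {
  # domain: lowercase letters/digits; table name: may contain underscores;
  # suffix: one of the three partition markers.
  printf '%s\n' "$1" | grep -Eq '^dwd_[a-z0-9]+_[a-z0-9_]+_(inc|full|acc)$'
}

is_valid_dwd_name dwd_trade_order_detail_inc && echo "valid"
is_valid_dwd_name dwd_trade_order_detail || echo "invalid: missing inc/full/acc suffix"
```

A check like this could run before a table is created, so that names without a partition marker are caught early.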

9.1、Trade domain: order placement transaction fact table

9.1.1、Table creation statement

drop table if exists dwd_trade_order_detail_inc;

create external table dwd_trade_order_detail_inc(

`id` bigint comment 'Waybill detail ID',

`order_id` string COMMENT 'Waybill ID',

`cargo_type` string COMMENT 'Cargo type ID',

`cargo_type_name` string COMMENT 'Cargo type name',

`volumn_length` bigint COMMENT 'Length (cm)',

`volumn_width` bigint COMMENT 'Width (cm)',

`volumn_height` bigint COMMENT 'Height (cm)',

`weight` decimal(16,2) COMMENT 'Weight (kg)',

`order_time` string COMMENT 'Order time',

`order_no` string COMMENT 'Waybill number',

`status` string COMMENT 'Waybill status',

`status_name` string COMMENT 'Waybill status name',

`collect_type` string COMMENT 'Pickup type: 1 = sender drops off at an outlet, 2 = door-to-door pickup',

`collect_type_name` string COMMENT 'Pickup type name',

`user_id` bigint COMMENT 'User ID',

`receiver_complex_id` bigint COMMENT 'Receiver residential complex ID',

`receiver_province_id` string COMMENT 'Receiver province ID',

`receiver_city_id` string COMMENT 'Receiver city ID',

`receiver_district_id` string COMMENT 'Receiver district ID',

`receiver_name` string COMMENT 'Receiver name',

`sender_complex_id` bigint COMMENT 'Sender residential complex ID',

`sender_province_id` string COMMENT 'Sender province ID',

`sender_city_id` string COMMENT 'Sender city ID',

`sender_district_id` string COMMENT 'Sender district ID',

`sender_name` string COMMENT 'Sender name',

`cargo_num` bigint COMMENT 'Number of cargo items',

`amount` decimal(16,2) COMMENT 'Amount',

`estimate_arrive_time` string COMMENT 'Estimated arrival time',

`distance` decimal(16,2) COMMENT 'Distance, in kilometers',

`ts` bigint COMMENT 'Timestamp'

) comment 'Trade domain order detail transaction fact table'

partitioned by (`dt` string comment 'Statistics date')

stored as orc

location '/warehouse/tms/dwd/dwd_trade_order_detail_inc'

tblproperties('orc.compress' = 'snappy');

9.1.2、Partition planning
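Judging from the loading statements in 9.1.3, each row seems to land in the partition that matches the date part of its order_time: the first-day load derives dt dynamically with date_format(order_time, 'yyyy-MM-dd'), while the daily load writes that day's new rows into a single static partition. A minimal shell sketch of the derivation, where partition_of is a hypothetical helper used only for illustration:

```shell
# Hypothetical helper: derive the dt partition value from an order_time
# string formatted as 'yyyy-MM-dd HH:mm:ss' by dropping the time part.
partition_of() {
  # ${1%% *} removes everything from the first space onward.
  printf '%s\n' "${1%% *}"
}

partition_of '2023-01-08 12:30:00'   # prints 2023-01-08
partition_of '2023-01-10 23:59:59'   # prints 2023-01-10
```

Under this reading, historical orders loaded on the first day are spread across many dt partitions, one per order date.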

9.1.3、Data loading

1) Data flow

2) First-day load

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trade_order_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volumn_length,

volumn_width,

volumn_height,

weight,

order_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(order_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volumn_length,

after.volumn_width,

after.volumn_height,

after.weight,

concat(substr(after.create_time, 1, 10), ' ', substr(after.create_time, 12, 8)) order_time,

ts

from ods_order_cargo_inc

where dt = '2023-01-10'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance

from ods_order_info_inc

where dt = '2023-01-10'

and after.is_deleted = '0') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-10'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-10'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-10'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string);

3) Daily load

insert overwrite table tms.dwd_trade_order_detail_inc

partition (dt = '2023-01-11')

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volumn_length,

volumn_width,

volumn_height,

weight,

order_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts

from (select after.id,

after.order_id,

after.cargo_type,

after.volumn_length,

after.volumn_width,

after.volumn_height,

after.weight,

date_format(

from_utc_timestamp(

to_unix_timestamp(concat(substr(after.create_time, 1, 10), ' ',

substr(after.create_time, 12, 8))) * 1000,

'GMT+8'), 'yyyy-MM-dd HH:mm:ss') order_time,

ts

from ods_order_cargo_inc

where dt = '2023-01-11'

and op = 'c') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance

from ods_order_info_inc

where dt = '2023-01-11'

and op = 'c') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-11'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-11'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '2023-01-11'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string);

9.2、Data loading scripts

9.2.1、First-day loading script

1) On hadoop102, create ods_to_dwd_init.sh in the /home/kingjw/bin directory

[kingjw@hadoop102 bin]$ vim ods_to_dwd_init.sh

2) Write the following content

#!/bin/bash

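# 1. Check that both the table name (or all) and the go-live date were passed

if [ $# -lt 2 ]

then

echo "You must pass all or a table name, plus the go-live date..."

exit 1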

fi

dwd_trade_order_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trade_order_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

order_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(order_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

concat(substr(after.create_time, 1, 10), ' ', substr(after.create_time, 12, 8)) order_time,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string);

"

dwd_trade_pay_suc_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trade_pay_suc_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

payment_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

payment_type,

dic_for_payment_type.name payment_type_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(payment_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.payment_type,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance,

concat(substr(after.update_time, 1, 10), ' ', substr(after.update_time, 12, 8)) payment_time

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0'

and after.status <> '60010'

and after.status <> '60999') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_payment_type

on info.payment_type = cast(dic_for_payment_type.id as string);

"

dwd_trade_order_cancel_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trade_order_cancel_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

cancel_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(cancel_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance,

concat(substr(after.update_time, 1, 10), ' ', substr(after.update_time, 12, 8)) cancel_time

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0'

and after.status = '60999') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string);

"

dwd_trans_receive_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trans_receive_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

receive_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

payment_type,

dic_for_payment_type.name payment_type_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(receive_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.payment_type,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance,

concat(substr(after.update_time, 1, 10), ' ', substr(after.update_time, 12, 8)) receive_time

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0'

and after.status <> '60010'

and after.status <> '60020'

and after.status <> '60999') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_payment_type

on info.payment_type = cast(dic_for_payment_type.id as string);

"

dwd_trans_dispatch_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trans_dispatch_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

dispatch_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

payment_type,

dic_for_payment_type.name payment_type_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(dispatch_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.payment_type,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance,

concat(substr(after.update_time, 1, 10), ' ', substr(after.update_time, 12, 8)) dispatch_time

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0'

and after.status <> '60010'

and after.status <> '60020'

and after.status <> '60030'

and after.status <> '60040'

and after.status <> '60999') info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_payment_type

on info.payment_type = cast(dic_for_payment_type.id as string);

"

dwd_trans_bound_finish_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trans_bound_finish_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

bound_finish_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

payment_type,

dic_for_payment_type.name payment_type_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(bound_finish_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,

after.sender_city_id,

after.sender_district_id,

concat(substr(after.sender_name, 1, 1), '*') sender_name,

after.payment_type,

after.cargo_num,

after.amount,

date_format(from_utc_timestamp(

cast(after.estimate_arrive_time as bigint), 'UTC'),

'yyyy-MM-dd HH:mm:ss') estimate_arrive_time,

after.distance,

concat(substr(after.update_time, 1, 10), ' ', substr(after.update_time, 12, 8)) bound_finish_time

from ods_order_info_inc

where dt = '$2'

and after.is_deleted = '0'

and after.status not in ('60010', '60020', '60030', '60040', '60050', '60999')) info

on cargo.order_id = info.id

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_cargo_type

on cargo.cargo_type = cast(dic_for_cargo_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_status

on info.status = cast(dic_for_status.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_collect_type

on info.collect_type = cast(dic_for_collect_type.id as string)

left join

(select id,

name

from ods_base_dic_full

where dt = '$2'

and is_deleted = '0') dic_for_payment_type

on info.payment_type = cast(dic_for_payment_type.id as string);

"

dwd_trans_deliver_suc_detail_inc_sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table tms.dwd_trans_deliver_suc_detail_inc

partition (dt)

select cargo.id,

order_id,

cargo_type,

dic_for_cargo_type.name cargo_type_name,

volume_length,

volume_width,

volume_height,

weight,

deliver_suc_time,

order_no,

status,

dic_for_status.name status_name,

collect_type,

dic_for_collect_type.name collect_type_name,

user_id,

receiver_complex_id,

receiver_province_id,

receiver_city_id,

receiver_district_id,

receiver_name,

sender_complex_id,

sender_province_id,

sender_city_id,

sender_district_id,

sender_name,

payment_type,

dic_for_payment_type.name payment_type_name,

cargo_num,

amount,

estimate_arrive_time,

distance,

ts,

date_format(deliver_suc_time, 'yyyy-MM-dd') dt

from (select after.id,

after.order_id,

after.cargo_type,

after.volume_length,

after.volume_width,

after.volume_height,

after.weight,

ts

from ods_order_cargo_inc

where dt = '$2'

and after.is_deleted = '0') cargo

join

(select after.id,

after.order_no,

after.status,

after.collect_type,

after.user_id,

after.receiver_complex_id,

after.receiver_province_id,

after.receiver_city_id,

after.receiver_district_id,

concat(substr(after.receiver_name, 1, 1), '*') receiver_name,

after.sender_complex_id,

after.sender_province_id,