Flink Reduce operator: Name-Position Rows turn into Position-Based Rows after restarting from a savepoint/checkpoint, and how to fix it

1. Background

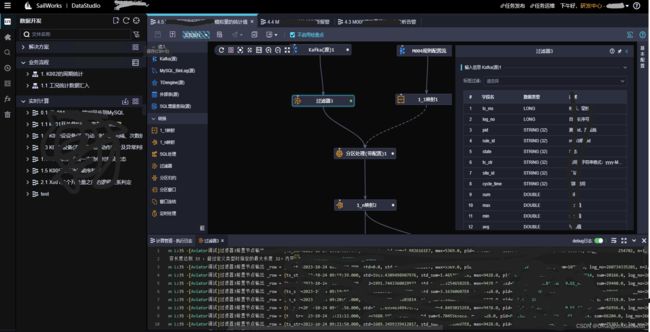

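The XSailboat big data platform provides real-time computing pipeline development based on Apache Flink. Within DataStudio, this feature includes a partitioned reduce node (keyBy + reduce).

When a job is redeployed and started from a savepoint, the following exception is thrown:

First, note that in the XSailboat real-time computing framework (SailFlink), all data flows as Rows in Name-Position mode. Analysis of the exception and the code shows the cause:

A Row that used to be in Name-Position mode now arrives in Position-Based mode. The exception always occurs when the job restarts from a checkpoint or savepoint, so the prime suspect is that the field names are not stored when the checkpoint or savepoint is written, and the values are stored as a plain array instead. Storing an array obviously takes less space than storing {name:value} pairs.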

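2. Analysis

A Row stores its data internally in one of three modes:

- Name-Value mode: key-value storage with no positional index. Backing field:

private final @Nullable Map<String, Object> fieldByName;

- Position-Based mode: no field names; the data is stored as an array. Backing field:

private final @Nullable Object[] fieldByPosition;

- Name-Position mode: has field names, and the data is also stored as an array. Backing fields: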

private final @Nullable Object[] fieldByPosition;

private final @Nullable LinkedHashMap<String, Integer> positionByName;
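The three modes can be told apart purely by which of these fields are populated. The following is a minimal sketch of that idea, not Flink's actual `Row` class: `RowModeSketch`, `mode()` and the error messages are hypothetical names chosen for illustration, and only the three field names come from the text above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical stand-in for Flink's Row that models only its three storage fields. */
final class RowModeSketch {
    final Map<String, Object> fieldByName;               // set in Name-Value mode only
    final Object[] fieldByPosition;                      // set in Position-Based and Name-Position modes
    final LinkedHashMap<String, Integer> positionByName; // set in Name-Position mode only

    RowModeSketch(Map<String, Object> fieldByName,
                  Object[] fieldByPosition,
                  LinkedHashMap<String, Integer> positionByName) {
        this.fieldByName = fieldByName;
        this.fieldByPosition = fieldByPosition;
        this.positionByName = positionByName;
    }

    /** Reports which of the three modes the populated fields correspond to. */
    String mode() {
        if (fieldByName != null) {
            return "name-value";
        }
        return positionByName != null ? "name-position" : "position-based";
    }

    /** Looks a value up by name, which is only possible when names are stored. */
    Object getField(String name) {
        if (fieldByName != null) {
            return fieldByName.get(name);
        }
        if (positionByName == null) {
            // Assumption: the sketch rejects the lookup, as a row without names must
            throw new IllegalArgumentException("no field names in position-based mode");
        }
        Integer pos = positionByName.get(name);
        if (pos == null) {
            throw new IllegalArgumentException("unknown field: " + name);
        }
        return fieldByPosition[pos];
    }
}
```

A row built with an array plus a name map answers lookups by name, while the same array without the map can only be read by index. That difference is what surfaces as the exception after a restart.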

By default, Rows are serialized and deserialized by the class:

org.apache.flink.api.java.typeutils.runtime.RowSerializer

// omitted...

@Override

public void serialize(Row record, DataOutputView target) throws IOException {

// Passing false here means a Row in name-position mode does not return its field names

final Set<String> fieldNames = record.getFieldNames(false);

if (fieldNames == null) {

serializePositionBased(record, target); // so the data is written in this form

} else {

serializeNameBased(record, fieldNames, target);

}

}

// omitted...

@Override

public Row deserialize(DataInputView source) throws IOException {

final int length = fieldSerializers.length;

// read bitmask

readIntoMask(source, mask);

// read row kind

final RowKind kind;

if (!supportsRowKind) {

kind = RowKind.INSERT;

} else {

kind = readKindFromMask(mask);

}

// deserialize fields

final Object[] fieldByPosition = new Object[length];

for (int fieldPos = 0; fieldPos < length; fieldPos++) {

if (!mask[rowKindOffset + fieldPos]) {

fieldByPosition[fieldPos] = fieldSerializers[fieldPos].deserialize(source);

}

}

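// Even though the field names were not written when the savepoint was created,

// as long as the RowSerializer's positionByName is not null, the resulting Row is in Name-Position mode.

// So the analysis focuses on when positionByName is null.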

return RowUtils.createRowWithNamedPositions(kind, fieldByPosition, positionByName);

}

Class: org.apache.flink.types.Row

/**

* Returns the set of field names if this row operates in name-based field mode, otherwise null.

*

* This method is a helper method for serializers and converters but can also be useful for

* other row transformations.

*

* @param includeNamedPositions whether or not to include named positions when this row operates

* in a hybrid field mode

*/

public @Nullable Set<String> getFieldNames(boolean includeNamedPositions) {

if (fieldByName != null) {

return fieldByName.keySet();

}

// If includeNamedPositions is false, a Row in Name-Position mode does not return its field names

if (includeNamedPositions && positionByName != null) {

return positionByName.keySet();

}

return null;

}

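As the code above shows, a Name-Position Row is serialized in Position-Based form. On deserialization, however, as long as the RowSerializer's positionByName is not null, the resulting Row is in Name-Position mode. The analysis therefore focuses on when positionByName is null.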
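To make the asymmetry concrete, here is a minimal sketch of the round trip in plain Java with no Flink dependency. `RoundTripSketch` and `SimpleRow` are hypothetical names, and the "serialized form" is modeled as a bare `Object[]` rather than real bytes. The one point it illustrates is that the names of a deserialized row come from the serializer, not from the stored data.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;

/** Hypothetical model of the serialize/deserialize asymmetry described above. */
final class RoundTripSketch {

    /** Name-Position when positionByName is set, Position-Based when it is null. */
    static final class SimpleRow {
        final Object[] fieldByPosition;
        final LinkedHashMap<String, Integer> positionByName;

        SimpleRow(Object[] fieldByPosition, LinkedHashMap<String, Integer> positionByName) {
            this.fieldByPosition = fieldByPosition;
            this.positionByName = positionByName;
        }

        boolean hasNames() {
            return positionByName != null;
        }
    }

    /** Models the position-based write path: only the values leave, the names are dropped. */
    static Object[] serialize(SimpleRow row) {
        return Arrays.copyOf(row.fieldByPosition, row.fieldByPosition.length);
    }

    /** Models the read path: the names are whatever the serializer instance carries. */
    static SimpleRow deserialize(Object[] data,
                                 LinkedHashMap<String, Integer> serializerPositionByName) {
        return new SimpleRow(data, serializerPositionByName);
    }
}
```

Deserializing the same data with a serializer that carries the name map yields a row with names, while a serializer holding `null` yields a purely positional row. The stored data is identical in both cases, so the fix has to happen on the serializer side.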

Tracing through the code led to the following call site:

Class: org.apache.flink.api.java.typeutils.runtime.RowSerializerSnapshot

@Override

protected RowSerializer createOuterSerializerWithNestedSerializers(

TypeSerializer<?>[] nestedSerializers) {

// The second argument, null, is positionByName, so Rows restored from checkpoint data are in Position-Based mode

return new RowSerializer(nestedSerializers, null, supportsRowKind);

}

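3. Solution

SailFlink had already replaced RowTypeInfo with a custom ERowTypeInfo so that command rows could flow through the data stream, which makes that class a convenient place for the extension:

Class: com.cimstech.sailboat.flink.run.func.ERowTypeInfo

// omitted...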

@Override

public TypeSerializer<Row> createSerializer(ExecutionConfig aConfig)

{

// Build the per-field serializers once; both serializer variants below need them

int len = getArity();

TypeSerializer<?>[] fieldSerializers = new TypeSerializer[len];

for (int i = 0; i < len; i++)

{

fieldSerializers[i] = types[i].createSerializer(aConfig);

}

// Map each field name to its position so that deserialized Rows keep their names

final LinkedHashMap<String, Integer> positionByName = new LinkedHashMap<>();

for (int i = 0; i < fieldNames.length; i++)

{

positionByName.put(fieldNames[i], i);

}

Map<String, String> map = aConfig.getGlobalJobParameters().toMap();

if ("true".equals(map.get(ICmdRowConsts.GK_SupportCmdRow)))

{

return new CmdSupportRowSerializer(fieldSerializers, positionByName);

}

return new ERowSerializer(fieldSerializers, positionByName);

}

// omitted...

Class: com.cimstech.sailboat.flink.run.func.ERowSerializer

@Override

public TypeSerializerSnapshot<Row> snapshotConfiguration()

{

return new ERowSerializerSnapshot(this);

}

/** A {@link TypeSerializerSnapshot} for RowSerializer. */

public static final class ERowSerializerSnapshot

extends CompositeTypeSerializerSnapshot<Row, ERowSerializer>

{

/**

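* Flink's RowSerializer does not retain the positionByName information when restoring from a checkpoint,

* so restored Rows end up in Position-Based mode. This snapshot is modified to persist that information.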

*/

// private static final int VERSION = 4;

private static final int Yyl_VERSION = 5 ;

private static final int FIRST_VERSION_WITH_ROW_KIND = 3;

private boolean supportsRowKind = true;

LinkedHashMap<String , Integer> mNamePoistionMap ;

public ERowSerializerSnapshot()

{

super(ERowSerializer.class);

}

ERowSerializerSnapshot(ERowSerializer serializerInstance)

{

super(serializerInstance);

this.supportsRowKind = serializerInstance.supportsRowKind;

mNamePoistionMap = serializerInstance.positionByName ;

}

@Override

protected int getCurrentOuterSnapshotVersion()

{

return Yyl_VERSION;

}

@Override

protected void readOuterSnapshot(

int readOuterSnapshotVersion,

DataInputView aIn,

ClassLoader userCodeClassLoader)

throws IOException

{

if (readOuterSnapshotVersion < FIRST_VERSION_WITH_ROW_KIND)

{

supportsRowKind = false;

}

else if (readOuterSnapshotVersion == FIRST_VERSION_WITH_ROW_KIND)

{

supportsRowKind = true;

}

else

{

supportsRowKind = aIn.readBoolean();

}

if(readOuterSnapshotVersion == Yyl_VERSION)

{

final int size = aIn.readInt() ;

if(size >=0)

{

LinkedHashMap<String, Integer> namePositionMap = CS.linkedHashMap() ;

for(int i=0 ; i<size ; i++)

{

namePositionMap.put(aIn.readUTF() , aIn.readInt()) ;

}

mNamePoistionMap = namePositionMap ;

}

}

}

@Override

protected void writeOuterSnapshot(DataOutputView out) throws IOException

{

out.writeBoolean(supportsRowKind);

if(mNamePoistionMap == null)

out.writeInt(-1) ;

else

{

out.writeInt(mNamePoistionMap.size()) ;

for(java.util.Map.Entry<String , Integer> entry : mNamePoistionMap.entrySet())

{

out.writeUTF(entry.getKey()) ;

out.writeInt(entry.getValue()) ;

}

}

}

// omitted...

@Override

protected ERowSerializer createOuterSerializerWithNestedSerializers(

TypeSerializer<?>[] nestedSerializers)

{

return new ERowSerializer(nestedSerializers, mNamePoistionMap , supportsRowKind);

}

}
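The layout written by writeOuterSnapshot and read back by readOuterSnapshot can be checked in isolation. The sketch below reproduces that layout with the JDK's `DataOutputStream` and `DataInputStream` in place of Flink's `DataOutputView` and `DataInputView`; this substitution is an assumption made to keep the example dependency-free, and `NamePositionCodec` is a hypothetical name. A size of -1 stands for a null map, as in the code above.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical stand-alone version of the outer snapshot layout used above. */
final class NamePositionCodec {

    /** Writes supportsRowKind, then the entry count (-1 for null), then each name and position. */
    static byte[] write(boolean supportsRowKind, LinkedHashMap<String, Integer> positionByName) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeBoolean(supportsRowKind);
            if (positionByName == null) {
                out.writeInt(-1);
            } else {
                out.writeInt(positionByName.size());
                for (Map.Entry<String, Integer> entry : positionByName.entrySet()) {
                    out.writeUTF(entry.getKey());
                    out.writeInt(entry.getValue());
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Reads the layout back; returns null when the writer stored -1. */
    static LinkedHashMap<String, Integer> read(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            in.readBoolean(); // supportsRowKind, not needed for this sketch
            int size = in.readInt();
            if (size < 0) {
                return null;
            }
            LinkedHashMap<String, Integer> result = new LinkedHashMap<>();
            for (int i = 0; i < size; i++) {
                String name = in.readUTF();
                int position = in.readInt();
                result.put(name, position);
            }
            return result;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Because a `LinkedHashMap` iterates in insertion order, the names come back in the same order they were written, so the restored serializer sees the same field layout as the original one.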