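Jenkins Pipeline Containerization + Harbor Private Registry

Migrating the Jenkins build environment into k8s

Types of automated build projects in Jenkins

Jenkins offers many types of automated build projects; the three most commonly used are:

Freestyle project (FreeStyle Project)

Maven project (Maven Project)

Pipeline project (Pipeline Project)

Note: every type can produce the same build process and result; they differ in how they are operated and how flexible they are, so in practice choose according to your needs and habits. (PS: I personally recommend the Pipeline type because it is extremely flexible.)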

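Harbor

Official site: https://goharbor.io/

Deploying a private image registry (Harbor)

As an enterprise-grade private registry server, Harbor offers better performance and security, making it more efficient to transfer images between build and runtime environments. Harbor supports replicating images across multiple registry nodes, and all images are kept in the private registry, so data and intellectual property stay under control inside the company network.

In addition, Harbor provides advanced security features such as user management, access control, and activity auditing.

Configuring HTTPS

By default, Harbor does not ship with a certificate, and it can be deployed without security so that you connect to it over HTTP. However, HTTP is acceptable only in air-gapped test or development environments that have no connection to the external Internet. Using HTTP in an environment that is not air-gapped exposes you to man-in-the-middle attacks. To configure HTTPS, you must create SSL certificates.

You can use certificates signed by a trusted third-party CA, or self-signed certificates. This section describes how to use OpenSSL to create a CA, and how to use that CA to sign a server certificate and a client certificate.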

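I. Generating the certificates

In this walkthrough the Harbor private registry is installed on k8m-m-01; a separate test machine works as well.

- In production, you should obtain certificates from a CA. In a test or development environment, you can generate your own CA. To generate a CA certificate, run the following commands.

1. Generate the CA private key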

mkdir /opt/cert

cd /opt/cert

openssl genrsa -out ca.key 4096

2. Generate the CA certificate

openssl req -x509 -new -nodes -sha512 -days 3650 \
-subj "/C=CN/ST=ShangHai/L=ShangHai/O=Oldboy/OU=Linux/CN=192.168.15.130" \
-key ca.key \
-out ca.crt

3. Generate the server private key

openssl genrsa -out 192.168.15.130.key 4096

4. Generate the certificate signing request (CSR)

openssl req -sha512 -new \
-subj "/C=CN/ST=ShangHai/L=ShangHai/O=Oldboy/OU=Linux/CN=192.168.15.130" \
-key 192.168.15.130.key \
-out 192.168.15.130.csr

5. Generate an x509 v3 extension file

- Domain-name version (for internal corporate domain names; not used here)

cat > v3.ext <<-EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

DNS.1=yourdomain.com

DNS.2=yourdomain

DNS.3=hostname

EOF

- IP version (used here)

cat > v3.ext <<-EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = IP:192.168.15.130

EOF

6. Use the v3.ext file to sign the server certificate

openssl x509 -req -sha512 -days 3650 \
-extfile v3.ext \
-CA ca.crt -CAkey ca.key -CAcreateserial \
-in 192.168.15.130.csr \
-out 192.168.15.130.crt
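Before handing the files to Harbor and Docker, it can help to confirm that the new certificate chains to the CA and that the registry IP is in its subjectAltName: Docker's TLS client (written in Go) does not accept an IP address that appears only in the CN. The following is a minimal sketch assuming the file names used above; `check_server_cert` is a hypothetical helper name, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a server certificate signed by our own CA.
# Usage: check_server_cert <ca.crt> <server.crt> <ip>
check_server_cert() {
  local ca="$1" cert="$2" ip="$3"

  # 1) The certificate must chain to the CA that Docker will trust
  if ! openssl verify -CAfile "$ca" "$cert" >/dev/null 2>&1; then
    echo "chain: FAIL"
    return 1
  fi

  # 2) The IP must appear as an "IP Address:" entry in the subjectAltName,
  #    matched exactly (not as a prefix of another address)
  if ! openssl x509 -in "$cert" -noout -text \
      | grep -oE 'IP Address:[0-9A-Fa-f.:]+' \
      | grep -qxF "IP Address:${ip}"; then
    echo "SAN: missing IP ${ip}"
    return 1
  fi

  echo "chain: OK, SAN: OK"
}
```

Usage, from /opt/cert: `check_server_cert ca.crt 192.168.15.130.crt 192.168.15.130`.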

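7. Install Docker

[root@test ~]# vim docker.aliyun.sh

# Step 1: install required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 &&\

# Step 2: add the Docker CE repository (Aliyun mirror)

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo &&\

# Step 3: point the repository's download URLs at the Aliyun mirror

sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo &&\

# Step 4: refresh the cache and install Docker CE

sudo yum makecache fast &&\

sudo yum -y install docker-ce &&\

# Step 5: start Docker and enable it at boot

sudo systemctl enable --now docker.service &&\

# Step 6: configure a registry mirror to speed up image pulls
# (k7eoap03.mirror.aliyuncs.com is the author's own Aliyun accelerator address; replace it with yours)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://k7eoap03.mirror.aliyuncs.com"]

}

EOF

# Step 7: restart Docker so daemon.json takes effect

sudo systemctl daemon-reload && sudo systemctl restart docker

# After saving, run the script with: bash docker.aliyun.sh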

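II. Issuing the certificates

Provide the certificates to Harbor and Docker

After generating ca.crt, 192.168.15.130.crt, and 192.168.15.130.key, you must provide them to Harbor and Docker, and reconfigure Harbor to use them.

1. Distribute the certificates

- Copy the server certificate and key into the certificates folder on the Harbor host

cd /opt/cert/

# Docker treats .crt files as CA certificates and .cert files as client certificates, so convert the server certificate to .cert

openssl x509 -inform PEM -in 192.168.15.130.crt -out 192.168.15.130.cert

mkdir -pv /etc/docker/certs.d/192.168.15.130/

cp 192.168.15.130.{cert,key} ca.crt /etc/docker/certs.d/192.168.15.130/

# If Harbor's nginx does not listen on the default HTTPS port 443, append the port to the directory name:

/etc/docker/certs.d/192.168.15.130:port

# Copy the certificate and key for Harbor itself (this step is performed in the current test)

mkdir -p /data/cert

cp 192.168.15.130.crt /data/cert

cp 192.168.15.130.key /data/cert

# Restart Docker to load the certificates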

systemctl restart docker
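The certs.d directory name depends on the port Harbor listens on, which is easy to get wrong when the port changes. A small sketch of that rule, assuming Docker's convention of `/etc/docker/certs.d/<host>/` for port 443 and `/etc/docker/certs.d/<host>:<port>/` otherwise; `docker_certs_dir` and the `DOCKER_CERTS_BASE` override are hypothetical names introduced only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the directory Docker searches for a registry's certificates.
# Default HTTPS port 443 -> /etc/docker/certs.d/<host>
# Any other port         -> /etc/docker/certs.d/<host>:<port>
docker_certs_dir() {
  local host="$1" port="${2:-443}"
  # DOCKER_CERTS_BASE is an assumed override, handy for testing outside /etc
  local base="${DOCKER_CERTS_BASE:-/etc/docker/certs.d}"
  if [ "$port" = "443" ]; then
    printf '%s/%s\n' "$base" "$host"
  else
    printf '%s/%s:%s\n' "$base" "$host" "$port"
  fi
}

# Example: install the client-side files for a registry on port 8089
# dir=$(docker_certs_dir 192.168.15.130 8089)
# mkdir -p "$dir" && cp 192.168.15.130.cert 192.168.15.130.key ca.crt "$dir"/
```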

2. Install Harbor

Project page: https://github.com/goharbor/harbor

# Go to the download directory

cd /opt

# Download the offline installer

wget https://github.com/goharbor/harbor/releases/download/v2.3.1/harbor-offline-installer-v2.3.1.tgz

# Extract

tar xf harbor-offline-installer-v2.3.1.tgz

# Enter the harbor directory

cd harbor/

# Download docker-compose

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

# If the download above is too slow, print the URL above and download it in a browser instead

# Install bash completion for docker-compose

curl -L https://raw.githubusercontent.com/docker/compose/1.29.2/contrib/completion/bash/docker-compose > /etc/bash_completion.d/docker-compose

# Make it executable

chmod +x /usr/local/bin/docker-compose

# Verify docker-compose

docker-compose -v
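When the direct download is slow, the URL to paste into a browser can be printed first. This sketch only reproduces the URL pattern already used in the curl command above (asset name `docker-compose-<uname -s>-<uname -m>`); `compose_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the docker-compose release URL used by the curl command above.
# The asset name is docker-compose-<kernel name>-<machine>, i.e. `uname -s` and `uname -m`
# (for example docker-compose-Linux-x86_64).
compose_url() {
  local version="$1" os="${2:-$(uname -s)}" arch="${3:-$(uname -m)}"
  printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
    "$version" "$os" "$arch"
}

# Print the URL for this machine so it can be opened in a browser
compose_url 1.29.2
```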

# Copy the template to harbor.yml and change the following three settings

cp harbor.yml.tmpl harbor.yml

vim harbor.yml

hostname: 192.168.15.130

certificate: /data/cert/192.168.15.130.crt

private_key: /data/cert/192.168.15.130.key
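Instead of editing harbor.yml in vim, the three settings can be changed non-interactively, which helps when the install is scripted. A minimal sketch, assuming GNU sed (as on CentOS) and the layout of harbor.yml.tmpl, where `certificate` and `private_key` are indented under `https:` and each key occurs once uncommented; `set_harbor_tls` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set hostname, certificate and private_key in harbor.yml.
# Assumes GNU sed (-i without a suffix) and one uncommented line per key;
# commented lines ("# certificate: ...") are left alone because only
# leading whitespace is allowed before the key.
set_harbor_tls() {
  local file="$1" host="$2" cert="$3" key="$4"
  sed -i \
    -e "s|^hostname:.*|hostname: ${host}|" \
    -e "s|^\([[:space:]]*\)certificate:.*|\1certificate: ${cert}|" \
    -e "s|^\([[:space:]]*\)private_key:.*|\1private_key: ${key}|" \
    "$file"
}

# Example, inside /opt/harbor after copying harbor.yml.tmpl to harbor.yml:
# set_harbor_tls harbor.yml 192.168.15.130 /data/cert/192.168.15.130.crt /data/cert/192.168.15.130.key
```

The captured indentation (`\1`) is written back so the YAML nesting under `https:` is preserved.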

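# Load the Harbor images bundled in the offline package, then generate the configuration files with ./prepare

docker load < harbor.v2.3.1.tar.gz

./prepare

# Stop and remove any existing Harbor containers

docker-compose down

# Install and start Harbor

./install.sh

# Wait patiently...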

Creating network "harbor_harbor" with the default driver

Creating harbor-log ... done

Creating harbor-db ... done

Creating harbor-portal ... done

Creating registry ... done

Creating redis ... done

Creating registryctl ... done

Creating harbor-core ... done

Creating nginx ... done

Creating harbor-jobservice ... done

✔ ----Harbor has been installed and started successfully.----
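Even after install.sh reports success, the containers can take a while to become ready. A generic retry loop is one way to wait instead of guessing; this is a sketch, `retry` is a hypothetical helper, and the health URL in the example is an assumption (Harbor 2.x serving `/api/v2.0/health`), so verify it against your Harbor version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command until it succeeds or the attempts run out.
# Usage: retry <attempts> <sleep-seconds> <command> [args...]
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    echo "attempt ${i}/${attempts} failed: $*" >&2
    # do not sleep after the last failed attempt
    if (( i < attempts )); then sleep "$delay"; fi
  done
  return 1
}

# Example (assumed endpoint): wait up to ~5 minutes for Harbor to answer
# retry 30 10 curl -fsk https://192.168.15.130/api/v2.0/health
```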

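# If it fails, check whether a port is already in use (usually port 80, held by nginx) and stop the process using it

# Alternatively, change the port, e.g. to 8089; both settings must be changed, then run the scripts again

vim harbor.yml

hostname: 192.168.15.130:8089

port: 8089

# Run prepare first, otherwise the new configuration does not take effect

./prepare # regenerate the Harbor configuration

./install.sh # install Harbor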
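To check for the port conflict mentioned above without extra tools, bash's built-in `/dev/tcp` redirection can test whether something already accepts connections on a local port. A rough sketch: it only detects listeners reachable on 127.0.0.1, and `port_in_use` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if something accepts TCP connections on 127.0.0.1:<port>.
# Uses bash's /dev/tcp pseudo-device; the subshell exits non-zero if the connect fails.
port_in_use() {
  local port="$1"
  (exec 3<>"/dev/tcp/127.0.0.1/${port}") 2>/dev/null
}

# Check the ports Harbor's nginx wants by default
for p in 80 443; do
  if port_in_use "$p"; then
    echo "port $p is in use"
  else
    echo "port $p is free"
  fi
done
```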

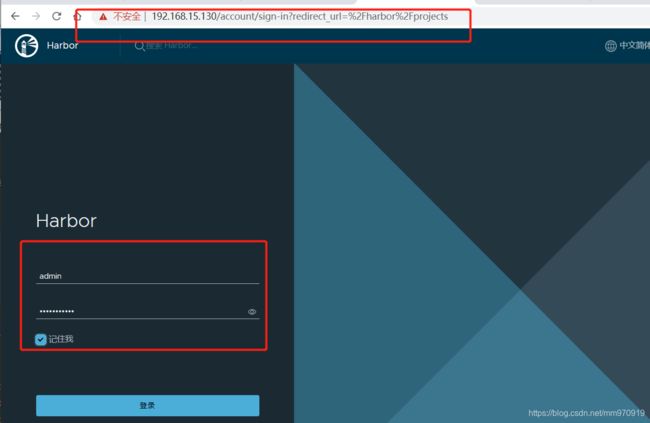

Test access in a browser: https://192.168.15.130:8089 (with the default port: https://192.168.15.130/)

Username: admin

Password: Harbor12345

# The admin password is configured in harbor.yml

[root@test harbor]# cat harbor.yml |grep 12345

harbor_admin_password: Harbor12345

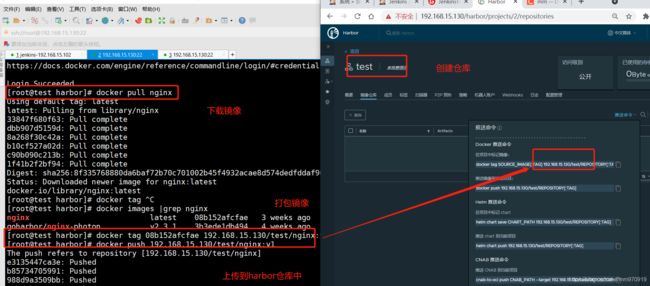

3. Test Harbor

# Test login

docker login 192.168.15.130 --username=admin --password=Harbor12345

# Test tagging and pushing to the private registry (create a project named "test" in the Harbor web UI first)

[root@k8m-m-01 harbor]# docker pull nginx

[root@k8m-m-01 harbor]# docker tag nginx:latest 192.168.15.130/test/nginx:v1

[root@k8m-m-01 harbor]# docker push 192.168.15.130/test/nginx:v1

# Pull it back

[root@k8m-m-01 harbor]# docker pull 192.168.15.130/test/nginx:v1
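Harbor image references have the shape `<registry>/<project>/<repository>:<tag>`, which is also what the pipeline builds from `${HARBOR_HOST}/${NAMESPACE_NAME}/${REPOSITORY_NAME}:${TAG}`. The sketch below assembles such a reference and rejects tags that Docker would refuse (a tag is at most 128 characters of letters, digits, `_`, `.` and `-`, and may not start with `.` or `-`); `harbor_image_ref` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build <registry>/<project>/<repository>:<tag> and validate the tag.
harbor_image_ref() {
  local host="$1" project="$2" repo="$3" tag="$4"
  # Docker tag rule: first char [A-Za-z0-9_], then up to 127 of [A-Za-z0-9_.-]
  if ! [[ "$tag" =~ ^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$ ]]; then
    echo "invalid tag: $tag" >&2
    return 1
  fi
  printf '%s/%s/%s:%s\n' "$host" "$project" "$repo" "$tag"
}

# Example:
# docker tag nginx:latest "$(harbor_image_ref 192.168.15.130 test nginx v1)"
```

For scripted logins, `docker login` also accepts the password on stdin (`--password-stdin`), which keeps it out of the shell history and process list.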

# Docker on every node of the k8s cluster must trust the Harbor certificate (run from /opt/cert)

cd /opt/cert

for i in n1 n2;

do

ssh $i "mkdir -pv /etc/docker/certs.d/192.168.15.130/"

scp 192.168.15.130.cert 192.168.15.130.key ca.crt root@$i:/etc/docker/certs.d/192.168.15.130/

ssh $i "systemctl restart docker"

done
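With the registry address typed separately in the mkdir and scp lines, a mismatch between the two paths is easy to introduce. This sketch keeps the address in one variable and supports a dry run; `distribute_certs` and `DRY_RUN` are hypothetical names, and it assumes Harbor on the default port 443 (so the certificate file names and the certs.d directory both use the bare IP).

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the Harbor client certificates to several Docker hosts.
# The certs.d path is derived from ONE variable so mkdir and scp cannot drift apart.
# Set DRY_RUN=1 to print the commands instead of running them.
distribute_certs() {
  local registry="$1"; shift
  local dir="/etc/docker/certs.d/${registry}"
  local run=""
  if [ -n "${DRY_RUN:-}" ]; then run="echo"; fi
  local node
  for node in "$@"; do
    $run ssh "$node" "mkdir -pv ${dir}/" || return 1
    $run scp "${registry}.cert" "${registry}.key" ca.crt "root@${node}:${dir}/" || return 1
    $run ssh "$node" "systemctl restart docker" || return 1
  done
}

# Example (run from /opt/cert):
# DRY_RUN=1 distribute_certs 192.168.15.130 n1 n2   # preview
# distribute_certs 192.168.15.130 n1 n2             # execute
```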

# n1 and n2 can now access Harbor successfully

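Containerizing the Jenkins pipeline

The way we build and deploy services has advanced dramatically compared with the past; the clumsy, all-in-one approach used to build monolithic applications is a thing of the past.

To provide better scalability and maintainability, today's applications are split into loosely coupled microservices with few interdependencies, each with its own dependencies and release pace. This fits naturally with Kubernetes.

1. Connect Jenkins to K8S

1) Create admin-csr.json (on the Kubernetes master)

mkdir -p /opt/cert && cd /opt/cert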

cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

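2) Install the certificate tool cfssl (on the Kubernetes master)

# Download

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# Make them executable

chmod +x cfssljson_linux-amd64 cfssl_linux-amd64

# Move them to /usr/local/bin

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl_linux-amd64 /usr/local/bin/cfssl

3) Generate the admin client certificate and private key, signed by the cluster CA (on the Kubernetes master; produces admin.pem and admin-key.pem)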

cfssl gencert -ca=/etc/kubernetes/pki/ca.crt -ca-key=/etc/kubernetes/pki/ca.key --profile=kubernetes admin-csr.json | cfssljson -bare admin
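Kubernetes takes the user name from a client certificate's CN and the groups from its O fields, and the `system:masters` group is bound to cluster-admin by default. Checking the subject of admin.pem confirms Jenkins will get admin rights. A sketch; `check_admin_cert` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm the client certificate maps to the Kubernetes admin identity.
# CN -> user name, O -> group; system:masters is bound to cluster-admin by default.
check_admin_cert() {
  local cert="${1:-admin.pem}"
  local subject
  # RFC2253 prints the subject on one line, e.g. CN=admin,OU=System,O=system:masters,...
  subject=$(openssl x509 -in "$cert" -noout -subject -nameopt RFC2253) || return 1
  echo "$subject"
  case "$subject" in
    *"O=system:masters"*) return 0 ;;
    *) echo "certificate is not in group system:masters" >&2; return 1 ;;
  esac
}

# Example: check_admin_cert admin.pem
```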

4) Convert the certificate to PKCS#12 (.pfx) for Jenkins (on the Kubernetes master)

openssl pkcs12 -export -out ./jenkins-admin.pfx -inkey ./admin-key.pem -in ./admin.pem -passout pass:123456
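The .pfx file is what gets uploaded to Jenkins as a certificate-type credential, together with its password (123456 above). A wrong password only shows up later as a vague connection error, so it may be worth checking that the bundle opens first. A sketch; `check_pfx` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a PKCS#12 bundle opens with the given password
# (openssl verifies the bundle's MAC, which fails on a wrong password).
check_pfx() {
  local pfx="$1" pass="$2"
  if openssl pkcs12 -in "$pfx" -passin "pass:${pass}" -noout 2>/dev/null; then
    echo "pfx OK"
  else
    echo "pfx cannot be opened with this password" >&2
    return 1
  fi
}

# Example: check_pfx jenkins-admin.pfx 123456
```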

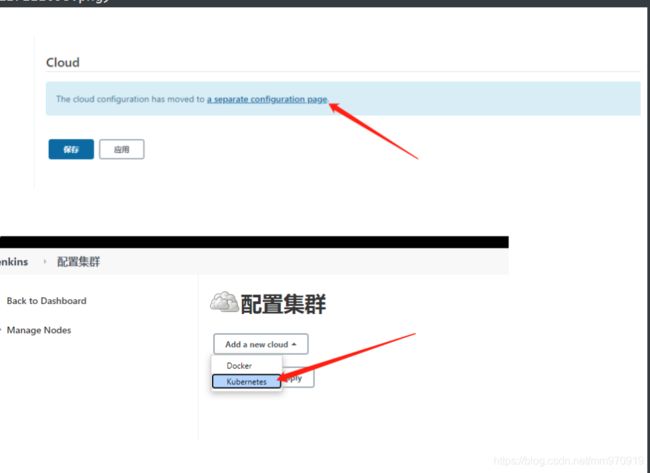

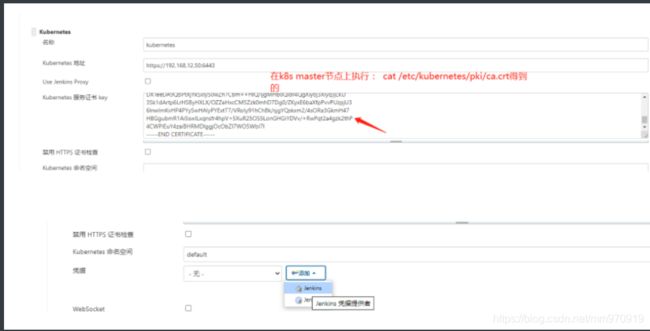

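5) Configure Jenkins to connect to k8s (in Jenkins)

Manage Jenkins ---> Configure System ---> Cloud

2. Test the connection to K8s

# Store the kubeconfig in a Secret. The key is named "config" after the file name, which is the key the pod template's kubeconfig volume mounts; create the Secret in the namespace where the Jenkins agent pods run.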

[root@k8m-m-01 ~]# kubectl create secret generic kubeconfig --from-file=.kube/config

secret/kubeconfig created

pipeline {
  agent {
    kubernetes {
      cloud "${KUBERNETES_NAME}"  // name of the Kubernetes cloud configured in Jenkins
      slaveConnectTimeout 1200    // agent connection timeout (seconds)
      yaml '''
        apiVersion: v1
        kind: Pod
        # metadata is generated by the pipeline; do not set it here
        spec:
          containers:
            - name: jnlp
              image: jenkins/inbound-agent:4.7-1-jdk11
              args: [\'$(JENKINS_SECRET)\', \'$(JENKINS_NAME)\']
              imagePullPolicy: IfNotPresent  # pull the image only if it is not already on the node
              volumeMounts:  # mount the host time zone so every container uses the same local time
                - mountPath: "/etc/localtime"
                  name: "volume-2"
                  readOnly: false
            - name: docker  # must match the name used in container(name: 'docker', shell: 'echo')
              image: docker:19.03.15-git  # Docker CLI image; this setup requires a 19.x version
              imagePullPolicy: IfNotPresent
              tty: true
              volumeMounts:
                - mountPath: "/etc/localtime"
                  name: "volume-2"
                  readOnly: false
                - mountPath: "/var/run/docker.sock"
                  name: "volume-docker"
                  readOnly: false
                - mountPath: "/etc/hosts"
                  name: "volume-hosts"
                  readOnly: false
            - name: kubectl  # must match the name used in container(name: 'kubectl', shell: 'echo')
              image: bitnami/kubectl:1.20.2  # kubectl version should match the cluster's Kubernetes version
              imagePullPolicy: IfNotPresent
              tty: true
              volumeMounts:
                - mountPath: "/etc/localtime"
                  name: "volume-2"
                  readOnly: false
                - mountPath: "/var/run/docker.sock"
                  name: "volume-docker"
                  readOnly: false
                - mountPath: "/root/.kube"
                  name: "kubeconfig"
                  readOnly: false
            - name: golang  # application build container (Go)
              image: golang:1.16.3
              imagePullPolicy: IfNotPresent
              tty: true
              env:
                - name: "LANGUAGE"
                  value: "en_US:en"
                - name: "LC_ALL"
                  value: "en_US.UTF-8"
                - name: "LANG"
                  value: "en_US.UTF-8"
              command:  # 'cat' with a tty keeps the container idle so pipeline steps can run in it
                - "cat"
              volumeMounts:
                - mountPath: "/etc/localtime"
                  name: "volume-2"
                  readOnly: false
            - name: maven  # container image for Java builds
              image: maven:3.6.3-openjdk-8
              tty: true
              command:
                - "cat"
              volumeMounts:
                - mountPath: "/etc/localtime"
                  name: "volume-2"
                  readOnly: false
                - mountPath: "/root/.m2/repository"
                  name: "volume-maven-repo"
                  readOnly: false
              env:
                - name: "LANGUAGE"
                  value: "en_US:en"
                - name: "LC_ALL"
                  value: "en_US.UTF-8"
                - name: "LANG"
                  value: "en_US.UTF-8"
          volumes:
            - name: volume-maven-repo
              emptyDir: {}
            - name: volume-2
              hostPath:
                path: "/usr/share/zoneinfo/Asia/Shanghai"
            - name: kubeconfig
              secret:
                secretName: kubeconfig
                items:
                  - key: config
                    path: config
            - name: volume-docker
              hostPath:
                path: "/var/run/docker.sock"
            - name: volume-hosts
              hostPath:
                path: /etc/hosts
      '''
    }
  }

  stages {
    stage('Source Code') {
      parallel {
        stage('Pull Code') {
          steps {
            // double quotes so Groovy substitutes the job parameters; single quotes would pass the literal text
            git(url: "${GIT_REPOSITORY_URL}", branch: "${GIT_TAG}", changelog: true, credentialsId: "${CREDENTIALS_ID}")
          }
        }
        stage('System Check and Init') {
          steps {
            sh """
              echo "Check System Env"
            """
          }
        }
      }
    }
    stage('Compile and Build') {
      parallel {
        stage('Compile Code') {
          steps {
            container(name: 'golang', shell: 'echo') {
              sh """
                go build main.go
              """
            }
          }
        }
        stage('Init OS and Kubernetes Env') {
          steps {
            script {
              CommitID = sh(returnStdout: true, script: "git log -n 1 --pretty=format:'%h'").trim()
              CommitMessage = sh(returnStdout: true, script: "git log -1 --pretty=format:'%h : %an %s'").trim()
              def curDate = sh(script: "date '+%Y%m%d-%H%M%S'", returnStdout: true).trim()
              // image tag: <yyyyMMdd-HHmmss>-<short commit id>-master
              TAG = curDate[0..14] + "-" + CommitID + "-master"
            }
          }
        }
      }
    }
    stage('Build Image and Check Kubernetes Env') {
      parallel {
        stage('Build Image') {
          steps {
            withCredentials([usernamePassword(credentialsId: "${DOCKER_REPOSITORY_CREDENTIAL_ID}", passwordVariable: 'PASSWORD', usernameVariable: 'USERNAME')]) {
              container(name: 'docker', shell: 'echo') {
                // \$USERNAME and \$PASSWORD are escaped so the shell expands them instead of Groovy;
                // --password-stdin keeps the password off the command line
                sh """
                  docker build -t ${HARBOR_HOST}/${NAMESPACE_NAME}/${REPOSITORY_NAME}:${TAG} .
                  echo "\$PASSWORD" | docker login ${HARBOR_HOST} --username "\$USERNAME" --password-stdin
                  docker push ${HARBOR_HOST}/${NAMESPACE_NAME}/${REPOSITORY_NAME}:${TAG}
                """
              }
            }
          }
        }
        stage('Check Kubernetes ENV') {
          steps {
            container(name: 'kubectl', shell: 'echo') {
              sh 'sleep 10'  // placeholder
            }
          }
        }
      }
    }
    stage('Deploy Image to Kubernetes') {
      steps {
        container(name: 'kubectl', shell: 'echo') {
          sh 'sleep 10'  // placeholder
        }
      }
    }
    stage('Test Service') {
      steps {
        sh 'sleep 10'  // placeholder
      }
    }
    stage('Send Email to Admin') {
      steps {
        sh 'sleep 10'  // placeholder
      }
    }
  }
}

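The image tag computed in the pipeline's script block has the form `<yyyyMMdd-HHmmss>-<short commit id>-master`. Because `date '+%Y%m%d-%H%M%S'` always yields exactly 15 characters, `curDate[0..14]` keeps the whole timestamp. The shell sketch below mirrors that logic so the tag format can be checked outside Jenkins; `build_tag` is a hypothetical helper, not something the pipeline calls.

```shell
#!/usr/bin/env bash
# Hypothetical shell equivalent of the pipeline's TAG computation:
#   <yyyyMMdd-HHmmss>-<short commit id>-master
# The optional second argument overrides the timestamp (useful for testing).
build_tag() {
  local commit="$1" when="${2:-$(date '+%Y%m%d-%H%M%S')}"
  # ${when:0:15} mirrors curDate[0..14] in Groovy (first 15 characters)
  printf '%s-%s-master\n' "${when:0:15}" "$commit"
}

# Example inside a git checkout:
# build_tag "$(git log -n 1 --pretty=format:'%h')"
```

The result only contains characters allowed in Docker tags, so it can be pushed to Harbor as-is.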