Parallel Programming: MPI Programming

Experiment 1

Problem Description

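Implement the trapezoidal-rule integration from the Chapter 5 slides with MPI in order to become familiar with and master MPI programming. The problem size is up to you, and the effect of different problem sizes on different implementations can be explored.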
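The report's own source code was not preserved, so the following is only a minimal serial sketch of the usual decomposition, under stated assumptions: the integrand f(x) = x * x is hypothetical, n is assumed divisible by the number of processes, and the helper names `trap` and `parallel_trap_sim` are hypothetical. Each loop iteration plays the role of one MPI rank integrating its own sub-interval of n / comm_sz trapezoids; in a real MPI program the loop would disappear, `rank` would come from `MPI_Comm_rank`, and the partial sums would be combined with `MPI_Reduce` using `MPI_SUM`.

```c
#include <assert.h>

/* Hypothetical integrand: the function used in the slides is not shown
 * in this report, so f(x) = x * x is an assumption. */
static double f(double x) {
    return x * x;
}

/* Serial trapezoidal rule on [left, right] using count trapezoids of width h. */
static double trap(double left, double right, int count, double h) {
    double sum = (f(left) + f(right)) / 2.0;
    for (int i = 1; i < count; i++) {
        sum += f(left + i * h);
    }
    return sum * h;
}

/* Simulates in one process what comm_sz MPI ranks would each compute:
 * rank r integrates [local_a, local_b] with local_n trapezoids, and the
 * partial results are added (the job MPI_Reduce/MPI_SUM does in MPI). */
static double parallel_trap_sim(double a, double b, int n, int comm_sz) {
    assert(n % comm_sz == 0); /* this sketch assumes an even split */
    double h = (b - a) / n;   /* every rank uses the same width h */
    int local_n = n / comm_sz;
    double total = 0.0;
    for (int rank = 0; rank < comm_sz; rank++) {
        double local_a = a + rank * local_n * h;
        double local_b = local_a + local_n * h;
        total += trap(local_a, local_b, local_n, h);
    }
    return total;
}
```

Because every rank uses the same global h, splitting the interval changes only the order of the floating-point additions, so the result with 4 simulated ranks should agree with the 1-rank result up to rounding.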

Experiment Code

#include

Results at Different Problem Sizes

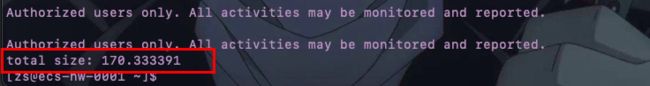

- When the problem size is 1000

- When the problem size is 5000

Conclusion

From the results above, the larger the problem size (the number of trapezoids), the more accurate the computed integral.
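The accuracy gain has a simple explanation: for a smooth integrand, the error of the composite trapezoidal rule is proportional to h² = ((b − a) / n)², so going from n = 1000 to n = 5000 should shrink the error by roughly a factor of 25. The sketch below checks this for a hypothetical integrand f(x) = x * x on [0, 3] (exact integral 9), for which the error is exactly (b − a)³ / (6n²) = 4.5 / n², i.e. about 4.5e-6 at n = 1000 and 1.8e-7 at n = 5000. The integrand, interval, and helper names are assumptions, not the ones from the report.

```c
#include <assert.h>

/* Hypothetical integrand on [0, 3]; its exact integral is 9. */
static double f(double x) { return x * x; }

/* Composite trapezoidal rule with n trapezoids on [a, b]. */
static double trap_rule(double a, double b, int n) {
    double h = (b - a) / n;
    double sum = (f(a) + f(b)) / 2.0;
    for (int i = 1; i < n; i++) sum += f(a + i * h);
    return sum * h;
}

/* Absolute error against the exact value 9 (no libm needed). */
static double trap_error(int n) {
    assert(n > 0);
    double e = trap_rule(0.0, 3.0, n) - 9.0;
    return e < 0 ? -e : e;
}
```

Comparing `trap_error(1000)` with `trap_error(5000)` should give a ratio very close to (5000 / 1000)² = 25.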

Experiment 2

Experiment Content

Implement the load-imbalanced "sorting multiple arrays" case from the slides with MPI. The problem size can be chosen and adjusted freely.
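The slides' exact setup is not reproduced in this report, so the following is a hedged, serial sketch of why this case is imbalanced and of one common remedy. It assumes each array has a known sorting cost (hypothetical integer costs, e.g. proportional to array length) and compares two schedules: a static block split, where each worker receives a contiguous chunk of arrays, and a dynamic split, where the next array always goes to the worker that becomes idle first. In MPI the dynamic version is typically a master/worker loop in which rank 0 hands out array indices with `MPI_Send`/`MPI_Recv`; here that exchange is simulated by tracking each worker's load. The function names and `MAX_WORKERS` are hypothetical.

```c
#include <assert.h>

#define MAX_WORKERS 64 /* hypothetical upper bound for this sketch */

/* Static block split: worker w gets tasks [w*chunk, (w+1)*chunk).
 * Returns the makespan, i.e. the largest total cost of any worker. */
static int static_block_makespan(const int *cost, int ntasks, int nworkers) {
    assert(nworkers > 0 && ntasks % nworkers == 0); /* even split assumed */
    int chunk = ntasks / nworkers;
    int makespan = 0;
    for (int w = 0; w < nworkers; w++) {
        int load = 0;
        for (int t = w * chunk; t < (w + 1) * chunk; t++) load += cost[t];
        if (load > makespan) makespan = load;
    }
    return makespan;
}

/* Dynamic split: each task goes to the worker with the smallest load so
 * far, i.e. the one that would finish first and ask the master for more. */
static int dynamic_makespan(const int *cost, int ntasks, int nworkers) {
    assert(nworkers > 0 && nworkers <= MAX_WORKERS);
    int load[MAX_WORKERS] = {0};
    for (int t = 0; t < ntasks; t++) {
        int idle = 0;
        for (int w = 1; w < nworkers; w++)
            if (load[w] < load[idle]) idle = w;
        load[idle] += cost[t];
    }
    int makespan = 0;
    for (int w = 0; w < nworkers; w++)
        if (load[w] > makespan) makespan = load[w];
    return makespan;
}
```

With costs {8, 8, 8, 8, 1, 1, 1, 1} and 2 workers, the static split gives one worker all the expensive arrays (makespan 32 versus 4), while the dynamic split balances both workers at 18.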

Experiment Code

#include

Experimental Results

Experiment 3 (Bonus)

Experiment Content

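Implement Gaussian elimination for solving systems of linear equations with MPI, combined with SSE (or AVX) programming, and compare it against Pthread and OpenMP versions (each combined with SSE or AVX). The problem size is up to you.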
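The report's implementation was not preserved, so this is only a minimal sketch of the forward-elimination phase under several assumptions: single-precision floats, no pivoting, rows distributed cyclically (row i belongs to rank i % comm_sz), and the MPI communication replaced by a loop over simulated ranks. In an MPI version, the owner of row k would normalize it and broadcast it with `MPI_Bcast` before every rank updates its own rows. The row update uses SSE intrinsics from `<xmmintrin.h>` (4 floats per instruction) with a scalar tail loop, and falls back to scalar code when `__SSE__` is not defined. The names `eliminate_row` and `gauss_cyclic` are hypothetical.

```c
#include <assert.h>
#if defined(__SSE__)
#include <xmmintrin.h>
#endif

/* row_i[j] -= factor * row_k[j] for j in [start, n): 4 floats per SSE
 * step, then a scalar loop for the tail (or all of it without SSE). */
static void eliminate_row(float *row_i, const float *row_k, float factor,
                          int start, int n) {
    int j = start;
#if defined(__SSE__)
    __m128 vf = _mm_set1_ps(factor);
    for (; j + 4 <= n; j += 4) {
        __m128 vk = _mm_loadu_ps(row_k + j);
        __m128 vi = _mm_loadu_ps(row_i + j);
        _mm_storeu_ps(row_i + j, _mm_sub_ps(vi, _mm_mul_ps(vf, vk)));
    }
#endif
    for (; j < n; j++) row_i[j] -= factor * row_k[j];
}

/* Forward elimination on the n x n row-major matrix A without pivoting.
 * Rows are split cyclically over comm_sz simulated ranks; in MPI the owner
 * of row k would normalize it and MPI_Bcast it to the other ranks. */
static void gauss_cyclic(float *A, int n, int comm_sz) {
    assert(n > 0 && comm_sz > 0);
    for (int k = 0; k < n; k++) {
        float *row_k = A + k * n;
        float pivot = row_k[k];
        for (int j = k + 1; j < n; j++) row_k[j] /= pivot;
        row_k[k] = 1.0f;
        for (int rank = 0; rank < comm_sz; rank++) {
            for (int i = k + 1; i < n; i++) {
                if (i % comm_sz != rank) continue; /* not this rank's row */
                float *row_i = A + i * n;
                eliminate_row(row_i, row_k, row_i[k], k + 1, n);
                row_i[k] = 0.0f;
            }
        }
    }
}
```

Each row's update depends only on itself and the broadcast pivot row, so the result should not depend on how many ranks the rows are spread over; the design question the experiment explores is how the communication cost of the broadcast compares with the SSE-accelerated local work.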

Experiment Code

#include

Experimental Results

When the problem size is 2048