Python: Scraping a Movie Website

The Movie Website

Scrape data from the target website, capturing at least five key fields for each movie.

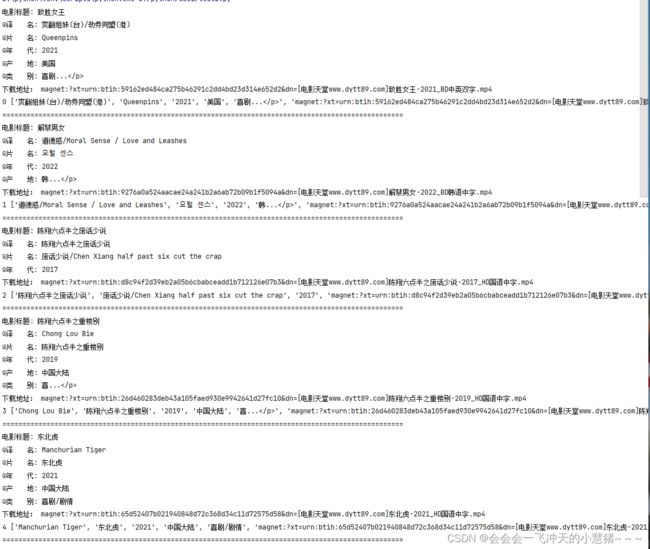

The code is as follows:

    import re
    from urllib.parse import urljoin

    import requests
    import xlwt
    from bs4 import BeautifulSoup

    BASE_URL = "https://www.piaohua.com/"
    hd = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'
    }
    # Field labels as they appear on the page, in output column order.
    LABELS = ["◎译 名", "◎片 名", "◎年 代", "◎产 地", "◎类 别"]


    def getmagnet(linkurl):
        """Return the first magnet link found on a detail page, or None."""
        res = requests.get(linkurl, headers=hd, timeout=10)
        res.encoding = res.apparent_encoding
        soup = BeautifulSoup(res.text, "html.parser")
        for a in soup.find_all("a"):
            if "magnet" in str(a.string):
                return str(a.string)
        return None


    def saveExcel(worksheet, count, info):
        """Write one movie's fields into row `count` of the worksheet."""
        for col, data in enumerate(info):
            worksheet.write(count, col, data)


    count = 0
    workbook = xlwt.Workbook(encoding="utf-8")
    worksheet = workbook.add_sheet('sheet1')

    for i in range(22, 23):  # only page 22; widen the range to scrape more pages
        url = BASE_URL + "html/xiju/list_" + str(i) + ".html"
        res = requests.get(url, headers=hd, timeout=10)
        res.encoding = res.apparent_encoding
        soup = BeautifulSoup(res.text, "html.parser")
        film_container = soup.find("ul", class_="ul-imgtxt2 row")
        if film_container is None:  # the page layout is not what we expect
            continue
        movies = film_container.find_all("li", class_="col-md-6")

        for movie in movies:
            info = []  # one row per movie
            title_tag = movie.select_one("h3 a b")
            if title_tag:
                title = re.sub(r'\(.*?\)', '', title_tag.get_text(strip=True))
                print("Movie title:", title)

            # Extract each labeled field; a missing field becomes an empty
            # string so that the Excel columns stay aligned.
            for label in LABELS:
                ret = re.findall(re.escape(label) + r"(.*)\n", str(movie))
                value = ret[0].replace('\u3000', '').strip() if ret else ""
                print(label + ":", value)
                info.append(value)

            # Follow the detail-page link to look for a magnet link.
            link = movie.find("a")
            href = link.get("href") if link else None
            magnet = getmagnet(urljoin(BASE_URL, href)) if href else None
            if magnet:
                print("Download link:", magnet)
            info.append(magnet or "")

            print(count, info)
            saveExcel(worksheet, count, info)
            count += 1
            print("=" * 100)

    workbook.save('movie.xls')
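The field extraction in the script relies on one simple pattern: a label such as ◎年 代 followed by its value, up to the end of the line. The sketch below tries that idea offline on a made-up text sample. The helper name `extract_fields` and the sample text are assumptions for illustration, and the real page markup may differ.

```python
import re

# Labels to look for; the sample text below is invented for illustration.
LABELS = ["◎译 名", "◎片 名", "◎年 代", "◎产 地", "◎类 别"]

SAMPLE = (
    "◎译 名\u3000战狼\n"
    "◎片 名\u3000Wolf Warrior\n"
    "◎年 代\u30002015\n"
    "◎产 地\u3000中国\n"
)


def extract_fields(text, labels=LABELS):
    """Map each label to the text after it on the same line ("" if absent)."""
    fields = {}
    for label in labels:
        # "." does not match a newline, so the group stops at the line end.
        match = re.search(re.escape(label) + r"(.*)", text)
        value = match.group(1) if match else ""
        fields[label] = value.replace("\u3000", "").strip()
    return fields


if __name__ == "__main__":
    for label, value in extract_fields(SAMPLE).items():
        print(label, "->", repr(value))
```

Returning an empty string for a missing label keeps every record the same length, which matters when the values are later written to fixed spreadsheet columns.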

Store the data in a database that supports create, read, update, and delete (CRUD) operations.

The code is as follows:

    import re
    import sqlite3
    from urllib.parse import urljoin

    import requests
    import xlwt
    from bs4 import BeautifulSoup

    BASE_URL = "https://www.piaohua.com/"
    url = "https://www.piaohua.com/html/xiju/list_22.html"
    hd = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'
    }
    # Database column -> label on the page, in Excel column order.
    LABELS = {
        "translated_title": "◎译 名",
        "original_title": "◎片 名",
        "release_year": "◎年 代",
        "country": "◎产 地",
        "category": "◎类 别",
    }


    def getmagnet(linkurl):
        """Return the first magnet link found on a detail page, or None."""
        res = requests.get(linkurl, headers=hd, timeout=10)
        res.encoding = res.apparent_encoding
        soup = BeautifulSoup(res.text, "html.parser")
        for a in soup.find_all("a"):
            if "magnet" in str(a.string):
                return str(a.string)
        return None


    def saveExcel(worksheet, count, info):
        """Write one movie's fields into row `count` of the worksheet."""
        for col, data in enumerate(info):
            worksheet.write(count, col, data)


    def createTableIfNotExists():
        con = sqlite3.connect("movies.db")
        con.execute('''
            CREATE TABLE IF NOT EXISTS movies (
                id INTEGER PRIMARY KEY AUTOINCREMENT,
                original_title TEXT,
                translated_title TEXT,
                release_year TEXT,
                country TEXT,
                category TEXT,
                download_url TEXT
            );
        ''')
        con.commit()
        con.close()


    def addMovie(original_title, translated_title=None, release_year=None, country=None, category=None, download_url=None):
        """Insert one movie; SQLite assigns the AUTOINCREMENT id itself."""
        con = sqlite3.connect("movies.db")
        con.execute('''
            INSERT INTO movies (original_title, translated_title, release_year, country, category, download_url)
            VALUES (?, ?, ?, ?, ?, ?);
        ''', (original_title, translated_title, release_year, country, category, download_url))
        con.commit()
        con.close()


    def deleteMovie(movie_id):
        con = sqlite3.connect("movies.db")
        con.execute("DELETE FROM movies WHERE id=?;", (movie_id,))
        con.commit()
        con.close()


    def updateMovie(movie_id, category):
        con = sqlite3.connect("movies.db")
        con.execute("UPDATE movies SET category=? WHERE id=?;", (category, movie_id))
        con.commit()
        con.close()


    def getAllMovies():
        con = sqlite3.connect("movies.db")
        movies = con.execute("SELECT * FROM movies").fetchall()
        con.close()
        return movies


    def main():
        count = 0
        workbook = xlwt.Workbook(encoding="utf-8")
        worksheet = workbook.add_sheet('sheet1')
        createTableIfNotExists()  # Create the data table

        # Fetch the movie list
        res = requests.get(url, headers=hd, timeout=10)
        res.encoding = res.apparent_encoding
        soup = BeautifulSoup(res.text, "html.parser")
        film_container = soup.find("ul", class_="ul-imgtxt2 row")
        movies = film_container.find_all("li", class_="col-md-6") if film_container else []

        for movie in movies:
            title_tag = movie.select_one("h3 a b")
            if title_tag:
                title = re.sub(r'\(.*?\)', '', title_tag.get_text(strip=True))
                print("Movie title:", title)

            # Collect the fields by column name so that each value lands in
            # the matching database column; a missing field is stored as None.
            record = {}
            for column, label in LABELS.items():
                ret = re.findall(re.escape(label) + r"(.*)\n", str(movie))
                record[column] = ret[0].replace('\u3000', '').strip() if ret else None
                print(label + ":", record[column])

            # Follow the detail-page link to look for a magnet link.
            link = movie.find("a")
            href = link.get("href") if link else None
            record["download_url"] = getmagnet(urljoin(BASE_URL, href)) if href else None
            if record["download_url"]:
                print("Download link:", record["download_url"])

            info = [value or "" for value in record.values()]
            print(count, info)
            saveExcel(worksheet, count, info)
            addMovie(**record)  # Add the movie to the database
            count += 1
            print("=" * 100)

        workbook.save('movie.xls')


    if __name__ == "__main__":
        main()

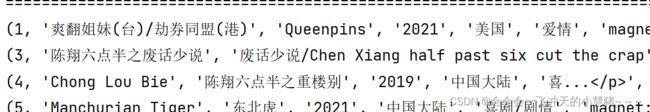

Result:
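Because addMovie takes its fields positionally, it is easy to pass values in the wrong column order. One way to make the mapping explicit is to use sqlite3's named placeholders with a dict. The sketch below does this against an in-memory database rather than movies.db; the helper names `create_table` and `insert_record` and the sample record are assumptions for illustration.

```python
import sqlite3

COLUMNS = ("original_title", "translated_title", "release_year",
           "country", "category", "download_url")


def create_table(con):
    """Create the movies table on the given connection."""
    con.execute(
        """
        CREATE TABLE IF NOT EXISTS movies (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            original_title TEXT,
            translated_title TEXT,
            release_year TEXT,
            country TEXT,
            category TEXT,
            download_url TEXT
        )
        """
    )


def insert_record(con, record):
    """Insert a dict of fields; keys missing from `record` are stored as NULL."""
    params = {column: record.get(column) for column in COLUMNS}
    cur = con.execute(
        "INSERT INTO movies (original_title, translated_title, release_year,"
        " country, category, download_url)"
        " VALUES (:original_title, :translated_title, :release_year,"
        " :country, :category, :download_url)",
        params,
    )
    con.commit()
    return cur.lastrowid  # id that SQLite assigned to the new row


if __name__ == "__main__":
    con = sqlite3.connect(":memory:")
    create_table(con)
    new_id = insert_record(con, {"original_title": "Wolf Warrior", "release_year": "2015"})
    print(new_id, con.execute("SELECT * FROM movies").fetchall())
    con.close()
```

With named placeholders, the order of the keys in the dict no longer matters, so a change in scraping order cannot silently swap two columns.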

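Adding CRUD operations

The code is as follows:

Leave the code above unchanged and, inside main(), add the following after workbook.save('movie.xls'):

        # Add a movie
        addMovie("战狼", "战狼", "2015", "中国", "战争,动作", "magnet:xxxxx")
        # Delete a movie (here, the one with ID 2)
        deleteMovie(2)
        # Update a movie (here, set the category of the movie with ID 1 to "爱情")
        updateMovie(1, "爱情")
        # Fetch and print all movies
        movies = getAllMovies()
        for movie in movies:
            print(movie)


    if __name__ == "__main__":
        main()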

Output:
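To see what a create, delete, update, read sequence does without touching movies.db, a similar sequence can be replayed against an in-memory database. This is a sketch under assumptions: the table is reduced to three columns and the rows are placeholder values. It also illustrates one detail: when SQLite assigns the ids of an AUTOINCREMENT column, a deleted id is not handed out again.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute(
    "CREATE TABLE movies (id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " original_title TEXT, category TEXT)"
)

# Create: two rows, which receive ids 1 and 2.
con.executemany(
    "INSERT INTO movies (original_title, category) VALUES (?, ?)",
    [("Movie A", "喜剧"), ("Movie B", "喜剧")],
)
# Delete the row with id 2.
con.execute("DELETE FROM movies WHERE id=?", (2,))
# Update the category of the row with id 1.
con.execute("UPDATE movies SET category=? WHERE id=?", ("爱情", 1))
# Create again: with AUTOINCREMENT the deleted id 2 is not reused.
con.execute(
    "INSERT INTO movies (original_title, category) VALUES (?, ?)",
    ("Movie C", "动作"),
)
con.commit()

# Read: fetch everything that is left.
rows = con.execute("SELECT * FROM movies ORDER BY id").fetchall()
for row in rows:
    print(row)
con.close()
```

Hard-coded ids such as 1 and 2 only make sense on a known table state, so it is safer to look up the id of the row you mean before deleting or updating it.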

Extension: visualize the data stored in the database.

The code is as follows:

    import sqlite3
    import warnings

    import matplotlib.pyplot as plt
    import pandas as pd
    import seaborn as sns


    def plot_bar_chart():
        # Connect to the database
        conn = sqlite3.connect("movies.db")
        cur = conn.cursor()
        # Query the release year of every movie
        cur.execute("SELECT release_year FROM movies")
        data = cur.fetchall()
        cur.close()
        conn.close()

        # Convert the query result to a DataFrame for further processing
        df = pd.DataFrame(data, columns=["Release Year"])

        # Use the "Microsoft YaHei" font so that Chinese characters render
        plt.rcParams['font.family'] = 'Microsoft YaHei'
        # Create a new figure, 10x6 inches
        plt.figure(figsize=(10, 6))
        # Draw the bar chart with Seaborn's countplot, passing the DataFrame
        # "df" and the x-axis column name "Release Year"
        sns.countplot(data=df, x="Release Year")
        # Set the x-axis and y-axis labels
        plt.xlabel("Release year")
        plt.ylabel("Number of movies")
        # Set the chart title
        plt.title("Distribution of movies by release year")
        # Rotate the x-axis tick labels by 45 degrees to prevent overlap
        plt.xticks(rotation=45)
        # Adjust the layout so that labels are not clipped
        plt.tight_layout()
        # Show the chart
        plt.show()


    if __name__ == "__main__":
        # Ignore warnings about Chinese characters
        warnings.filterwarnings("ignore", category=UserWarning)
        # Draw the bar chart of the release-year distribution
        plot_bar_chart()

Output:
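In effect, plot_bar_chart draws a count of rows per release_year. As a quick cross-check that needs no plotting libraries, the same counts can be computed directly in SQL. The sketch below uses an in-memory database with invented rows, and `count_by_year` is a hypothetical helper name.

```python
import sqlite3


def count_by_year(con):
    """Return (release_year, count) pairs sorted by year."""
    return con.execute(
        "SELECT release_year, COUNT(*) FROM movies"
        " GROUP BY release_year ORDER BY release_year"
    ).fetchall()


if __name__ == "__main__":
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE movies (id INTEGER PRIMARY KEY, release_year TEXT)")
    # Invented sample rows: two movies from 2015 and one from 2017.
    con.executemany(
        "INSERT INTO movies (release_year) VALUES (?)",
        [("2015",), ("2017",), ("2015",)],
    )
    for year, n in count_by_year(con):
        print(year, n)
    con.close()
```

Pointing the same query at movies.db gives the numbers the bars should show, which helps spot stray values in release_year before plotting.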