Sorting Out the QR Decomposition Algorithm

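The formula editor on this site seems to render each formula as an image on the backend and paste it back, at a fixed and rather low resolution.

It would be better to generate a PDF with LaTeX offline and post screenshots of it.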

1. Why QR

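Given $A \in \mathbb{R}^{m \times n}$ and $b \in \mathbb{R}^{m}$, we want to solve the least-squares problem of minimizing $\|Ax - b\|_2$ over $x \in \mathbb{R}^{n}$.

Orthogonal transformations preserve the 2-norm, so if $A = QR$ with $Q$ orthogonal and $R$ upper triangular, minimizing $\|Ax - b\|_2$ is the same as minimizing $\|Rx - Q^T b\|_2$, which reduces to a triangular solve when $A$ has full column rank.

The reduction of $A$ to various canonical forms via orthogonal transformations relies on Householder reflections and Givens rotations.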
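To make the motivation concrete, here is a minimal sketch of solving a least-squares problem through a QR factorization. The line-fitting data (`t`, `b`) are made up for illustration, and the built-in `qr` is used as a stand-in for the Householder-based factorization this post is working towards.

```matlab
% Hypothetical data: fit a line c0 + c1*t through four points.
t = [0; 1; 2; 3];
b = [1; 3; 5; 7];          % exactly b = 1 + 2*t, so the residual is zero
A = [ones(4,1), t];        % 4-by-2 matrix with full column rank

[Q, R] = qr(A, 0);         % economy-size QR: Q is 4-by-2, R is 2-by-2 upper triangular
x_qr  = R \ (Q' * b);      % solve the triangular system R*x = Q'*b
x_ref = A \ b;             % backslash least-squares solution, for comparison
```

Both `x_qr` and `x_ref` should agree up to rounding, since they solve the same minimization problem.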

2. Preview of orthogonal matrices

2.1 Orthogonal matrix

$Q \in \mathbb{R}^{n \times n}$ is an orthogonal matrix if:

$$Q^T Q = Q Q^T = I$$
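As a quick numerical illustration of the definition, the sketch below checks $Q^T Q = I$ and the norm-preserving property for one example. The matrix `Q` and vector `x` are arbitrary choices made for this check, not taken from the text.

```matlab
Q = [3, 4; -4, 3] / 5;                   % example matrix chosen for illustration
x = [2; -1];

err_orth = norm(Q' * Q - eye(2));        % distance of Q'*Q from the identity
err_norm = abs(norm(Q * x) - norm(x));   % change in 2-norm after multiplying by Q
```

Both error values should be zero up to rounding, which is the property that makes orthogonal transformations safe to apply inside a minimization of a 2-norm.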

2.2 A rotation matrix is an orthogonal matrix

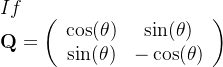

$$Q = \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix}$$

is orthogonal, and $Q$ is a rotation matrix.

If $y = Q^T x$, then $y$ is obtained by rotating $x$ counterclockwise through an angle $\theta$.

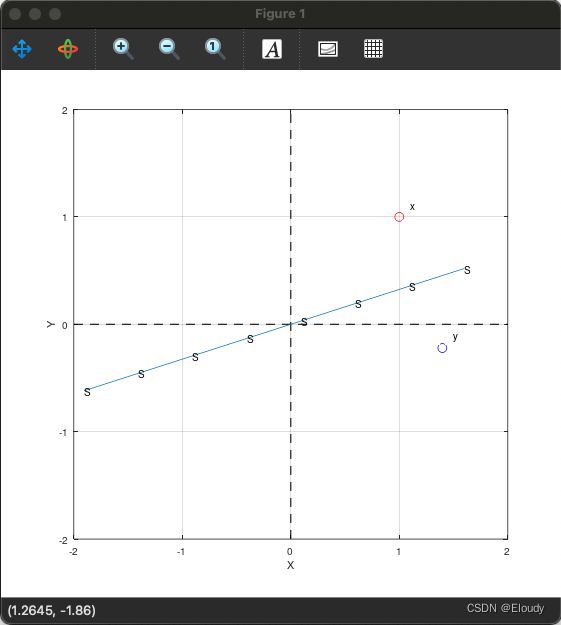
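The rotation can be checked numerically with a small sketch. It assumes the convention $Q = \begin{bmatrix} c & s \\ -s & c \end{bmatrix}$ with $y = Q^T x$; the angle and test vector are chosen for illustration only.

```matlab
theta = pi/2;                                             % quarter turn, chosen for illustration
Q = [cos(theta), sin(theta); -sin(theta), cos(theta)];    % assumed rotation convention
x = [1; 0];

y = Q' * x;                        % x rotated counterclockwise by theta, close to [0; 1]
err_orth = norm(Q' * Q - eye(2));  % Q is orthogonal
d = det(Q);                        % +1 for a rotation
```

A determinant of $+1$ is what separates rotations from the reflections of the next subsection, which have determinant $-1$.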

2.3 A reflection matrix is an orthogonal matrix

$$Q = \begin{bmatrix} \cos\theta & \sin\theta \\ \sin\theta & -\cos\theta \end{bmatrix}$$

is orthogonal, and $Q$ is a reflection matrix.

If $y = Q^T x = Qx$, then $y$ is obtained by reflecting the vector $x$ across the line defined by

$$S = \operatorname{span}\left\{ \begin{bmatrix} \cos(\theta/2) \\ \sin(\theta/2) \end{bmatrix} \right\}$$

That means $x$ and $y$ are axially symmetric about $S$:

$x$ is the preimage, $y$ is the image, $S$ is the mirror surface.

It looks like this:

%input x, ta = theta

%x = [-sqrt(2)/2.0, sqrt(2)/2.0]

x = [1; 1;]

ta = pi/5

S = [cos(ta/2.0), sin(ta/2.0)]

Q = [cos(ta), sin(ta); sin(ta), -cos(ta);]

y = Q*x

figure;

%1. draw axis

xmin = -2

xmax = 2

ymin = -2

ymax = 2

axisx = xmin:xmax;

axisy = zeros(size(axisx));

plot(axisx, axisy, 'k--', 'LineWidth', 0.7); % horizontal axis (the line y = 0)

hold on;

plot(axisy, xmin:xmax, 'k--', 'LineWidth', 0.7); % vertical axis (the line x = 0)

hold on;

%2. draw surface of mirror

sx = -2*S(1):0.5:2*S(1)

sy = (S(2)/S(1))*sx

plot(sx, sy)

text(sx(end), sy(end), 'S') % label the mirror line once, at its end point

hold on;

%3. draw preimage

plot(x(1), x(2), 'ro')

text(x(1)+0.1, x(2)+0.1, 'x')

hold on;

%4. draw image

plot(y(1), y(2), 'bo')

text(y(1)+0.1, y(2)+0.1, 'y')

%5. axis label

xlabel("X")

ylabel('Y')

v=[xmin, xmax, ymin, ymax]

axis(v)

%axis on
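Besides the plot, the reflection property can be verified numerically. The following is a small sketch using the same angle as the script above; the names `s`, `n` and the `err_*` variables are introduced here for illustration.

```matlab
ta = pi/5;                                   % same angle as in the plotting script
Q = [cos(ta), sin(ta); sin(ta), -cos(ta)];   % reflection matrix
s = [cos(ta/2); sin(ta/2)];                  % direction of the mirror line S
n = [-sin(ta/2); cos(ta/2)];                 % unit normal to S

err_fixed = norm(Q*s - s);        % vectors on S stay where they are
err_flip  = norm(Q*n + n);        % the normal direction is flipped
err_invol = norm(Q*Q - eye(2));   % reflecting twice gives the identity
d = det(Q);                       % -1 for a reflection
```

All three error values should vanish up to rounding: the mirror direction is fixed, the normal direction changes sign, and applying the reflection twice returns every vector to itself.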

3. Householder transformation

In section 2, the reflection was introduced through its mirror surface. In this section, it is introduced through the normal direction of that surface.

Let $v \in \mathbb{R}^{n}$, $v \neq 0$, then

$$H = I - \frac{2}{v^T v}\, v v^T$$

is a Householder reflection, or Householder matrix, or Householder transformation, which are synonyms.

And $v$ is the Householder vector.

When $y = Hx$, $y$ is the image of $x$ under reflection in the hyperplane $\operatorname{span}\{v\}^{\perp}$, and the mirror surface passes through the origin.

![]()

![]()

![]()

![]()

![]()
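The reflection property can be worked out in two lines. This is a sketch that assumes the Householder matrix is written as $H = I - \frac{2}{v^T v} v v^T$ and splits $x$ into a part $p$ lying in the mirror hyperplane and a part along the normal $v$; the symbols $p$ and $\alpha$ are introduced here for the derivation only.

```latex
\begin{align*}
x  &= p + \alpha v, \qquad \alpha = \frac{v^T x}{v^T v}, \qquad v^T p = 0, \\
Hx &= x - \frac{2}{v^T v}\, v\, (v^T x) = x - 2\alpha v = p - \alpha v .
\end{align*}
```

The component $p$ inside the hyperplane is unchanged and the component along $v$ changes sign, which is exactly a mirror reflection.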

If $x \neq y$ and $\|x\|_2 = \|y\|_2$,

let $v = x - y$ (this is the normal direction)

$$H = I - \frac{2}{v^T v}\, v v^T$$

then $Hx = y$;
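A small numerical sketch of this construction, assuming the normal direction is taken as $v = x - y$ and $H = I - \frac{2}{v^T v} v v^T$. The vectors `x` and `y` are made up for illustration and only need to have the same 2-norm.

```matlab
x = [3; 4];
y = [5; 0];                                  % same 2-norm as x (both equal 5)
v = x - y;                                   % normal direction of the mirror
H = eye(2) - (2 / (v' * v)) * (v * v');      % Householder matrix built from v

err_map  = norm(H*x - y);                    % H maps x onto y
err_back = norm(H*y - x);                    % and, being a reflection, y back onto x
```

Because $H$ is symmetric and its own inverse, the same matrix sends $x$ to $y$ and $y$ back to $x$.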

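Reduction theorem:

Let $x = (x_1, x_2, \dots, x_n)^T \neq 0$,

then there exists a Householder matrix $H$ s.t. $Hx = -\sigma e_1$,

and:

$$\sigma = \operatorname{sign}(x_1)\,\|x\|_2, \quad u = x + \sigma e_1, \quad \beta = \tfrac{1}{2}\|u\|_2^2 = \sigma(\sigma + x_1), \quad H = I - \beta^{-1} u u^T$$

Here $e_1 = (1, 0, \dots, 0)^T$.

End of the reduction theorem.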
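Why this choice of $u$ and $\beta$ works can be checked directly. The sketch below assumes the quantities $\sigma = \operatorname{sign}(x_1)\|x\|_2$, $u = x + \sigma e_1$, $\beta = \tfrac{1}{2}\|u\|_2^2$ and $H = I - \beta^{-1} u u^T$, as used in the MATLAB code of this section, and uses $\sigma^2 = \|x\|_2^2$.

```latex
\begin{align*}
\|u\|_2^2 &= \|x\|_2^2 + 2\sigma x_1 + \sigma^2 = 2\sigma(\sigma + x_1)
            \;\Longrightarrow\; \beta = \sigma(\sigma + x_1), \\
u^T x     &= \|x\|_2^2 + \sigma x_1 = \sigma(\sigma + x_1) = \beta, \\
Hx        &= x - \beta^{-1} u\, (u^T x) = x - u = -\sigma e_1 .
\end{align*}
```

Taking $\sigma$ with the same sign as $x_1$ keeps $\sigma + x_1$ away from zero, so $\beta$ is never formed by cancellation.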

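Reduction theorem example:

Let $x = (3, 5, 1, 1)^T$, then $\|x\|_2 = 6$, and $\sigma = 6$.

To calculate $H$:

$$u = x + \sigma e_1 = (9, 5, 1, 1)^T, \quad \beta = \tfrac{1}{2}\|u\|_2^2 = 54$$

then

$$H = I - \beta^{-1} u u^T = \frac{1}{54}\begin{bmatrix} -27 & -45 & -9 & -9 \\ -45 & 29 & -5 & -5 \\ -9 & -5 & 53 & -1 \\ -9 & -5 & -1 & 53 \end{bmatrix}, \quad Hx = (-6, 0, 0, 0)^T$$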

Here is the MATLAB code:

reduce_01.m:

x=[3;5;1;1;]

sigmaa =sign(x(1))*norm(x)

e1 = [1; 0; 0; 0]; % first unit vector; eye(4)(:,1) only parses in Octave

u = x + sigmaa*e1

betaa = 0.5*(norm(u))^2

H = eye(4) - (1.0/betaa)*u*u'

%debug

%h=betaa*H

y = H*x
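The script above fixes $n = 4$ and builds $H$ explicitly. As a possible variation, the sketch below works for any length, applies $H$ without forming the matrix, and handles a zero first entry, where `sign` returns 0 and $\sigma$ would collapse to 0. Treating `sign(0)` as $+1$ is an assumption made here, and the input vector is made up for illustration.

```matlab
x = [0; 3; 4];                    % hypothetical input whose first entry is zero
s = sign(x(1));
if s == 0
    s = 1;                        % assumption: treat sign(0) as +1 so that sigma is nonzero
end
sigma = s * norm(x);
u = x;
u(1) = u(1) + sigma;              % u = x + sigma*e1
beta = sigma * (sigma + x(1));    % equals 0.5*norm(u)^2
y = x - u * ((u' * x) / beta);    % H*x without building the n-by-n matrix H
```

Here `y` should come out close to `[-5; 0; 0]`. Applying $H$ as a rank-one update costs $O(n)$ operations per vector instead of the $O(n^2)$ needed for an explicit matrix-vector product.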

To be continued ...