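Learning Docker with Kuangshen (Essentials)

Container Data Volumes

What Is a Container Data Volume

Recap of the Docker philosophy

Package the application and its environment into an image.

What about data? If all the data lives inside the container, deleting the container loses the data. Requirement: data must be persistent.

Take MySQL: delete the container and the database is gone with it. Requirement: MySQL data must be stored on the host.

So containers need a data-sharing technique: data produced inside a Docker container is kept in step with the host.

This is volume technology: directory mounting, where a directory inside the container is mounted onto the Linux host.

In one sentence: volumes give containers persistence and host synchronization, and containers can also share data with each other.

Using Data Volumes

Method 1: mount directly on the command line with -v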

-v, --volume list Bind mount a volume

docker run -it -v HOST_DIR:CONTAINER_DIR -p HOST_PORT:CONTAINER_PORT IMAGE

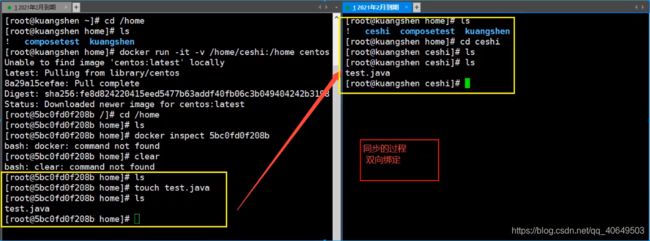

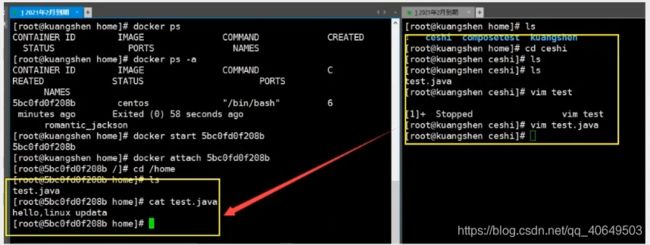

# /home/ceshi is the ceshi folder under /home on the host; it is mapped to /home in the centos container

[root@localhost home]# docker run -it -v /home/ceshi:/home centos /bin/bash

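# The host's /home/ceshi and the container's /home are now linked, so files written on one side appear on the other

# Strictly speaking this is not synchronization: both paths refer to the same directory on the host's disk

# In a new window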

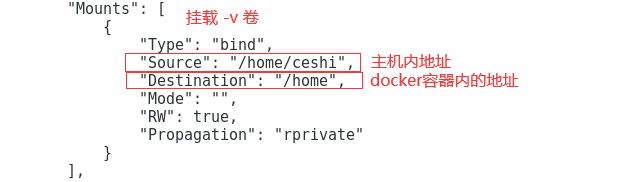

# Inspect the container with docker inspect CONTAINER_ID

[root@localhost home]# docker inspect 5b1e64d8bbc0

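Test again:

1. Stop the container

2. Modify a file on the host

3. Start the container

4. The data inside the container still matches the host

Benefit: from now on we only need to edit files on the host, and the container sees the changes automatically.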
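
The four test steps above can be sketched as a small shell function. This is only a sketch under assumptions: the name `check_bind_mount` and the file name `from-host.txt` are hypothetical, the container is assumed to have been created with `-v HOST_DIR:/home`, and the `DOCKER` variable is an illustrative convention that lets you set `DOCKER=echo` to print the commands instead of running them.

```shell
#!/bin/sh
# DOCKER can be overridden; DOCKER=echo gives a dry run that only prints the commands.
DOCKER=${DOCKER:-docker}

# check_bind_mount CONTAINER HOST_DIR
# Assumes CONTAINER was created with: -v HOST_DIR:/home
check_bind_mount() {
    container=$1
    host_dir=$2

    $DOCKER stop "$container"                                # 1. stop the container
    echo "written on the host" > "$host_dir/from-host.txt"   # 2. modify a file on the host
    $DOCKER start "$container"                               # 3. start the container again
    $DOCKER exec "$container" cat /home/from-host.txt        # 4. read the file from inside
}
```

For the container in the transcript this would be called as `check_bind_mount 5b1e64d8bbc0 /home/ceshi`. If the mount is in place, the last command should show the line written in step 2.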

Hands-on: Installing MySQL

Docker Hub

https://hub.docker.com/

Consider: how do we persist MySQL's data?

# Pull the mysql image

[root@localhost home]# docker pull mysql:5.7

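# Run the container with data mounts. Note that starting MySQL requires a root password to be set.

# Reference command from the official Docker Hub page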

docker run --name some-mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:tag

# Our own startup command uses these options

-d run in the background

-p port mapping

-v volume mount

-e environment variable

--name container name

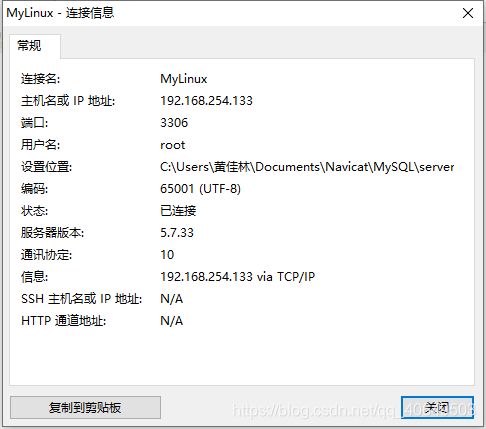

[root@localhost home]# docker run -d -p 3306:3306 -v /home/mysql/conf:/etc/mysql/conf.d -v /home/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 --name mysql01 mysql:5.7

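# Once it has started, test the connection locally with SQLyog

# SQLyog connects to port 3306 on the server, which is mapped to port 3306 in the container

# Create a database from the local client and check that the mapped path works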

[root@localhost home]# ls

ceshi huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# cd mysql

[root@localhost mysql]# ls

conf data

[root@localhost mysql]# cd data/

[root@localhost data]# ls

auto.cnf client-cert.pem ibdata1 ibtmp1 private_key.pem server-key.pem

ca-key.pem client-key.pem ib_logfile0 mysql public_key.pem sys

ca.pem ib_buffer_pool ib_logfile1 performance_schema server-cert.pem

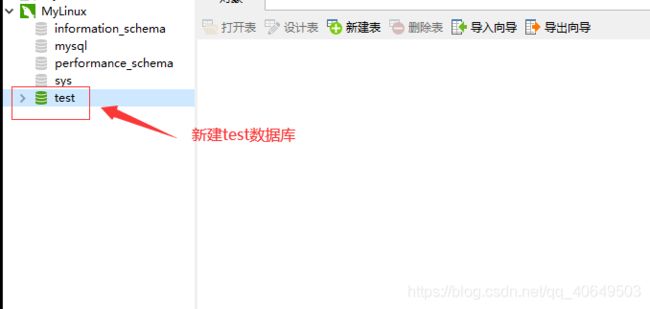

Create a new database

A new test directory appears

[root@localhost data]# ls

auto.cnf client-cert.pem ibdata1 ibtmp1 private_key.pem server-key.pem

ca-key.pem client-key.pem ib_logfile0 mysql public_key.pem sys

ca.pem ib_buffer_pool ib_logfile1 performance_schema server-cert.pem test

Suppose we delete the container

[root@localhost data]# docker rm -f mysql01

mysql01

[root@localhost data]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5b1e64d8bbc0 centos "/bin/bash" 56 minutes ago Up 39 minutes keen_leavitt

[root@localhost data]# docker ps -a

[root@localhost home]# ls

ceshi huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# cd mysql

[root@localhost mysql]# ls

conf data

[root@localhost mysql]# cd data/

[root@localhost data]# ls

auto.cnf client-cert.pem ibdata1 ibtmp1 private_key.pem server-key.pem

ca-key.pem client-key.pem ib_logfile0 mysql public_key.pem sys

ca.pem ib_buffer_pool ib_logfile1 performance_schema server-cert.pem

We find that the data mounted on the host is still there. This is how container data persistence is achieved.
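
The delete-and-recreate experiment can be wrapped in two small functions. This is a hedged sketch rather than a recommended deployment: `run_mysql` and `recreate_mysql` are hypothetical names, the paths and image tag follow the transcript above, and `DOCKER=echo` is an illustrative dry-run switch.

```shell
#!/bin/sh
# DOCKER can be overridden; DOCKER=echo gives a dry run that only prints the commands.
DOCKER=${DOCKER:-docker}

# run_mysql NAME HOST_PORT DATA_DIR
# Starts mysql:5.7 with its data directory kept in DATA_DIR on the host.
run_mysql() {
    name=$1
    host_port=$2
    data_dir=$3
    # Abort when no password is set, because the mysql image needs one to initialise.
    : "${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD first}"

    $DOCKER run -d -p "$host_port:3306" \
        -v "$data_dir:/var/lib/mysql" \
        -e MYSQL_ROOT_PASSWORD="$MYSQL_ROOT_PASSWORD" \
        --name "$name" mysql:5.7
}

# recreate_mysql NAME HOST_PORT DATA_DIR
# Removes the container, then starts a new one on the same host directory.
recreate_mysql() {
    $DOCKER rm -f "$1"
    run_mysql "$1" "$2" "$3"
}
```

Calling `recreate_mysql mysql01 3306 /home/mysql/data` after setting `MYSQL_ROOT_PASSWORD` removes the old container and starts a new one on the same host directory, so the new container should find the databases created earlier.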

Named and Anonymous Mounts

# Anonymous mount

-v CONTAINER_PATH

[root@localhost home]# docker run -d -P --name nginx01 -v /etc/nginx nginx

# List all volumes

[root@localhost home]# docker volume ls

DRIVER VOLUME NAME # volume names (these are anonymous volumes)

local 21159a8518abd468728cdbe8594a75b204a10c26be6c36090cde1ee88965f0d0

local b17f52d38f528893dd5720899f555caf22b31bf50b0680e7c6d5431dbda2802c

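# This is an anonymous mount: in -v we wrote only the path inside the container and no host path

# Another anonymous mount (-P publishes the exposed ports on random host ports)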

[root@localhost home]# docker run -d -P --name nginx01 -v /etc/nginx nginx

a35688cedc667161695f56bd300c12a6468312558c63a634d136bf8e73db4680

# List all volumes

[root@localhost home]# docker volume ls

DRIVER VOLUME NAME

local 8bef6b16668574673c31978a4d05c212609add5b4ca5ea2f09ff8680bdd304a6

local a564a190808af4cdca228f6a0ea3664dd14e34fee81811ed7fbae39158337141

# Named mount

[root@localhost home]# ls

ceshi huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# docker run -d -P --name nginx02 -v juming-nginx:/etc/nginx nginx # named mount

330f5a75c39945c6bed44292925219ed0d8d37adc0b1bc37b37784aae405b520

[root@localhost home]# docker volume ls

DRIVER VOLUME NAME

local 8bef6b16668574673c31978a4d05c212609add5b4ca5ea2f09ff8680bdd304a6

local a564a190808af4cdca228f6a0ea3664dd14e34fee81811ed7fbae39158337141

local juming-nginx # the named volume

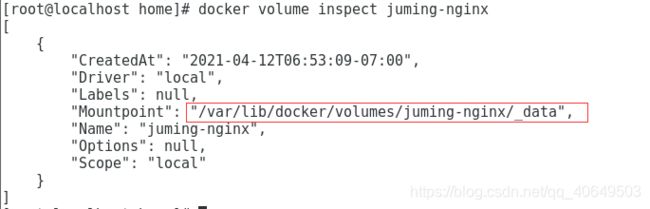

# Syntax: -v VOLUME_NAME:CONTAINER_PATH

# Inspect this volume

[root@localhost home]# docker volume inspect juming-nginx

[

{

"CreatedAt": "2021-04-12T06:53:09-07:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/juming-nginx/_data",

"Name": "juming-nginx",

"Options": null,

"Scope": "local"

}

]

When no host directory is specified, every Docker volume lives under **/var/lib/docker/volumes/VOLUME_NAME/_data**.

Named mounts make a volume easy to find, so they are what we use in most cases.
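
To look up where a volume lives without reading the whole JSON output, `docker volume inspect` accepts a `--format` template. The sketch below wraps that call; `volume_path` and `default_volume_path` are hypothetical helper names, the second one only formats a string based on the default data root seen above, and `DOCKER=echo` is an illustrative dry-run switch.

```shell
#!/bin/sh
# DOCKER can be overridden; DOCKER=echo gives a dry run that only prints the command.
DOCKER=${DOCKER:-docker}

# volume_path NAME: ask Docker for the host directory that backs volume NAME.
volume_path() {
    $DOCKER volume inspect --format '{{ .Mountpoint }}' "$1"
}

# default_volume_path NAME: the path expected under the default data root.
# String formatting only; it does not check that the volume exists.
default_volume_path() {
    printf '/var/lib/docker/volumes/%s/_data\n' "$1"
}
```

On a default installation the two functions should agree for a named volume such as `juming-nginx`; if Docker's data root has been moved, only `volume_path` reflects the real location.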

[root@localhost home]# cd /var/lib/docker

[root@localhost docker]# ls

buildkit containers image network overlay2 plugins runtimes swarm tmp trust volumes

[root@localhost docker]# cd volumes/

[root@localhost volumes]# ls

8bef6b16668574673c31978a4d05c212609add5b4ca5ea2f09ff8680bdd304a6 backingFsBlockDev metadata.db

a564a190808af4cdca228f6a0ea3664dd14e34fee81811ed7fbae39158337141 juming-nginx

[root@localhost volumes]# cd juming-nginx/

[root@localhost juming-nginx]# ls

_data

[root@localhost juming-nginx]# cd _data/

[root@localhost _data]# ls

conf.d fastcgi_params koi-utf koi-win mime.types modules nginx.conf scgi_params uwsgi_params win-utf

[root@localhost _data]# cat nginx.conf

user nginx;

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

}

If a host directory is specified, docker volume ls does not list the mount.

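Distinguishing the three mount types

# Three kinds of mount: anonymous, named, and host-path mounts

-v CONTAINER_PATH # anonymous mount

-v VOLUME_NAME:CONTAINER_PATH # named mount

-v /HOST_PATH:CONTAINER_PATH # host-path mount; not listed by docker volume ls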
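
As a quick way to keep the three forms apart, here is a minimal sketch of a shell helper that classifies a `-v` argument by its shape alone. The name `classify_mount` and its output labels are hypothetical, chosen for illustration; the helper never calls Docker and does not reproduce every rule Docker applies when it parses `-v`.

```shell
#!/bin/sh
# classify_mount SPEC
# Prints which of the three mount styles a -v argument uses.
classify_mount() {
    case "$1" in
        /*:*) echo "bind" ;;        # /host/path:/container/path, optionally :ro or :rw
        /*)   echo "anonymous" ;;   # /container/path only
        *:*)  echo "named" ;;       # volume-name:/container/path, optionally :ro or :rw
        *)    echo "unknown" ;;
    esac
}

classify_mount /etc/nginx                 # prints: anonymous
classify_mount juming-nginx:/etc/nginx    # prints: named
classify_mount /home/ceshi:/home          # prints: bind
```

The order of the patterns matters: a spec that starts with `/` and contains a colon must be tested before the bare `/` pattern, otherwise host-path mounts would be reported as anonymous.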

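Extension:

# Append :ro or :rw after the container path in -v to set the access mode

ro # read-only

rw # read-write

# Once the mode is set, the container's access to the mounted content is restricted accordingly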

[root@localhost home]# docker run -d -P --name nginx05 -v juming:/etc/nginx:ro nginx

[root@localhost home]# docker run -d -P --name nginx05 -v juming:/etc/nginx:rw nginx

# ro: whenever you see ro, the path can only be changed from the host; the container cannot write to it
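
The access mode can be read off a `-v` argument with a one-line pattern match. The sketch below uses a hypothetical helper, `mount_mode`, and covers only the simple `:ro` and `:rw` suffixes shown above; other comma-separated mount options that Docker accepts are not handled.

```shell
#!/bin/sh
# mount_mode SPEC
# Prints ro when the -v argument ends in :ro, otherwise rw.
# A missing suffix and an explicit :rw both mean read-write.
mount_mode() {
    case "$1" in
        *:ro) echo "ro" ;;
        *)    echo "rw" ;;
    esac
}

mount_mode juming:/etc/nginx:ro    # prints: ro
mount_mode juming:/etc/nginx:rw    # prints: rw
mount_mode juming:/etc/nginx       # prints: rw
```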

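A First Look at Dockerfile

A Dockerfile is the build file used to construct a Docker image; it is a script of commands. Let's try one first.

The script generates an image. An image is made of layers, the script is a sequence of commands, and each command produces one layer.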

[root@localhost /]# cd /home

[root@localhost home]# ls

ceshi huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# mkdir docker-test-volume

[root@localhost home]# ls

ceshi docker-test-volume huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# cd docker-test-volume/

[root@localhost docker-test-volume]# pwd

/home/docker-test-volume

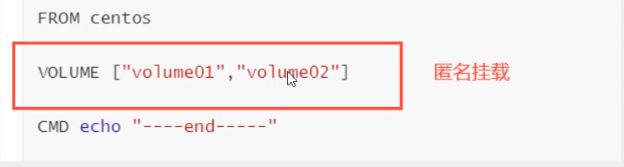

# This script can generate an image

[root@localhost docker-test-volume]# vim dockerfile1

# Create a dockerfile; any name works, but Dockerfile is recommended

# File contents: INSTRUCTION (uppercase) + arguments

[root@localhost docker-test-volume]# vim dockerfile1

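# The image is based on centos

FROM centos

# List of directories to mount as volumes (more than one is allowed)

VOLUME ["volume01","volume02"]

# Print a marker for testing (overridden by the later CMD)

CMD echo "-----end-----"

# Drop into a bash console by default

CMD /bin/bash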

[root@localhost docker-test-volume]# cat dockerfile1

FROM centos

VOLUME ["volume01","volume02"]

CMD echo "-----end-----"

CMD /bin/bash

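# Each command here is one layer of the image

# Build the image

-f dockerfile1 # -f (file): path to the Dockerfile, here dockerfile1 in the current directory

-t huangjialin/centos:1.0 # -t (tag): name and optional tag for the image; the name must not start with a slash '/'

. # the build context: the directory whose contents are sent to the Docker daemon, here the current directory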

[root@localhost docker-test-volume]# docker build -f dockerfile1 -t huangjialin/centos:1.0 .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM centos

---> 300e315adb2f

Step 2/4 : VOLUME ["volume01","volume02"] # list of volume names

---> Running in 2d8ae11d9994

Removing intermediate container 2d8ae11d9994

---> 5893b2c78edd

Step 3/4 : CMD echo "-----end-----" # output from a script command

---> Running in 2483f0e77b68

Removing intermediate container 2483f0e77b68

---> 30bf0ad14072

Step 4/4 : CMD /bin/bash

---> Running in 8fee073c961b

Removing intermediate container 8fee073c961b

---> 74f0e59c6da4

Successfully built 74f0e59c6da4

Successfully tagged huangjialin/centos:1.0

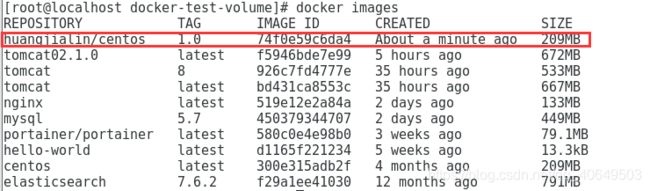

# View the image we built

[root@localhost docker-test-volume]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

huangjialin/centos 1.0 74f0e59c6da4 About a minute ago 209MB

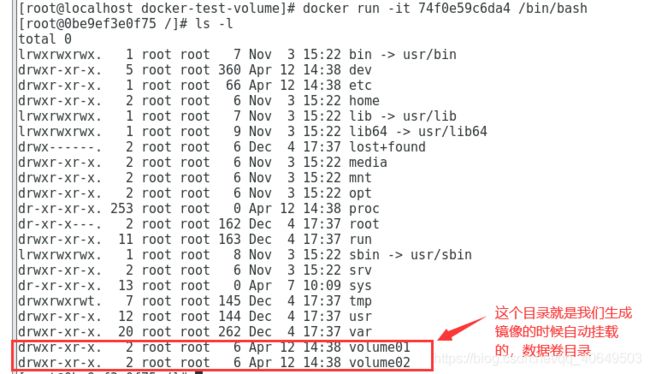

[root@localhost docker-test-volume]# docker run -it 74f0e59c6da4 /bin/bash

[root@0be9ef3e0f75 /]# ls -l

total 0

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 bin -> usr/bin

drwxr-xr-x. 5 root root 360 Apr 12 14:38 dev

drwxr-xr-x. 1 root root 66 Apr 12 14:38 etc

drwxr-xr-x. 2 root root 6 Nov 3 15:22 home

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Nov 3 15:22 lib64 -> usr/lib64

drwx------. 2 root root 6 Dec 4 17:37 lost+found

drwxr-xr-x. 2 root root 6 Nov 3 15:22 media

drwxr-xr-x. 2 root root 6 Nov 3 15:22 mnt

drwxr-xr-x. 2 root root 6 Nov 3 15:22 opt

dr-xr-xr-x. 253 root root 0 Apr 12 14:38 proc

dr-xr-x---. 2 root root 162 Dec 4 17:37 root

drwxr-xr-x. 11 root root 163 Dec 4 17:37 run

lrwxrwxrwx. 1 root root 8 Nov 3 15:22 sbin -> usr/sbin

drwxr-xr-x. 2 root root 6 Nov 3 15:22 srv

dr-xr-xr-x. 13 root root 0 Apr 7 10:09 sys

drwxrwxrwt. 7 root root 145 Dec 4 17:37 tmp

drwxr-xr-x. 12 root root 144 Dec 4 17:37 usr

drwxr-xr-x. 20 root root 262 Dec 4 17:37 var

drwxr-xr-x. 2 root root 6 Apr 12 14:38 volume01

drwxr-xr-x. 2 root root 6 Apr 12 14:38 volume02

[root@0be9ef3e0f75 /]# exit

exit

[root@localhost docker-test-volume]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

330f5a75c399 nginx "/docker-entrypoint.…" 54 minutes ago Up 54 minutes 0.0.0.0:49154->80/tcp nginx02

a35688cedc66 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:49153->80/tcp nginx01

[root@localhost docker-test-volume]# docker run -it 74f0e59c6da4 /bin/bash

[root@f8bd9a180286 /]# cd volume01

[root@f8bd9a180286 volume01]# touch container.txt

[root@f8bd9a180286 volume01]# ls

container.txt

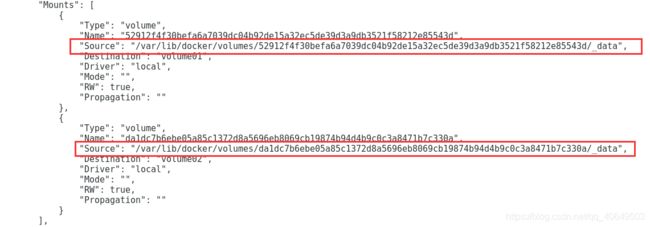

Check the path where the volume is mounted

# docker inspect CONTAINER_ID

$ docker inspect ca3b45913df5

# In another window

[root@localhost home]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

huangjialin/centos 1.0 74f0e59c6da4 18 minutes ago 209MB

tomcat02.1.0 latest f5946bde7e99 5 hours ago 672MB

tomcat 8 926c7fd4777e 35 hours ago 533MB

tomcat latest bd431ca8553c 35 hours ago 667MB

nginx latest 519e12e2a84a 2 days ago 133MB

mysql 5.7 450379344707 2 days ago 449MB

portainer/portainer latest 580c0e4e98b0 3 weeks ago 79.1MB

hello-world latest d1165f221234 5 weeks ago 13.3kB

centos latest 300e315adb2f 4 months ago 209MB

elasticsearch 7.6.2 f29a1ee41030 12 months ago 791MB

[root@localhost home]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f8bd9a180286 74f0e59c6da4 "/bin/bash" 3 minutes ago Up 3 minutes gifted_mccarthy

330f5a75c399 nginx "/docker-entrypoint.…" 59 minutes ago Up 59 minutes 0.0.0.0:49154->80/tcp nginx02

a35688cedc66 nginx "/docker-entrypoint.…" About an hour ago Up About an hour 0.0.0.0:49153->80/tcp nginx01

[root@localhost home]# docker inspect f8bd9a180286

[root@localhost home]# cd /var/lib/docker/volumes/52912f4f30befa6a7039dc04b92de15a32ec5de39d3a9db3521f58212e85543d/_data

[root@localhost _data]# ls

container.txt

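This approach is used a great deal, because we usually build our own images.

If the image was built without declaring volumes, mount one manually at run time with -v VOLUME_NAME:CONTAINER_PATH.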

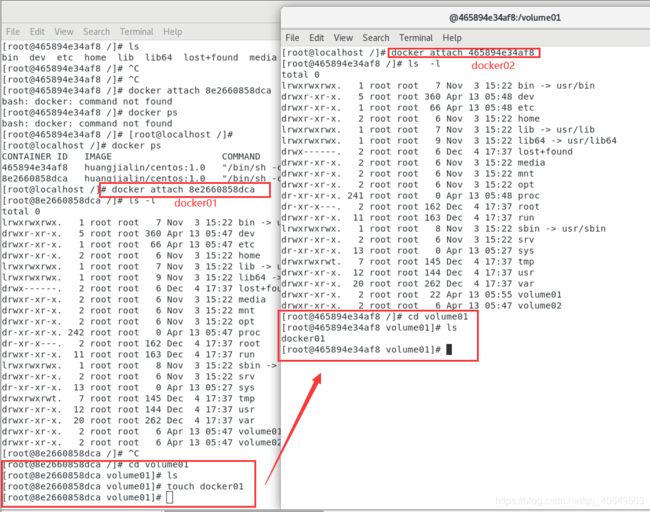

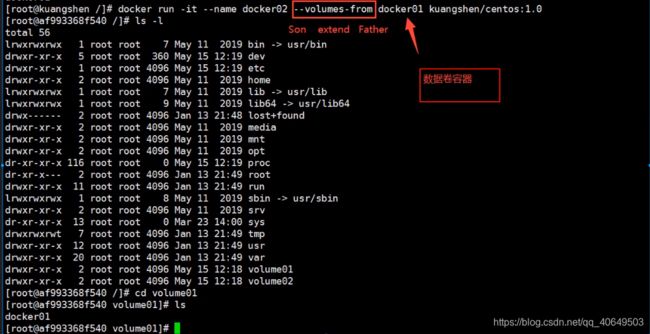

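Data Volume Containers

Sharing data between several MySQL containers

Mounting the data volumes of a named container

# Test: start 3 containers from the image we just built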

[root@localhost _data]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

huangjialin/centos 1.0 74f0e59c6da4 2 hours ago 209MB

tomcat02.1.0 latest f5946bde7e99 6 hours ago 672MB

tomcat 8 926c7fd4777e 37 hours ago 533MB

tomcat latest bd431ca8553c 37 hours ago 667MB

nginx latest 519e12e2a84a 2 days ago 133MB

mysql 5.7 450379344707 2 days ago 449MB

portainer/portainer latest 580c0e4e98b0 3 weeks ago 79.1MB

hello-world latest d1165f221234 5 weeks ago 13.3kB

centos latest 300e315adb2f 4 months ago 209MB

elasticsearch 7.6.2 f29a1ee41030 12 months ago 791MB

# Create docker01 from the image built above (tag 1.0)

[root@localhost _data]# docker run -it --name docker01 huangjialin/centos:1.0

# List the contents of container docker01

[root@8e2660858dca /]# ls

bin dev etc home lib lib64 lost+found media mnt opt proc root run sbin srv sys tmp usr var volume01 volume02

# Detach without stopping the container

Ctrl + P, then Ctrl + Q

# Create docker02 and have it inherit the volumes of docker01

[root@localhost /]# docker run -it --name docker02 --volumes-from docker01 huangjialin/centos:1.0

# List the contents of container docker02

[root@465894e34af8 /]# ls

bin dev etc home lib lib64 lost+found media mnt opt proc root run sbin srv sys tmp usr var volume01 volume02

# Attach to docker01

[root@localhost /]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

465894e34af8 huangjialin/centos:1.0 "/bin/sh -c /bin/bash" 2 minutes ago Up 2 minutes docker02

8e2660858dca huangjialin/centos:1.0 "/bin/sh -c /bin/bash" 3 minutes ago Up 3 minutes docker01

[root@localhost /]# docker attach 8e2660858dca

[root@8e2660858dca /]# ls -l

total 0

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 bin -> usr/bin

drwxr-xr-x. 5 root root 360 Apr 13 05:47 dev

drwxr-xr-x. 1 root root 66 Apr 13 05:47 etc

drwxr-xr-x. 2 root root 6 Nov 3 15:22 home

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Nov 3 15:22 lib64 -> usr/lib64

drwx------. 2 root root 6 Dec 4 17:37 lost+found

drwxr-xr-x. 2 root root 6 Nov 3 15:22 media

drwxr-xr-x. 2 root root 6 Nov 3 15:22 mnt

drwxr-xr-x. 2 root root 6 Nov 3 15:22 opt

dr-xr-xr-x. 242 root root 0 Apr 13 05:47 proc

dr-xr-x---. 2 root root 162 Dec 4 17:37 root

drwxr-xr-x. 11 root root 163 Dec 4 17:37 run

lrwxrwxrwx. 1 root root 8 Nov 3 15:22 sbin -> usr/sbin

drwxr-xr-x. 2 root root 6 Nov 3 15:22 srv

dr-xr-xr-x. 13 root root 0 Apr 13 05:27 sys

drwxrwxrwt. 7 root root 145 Dec 4 17:37 tmp

drwxr-xr-x. 12 root root 144 Dec 4 17:37 usr

drwxr-xr-x. 20 root root 262 Dec 4 17:37 var

drwxr-xr-x. 2 root root 6 Apr 13 05:47 volume01

drwxr-xr-x. 2 root root 6 Apr 13 05:47 volume02

# Create a file

[root@8e2660858dca /]# cd volume01

[root@8e2660858dca volume01]# ls

[root@8e2660858dca volume01]# touch docker01

# Attach to docker02

[root@localhost /]# docker attach 465894e34af8

[root@465894e34af8 /]# ls -l

total 0

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 bin -> usr/bin

drwxr-xr-x. 5 root root 360 Apr 13 05:48 dev

drwxr-xr-x. 1 root root 66 Apr 13 05:48 etc

drwxr-xr-x. 2 root root 6 Nov 3 15:22 home

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Nov 3 15:22 lib64 -> usr/lib64

drwx------. 2 root root 6 Dec 4 17:37 lost+found

drwxr-xr-x. 2 root root 6 Nov 3 15:22 media

drwxr-xr-x. 2 root root 6 Nov 3 15:22 mnt

drwxr-xr-x. 2 root root 6 Nov 3 15:22 opt

dr-xr-xr-x. 241 root root 0 Apr 13 05:48 proc

dr-xr-x---. 2 root root 162 Dec 4 17:37 root

drwxr-xr-x. 11 root root 163 Dec 4 17:37 run

lrwxrwxrwx. 1 root root 8 Nov 3 15:22 sbin -> usr/sbin

drwxr-xr-x. 2 root root 6 Nov 3 15:22 srv

dr-xr-xr-x. 13 root root 0 Apr 13 05:27 sys

drwxrwxrwt. 7 root root 145 Dec 4 17:37 tmp

drwxr-xr-x. 12 root root 144 Dec 4 17:37 usr

drwxr-xr-x. 20 root root 262 Dec 4 17:37 var

drwxr-xr-x. 2 root root 22 Apr 13 05:55 volume01

drwxr-xr-x. 2 root root 6 Apr 13 05:47 volume02

# The file created in docker01 is visible here

[root@465894e34af8 /]# cd volume01

[root@465894e34af8 volume01]# ls

docker01

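# Create docker03, which also inherits from docker01

$ docker run -it --name docker03 --volumes-from docker01 huangjialin/centos:1.0

$ cd volume01 # enter volume01 and check that docker01's data is visible here too

$ ls

docker01

# Test: delete docker01, then check whether docker02 and docker03 can still access the file

# Result: the data is still there in docker02 and docker03; it was not deleted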

$ docker run -d -p 3306:3306 -v /home/mysql/conf:/etc/mysql/conf.d -v /home/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 --name mysql01 mysql:5.7

$ docker run -d -p 3310:3306 -e MYSQL_ROOT_PASSWORD=123456 --name mysql02 --volumes-from mysql01 mysql:5.7

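# Now the two containers share the same data

Conclusion

Data volume containers pass configuration and data between containers. A shared volume lives on until no container uses it any more.

Once the data has been persisted to a host directory, however, the host copy is never deleted.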

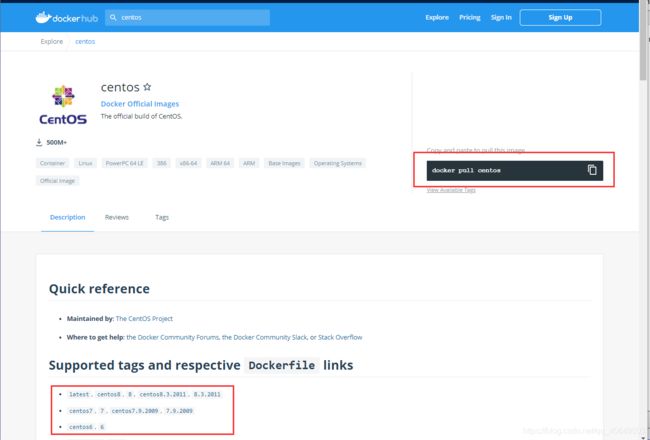

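Dockerfile

Introduction to Dockerfile

A Dockerfile is the file used to build a Docker image; it is a script of commands and arguments.

Build steps:

1. Write a Dockerfile

2. Build it into an image with docker build

3. Run the image with docker run

4. Publish the image with docker push (Docker Hub, Alibaba Cloud registry)

Many official images are bare base images that lack many features, so we usually build our own.

If the official maintainers can build images, so can we.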

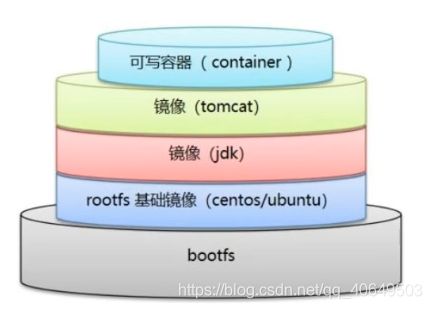

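The Dockerfile Build Process

Basics:

1. Every reserved keyword (instruction) is written in uppercase; Docker does not enforce this, but it is the established convention

2. Instructions execute in order from top to bottom

3. # marks a comment

4. Each instruction creates and commits a new image layer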
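
These rules are simple enough to check mechanically. The sketch below lists the instruction keywords of a Dockerfile in file order, skipping blank lines and comment lines, which gives a rough preview of the "Step n/m" lines that `docker build` prints. `list_instructions` is a hypothetical helper, and it deliberately ignores backslash line continuations, so a continued line would be counted as an extra instruction.

```shell
#!/bin/sh
# list_instructions FILE
# Prints the first word of every non-blank, non-comment line in uppercase.
# Limitation: lines continued with a trailing backslash are not joined.
list_instructions() {
    awk 'NF == 0 || $1 ~ /^#/ { next } { print toupper($1) }' "$1"
}
```

Run as `list_instructions dockerfile1` against a file containing the four instructions FROM, VOLUME, CMD, and CMD, it prints those four keywords one per line, matching the four steps of the build.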

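Dockerfiles are aimed at developers: whenever we release a project as an image we need to write a Dockerfile, and the file itself is very simple.

Docker images are becoming the standard for enterprise delivery, so this is a must-have skill.

Steps: development, deployment, and operations are all indispensable.

Dockerfile: the build file that defines every step; it is the source code.

Docker image: the image built from a Dockerfile; it is the product that is finally published and run.

Docker container: an image that is running and providing a service.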

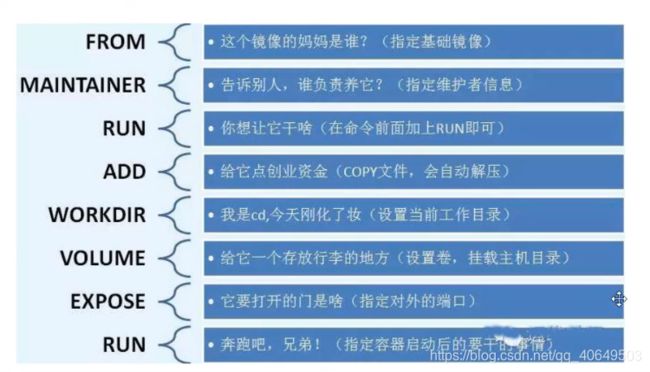

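Dockerfile Instructions

FROM # base image; every build starts here

MAINTAINER # who wrote the image: name + email

RUN # commands to run while the image is being built

ADD # add files to the image, for example a Tomcat tarball; local tar archives are extracted automatically

WORKDIR # working directory of the image

VOLUME # directories to mount as volumes

EXPOSE # ports the container exposes

CMD # command to run when the container starts; only the last CMD takes effect, and it can be overridden

ENTRYPOINT # command to run when the container starts; arguments can be appended to it

ONBUILD # trigger instruction: runs when another Dockerfile builds FROM this image

COPY # like ADD; copies our files into the image

ENV # sets environment variables at build time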

Hands-on Test

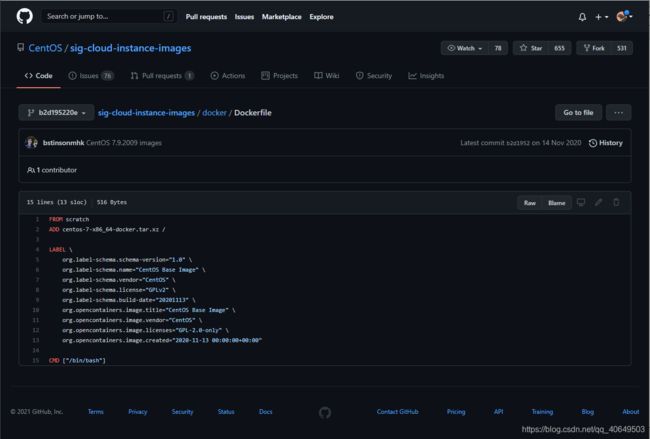

The scratch image

FROM scratch

ADD centos-7-x86_64-docker.tar.xz /

LABEL \

org.label-schema.schema-version="1.0" \

org.label-schema.name="CentOS Base Image" \

org.label-schema.vendor="CentOS" \

org.label-schema.license="GPLv2" \

org.label-schema.build-date="20200504" \

org.opencontainers.image.title="CentOS Base Image" \

org.opencontainers.image.vendor="CentOS" \

org.opencontainers.image.licenses="GPL-2.0-only" \

org.opencontainers.image.created="2020-05-04 00:00:00+01:00"

CMD ["/bin/bash"]

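About 99% of the images on Docker Hub start from this base image, FROM scratch, and then add the software and configuration they need.

Creating Your Own CentOS Image

# 1. Create a dockerfile directory under /home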

[root@localhost /]# cd home

[root@localhost home]# mkdir dockerfile

# 2. Create the file mydockerfile-centos in the dockerfile directory

[root@localhost home]# cd dockerfile/

[root@localhost dockerfile]# vim mydockerfile-centos

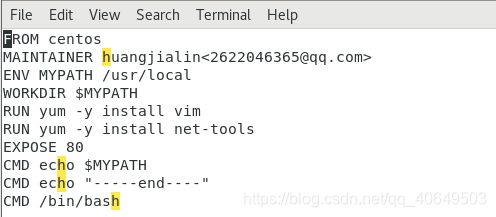

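# 3. Write the Dockerfile

# Base image: the official centos image

FROM centos

# Author

MAINTAINER huangjialin<2622046365@qq.com>

# Directory stored in an environment variable

ENV MYPATH /usr/local

# Use MYPATH as the working directory

WORKDIR $MYPATH

# Add the vim command to the official centos image

RUN yum -y install vim

# Add the ifconfig command to the official centos image

RUN yum -y install net-tools

# Expose port 80

EXPOSE 80

# Print the MYPATH path (overridden by the later CMD lines)

CMD echo $MYPATH

CMD echo "-----end----"

# Open /bin/bash on start; only this last CMD takes effect

CMD /bin/bash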

# 4. Build the image from this file

# Command: docker build -f DOCKERFILE_PATH -t IMAGE_NAME[:TAG] .

[root@localhost dockerfile]# docker build -f mydockerfile-centos -t mysentos:0.1 .

# 5. Output like the following means the build succeeded

Successfully built 2315251fefdd

Successfully tagged mysentos:0.1

[root@localhost home]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysentos 0.1 2315251fefdd 52 seconds ago 291MB

huangjialin/centos 1.0 74f0e59c6da4 18 hours ago 209MB

tomcat02.1.0 latest f5946bde7e99 22 hours ago 672MB

tomcat 8 926c7fd4777e 2 days ago 533MB

tomcat latest bd431ca8553c 2 days ago 667MB

nginx latest 519e12e2a84a 3 days ago 133MB

mysql 5.7 450379344707 3 days ago 449MB

portainer/portainer latest 580c0e4e98b0 3 weeks ago 79.1MB

hello-world latest d1165f221234 5 weeks ago 13.3kB

centos latest 300e315adb2f 4 months ago 209MB

elasticsearch 7.6.2 f29a1ee41030 12 months ago 791MB

# 6. Test run

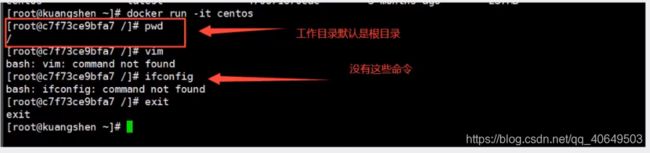

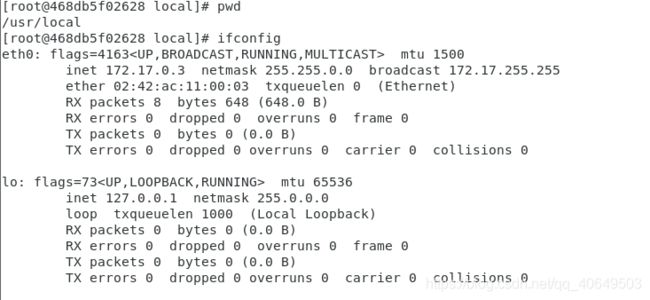

[root@localhost home]# docker run -it mysentos:0.1 # include the tag, otherwise Docker looks for latest

[root@468db5f02628 local]# pwd

/usr/local # matches the MYPATH that WORKDIR uses in the Dockerfile

[root@468db5f02628 local]# vim # the vim command is available

[root@468db5f02628 local]# ifconfig # the ifconfig command is available

# docker history IMAGE_ID shows the build history of an image

[root@localhost home]# docker history 2315251fefdd

IMAGE CREATED CREATED BY SIZE COMMENT

2315251fefdd 6 minutes ago /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "/bin… 0B

be99d496b25c 6 minutes ago /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "echo… 0B

34fd97cceb13 6 minutes ago /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "echo… 0B

baa95b2530ae 6 minutes ago /bin/sh -c #(nop) EXPOSE 80 0B

ef9b50de0b5b 6 minutes ago /bin/sh -c yum -y install net-tools 23.4MB

071ef8153ad4 6 minutes ago /bin/sh -c yum -y install vim 58.1MB

d6a38a2948f3 7 minutes ago /bin/sh -c #(nop) WORKDIR /usr/local 0B

98bd16198bff 7 minutes ago /bin/sh -c #(nop) ENV MYPATH=/usr/local 0B

53816b10cd26 7 minutes ago /bin/sh -c #(nop) MAINTAINER huangjialin<26… 0B

300e315adb2f 4 months ago /bin/sh -c #(nop) CMD ["/bin/bash"] 0B

4 months ago /bin/sh -c #(nop) LABEL org.label-schema.sc… 0B

4 months ago /bin/sh -c #(nop) ADD file:bd7a2aed6ede423b7… 209MB

Before

After

Change history

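When we get hold of an image, we can run "docker history IMAGE_ID" to study how it was built.

Difference Between CMD and ENTRYPOINT

CMD # command to run when the container starts; only the last CMD takes effect, and it can be overridden

ENTRYPOINT # command to run when the container starts; arguments can be appended to it

Testing CMD

# Write the dockerfile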

[root@localhost dockerfile]# vim dockerfile-test-cmd

FROM centos

# Run ls -a on start

CMD ["ls","-a"]

# CMD []: the command to run is stored as an array

# Build the image

[root@localhost dockerfile]# docker build -f dockerfile-test-cmd -t cmd-test:0.1 .

Sending build context to Docker daemon 3.072kB

Step 1/2 : FROM centos

---> 300e315adb2f

Step 2/2 : CMD ["ls","-a"]

---> Running in 3d990f51fd5a

Removing intermediate container 3d990f51fd5a

---> 0e927777d383

Successfully built 0e927777d383

Successfully tagged cmd-test:0.1

# Run the image

[root@localhost dockerfile]# docker run 0e927777d383 # the output shows that ls -a ran on start

.

..

.dockerenv

bin

dev

etc

home

lib

lib64

lost+found

media

mnt

opt

proc

root

run

sbin

srv

sys

tmp

usr

var

# Try to append -l so that the command becomes ls -al, which shows a detailed listing

[root@localhost dockerfile]# docker run cmd-test:0.1 -l

docker: Error response from daemon: OCI runtime create failed: container_linux.go:349: starting container process caused "exec: \"-l\":

executable file not found in $PATH": unknown.

ERRO[0000] error waiting for container: context canceled

# With CMD, the -l argument replaces CMD ["ls","-a"] entirely, and -l is not a command, so the run fails

[root@localhost dockerfile]# docker run 0e927777d383 ls -al

total 0

drwxr-xr-x. 1 root root 6 Apr 13 08:38 .

drwxr-xr-x. 1 root root 6 Apr 13 08:38 ..

-rwxr-xr-x. 1 root root 0 Apr 13 08:38 .dockerenv

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 bin -> usr/bin

drwxr-xr-x. 5 root root 340 Apr 13 08:38 dev

drwxr-xr-x. 1 root root 66 Apr 13 08:38 etc

drwxr-xr-x. 2 root root 6 Nov 3 15:22 home

lrwxrwxrwx. 1 root root 7 Nov 3 15:22 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Nov 3 15:22 lib64 -> usr/lib64

drwx------. 2 root root 6 Dec 4 17:37 lost+found

drwxr-xr-x. 2 root root 6 Nov 3 15:22 media

drwxr-xr-x. 2 root root 6 Nov 3 15:22 mnt

drwxr-xr-x. 2 root root 6 Nov 3 15:22 opt

dr-xr-xr-x. 247 root root 0 Apr 13 08:38 proc

dr-xr-x---. 2 root root 162 Dec 4 17:37 root

drwxr-xr-x. 11 root root 163 Dec 4 17:37 run

lrwxrwxrwx. 1 root root 8 Nov 3 15:22 sbin -> usr/sbin

drwxr-xr-x. 2 root root 6 Nov 3 15:22 srv

dr-xr-xr-x. 13 root root 0 Apr 13 05:27 sys

drwxrwxrwt. 7 root root 145 Dec 4 17:37 tmp

drwxr-xr-x. 12 root root 144 Dec 4 17:37 usr

drwxr-xr-x. 20 root root 262 Dec 4 17:37 var

Testing ENTRYPOINT

# Write the dockerfile

$ vim dockerfile-test-entrypoint

FROM centos

ENTRYPOINT ["ls","-a"]

# Build the image

$ docker build -f dockerfile-test-entrypoint -t entrypoint-test:0.1 .

# Run the image

$ docker run entrypoint-test:0.1

.

..

.dockerenv

bin

dev

etc

home

lib

lib64

lost+found ...

# Our arguments are appended directly after the ENTRYPOINT command

$ docker run entrypoint-test:0.1 -l

total 56

drwxr-xr-x 1 root root 4096 May 16 06:32 .

drwxr-xr-x 1 root root 4096 May 16 06:32 ..

-rwxr-xr-x 1 root root 0 May 16 06:32 .dockerenv

lrwxrwxrwx 1 root root 7 May 11 2019 bin -> usr/bin

drwxr-xr-x 5 root root 340 May 16 06:32 dev

drwxr-xr-x 1 root root 4096 May 16 06:32 etc

drwxr-xr-x 2 root root 4096 May 11 2019 home

lrwxrwxrwx 1 root root 7 May 11 2019 lib -> usr/lib

lrwxrwxrwx 1 root root 9 May 11 2019 lib64 -> usr/lib64 ....

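Many Dockerfile instructions look very similar, so we need to understand how they differ. The best way to learn is to compare them and test the results.

Hands-on: Building a Tomcat Image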
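
The behaviour seen in the two tests can be summarised in a small model written in plain shell. It assumes the simple rule demonstrated above: arguments given to `docker run` after the image name replace CMD, and whatever remains is appended to ENTRYPOINT. `effective_command` is a hypothetical helper that only joins strings; it does not call Docker and ignores details such as the difference between shell form and exec form.

```shell
#!/bin/sh
# effective_command ENTRYPOINT CMD [RUN_ARGS...]
# Prints the command line a container would start with under the rule above.
# Pass an empty string for ENTRYPOINT or CMD when the image does not set it.
effective_command() {
    entrypoint=$1
    cmd=$2
    shift 2

    if [ "$#" -gt 0 ]; then
        rest="$*"        # arguments given to docker run replace CMD
    else
        rest=$cmd        # otherwise CMD supplies the default
    fi

    if [ -n "$entrypoint" ] && [ -n "$rest" ]; then
        printf '%s\n' "$entrypoint $rest"
    else
        printf '%s\n' "$entrypoint$rest"
    fi
}

effective_command "" "ls -a"        # prints: ls -a
effective_command "" "ls -a" -l     # prints: -l        (not an executable, so the run fails)
effective_command "ls -a" "" -l     # prints: ls -a -l
```

The second call mirrors the failed `docker run cmd-test:0.1 -l`, and the third mirrors `docker run entrypoint-test:0.1 -l`.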

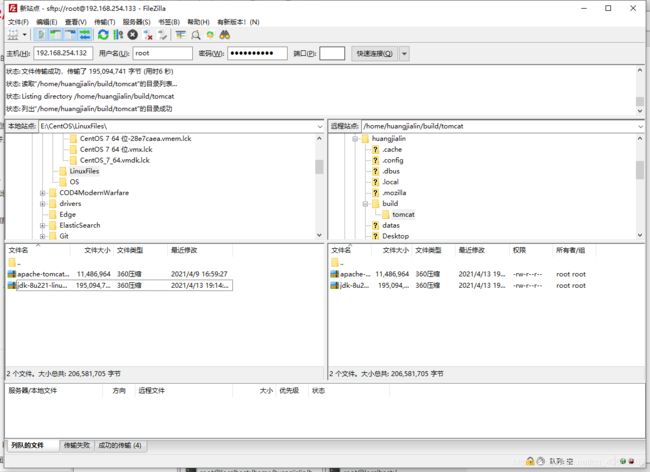

1. Prepare the image files

Put the tomcat and jdk archives in the current directory and write a README

[root@localhost /]# cd /home

[root@localhost home]# ls

ceshi dockerfile docker-test-volume huang.java huangjialin huang.txt mylinux mysql test.java testTmp

[root@localhost home]# cd huangjialin

[root@localhost huangjialin]# ls

datas Desktop Documents Downloads Music Pictures Public Templates Videos

# Create the directory

[root@localhost huangjialin]# mkdir -vp build/tomcat

mkdir: created directory ‘build’

mkdir: created directory ‘build/tomcat’

[root@localhost huangjialin]# ls

build datas Desktop Documents Downloads Music Pictures Public Templates Videos

[root@localhost huangjialin]# cd build

[root@localhost build]# cd tomcat

# Copy the two archives into this directory

[root@localhost tomcat]# ls

apache-tomcat-9.0.45.tar.gz jdk-8u221-linux-x64.tar.gz

2. Write the Dockerfile

Dockerfile is the official default name; docker build looks for it automatically, so -f is not needed.

[root@localhost tomcat]# ls

apache-tomcat-9.0.45.tar.gz jdk-8u221-linux-x64.tar.gz

[root@localhost tomcat]# touch readme.txt

[root@localhost tomcat]# vim Dockerfile

# Base image: centos

FROM centos

# Author

MAINTAINER huangjialin<2622046365@qq.com>

# Copy the README file

COPY readme.txt /usr/local/readme.txt

# Add the JDK; ADD extracts local tar archives automatically

ADD jdk-8u221-linux-x64.tar.gz /usr/local/

# Add Tomcat; ADD extracts local tar archives automatically

ADD apache-tomcat-9.0.45.tar.gz /usr/local/

# Install the vim command

RUN yum -y install vim

# Environment variable used as the working directory

ENV MYPATH /usr/local

WORKDIR $MYPATH

# JAVA_HOME environment variable

ENV JAVA_HOME /usr/local/jdk1.8.0_221

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

# Tomcat environment variables. Note that Tomcat reads CATALINA_BASE, not CATALINA_BASH; with the name below it falls back to CATALINA_HOME

ENV CATALINA_HOME /usr/local/apache-tomcat-9.0.45

ENV CATALINA_BASH /usr/local/apache-tomcat-9.0.45

# Extend PATH; entries are separated by :

ENV PATH $PATH:$JAVA_HOME/bin:$CATALINA_HOME/lib:$CATALINA_HOME/bin

# Port to expose

EXPOSE 8080

# Default command

CMD /usr/local/apache-tomcat-9.0.45/bin/startup.sh && tail -F /usr/local/apache-tomcat-9.0.45/logs/catalina.out

3. Build the image

# The Dockerfile uses the default name, so there is no need to pass -f

[root@localhost tomcat]# docker build -t diytomcat .

Output of a successful build:

[root@localhost tomcat]# docker build -t diytomcat .

Sending build context to Docker daemon 206.6MB

Step 1/15 : FROM centos

---> 300e315adb2f

Step 2/15 : MAINTAINER huangjialin<2622046365@qq.com>

---> Using cache

---> 53816b10cd26

Step 3/15 : COPY readme.txt /usr/local/readme.txt

---> b17277a321e4

Step 4/15 : ADD jdk-8u221-linux-x64.tar.gz /usr/local/

---> bf206090dd5b

Step 5/15 : ADD apache-tomcat-9.0.45.tar.gz /usr/local/

---> e7e5d7cb0c43

Step 6/15 : RUN yum -y install vim

---> Running in 7984f5e786a8

CentOS Linux 8 - AppStream 791 kB/s | 6.3 MB 00:08

CentOS Linux 8 - BaseOS 1.2 MB/s | 2.3 MB 00:01

CentOS Linux 8 - Extras 18 kB/s | 9.6 kB 00:00

Last metadata expiration check: 0:00:01 ago on Tue Apr 13 11:41:15 2021.

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

vim-enhanced x86_64 2:8.0.1763-15.el8 appstream 1.4 M

Installing dependencies:

gpm-libs x86_64 1.20.7-15.el8 appstream 39 k

vim-common x86_64 2:8.0.1763-15.el8 appstream 6.3 M

vim-filesystem noarch 2:8.0.1763-15.el8 appstream 48 k

which x86_64 2.21-12.el8 baseos 49 k

Transaction Summary

================================================================================

Install 5 Packages

Total download size: 7.8 M

Installed size: 30 M

Downloading Packages:

(1/5): gpm-libs-1.20.7-15.el8.x86_64.rpm 187 kB/s | 39 kB 00:00

(2/5): vim-filesystem-8.0.1763-15.el8.noarch.rp 402 kB/s | 48 kB 00:00

(3/5): which-2.21-12.el8.x86_64.rpm 106 kB/s | 49 kB 00:00

(4/5): vim-enhanced-8.0.1763-15.el8.x86_64.rpm 500 kB/s | 1.4 MB 00:02

(5/5): vim-common-8.0.1763-15.el8.x86_64.rpm 599 kB/s | 6.3 MB 00:10

--------------------------------------------------------------------------------

Total 672 kB/s | 7.8 MB 00:11

warning: /var/cache/dnf/appstream-02e86d1c976ab532/packages/gpm-libs-1.20.7-15.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID 8483c65d: NOKEY

CentOS Linux 8 - AppStream 107 kB/s | 1.6 kB 00:00

Importing GPG key 0x8483C65D:

Userid : "CentOS (CentOS Official Signing Key) "

Fingerprint: 99DB 70FA E1D7 CE22 7FB6 4882 05B5 55B3 8483 C65D

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : which-2.21-12.el8.x86_64 1/5

Installing : vim-filesystem-2:8.0.1763-15.el8.noarch 2/5

Installing : vim-common-2:8.0.1763-15.el8.x86_64 3/5

Installing : gpm-libs-1.20.7-15.el8.x86_64 4/5

Running scriptlet: gpm-libs-1.20.7-15.el8.x86_64 4/5

Installing : vim-enhanced-2:8.0.1763-15.el8.x86_64 5/5

Running scriptlet: vim-enhanced-2:8.0.1763-15.el8.x86_64 5/5

Running scriptlet: vim-common-2:8.0.1763-15.el8.x86_64 5/5

Verifying : gpm-libs-1.20.7-15.el8.x86_64 1/5

Verifying : vim-common-2:8.0.1763-15.el8.x86_64 2/5

Verifying : vim-enhanced-2:8.0.1763-15.el8.x86_64 3/5

Verifying : vim-filesystem-2:8.0.1763-15.el8.noarch 4/5

Verifying : which-2.21-12.el8.x86_64 5/5

Installed:

gpm-libs-1.20.7-15.el8.x86_64 vim-common-2:8.0.1763-15.el8.x86_64

vim-enhanced-2:8.0.1763-15.el8.x86_64 vim-filesystem-2:8.0.1763-15.el8.noarch

which-2.21-12.el8.x86_64

Complete!

Removing intermediate container 7984f5e786a8

---> 5e55934a1698

Step 7/15 : ENV MYPATH /usr/local

---> Running in 1c27b1556650

Removing intermediate container 1c27b1556650

---> bf7676c461b7

Step 8/15 : WORKDIR $MYPATH

---> Running in ea7b376828ee

Removing intermediate container ea7b376828ee

---> 829e13be0dd2

Step 9/15 : ENV JAVA_HOME /usr/local/jdk1.8.0_221

---> Running in e870347689db

Removing intermediate container e870347689db

---> 9addfa9f2af9

Step 10/15 : ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

---> Running in 35be0d0d1e87

Removing intermediate container 35be0d0d1e87

---> 2b8399db457f

Step 11/15 : ENV CATALINA_HOME /usr/local/apache-tomcat-9.0.45

---> Running in 6268510dbb10

Removing intermediate container 6268510dbb10

---> f587d0e0d7b1

Step 12/15 : ENV CATALINA_BASH /usr/local/apache-tomcat-9.0.45

---> Running in 584842f44cb2

Removing intermediate container 584842f44cb2

---> 9352b4a38800

Step 13/15 : ENV PATH $PATH:$JAVA_HOME/bin:$CATALINA_HOME/lib:$CATALINA_HOME/bin

---> Running in 3882c79944b1

Removing intermediate container 3882c79944b1

---> 2189e809aa16

Step 14/15 : EXPOSE 8080

---> Running in 2fdcc0bb062d

Removing intermediate container 2fdcc0bb062d

---> 2ada2912e565

Step 15/15 : CMD /usr/local/apache-tomcat-9.0.45/bin/startup.sh && tail -F /usr/local/apache-tomcat-9.0.45/logs/catalina.out

---> Running in b5253d274a87

Removing intermediate container b5253d274a87

---> 62c96b0fe815

Successfully built 62c96b0fe815

Successfully tagged diytomcat:latest

4. Run the image

# -d: run in the background  -p: publish a port  --name: container name  -v: bind a host path

[root@localhost tomcat]# docker run -d -p 9090:8080 --name diytomcat -v /home/huangjialin/build/tomcat/test:/usr/local/apache-tomcat-9.0.45/webapps/test -v /home/huangjialin/build/tomcat/tomcatlogs/:/usr/local/apache-tomcat-9.0.45/logs diytomcat

eefdce2320da80a26d04951fa62ead9155260c9ec2fc7195fb5d78332e70b20e

[root@localhost tomcat]# docker exec -it eefdce2320da80a2 /bin/bash

[root@eefdce2320da local]# ls

apache-tomcat-9.0.45 bin etc games include jdk1.8.0_221 lib lib64 libexec readme.txt sbin share src

[root@eefdce2320da local]# pwd

/usr/local

[root@eefdce2320da local]# ls -l

total 0

drwxr-xr-x. 1 root root 45 Apr 13 11:40 apache-tomcat-9.0.45

drwxr-xr-x. 2 root root 6 Nov 3 15:22 bin

drwxr-xr-x. 2 root root 6 Nov 3 15:22 etc

drwxr-xr-x. 2 root root 6 Nov 3 15:22 games

drwxr-xr-x. 2 root root 6 Nov 3 15:22 include

drwxr-xr-x. 7 10 143 245 Jul 4 2019 jdk1.8.0_221

drwxr-xr-x. 2 root root 6 Nov 3 15:22 lib

drwxr-xr-x. 3 root root 17 Dec 4 17:37 lib64

drwxr-xr-x. 2 root root 6 Nov 3 15:22 libexec

-rw-r--r--. 1 root root 0 Apr 13 11:24 readme.txt

drwxr-xr-x. 2 root root 6 Nov 3 15:22 sbin

drwxr-xr-x. 5 root root 49 Dec 4 17:37 share

drwxr-xr-x. 2 root root 6 Nov 3 15:22 src

[root@eefdce2320da local]# cd apache-tomcat-9.0.45/

[root@eefdce2320da apache-tomcat-9.0.45]# ls

BUILDING.txt CONTRIBUTING.md LICENSE NOTICE README.md RELEASE-NOTES RUNNING.txt bin conf lib logs temp webapps work

[root@eefdce2320da apache-tomcat-9.0.45]# ls -l

total 128

-rw-r-----. 1 root root 18984 Mar 30 10:29 BUILDING.txt

-rw-r-----. 1 root root 5587 Mar 30 10:29 CONTRIBUTING.md

-rw-r-----. 1 root root 57092 Mar 30 10:29 LICENSE

-rw-r-----. 1 root root 2333 Mar 30 10:29 NOTICE

-rw-r-----. 1 root root 3257 Mar 30 10:29 README.md

-rw-r-----. 1 root root 6898 Mar 30 10:29 RELEASE-NOTES

-rw-r-----. 1 root root 16507 Mar 30 10:29 RUNNING.txt

drwxr-x---. 2 root root 4096 Mar 30 10:29 bin

drwx------. 1 root root 22 Apr 13 12:34 conf

drwxr-x---. 2 root root 4096 Mar 30 10:29 lib

drwxr-xr-x. 2 root root 197 Apr 13 12:34 logs

drwxr-x---. 2 root root 30 Mar 30 10:29 temp

drwxr-x---. 1 root root 18 Apr 13 12:34 webapps

drwxr-x---. 1 root root 22 Apr 13 12:34 work

# In another terminal window

[root@localhost tomcat]# curl localhost:9090

&lt;!DOCTYPE html&gt;

&lt;html lang="en"&gt;

&lt;head&gt;

&lt;meta charset="UTF-8" /&gt;

&lt;title&gt;Apache Tomcat/9.0.45&lt;/title&gt;

&lt;link href="favicon.ico" rel="icon" type="image/x-icon" /&gt;

&lt;link href="tomcat.css" rel="stylesheet" type="text/css" /&gt;

&lt;/head&gt;

...

</code></pre>
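<p>The long <code>docker run</code> line from step 4 is easier to review when the host and container paths are kept in variables. The sketch below only prints the command. The helper name <code>diytomcat_cmd</code> and the variable names are assumptions made for illustration; the paths, ports, and names are the ones used in step 4.</p>

```shell
#!/bin/sh
# Host build directory and Tomcat home, as used in step 4.
HOST_BASE=/home/huangjialin/build/tomcat
TOMCAT_HOME=/usr/local/apache-tomcat-9.0.45

# diytomcat_cmd is a hypothetical helper: it prints the docker run command
# instead of executing it, so the two bind mounts can be checked first.
# $1 is the host port (defaults to 9090).
diytomcat_cmd() {
  host_port=${1:-9090}
  printf 'docker run -d -p %s:8080 --name diytomcat' "$host_port"
  printf ' -v %s/test:%s/webapps/test' "$HOST_BASE" "$TOMCAT_HOME"
  printf ' -v %s/tomcatlogs:%s/logs' "$HOST_BASE" "$TOMCAT_HOME"
  printf ' diytomcat\n'
}
```

<p>Calling <code>diytomcat_cmd</code> shows the command; piping the result to <code>sh</code> would run it.</p>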

<h4>5. Test access</h4>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it your-container-id /bin/bash</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># curl localhost:9090</span>

</code></pre>
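<p>Tomcat needs a few seconds to start, so a <code>curl</code> issued right after <code>docker run</code> may fail. One possible way to handle this is to poll until the port answers. The helper name <code>wait_for_http</code> and the retry count are assumptions for illustration, not part of the original walkthrough.</p>

```shell
#!/bin/sh
# wait_for_http is a hypothetical helper: poll a URL until curl succeeds
# or the attempts run out. Returns 0 on success, 1 on timeout.
# $1 is the URL, $2 the number of attempts (defaults to 10).
wait_for_http() {
  url=$1
  attempts=${2:-10}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s: silent, -f: treat HTTP errors as failure, -o /dev/null: drop the body
    if curl -sf -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

<p>For the container above, <code>wait_for_http http://localhost:9090 30</code> would wait up to roughly 30 seconds.</p>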

<p><a href="http://img.e-com-net.com/image/info8/b887794666b4403a8eebaa369d887424.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/b887794666b4403a8eebaa369d887424.jpg" alt="跟着狂神学Docker(精髓篇)_第26张图片" width="650" height="412" style="border:1px solid black;"></a></p>

<h4>6. Deploy the project</h4>

<p>(Because the volume is mounted, we can write the project on the host and it is published directly.)</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># ls</span>

apache<span class="token operator">-</span>tomcat<span class="token operator">-</span>9<span class="token punctuation">.</span>0<span class="token punctuation">.</span>45<span class="token punctuation">.</span>tar<span class="token punctuation">.</span>gz Dockerfile jdk<span class="token operator">-</span>8u221<span class="token operator">-</span>linux<span class="token operator">-</span>x64<span class="token punctuation">.</span>tar<span class="token punctuation">.</span>gz readme<span class="token punctuation">.</span>txt test tomcatlogs

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># cd test/</span>

<span class="token namespace">[root@localhost test]</span><span class="token comment"># pwd</span>

<span class="token operator">/</span>home<span class="token operator">/</span>huangjialin<span class="token operator">/</span>build<span class="token operator">/</span>tomcat<span class="token operator">/</span>test

<span class="token namespace">[root@localhost test]</span><span class="token comment"># mkdir WEB-INF</span>

<span class="token namespace">[root@localhost test]</span><span class="token comment"># ls</span>

WEB<span class="token operator">-</span>INF

<span class="token namespace">[root@localhost test]</span><span class="token comment"># cd WEB-INF/</span>

<span class="token namespace">[root@localhost WEB-INF]</span><span class="token comment"># vim web.xml</span>

<span class="token namespace">[root@localhost WEB-INF]</span><span class="token comment"># cd ../</span>

<span class="token namespace">[root@localhost test]</span><span class="token comment"># vim index.jsp</span>

</code></pre>

<p>Reference: https://segmentfault.com/a/1190000011404088</p>

<blockquote>

<p>web.xml</p>

</blockquote>

<pre><code class="prism language-powershell"><?xml version=<span class="token string">"1.0"</span> encoding=<span class="token string">"UTF-8"</span>?>

<web<span class="token operator">-</span>app xmlns=<span class="token string">"http://java.sun.com/xml/ns/javaee"</span>

xmlns:xsi=<span class="token string">"http://www.w3.org/2001/XMLSchema-instance"</span>

xsi:schemaLocation=<span class="token string">"http://java.sun.com/xml/ns/javaee

http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"</span>

version=<span class="token string">"3.0"</span>>

<<span class="token operator">/</span>web<span class="token operator">-</span>app>

</code></pre>

<p>Reference: https://www.runoob.com/jsp/jsp-syntax.html</p>

<blockquote>

<p>index.jsp</p>

</blockquote>

<pre><code class="prism language-powershell"><<span class="token operator">%</span>@ page language=<span class="token string">"java"</span> contentType=<span class="token string">"text/html; charset=UTF-8"</span>

pageEncoding=<span class="token string">"UTF-8"</span><span class="token operator">%</span>>

<<span class="token operator">!</span>DOCTYPE html>

<html>

<head>

<meta charset=<span class="token string">"utf-8"</span>>

<title>huangjialin<span class="token operator">-</span>菜鸟教程<span class="token punctuation">(</span>runoob<span class="token punctuation">.</span>com<span class="token punctuation">)</span><<span class="token operator">/</span>title>

<<span class="token operator">/</span>head>

<body>

Hello World<span class="token operator">!</span><br<span class="token operator">/</span>>

<<span class="token operator">%</span>

System<span class="token punctuation">.</span>out<span class="token punctuation">.</span>println<span class="token punctuation">(</span><span class="token string">"Your IP address: "</span> <span class="token operator">+</span> request<span class="token punctuation">.</span>getRemoteAddr<span class="token punctuation">(</span><span class="token punctuation">)</span><span class="token punctuation">)</span><span class="token punctuation">;</span>

<span class="token operator">%</span>>

<<span class="token operator">/</span>body>

<<span class="token operator">/</span>html>

</code></pre>
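<p>The manual <code>mkdir</code> and <code>vim</code> steps above can also be scripted. The following is a minimal sketch, assuming a helper named <code>make_webapp</code> (hypothetical) that writes a trimmed version of the two files into the host directory mounted at <code>webapps/test</code>. The XML declaration here uses version 1.0 and the 3.0 schema; both are choices made for this sketch.</p>

```shell
#!/bin/sh
# make_webapp is a hypothetical helper. $1 is the host directory that is
# bind-mounted to Tomcat's webapps/test directory.
make_webapp() {
  app_dir=$1
  mkdir -p "$app_dir/WEB-INF" || return 1

  # Minimal deployment descriptor (Servlet 3.0 schema, assumed for this sketch).
  cat > "$app_dir/WEB-INF/web.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee
                             http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"
         version="3.0">
</web-app>
EOF

  # Trimmed-down page: just enough to confirm the deployment works.
  cat > "$app_dir/index.jsp" <<'EOF'
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%>
<!DOCTYPE html>
<html>
<body>
Hello World!<br/>
</body>
</html>
EOF
}
```

<p>With the mount from step 4, <code>make_webapp /home/huangjialin/build/tomcat/test</code> would populate the directory that the container sees as <code>webapps/test</code>.</p>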

<p><a href="http://img.e-com-net.com/image/info8/9d1f5059fe724121aad2dcced7ccf25d.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/9d1f5059fe724121aad2dcced7ccf25d.jpg" alt="跟着狂神学Docker(精髓篇)_第27张图片" width="650" height="202" style="border:1px solid black;"></a><br> Result: the project is deployed and can be accessed directly.</p>

<p>Our workflow from here on: you need to master writing a <code>Dockerfile</code>, because from now on everything is published and run as a <code>docker</code> image.</p>

<h3>Publishing your own image</h3>

<h4>Publishing to Docker Hub</h4>

<p>1. Address: https://hub.docker.com/</p>

<p>2. Make sure the account can log in</p>

<p>3. Log in</p>

<p><a href="http://img.e-com-net.com/image/info8/6fe87273bd0240e8ad4f86fdf898e038.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/6fe87273bd0240e8ad4f86fdf898e038.jpg" alt="跟着狂神学Docker(精髓篇)_第28张图片" width="650" height="341" style="border:1px solid black;"></a><br> <a href="http://img.e-com-net.com/image/info8/414a63bc6f664d62ac746e26af041e55.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/414a63bc6f664d62ac746e26af041e55.jpg" alt="跟着狂神学Docker(精髓篇)_第29张图片" width="650" height="369" style="border:1px solid black;"></a></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker login --help</span>

Usage: docker login <span class="token namespace">[OPTIONS]</span> <span class="token namespace">[SERVER]</span>

Log in to a Docker registry<span class="token punctuation">.</span>

<span class="token keyword">If</span> no server is specified<span class="token punctuation">,</span> the default is defined by the daemon<span class="token punctuation">.</span>

Options:

<span class="token operator">-</span>p<span class="token punctuation">,</span> <span class="token operator">--</span>password string Password

<span class="token operator">--</span>password<span class="token operator">-</span>stdin Take the password <span class="token keyword">from</span> stdin

<span class="token operator">-</span>u<span class="token punctuation">,</span> <span class="token operator">--</span>username string Username

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker login -u your-username -p your-password</span>

</code></pre>
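<p>Passing the password with <code>-p</code> leaves it in the shell history. The help output above also lists <code>--password-stdin</code>, which reads the password from standard input instead. A minimal sketch, assuming a helper named <code>hub_login</code> and a password file chosen by the reader (both hypothetical):</p>

```shell
#!/bin/sh
# hub_login is a hypothetical helper. $1 is the Docker Hub username and
# $2 is a file that contains only the password or access token.
hub_login() {
  user=$1
  secret_file=$2
  if [ ! -r "$secret_file" ]; then
    echo "cannot read $secret_file" >&2
    return 1
  fi
  # --password-stdin appears in the docker login --help output above.
  docker login -u "$user" --password-stdin < "$secret_file"
}
```

<p>The file should be readable only by its owner, for example after <code>chmod 600</code>.</p>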

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker login -u yanghuihui520</span>

Password:

WARNING<span class="token operator">!</span> Your password will be stored unencrypted in <span class="token operator">/</span>root<span class="token operator">/</span><span class="token punctuation">.</span>docker<span class="token operator">/</span>config<span class="token punctuation">.</span>json<span class="token punctuation">.</span>

Configure a credential helper to remove this warning<span class="token punctuation">.</span> See

https:<span class="token operator">/</span><span class="token operator">/</span>docs<span class="token punctuation">.</span>docker<span class="token punctuation">.</span>com<span class="token operator">/</span>engine<span class="token operator">/</span>reference<span class="token operator">/</span>commandline<span class="token operator">/</span>login<span class="token operator">/</span><span class="token comment">#credentials-store</span>

Login Succeeded

</code></pre>

<p><a href="http://img.e-com-net.com/image/info8/372757f072b640bc96703032998d3d58.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/372757f072b640bc96703032998d3d58.jpg" alt="跟着狂神学Docker(精髓篇)_第30张图片" width="650" height="128" style="border:1px solid black;"></a><br> 4. Push the image<br> <a href="http://img.e-com-net.com/image/info8/9953bab29fbd4c3c97c3b746250a2c84.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/9953bab29fbd4c3c97c3b746250a2c84.jpg" alt="跟着狂神学Docker(精髓篇)_第31张图片" width="650" height="162" style="border:1px solid black;"></a></p>

<pre><code class="prism language-powershell"><span class="token comment"># The push is rejected: without a prefix, the image is pushed to the official library namespace by default</span>

<span class="token comment"># Two ways to fix this:</span>

<span class="token comment"># Option 1: include your Docker Hub username in the tag at build time, then push to your own repository</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker build -t kuangshen/mytomcat:0.1 .</span>

<span class="token comment"># Option 2: retag the existing image with docker tag (it takes an image ID, not a container ID)</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker tag image-id kuangshen/mytomcat:1.0</span>

<span class="token comment"># Then push again</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker push kuangshen/mytomcat:1.0</span>

</code></pre>

<p><mark>The repository prefix must match your Docker Hub account name.</mark></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker tag 62c96b0fe815 yanghuihui520/tomcat:1.0</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker images</span>

REPOSITORY TAG IMAGE ID CREATED SIZE

huangjialin<span class="token operator">/</span>tomcat 1<span class="token punctuation">.</span>0 62c96b0fe815 3 hours ago 690MB

...

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker push yanghuihui520/tomcat:1.0</span>

The push refers to repository <span class="token namespace">[docker.io/yanghuihui520/tomcat]</span>

a7b8bb209ca2: Pushing <span class="token punctuation">[</span>==========> <span class="token punctuation">]</span> 12<span class="token punctuation">.</span>47MB<span class="token operator">/</span>58<span class="token punctuation">.</span>05MB

5ba6c0e6c8ff: Pushing <span class="token punctuation">[</span>==========> <span class="token punctuation">]</span> 3<span class="token punctuation">.</span>186MB<span class="token operator">/</span>15<span class="token punctuation">.</span>9MB

b3f3595d4705: Pushing <span class="token punctuation">[</span>> <span class="token punctuation">]</span> 3<span class="token punctuation">.</span>277MB<span class="token operator">/</span>406<span class="token punctuation">.</span>7MB

50c4c2648dca: Layer already exists

2653d992f4ef: Pushing <span class="token punctuation">[</span>=> <span class="token punctuation">]</span> 7<span class="token punctuation">.</span>676MB<span class="token operator">/</span>209<span class="token punctuation">.</span>3MB

</code></pre>
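<p>Since a push only succeeds when the prefix matches the logged-in account, it can help to check the reference before pushing. The sketch below handles plain Docker Hub style references (<code>user/repo:tag</code>) only, not references that start with a registry host. The helper names <code>repo_owner</code> and <code>push_allowed</code> are assumptions for illustration.</p>

```shell
#!/bin/sh
# repo_owner prints the namespace of a Docker Hub style reference.
# A reference without a slash falls into the official "library" namespace.
repo_owner() {
  case $1 in
    */*) printf '%s\n' "${1%%/*}" ;;
    *)   printf 'library\n' ;;
  esac
}

# push_allowed succeeds only when the namespace of reference $1
# equals the account name $2.
push_allowed() {
  [ "$(repo_owner "$1")" = "$2" ]
}
```

<p>For example, <code>push_allowed yanghuihui520/tomcat:1.0 yanghuihui520 &amp;&amp; docker push yanghuihui520/tomcat:1.0</code> pushes only when the prefix and the account agree.</p>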

<p><a href="http://img.e-com-net.com/image/info8/c7325d138a274104aa6f9b406613af6d.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/c7325d138a274104aa6f9b406613af6d.jpg" alt="在这里插入图片描述" width="650" height="96"></a></p>

<h4>Publishing to Alibaba Cloud Container Registry</h4>

<p>The official console documents this in detail: https://cr.console.aliyun.com/repository/</p>

<pre><code class="prism language-powershell">$ sudo docker login <span class="token operator">--</span>username=zchengx registry<span class="token punctuation">.</span>cn<span class="token operator">-</span>shenzhen<span class="token punctuation">.</span>aliyuncs<span class="token punctuation">.</span>com

$ sudo docker tag <span class="token namespace">[ImageId]</span> registry<span class="token punctuation">.</span>cn<span class="token operator">-</span>shenzhen<span class="token punctuation">.</span>aliyuncs<span class="token punctuation">.</span>com<span class="token operator">/</span>dsadxzc<span class="token operator">/</span>cheng:<span class="token punctuation">[</span>image-version<span class="token punctuation">]</span>

<span class="token comment"># Replace the image ID and the version, for example:</span>

sudo docker tag a5ef1f32aaae registry<span class="token punctuation">.</span>cn<span class="token operator">-</span>shenzhen<span class="token punctuation">.</span>aliyuncs<span class="token punctuation">.</span>com<span class="token operator">/</span>dsadxzc<span class="token operator">/</span>cheng:1<span class="token punctuation">.</span>0

<span class="token comment"># Replace the version</span>

$ sudo docker push registry<span class="token punctuation">.</span>cn<span class="token operator">-</span>shenzhen<span class="token punctuation">.</span>aliyuncs<span class="token punctuation">.</span>com<span class="token operator">/</span>dsadxzc<span class="token operator">/</span>cheng:<span class="token punctuation">[</span>image-version<span class="token punctuation">]</span>

</code></pre>
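<p>The tag and push steps for a private registry differ from Docker Hub only in the registry host that leads the reference. A minimal sketch, assuming two hypothetical helpers, <code>aliyun_ref</code> and <code>publish_image</code>; the registry host, namespace, and repository in the usage note are the ones from the commands above.</p>

```shell
#!/bin/sh
# aliyun_ref composes registry/namespace/repository:version.
# $1 registry host, $2 namespace, $3 repository, $4 version
aliyun_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# publish_image tags local image $1 with reference $2 and pushes it.
# The push is skipped if tagging fails.
publish_image() {
  image_id=$1
  ref=$2
  docker tag "$image_id" "$ref" && docker push "$ref"
}
```

<p>Usage could look like <code>publish_image a5ef1f32aaae "$(aliyun_ref registry.cn-shenzhen.aliyuncs.com dsadxzc cheng 1.0)"</code>, run after <code>docker login</code> against the same registry.</p>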

<h3>Summary</h3>

<p><a href="http://img.e-com-net.com/image/info8/a5b82f5e94cd43cf9a5d3ce895306b8d.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/a5b82f5e94cd43cf9a5d3ce895306b8d.jpg" alt="跟着狂神学Docker(精髓篇)_第32张图片" width="650" height="526" style="border:1px solid black;"></a></p>

<h2>Docker Networking</h2>

<h3>Understanding docker0</h3>

<p>Before starting, clear out the <code>docker</code> images and containers from the earlier sections</p>

<pre><code class="prism language-powershell"><span class="token comment"># Remove all containers</span>

$ docker <span class="token function">rm</span> <span class="token operator">-</span>f $<span class="token punctuation">(</span>docker <span class="token function">ps</span> <span class="token operator">-</span>aq<span class="token punctuation">)</span>

<span class="token comment"># Remove all images</span>

$ docker rmi <span class="token operator">-</span>f $<span class="token punctuation">(</span>docker images <span class="token operator">-</span>aq<span class="token punctuation">)</span>

</code></pre>
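<p>When no containers exist, <code>docker ps -aq</code> prints nothing and <code>docker rm -f</code> is then called without arguments, which reports an error. A guarded variant is sketched below; the helper name <code>remove_all_containers</code> is an assumption for illustration.</p>

```shell
#!/bin/sh
# remove_all_containers is a hypothetical helper: force-remove every
# container, but do nothing when the list of container IDs is empty.
remove_all_containers() {
  ids=$(docker ps -aq)
  if [ -z "$ids" ]; then
    echo "no containers to remove"
    return 0
  fi
  # $ids is intentionally unquoted so each ID becomes its own argument.
  docker rm -f $ids
}
```

<p>The same guard works for images by swapping in <code>docker images -aq</code> and <code>docker rmi -f</code>.</p>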

<p>Test<br> <a href="http://img.e-com-net.com/image/info8/3f43304420a3490887867e1f4baed5e8.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/3f43304420a3490887867e1f4baed5e8.jpg" alt="跟着狂神学Docker(精髓篇)_第33张图片" width="650" height="165" style="border:1px solid black;"></a></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># ip addr</span>

1: lo: <LOOPBACK<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1<span class="token operator">/</span>128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc pfifo_fast state UP <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 00:0c:29:1a:80:de brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>133<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>255 scope global noprefixroute dynamic ens33

valid_lft 1365sec preferred_lft 1365sec

inet6 fe80::78ba:483e:9794:f6c2<span class="token operator">/</span>64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: virbr0: <NO<span class="token operator">-</span>CARRIER<span class="token punctuation">,</span>BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP> mtu 1500 qdisc noqueue state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>1<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0<span class="token operator">-</span>nic: <BROADCAST<span class="token punctuation">,</span>MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

5: docker0: <NO<span class="token operator">-</span>CARRIER<span class="token punctuation">,</span>BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP> mtu 1500 qdisc noqueue state DOWN <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:2a:09:31:67 brd ff:ff:ff:ff:ff:ff

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:2aff:fe09:3167<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

</code></pre>
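<p>The output shows <code>docker0</code> at <code>172.17.0.1/16</code>. A /16 prefix leaves 16 host bits, so the subnet holds 2^16 addresses, of which the network and broadcast addresses are not assignable. The arithmetic can be checked in the shell; the helper name <code>usable_hosts</code> is an assumption for illustration.</p>

```shell
#!/bin/sh
# usable_hosts prints the number of assignable IPv4 addresses for a
# prefix length $1: 2^(32 - prefix) minus network and broadcast address.
# Intended for prefix lengths from 1 to 30.
usable_hosts() {
  prefix=$1
  echo $(( (1 << (32 - $prefix)) - 2 ))
}
```

<p><code>usable_hosts 16</code> gives 65534 for the default bridge subnet (one of those addresses is taken by <code>docker0</code> itself), while <code>usable_hosts 24</code> gives 254.</p>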

<h3>Three networks</h3>

<p>Question: how does Docker handle network access for containers?</p>

<p><a href="http://img.e-com-net.com/image/info8/5f69978d6028449eb252b23e5c77cd1b.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/5f69978d6028449eb252b23e5c77cd1b.jpg" alt="跟着狂神学Docker(精髓篇)_第34张图片" width="650" height="101" style="border:1px solid black;"></a></p>

<pre><code class="prism language-powershell"><span class="token comment"># Test: run a tomcat container</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker run -d -P --name tomcat01 tomcat</span>

<span class="token comment"># Check the container's internal network address</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it container-id ip addr</span>

<span class="token comment"># On startup the container gets an eth0@if37 interface with an IP address assigned by Docker</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat01 ip addr</span>

1: lo: <LOOPBACK<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>8 scope host lo

valid_lft forever preferred_lft forever

36: eth0@if37: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:<span class="token function">ac</span>:11:00:02 brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 0

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global eth0

valid_lft forever preferred_lft forever

<span class="token comment"># Question: can the Linux host ping the container? Yes. Can the container ping the outside world? Yes.</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># ping 172.17.0.2</span>

PING 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2<span class="token punctuation">)</span> 56<span class="token punctuation">(</span>84<span class="token punctuation">)</span> bytes of <span class="token keyword">data</span><span class="token punctuation">.</span>

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=1 ttl=64 time=19<span class="token punctuation">.</span>5 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=2 ttl=64 time=0<span class="token punctuation">.</span>105 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=3 ttl=64 time=0<span class="token punctuation">.</span>051 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=4 ttl=64 time=0<span class="token punctuation">.</span>071 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=5 ttl=64 time=0<span class="token punctuation">.</span>124 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=6 ttl=64 time=0<span class="token punctuation">.</span>052 ms

...

</code></pre>
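<p>Instead of reading the address out of the <code>ip addr</code> output by eye, it can be queried with <code>docker inspect</code> and a Go template. The sketch below assumes the container is attached to a single network (with several networks the addresses would be printed back to back). The helper names <code>container_ip</code> and <code>ping_container</code> are assumptions for illustration.</p>

```shell
#!/bin/sh
# container_ip prints the IP address of container $1 (name or ID).
container_ip() {
  docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# ping_container sends three pings from the host to container $1.
ping_container() {
  ip=$(container_ip "$1") || return 1
  [ -n "$ip" ] || return 1
  ping -c 3 "$ip"
}
```

<p>With the container started above, <code>ping_container tomcat01</code> repeats the manual ping test without hard-coding the address.</p>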

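<h3>How it works</h3>

<p>1. Every time we start a <code>docker</code> container, <code>docker</code> assigns it an <code>ip</code>. As soon as <code>docker</code> is installed, the host gets a <code>docker0</code> interface that works in bridge mode, and the underlying technique is <code>veth-pair</code>.</p>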

<p>https://www.cnblogs.com/bakari/p/10613710.html</p>

<p>Run ip addr again to test</p>

<pre><code class="prism language-powershell"><span class="token comment"># One more network interface has appeared</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># ip addr</span>

1: lo: <LOOPBACK<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1<span class="token operator">/</span>128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc pfifo_fast state UP <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 00:0c:29:1a:80:de brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>133<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>255 scope global noprefixroute dynamic ens33

valid_lft 1499sec preferred_lft 1499sec

inet6 fe80::78ba:483e:9794:f6c2<span class="token operator">/</span>64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: virbr0: <NO<span class="token operator">-</span>CARRIER<span class="token punctuation">,</span>BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP> mtu 1500 qdisc noqueue state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>1<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0<span class="token operator">-</span>nic: <BROADCAST<span class="token punctuation">,</span>MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

5: docker0: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:2a:09:31:67 brd ff:ff:ff:ff:ff:ff

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:2aff:fe09:3167<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

37: veth8f89171@if36: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether f2:72:f8:7d:6e:7b brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 0

inet6 fe80::f072:f8ff:fe7d:6e7b<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

</code></pre>
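<p>The two ends of a veth pair can be matched by their interface indexes: the container shows <code>36: eth0@if37</code>, and the host shows <code>37: veth8f89171@if36</code>. A small filter can pull these numbers out of <code>ip addr</code> output. The helper name <code>veth_peers</code> and its output format are assumptions for illustration.</p>

```shell
#!/bin/sh
# veth_peers reads `ip addr` output on stdin and, for every interface
# named like NAME@ifN, prints its name, its own index, and the peer index.
veth_peers() {
  awk '$2 ~ /@if[0-9]+:$/ {
    idx = $1
    sub(/:$/, "", idx)          # "37:" becomes "37"
    split($2, parts, "@if")     # "veth8f89171@if36:" splits at "@if"
    peer = parts[2]
    sub(/:$/, "", peer)         # "36:" becomes "36"
    print parts[1], "index=" idx, "peer=" peer
  }'
}
```

<p>Running <code>ip addr | veth_peers</code> on the host and <code>docker exec tomcat01 ip addr | veth_peers</code> against the container lets the two sides be compared: each side's <code>peer</code> should equal the other side's <code>index</code>.</p>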

<p><a href="http://img.e-com-net.com/image/info8/a06279b12ec441a4b80f49a0a031da09.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/a06279b12ec441a4b80f49a0a031da09.jpg" alt="跟着狂神学Docker(精髓篇)_第35张图片" width="650" height="309" style="border:1px solid black;"></a><br> 2. Start another container and test again: one more pair of network interfaces appears</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker run -d -P --name tomcat02 tomcat</span>

781895f439c26dfd5fd489bf1316ab52d0d747d7a5c4f214656ea8ab9bc7d760

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># ip addr</span>

1: lo: <LOOPBACK<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1<span class="token operator">/</span>128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc pfifo_fast state UP <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 00:0c:29:1a:80:de brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>133<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>254<span class="token punctuation">.</span>255 scope global noprefixroute dynamic ens33

valid_lft 1299sec preferred_lft 1299sec

inet6 fe80::78ba:483e:9794:f6c2<span class="token operator">/</span>64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: virbr0: <NO<span class="token operator">-</span>CARRIER<span class="token punctuation">,</span>BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP> mtu 1500 qdisc noqueue state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

inet 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>1<span class="token operator">/</span>24 brd 192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>122<span class="token punctuation">.</span>255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0<span class="token operator">-</span>nic: <BROADCAST<span class="token punctuation">,</span>MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>ether 52:54:00:a2:05:5a brd ff:ff:ff:ff:ff:ff

5: docker0: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:2a:09:31:67 brd ff:ff:ff:ff:ff:ff

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:2aff:fe09:3167<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

37: veth8f89171@if36: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether f2:72:f8:7d:6e:7b brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 0

inet6 fe80::f072:f8ff:fe7d:6e7b<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

39: veth701a9f4@if38: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 0a:c9:02:a1:57:a9 brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 1

inet6 fe80::8c9:2ff:fea1:57a9<span class="token operator">/</span>64 scope link

valid_lft forever preferred_lft forever

<span class="token comment"># View the address of the tomcat02 container</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat02 ip addr</span>

1: lo: <LOOPBACK<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN <span class="token function">group</span> default qlen 1000

link<span class="token operator">/</span>loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127<span class="token punctuation">.</span>0<span class="token punctuation">.</span>0<span class="token punctuation">.</span>1<span class="token operator">/</span>8 scope host lo

valid_lft forever preferred_lft forever

38: eth0@if39: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:<span class="token function">ac</span>:11:00:03 brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 0

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global eth0

valid_lft forever preferred_lft forever

</code></pre>

<p><a href="http://img.e-com-net.com/image/info8/f31a3ca1adad4f77b0cd385afe08afff.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/f31a3ca1adad4f77b0cd385afe08afff.jpg" alt="跟着狂神学Docker(精髓篇)_第36张图片" width="650" height="229" style="border:1px solid black;"></a></p>

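<pre><code class="prism language-powershell"><span class="token comment"># Notice that the interfaces a container brings with it always come in pairs</span>

<span class="token comment"># A veth pair is a pair of virtual device interfaces that always appear together: one end attaches to the protocol stack, and the two ends are connected to each other</span>

<span class="token comment"># Because of this property, a veth pair acts as a bridge that connects virtual network devices</span>

<span class="token comment"># OpenStack, connections between Docker containers, and OVS connections all use veth-pair technology</span>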

</code></pre>
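<p>The pairing can be read straight off the <code>ip addr</code> output above: each header line has the form <code>INDEX: NAME@ifPEER</code>, and the two ends of a veth pair point at each other's index. The following is a minimal sketch of that check. The function names <code>iface_index</code>, <code>peer_index</code> and <code>is_veth_pair</code> are hypothetical helpers written for this illustration, not part of Docker or iproute2, and the sample lines are copied from the output shown earlier.</p>

```shell
# Hypothetical helpers (not Docker or iproute2 commands): they parse the
# header line of an `ip addr` entry such as "38: eth0@if39: <...> mtu 1500".

# Print the interface's own index (the number before the first colon).
iface_index() {
  printf '%s\n' "$1" | sed -n 's/^\([0-9][0-9]*\):.*/\1/p'
}

# Print the peer index (the number that follows "@if").
peer_index() {
  printf '%s\n' "$1" | sed -n 's/^[0-9][0-9]*: [^@:]*@if\([0-9][0-9]*\):.*/\1/p'
}

# Succeed only when the two header lines reference each other's index.
is_veth_pair() {
  [ -n "$(peer_index "$1")" ] &&
    [ "$(iface_index "$1")" = "$(peer_index "$2")" ] &&
    [ "$(peer_index "$1")" = "$(iface_index "$2")" ]
}

# Sample header lines taken from the tomcat02 container and from the host.
container_side='38: eth0@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'
host_side='39: veth701a9f4@if38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'

if is_veth_pair "$container_side" "$host_side"; then
  echo "container index $(iface_index "$container_side") pairs with host index $(iface_index "$host_side")"
fi
```

<p>With the sample lines, the container's <code>eth0</code> (index 38) and the host's <code>veth701a9f4</code> (index 39) reference each other, so they are reported as one pair. The host's <code>veth8f89171@if36</code> would not match, because it belongs to the other container.</p>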

<p>3. Let's test whether tomcat01 and tomcat02 can ping each other.</p>

<pre><code class="prism language-powershell"><span class="token comment"># Get the IP of tomcat01: 172.17.0.2</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat01 ip addr </span>

550: eth0@if551: <BROADCAST<span class="token punctuation">,</span>MULTICAST<span class="token punctuation">,</span>UP<span class="token punctuation">,</span>LOWER_UP> mtu 1500 qdisc noqueue state UP <span class="token function">group</span> default

link<span class="token operator">/</span>ether 02:42:<span class="token function">ac</span>:11:00:02 brd ff:ff:ff:ff:ff:ff link<span class="token operator">-</span>netnsid 0

inet 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2<span class="token operator">/</span>16 brd 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>255<span class="token punctuation">.</span>255 scope global eth0

valid_lft forever preferred_lft forever

<span class="token comment"># Have tomcat02 ping tomcat01</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat02 ping 172.17.0.2</span>

PING 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2<span class="token punctuation">)</span> 56<span class="token punctuation">(</span>84<span class="token punctuation">)</span> bytes of <span class="token keyword">data</span><span class="token punctuation">.</span>

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=1 ttl=64 time=9<span class="token punctuation">.</span>07 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=2 ttl=64 time=0<span class="token punctuation">.</span>145 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=3 ttl=64 time=0<span class="token punctuation">.</span>153 ms

64 bytes <span class="token keyword">from</span> 172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>2: icmp_seq=4 ttl=64 time=0<span class="token punctuation">.</span>104 ms

<span class="token comment"># Conclusion: containers can ping each other</span>

</code></pre>

<h3>Network model diagram</h3>

<p><a href="http://img.e-com-net.com/image/info8/d9c6d71bcd67454fb3d26cd5d5dfd8a0.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/d9c6d71bcd67454fb3d26cd5d5dfd8a0.jpg" alt="跟着狂神学Docker(精髓篇)_第37张图片" width="650" height="416" style="border:1px solid black;"></a></p>

<p>Conclusion: <code>tomcat01</code> and <code>tomcat02</code> share a common router, <code>docker0</code>, the Linux bridge that serves as their gateway.</p>

<p>When no network is specified, every container is routed through <code>docker0</code>, and <code>docker</code> assigns the container an available IP by default.</p>
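<p>One way to sanity-check this is to confirm that the container addresses seen earlier fall inside the <code>docker0</code> subnet. The sketch below does that arithmetic in plain shell. <code>ip_to_int</code> and <code>in_cidr</code> are hypothetical helpers written for this illustration (they are not Docker commands), and the addresses are the ones from the outputs above: <code>172.17.0.1/16</code> for <code>docker0</code>, <code>172.17.0.2</code> and <code>172.17.0.3</code> for the two containers, and <code>192.168.122.1</code> (the host's <code>virbr0</code>) as a counterexample.</p>

```shell
# Hypothetical helpers for illustration only; they are not Docker commands.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed when address $1 lies inside the network given as ADDRESS/PREFIX in $2.
in_cidr() {
  net=${2%/*}
  prefix=${2#*/}
  mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
}

docker0_cidr='172.17.0.1/16'   # taken from the host's `ip addr` output
for ip in 172.17.0.2 172.17.0.3 192.168.122.1; do
  if in_cidr "$ip" "$docker0_cidr"; then
    echo "$ip is inside the docker0 subnet"
  else
    echo "$ip is outside the docker0 subnet"
  fi
done
```

<p>Both container addresses share the first 16 bits with <code>docker0</code>, which is why they can reach each other through the bridge without any extra routing.</p>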

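<h3>Summary</h3>

<p><code>Docker</code> uses <code>Linux</code> bridging: on the host, <code>docker0</code> is the bridge for <code>Docker</code> containers.<br> <a href="http://img.e-com-net.com/image/info8/e29eddf479ec45ab998149d9b2f7edc4.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/e29eddf479ec45ab998149d9b2f7edc4.jpg" alt="跟着狂神学Docker(精髓篇)_第38张图片" width="650" height="481" style="border:1px solid black;"></a><br> All network interfaces in <code>Docker</code> are virtual, and virtual interfaces forward traffic efficiently (for example, when transferring files over the internal network).<br> As soon as a container is deleted, its corresponding veth pair disappears as well!</p>

<p>Consider a scenario: we have written a microservice configured with <code>database url=ip</code>:. If the database's IP changes while the project keeps running without a restart, we want to be able to handle that. Can we access a container by name instead?</p>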

<p><a href="http://img.e-com-net.com/image/info8/9c7927a1080c468b9717b85edc219203.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/9c7927a1080c468b9717b85edc219203.jpg" alt="跟着狂神学Docker(精髓篇)_第39张图片" width="650" height="242" style="border:1px solid black;"></a></p>

<h3>--link</h3>

<pre><code class="prism language-powershell"><span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat02 ping tomcat01 # the ping fails</span>

ping: tomcat01: Name or service not known

<span class="token comment"># Run tomcat03 with --link tomcat02</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker run -d -P --name tomcat03 --link tomcat02 tomcat</span>

5f9331566980a9e92bc54681caaac14e9fc993f14ad13d98534026c08c0a9aef

<span class="token comment"># tomcat03 links to tomcat02</span>

<span class="token comment"># Ping tomcat02 from tomcat03: the ping succeeds</span>

<span class="token namespace">[root@localhost tomcat]</span><span class="token comment"># docker exec -it tomcat03 ping tomcat02</span>

PING tomcat02 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token punctuation">)</span> 56<span class="token punctuation">(</span>84<span class="token punctuation">)</span> bytes of <span class="token keyword">data</span><span class="token punctuation">.</span>

64 bytes <span class="token keyword">from</span> tomcat02 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token punctuation">)</span>: icmp_seq=1 ttl=64 time=12<span class="token punctuation">.</span>6 ms

64 bytes <span class="token keyword">from</span> tomcat02 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token punctuation">)</span>: icmp_seq=2 ttl=64 time=1<span class="token punctuation">.</span>07 ms

64 bytes <span class="token keyword">from</span> tomcat02 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token punctuation">)</span>: icmp_seq=3 ttl=64 time=0<span class="token punctuation">.</span>365 ms

64 bytes <span class="token keyword">from</span> tomcat02 <span class="token punctuation">(</span>172<span class="token punctuation">.</span>17<span class="token punctuation">.</span>0<span class="token punctuation">.</span>3<span class="token punctuation">)</span>: icmp_seq=4 ttl=64 time=0<span class="token punctuation">.</span>185 ms