Advanced k8s Hands-On Notes | Summary of Scheduler Scheduling Strategies

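Table of contents

- Summary of Scheduler Scheduling Strategies
  - Scheduling principles and process
  - Scheduling strategies
    - nodeSelector
    - Affinity and anti-affinity
    - NodeAffinity verification
    - PodAffinity verification
    - PodAntiAffinity verification
    - Taints and tolerations
    - Bypassing the Scheduler
    - Scenario summary for scheduling strategies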

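Summary of Scheduler Scheduling Strategies

Scheduling principles and process

Scheduler

The scheduler continuously watches the api-server. When it finds a Pod whose Pod.Spec.NodeName is empty, it picks a node for that Pod and creates a binding that records which node the Pod will run on.

It assigns Pods to the nodes of the cluster according to the configured scheduling policies, with these goals:

- Fairness

- Efficient resource utilization

- Scheduling efficiency

- Flexibility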

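Scheduling process

- Filtering (predicate)

  Filter out the nodes that do not meet the requirements.

  - PodFitsResources: whether the resources remaining on the node are enough for the resources the Pod requests
  - PodFitsHost: if the Pod specifies a NodeName, whether the node's name matches that NodeName
  - PodFitsHostPorts: whether the ports already in use on the node conflict with the ports the Pod requests
  - PodSelectorMatches: filters out nodes whose labels do not match the labels the Pod specifies
  - NoDiskConflict: whether the volumes already mounted on the node conflict with the volumes the Pod specifies (no conflict if both are read-only)

- Scoring (priority)

  Rank the nodes that passed filtering by priority.

- Select the best node

- If no node passes the filtering step, the Pod stays in the Pending state and scheduling is retried until a node qualifies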
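To make the filtering checks above concrete, here is a minimal sketch of a Pod that exercises three of them. The Pod name, the resource amounts, the host port, and the `disktype=ssd` node label are all illustrative assumptions, not values from this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: predicate-demo            # hypothetical name
  namespace: default
spec:
  # Looked at by PodSelectorMatches: only nodes labeled disktype=ssd pass
  # (the label is an assumption for illustration).
  nodeSelector:
    disktype: ssd
  containers:
    - name: nginx
      image: docker.m.daocloud.io/nginx
      imagePullPolicy: IfNotPresent
      resources:
        # Looked at by PodFitsResources: the node must still have at least
        # this much CPU and memory that other Pods have not requested.
        requests:
          cpu: 250m
          memory: 128Mi
      ports:
        - containerPort: 80
          # Looked at by PodFitsHostPorts: port 8080 must be free on the node.
          hostPort: 8080
```

If no node passes all three checks, the Pod stays Pending, and `kubectl describe pod predicate-demo` lists the reason in its events.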

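Scheduling strategies

nodeSelector

- The simplest form of scheduling: a node selection constraint that places Pods on nodes carrying the labels you specify

Example

A simple nginx manifest looks like this: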

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      namespace: default
      labels:
        app: nginx
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

Apply it without any special scheduling settings:

    kubectl apply -f nginx-demo.yaml

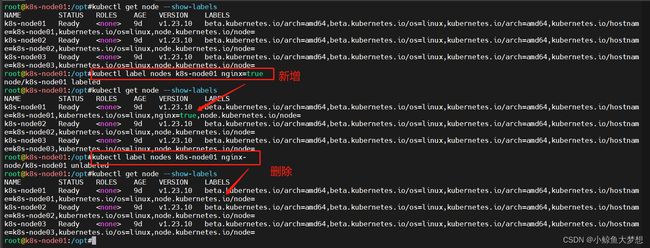

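Give a node a unique label:

    # List the labels on every node
    kubectl get node --show-labels

    # Add a label to a node (kubectl label nodes <node-name> key=value)
    kubectl label nodes k8s-node01 nginx=true

    # Remove the label from the node (once it is no longer needed)
    kubectl label nodes k8s-node01 nginx-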

**Add the nodeSelector scheduling strategy**

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      namespace: default
      labels:
        app: nginx
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          # Must match the node label set above (nginx=true).
          # Label values are strings, so "true" is quoted.
          nodeSelector:
            nginx: "true"
          containers:
            - name: nginx
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

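Check the result

Affinity and anti-affinity

- Compared with nodeSelector, affinity and anti-affinity are more expressive

- nodeSelector can only select nodes that carry all of the specified labels

requiredDuringSchedulingIgnoredDuringExecution

The scheduler schedules the Pod only when the rule is satisfied.

preferredDuringSchedulingIgnoredDuringExecution

The scheduler tries to find a node that satisfies the rule; if none is found, it still schedules the Pod onto another node.

Node affinity

NodeAffinity

- "Soft requirement": the scheduler still schedules the Pod when it cannot find a matching node

- "Hard requirement": if no node satisfies the requirement, the Pod is not scheduled and stays in the Pending state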
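The hard and soft requirements can be combined in one Pod. The sketch below is one way to do that, assuming a hypothetical `disktype=ssd` label on some nodes; the Pod name and the weight are also illustrative. `kubernetes.io/os` is a label that the kubelet sets on every node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-demo             # hypothetical name
  namespace: default
spec:
  affinity:
    nodeAffinity:
      # Hard requirement: only Linux nodes are candidates.
      # If none exists, the Pod stays Pending.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                  - linux
      # Soft requirement: among the candidates, prefer nodes labeled
      # disktype=ssd (assumed label); other nodes are still acceptable.
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 50              # valid range is 1-100
          preference:
            matchExpressions:
              - key: disktype
                operator: In
                values:
                  - ssd
  containers:
    - name: nginx
      image: docker.m.daocloud.io/nginx
      imagePullPolicy: IfNotPresent
```

The hard rule narrows the candidate set during filtering, and the soft rule only influences the ranking during scoring.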

Pod affinity

PodAffinity

- Which Pods this Pod may share a topology domain with

Pod anti-affinity

PodAntiAffinity

- Which Pods this Pod must not share a topology domain with

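Strategy comparison

| Strategy | Labels matched against | Operators | Topology domain support | Scheduling target |
|---|---|---|---|---|
| nodeAffinity | Node | In, NotIn, Exists, DoesNotExist, Gt, Lt | No | The specified hosts |
| podAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | The same topology domain as the specified Pods |
| podAntiAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | A different topology domain from the specified Pods |

Operators

- In: the label's value is in a given list

- NotIn: the label's value is not in a given list

- Gt: the label's value is greater than a given value

- Lt: the label's value is less than a given value

- Exists: the label exists

- DoesNotExist: the label does not exist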
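As a rough illustration of the less common operators, the sketch below combines Exists, Gt, and NotIn in a single node affinity term. The `gpu` and `cpu-cores` labels are hypothetical and would have to be added to nodes by hand; the Pod name is also made up.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: operator-demo             # hypothetical name
  namespace: default
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          # All expressions inside one term must hold (logical AND).
          - matchExpressions:
              # Exists: the node has a "gpu" label, whatever its value.
              - key: gpu
                operator: Exists
              # Gt: the label value, parsed as an integer, is greater than 4.
              # Gt and Lt take exactly one value, written as a string.
              - key: cpu-cores
                operator: Gt
                values:
                  - "4"
              # NotIn: keep the Pod off k8s-node01.
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                  - k8s-node01
  containers:
    - name: nginx
      image: docker.m.daocloud.io/nginx
      imagePullPolicy: IfNotPresent
```

NotIn and DoesNotExist are how node affinity expresses "stay away from these nodes", since the API has no separate node anti-affinity field.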

NodeAffinity verification

Baseline YAML files (no scheduling constraints)

    # First Deployment (nginx1)
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent
    ---
    # Second Deployment (nginx2)
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx2
      namespace: default
      labels:
        app: nginx2
    spec:
      selector:
        matchLabels:
          app: nginx2
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx2
        spec:
          containers:
            - name: nginx2
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

NodeAffinity check (hard policy)

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - key: kubernetes.io/hostname
                        operator: In
                        values:
                          - k8s-node03
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

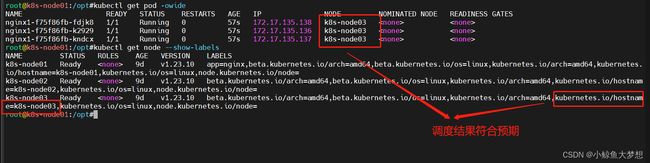

Scheduling result

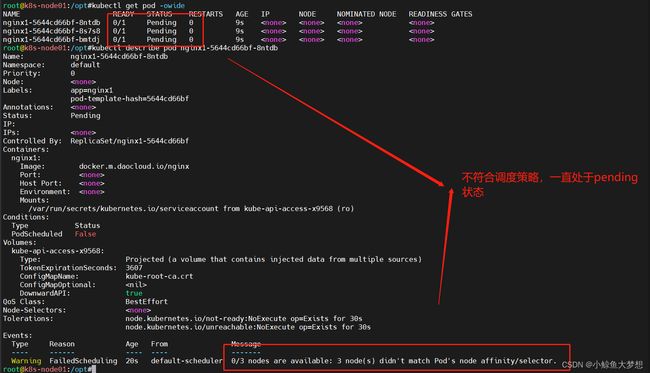

Scheduling result when the hard policy cannot be satisfied

With a soft policy instead:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          affinity:
            nodeAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
                - weight: 1
                  preference:
                    matchExpressions:
                      - key: kubernetes.io/hostname
                        operator: In
                        values:
                          - k8s-node04
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

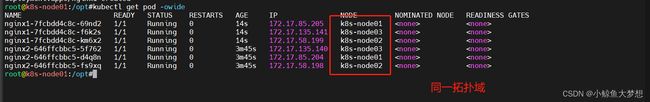

PodAffinity verification

The hard policy for nginx1:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          affinity:
            podAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                      - key: app
                        operator: In
                        values:
                          - nginx2
                  topologyKey: kubernetes.io/hostname
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

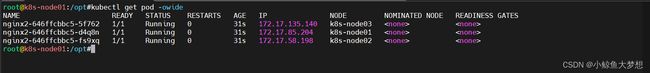

Scheduling result

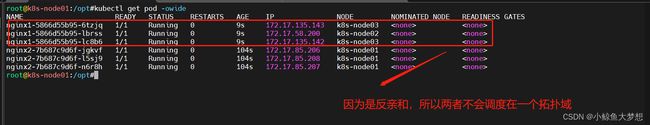

PodAntiAffinity verification

How nginx2 is currently running

nginx1:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                - labelSelector:
                    matchExpressions:
                      - key: app
                        operator: In
                        values:
                          - nginx2
                  topologyKey: kubernetes.io/hostname
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

Scheduling result

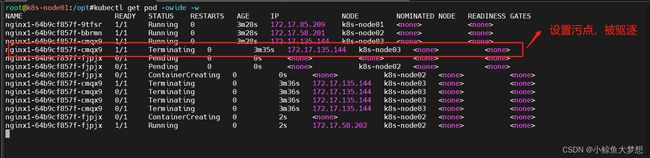
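A common variation on the anti-affinity rule above is to point it at the Deployment's own label, so that replicas spread across nodes. The sketch below uses the soft form so that scheduling still succeeds when there are more replicas than nodes; the `nginx-spread` name and the weight are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-spread              # hypothetical name
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx-spread
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx-spread
    spec:
      affinity:
        podAntiAffinity:
          # Soft rule: prefer nodes that do not already run a replica of
          # this Deployment, but still schedule if every node has one.
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - nginx-spread
                topologyKey: kubernetes.io/hostname
      containers:
        - name: nginx
          image: docker.m.daocloud.io/nginx
          imagePullPolicy: IfNotPresent
```

Note that the preferred form wraps the selector in `podAffinityTerm`, whereas the required form lists `labelSelector` and `topologyKey` directly.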

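Taints and tolerations

- Taints: the taints field, set on a Node

- Tolerations: the tolerations field, set on a Pod

- If a node carries a taint, Pods cannot be scheduled onto it unless they declare a toleration for that taint

- A taint has the form key=value:effect

effect: what the taint does

- NoSchedule: k8s will not schedule Pods onto a Node that has this taint

- PreferNoSchedule: k8s will try to avoid scheduling Pods onto a Node that has this taint

- NoExecute: k8s will not schedule Pods onto a Node that has this taint, and will also evict Pods already running on the Node that do not tolerate it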

- Basic operations

      # List the taints on every node
      kubectl describe nodes | grep -P "Name:|Taints"

      # Add a taint
      kubectl taint nodes <NodeName> key=value:effect

      # Remove a taint
      kubectl taint nodes <NodeName> key=value:effect-

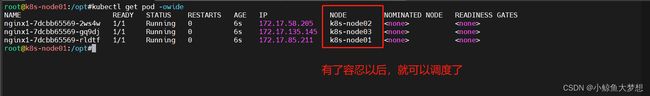

- Verification

      # Add a taint and check that the behavior matches expectations;
      # open a second terminal to watch the Pods
      kubectl taint nodes k8s-node03 app=nginx:NoExecute

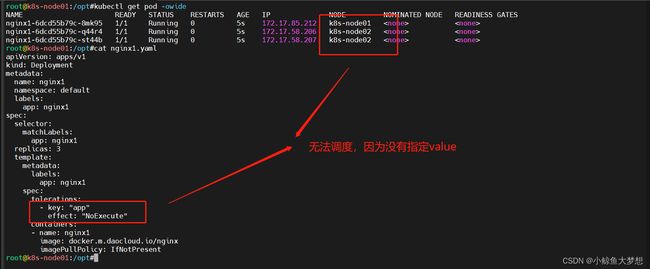

Now add a toleration and check the scheduling result again:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx1
      namespace: default
      labels:
        app: nginx1
    spec:
      selector:
        matchLabels:
          app: nginx1
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx1
        spec:
          tolerations:
            - key: "app"
              operator: "Exists"
              effect: "NoExecute"
          containers:
            - name: nginx1
              image: docker.m.daocloud.io/nginx
              imagePullPolicy: IfNotPresent

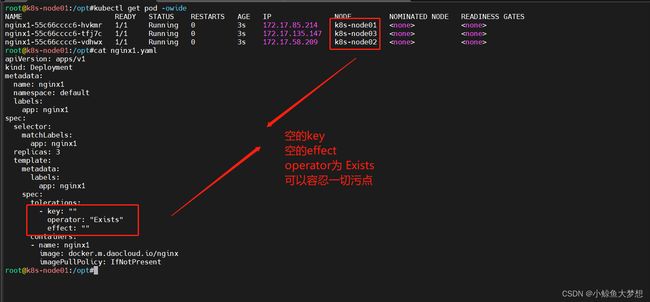

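How to write the tolerations field

- key, value, and effect must match the Taint set on the Node

- With operator=Exists, value can be omitted

- With operator=Equal, the toleration's value must equal the taint's value, so value cannot be omitted

- If operator is not specified, it defaults to Equal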

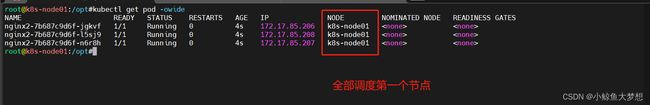

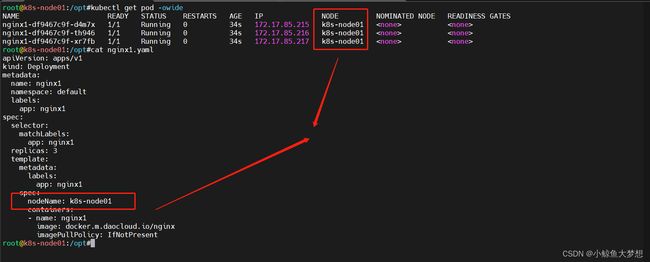
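The two operator forms described above can be placed side by side. This is a minimal sketch that assumes the `app=nginx:NoExecute` taint from the verification step; the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo           # hypothetical name
  namespace: default
spec:
  tolerations:
    # Equal: key, value, and effect must all match the taint
    # app=nginx:NoExecute. Equal is also the default when operator
    # is left out.
    - key: app
      operator: Equal
      value: nginx
      effect: NoExecute
    # Exists: only the key is compared, so value is omitted. Leaving out
    # effect as well makes this tolerate every effect of the "app" key.
    - key: app
      operator: Exists
  containers:
    - name: nginx
      image: docker.m.daocloud.io/nginx
      imagePullPolicy: IfNotPresent
```

Either entry alone would be enough for this taint; both are shown only to compare the syntax.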

Bypassing the Scheduler

- Place the Pods directly on a specified Node

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: nginx1
        namespace: default
        labels:
          app: nginx1
      spec:
        selector:
          matchLabels:
            app: nginx1
        replicas: 3
        template:
          metadata:
            labels:
              app: nginx1
          spec:
            # nodeName is set up front, so the scheduler never picks a node
            # for these Pods
            nodeName: k8s-node01
            containers:
              - name: nginx1
                image: docker.m.daocloud.io/nginx
                imagePullPolicy: IfNotPresent

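Scenario summary for scheduling strategies

| Strategy | Scenario |
|---|---|
| nodeName | Schedule onto a fixed node; useful for verifying data on a specific node, reproducing failures, and so on |
| nodeSelector | Schedule onto nodes that carry specific labels: dedicated departments, dedicated business lines, testing, and so on |
| podAffinity | Keep Pods in the same topology domain; useful when upstream and downstream applications exchange large amounts of data |
| podAntiAffinity | Keep Pods out of the same topology domain; for example, several compute-intensive applications should not share one node |
| nodeAffinity | Schedule onto designated nodes; for example, core applications must run on core nodes |
| nodeAffinity with NotIn / DoesNotExist (node anti-affinity; there is no separate nodeAntiAffinity field) | Keep Pods off designated nodes; for example, core nodes must not be used by ordinary applications |
| taints | Tainted nodes reject Pods that do not tolerate the taint, for example master nodes or high-performance GPU nodes |
| tolerations | Required before a Pod can be scheduled onto a tainted node |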
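Several rows of this table are often used together. For the "core applications on core nodes only" scenario, one possible sketch combines a toleration with a hard node affinity. It assumes the core nodes have already been tainted `dedicated=core:NoSchedule` and labeled `dedicated=core` with the `kubectl taint` and `kubectl label` commands shown earlier; the key, the value, and the Deployment name are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: core-app                  # hypothetical name
  namespace: default
spec:
  selector:
    matchLabels:
      app: core-app
  replicas: 2
  template:
    metadata:
      labels:
        app: core-app
    spec:
      # Lets these Pods onto nodes tainted dedicated=core:NoSchedule.
      # Pods without this toleration are kept off the core nodes.
      tolerations:
        - key: dedicated
          operator: Equal
          value: core
          effect: NoSchedule
      # Keeps these Pods off every node that is not labeled dedicated=core.
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: dedicated
                    operator: In
                    values:
                      - core
      containers:
        - name: nginx
          image: docker.m.daocloud.io/nginx
          imagePullPolicy: IfNotPresent
```

The taint alone only keeps other Pods away, and the affinity alone only attracts these Pods, so both halves are needed for a dedicated node pool.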