Ubuntu22.04三台虚拟机Hadoop集群安装和搭建(全面详细的过程)

虚拟机Ubuntu22.04 Hadoop集群安装和搭建(全面详细的过程)

- 环境配置安装

-

- 安装JDK

- 安装Hadoop

- 三台虚拟机设置

-

- 克隆三台虚拟机

- 设置静态IP

- 修改虚拟机host

- ssh免密登录

- 关闭防火墙

- Hadoop配置

-

- core-site.xml

- hdfs-site.xml

- yarn-site.xml

- mapred-site.xml

- workers

- 设置hadoop集群用户权限

- xsync分发给其他虚拟机

- 格式化namenode配置

- 启动集群

- 测试

- ref

环境配置安装

| 项目 | Value |

|---|---|

| linux | ubuntu22.04.3 |

| java | 1.8_202 |

| hadoop | 3.2.4 |

| vmware workstation | 16.2.3 |

安装JDK

在vmware workstation安装好ubuntu系统后,下载jdk包。

Java 的官网下载链接:https://www.oracle.com/java/technologies/downloads/

Java 华为云镜像:https://repo.huaweicloud.com/java/jdk/

下载后解压到自定义文件夹

sudo tar -zxvf jdk-8u202-linux-x64.tar.gz -C /opt

设置环境变量:

sudo gedit /etc/profile

添加java路径

export JAVA_HOME=/opt/jdk1.8.0_202

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=$PATH:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=$PATH:${JAVA_HOME}/bin:${JRE_HOME}/bin

激活环境后,查看java版本

source /etc/profile # 激活环境

java -version # 查看java版本

java version "1.8.0_202"

Java(TM) SE Runtime Environment (build 1.8.0_202-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

配置软链接

sudo ln -s /opt/jdk1.8.0_202/bin/java /bin/java

java 安装完成

安装Hadoop

下载后解压到自定义文件夹

sudo tar -zxvf hadoop-3.2.4.tar.gz -C /opt

为 hadoop 配置 java 环境,打开hadoop安装目录的etc/hadoop/hadoop-env.sh文件

sudo gedit /opt/hadoop-3.2.4/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/jdk1.8.0_202

打开hadoop安装目录,查看是否安装成功

/opt/hadoop-3.2.4/bin/hadoop version

Hadoop 3.2.4

Source code repository Unknown -r 7e5d9983b388e372fe640f21f048f2f2ae6e9eba

Compiled by ubuntu on 2022-07-12T11:58Z

Compiled with protoc 2.5.0

From source with checksum ee031c16fe785bbb35252c749418712

This command was run using /opt/hadoop-3.2.4/share/hadoop/common/hadoop-common-3.2.4.jar

三台虚拟机设置

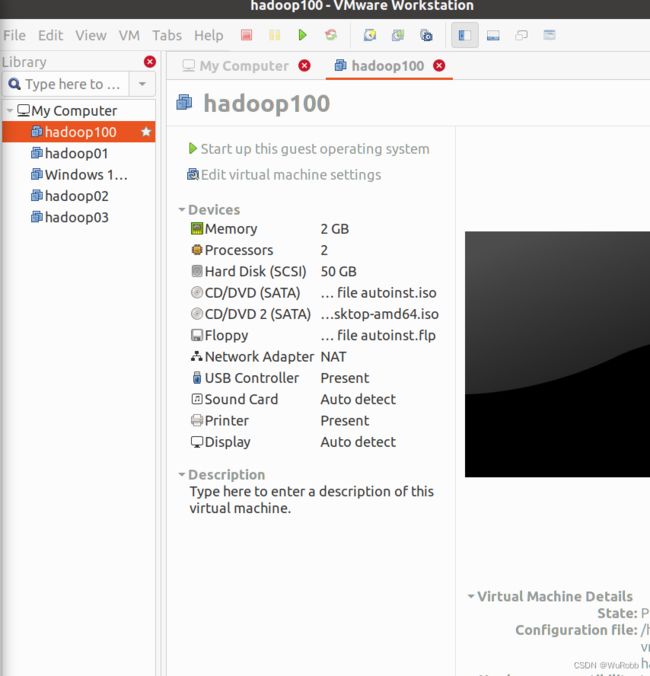

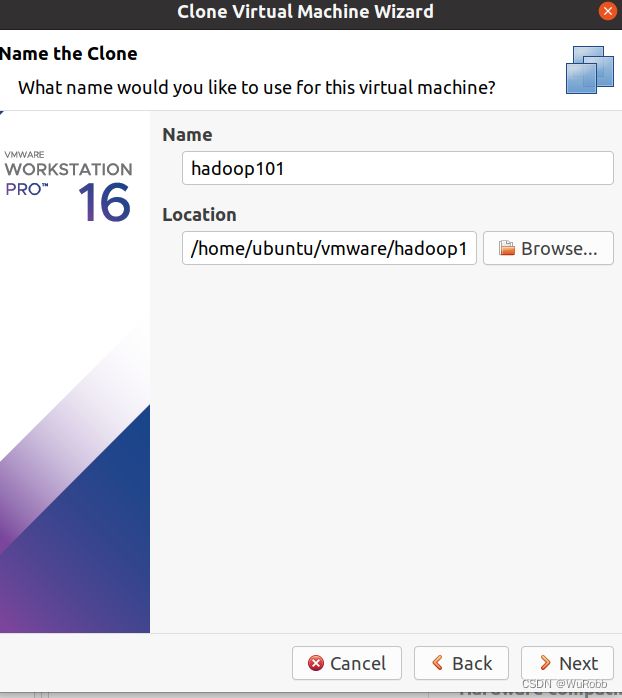

克隆三台虚拟机

打开vmware的library面板

右键需要复制的虚拟机,选择 management->clone

一直下一步,等待完成,一直复制两台虚拟机hadoop101,hadoop102。

设置静态IP

三台虚拟机之间通过ip通信,所以要固定三台机器静态ip,以便相互访问

查看虚拟机的虚拟网卡,查看NAT模式配置

查看NAT Settings

记录网关地址Gateway IP

打开网卡配置文件

sudo gedit /etc/netplan/01-network-manager-all.yaml

自定义设置addresses和routes

# Let NetworkManager manage all devices on this

network:

renderer: NetworkManager

ethernets:

ens33:

addresses:

# 根据自己的IP地址更改下边的IP即可

- 172.16.228.100/24

nameservers:

# 域名解析器

addresses: [4.2.2.2, 8.8.8.8]

routes:

- to: default

# 网关,上一步记录的Gateway IP

via: 172.16.228.2

version: 2

# 备用

# 根据自己的IP地址更改下边的IP即可

# IP地址

# IPADDR=172.16.228.101

# 网关

# GATEWAY=172.16.228.2

# 域名解析器

# DNS1=4.2.2.2

# DNS2=8.8.8.8

保存重启网络服务

sudo netplan apply

查看ip,设置完成

其余两台虚拟机也设置好静态ip,并将三个ip记录。

修改虚拟机host

修改hostname和hosts便于通信

sudo gedit /etc/hostname

将三台虚拟机hostname分别设置为hadoop100、hadoop101、hadoop102

sudo gedit /etc/hosts

在后面添加各自设置的静态ip

172.16.228.100 hadoop100

172.16.228.101 hadoop101

172.16.228.102 hadoop102

分别在三台虚拟机测试能否ping通

ping hadoop100

ping hadoop101

ping hadoop102

ssh免密登录

为三台机器添加ssh密钥,实现远程无密码访问

sudo apt-get install openssh-server #安装服务,一路回车

sudo /etc/init.d/ssh restart #启动服务

sudo ufw disable #关闭防火墙

在节点生成SSH公钥,并分发给其他机器

ssh localhost

cd ~/.ssh

rm ./id_rsa*

ssh-keygen -t rsa

生成密钥

分发给节点,根据提示输入虚拟机密码

ssh-copy-id hadoop100

ssh-copy-id hadoop101

ssh-copy-id hadoop102

ssh hadoop100 测试是否分发成功。

关闭防火墙

systemctl stop firewalld.service

Hadoop配置

打开hadoop安装目录,进入/etc/hadoop路径,找到配置文件,并按照如下配置

| hadoop100 | hadoop101 | hadoop102 | |

|---|---|---|---|

| HDFS | DataNode NameNode |

DataNode | SecondaryNameNode DataNode |

| YARN | NodeManager | NodeManager ResourceManager |

NodeManager |

core-site.xml

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://hadoop100:8020value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/opt/hadoop-3.2.4/datavalue>

property>

<property>

<name>hadoop.http.staticuser.username>

<value>hadoopvalue>

property>

configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-addressname>

<value>hadoop100:9870value>

property>

<property>

<name>dfs.namenode.secondary.http-addressname>

<value>hadoop102:9868value>

property>

configuration>

yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>hadoop101value>

<description>resourcemanagerdescription>

property>

<property>

<name>yarn.nodemanager.env-whitelistname>

<value>

JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME

value>

property>

<property>

<name>yarn.log-aggregation-enablename>

<value>truevalue>

property>

<property>

<name>yarn.log.server.urlname>

<value>http://hadoop100:19888/jobhistory/logsvalue>

property>

<property>

<name>yarn.log-aggregation.retain-secondsname>

<value>604800value>

property>

configuration>

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

<property>

<name>yarn.app.mapreduce.am.envname>

<value>

HADOOP_MAPRED_HOME=/opt/hadoop-3.2.4/etc/hadoop:/opt/hadoop-3.2.4/share/hadoop/common/lib/*:/opt/hadoop-3.2.4/share/hadoop/common/*:/opt/hadoop-3.2.4/share/hadoop/hdfs:/opt/hadoop-3.2.4/share/hadoop/hdfs/lib/*:/opt/hadoop-3.2.4/share/hadoop/hdfs/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/lib/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/*:/opt/hadoop-3.2.4/share/hadoop/yarn:/opt/hadoop-3.2.4/share/hadoop/yarn/lib/*:/opt/hadoop-3.2.4/share/hadoop/yarn/*

value>

property>

<property>

<name>mapreduce.map.envname>

<value>

HADOOP_MAPRED_HOME=/opt/hadoop-3.2.4/etc/hadoop:/opt/hadoop-3.2.4/share/hadoop/common/lib/*:/opt/hadoop-3.2.4/share/hadoop/common/*:/opt/hadoop-3.2.4/share/hadoop/hdfs:/opt/hadoop-3.2.4/share/hadoop/hdfs/lib/*:/opt/hadoop-3.2.4/share/hadoop/hdfs/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/lib/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/*:/opt/hadoop-3.2.4/share/hadoop/yarn:/opt/hadoop-3.2.4/share/hadoop/yarn/lib/*:/opt/hadoop-3.2.4/share/hadoop/yarn/*

value>

property>

<property>

<name>mapreduce.reduce.envname>

<value>

HADOOP_MAPRED_HOME=/opt/hadoop-3.2.4/etc/hadoop:/opt/hadoop-3.2.4/share/hadoop/common/lib/*:/opt/hadoop-3.2.4/share/hadoop/common/*:/opt/hadoop-3.2.4/share/hadoop/hdfs:/opt/hadoop-3.2.4/share/hadoop/hdfs/lib/*:/opt/hadoop-3.2.4/share/hadoop/hdfs/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/lib/*:/opt/hadoop-3.2.4/share/hadoop/mapreduce/*:/opt/hadoop-3.2.4/share/hadoop/yarn:/opt/hadoop-3.2.4/share/hadoop/yarn/lib/*:/opt/hadoop-3.2.4/share/hadoop/yarn/*

value>

property>

configuration>

workers

hadoop100

hadoop101

hadoop102

设置hadoop集群用户权限

sudo gedit /etc/profile

添加

export HDFS_NAMENODE_USER=hadoop

export HDFS_DATANODE_USER=hadoop

export HDFS_SECONDARYNAMENODE_USER=hadoop

export YARN_RESOURCEMANAGER_USER=hadoop

export YARN_NODEMANAGER_USER=hadoop

更新配置

source /etc/profile

xsync分发给其他虚拟机

cd /opt/jdk1.8.0_202/bin # 该目录创建xsync脚本,可自定义到系统任何环境目录中

sudo gedit xsync

编写脚本

#!/bin/bash

#1. 判断参数个数

if [ $# -lt 1 ]

then

echo Not Enough Arguement!

exit;

fi

#2. 遍历集群所有机器

for host in hadoop100 hadoop101 hadoop102

do

echo ==================== $host ====================

#3. 遍历所有目录,挨个发送

for file in $@

do

#4. 判断文件是否存在

if [ -e $file ]

then

#5. 获取父目录

pdir=$(cd -P $(dirname $file); pwd)

#6. 获取当前文件的名称

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exists!

fi

done

done

设置执行权限

sudo chmod -R 777 xsync

分发hadoop配置,只分发配置文件,其余文件不同虚拟机有自己id不要修改。

xsync /opt/hadoop-3.2.4/etc/hadoop

格式化namenode配置

namenode节点会产生node id,需要在namenode机器上格式化配置,这里是hadoop100。

/opt/hadoop-3.2.4/bin/hdfs namenode -format

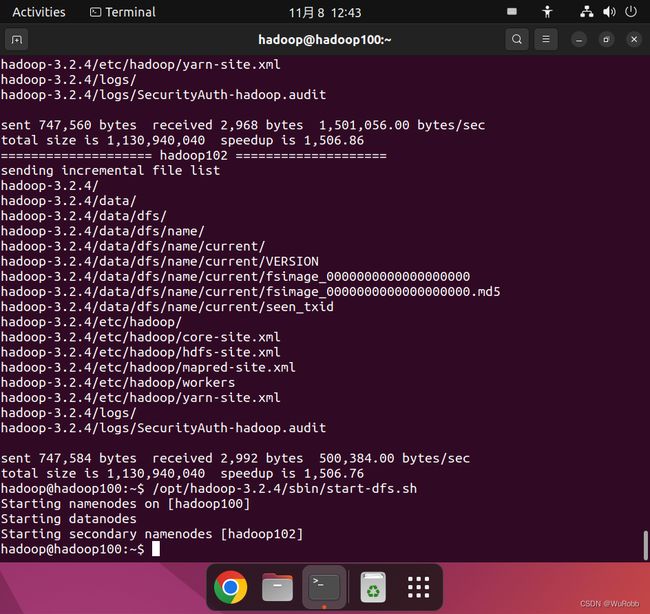

启动集群

在hadoop100上启动dfs

hadoop@hadoop100:~$ /opt/hadoop-3.2.4/sbin/start-dfs.sh

在hadoop101上启动yarn

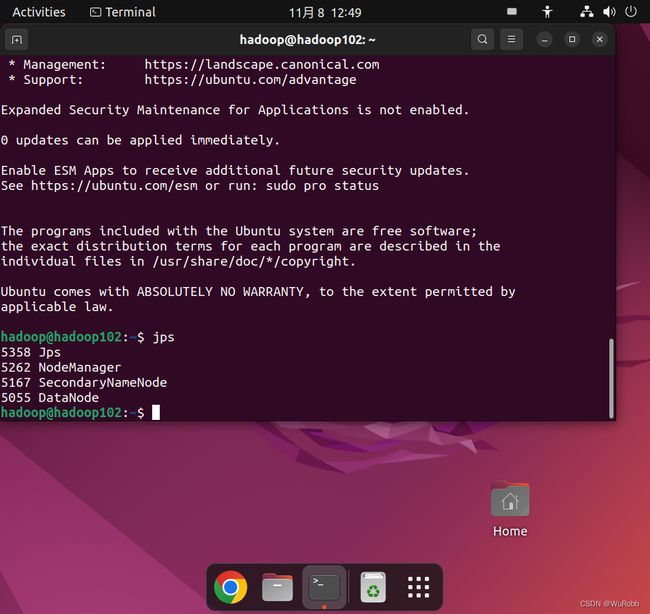

分别 在三台机器输入上查看hadoop进程

jps

hadoop100

hadoop101

hadoop102

配置完成

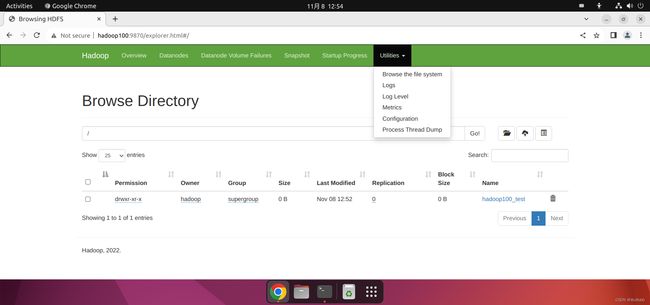

测试

在hadoop100上打开hadoop:9870

hadoop100上传文件到集群

hadoop@hadoop100:~$ /opt/hadoop-3.2.4/bin/hadoop fs -mkdir /hadoop100_test

ref

尚硅谷大数据教程:https://www.bilibili.com/video/BV1Qp4y1n7EN