Classic Object Detection, YOLO Series (3): Reimplementing YOLOv3 (1): Overall Network Architecture and Forward Pass

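As with our earlier YOLOv2 implementation, we follow the book 《YOLO目标检测》 (ISBN: 9787115627094) and rebuild a more modern YOLOv3 detector that keeps most of YOLOv3's core ideas, in order to understand YOLOv3 more deeply.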

Source code for the book: RT-ODLab: YOLO Tutorial

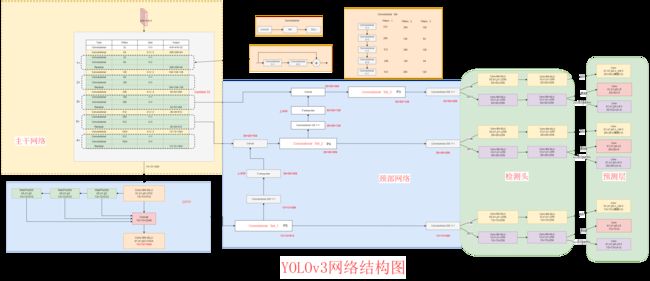

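1. YOLOv3 network architecture

1.1 DarkNet53 backbone

- We use DarkNet53, proposed in the original YOLOv3, as the backbone. The author also provides a DarkNetTiny variant.

- The author's ImageNet-pretrained weights can be downloaded manually from https://github.com/yjh0410/image_classification_pytorch.

1.1.1 Residual modules in DarkNet53

- DarkNet53 consists mainly of a series of residual modules, stacked in five stages of [1, 2, 8, 8, 4] blocks.

- First, we build a Bottleneck module from a 1×1 convolution followed by a 3×3 convolution; the shortcut parameter decides whether a residual connection is used.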

# RT-ODLab/models/detectors/yolov3/yolov3_basic.py

# Bottleneck
class Bottleneck(nn.Module):
    def __init__(self,
                 in_dim,
                 out_dim,
                 expand_ratio=0.5,
                 shortcut=False,
                 depthwise=False,
                 act_type='silu',
                 norm_type='BN'):
        super(Bottleneck, self).__init__()
        inter_dim = int(out_dim * expand_ratio)  # hidden channels
        # 1x1 conv squeezes the channels, 3x3 conv restores them
        self.cv1 = Conv(in_dim, inter_dim, k=1, norm_type=norm_type, act_type=act_type)
        self.cv2 = Conv(inter_dim, out_dim, k=3, p=1, norm_type=norm_type, act_type=act_type, depthwise=depthwise)
        # a residual connection is only possible when input and output channels match
        self.shortcut = shortcut and in_dim == out_dim

    def forward(self, x):
        h = self.cv2(self.cv1(x))
        return x + h if self.shortcut else h

- Next, we build the ResBlock class; the nblocks argument sets how many Bottleneck modules it stacks.

# RT-ODLab/models/detectors/yolov3/yolov3_basic.py

# ResBlock
class ResBlock(nn.Module):
    def __init__(self,
                 in_dim,
                 out_dim,
                 nblocks=1,
                 act_type='silu',
                 norm_type='BN'):
        super(ResBlock, self).__init__()
        assert in_dim == out_dim
        # stack nblocks Bottlenecks, each with its residual connection enabled
        self.m = nn.Sequential(*[
            Bottleneck(in_dim, out_dim, expand_ratio=0.5, shortcut=True,
                       norm_type=norm_type, act_type=act_type)
            for _ in range(nblocks)
        ])

    def forward(self, x):
        return self.m(x)
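As a quick check on the [1, 2, 8, 8, 4] layout, the sketch below counts the convolution layers it implies for the DarkNet53 class shown in this article. The helper name count_conv_layers is hypothetical and only serves this illustration; it assumes one stem convolution, one stride-2 convolution per stage, and two convolutions per Bottleneck.

```python
# Residual blocks per stage, as used by DarkNet53 in this article.
STAGE_BLOCKS = [1, 2, 8, 8, 4]


def count_conv_layers(stage_blocks):
    """Count backbone convolutions (the classifier's fully connected layer is excluded)."""
    stem = 1                          # the first 3x3 conv (3 -> 32 channels)
    downsample = len(stage_blocks)    # one stride-2 conv at the start of each stage
    residual = 2 * sum(stage_blocks)  # each Bottleneck = one 1x1 conv + one 3x3 conv
    return stem + downsample + residual


num_convs = count_conv_layers(STAGE_BLOCKS)
print("conv layers:", num_convs)
```

The count comes to 52 convolutions; together with the single fully connected layer of the original classification network, that gives the 53 in the name.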

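1.1.2 Building the DarkNet53 network

- Residual modules are stacked in the classic [1, 2, 8, 8, 4] layout, and downsampling between stages is done by convolutions with stride=2.

- SiLU replaces LeakyReLU as the activation function. SiLU, defined as x · sigmoid(x), is a smooth alternative to ReLU built on the sigmoid; it is unbounded above, bounded below, smooth, and non-monotonic.

- DarkNet53 returns feature maps at three scales, C3, C4, and C5, which feed the FPN and multi-level detection.

- The source code also provides a DarkNetTiny variant.

- Once yolov3_backbone is built, it can be called from yolov3.py through the build_backbone function.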
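The properties of SiLU listed above can be checked numerically. The following is a minimal standard-library sketch, not the activation code used in RT-ODLab; the function names silu and relu and the sampling grid are chosen only for this illustration.

```python
import math


def silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x), written as x / (1 + exp(-x))."""
    return x / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    return max(0.0, x)


# Sample SiLU on a grid over [-6, 6] and locate its lowest value.
xs = [i / 100.0 for i in range(-600, 601)]
ys = [silu(x) for x in xs]
lowest = min(ys)
x_at_lowest = xs[ys.index(lowest)]

print(f"lowest value on the grid: {lowest:.4f} at x = {x_at_lowest:.2f}")
# Non-monotonic: the curve dips below zero and then comes back up towards zero.
print(f"silu(-5) = {silu(-5.0):.4f}, silu(-1) = {silu(-1.0):.4f}, silu(0) = {silu(0.0):.4f}")
# Unbounded above: for large positive inputs SiLU approaches ReLU.
print(f"silu(6) = {silu(6.0):.4f}, relu(6) = {relu(6.0):.4f}")
```

The dip for negative inputs is what makes SiLU bounded below and non-monotonic, while for large positive inputs it behaves almost like the identity.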

# RT-ODLab/models/detectors/yolov3/yolov3_backbone.py

import torch
import torch.nn as nn

try:
    from .yolov3_basic import Conv, ResBlock
except ImportError:
    # fall back to a plain import when this file is run as a script
    from yolov3_basic import Conv, ResBlock

# ImageNet-pretrained weights released by the author
model_urls = {
    "darknet_tiny": "https://github.com/yjh0410/image_classification_pytorch/releases/download/weight/darknet_tiny.pth",
    "darknet53": "https://github.com/yjh0410/image_classification_pytorch/releases/download/weight/darknet53_silu.pth"
}

# --------------------- DarkNet-53 -----------------------

## DarkNet-53
class DarkNet53(nn.Module):
    def __init__(self, act_type='silu', norm_type='BN'):
        super(DarkNet53, self).__init__()
        self.feat_dims = [256, 512, 1024]

        # P1
        self.layer_1 = nn.Sequential(
            Conv(3, 32, k=3, p=1, act_type=act_type, norm_type=norm_type),
            Conv(32, 64, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(64, 64, nblocks=1, act_type=act_type, norm_type=norm_type)
        )
        # P2
        self.layer_2 = nn.Sequential(
            Conv(64, 128, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(128, 128, nblocks=2, act_type=act_type, norm_type=norm_type)
        )
        # P3
        self.layer_3 = nn.Sequential(
            Conv(128, 256, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(256, 256, nblocks=8, act_type=act_type, norm_type=norm_type)
        )
        # P4
        self.layer_4 = nn.Sequential(
            Conv(256, 512, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(512, 512, nblocks=8, act_type=act_type, norm_type=norm_type)
        )
        # P5
        self.layer_5 = nn.Sequential(
            Conv(512, 1024, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(1024, 1024, nblocks=4, act_type=act_type, norm_type=norm_type)
        )

    def forward(self, x):
        c1 = self.layer_1(x)
        c2 = self.layer_2(c1)
        c3 = self.layer_3(c2)
        c4 = self.layer_4(c3)
        c5 = self.layer_5(c4)

        # only the stride-8, stride-16 and stride-32 feature maps are returned
        outputs = [c3, c4, c5]

        return outputs

## DarkNet-Tiny
class DarkNetTiny(nn.Module):
    def __init__(self, act_type='silu', norm_type='BN'):
        super(DarkNetTiny, self).__init__()
        self.feat_dims = [64, 128, 256]

        # stride = 2
        self.layer_1 = nn.Sequential(
            Conv(3, 16, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(16, 16, nblocks=1, act_type=act_type, norm_type=norm_type)
        )
        # stride = 4
        self.layer_2 = nn.Sequential(
            Conv(16, 32, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(32, 32, nblocks=1, act_type=act_type, norm_type=norm_type)
        )
        # stride = 8
        self.layer_3 = nn.Sequential(
            Conv(32, 64, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(64, 64, nblocks=3, act_type=act_type, norm_type=norm_type)
        )
        # stride = 16
        self.layer_4 = nn.Sequential(
            Conv(64, 128, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(128, 128, nblocks=3, act_type=act_type, norm_type=norm_type)
        )
        # stride = 32
        self.layer_5 = nn.Sequential(
            Conv(128, 256, k=3, p=1, s=2, act_type=act_type, norm_type=norm_type),
            ResBlock(256, 256, nblocks=2, act_type=act_type, norm_type=norm_type)
        )

    def forward(self, x):
        c1 = self.layer_1(x)
        c2 = self.layer_2(c1)
        c3 = self.layer_3(c2)
        c4 = self.layer_4(c3)
        c5 = self.layer_5(c4)

        outputs = [c3, c4, c5]

        return outputs

# --------------------- Functions -----------------------

def build_backbone(model_name='darknet53', pretrained=False):
    """Builds a DarkNet backbone.
    Args:
        model_name (str): 'darknet53' or 'darknet_tiny'
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    if model_name == 'darknet53':
        backbone = DarkNet53(act_type='silu', norm_type='BN')
        feat_dims = backbone.feat_dims
    elif model_name == 'darknet_tiny':
        backbone = DarkNetTiny(act_type='silu', norm_type='BN')
        feat_dims = backbone.feat_dims
    else:
        # fail early instead of hitting an undefined variable below
        raise NotImplementedError('Unknown backbone: {}'.format(model_name))

    if pretrained:
        url = model_urls[model_name]
        if url is not None:
            print('Loading pretrained weight ...')
            checkpoint = torch.hub.load_state_dict_from_url(
                url=url, map_location="cpu", check_hash=True)
            # checkpoint state dict
            checkpoint_state_dict = checkpoint.pop("model")
            # model state dict
            model_state_dict = backbone.state_dict()
            # check: drop checkpoint entries that are missing from the model
            # or whose shapes do not match
            for k in list(checkpoint_state_dict.keys()):
                if k in model_state_dict:
                    shape_model = tuple(model_state_dict[k].shape)
                    shape_checkpoint = tuple(checkpoint_state_dict[k].shape)
                    if shape_model != shape_checkpoint:
                        checkpoint_state_dict.pop(k)
                else:
                    checkpoint_state_dict.pop(k)
                    print(k)

            backbone.load_state_dict(checkpoint_state_dict)
        else:
            print('No backbone pretrained: DarkNet53')

    return backbone, feat_dims

if __name__ == '__main__':
    import time
    from thop import profile

    model, feats = build_backbone(model_name='darknet53', pretrained=True)
    x = torch.randn(1, 3, 224, 224)
    t0 = time.time()
    outputs = model(x)
    t1 = time.time()
    print('Time: ', t1 - t0)
    for out in outputs:
        print(out.shape)

    x = torch.randn(1, 3, 224, 224)
    print('==============================')
    flops, params = profile(model, inputs=(x, ), verbose=False)
    print('==============================')
    print('GFLOPs : {:.2f}'.format(flops / 1e9 * 2))
    print('Params : {:.2f} M'.format(params / 1e6))
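To make the stride-2 downsampling described above concrete, the sketch below applies the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, once per stage. It is a plain-Python illustration under the assumption of k=3, p=1, s=2 for every downsampling convolution, as in the backbone listing; the helper names are hypothetical.

```python
def conv_out_size(n: int, k: int = 3, p: int = 1, s: int = 2) -> int:
    """Spatial size after one convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def backbone_feature_sizes(input_size: int, num_stages: int = 5):
    """Sizes of C1..C5 when each stage starts with one stride-2 3x3 convolution."""
    sizes = []
    n = input_size
    for _ in range(num_stages):
        n = conv_out_size(n)
        sizes.append(n)
    return sizes


for size in (224, 416):
    c1, c2, c3, c4, c5 = backbone_feature_sizes(size)
    print(f"input {size}: C3 = {c3}, C4 = {c4}, C5 = {c5}")
```

For a 416×416 input this yields 52, 26 and 13 for C3, C4 and C5, matching the strides 8, 16 and 32 used later in the article.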

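1.2 Building the neck

1.2.1 Adding the SPPF module

- In the original YOLOv3 the neck is only a feature pyramid. A later YOLOv3 variant added an SPP module, and SPP also appears in subsequent versions, so we keep using the SPPF module from our earlier YOLOv1 and YOLOv2 implementations.

- The code is in RT-ODLab/models/detectors/yolov3/yolov3_neck.py; it is the same as before, so we do not repeat it here.

- The SPPF module only processes the C5 feature map from the backbone, which enlarges the network's receptive field. The activation function is also switched to SiLU.

1.2.2 Adding the feature pyramid

- We make a few changes on top of the YOLOv3 feature pyramid.

- The three separate 3×3 convolutions at the end of YOLOv3 are removed and replaced with three 1×1 convolutions.

- The channel count at every scale is set to 256, which makes it easier to attach decoupled detection heads afterwards.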

# RT-ODLab/models/detectors/yolov3/yolov3_fpn.py

import torch
import torch.nn as nn
import torch.nn.functional as F

from .yolov3_basic import Conv, ConvBlocks

# Yolov3FPN
class Yolov3FPN(nn.Module):
    def __init__(self,
                 in_dims=[256, 512, 1024],
                 width=1.0,
                 depth=1.0,
                 out_dim=None,
                 act_type='silu',
                 norm_type='BN'):
        super(Yolov3FPN, self).__init__()
        self.in_dims = in_dims
        self.out_dim = out_dim

        c3, c4, c5 = in_dims

        # P5 -> P4
        self.top_down_layer_1 = ConvBlocks(c5, int(512*width), act_type=act_type, norm_type=norm_type)
        self.reduce_layer_1 = Conv(int(512*width), int(256*width), k=1, act_type=act_type, norm_type=norm_type)

        # P4 -> P3
        self.top_down_layer_2 = ConvBlocks(c4 + int(256*width), int(256*width), act_type=act_type, norm_type=norm_type)
        self.reduce_layer_2 = Conv(int(256*width), int(128*width), k=1, act_type=act_type, norm_type=norm_type)

        # P3
        self.top_down_layer_3 = ConvBlocks(c3 + int(128*width), int(128*width), act_type=act_type, norm_type=norm_type)

        # output proj layers
        if out_dim is not None:
            # one 1x1 conv per scale maps every level to out_dim channels
            self.out_layers = nn.ModuleList([
                Conv(in_dim, out_dim, k=1,
                     norm_type=norm_type, act_type=act_type)
                for in_dim in [int(128 * width), int(256 * width), int(512 * width)]
            ])
            self.out_dim = [out_dim] * 3
        else:
            self.out_layers = None
            self.out_dim = [int(128 * width), int(256 * width), int(512 * width)]

    def forward(self, features):
        c3, c4, c5 = features

        # p5/32
        # 1. C5 passes through Convolutional Set 1 to give P5
        p5 = self.top_down_layer_1(c5)

        # p4/16
        # 2. P5 is reduced in channels, upsampled, concatenated with C4,
        #    and passed through Convolutional Set 2 to give P4
        p5_up = F.interpolate(self.reduce_layer_1(p5), scale_factor=2.0)
        p4 = self.top_down_layer_2(torch.cat([c4, p5_up], dim=1))

        # p3/8
        # 3. Likewise, P4 is reduced in channels, upsampled, concatenated with C3,
        #    and passed through Convolutional Set 3 to give P3
        p4_up = F.interpolate(self.reduce_layer_2(p4), scale_factor=2.0)
        p3 = self.top_down_layer_3(torch.cat([c3, p4_up], dim=1))

        out_feats = [p3, p4, p5]

        # output proj layers
        if self.out_layers is not None:
            out_feats_proj = []
            # 4. project p3, p4 and p5 to out_dim channels each (256 in the default config)
            for feat, layer in zip(out_feats, self.out_layers):
                out_feats_proj.append(layer(feat))
            return out_feats_proj

        return out_feats

def build_fpn(cfg, in_dims, out_dim=None):
    model = cfg['fpn']
    # build neck
    if model == 'yolov3_fpn':
        fpn_net = Yolov3FPN(in_dims=in_dims,
                            out_dim=out_dim,
                            width=cfg['width'],
                            depth=cfg['depth'],
                            act_type=cfg['fpn_act'],
                            norm_type=cfg['fpn_norm']
                            )

    return fpn_net
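The channel bookkeeping inside Yolov3FPN is easy to lose track of, so here is a small plain-Python sketch that mirrors the arithmetic of its constructor without building any layers. The function name fpn_channel_plan and the returned dictionary keys are hypothetical and exist only for this illustration.

```python
def fpn_channel_plan(in_dims, width=1.0, out_dim=None):
    """Mirror the channel arithmetic of Yolov3FPN.__init__ using integers only."""
    c3, c4, c5 = in_dims
    p5 = int(512 * width)          # output of top_down_layer_1
    p5_reduced = int(256 * width)  # output of reduce_layer_1, upsampled afterwards
    p4_concat = c4 + p5_reduced    # input of top_down_layer_2 after concatenation
    p4 = int(256 * width)
    p4_reduced = int(128 * width)  # output of reduce_layer_2, upsampled afterwards
    p3_concat = c3 + p4_reduced    # input of top_down_layer_3 after concatenation
    p3 = int(128 * width)
    outputs = [p3, p4, p5] if out_dim is None else [out_dim] * 3
    return {"p4_concat": p4_concat, "p3_concat": p3_concat,
            "pyramid": [p3, p4, p5], "outputs": outputs}


# DarkNet53 settings: feat_dims = [256, 512, 1024], width = 1.0, out_dim = int(256 * 1.0)
print(fpn_channel_plan([256, 512, 1024], width=1.0, out_dim=256))
# DarkNetTiny settings: feat_dims = [64, 128, 256], width = 0.25, out_dim = int(256 * 0.25)
print(fpn_channel_plan([64, 128, 256], width=0.25, out_dim=int(256 * 0.25)))
```

With the default settings the three pyramid levels have 128, 256 and 512 channels before the output projection, and the 1×1 projection layers bring all of them to 256.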

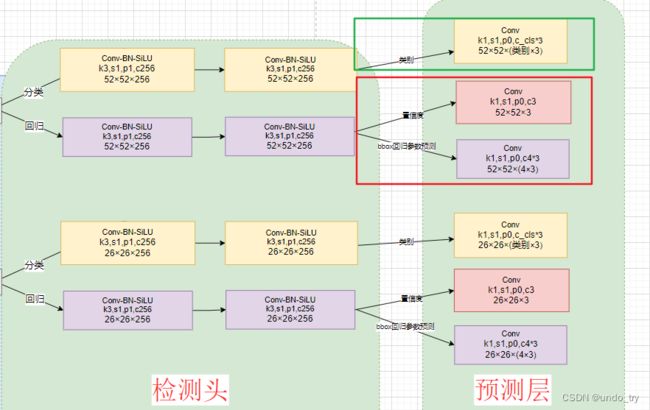

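1.3 Building the detection head

- The head in the official YOLOv3 is coupled: a single 1×1 convolution predicts objectness, class scores, and bounding boxes all from one feature map.

- Here we keep using a decoupled head, with two parallel branches that handle classification and localization separately.

- The decoupled heads at different scales share the same structure but not their parameters, which differs from the RetinaNet head.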

# RT-ODLab/models/detectors/yolov3/yolov3_head.py

import torch
import torch.nn as nn

try:
    from .yolov3_basic import Conv
except ImportError:
    # fall back to a plain import when this file is run as a script
    from yolov3_basic import Conv


class DecoupledHead(nn.Module):
    def __init__(self, cfg, in_dim, out_dim, num_classes=80):
        super().__init__()
        print('==============================')
        print('Head: Decoupled Head')
        self.in_dim = in_dim
        self.num_cls_head = cfg['num_cls_head']
        self.num_reg_head = cfg['num_reg_head']
        self.act_type = cfg['head_act']
        self.norm_type = cfg['head_norm']

        # cls head: a stack of 3x3 convs for classification features
        cls_feats = []
        self.cls_out_dim = max(out_dim, num_classes)
        for i in range(cfg['num_cls_head']):
            if i == 0:
                cls_feats.append(
                    Conv(in_dim, self.cls_out_dim, k=3, p=1, s=1,
                         act_type=self.act_type,
                         norm_type=self.norm_type,
                         depthwise=cfg['head_depthwise'])
                )
            else:
                cls_feats.append(
                    Conv(self.cls_out_dim, self.cls_out_dim, k=3, p=1, s=1,
                         act_type=self.act_type,
                         norm_type=self.norm_type,
                         depthwise=cfg['head_depthwise'])
                )

        # reg head: a stack of 3x3 convs for regression features
        reg_feats = []
        self.reg_out_dim = max(out_dim, 64)
        for i in range(cfg['num_reg_head']):
            if i == 0:
                reg_feats.append(
                    Conv(in_dim, self.reg_out_dim, k=3, p=1, s=1,
                         act_type=self.act_type,
                         norm_type=self.norm_type,
                         depthwise=cfg['head_depthwise'])
                )
            else:
                reg_feats.append(
                    Conv(self.reg_out_dim, self.reg_out_dim, k=3, p=1, s=1,
                         act_type=self.act_type,
                         norm_type=self.norm_type,
                         depthwise=cfg['head_depthwise'])
                )

        self.cls_feats = nn.Sequential(*cls_feats)
        self.reg_feats = nn.Sequential(*reg_feats)

    def forward(self, x):
        """
            x: (Tensor) [B, C, H, W]
        """
        cls_feats = self.cls_feats(x)
        reg_feats = self.reg_feats(x)

        return cls_feats, reg_feats


# build detection head
def build_head(cfg, in_dim, out_dim, num_classes=80):
    head = DecoupledHead(cfg, in_dim, out_dim, num_classes)

    return head

- A detection head is needed at each of the three scales, so the heads are stored in an nn.ModuleList.

# RT-ODLab/models/detectors/yolov3/yolov3.py

# YOLOv3
class YOLOv3(nn.Module):
    def __init__(self,
                 cfg,
                 device,
                 num_classes=20,
                 conf_thresh=0.01,
                 topk=100,
                 nms_thresh=0.5,
                 trainable=False,
                 deploy=False,
                 nms_class_agnostic=False):
        super(YOLOv3, self).__init__()
        ...  # elided in the original excerpt

        # ------------------- Network Structure -------------------
        ## Backbone
        self.backbone, feats_dim = build_backbone(
            cfg['backbone'], trainable&cfg['pretrained'])

        ## Neck: SPP module
        self.neck = build_neck(cfg, in_dim=feats_dim[-1], out_dim=feats_dim[-1])
        feats_dim[-1] = self.neck.out_dim

        ## Neck: feature pyramid
        self.fpn = build_fpn(cfg=cfg, in_dims=feats_dim, out_dim=int(256*cfg['width']))
        self.head_dim = self.fpn.out_dim

        ## Detection heads: one per scale, parameters not shared
        self.non_shared_heads = nn.ModuleList(
            [build_head(cfg, head_dim, head_dim, num_classes) for head_dim in self.head_dim
            ])

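1.4 Building the prediction layers

Finally, we build the prediction layers for each scale.

- For class prediction, a 1×1 convolution follows the classification branch of the decoupled head.

- For bounding-box prediction, a 1×1 convolution follows the regression branch of the decoupled head to do localization.

- For objectness prediction, a 1×1 convolution follows the regression branch of the decoupled head and predicts the confidence of each bounding box.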

# RT-ODLab/models/detectors/yolov3/yolov3.py

        ## Prediction layers
        self.obj_preds = nn.ModuleList(
            [nn.Conv2d(head.reg_out_dim, 1 * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])
        self.cls_preds = nn.ModuleList(
            [nn.Conv2d(head.cls_out_dim, self.num_classes * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])
        self.reg_preds = nn.ModuleList(
            [nn.Conv2d(head.reg_out_dim, 4 * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])
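To compare the coupled head of the official YOLOv3 with the three prediction layers above, the following sketch counts output channels per grid cell. It is only an arithmetic illustration; the two function names are hypothetical, and the class counts 20 (VOC) and 80 (COCO) are the usual dataset sizes.

```python
def coupled_out_channels(num_anchors: int, num_classes: int) -> int:
    """One 1x1 conv predicts objectness (1), box (4) and classes (C) for every anchor."""
    return num_anchors * (1 + 4 + num_classes)


def decoupled_out_channels(num_anchors: int, num_classes: int) -> dict:
    """Three separate 1x1 convs, as in obj_preds / cls_preds / reg_preds."""
    return {
        "obj": 1 * num_anchors,
        "cls": num_classes * num_anchors,
        "reg": 4 * num_anchors,
    }


for name, num_classes in (("VOC", 20), ("COCO", 80)):
    coupled = coupled_out_channels(3, num_classes)
    decoupled = decoupled_out_channels(3, num_classes)
    print(name, "coupled:", coupled, "decoupled:", decoupled)
```

The total number of predicted values per cell is the same in both designs; the decoupled version simply splits them across two feature branches.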

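1.5 Detailed network diagram of the improved YOLOv3

- This completes the YOLOv3 network structure. The detailed network diagram is as follows:

2. YOLOv3 forward inference

2.1 Decoding bounding-box coordinates

2.1.1 Generating the anchor matrix

The YOLOv3 configuration is shown below, including the anchor_size variable. These anchors were obtained by k-means clustering on the COCO dataset. Because COCO is larger and its images are more varied, we also use these anchors on the VOC dataset.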

# RT-ODLab/config/model_config/yolov3_config.py

# YOLOv3 Config
yolov3_cfg = {
    'yolov3':{
        # ---------------- Model config ----------------
        ## Backbone
        'backbone': 'darknet53',
        'pretrained': True,
        'stride': [8, 16, 32],  # P3, P4, P5
        'width': 1.0,
        'depth': 1.0,
        'max_stride': 32,
        ## Neck
        'neck': 'sppf',
        'expand_ratio': 0.5,
        'pooling_size': 5,
        'neck_act': 'silu',
        'neck_norm': 'BN',
        'neck_depthwise': False,
        ## FPN
        'fpn': 'yolov3_fpn',
        'fpn_act': 'silu',
        'fpn_norm': 'BN',
        'fpn_depthwise': False,
        ## Head
        'head': 'decoupled_head',
        'head_act': 'silu',
        'head_norm': 'BN',
        'num_cls_head': 2,
        'num_reg_head': 2,
        'head_depthwise': False,
        'anchor_size': [[10, 13],   [16, 30],   [33, 23],     # P3
                        [30, 61],   [62, 45],   [59, 119],    # P4
                        [116, 90],  [156, 198], [373, 326]],  # P5
        # ---------------- Train config ----------------
        ## input
        'trans_type': 'yolov5_large',
        'multi_scale': [0.5, 1.0],
        # ---------------- Assignment config ----------------
        ## matcher
        'iou_thresh': 0.5,
        # ---------------- Loss config ----------------
        ## loss weight
        'loss_obj_weight': 1.0,
        'loss_cls_weight': 1.0,
        'loss_box_weight': 5.0,
        # ---------------- Train config ----------------
        'trainer_type': 'yolov8',
    },

    'yolov3_tiny':{
        # ---------------- Model config ----------------
        ## Backbone
        'backbone': 'darknet_tiny',
        'pretrained': True,
        'stride': [8, 16, 32],  # P3, P4, P5
        'width': 0.25,
        'depth': 0.34,
        'max_stride': 32,
        ## Neck
        'neck': 'sppf',
        'expand_ratio': 0.5,
        'pooling_size': 5,
        'neck_act': 'silu',
        'neck_norm': 'BN',
        'neck_depthwise': False,
        ## FPN
        'fpn': 'yolov3_fpn',
        'fpn_act': 'silu',
        'fpn_norm': 'BN',
        'fpn_depthwise': False,
        ## Head
        'head': 'decoupled_head',
        'head_act': 'silu',
        'head_norm': 'BN',
        'num_cls_head': 2,
        'num_reg_head': 2,
        'head_depthwise': False,
        'anchor_size': [[10, 13],   [16, 30],   [33, 23],     # P3
                        [30, 61],   [62, 45],   [59, 119],    # P4
                        [116, 90],  [156, 198], [373, 326]],  # P5
        # ---------------- Train config ----------------
        ## input
        'trans_type': 'yolov5_nano',
        'multi_scale': [0.5, 1.0],
        # ---------------- Assignment config ----------------
        ## matcher
        'iou_thresh': 0.5,
        # ---------------- Loss config ----------------
        ## loss weight
        'loss_obj_weight': 1.0,
        'loss_cls_weight': 1.0,
        'loss_box_weight': 5.0,
        # ---------------- Train config ----------------
        'trainer_type': 'yolov8',
    },

}

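- YOLOv3 places 3 anchor boxes at every grid cell of each of the C3, C4, and C5 feature maps.

- On the C3 feature map, each cell gets the anchors (10, 13), (16, 30), and (33, 23), which are used to detect small objects.

- On the C4 feature map, each cell gets the anchors (30, 61), (62, 45), and (59, 119), which are used to detect medium-sized objects.

- On the C5 feature map, each cell gets the anchors (116, 90), (156, 198), and (373, 326), which are used to detect large objects.

- The anchor-matrix generation logic is the same as in YOLOv2, with one extra level argument that marks which of the three scales is being processed. Each scale needs its own anchor matrix.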

# RT-ODLab/models/detectors/yolov3/yolov3.py

    ## generate anchor points
    def generate_anchors(self, level, fmp_size):
        """
            fmp_size: (List) [H, W]
            level=0: with the default 416×416 resized input, 8x downsampling gives a 52×52 fmp_size
            level=1: with the default 416×416 resized input, 16x downsampling gives a 26×26 fmp_size
            level=2: with the default 416×416 resized input, 32x downsampling gives a 13×13 fmp_size
        """
        # 1. Height and width of the feature map
        fmp_h, fmp_w = fmp_size
        # [KA, 2]
        anchor_size = self.anchor_size[level]

        # 2. Generate the x and y coordinates of the grid
        anchor_y, anchor_x = torch.meshgrid([torch.arange(fmp_h), torch.arange(fmp_w)])
        # 3. Stack the x and y parts into shape [H, W, 2],
        #    then reshape to [HW, 2]
        anchor_xy = torch.stack([anchor_x, anchor_y], dim=-1).float().view(-1, 2)

        # 4. With anchor boxes, every grid cell holds A anchors, so each (grid_x, grid_y)
        #    coordinate is copied A (number of anchors) times.
        #    In other words, the top-left corner of every cell at this level is copied 3 times
        #    and serves as the grid coordinate of 3 anchor boxes with different sizes.
        # [HW, 2] -> [HW, KA, 2] -> [M, 2]
        anchor_xy = anchor_xy.unsqueeze(1).repeat(1, self.num_anchors, 1)
        anchor_xy = anchor_xy.view(-1, 2).to(self.device)

        # 5. The widths and heights of the 3 anchor boxes of this feature map
        #    are copied once per grid cell (for example 13×13 times)
        # [KA, 2] -> [1, KA, 2] -> [HW, KA, 2] -> [M, 2]
        anchor_wh = anchor_size.unsqueeze(0).repeat(fmp_h*fmp_w, 1, 1)
        anchor_wh = anchor_wh.view(-1, 2).to(self.device)

        # 6. Concatenate grid coordinates and sizes; the result has shape [M, 4]
        #    level=0: M=52×52×3, a 52×52 feature map with 3 anchor boxes per cell
        #    level=1: M=26×26×3, a 26×26 feature map with 3 anchor boxes per cell
        #    level=2: M=13×13×3, a 13×13 feature map with 3 anchor boxes per cell
        anchors = torch.cat([anchor_xy, anchor_wh], dim=-1)

        return anchors
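The tensor operations in generate_anchors can be hard to follow, so here is a plain-Python sketch that produces the same row ordering with nested loops. It is an illustration under the assumption of a 416×416 input, not the repository's implementation; the name build_anchor_rows is hypothetical.

```python
# Anchor sizes per level, copied from the anchor_size entry of the config.
ANCHOR_SIZES = [
    [(10, 13), (16, 30), (33, 23)],       # level 0, stride 8
    [(30, 61), (62, 45), (59, 119)],      # level 1, stride 16
    [(116, 90), (156, 198), (373, 326)],  # level 2, stride 32
]
STRIDES = [8, 16, 32]


def build_anchor_rows(fmp_size, anchor_sizes):
    """Return rows (grid_x, grid_y, anchor_w, anchor_h) in the order used by the [M, 4] matrix."""
    fmp_h, fmp_w = fmp_size
    rows = []
    for y in range(fmp_h):               # row-major over the grid, as after view(-1, 2)
        for x in range(fmp_w):
            for (w, h) in anchor_sizes:  # the A anchors of one cell are adjacent rows
                rows.append((float(x), float(y), float(w), float(h)))
    return rows


input_size = 416  # assumed input resolution
total = 0
for level, stride in enumerate(STRIDES):
    fmp = (input_size // stride, input_size // stride)
    rows = build_anchor_rows(fmp, ANCHOR_SIZES[level])
    total += len(rows)
    print(f"level {level}: fmp {fmp}, M = {len(rows)}, first row = {rows[0]}")
print("anchors over all levels:", total)
```

Each cell contributes three consecutive rows that share the same grid coordinate, which is why the per-cell predictions can later be reshaped to [M, C] and line up with the anchors row by row.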

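2.1.2 Decoding bounding boxes

- With the anchor matrix in place, we can decode the box coordinates box_pred from the predicted offsets reg_pred.

- The forward inference logic matches YOLOv2; the only addition is the multi-level detection code, where a for loop collects the obj_preds, cls_preds, and box_preds predictions from the three scales.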
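The decoding step can be illustrated for a single prediction in plain Python. The sketch below follows the formulas used in the inference code of this article, center = (sigmoid(t_xy) + grid_xy) × stride and size = exp(t_wh) × anchor_wh; the function name decode_box and the sample values are assumptions made for the example.

```python
import math


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def decode_box(reg, anchor, stride):
    """Decode one prediction.

    reg:    (tx, ty, tw, th), raw outputs of the regression layer
    anchor: (grid_x, grid_y, anchor_w, anchor_h), one row of the anchor matrix
    stride: output stride of the level (8, 16 or 32)
    """
    tx, ty, tw, th = reg
    gx, gy, aw, ah = anchor
    # The center offset is squashed into (0, 1), added to the cell index, and scaled to pixels.
    cx = (sigmoid(tx) + gx) * stride
    cy = (sigmoid(ty) + gy) * stride
    # Width and height rescale the anchor size, which is already in pixels.
    w = math.exp(tw) * aw
    h = math.exp(th) * ah
    # Convert center/size to corner format (x1, y1, x2, y2).
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


# All-zero offsets: the box sits at the center of cell (6, 6) on the stride-32 level
# and has exactly the anchor size (116, 90).
print(decode_box((0.0, 0.0, 0.0, 0.0), (6.0, 6.0, 116.0, 90.0), 32))
```

Because of the sigmoid, a predicted center can never leave its own grid cell, while the exponential keeps the predicted width and height positive.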

# RT-ODLab/models/detectors/yolov3/yolov3.py

import torch
import torch.nn as nn

from utils.misc import multiclass_nms

from .yolov3_backbone import build_backbone
from .yolov3_neck import build_neck
from .yolov3_fpn import build_fpn
from .yolov3_head import build_head

# YOLOv3
class YOLOv3(nn.Module):
    def __init__(self,
                 cfg,
                 device,
                 num_classes=20,
                 conf_thresh=0.01,
                 topk=100,
                 nms_thresh=0.5,
                 trainable=False,
                 deploy=False,
                 nms_class_agnostic=False):
        super(YOLOv3, self).__init__()
        # ------------------- Basic parameters -------------------
        self.cfg = cfg                      # model configuration
        self.device = device                # cuda or cpu
        self.num_classes = num_classes      # number of classes
        self.trainable = trainable          # training flag
        self.conf_thresh = conf_thresh      # score threshold
        self.nms_thresh = nms_thresh        # NMS threshold
        self.topk = topk                    # topk
        self.stride = [8, 16, 32]           # output strides of the network
        self.deploy = deploy
        self.nms_class_agnostic = nms_class_agnostic
        # ------------------- Anchor box -------------------
        self.num_levels = 3
        self.num_anchors = len(cfg['anchor_size']) // self.num_levels
        self.anchor_size = torch.as_tensor(
            cfg['anchor_size']
            ).float().view(self.num_levels, self.num_anchors, 2)  # [S, A, 2]

        # ------------------- Network Structure -------------------
        ## Backbone
        self.backbone, feats_dim = build_backbone(
            cfg['backbone'], trainable&cfg['pretrained'])

        ## Neck: SPP module
        self.neck = build_neck(cfg, in_dim=feats_dim[-1], out_dim=feats_dim[-1])
        feats_dim[-1] = self.neck.out_dim

        ## Neck: feature pyramid
        self.fpn = build_fpn(cfg=cfg, in_dims=feats_dim, out_dim=int(256*cfg['width']))
        self.head_dim = self.fpn.out_dim

        ## Detection heads
        self.non_shared_heads = nn.ModuleList(
            [build_head(cfg, head_dim, head_dim, num_classes) for head_dim in self.head_dim
            ])

        ## Prediction layers
        self.obj_preds = nn.ModuleList(
            [nn.Conv2d(head.reg_out_dim, 1 * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])
        self.cls_preds = nn.ModuleList(
            [nn.Conv2d(head.cls_out_dim, self.num_classes * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])
        self.reg_preds = nn.ModuleList(
            [nn.Conv2d(head.reg_out_dim, 4 * self.num_anchors, kernel_size=1)
             for head in self.non_shared_heads
            ])

    # ---------------------- Basic Functions ----------------------
    ## generate anchor points
    def generate_anchors(self, level, fmp_size):
        ...  # elided in the original excerpt

    ## post-process
    def post_process(self, obj_preds, cls_preds, box_preds):
        pass

    # ---------------------- Main Process for Inference ----------------------
    @torch.no_grad()
    def inference(self, x):
        # x.shape = (1, 3, 416, 416)
        # Backbone
        # pyramid_feats[0] = (1, 256, 52, 52)
        # pyramid_feats[1] = (1, 512, 26, 26)
        # pyramid_feats[2] = (1, 1024, 13, 13)
        pyramid_feats = self.backbone(x)

        # Neck (SPPF)
        # pyramid_feats[-1] = (1, 1024, 13, 13)
        pyramid_feats[-1] = self.neck(pyramid_feats[-1])

        # Feature pyramid
        # pyramid_feats[0] = (1, 256, 52, 52)
        # pyramid_feats[1] = (1, 256, 26, 26)
        # pyramid_feats[2] = (1, 256, 13, 13)
        pyramid_feats = self.fpn(pyramid_feats)

        # Detection heads
        all_obj_preds = []
        all_cls_preds = []
        all_box_preds = []
        for level, (feat, head) in enumerate(zip(pyramid_feats, self.non_shared_heads)):
            cls_feat, reg_feat = head(feat)

            # The regression and classification branches each pass through a 1×1 conv
            # to produce the predictions.
            # [1, C, H, W]
            # level=0: obj_pred=(1, 3, 52, 52), cls_pred=(1, 3*20, 52, 52), reg_pred=(1, 3*4, 52, 52)
            # level=1: obj_pred=(1, 3, 26, 26), cls_pred=(1, 3*20, 26, 26), reg_pred=(1, 3*4, 26, 26)
            # level=2: obj_pred=(1, 3, 13, 13), cls_pred=(1, 3*20, 13, 13), reg_pred=(1, 3*4, 13, 13)
            obj_pred = self.obj_preds[level](reg_feat)
            cls_pred = self.cls_preds[level](cls_feat)
            reg_pred = self.reg_preds[level](reg_feat)

            # Every scale needs its own anchor matrix
            # anchors: [M, 4]
            fmp_size = cls_pred.shape[-2:]
            anchors = self.generate_anchors(level, fmp_size)

            # [1, AC, H, W] -> [H, W, AC] -> [M, C]
            obj_pred = obj_pred[0].permute(1, 2, 0).contiguous().view(-1, 1)
            cls_pred = cls_pred[0].permute(1, 2, 0).contiguous().view(-1, self.num_classes)
            reg_pred = reg_pred[0].permute(1, 2, 0).contiguous().view(-1, 4)

            # decode bbox
            ctr_pred = (torch.sigmoid(reg_pred[..., :2]) + anchors[..., :2]) * self.stride[level]
            wh_pred = torch.exp(reg_pred[..., 2:]) * anchors[..., 2:]
            pred_x1y1 = ctr_pred - wh_pred * 0.5
            pred_x2y2 = ctr_pred + wh_pred * 0.5
            box_pred = torch.cat([pred_x1y1, pred_x2y2], dim=-1)

            all_obj_preds.append(obj_pred)
            all_cls_preds.append(cls_pred)
            all_box_preds.append(box_pred)

        # After the loop we have all_obj_preds, all_cls_preds and all_box_preds,
        # which then go through post-processing
        if self.deploy:
            obj_preds = torch.cat(all_obj_preds, dim=0)
            cls_preds = torch.cat(all_cls_preds, dim=0)
            box_preds = torch.cat(all_box_preds, dim=0)
            scores = torch.sqrt(obj_preds.sigmoid() * cls_preds.sigmoid())
            bboxes = box_preds
            # [n_anchors_all, 4 + C]
            outputs = torch.cat([bboxes, scores], dim=-1)

            return outputs
        else:
            # post process
            bboxes, scores, labels = self.post_process(
                all_obj_preds, all_cls_preds, all_box_preds)

            return bboxes, scores, labels

    # ---------------------- Main Process for Training ----------------------
    def forward(self, x):
        if not self.trainable:
            return self.inference(x)
        else:
            ...  # elided in the original excerpt

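2.2 Post-processing

- Once the for loop has collected the predictions from all three scales, we move on to post-processing.

- The logic is the same as the YOLOv2 post-processing, but because of multi-level detection, a for loop post-processes the predictions of each scale in turn.

- With post-processing implemented, the model's forward function is straightforward, so we do not go through it again.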
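The per-scale selection step can be sketched in plain Python: scores are the geometric mean of objectness and class probability, flattened over anchors and classes, and a flat index is mapped back to an anchor index and a class label with integer division and modulo. The function name select_candidates and the toy logits are assumptions for this example; the article's tensor version appears in the listing of post_process.

```python
import math


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def select_candidates(obj_logits, cls_logits, topk, conf_thresh):
    """obj_logits: M floats; cls_logits: M rows of C floats.

    Returns (anchor_idx, label, score) tuples, highest score first.
    """
    num_classes = len(cls_logits[0])
    # Flatten to M * C scores: score = sqrt(sigmoid(obj) * sigmoid(cls)).
    flat_scores = [
        math.sqrt(sigmoid(obj) * sigmoid(c))
        for obj, row in zip(obj_logits, cls_logits)
        for c in row
    ]
    # Sort descending and keep at most min(topk, M) entries.
    order = sorted(range(len(flat_scores)), key=lambda i: flat_scores[i], reverse=True)
    order = order[:min(topk, len(obj_logits))]
    results = []
    for i in order:
        if flat_scores[i] > conf_thresh:
            # flat index -> (row in the [M, C] matrix, column in the [M, C] matrix)
            results.append((i // num_classes, i % num_classes, flat_scores[i]))
    return results


# Two anchors, two classes: only anchor 0 with class 1 is confident.
candidates = select_candidates(
    obj_logits=[4.0, -4.0],
    cls_logits=[[-3.0, 3.0], [2.0, -2.0]],
    topk=100,
    conf_thresh=0.3,
)
print(candidates)
```

The anchor index selects the decoded box for the candidate, and the label is its class; the surviving candidates from all three scales are then concatenated before NMS.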

# RT-ODLab/models/detectors/yolov3/yolov3.py

    ## post-process
    def post_process(self, obj_preds, cls_preds, box_preds):
        """
        Input:
            obj_preds: List(Tensor) [[H x W x A, 1], ...], i.e. [[52×52×3, 1], [26×26×3, 1], [13×13×3, 1]]
            cls_preds: List(Tensor) [[H x W x A, C], ...], i.e. [[52×52×3, 20], [26×26×3, 20], [13×13×3, 20]]
            box_preds: List(Tensor) [[H x W x A, 4], ...], i.e. [[52×52×3, 4], [26×26×3, 4], [13×13×3, 4]]
        """
        all_scores = []
        all_labels = []
        all_bboxes = []

        # Loop over the scales
        for obj_pred_i, cls_pred_i, box_pred_i in zip(obj_preds, cls_preds, box_preds):
            # (H x W x KA x C,)
            scores_i = (torch.sqrt(obj_pred_i.sigmoid() * cls_pred_i.sigmoid())).flatten()

            # 1. Top-k
            # Keep top k top scoring indices only.
            num_topk = min(self.topk, box_pred_i.size(0))

            # torch.sort is actually faster than .topk (at least on GPUs)
            predicted_prob, topk_idxs = scores_i.sort(descending=True)
            topk_scores = predicted_prob[:num_topk]
            topk_idxs = topk_idxs[:num_topk]

            # 2. Filter out the proposals whose confidence score is below the threshold
            keep_idxs = topk_scores > self.conf_thresh
            scores = topk_scores[keep_idxs]
            topk_idxs = topk_idxs[keep_idxs]

            # Recover the anchor index and the class label from the flattened index
            anchor_idxs = torch.div(topk_idxs, self.num_classes, rounding_mode='floor')
            labels = topk_idxs % self.num_classes

            bboxes = box_pred_i[anchor_idxs]

            all_scores.append(scores)
            all_labels.append(labels)
            all_bboxes.append(bboxes)

        # Concatenate the predictions of the three scales, then run NMS
        scores = torch.cat(all_scores)
        labels = torch.cat(all_labels)
        bboxes = torch.cat(all_bboxes)

        # to cpu & numpy
        scores = scores.cpu().numpy()
        labels = labels.cpu().numpy()
        bboxes = bboxes.cpu().numpy()

        # nms
        # 3. Remove redundant detections of the same object
        scores, labels, bboxes = multiclass_nms(
            scores, labels, bboxes, self.nms_thresh, self.num_classes, self.nms_class_agnostic)

        return bboxes, scores, labels
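The multiclass_nms helper from utils.misc is not shown in this article. As a rough idea of what such a step does, the following is a minimal greedy NMS sketch in plain Python; it is an assumption-based illustration, not the repository's implementation, and the names iou and greedy_nms are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, labels, iou_thresh, class_agnostic=False):
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        suppressed = False
        for j in keep:
            # In the class-aware mode only boxes with the same label compete.
            same_group = class_agnostic or labels[i] == labels[j]
            if same_group and iou(boxes[i], boxes[j]) > iou_thresh:
                suppressed = True
                break
        if not suppressed:
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (0, 0, 10, 10), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7, 0.6]
labels = [0, 0, 1, 0]
print(greedy_nms(boxes, scores, labels, iou_thresh=0.5))
print(greedy_nms(boxes, scores, labels, iou_thresh=0.5, class_agnostic=True))
```

In the class-aware call the duplicate box with a different label survives, while the class-agnostic call removes it; this is the kind of behavior a flag such as nms_class_agnostic would be expected to switch between.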

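Next come positive-sample matching, loss computation, and data preprocessing.

- For positive-sample matching and the loss, we continue with the approach used for YOLOv2.

- For data preprocessing and augmentation, we no longer use the SSD-style pipeline from before; instead, we train our YOLOv3 with the YOLOv5 data-processing methods.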