MongoDB(3)

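Contents

- I. Replica Sets
  - Introduction
  - The three roles in a replica set
  - Creating the replica set
    - Creating the primary node
    - Creating the secondary node
    - Creating the arbiter node
    - Initializing the replica set and the primary
  - Reading and writing data in the replica set
  - Primary election rules
  - Cluster failure analysis
    - Secondary failure
    - Primary failure
    - Arbiter failure
    - Arbiter and primary failure
    - Arbiter and secondary failure
    - Primary and secondary failure
- II. Sharded Cluster
  - Sharding concepts
  - Components of a sharded cluster
  - Target architecture of the sharded cluster
  - Sharding mechanism
    - How data is split
    - How balance is maintained
  - Creating the shard (storage) replica sets
    - First replica set: shard1
    - Second replica set: shard2
  - Creating the config server replica set
  - Creating and using the routers (mongos)
    - Creating and connecting to the first router
    - Configuring sharding through the router
    - Testing inserts after sharding
    - Adding a second router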

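I. Replica Sets

Introduction

A replica set in MongoDB is a group of mongod processes that maintain the same data set. Replica sets provide redundancy and high availability and are the basis for all production deployments.

Put another way, a replica set is like a master-slave cluster with automatic failover. In plain terms, several machines replicate the same data asynchronously, so each machine holds a copy of it. When the primary goes down, another server is promoted to primary automatically, without user intervention. The secondaries can also serve as read-only servers, which separates reads from writes and spreads the load.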

- 冗余和数据可用性

- 复制提供冗余并提高数据可用性。通过在不同数据库服务器上提供多个数据副本,复制可提供一定级别的容错功能,以防止丢失单个数据库服务器。在某些情况下,复制可以提供增加的读取性能,因为客户端可以将读取操作发送到不同的服务上,在不同数据中心维护数据副本可以增加分布式应用程序的数据位置和可用性。您还可以为专用目的的维护其他副本,例如灾难恢复,报告或备份。

- MongoDB中的复制

- 副本集是一组维护相同数据集的mongod实例。副本集包含多个数据承载节点和可选的一个仲裁节点。在承载数据的节点中,一个且仅一个成员被视为主节点,而其他节点被视为次要(从)节点。主节点接收所有写操作。副本集只能有一个主要能够确认具有{w: “most”}写入关注的写入;虽然在某些情况下,另一个mongod实例可能暂时认为自己也是主要的。主要记录其操作日志中的数据集的所有更改,即oplog。

- 主从复制和副本集区别

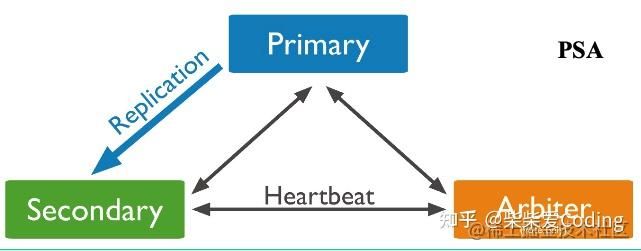

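The three roles in a replica set

A replica set has two types of member and three roles.

- Two types
  - Primary: the main connection point for data operations. It accepts reads and writes.
  - Secondaries: nodes that keep redundant backup copies of the data. They can serve reads or take part in elections.
- Three roles
  - Primary member: receives all write operations. This is the primary node.
  - Replica member: a secondary that maintains the same data set by replicating from the primary, i.e. it holds a backup of the data. It cannot accept writes but can serve reads (this must be enabled first). This is the default kind of secondary.
  - Arbiter: keeps no copy of the data and only votes in elections. It is still a member of the replica set and is counted among the secondaries, but it can never become primary.
- Recommendations
  - If the number of data-bearing members (primary plus replicas) is even, add an arbiter to make the total odd, which makes it easier to reach a voting majority.
  - If that number is already odd, you do not need an arbiter.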
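The reasoning behind the recommendation is simple arithmetic. The sketch below is plain JavaScript that runs in Node.js. It assumes every member has exactly one vote, and it computes the majority and how many members may fail while a primary can still be elected.

```javascript
// Assumption: every member counted here is a voting member with exactly one vote.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be lost while a majority is still reachable.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (let n = 2; n <= 5; n++) {
  console.log(`${n} voting members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

With 4 voting members the majority rises to 3, yet the set still survives only one failure, the same as with 3. Adding an arbiter to reach 5 raises the tolerance to 2.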

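Creating the replica set

We run several instances on one server: one primary, one secondary, and one arbiter.

Creating the primary node

- Create the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/replica_sets/myrs_27017/log
mkdir -p /usr/local/mongodb/replica_sets/myrs_27017/data/db
```

- Create or edit the configuration file

```shell
vim /usr/local/mongodb/replica_sets/myrs_27017/mongod.conf
```

```yaml
systemLog:
  # write the log to a file
  destination: file
  path: "/usr/local/mongodb/replica_sets/myrs_27017/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/replica_sets/myrs_27017/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  # run mongod in the background
  fork: true
  pidFilePath: "/usr/local/mongodb/replica_sets/myrs_27017/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27017
replication:
  # every member of the set uses the same name
  replSetName: myrs
```

- Start the node

```shell
mongod -f /usr/local/mongodb/replica_sets/myrs_27017/mongod.conf
```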

Creating the secondary node

- Create the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/replica_sets/myrs_27018/log
mkdir -p /usr/local/mongodb/replica_sets/myrs_27018/data/db
```

- Create or edit the configuration file

```shell
vim /usr/local/mongodb/replica_sets/myrs_27018/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/replica_sets/myrs_27018/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/replica_sets/myrs_27018/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/replica_sets/myrs_27018/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27018
replication:
  replSetName: myrs
```

- Start the node

```shell
mongod -f /usr/local/mongodb/replica_sets/myrs_27018/mongod.conf
```

Creating the arbiter node

- Create the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/replica_sets/myrs_27019/log
mkdir -p /usr/local/mongodb/replica_sets/myrs_27019/data/db
```

- Create or edit the configuration file

```shell
vim /usr/local/mongodb/replica_sets/myrs_27019/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/replica_sets/myrs_27019/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/replica_sets/myrs_27019/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/replica_sets/myrs_27019/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27019
replication:
  replSetName: myrs
```

- Start the node

```shell
mongod -f /usr/local/mongodb/replica_sets/myrs_27019/mongod.conf
```

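Initializing the replica set and the primary

- Connect to any node with the client, but preferably to the node intended as primary (27017)

```shell
mongo --host=localhost --port=27017
```

- Many commands are unavailable at this point; the replica set must be initialized first

```javascript
> rs.initiate()
myrs:SECONDARY>
myrs:PRIMARY>
```

The prompt changes: the node first appears as a secondary, which by default can be neither read from nor written to. Wait a moment, press Enter, and it becomes the primary.

- View the replica set configuration

```javascript
myrs:PRIMARY> rs.conf()
```

- Add the secondary and the arbiter

```javascript
rs.add("192.168.64.129:27018")     // add the secondary
rs.addArb("192.168.64.129:27019")  // add the arbiter
```

- Allow reads on a secondary. Run this in a shell connected to the secondary; it applies to that connection only. In newer versions of the mongo shell, `rs.slaveOk()` is deprecated in favor of `rs.secondaryOk()`.

```javascript
rs.slaveOk()
```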
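`rs.initiate()` also accepts a configuration document. This lets you declare the whole member list, including the arbiter, in one step instead of calling `rs.add()` and `rs.addArb()` afterwards.

The sketch below builds such a document in plain JavaScript (Node.js):

- `buildReplSetConfig` is a hypothetical helper, not a shell built-in.
- The fields it fills (`_id`, `members`, `host`, `arbiterOnly`) are standard replica set configuration fields.
- The resulting object is meant for a fresh node that has not been initialized yet.

```javascript
// Hypothetical helper: builds the config document accepted by rs.initiate(cfg).
// Data-bearing hosts come first; the optional arbiter is flagged with arbiterOnly.
function buildReplSetConfig(replSetName, dataHosts, arbiterHost) {
  const members = dataHosts.map((host, i) => ({ _id: i, host: host }));
  if (arbiterHost) {
    members.push({ _id: members.length, host: arbiterHost, arbiterOnly: true });
  }
  return { _id: replSetName, members: members };
}

const cfg = buildReplSetConfig(
  "myrs",
  ["192.168.64.129:27017", "192.168.64.129:27018"],
  "192.168.64.129:27019"
);
console.log(JSON.stringify(cfg, null, 2));
// In a mongo shell connected to 27017 you would then run: rs.initiate(cfg)
```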

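Reading and writing data in the replica set

- Test reads and writes on the nodes with the three different roles
  - Primary test: the insert succeeds.

```shell
mongo --port=27017
```

```javascript
use articledb
db.comment.insert(
  {
    "articleid": "10000",
    "content": "Today we are learning mongodb",
    "userid": "1001",
    "nickname": "www",
    "createdatetime": new Date(),
    "likenum": NumberInt(10),
    "state": null
  }
)
```

  - Secondary test: the insert fails, because only the primary accepts writes. Reads such as `show tables` work only after `rs.slaveOk()` has been run on this connection, and they then show the data replicated from the primary.

```shell
mongo --port=27018
```

```javascript
use articledb
db.comment.insert(
  {
    "articleid": "10000",
    "content": "Today we are learning mongodb",
    "userid": "1001",
    "nickname": "www",
    "createdatetime": new Date(),
    "likenum": NumberInt(10),
    "state": null
  }
)
show tables
```

  - The arbiter stores no data

```shell
mongo --port=27019
```

```javascript
rs.slaveOk()
show dbs   // this fails as well: the arbiter holds no data
```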

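Primary election rules

- In a replica set, MongoDB elects the primary automatically. An election is triggered when:
  - the primary fails;
  - the primary becomes unreachable over the network. Members exchange heartbeats every 2 seconds, and an election is called when the primary has not responded within the election timeout (10 seconds by default);
  - an administrator intervenes, for example with `rs.stepDown(600)`. The primary steps down immediately and cannot be elected primary again for 600 seconds.
- Once an election is triggered, the primary is chosen according to the following rules.
  - The winner is decided by votes:
    - the node with the most votes that also has the support of a "majority" of the members wins;
    - "majority" is defined as follows: if the replica set has N voting members, a majority is `floor(N/2) + 1`. When fewer than a majority of the members are alive, the replica set cannot elect a primary, cannot accept writes, and is read-only;
    - if candidates have the same number of votes and each has majority support, the node with the most recent data wins;
    - data recency is determined by comparing the oplog.
  - Priority has a strong influence on the outcome.
    - Priority is a weight between 0 and 1000. It does not add votes, since each voting member still casts one vote. A higher value makes a member more likely to call an election and to win it, so it is more eligible to become primary; a lower value makes it less eligible. A member with priority 0 can never become primary.
    - The default priority is 1.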
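The rules above can be condensed into a small model. This is a simplified sketch of the rules as described here, not MongoDB's actual Raft-based election protocol. Its assumptions:

- every member has one vote;
- arbiters and priority-0 members cannot be elected;
- a numeric `optime` stands for how recent a node's oplog is.

```javascript
// Simplified model of the election rules described above (not MongoDB's real protocol).
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Returns the name of the elected primary, or null if no primary can be elected.
function electPrimary(members) {
  const aliveVoters = members.filter((m) => m.alive).length;
  if (aliveVoters < majority(members.length)) {
    return null; // no majority reachable: the set stays read-only
  }
  // Arbiters and priority-0 members vote but cannot be elected.
  const candidates = members.filter((m) => m.alive && !m.arbiter && m.priority > 0);
  // Most recent data first; among equally recent candidates, higher priority first.
  candidates.sort((a, b) => b.optime - a.optime || b.priority - a.priority);
  return candidates.length > 0 ? candidates[0].name : null;
}

const members = [
  { name: "27017", alive: false, arbiter: false, priority: 1, optime: 100 }, // failed primary
  { name: "27018", alive: true, arbiter: false, priority: 1, optime: 100 },
  { name: "27019", alive: true, arbiter: true, priority: 0, optime: 0 },
];
console.log(electPrimary(members)); // → 27018
```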

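Cluster failure analysis

Secondary failure

- The primary and the arbiter lose the heartbeat to the secondary. The primary is still alive, so no election is triggered.
- If data is written to the primary in the meantime and the secondary is then restarted, the data written on the primary is synchronized to the secondary automatically.
- Effect: normal operation is not affected.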
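Catching up after a restart works because the secondary replays the primary's oplog, starting after the last entry it applied. The sketch below models that idea in plain JavaScript. The entry format (`ts`, `doc`) and the `catchUp` function are simplifications for illustration, not MongoDB's real oplog schema.

```javascript
// Simplified model of oplog-based catch-up (not MongoDB's real oplog format).
// Each entry has an increasing timestamp "ts"; the secondary remembers the last ts it applied.
function catchUp(primaryOplog, secondary) {
  const missing = primaryOplog.filter((entry) => entry.ts > secondary.lastApplied);
  for (const entry of missing) {
    secondary.docs.push(entry.doc); // "apply" an insert operation
    secondary.lastApplied = entry.ts;
  }
  return missing.length; // number of entries replayed
}

const primaryOplog = [
  { ts: 1, doc: { _id: 1 } },
  { ts: 2, doc: { _id: 2 } }, // written while the secondary was down
  { ts: 3, doc: { _id: 3 } }, // written while the secondary was down
];
const secondary = { lastApplied: 1, docs: [{ _id: 1 }] };
console.log(catchUp(primaryOplog, secondary)); // → 2
console.log(secondary.docs.length); // → 3
```

In the real system this only works while the primary still has the missing entries. The oplog is a capped collection, so a secondary that stays down long enough for its last applied entry to be overwritten falls too far behind and needs a full resync.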

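Primary failure

- The secondary and the arbiter lose the heartbeat to the primary. Once this has lasted more than 10 seconds there is no primary, so an election is started automatically.
- There is only one secondary, so it is the only candidate, and voting begins.
- The arbiter votes for the secondary and the secondary votes for itself. That makes two votes, which is a majority.
- 27019 is the arbiter and cannot be elected, so 27018 does not vote for it; it receives 0 votes.
- Result: 27018 becomes the primary and can serve reads and writes.
- When the former primary 27017 is started again, it rejoins as a secondary, and 27018 remains the primary.
- Logging in to 27017 shows that it is now a secondary; its data is synchronized from 27018 automatically.
- Effect: normal operation is not affected.

Arbiter failure

- The primary and the secondary lose the heartbeat to the arbiter. The primary is still alive, so no election is triggered.
- Effect: normal operation is not affected.

Arbiter and primary failure

- Here 27018 is the primary (it took over in the primary-failure test) and 27017 is the surviving secondary. With no primary, the replica set is read-only and cannot accept writes.
- 27017 cannot win a majority, i.e. at least 2 votes, because it has only its own single vote.
- To trigger an election, bring back any one other member.
- If only the arbiter 27019 comes back, 27017 is certain to become primary because there is no other candidate: the arbiter votes but cannot be elected.
- If only 27018 comes back, an election is held. 27017 and 27018 have one vote each, so whichever has the more recent data becomes primary.
- Effect: normal operation is affected and must be dealt with.

Arbiter and secondary failure

- After about 10 seconds, the primary 27017 steps down to a secondary by itself, because it can no longer reach a majority (service degradation).
- The replica set can no longer accept writes; it has effectively failed.
- Effect: normal operation is affected and must be dealt with.

Primary and secondary failure

- Only the arbiter is left, so no primary can be elected and no data-bearing node is available.
- Effect: normal operation is affected and must be dealt with.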
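The six scenarios above follow from one rule: a writable primary needs a majority of the voting members to be alive, and at least one of the alive members must hold data.

The sketch below encodes that rule for the three-member set used here. It treats 27017 as primary, 27018 as secondary and 27019 as arbiter, with one vote each. It is a simplification that ignores timing and priorities.

```javascript
// Simplified availability check: one vote per member, arbiters cannot become primary.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

function hasWritablePrimary(members, down) {
  const alive = members.filter((m) => !down.includes(m.name));
  const enoughVotes = alive.length >= majority(members.length);
  const electable = alive.some((m) => !m.arbiter); // at least one data-bearing member alive
  return enoughVotes && electable;
}

const replSet = [
  { name: "27017", arbiter: false }, // primary
  { name: "27018", arbiter: false }, // secondary
  { name: "27019", arbiter: true },  // arbiter
];

const scenarios = {
  "secondary down": ["27018"],
  "primary down": ["27017"],
  "arbiter down": ["27019"],
  "arbiter + primary down": ["27019", "27017"],
  "arbiter + secondary down": ["27019", "27018"],
  "primary + secondary down": ["27017", "27018"],
};

for (const [label, down] of Object.entries(scenarios)) {
  console.log(`${label}: ${hasWritablePrimary(replSet, down) ? "writable" : "NOT writable"}`);
}
```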

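II. Sharded Cluster

Sharding concepts

Sharding is a method of distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high-throughput operations. Sharding splits the data and spreads it over different machines. This makes it possible to store more data and handle more load without a single powerful large computer. Sharding is sometimes also called partitioning.

Database systems with large data sets or high-throughput applications can challenge the capacity of a single server. For example, high query rates can exhaust the server's CPU capacity, and working sets larger than the system's RAM stress the I/O capacity of the disk drives.

There are two ways to handle system growth: vertical scaling and horizontal scaling.

Vertical scaling increases the capacity of a single server, for example with a more powerful CPU, more RAM, or more storage. The limits of available technology may prevent a single machine from being powerful enough for a given workload. In addition, cloud providers have hard ceilings based on their available hardware configurations, so vertical scaling has a practical maximum.

Horizontal scaling divides the system's data set and spreads the load over multiple servers, adding servers as needed to increase capacity. The overall speed or capacity of a single machine may not be high, but each machine handles only a subset of the total workload. This can be more efficient than a single high-speed, high-capacity server. Expanding capacity only requires adding servers as needed, which can cost less overall than high-end hardware for a single machine. The trade-off is greater complexity in infrastructure and deployment maintenance.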

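Components of a sharded cluster

- Shards (storage): each shard contains a subset of the sharded data. Each shard can be deployed as a replica set.
- mongos (router): mongos acts as a query router, providing the interface between client applications and the sharded cluster.
- Config servers (the "scheduling" configuration): config servers store the cluster's metadata and configuration settings. Starting with MongoDB 3.4, config servers must be deployed as a replica set (CSRS).

Target architecture of the sharded cluster

Two shard replica sets (3 + 3) + one config server replica set (3) + two routers (2), for a total of 11 nodes.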
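For reference, the port layout of this architecture can be written down as data. The sketch below, in plain JavaScript for Node.js, does two things:

- it counts the nodes;
- it builds the `replSetName/host:port,...` seed strings later passed to `sh.addShard()` and `sharding.configDB`.

The names `topology`, `seedString` and `nodeCount` are hypothetical.

```javascript
// Port layout of the 11-node cluster built in this article, all on one host.
const host = "192.168.64.129";
const topology = {
  shards: {
    myshardrs01: [27018, 27118, 27218], // primary, secondary, arbiter
    myshardrs02: [27318, 27418, 27518], // primary, secondary, arbiter
  },
  configServers: { myconfigrs: [27019, 27119, 27219] }, // three data-bearing members
  mongos: [27017, 27117],
};

// "<replSetName>/host:port,host:port,..." as used by sh.addShard() and sharding.configDB.
function seedString(replSetName, ports) {
  return replSetName + "/" + ports.map((p) => `${host}:${p}`).join(",");
}

function nodeCount(t) {
  const sum = (group) => Object.values(group).reduce((acc, ports) => acc + ports.length, 0);
  return sum(t.shards) + sum(t.configServers) + t.mongos.length;
}

console.log(nodeCount(topology)); // → 11
for (const [name, ports] of Object.entries(topology.shards)) {
  console.log(`sh.addShard("${seedString(name, ports)}")`);
}
console.log(seedString("myconfigrs", topology.configServers.myconfigrs));
```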

分片机制

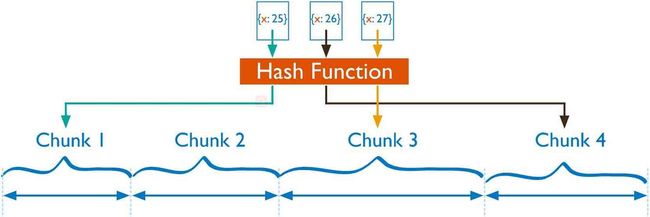

数据如何切分

基于分片切分后的数据块称为chunk,一个分片后的集合会包含多个chunk,每个chunk位于哪个分片(shard)则记录在Config Server(配置服务器)上。

Mongos在操作分片集合时,会自动根据分片键找到对应的chunk,并向该chunk所在的分片发起操作请求。

数据是根据分片策略来进行切分的,而分片策略则由分片键(ShardKey)+分片算法(ShardStrategy)组成。

MongoDB支持两种分片算法:哈希分片和范围分片

哈希分片使用哈希索引来在分片集群中对数据进行划分。哈希索引计算某一个字段的哈希值作为索引键,这个值被用作片键。
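The routing step can be pictured as a lookup in the chunk table. mongos keeps a cached copy of this metadata from the config servers; the sketch below only models the lookup. Its assumptions:

- each chunk owns the half-open range [min, max) of shard key values;
- `-Infinity` and `Infinity` stand in for MongoDB's MinKey and MaxKey;
- the chunk boundaries are made up for illustration.

```javascript
// Simplified model of routing by shard key. The chunk table is illustrative;
// in a real cluster it is stored on the config servers.
const chunks = [
  { min: -Infinity, max: 30, shard: "myshardrs01" },
  { min: 30, max: 60, shard: "myshardrs02" },
  { min: 60, max: Infinity, shard: "myshardrs01" },
];

// Find the chunk whose [min, max) range contains the key and return its shard.
function routeByShardKey(key) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  return chunk.shard;
}

console.log(routeByShardKey(25)); // → myshardrs01
console.log(routeByShardKey(45)); // → myshardrs02
console.log(routeByShardKey(99)); // → myshardrs01
```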

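How balance is maintained

Data lives in chunks, and chunks are assigned to shards. How do we make sure the chunks are distributed evenly?

- A. Full pre-allocation: the number of chunks and shards is defined in advance. For example, with 10 shards and 1000 chunks, each shard holds 100 chunks. The cluster is then balanced (under this assumption).
- B. No pre-allocation: this case is more complex. A chunk that grows too large is split, and repeated splits can leave the cluster unbalanced. Adding shards dynamically also creates an imbalance. The cluster's balancer detects the imbalance and migrates chunk data between shards until balance is restored.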
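Case B can be illustrated with a toy balancer. It moves one chunk at a time from the shard with the most chunks to the one with the fewest. It stops when their counts differ by less than a threshold.

The threshold of 2 and the chunk counts are assumptions for illustration. Actual MongoDB versions use their own migration thresholds, and newer ones balance by data size rather than chunk count.

```javascript
// Toy balancer: migrate one chunk at a time from the fullest shard to the emptiest
// until the difference is below the threshold (threshold value is an assumption).
function balance(chunkCounts, threshold = 2) {
  const counts = { ...chunkCounts };
  let moves = 0;
  for (;;) {
    const shards = Object.keys(counts);
    const most = shards.reduce((a, b) => (counts[b] > counts[a] ? b : a));
    const least = shards.reduce((a, b) => (counts[b] < counts[a] ? b : a));
    if (counts[most] - counts[least] < threshold) break;
    counts[most] -= 1; // migrate one chunk
    counts[least] += 1;
    moves += 1;
  }
  return { counts, moves };
}

// A new, empty shard was just added next to two existing ones.
console.log(balance({ shardA: 10, shardB: 8, shardC: 0 }));
// → counts 6 / 6 / 6 after 6 moves
```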

Creating the shard (storage) replica sets

First replica set: shard1

- Prepare the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27018/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27018/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27118/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27118/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27218/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27218/data/db
```

- Create the configuration file

```shell
vim /usr/local/mongodb/sharded_cluster/myshardrs01_27018/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27018
replication:
  replSetName: myshardrs01
sharding:
  # cluster role: shardsvr for a shard node, configsvr for a config server node
  clusterRole: shardsvr
```

- The configuration files for 27118 and 27218 are omitted; copy this one and change the port and the directory paths.
- Start the first replica set: one primary, one secondary, one arbiter

```shell
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27018/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27118/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27218/mongod.conf
ps -ef | grep mongod
```

- Initialize the replica set and create the primary; preferably connect to the node intended as primary

```shell
mongo --port 27018
```

```javascript
rs.initiate()
rs.status()
rs.add("192.168.64.129:27118")
rs.addArb("192.168.64.129:27218")
rs.conf()
```

Second replica set: shard2

- Same as the first replica set, but with ports 27318, 27418 and 27518 and the replica set name myshardrs02.
- Prepare the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27318/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27318/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27418/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27418/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27518/log
mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27518/data/db
```

- Create the configuration file

```shell
vim /usr/local/mongodb/sharded_cluster/myshardrs02_27318/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27318
replication:
  replSetName: myshardrs02
sharding:
  # cluster role: shardsvr for a shard node, configsvr for a config server node
  clusterRole: shardsvr
```

- The configuration files for 27418 and 27518 are omitted; copy this one and change the port and the directory paths.
- Start the second replica set: one primary, one secondary, one arbiter

```shell
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27318/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27418/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27518/mongod.conf
ps -ef | grep mongod
```

- Initialize the replica set and create the primary; preferably connect to the node intended as primary

```shell
mongo --port 27318
```

```javascript
rs.initiate()
rs.status()
rs.add("192.168.64.129:27418")
rs.addArb("192.168.64.129:27518")
rs.conf()
```

Creating the config server replica set

- Prepare the directories for data and logs

```shell
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27019/log
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27019/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27119/log
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27119/data/db
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27219/log
mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27219/data/db
```

- Create the configuration file. Note that it differs slightly from the ones above: the cluster role is set to config server (`sharding: clusterRole: configsvr`), and the replica set name must match the one used in the routers' `configDB` setting.

```shell
vim /usr/local/mongodb/sharded_cluster/myconfigrs_27019/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/data/db"
  journal:
    # enable the durability journal
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27019
replication:
  replSetName: myconfigrs
sharding:
  # cluster role: shardsvr for a shard node, configsvr for a config server node
  clusterRole: configsvr
```

- The configuration files for 27119 and 27219 are omitted; copy this one and change the port and the directory paths.
- Start the config server replica set: one primary and two secondaries

```shell
mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27019/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27119/mongod.conf
mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27219/mongod.conf
ps -ef | grep mongod
```

- Initialize the replica set and create the primary; preferably connect to the node intended as primary
- Do not add an arbiter here: config server replica sets do not allow arbiters, and the command fails

```shell
mongo --port 27019
```

```javascript
rs.initiate()
rs.status()
rs.add("192.168.64.129:27119")
rs.add("192.168.64.129:27219")
rs.conf()
```
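As with the first replica set, the members could also be listed in a single config document passed to `rs.initiate()`. For a config server replica set the document carries `configsvr: true`.

The sketch below is a hypothetical helper, not a shell built-in. It builds this document in plain JavaScript and rejects arbiters, mirroring the restriction noted above.

```javascript
// Hypothetical helper: builds an rs.initiate() document for a config server replica set.
// configsvr: true marks the set as a config server replica set; arbiters are rejected.
function buildConfigServerConfig(replSetName, members) {
  if (members.some((m) => m.arbiterOnly)) {
    throw new Error("config server replica sets cannot have arbiters");
  }
  return {
    _id: replSetName,
    configsvr: true,
    members: members.map((m, i) => ({ _id: i, host: m.host })),
  };
}

const csrs = buildConfigServerConfig("myconfigrs", [
  { host: "192.168.64.129:27019" },
  { host: "192.168.64.129:27119" },
  { host: "192.168.64.129:27219" },
]);
console.log(JSON.stringify(csrs));
// In a mongo shell connected to a fresh 27019 you could then run: rs.initiate(csrs)
```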

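Creating and using the routers (mongos)

Creating and connecting to the first router

- Prepare the log directory

```shell
mkdir -p /usr/local/mongodb/sharded_cluster/mymongos_27017/log
```

- Create the configuration file

```shell
vim /usr/local/mongodb/sharded_cluster/mymongos_27017/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/mymongos_27017/log/mongod.log"
  logAppend: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/mymongos_27017/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27017
sharding:
  # config server replica set: <replSetName>/<host:port list>
  configDB: myconfigrs/192.168.64.129:27019,192.168.64.129:27119,192.168.64.129:27219
```

- Start mongos. The router is started with the `mongos` binary, not `mongod`.

```shell
mongos -f /usr/local/mongodb/sharded_cluster/mymongos_27017/mongod.conf
```

- Log in to mongos with the client
  - Data cannot be written yet; a write returns an error. The router is so far connected only to the config servers. No shard has been added yet, so there is nowhere to store business data.

```shell
mongo --port 27017
```

```javascript
mongos> use add
mongos> db.aa.insert({aa:"aa"})
```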

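Configuring sharding through the router

- Add the first shard replica set

```javascript
mongos> sh.addShard("myshardrs01/192.168.64.129:27018,192.168.64.129:27118,192.168.64.129:27218")
```

- Check the sharding status

```javascript
mongos> sh.status()
```

- Add the second shard replica set

```javascript
mongos> sh.addShard("myshardrs02/192.168.64.129:27318,192.168.64.129:27418,192.168.64.129:27518")
```

- Check the sharding status

```javascript
mongos> sh.status()
```

- Remove a shard
  - Note: the last remaining shard cannot be removed.
  - Removing a shard migrates its data to the other shards automatically, which takes some time.
  - When the migration has finished, run the remove command again to actually remove the shard.
  - If you are following along, add myshardrs02 back with `sh.addShard()` afterwards, because the tests below use both shards.

```javascript
mongos> use admin
mongos> db.runCommand({removeShard: "myshardrs02"})   // starts migrating data off the shard
mongos> sh.status()                                     // shows the data migration in progress
mongos> db.runCommand({removeShard: "myshardrs02"})   // run again after the migration to complete the removal
```

- Enable sharding: `sh.enableSharding("database")`, then `sh.shardCollection("database.collection", {"key": 1})`

```javascript
mongos> sh.enableSharding("articledb")
```

- Shard a collection: you must specify the collection and the shard key with `sh.shardCollection()`

```javascript
mongos> sh.shardCollection(namespace, key, unique)
```

- Parameters
  - namespace: the namespace (`database.collection`) of the target collection to shard
  - key: the index specification document to use as the shard key
  - unique: when `true`, the index on the shard key field is enforced to be unique

When sharding a collection you need to choose a shard key. The shard key is a single field or a compound field that every document must contain and that is indexed. MongoDB divides the data into chunks according to the shard key and distributes the chunks evenly across all shards.

To divide the data into chunks, MongoDB uses either hashed sharding (random, even distribution) or ranged sharding (distribution by value). Any field can serve as the shard key, for example nickname, but it must be present in every document.

- Sharding rule 1: hashed strategy
  - MongoDB computes the hash of a field's value and uses the hash to create chunks. With hashed sharding, documents with "close" shard key values are unlikely to be stored in the same chunk, so the data is spread out better.
  - Use nickname as the shard key and shard the data by the hash of its value:

```javascript
sh.shardCollection("articledb.comment", {"nickname": "hashed"})   // shard the comment collection by hash
sh.status()   // shows the data migration in progress
```

- Sharding rule 2: ranged strategy
  - With ranged sharding, MongoDB splits the data into ranges of shard key values. Take a numeric shard key and picture a line from negative infinity to positive infinity, on which every shard key value is a point. MongoDB divides this line into shorter, non-overlapping segments called chunks, each holding the data whose shard key falls within a certain range. Documents with "close" shard key values are therefore likely to be stored in the same chunk, and so on the same shard.

```javascript
mongos> sh.shardCollection("articledb.author", {"age": 1})   // shard by the author's age
```

- A collection can have only one shard key; specifying another one returns an error.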

Testing inserts after sharding

- Test 1 (hashed rule): log in to mongos and insert 1000 documents into comment in a loop

```javascript
mongos> use articledb
switched to db articledb
mongos> for(var i=1;i<=1000;i++) {db.comment.insert({_id:i+"",nickname:"Test"+i})}
WriteResult({ "nInserted" : 1 })
mongos> db.comment.count()
1000
```

- The loop uses JavaScript syntax, because the mongo shell is a JavaScript shell. The shell prints only the WriteResult of the last insert.
- Data inserted through the router must contain the shard key, otherwise the insert fails. This holds for the version used here; starting with MongoDB 4.4, a missing shard key field is treated as null.
- Log in to the primary of each shard and count the documents:

myshardrs01:

```shell
mongo --port 27018
```

```javascript
myshardrs01:PRIMARY> use articledb
switched to db articledb
myshardrs01:PRIMARY> db.comment.count()
505
```

myshardrs02:

```shell
mongo --port 27318
```

```javascript
myshardrs02:PRIMARY> use articledb
switched to db articledb
myshardrs02:PRIMARY> db.comment.count()
495
```

- The data is fairly scattered:

```javascript
myshardrs02:PRIMARY> db.comment.find()
{ "_id" : "1", "nickname" : "Test1" }
{ "_id" : "3", "nickname" : "Test3" }
{ "_id" : "5", "nickname" : "Test5" }
{ "_id" : "6", "nickname" : "Test6" }
{ "_id" : "7", "nickname" : "Test7" }
{ "_id" : "10", "nickname" : "Test10" }
{ "_id" : "11", "nickname" : "Test11" }
{ "_id" : "12", "nickname" : "Test12" }
{ "_id" : "14", "nickname" : "Test14" }
{ "_id" : "17", "nickname" : "Test17" }
{ "_id" : "22", "nickname" : "Test22" }
{ "_id" : "23", "nickname" : "Test23" }
{ "_id" : "24", "nickname" : "Test24" }
{ "_id" : "28", "nickname" : "Test28" }
{ "_id" : "29", "nickname" : "Test29" }
{ "_id" : "30", "nickname" : "Test30" }
{ "_id" : "34", "nickname" : "Test34" }
{ "_id" : "37", "nickname" : "Test37" }
{ "_id" : "39", "nickname" : "Test39" }
{ "_id" : "44", "nickname" : "Test44" }
```

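- Test 2 (ranged rule): log in to mongos and insert 2000 test documents into author in a loop

```javascript
mongos> use articledb
switched to db articledb
mongos> for (var i=1;i<=2000;i++) {db.author.save({"name":"test"+i,"age":NumberInt(i%120)})}
WriteResult({ "nInserted" : 1 })
mongos> db.author.count()
2000
```

- After the inserts succeed, check the data on each of the two shard replica sets again:

```javascript
myshardrs02:PRIMARY> db.author.count()
2000
```

- All of the data ended up on a single shard.
- If the data has not been spread across the shards, possible reasons are:
  - The system is busy and chunks are still being migrated.
  - The chunk is not full yet. The default chunk size is 64 MB in the version used here (newer releases default to 128 MB). Data only starts going to the other shard once a chunk fills up. For testing you can lower the chunk size, but do not change it in production.

```javascript
use config
db.settings.save({_id:"chunksize",value:1})    // change to 1 MB
db.settings.save({_id:"chunksize",value:64})   // set back to 64 MB
```

With the hashed strategy, inserted data is spread roughly evenly across the two shards.

With the ranged strategy, data is first placed on one of the shards.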
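The two observations can be reproduced with a small simulation that uses the same keys as the two tests above: nickname "Test1" to "Test1000", and age = i % 120 for 2000 documents. It is a sketch under stated assumptions:

- FNV-1a replaces MongoDB's own hash function;
- `hash % 2` replaces MongoDB's hashed chunk ranges;
- the second ranged chunk table, split at age 60, is a hypothetical outcome of a later split and migration.

```javascript
// FNV-1a (32-bit): a simple stand-in hash, NOT the hash MongoDB uses.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  return h >>> 0;
}

const shards = ["myshardrs01", "myshardrs02"];

// Hashed: the shard is picked from the hash of the nickname.
const hashed = { myshardrs01: 0, myshardrs02: 0 };
for (let i = 1; i <= 1000; i++) {
  hashed[shards[fnv1a("Test" + i) % shards.length]] += 1;
}

// Ranged: the shard is picked by the chunk whose [min, max) range contains the age.
function countByRange(chunks) {
  const counts = { myshardrs01: 0, myshardrs02: 0 };
  for (let i = 1; i <= 2000; i++) {
    const age = i % 120;
    counts[chunks.find((c) => age >= c.min && age < c.max).shard] += 1;
  }
  return counts;
}
const oneChunk = countByRange([{ min: -Infinity, max: Infinity, shard: "myshardrs02" }]);
const splitAt60 = countByRange([
  { min: -Infinity, max: 60, shard: "myshardrs02" },
  { min: 60, max: Infinity, shard: "myshardrs01" },
]);

console.log(hashed);    // → { myshardrs01: 501, myshardrs02: 499 }
console.log(oneChunk);  // → { myshardrs01: 0, myshardrs02: 2000 }
console.log(splitAt60); // → { myshardrs01: 981, myshardrs02: 1019 }
```

With a single chunk, every ranged insert lands on whichever shard owns that chunk, which matches what test 2 showed. The data only spreads out after the chunk has been split and a chunk migrated.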

Adding a second router

- Prepare the log directory

```shell
mkdir -p /usr/local/mongodb/sharded_cluster/mymongos_27117/log
```

- Create the configuration file

```shell
vim /usr/local/mongodb/sharded_cluster/mymongos_27117/mongod.conf
```

```yaml
systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/mymongos_27117/log/mongod.log"
  logAppend: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/mymongos_27117/log/mongod.pid"
net:
  bindIp: localhost,192.168.64.129
  port: 27117
sharding:
  # config server replica set: <replSetName>/<host:port list>
  configDB: myconfigrs/192.168.64.129:27019,192.168.64.129:27119,192.168.64.129:27219
```

- Start mongos

```shell
mongos -f /usr/local/mongodb/sharded_cluster/mymongos_27117/mongod.conf
```

- Log in to mongos with the client
  - Log in to 27117 with the mongo client. The second router needs no further configuration, because the sharding configuration is stored on the config servers.

```shell
mongo --port 27117
```

```javascript
mongos> sh.status()
```