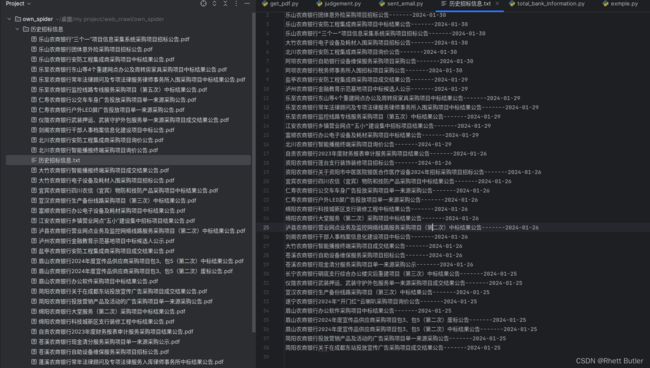

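Bid-Announcement Crawler for a Bank in Sichuan

I am new to web scraping and tried building a small crawler project. It still has many shortcomings, and corrections from more experienced readers are welcome.

Project requirements: crawl bid announcements from the Sichuan Rural Credit (四川农信) bank website and keep only those from the last two days, filtered by date, keyword, and similar criteria. Save each announcement locally as a PDF. Finally, write a program that automatically emails a given category of announcements to the responsible contact at the relevant company on a schedule.

Project pain points:

1: The announcements are published on the site as JPG and PNG images, and saving them as images is the only approach I know. The images have to be downloaded first, then converted to PDF with a third-party library, and then the downloaded images and their folders are deleted with shutil. I consider this quite inefficient.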
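
One possible way to drop the manual shutil cleanup is to let the intermediate images live in a temporary folder that removes itself. The sketch below is only an illustration under assumptions: `build_pdf_via_temp_dir` and `fake_convert` are hypothetical names, and `fake_convert` merely stands in for whatever image-to-PDF step is actually used.

```python
import tempfile
from pathlib import Path
from typing import Callable, Iterable, Sequence


def build_pdf_via_temp_dir(
    images: Iterable[bytes],
    output_pdf: Path,
    convert: Callable[[Sequence[Path], Path], None],
) -> None:
    """Write the images to a temporary folder, convert them, then let the folder vanish."""
    with tempfile.TemporaryDirectory() as tmp:
        paths = []
        for index, data in enumerate(images, start=1):
            path = Path(tmp) / f"{index}.jpg"
            path.write_bytes(data)
            paths.append(path)
        convert(paths, output_pdf)
    # Leaving the with-block deletes the temporary folder and everything in it.


def fake_convert(paths: Sequence[Path], output_pdf: Path) -> None:
    """Hypothetical stand-in for the real conversion: just concatenates the bytes."""
    output_pdf.write_bytes(b"".join(p.read_bytes() for p in paths))


with tempfile.TemporaryDirectory() as out_dir:
    target = Path(out_dir) / "demo.pdf"
    build_pdf_via_temp_dir([b"page-1", b"page-2"], target, fake_convert)
    print(target.read_bytes())
```

This does not avoid the disk round trip, but the cleanup no longer depends on a separate deletion pass succeeding.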

The code follows. I will add some notes on my thinking and some explanation later; please point out anything that is wrong.

    # total_bank_information.py (file name inferred from the import in the filtering script further down)
    import os
    import re

    import requests
    from bs4 import BeautifulSoup as BS
    from tqdm import tqdm

    # Request headers that mimic a desktop Firefox browser.
    headers = {
        "Referer": "https://www.scrcu.com/other/zbcg/index.html",
        "Host": "www.scrcu.com",
        "User-Agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
    }

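    def page_turning(nums):
        """Return the URLs of the first `nums` listing pages."""
        url_list = []
        for i in range(1, nums + 1):
            if i == 1:
                # The first page has no numeric suffix.
                url = "https://www.scrcu.com/other/zbcg/index.html"
            else:
                url = f"https://www.scrcu.com/other/zbcg/index_{i}.html"
            # Append inside the loop so that every page is collected.
            url_list.append(url)
        return url_list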

    def get_information(url):
        """Return {title: date} for every announcement on one listing page."""
        s = requests.session()
        response = s.get(url, headers=headers).content
        soup = BS(response, "lxml")
        # Anchor on the first <li class="cl">, then take every <li> that follows it.
        mai = soup.find("li", {"class": "cl"})
        if mai is None:
            return {}
        ls = mai.find_all_next("li")
        label_list = []
        for name in ls:
            label = name.text.strip().replace("\n", "")
            label_list.append(label)
        label_dict = {}
        for elements in label_list:
            # Split each entry into its date (YYYY-MM-DD) and the remaining title text.
            match = re.search(r"(\d{4}-\d{2}-\d{2})", elements)
            if match:
                time_part = match.group(1)
                char_part = elements.replace(time_part, "")
            else:
                time_part = None
                char_part = elements
            label_dict[char_part] = time_part
        return label_dict
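
To make the title/date split concrete, here is a minimal self-contained sketch of the same regex idea. The helper name `split_label` and the sample strings are hypothetical and not taken from the real site.

```python
import re
from typing import Optional, Tuple

DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def split_label(label: str) -> Tuple[str, Optional[str]]:
    """Split a listing entry into (title, date); date is None when absent."""
    text = label.strip().replace("\n", "")
    match = DATE_PATTERN.search(text)
    if match is None:
        return text, None
    date = match.group(0)
    # Remove only the matched date, then trim whitespace left around the title.
    title = (text[: match.start()] + text[match.end():]).strip()
    return title, date


# Hypothetical sample entries for illustration only.
print(split_label("Office equipment procurement notice\n2024-01-15"))
print(split_label("Entry without a date"))
```

Returning `None` for a missing date keeps such entries distinguishable, so later steps can decide whether to skip them.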

    def get_page_url(url):
        """Return absolute URLs of the announcement detail pages linked from one listing page."""
        s = requests.session()
        response = s.get(url, headers=headers).content
        soup = BS(response, "lxml")
        page_url_tags = soup.find_all("a", {"class": "left"})
        page_url_list = [tag["href"] for tag in page_url_tags if "href" in tag.attrs]
        # The code treats each href as a site-relative path and prefixes the host.
        return ["https://www.scrcu.com" + element for element in page_url_list]
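
Prefixing the host by string concatenation only works while every href is a site-relative path. As a hedged alternative, the standard library's `urljoin` resolves relative and absolute hrefs alike. The helper name `to_absolute` and the sample hrefs below are hypothetical.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.scrcu.com/other/zbcg/index.html"


def to_absolute(href: str, base: str = BASE_URL) -> str:
    """Resolve an href against the URL of the listing page it came from."""
    return urljoin(base, href)


# Hypothetical hrefs for illustration only.
print(to_absolute("/other/zbcg/12345.html"))                       # site-relative path
print(to_absolute("https://www.scrcu.com/other/zbcg/67890.html"))  # already absolute
print(to_absolute("detail.html"))                                  # relative to the page
```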

    def get_image_url(url):
        """Return the src of every content image on a detail page."""
        s = requests.session()
        response = s.get(url, headers=headers).content
        soup = BS(response, "lxml")
        # Skip logos, anything under /r/cms/www, and the WeChat share-dialog close icon.
        excluded = re.compile(r"logo|/r/cms/www|share_wechat_close")
        img_url_list = []
        for img in soup.find_all("img"):
            img_url = img.get("src")
            # Drop every <img> without a src, not only the first one.
            if img_url and not excluded.search(img_url):
                img_url_list.append(img_url)
        return img_url_list

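    def get_pdf(url_list, path):
        """Download each image in url_list into `path` as 1.jpg, 2.jpg, ...

        Despite its name, this function only downloads images; the PDF is built later.
        """
        for index, img_url in enumerate(url_list, start=1):
            response = requests.get(img_url)
            image_path = os.path.join(path, f"{index}.jpg")
            with open(image_path, "wb") as f:
                f.write(response.content)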
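
Since the announcements exist as both JPG and PNG, every download ends up with a `.jpg` name regardless of its real format. A small sketch of keeping the extension found in the URL is shown below. `image_file_name`, `ALLOWED_EXTENSIONS`, and the sample URLs are assumptions made for illustration.

```python
import os
from urllib.parse import urlparse

ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def image_file_name(index: int, img_url: str, default_ext: str = ".jpg") -> str:
    """Name a download by its 1-based index, keeping the URL's own extension if known."""
    # urlparse(...).path drops any query string before the extension is read.
    ext = os.path.splitext(urlparse(img_url).path)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        ext = default_ext
    return f"{index}{ext}"


# Hypothetical URLs for illustration only.
print(image_file_name(1, "https://example.com/images/a.png"))
print(image_file_name(2, "https://example.com/images/b.JPG?v=3"))
print(image_file_name(3, "https://example.com/images/c"))
```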

    def main(nums):
        """Crawl the first `nums` listing pages, save the index file, and download all images."""
        if nums > 50:
            nums = 50
            print("At most 50 pages can be browsed in one run")
        url_list = page_turning(nums)
        folder_path = "历史招标信息"  # "historical bid information"
        if os.path.exists(folder_path):
            print("Folder already exists")
        else:
            os.mkdir(folder_path)

        # Collect {title: date} across all listing pages.
        label_dict = {}
        for url in tqdm(url_list, ncols=100, position=0):
            label_dict.update(get_information(url))
        file_path = os.path.join(folder_path, "历史招标信息.txt")
        with open(file_path, "a+", encoding="utf-8") as f:
            for key, value in label_dict.items():
                # The date is None when no date was found in the entry.
                f.write(key + "-------" + (value or "") + "\n")

        # Collect the detail-page URL of every announcement.
        page_url_list = []
        for url in tqdm(url_list, ncols=100, position=0):
            page_url_list.extend(get_page_url(url))

        # Flat list of image URLs, plus the number of images on each detail page.
        img_url_list = []
        img_url_len_list = []
        for page_url in tqdm(page_url_list, ncols=100, position=0):
            img_url_list1 = get_image_url(page_url)
            img_url_len_list.append(len(img_url_list1))
            img_url_list.extend(img_url_list1)

        # Give each announcement its own folder holding its slice of the image list.
        # This assumes titles and detail pages line up one-to-one and in the same order.
        start = 0
        for key, number in tqdm(
            zip(label_dict.keys(), img_url_len_list),
            ncols=100,
            position=0,
        ):
            end = start + number
            new_folder_path = os.path.join(folder_path, key)
            os.makedirs(new_folder_path, exist_ok=True)
            get_pdf(img_url_list[start:end], new_folder_path)
            start = end
        return label_dict


    # get_pdf.py (file name inferred from the `import get_pdf` statement in the filtering script)
    import os
    import shutil

    from PIL import Image
    from reportlab.lib.pagesizes import letter
    from reportlab.pdfgen import canvas

    def get_img_dir():
        """Return the names of the per-announcement image folders."""
        dir_path = '历史招标信息'
        img_dir_list = []
        for image_dirs in os.listdir(dir_path):
            if os.path.isdir(os.path.join(dir_path, image_dirs)):
                img_dir_list.append(image_dirs)
        return img_dir_list

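    def turn_pdf(img_dir_path, output_pdf_path):
        """Combine the images in img_dir_path into one PDF, one image per page."""
        page_width, page_height = letter
        c = canvas.Canvas(output_pdf_path, pagesize=letter)
        image_list = [
            name for name in os.listdir(img_dir_path)
            if name.lower().endswith(('.png', '.jpg', '.jpeg', '.gif', '.bmp'))
        ]
        # os.listdir returns names in arbitrary order, so sort by the numeric file name (1.jpg, 2.jpg, ...).
        image_list.sort(key=lambda name: int(os.path.splitext(name)[0]))
        for img_file in image_list:
            img_path = os.path.join(img_dir_path, img_file)
            with Image.open(img_path) as img:
                width, height = img.size
            # Scale the image to fit the page while keeping its aspect ratio.
            scale = min(page_width / width, page_height / height)
            c.drawImage(img_path, x=0, y=0, width=width * scale, height=height * scale)
            c.showPage()
        c.save()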
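
Page order in the PDF depends on the order of the image file names, and a plain string sort places `10.jpg` before `2.jpg`. The sketch below shows the difference. `sort_numerically` is a hypothetical helper, and the file names are made up.

```python
import os
from typing import List


def sort_numerically(file_names: List[str]) -> List[str]:
    """Sort names like '10.jpg' by their integer stem; non-numeric names go last."""
    def key(name: str):
        stem = os.path.splitext(name)[0]
        return (0, int(stem), name) if stem.isdigit() else (1, 0, name)
    return sorted(file_names, key=key)


names = ["10.jpg", "2.jpg", "1.jpg", "cover.png"]
print(sorted(names))            # plain string sort
print(sort_numerically(names))  # numeric sort
```

Reversing the output of `os.listdir` relies on whatever order the file system happens to return, so an explicit sort is the safer choice once an announcement has ten or more pages.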

    def del_folder():
        """Delete every image folder once its PDF has been written."""
        dir_path = '历史招标信息'
        for img_dirs in os.listdir(dir_path):
            path = os.path.join(dir_path, img_dirs)
            if os.path.isdir(path):
                shutil.rmtree(path)


    def main():
        img_dir_list = get_img_dir()
        for img_dir_name in img_dir_list:
            img_dir_path = os.path.join('历史招标信息', img_dir_name)
            output_pdf_path = f'{img_dir_path}.pdf'
            turn_pdf(img_dir_path, output_pdf_path)
        # Remove the image folders only after all PDFs exist.
        del_folder()

    # judgement.py (file name inferred from the `import judgement` statement in the mail script)
    from datetime import datetime, timedelta

    from tqdm import tqdm

    import get_pdf
    from total_bank_information import main

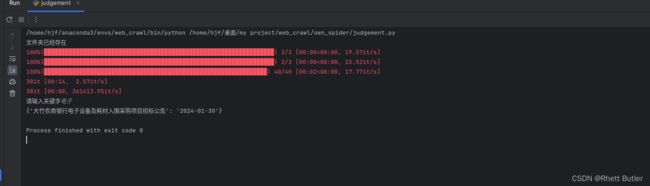

    # Crawl the first two listing pages; this runs as soon as the module is imported.
    label_dict = main(2)

    today = datetime.today()
    yesterday = today - timedelta(days=1)
    today = today.strftime("%Y-%m-%d")
    yesterday = yesterday.strftime("%Y-%m-%d")

    # Keep only announcements dated today or yesterday.
    nowadays_label_dict = {}
    for key, value in tqdm(label_dict.items()):
        if value == today or value == yesterday:
            nowadays_label_dict[key] = value


    def get_keyword():
        """Return the recent announcements whose title contains the keyword."""
        keyword = '招标'  # "bid invitation"; change this to select another category
        dict1 = {}
        for elements in nowadays_label_dict.keys():
            if keyword in elements:
                dict1[elements] = nowadays_label_dict[elements]
        print(dict1)
        return dict1


    # Convert the downloaded images to PDFs and delete the image folders.
    get_pdf.main()

    if __name__ == '__main__':
        get_keyword()
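
The date window and keyword check can also be written as one pure function, which makes the rule easy to test without crawling. This is a sketch under assumptions: `filter_recent` is a hypothetical name, and the sample titles and dates are invented.

```python
from datetime import date, timedelta
from typing import Dict, Optional


def filter_recent(
    labels: Dict[str, Optional[str]],
    keyword: str,
    today: date,
    days: int = 2,
) -> Dict[str, str]:
    """Keep entries dated within the last `days` days (today included) whose title contains keyword."""
    wanted = {(today - timedelta(days=offset)).isoformat() for offset in range(days)}
    return {
        title: day
        for title, day in labels.items()
        if day in wanted and keyword in title
    }


# Hypothetical data for illustration only.
sample = {
    "Server procurement bid notice": "2024-01-15",
    "Cleaning service bid notice": "2024-01-14",
    "Award result announcement": "2024-01-15",
    "Old bid notice": "2024-01-10",
}
print(filter_recent(sample, "bid", date(2024, 1, 15)))
```

Passing `today` in as an argument, rather than reading the clock inside the function, is what keeps the result reproducible.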

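    # Mail script: sends the filtered announcements on a schedule.
    import smtplib
    import time
    from email.header import Header
    from email.mime.text import MIMEText

    import schedule

    import judgement

    smtp_server = 'smtp.163.com'
    smtp_port = 465
    sender = 'sender address'          # placeholder
    password = 'authorization code'    # placeholder
    receiver = 'recipient address'     # placeholder
    subject = 'Bid announcements'
    # One entry per line. judgement runs its crawl on import, so this data is fixed at start-up.
    body = str(judgement.get_keyword()).replace(',', '\n')


    def send_email():
        msg = MIMEText(body, 'plain', 'utf-8')
        msg['Subject'] = Header(subject, 'utf-8')
        # Addresses are plain ASCII, so they need no Header encoding.
        msg['From'] = sender
        msg['To'] = receiver
        try:
            # The with-block closes the connection even if login or sending fails.
            with smtplib.SMTP_SSL(smtp_server, smtp_port) as s:
                s.login(sender, password)
                s.sendmail(sender, receiver, msg.as_string())
            print('Email sent successfully!')
        except smtplib.SMTPException as e:
            print('Failed to send email, error:', e)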
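
Turning the result dictionary into a readable mail body can be done without string replacement on the dict's printed form. The following is a minimal sketch using the standard library's `EmailMessage`. The helper name `build_message`, the addresses, and the sample entry are all hypothetical.

```python
from email.message import EmailMessage
from typing import Dict


def build_message(entries: Dict[str, str], sender: str, receiver: str, subject: str) -> EmailMessage:
    """Build a plain-text email with one 'title (date)' line per announcement."""
    lines = [f"{title} ({day})" for title, day in entries.items()]
    msg = EmailMessage()
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = receiver
    msg.set_content("\n".join(lines) or "No matching announcements.")
    return msg


# Hypothetical data and addresses for illustration only.
message = build_message(
    {"Server procurement bid notice": "2024-01-15"},
    "sender@example.com",
    "receiver@example.com",
    "Bid announcements",
)
print(message.get_content())
```

A message built this way can be handed to `send_message` on an `smtplib.SMTP_SSL` connection, and titles that contain commas are no longer split across lines.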

    # Send once every 1440 minutes (24 hours); adjust the interval here, in minutes.
    schedule.every(1440).minutes.do(send_email)

    while True:
        schedule.run_pending()
        time.sleep(1)

Results of a run: