Installing and deploying k8s, and publishing an application with kuboard

目录

环境准备

系统规划

配置免密

将桥接的IPv4流量传递到iptables的链

系统基础配置

安装docker

安装docker及基础依赖

配置docker的仓库下载地址

部署k8s

添加阿里云的k8s源

安装kubeadm,kubelet和kubectl

初始化masteer节点

部署node节点

部署flannel网络插件

下载插件

应用flannel文件

查看flannel状态

部署kuboard

查看kuboard所需的镜像

所有节点下载kuboard镜像

修改kuboard.yaml文件

应用kuboard文件

查看token

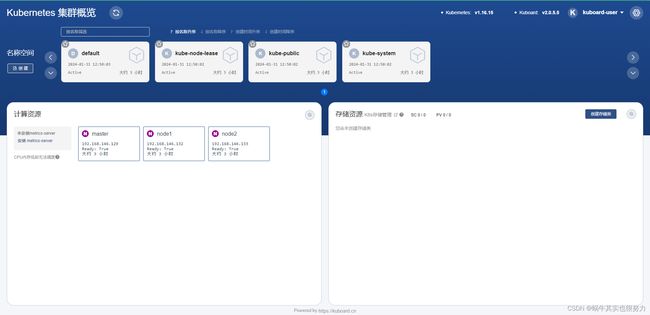

访问kuboard

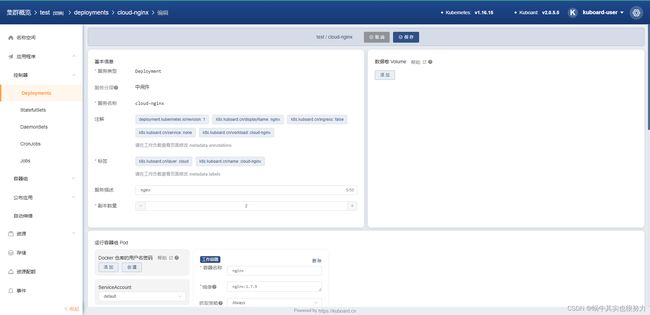

简单创建个应用

创建名称空间

创建nginx容器

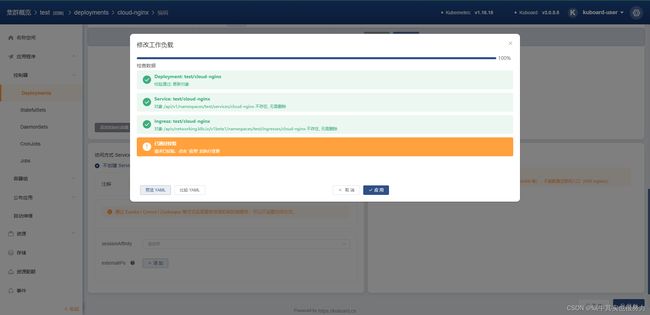

保存应用

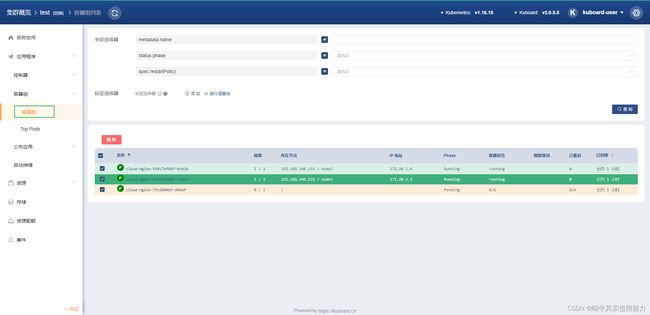

查看容器

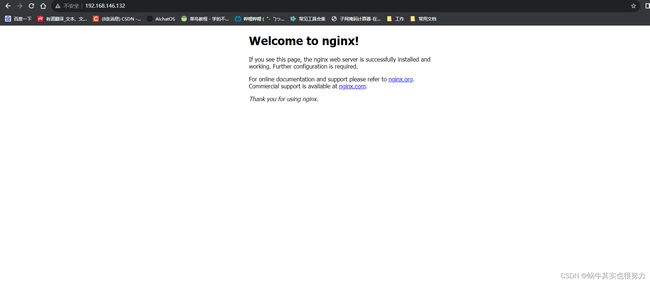

访问容器

环境准备

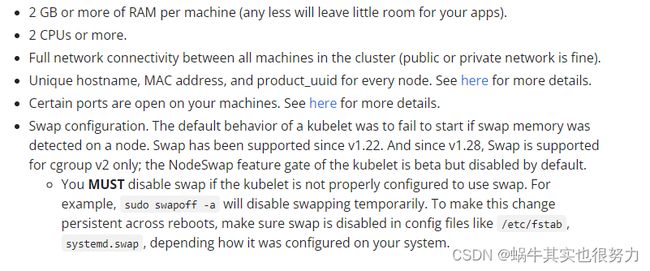

可参考官方文档

Installing kubeadm | Kubernetes

- 至少三台虚拟机

- 内存2G以上

- CPU至少2C

- 关闭swap分区或者初始化的时候加参数
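You can check the hardware requirements above on each VM before going further. This is a minimal sketch, not part of kubeadm. The function names are hypothetical. Accepting 1800 MiB as "2G" is an assumption, because a 2G VM reports slightly less than 2048 MiB in /proc/meminfo.

```shell
# Pure check. Arguments: CPU count, MemTotal in kB, SwapTotal in kB.
# Prints one line per failed requirement and returns non-zero if any failed.
check_node_requirements() {
  local cpus=$1 mem_kb=$2 swap_kb=$3 ok=0
  if [ "$cpus" -lt 2 ]; then
    echo "FAIL: need at least 2 CPUs, found $cpus"; ok=1
  fi
  # Assumption: >= 1800 MiB counts as "2G", since the kernel reports a bit less.
  if [ "$mem_kb" -lt $((1800 * 1024)) ]; then
    echo "FAIL: need about 2G of memory, found $((mem_kb / 1024)) MiB"; ok=1
  fi
  if [ "$swap_kb" -ne 0 ]; then
    echo "FAIL: swap is still enabled ($swap_kb kB)"; ok=1
  fi
  return $ok
}

# Read the real values from this machine and run the check.
check_this_node() {
  local mem swap
  mem=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  swap=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
  check_node_requirements "$(nproc)" "$mem" "$swap"
}
```

On a VM that meets the list, `check_this_node && echo ok` prints `ok`. Run it again after the `swapoff -a` step to confirm that swap is really off.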

System plan

| VM | CPU | Memory | Disk | OS | IP address | Hostname |
|---|---|---|---|---|---|---|
| master | 2 cores | 2G | 20G | CentOS 7.9 | 192.168.146.129 | master |
| node1 | 2 cores | 2G | 20G | CentOS 7.9 | 192.168.146.132 | node1 |
| node2 | 2 cores | 2G | 20G | CentOS 7.9 | 192.168.146.133 | node2 |

配置免密

方便后续拷贝文件,不是必须操作

master主机上执行:

ssh-keygen将密钥拷贝给master和node1还有node2

ssh-copy-id master

ssh-copy-id node1

ssh-copy-id node2ssh测试不需要密码即可

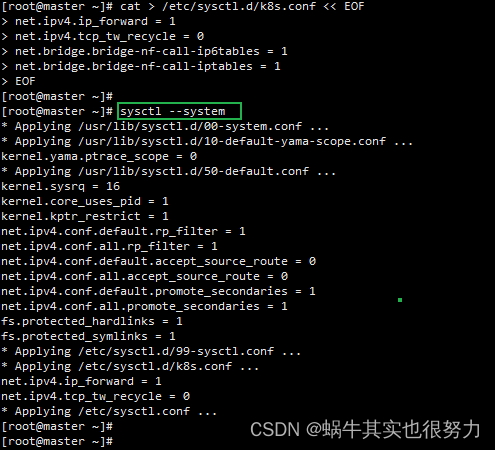

ssh node1将桥接的IPv4流量传递到iptables的链

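Run on all three hosts:

```shell
# The net.bridge.* keys only exist once the br_netfilter kernel module is loaded
modprobe br_netfilter
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.ipv4.tcp_tw_recycle = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# Reload all sysctl configuration files, including the new one
sysctl --system
```

Basic system configuration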

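Run on all three hosts:

- Stop and disable the firewall

- Disable SELinux

- Disable swap

- Add hostname mappings to /etc/hosts

```shell
systemctl stop firewalld && systemctl disable firewalld
# SELinux: permissive right now, disabled after the next reboot
setenforce 0
sed -i s/SELINUX=enforcing/SELINUX=disabled/ /etc/selinux/config
# Swap: off right now, and commented out in fstab so it stays off after reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
cat >> /etc/hosts << EOF
192.168.146.129 master
192.168.146.132 node1
192.168.146.133 node2
EOF
```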
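`cat >> /etc/hosts` appends the three lines every time it runs, so repeating this step leaves duplicate entries. Below is a minimal sketch of an idempotent alternative. `add_host_entries` is a hypothetical helper. It takes the target file as its first argument, so you can try it on a copy first.

```shell
# Append "IP hostname" lines to a hosts file, skipping any hostname that is
# already mapped on a non-comment line. Usage: add_host_entries FILE "IP NAME" ...
add_host_entries() {
  local file=$1; shift
  local entry name
  for entry in "$@"; do
    name=${entry##* }   # hostname = last space-separated word of the entry
    # Exit status 0 if the hostname appears as a whole field (after the IP) on any line.
    if ! awk -v n="$name" '/^[[:space:]]*#/ {next}
        {for (i = 2; i <= NF; i++) if ($i == n) found = 1}
        END {exit !found}' "$file"; then
      echo "$entry" >> "$file"
    fi
  done
}
```

On each host (as root), run `add_host_entries /etc/hosts "192.168.146.129 master" "192.168.146.132 node1" "192.168.146.133 node2"` in place of the heredoc.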

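Set each host's name by running the matching command on that host:

```shell
hostnamectl set-hostname master   # on master
hostnamectl set-hostname node1    # on node1
hostnamectl set-hostname node2    # on node2
```

Install Docker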

Install Docker and base dependencies

```shell
yum update
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install -y docker-ce docker-ce-cli containerd.io
systemctl start docker
systemctl enable docker
```

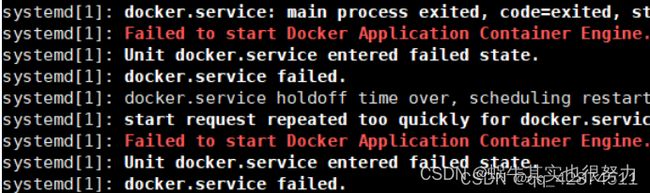

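If you hit an error like the one in the screenshot, resolve it as follows.

Step 1: uninstall

```shell
yum remove 'docker-*'
```

Step 2: update the Linux kernel (`yum update` upgrades all packages, the kernel included)

```shell
yum update
```

Step 3: install Docker with yum (this installs the `docker` package from the CentOS repositories rather than docker-ce)

```shell
yum install docker
```

Step 4: start Docker

```shell
systemctl start docker
```

Step 5: check Docker's status

```shell
systemctl status docker
```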

Configure Docker's registry mirror

/etc/docker/daemon.json should contain:

```shell
cat /etc/docker/daemon.json
{
  "registry-mirrors": ["https://uj9wvi24.mirror.aliyuncs.com"]
}
```
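The listing above only shows the finished file. If /etc/docker/daemon.json does not exist yet, one way to create it is sketched below. `write_docker_mirror_config` is a hypothetical helper. It deliberately refuses to overwrite an existing non-empty file, so other daemon settings are not lost. The mirror address is the author's personal Aliyun accelerator, so substitute your own.

```shell
# Write a daemon.json that only sets registry-mirrors.
# Usage: write_docker_mirror_config FILE MIRROR_URL
write_docker_mirror_config() {
  local file=$1 mirror=$2
  if [ -s "$file" ]; then
    echo "refusing to overwrite non-empty $file; merge the setting by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$file")"
  # Unquoted EOF: $mirror is expanded into the JSON.
  cat > "$file" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

As root, run `write_docker_mirror_config /etc/docker/daemon.json https://uj9wvi24.mirror.aliyuncs.com`, then reload systemd and restart Docker.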

```shell
systemctl daemon-reload
systemctl restart docker
```

Deploy k8s

Add the Aliyun k8s repository

Run on all three hosts. /etc/yum.repos.d/kubernetes.repo should contain:

```shell
cat /etc/yum.repos.d/kubernetes.repo
[k8s]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```
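The repo file can likewise be created in one step. This is a minimal sketch with a hypothetical helper, `write_k8s_repo`. The quoted `'EOF'` keeps the shell from expanding anything inside the heredoc.

```shell
# Write the Aliyun Kubernetes yum repo definition shown above.
# Usage: write_k8s_repo FILE   (normally /etc/yum.repos.d/kubernetes.repo)
write_k8s_repo() {
  local file=$1
  # Quoted 'EOF': the content is written verbatim, with no expansion.
  cat > "$file" <<'EOF'
[k8s]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

As root, run `write_k8s_repo /etc/yum.repos.d/kubernetes.repo`. Afterwards, `yum repolist` should list the k8s repository.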

安装kubeadm,kubelet和kubectl

三台主机均执行:

yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2

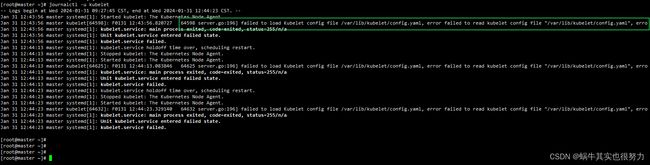

systemctl start kubelet

systemctl enable kubelet查看kubelt服务启动异常,网上查了查报错资料,说是

这是在kubeadm 进行初始化的时候,我一直以为需要kubelet启动成功才能进行初始化,其实后来发现只有初始化后才能成功启动。原文参考:

K8S服务搭建过程中出现的憨批错误_failed to load kubelet config file /var/lib/kubele-CSDN博客

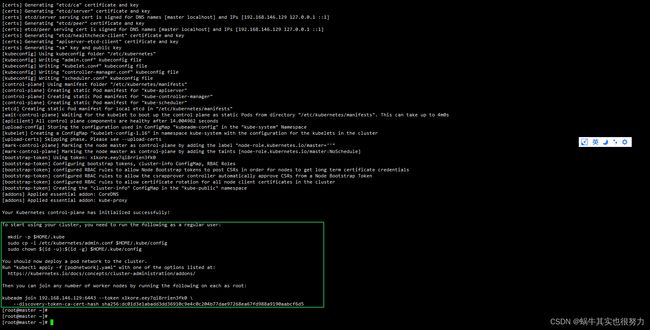
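Concretely, kubeadm writes the kubelet configuration to /var/lib/kubelet/config.yaml during `kubeadm init` (or `kubeadm join` on the nodes). Until that file exists, kubelet exits and systemd keeps restarting it, which matches the "failed to load kubelet config file" error in the reference. The minimal sketch below separates that expected case from a real failure. The helper is hypothetical. The directory is passed as a parameter so you can try it without root.

```shell
# Report whether kubeadm has written the kubelet config yet.
# Usage: kubelet_config_status [DIR]   (DIR defaults to /var/lib/kubelet)
kubelet_config_status() {
  local dir=${1:-/var/lib/kubelet}
  if [ -f "$dir/config.yaml" ]; then
    echo "config present: kubelet should start; check 'journalctl -u kubelet' if it does not"
  else
    echo "config missing: expected before kubeadm init/join, kubelet will keep restarting"
  fi
}
```

Before `kubeadm init`, this reports the file as missing, which is expected. After init, `systemctl status kubelet` should show kubelet running.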

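Initialize the master node

Run on master:

```shell
kubeadm init \
  --apiserver-advertise-address=192.168.146.129 \
  --image-repository registry.aliyuncs.com/google_containers \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=172.20.0.0/16
```

- --apiserver-advertise-address: the IP address the Kubernetes API Server advertises, i.e. the cluster's management address. Other components and users reach the API Server through it. Here it is the master's own IP.

- --image-repository: the container image registry to pull from. Here it is Aliyun's registry.

- --service-cidr: the CIDR range of the Service network. A Service is the Kubernetes abstraction for exposing an application or service. Service IPs are allocated from this range.

- --pod-network-cidr: the CIDR range of the Pod network. A Pod is the smallest schedulable unit in Kubernetes, and every Pod gets its own IP address. Pod IPs are allocated from this range.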
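The Service CIDR, the Pod CIDR and the network the VMs are on must not overlap. Below is a minimal bash sketch, with hypothetical helpers and IPv4 only, that checks this before you run `kubeadm init`. It assumes the VMs are on 192.168.146.0/24, which the original does not state.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Print "start end" (as integers) of a CIDR such as 10.1.0.0/16.
cidr_range() {
  local ip=${1%/*} len=${1#*/} base mask
  base=$(ip_to_int "$ip")
  # bash arithmetic is 64-bit, so mask back down to 32 bits after shifting.
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}
```

With the values used here, neither `cidrs_overlap 10.1.0.0/16 172.20.0.0/16 && echo overlap` nor `cidrs_overlap 192.168.146.0/24 172.20.0.0/16 && echo overlap` prints anything. A single address can be checked as a /32.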

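The output should look like the figure. A message saying the control plane initialized successfully means initialization worked.

Note: if you entered the init parameters wrong, reset with the following command and run init again:

```shell
kubeadm reset
```

Then, following the hints printed in the output above, run:

```shell
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```

Deploy the worker nodes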

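On node1 and node2, join the cluster using the actual master IP and the token that kubeadm init generated:

```shell
kubeadm join 192.168.146.129:6443 --token x1kore.eey7qi8rrien3fk0 \
  --discovery-token-ca-cert-hash sha256:dc01d3e1abadd3dd36910c9e4c0c204b77dae97268ea67fd988a9190aabcf6d5
```

By default the token expires after 24 hours. If it has expired, run `kubeadm token create --print-join-command` on master to print a fresh join command.

Deploy the flannel network plugin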

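Flannel is essentially an overlay network: it wraps packets inside another network packet for routing and forwarding. It supports several forwarding backends, including udp, vxlan, host-gw, aws-vpc, gce and alloc. The default backend for traffic between nodes is UDP forwarding; the manifest used below sets `vxlan` explicitly.

Its job is to give the Docker containers created on every node of the cluster a virtual IP address that is unique across the whole cluster.

Flannel is designed to re-plan IP address usage across all nodes in the cluster. Containers on different nodes then get non-overlapping addresses in the same internal network and can communicate directly over those internal IPs.

Flannel runs as a binary (flanneld) on every node; here it is deployed as a DaemonSet. It does two main things:

- It assigns each node a subnet, and containers on that node get their IP addresses from it automatically

- When a node joins the network, it adds route configuration for each node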
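Here is what "one subnet per node" means in practice. With `--kube-subnet-mgr`, which the DaemonSet below sets, flannel does not pick subnets itself. It reads the Pod CIDR that Kubernetes assigns to each node out of the `--pod-network-cidr` range, a /24 per node by default. The sketch below only illustrates how /24 subnets are carved out of 172.20.0.0/16. It is not flannel's or Kubernetes' code, and the cluster decides which node receives which subnet.

```shell
# Print the Nth (0-based) /24 inside a /16, e.g. 172.20.0.0/16 index 3 -> 172.20.3.0/24.
# Illustration only: assumes a /16 network split into /24 per-node subnets.
nth_node_subnet() {
  local network=${1%/*} index=$2
  local a b
  IFS=. read -r a b _ _ <<< "$network"
  if [ "$index" -lt 0 ] || [ "$index" -gt 255 ]; then
    echo "index out of range for /24s in a /16" >&2
    return 1
  fi
  echo "$a.$b.$index.0/24"
}
```

`for i in 0 1 2; do nth_node_subnet 172.20.0.0/16 "$i"; done` prints 172.20.0.0/24, 172.20.1.0/24 and 172.20.2.0/24. On the running cluster, `kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}'` shows the subnets actually assigned.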

下载插件

参考的如下博客:

k8s安装网络插件-flannel_k8s安装flannel网络插件-CSDN博客

复制以下文件内容

cat kube-flannel.yml---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

seLinux:

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

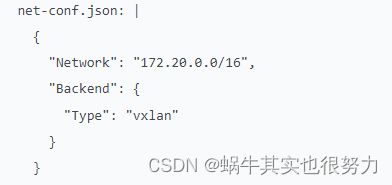

net-conf.json: |

{

"Network": "172.20.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni-plugin

image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

command:

- cp

args:

- -f

- /flannel

- /opt/cni/bin/flannel

volumeMounts:

- name: cni-plugin

mountPath: /opt/cni/bin

- name: install-cni

image: rancher/mirrored-flannelcni-flannel:v0.18.1

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: rancher/mirrored-flannelcni-flannel:v0.18.1

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: EVENT_QUEUE_DEPTH

value: "5000"

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

- name: xtables-lock

mountPath: /run/xtables.lock

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni-plugin

hostPath:

path: /opt/cni/bin

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

- name: xtables-lock

hostPath:

path: /run/xtables.lock

type: FileOrCreate

Note: the Network value must equal the `--pod-network-cidr` passed to `kubeadm init` (172.20.0.0/16 here).

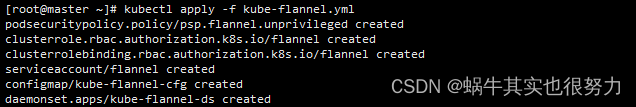
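Flannel's stock manifest uses 10.244.0.0/16, so forgetting this edit is an easy mistake. The minimal sketch below uses a hypothetical helper. It extracts the `"Network"` value from kube-flannel.yml with sed and compares it with the CIDR given to `kubeadm init`. It assumes the `"Network": "..."` line format shown above.

```shell
# Compare the "Network" value in a flannel manifest with the kubeadm Pod CIDR.
# Usage: check_flannel_network FILE POD_CIDR
check_flannel_network() {
  local file=$1 want=$2 got
  # Take the quoted value after "Network": on the first matching line.
  got=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$file" | head -n 1)
  if [ "$got" = "$want" ]; then
    echo "ok: flannel Network $got matches --pod-network-cidr"
  else
    echo "mismatch: flannel Network is '${got:-<not found>}', kubeadm used $want" >&2
    return 1
  fi
}
```

Before applying the file, `check_flannel_network kube-flannel.yml 172.20.0.0/16` should report ok.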

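Apply the flannel manifest

```shell
kubectl apply -f kube-flannel.yml
```

Check the flannel status

View the pods in all namespaces (flannel is still initializing):

Once flannel has finished initializing, its status is Running, and the coredns service becomes healthy as well.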
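One way to watch this is `kubectl get pods --all-namespaces`; the original does not show the exact command. The minimal sketch below uses a hypothetical helper that reads that table on stdin and lists every pod whose STATUS is not Running. It assumes the default columns (NAMESPACE, NAME, READY, STATUS, ...). Pods that legitimately finish, such as Completed jobs, would also be listed.

```shell
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace/name status" for every pod that is not Running.
# Returns 0 only if every pod is Running.
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4; bad = 1 }
       END { exit bad }'
}
```

`until kubectl get pods --all-namespaces | pods_not_running; do sleep 10; done` keeps polling until flannel and coredns are both Running.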


Deploy kuboard

Note: kuboard is a microservice management UI built on Kubernetes. Beginners can deploy it to learn Kubernetes.

```shell
wget https://kuboard.cn/install-script/kuboard.yaml
```

Check the images kuboard needs

```shell
grep image kuboard.yaml
image: eipwork/kuboard:latest
imagePullPolicy: Always
```

Pull the kuboard image on all nodes

```shell
docker pull eipwork/kuboard:latest
```

Edit kuboard.yaml

Change the image pull policy to IfNotPresent:

```shell
vim kuboard.yaml
```
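The image is already pulled on every node, so `IfNotPresent` lets kubelet use the local copy instead of pulling again. If you prefer not to edit by hand, a sed one-liner makes the same change. It is wrapped here in a hypothetical helper that keeps a .bak copy. Running it twice is harmless.

```shell
# Switch imagePullPolicy from Always to IfNotPresent throughout a manifest.
# Usage: use_local_images FILE   (the original is kept as FILE.bak)
use_local_images() {
  local file=$1
  sed -i.bak 's/imagePullPolicy: Always/imagePullPolicy: IfNotPresent/' "$file"
}
```

Run `use_local_images kuboard.yaml`, then check the result with `grep image kuboard.yaml`.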

```shell
grep image kuboard.yaml
image: eipwork/kuboard:latest
imagePullPolicy: IfNotPresent
```

Apply the kuboard manifest

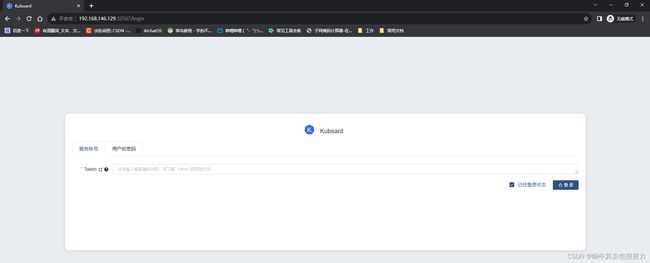

```shell
kubectl apply -f kuboard.yaml
```

Get the token

Find the port for accessing kuboard, and get the token for logging in to kuboard:

```shell
kubectl get all -n kube-system | grep kuboard
echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)
```

Access kuboard

Open in a browser: http://192.168.146.129:32567/
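Port 32567 is the NodePort of the kuboard Service. The `kubectl get all -n kube-system | grep kuboard` command above lists it in a PORT(S) value of the form `port:32567/TCP`. If yours differs, use that port instead. The minimal sketch below uses a hypothetical helper to extract the node port from such a line. It assumes a single `port:nodePort/PROTOCOL` mapping.

```shell
# Extract the NodePort from a `kubectl get svc`-style line on stdin,
# e.g. "... 80:32567/TCP ..." -> 32567. Assumes one port mapping per line.
node_port() {
  grep -oE '[0-9]+:[0-9]+/[A-Z]+' | head -n 1 | cut -d: -f2 | cut -d/ -f1
}
```

`kubectl get all -n kube-system | grep kuboard | grep '^service/' | node_port` prints the port to put in the URL.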

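Create a simple application

Create a namespace

Open the controllers page of the newly created test namespace

Create a deployment

If anything is unclear, click through to the online documentation

Create an nginx container

Follow the example in the online documentation

Save the application

If no errors are reported, it worked

View the container

Access the container

You can also view the deployment's YAML: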

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: '2'
    k8s.kuboard.cn/displayName: nginx
    k8s.kuboard.cn/ingress: 'false'
    k8s.kuboard.cn/service: none
    k8s.kuboard.cn/workload: cloud-nginx
  creationTimestamp: '2024-01-31T07:05:51Z'
  generation: 2
  labels:
    k8s.kuboard.cn/layer: cloud
    k8s.kuboard.cn/name: cloud-nginx
  name: cloud-nginx
  namespace: test
  resourceVersion: '26553'
  selfLink: /apis/apps/v1/namespaces/test/deployments/cloud-nginx
  uid: 9b4b9dd4-7e84-4d92-91c1-3689e3b9c52e
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: cloud
      k8s.kuboard.cn/name: cloud-nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        k8s.kuboard.cn/layer: cloud
        k8s.kuboard.cn/name: cloud-nginx
    spec:
      containers:
        - image: 'nginx:1.7.9'
          imagePullPolicy: Always
          name: nginx
          ports:
            - containerPort: 80
              hostPort: 80
              name: http
              protocol: TCP
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      serviceAccount: default
      serviceAccountName: default
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 2
  conditions:
    - lastTransitionTime: '2024-02-01T00:50:54Z'
      lastUpdateTime: '2024-02-01T00:50:54Z'
      message: Deployment has minimum availability.
      reason: MinimumReplicasAvailable
      status: 'True'
      type: Available
    - lastTransitionTime: '2024-02-01T02:30:03Z'
      lastUpdateTime: '2024-02-01T02:30:03Z'
      message: ReplicaSet "cloud-nginx-7fcd944bf" has timed out progressing.
      reason: ProgressDeadlineExceeded
      status: 'False'
      type: Progressing
  observedGeneration: 2
  readyReplicas: 2
  replicas: 3
  unavailableReplicas: 1
  updatedReplicas: 1
```
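The status block above deserves a second look. `updatedReplicas: 1` out of `replicas: 3`, together with the `ProgressDeadlineExceeded` condition, means the second revision never finished rolling out, even though two old pods are still available. One plausible cause, not confirmed in the original, is `hostPort: 80`. With 2 replicas, `maxUnavailable: 25%` rounds down to 0 and `maxSurge: 25%` rounds up to 1, so the rollout must first add one extra pod. The two running pods already hold port 80 on node1 and node2, and kubeadm taints the master against ordinary pods, so the extra pod cannot be scheduled. On a live cluster the proper check is `kubectl rollout status deployment/cloud-nginx -n test`. The sketch below is a simplified offline version using a hypothetical helper. It only compares the status counters and ignores spec.replicas and observedGeneration.

```shell
# Read a Deployment's YAML on stdin and report, from the top-level status
# counters only, whether the rollout looks complete.
# Assumes two-space indentation under "status:" as in kubectl -o yaml output.
rollout_state() {
  awk '
    /^status:/ { in_status = 1; next }
    /^[^ ]/    { in_status = 0 }
    in_status && /^  [a-zA-Z]+: [0-9]+$/ { sub(":", "", $1); v[$1] = $2 + 0 }
    END {
      if (v["updatedReplicas"] == v["replicas"] && v["availableReplicas"] == v["replicas"] && v["unavailableReplicas"] + 0 == 0)
        print "complete"
      else
        printf "in progress or stalled: %d/%d updated, %d unavailable\n", v["updatedReplicas"], v["replicas"], v["unavailableReplicas"]
    }'
}
```

For the status shown above, `kubectl get deploy cloud-nginx -n test -o yaml | rollout_state` would print `in progress or stalled: 1/3 updated, 1 unavailable`.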

At this point, the k8s deployment and a simple application on top of it are working.