Reading notes on Feature Refinement

A paper on micro-expression recognition published in Pattern Recognition; these notes record its key points.

Abstract:

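This paper proposes a novel Feature Refinement (FR) with expression-specific feature learning and fusion for micro-expression recognition.

The paper's contribution is a novel feature refinement (FR) method that learns and fuses expression-specific features for micro-expression recognition.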

First, an inception module is designed based on optical flow to obtain expression-shared features. Second, in order to extract salient and discriminative features for specific expression, expression-shared features are fed into an expression proposal module with attention factors and proposal loss.

Expression-shared features are first obtained from optical flow; an attention mechanism then extracts the discriminative, expression-specific features.

1. Introduction

Automatic micro-expression analysis involves micro-expression spotting and micro-expression recognition (MER).

Micro-expression analysis has two sub-tasks: micro-expression spotting and micro-expression recognition.

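However, these existing methods characterize the discrimination of micro-expressions inefficiently. On the other hand, it is time-consuming to design handcrafted feature [7] and manually adjust the optimal parameters [8].

Existing feature methods characterize micro-expressions inefficiently; handcrafted features are time-consuming to design and their parameters must be tuned by hand.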

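The FR consists of three feature refinement stages: expression-shared feature learning, expression-specific feature distilling, and expression-specific feature fusion.

FR has three stages: expression-shared feature learning, expression-specific feature distilling, and expression-specific feature fusion.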

Contributions of the method:

1. We propose a deep learning based three-level feature refinement architecture for MER.

A deep-learning-based three-level feature refinement architecture for micro-expression recognition.

2. We propose a constructive but straightforward attention strategy and a simple proposal loss in expression proposal module for expression-specific feature learning.

An attention strategy and a matching proposal loss function for the expression proposal module.

3. We extensively validate our FR on three benchmarks of MER.

The method is validated on three datasets.

2. Related work

2.1 Handcrafted features

The features are generally categorized into appearance-based and geometric-based features.

Handcrafted features are divided into appearance-based and geometric-based features.

2.1.1 Appearance-based features

Local Binary Pattern from Three Orthogonal Planes (LBP-TOP) [10] is the most widely used appearance-based feature for micro-expression recognition.

The main appearance-based feature in use is LBP-TOP.

2.1.2 Geometric-based features

They can be categorized into optical flow based and texture variations based features.

These can in turn be divided into optical-flow-based and texture-variation-based features.

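3. Proposed method

It leverages two-stream Inception network as a backbone for expression-shared feature learning, an expression proposal module with attention mechanism for expression-specific feature learning, and a classification module for label prediction by fused expression-refined features.

A two-stream Inception network serves as the backbone for expression-shared feature learning, an expression proposal module with an attention mechanism learns the expression-specific features, and the fused expression-refined features are used for label prediction.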

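3.1 Expression-shared features

The expression-shared feature learning module consists of three critical components: apex frame chosen, optical flow extraction and two-stream Inception network.

The expression-shared feature learning module comprises apex frame selection, optical flow extraction, and a two-stream network.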

The inter frame-Diff defines the index of apex frames t_apex as follows

(The formula that defines the apex frame.)
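
The formula itself is not transcribed in these notes. As a rough sketch only, assuming the apex is the frame whose mean absolute pixel difference from the onset (first) frame is largest, the selection might look like the following. This is a simplification and not necessarily the paper's exact inter-frame difference definition; the function name `find_apex_index` and the toy clip are hypothetical.

```python
import numpy as np

def find_apex_index(frames: np.ndarray) -> int:
    """Return the index of the frame that differs most from the onset frame.

    frames: array of shape (T, H, W), grayscale, with frame 0 as the onset.
    Assumption: "difference" means mean absolute pixel difference.
    """
    onset = frames[0].astype(np.float64)
    # One difference score per frame, averaged over all pixels.
    diffs = np.abs(frames.astype(np.float64) - onset).mean(axis=(1, 2))
    return int(np.argmax(diffs))

# Toy clip: intensity rises to a peak at frame 3, then decays.
clip = np.stack([np.full((4, 4), v) for v in [0, 2, 5, 9, 4, 1]])
apex = find_apex_index(clip)
```

On the toy clip the largest difference from the onset occurs at index 3, so that frame is picked as the apex.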

the optical flow of each sample is extracted from the onset and apex frames

Optical flow is computed between the onset frame and the apex frame.

Specifically, inception block is designed to capture both the global and local information of the optical component for feature learning.

The two streams learn features from the horizontal and vertical optical flow components, respectively.

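3.2 Expression-specific feature learning

expression proposal module with the attention mechanism and proposal loss is introduced to learn the expression-specific features.

The second stage uses the attention mechanism and the proposal loss to learn expression-specific features.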

a proposal module with K sub-branches is designed, where each of sub-branch learns a set of specific-feature for each micro-expression category (total K categories).

The proposal module has K sub-branches, and each sub-branch learns the features of one micro-expression category.

Attention mechanism provides the flexibility to our model to learn K expression-specific features from the same set of expression-shared feature.

The attention mechanism is used to learn a different expression-specific feature for each category from the same shared features.

After activated by Softmax, we gain the attention weight of z for each specific expression,

This is the key point: the attention mechanism produces a weight vector.
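
To make the idea concrete, here is a minimal sketch of how K sets of attention weights could be derived from one shared feature vector. It assumes each sub-branch owns a projection matrix whose output is passed through Softmax and then multiplied element-wise with z; the notes do not state how the paper computes the pre-Softmax scores, so the tensor `W`, the shapes, and the function names are all hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def expression_specific_features(z: np.ndarray, W: np.ndarray):
    """z: (D,) shared feature. W: (K, D, D) hypothetical per-branch projections.

    Returns the attention weights (K, D) and the reweighted features (K, D).
    """
    logits = np.einsum("kij,j->ki", W, z)  # one score vector per branch
    attn = softmax(logits, axis=-1)        # each row sums to 1
    return attn, attn * z                  # z is broadcast over the K branches

rng = np.random.default_rng(0)
K, D = 3, 8
z = rng.normal(size=D)
W = rng.normal(size=(K, D, D))
attn, z_specific = expression_specific_features(z, W)
```

Every branch sees the same z but applies its own weights, which is how one shared feature can yield K different expression-specific features.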

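Each expression-specific detector ('Detector k' in Fig. 1) is aligned with a feature vector z*_k, which contains two fully connected layers with a Sigmoid layer as the output.

Each expression-specific detector corresponds to one feature vector and consists of two fully connected layers followed by a Sigmoid output layer.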
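
A detector of this shape can be sketched as a forward pass through two fully connected layers with a Sigmoid at the end. The hidden size and the ReLU between the two layers are assumptions, since the notes do not record them; `detector_forward` and the parameter names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def detector_forward(z_k, W1, b1, W2, b2) -> float:
    """Score one expression-specific feature z_k of shape (D,).

    W1: (H, D), b1: (H,)  first fully connected layer
    W2: (H,),   b2: float second fully connected layer with a single output
    Assumption: ReLU is used between the two layers.
    """
    h = np.maximum(0.0, W1 @ z_k + b1)
    return float(sigmoid(W2 @ h + b2))

rng = np.random.default_rng(0)
D, H = 8, 4
z_k = rng.normal(size=D)
p = detector_forward(z_k, rng.normal(size=(H, D)), np.zeros(H),
                     rng.normal(size=H), 0.0)
```

The output `p` lies strictly between 0 and 1 and can be read as the detector's confidence that the sample belongs to category k.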

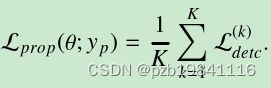

The loss function of each category's detector.

The overall loss is the mean of the per-category losses.
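
The loss formulas are not transcribed in these notes. Since each detector ends in a Sigmoid, one plausible reading is a binary cross-entropy per detector (target 1 for the sample's true category, 0 otherwise), averaged over the K detectors. The sketch below assumes exactly that; it should not be taken as the paper's definition, and `proposal_loss` is a hypothetical name.

```python
import numpy as np

def proposal_loss(probs: np.ndarray, label: int):
    """probs: (K,) Sigmoid outputs of the K detectors. label: true class index.

    Returns the per-detector losses (K,) and their mean.
    Assumption: each detector is trained with binary cross-entropy.
    """
    targets = np.zeros_like(probs, dtype=np.float64)
    targets[label] = 1.0
    eps = 1e-12  # guards against log(0)
    per_class = -(targets * np.log(probs + eps)
                  + (1.0 - targets) * np.log(1.0 - probs + eps))
    return per_class, float(per_class.mean())

probs = np.array([0.9, 0.1, 0.2])
per_class, loss_correct = proposal_loss(probs, label=0)
_, loss_wrong = proposal_loss(probs, label=1)
```

With these toy outputs the loss is lower when the true label is 0 (the confident detector) than when it is 1, which is the behaviour such a loss is meant to encourage.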

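3.3 Fused features for classification

this method will cause the high dimension and contain more trainable parameters.

Concatenating all the expression-specific features into a single vector gives too high a dimension and too many trainable parameters.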

The efficiency evaluation about element-wise sum for fusion can be referred to

The fusion method used is an element-wise sum.
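
The difference between the two fusion options can be seen in a small shape comparison. The sizes K = 3 and D = 8 and the single linear classifier layer used for the parameter count are illustrative assumptions, not values from the paper.

```python
import numpy as np

K, D = 3, 8          # hypothetical: 3 categories, 8-dimensional features
num_classes = K
rng = np.random.default_rng(0)
specific = rng.normal(size=(K, D))   # K expression-specific feature vectors

fused_sum = specific.sum(axis=0)     # element-wise sum, shape (D,)
fused_cat = specific.reshape(-1)     # concatenation, shape (K * D,)

# Weights of one linear classifier layer on top of each fused vector.
params_sum = fused_sum.size * num_classes
params_cat = fused_cat.size * num_classes
```

The summed vector keeps the dimension at D, while concatenation grows it to K * D, so a classifier layer on top needs K times as many weights.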

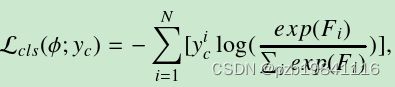

The classification loss function.

The total loss function; λ adjusts the weight between feature representation and classification.
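
As an illustration of how such a weighted total might be combined, the sketch below assumes a Softmax cross-entropy classification loss plus λ times the proposal loss. Which term λ multiplies and the default value 0.5 are assumptions, not taken from the paper; the function names are hypothetical.

```python
import numpy as np

def cross_entropy(logits: np.ndarray, label: int) -> float:
    # Log-softmax computed in a numerically stable way.
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[label])

def total_loss(logits, label, proposal_loss_value, lam=0.5) -> float:
    """Assumed form: classification loss + lam * proposal loss."""
    return cross_entropy(logits, label) + lam * proposal_loss_value

logits = np.zeros(3)   # uniform prediction over 3 classes
cls_only = total_loss(logits, label=1, proposal_loss_value=0.2, lam=0.0)
weighted = total_loss(logits, label=1, proposal_loss_value=0.2, lam=0.5)
```

Setting `lam` to 0 reduces the total to the classification loss alone, and raising it shifts the emphasis toward the expression-specific feature learning term.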

4. Experiments

Not recorded here; unsurprisingly, the paper reports its own method as the best.

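5. Conclusion

Different from the existing deep learning methods in MER which focus on learning expression-shared features, our approach aims to learn a set of expression-refined features by expression-specific feature learning and fusion.

Unlike existing deep learning methods for MER, which focus on learning expression-shared features, this approach aims to learn a set of expression-refined features through expression-specific feature learning and fusion.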

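In the future, we will consider an end-to-end approach for MER, find more effective ways to enrich the micro-expression samples, and use transfer learning from the large-scale databases to make benefit for MER.

As future work, the authors plan to consider an end-to-end approach for MER, to find more effective ways of enriching micro-expression samples, and to apply transfer learning from large-scale databases to benefit MER.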