Collecting SpringBoot Application Logs from k8s Containers with Filebeat 7.17

Versions

SpringBoot 2.6.6

Filebeat 7.17.6

Kafka_2.13-2.8.2

ELK 7.17.6

Scenario

A SpringBoot application is deployed to a k8s cluster as a Deployment, and its logs need to be collected.

Key Implementation

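In the Filebeat configuration file, filebeat.autodiscover collects the container logs; a template + condition filters on the k8s namespace so that only the desired namespaces are collected (system namespaces such as kube-system are excluded); logs are sent to a different Kafka topic per namespace; a script processor condenses the collected kubernetes metadata; and drop_fields removes irrelevant fields.

In deployment.yaml, the k8s Downward API injects the Pod name and namespace into environment variables, and subPathExpr gives each application its own log directory.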

SpringBoot Logging Configuration

https://docs.spring.io/spring-boot/docs/current/reference/html/howto.html#howto.logging

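Depending on how it is configured, a SpringBoot application writes its logs to the console, to a log file, or to both.

1. Console only

With no configuration at all, logs are written only to the console by default.

2. File only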

src/main/resources/logback-spring.xml:

<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml" />
    <property name="LOG_FILE" value="${LOG_FILE:-${LOG_PATH:-${LOG_TEMP:-${java.io.tmpdir:-/tmp}}/}spring.log}"/>
    <include resource="org/springframework/boot/logging/logback/file-appender.xml" />
    <root level="INFO">
        <appender-ref ref="FILE" />
    </root>
</configuration>

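3. Both console and file

Logs go to the console by default; to also write them to a file, configure the log level and log file name in application.properties, for example:

logging.file.name=/var/appdeploy/appdeploy.log

More complex setups have to be done in an XML logging configuration file (such as logback-spring.xml).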

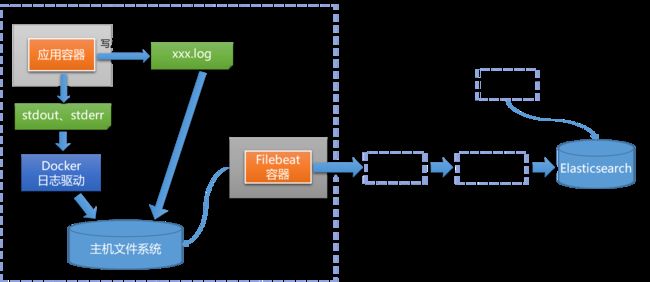

Log Collection Architecture

Data Flow

Console log output: Pod -> /var/log/containers/*.log -> Filebeat -> Kafka -> Logstash -> Elasticsearch -> Kibana

File log output: Pod -> /logs/*/*.log -> Filebeat -> Kafka -> Logstash -> Elasticsearch -> Kibana

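For file log output, the Deployment mounts a hostPath volume, so the application writes its logs to the node under /logs/<namespace>_<pod name>_<container name>/.

Deployment Approach

Filebeat, a lightweight log shipper, is deployed as a DaemonSet and collects logs from each node's /var/log/containers/ directory and /logs/*/ directories.

ELK is installed from tar packages such as elasticsearch-7.17.6-linux-x86_64.tar.gz; see "ELK7 Installation" for details.

Kafka is installed as a cluster; see "Kafka 2.8.2 Cluster Installation" for details.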
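On every node the kubelet exposes each container's stdout/stderr under /var/log/containers/ as a file named `<pod name>_<namespace>_<container name>-<container id>.log`, which is why the autodiscover template can pick out one container's file with the glob `/var/log/containers/*-${data.kubernetes.container.id}.log`. A minimal JavaScript sketch of that naming convention; `parseContainerLogName` and `matchesContainer` are hypothetical helpers, and the pod name and container ID are made up:

```javascript
// Hypothetical helper: split a kubelet file name of the form
// <pod>_<namespace>_<container>-<containerId>.log into its parts.
// Pod, namespace and container names cannot contain "_" (DNS-1123 names),
// and the container ID is a 64-character hex string.
function parseContainerLogName(fileName) {
  var m = /^([^_]+)_([^_]+)_(.+)-([0-9a-f]{64})\.log$/.exec(fileName);
  if (m === null) {
    return null;
  }
  return { podName: m[1], namespace: m[2], containerName: m[3], containerId: m[4] };
}

// Emulates the glob "*-<containerId>.log": any prefix, then "-<id>.log".
function matchesContainer(fileName, containerId) {
  return fileName.endsWith("-" + containerId + ".log");
}

var id = "a".repeat(64); // made-up container ID
var name = "appdeloy-5d9c7b6f4-x2k8p_default_appdeloy-" + id + ".log";
console.log(parseContainerLogName(name));
console.log(matchesContainer(name, id)); // true
```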

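Filebeat Configuration

Two Filebeat instances are deployed, one for container log collection and one for file log collection. In testing, when autodiscover and filebeat.inputs (type: filestream) were configured in the same Filebeat configuration file, the filebeat.inputs were not collected. (This may also have been a configuration mistake on my part; corrections from more experienced readers are welcome.)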

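- Use filebeat.autodiscover to collect container logs.

- Use template + condition to filter on the k8s namespace, so only the desired namespaces are collected (kube-system, ceph-csi and tekton-pipelines are excluded).

- Send logs to a different Kafka topic per namespace.

- Use a script processor to condense the collected kubernetes metadata.

- Use drop_fields to remove irrelevant fields.

The Filebeat deployment manifest for the container-log instance (it mounts the filebeat-config ConfigMap):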
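The namespace filter and the topic routing described above reduce to two small rules: collect an event only if its namespace is not one of the excluded ones, and publish it to the topic `<k8sName>-<namespace>`. A JavaScript sketch of those rules, assuming the exclusion list and the `cicd` prefix used in the ConfigMap below; `shouldCollect` and `topicFor` are hypothetical names:

```javascript
// Namespaces excluded by the autodiscover template condition
// (and + not/equals), copied from the ConfigMap in this article.
var EXCLUDED_NAMESPACES = ["kube-system", "ceph-csi", "tekton-pipelines"];

// Hypothetical: true when the template condition would match the event.
function shouldCollect(namespace) {
  return EXCLUDED_NAMESPACES.indexOf(namespace) === -1;
}

// Hypothetical: mirrors topic: '%{[k8s.k8sName]}-%{[k8s.namespace]}'.
function topicFor(k8s) {
  return k8s.k8sName + "-" + k8s.namespace;
}

console.log(shouldCollect("default"));     // true
console.log(shouldCollect("kube-system")); // false
console.log(topicFor({ k8sName: "cicd", namespace: "default" })); // cicd-default
```

Because every topic shares the `cicd-` prefix, a single Logstash `topics_pattern => "cicd-.*"` subscription picks up all namespaces, including ones created later.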

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.17.6
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0640
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: varlog
        hostPath:
          path: /var/log
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          # When filebeat runs as non-root user, this directory needs to be writable by group (g+w).
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat
  namespace: kube-system
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: Role
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-system
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: Role
  name: filebeat-kubeadm-config
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  - nodes
  verbs:
  - get
  - watch
  - list
- apiGroups: ["apps"]
  resources:
  - replicasets
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat
  # should be the namespace where filebeat is running
  namespace: kube-system
  labels:
    k8s-app: filebeat
rules:
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs: ["get", "create", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: filebeat-kubeadm-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - configmaps
  resourceNames:
  - kubeadm-config
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---

Filebeat configuration for container log collection:

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.autodiscover:
      providers:
        - type: kubernetes
          hints.enabled: true
          hints.default_config.enabled: false
          templates:
            - condition:
                and:
                  - not:
                      equals:
                        kubernetes.namespace: kube-system
                  - not:
                      equals:
                        kubernetes.namespace: ceph-csi
                  - not:
                      equals:
                        kubernetes.namespace: tekton-pipelines
              config:
                - type: container
                  paths:
                    - /var/log/containers/*-${data.kubernetes.container.id}.log
                  processors:
                    - script:
                        lang: javascript
                        source: >
                          function process(event){
                            var k8s = event.Get("kubernetes");
                            var k8sInfo = {
                              namespace: k8s.namespace,
                              podName: k8s.pod.name,
                              hostName: k8s.node.hostname,
                              containerName: k8s.container.name,
                              labels: k8s.labels,
                              k8sName: "cicd"
                            };
                            event.Put("k8s", k8sInfo);
                          }
                    - drop_fields:
                        fields: ["host", "ecs", "log", "agent", "input", "tags", "container", "stream", "orchestrator", "kubernetes"]
                        ignore_missing: true
    output.kafka:
      hosts: ["27.196.38.200:9092", "27.196.38.201:9092", "27.196.38.202:9092"]
      # one topic per namespace, e.g. cicd-default
      topic: '%{[k8s.k8sName]}-%{[k8s.namespace]}'
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      keep_alive: 120s
      max_message_bytes: 1000000
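To check what the `script` processor produces before deploying it, its logic can be run outside Filebeat against a stand-in event object. `MockEvent` below is a hypothetical, minimal imitation of the Filebeat event API that only supports `Get` and `Put` with dotted field paths, and the metadata values are made up:

```javascript
// Hypothetical stand-in for Filebeat's script-processor event object:
// only Get/Put with dotted paths are imitated here.
class MockEvent {
  constructor(fields) {
    this.fields = fields;
  }
  Get(path) {
    var cur = this.fields;
    var keys = path.split(".");
    for (var i = 0; i < keys.length; i++) {
      if (cur === null || typeof cur !== "object" || !(keys[i] in cur)) {
        return null; // missing field in this mock
      }
      cur = cur[keys[i]];
    }
    return cur;
  }
  Put(path, value) {
    var keys = path.split(".");
    var cur = this.fields;
    for (var i = 0; i < keys.length - 1; i++) {
      if (typeof cur[keys[i]] !== "object" || cur[keys[i]] === null) {
        cur[keys[i]] = {};
      }
      cur = cur[keys[i]];
    }
    cur[keys[keys.length - 1]] = value;
  }
}

// Same body as process(event) in the ConfigMap above.
function processContainerEvent(event) {
  var k8s = event.Get("kubernetes");
  var k8sInfo = {
    namespace: k8s.namespace,
    podName: k8s.pod.name,
    hostName: k8s.node.hostname,
    containerName: k8s.container.name,
    labels: k8s.labels,
    k8sName: "cicd"
  };
  event.Put("k8s", k8sInfo);
}

var event = new MockEvent({
  message: "2023-05-01 10:00:00.000  INFO 1 --- [main] c.e.App : Started",
  kubernetes: {
    namespace: "default",
    pod: { name: "appdeloy-5d9c7b6f4-x2k8p" },
    node: { hostname: "node-1" },
    container: { name: "appdeloy" },
    labels: { app: "appdeloy" }
  }
});
processContainerEvent(event);
console.log(event.Get("k8s"));
```

In the real pipeline the following `drop_fields` processor then removes the original `kubernetes` object, so only the condensed `k8s` object reaches Kafka.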

Filebeat configuration for file log collection:

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config-app
  namespace: kube-system
  labels:
    k8s-app: filebeat-app
data:
  filebeat.yml: |-
    filebeat.inputs:
      - type: filestream
        id: log-input
        paths:
          - "/logs/*/*.log"
        parsers:
          # lines that do not start with yyyy-MM-dd are appended to the previous event
          - multiline:
              type: pattern
              pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
              negate: true
              match: after
        processors:
          - script:
              lang: javascript
              source: >
                function process(event){
                  var path = event.Get("log.file.path");
                  if(path !== null){
                    var pathList = path.split("/");
                    if(pathList.length > 2){
                      var subDir = pathList[2];
                      var nsPod = subDir.split("_");
                      var k8sInfo = {
                        namespace: nsPod[0],
                        podName: nsPod[1],
                        containerName: nsPod[2],
                        k8sName: "cicd"
                      };
                      event.Put("k8s", k8sInfo);
                    }
                  }
                }
          - drop_fields:
              fields: ["host", "ecs", "agent", "input"]
              ignore_missing: true
    output.kafka:
      hosts: ["27.196.38.200:9092", "27.196.38.201:9092", "27.196.38.202:9092"]
      topic: '%{[k8s.k8sName]}-%{[k8s.namespace]}'
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      keep_alive: 120s
      max_message_bytes: 1000000
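The file input does two things worth checking offline: the multiline parser (`pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'`, `negate: true`, `match: after`) appends every line that does not start with a date to the preceding event, so a Java stack trace stays in one message; and the script derives namespace, pod and container from the directory name under /logs. A simplified JavaScript sketch of both, with hypothetical helper names and sample lines; it ignores Filebeat's multiline limits such as `max_lines` and `timeout`:

```javascript
// Same pattern as the multiline parser in the ConfigMap above.
var DATE_PREFIX = /^[0-9]{4}-[0-9]{2}-[0-9]{2}/;

// Simplified multiline (negate: true, match: after): a line that does NOT
// match the pattern is appended to the previous event. Lines before the
// first match start their own event in this sketch.
function groupMultiline(lines) {
  var events = [];
  lines.forEach(function (line) {
    if (DATE_PREFIX.test(line) || events.length === 0) {
      events.push(line);
    } else {
      events[events.length - 1] += "\n" + line;
    }
  });
  return events;
}

// Same logic as the script processor: /logs/<ns>_<pod>_<container>/<file>.log
function k8sFromPath(path) {
  if (path === null) {
    return null;
  }
  var pathList = path.split("/");
  if (pathList.length <= 2) {
    return null;
  }
  var nsPod = pathList[2].split("_");
  return { namespace: nsPod[0], podName: nsPod[1], containerName: nsPod[2], k8sName: "cicd" };
}

var lines = [
  "2023-05-01 10:00:00.000 ERROR 1 --- [main] c.e.App : boom",
  "java.lang.IllegalStateException: boom",
  "\tat com.example.App.main(App.java:10)",
  "2023-05-01 10:00:01.000  INFO 1 --- [main] c.e.App : next"
];
console.log(groupMultiline(lines).length); // 2
console.log(k8sFromPath("/logs/default_appdeloy-5d9c7b6f4-x2k8p_appdeloy/appdeploy.log"));
```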

Logstash Configuration

input {
  kafka {
    bootstrap_servers => "27.196.38.200:9092,27.196.38.201:9092,27.196.38.202:9092"
    codec => json {
      charset => "UTF-8"
    }
    topics_pattern => "cicd-.*"
    decorate_events => "basic"
    auto_offset_reset => "latest"
    group_id => "cicd_log"
    client_id => "logstash"
    consumer_threads => 3
  }
}

output {
  elasticsearch {
    hosts => ["http://27.196.38.200:9200","http://27.196.38.201:9200","http://27.196.38.202:9200"]
    index => "%{[k8s][k8sName]}-%{[k8s][namespace]}-%{+YYYY.MM.dd}"
  }
}
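Logstash builds the index name from the `k8s` fields and the event's `@timestamp`; the `%{+YYYY.MM.dd}` part is formatted in UTC, so in a UTC+8 cluster events logged before 08:00 local time land in the previous day's index. A small JavaScript sketch of that naming, with a hypothetical `indexFor` helper and a made-up event:

```javascript
// Hypothetical helper mirroring
// index => "%{[k8s][k8sName]}-%{[k8s][namespace]}-%{+YYYY.MM.dd}"
// The date part uses the UTC calendar date of @timestamp.
function indexFor(event) {
  var ts = new Date(event["@timestamp"]);
  var pad = function (n) { return (n < 10 ? "0" : "") + n; };
  var day = ts.getUTCFullYear() + "." + pad(ts.getUTCMonth() + 1) + "." + pad(ts.getUTCDate());
  return event.k8s.k8sName + "-" + event.k8s.namespace + "-" + day;
}

// 07:30 on May 2 in UTC+8 is still May 1 in UTC.
console.log(indexFor({
  "@timestamp": "2023-05-02T07:30:00+08:00",
  k8s: { k8sName: "cicd", namespace: "default" }
})); // cicd-default-2023.05.01
```

Elasticsearch index names must be lowercase; k8s namespaces are lowercase DNS labels, so using them directly in the index name is safe.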

Deployment Configuration

Use the k8s Downward API to inject the Pod name and namespace into environment variables.

Use subPathExpr to give each application (Pod) its own log directory.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s.deploy.cn/appId: "1"
    k8s.deploy.cn/name: appdeloy
  name: appdeloy
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s.deploy.cn/appId: "1"
      k8s.deploy.cn/name: appdeloy
  template:
    metadata:
      labels:
        k8s.deploy.cn/appId: "1"
        k8s.deploy.cn/name: appdeloy
    spec:
      containers:
      - env:
        # Downward API: Pod name and namespace as environment variables
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: appdeploy:v1
        imagePullPolicy: IfNotPresent
        name: appdeloy
        volumeMounts:
        - mountPath: /var/appdeploy/
          name: app-log
          # becomes /logs/<namespace>_<pod name>_appdeloy on the node
          subPathExpr: $(NAMESPACE)_$(POD_NAME)_appdeloy
      volumes:
      - hostPath:
          path: /logs
          type: "DirectoryOrCreate"
        name: app-log
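With this Deployment, Kubernetes expands `subPathExpr` from the Downward API variables, so the container's /var/appdeploy/ maps to /logs/<namespace>_<pod name>_appdeloy/ on the node, and `logging.file.name=/var/appdeploy/appdeploy.log` ends up at a path matched by the file-collection Filebeat's `/logs/*/*.log`. Namespace, pod and container names are DNS-1123 names and cannot contain `_`, so the Filebeat script's split on `_` recovers them unambiguously. A JavaScript sketch of that round trip; `expandSubPathExpr` is a hypothetical, simplified stand-in for the Kubernetes expansion, and the pod name is made up:

```javascript
// Hypothetical, simplified $(VAR) expansion in the style of subPathExpr.
// No "$$" escaping; unknown variables are left as-is in this sketch.
function expandSubPathExpr(expr, env) {
  return expr.replace(/\$\(([A-Za-z_][A-Za-z0-9_]*)\)/g, function (whole, varName) {
    return Object.prototype.hasOwnProperty.call(env, varName) ? env[varName] : whole;
  });
}

// Values the Downward API would inject (made-up pod name).
var env = { NAMESPACE: "default", POD_NAME: "appdeloy-5d9c7b6f4-x2k8p" };
var subDir = expandSubPathExpr("$(NAMESPACE)_$(POD_NAME)_appdeloy", env);
var hostPath = "/logs/" + subDir + "/appdeploy.log";

// The file-collection Filebeat script splits the directory name on "_".
var parts = subDir.split("_");
console.log(hostPath); // /logs/default_appdeloy-5d9c7b6f4-x2k8p_appdeloy/appdeploy.log
console.log(parts);
```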