Kubernetes 1.24.2 in Practice and Source Code (1)

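Chapter 1 Preparation

1.1 Introduction to Kubernetes and its core object concepts

Reference: "Introduction to Kubernetes and its core object concepts", Alibaba Cloud Developer Community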

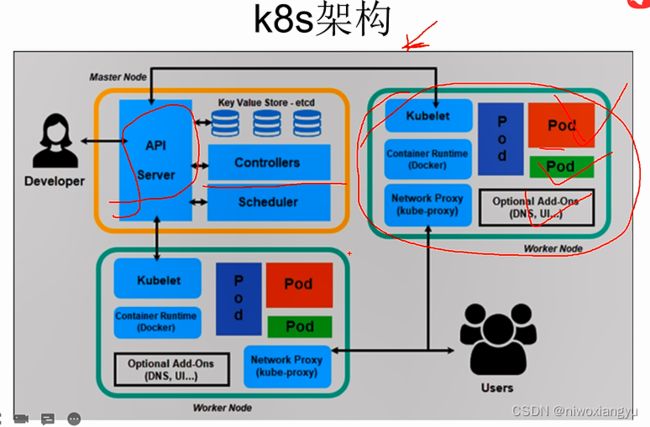

Kubernetes architecture

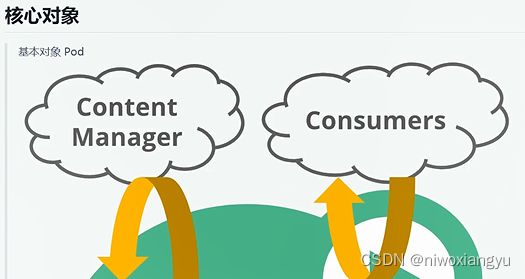

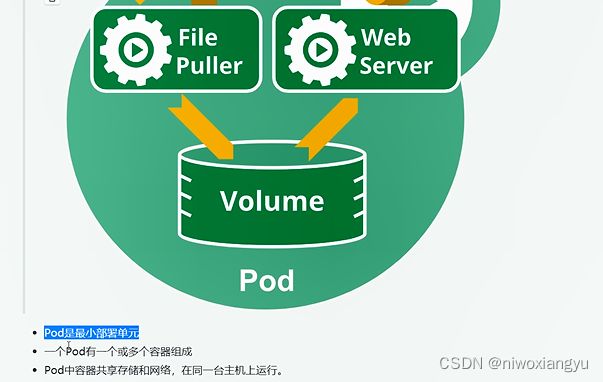

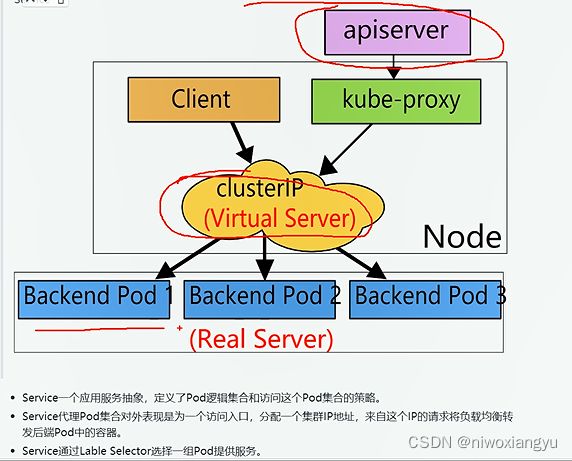

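Core objects

Deploying a Kubernetes cluster with kubeadm in 10 minutes

Reference: "Installing kubernetes_v1.23.1 with KuboardSpray | Kuboard"

Deploying the first application on Kubernetes

Basic concepts of Deployment

Adding a Service to the application, then scaling it and performing a rolling update

Installing Kuboard to explore the cluster from a web page

Installing Kuboard v3 on Kubernetes 1.24.2

Installing Kuboard as a static pod

Installation commands

curl -fsSL https://addons.kuboard.cn/kuboard/kuboard-static-pod.sh -o kuboard.sh

sh kuboard.sh

Preparing to read the Kubernetes source code

vscode

Download the Kubernetes 1.24.2 source code

Repository locations of the Kubernetes component code

Chapter 2 The kubectl execution flow when creating a pod, and its design patterns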

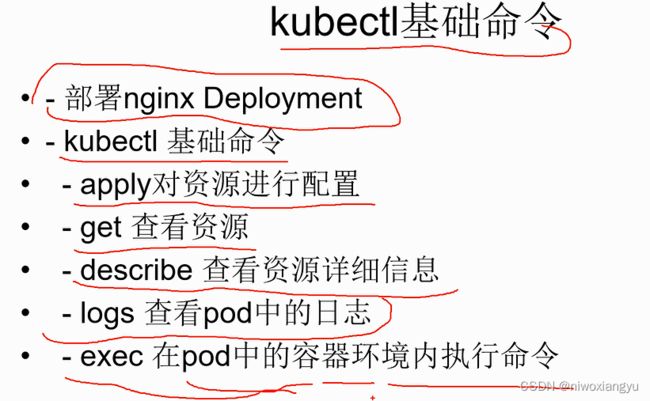

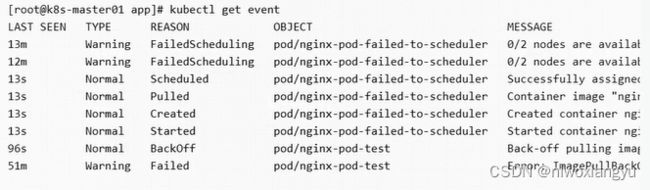

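2.1 Deploying a simple nginx pod with kubectl

Following the flow and the source code, starting from pod creation

Reference: "Kubernetes source code analysis (1): the pod creation flow in kubectl", Juejin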

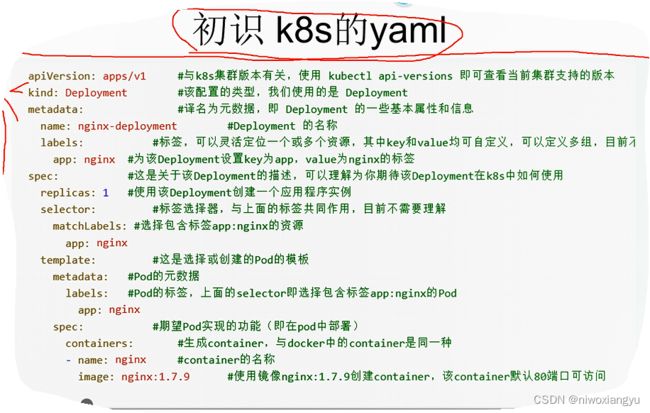

Write a YAML manifest that creates an nginx pod

Deploy the pod with kubectl

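2.2 Using cobra, the command-line parsing library

cobra is built around three concepts: commands, arguments, and flags. A command is the action to perform, arguments are the things the action operates on, and flags are modifiers that adjust the action. A command line generally has the form:

APPNAME COMMAND ARG --FLAG

For example:

# server is the command, port is a flag

hugo server --port=1313

# clone is the command, URL is the argument, bare is a flag

git clone URL --bare

The kubectl create command

The entry point of each component lives in that component's directory under cmd.

Code location: /home/gopath/src/k8s.io/kubernetes-1.24.2/cmd/kubectl/kubectl.go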

package main

import (

"k8s.io/component-base/cli"

"k8s.io/kubectl/pkg/cmd"

"k8s.io/kubectl/pkg/cmd/util"

// Import to initialize client auth plugins.

_ "k8s.io/client-go/plugin/pkg/client/auth"

)

func main() {

command := cmd.NewDefaultKubectlCommand()

if err := cli.RunNoErrOutput(command); err != nil {

// Pretty-print the error and exit with an error.

util.CheckErr(err)

}

}

rand.Seed seeds the random number generator

The cmd package of the kubectl library is called to create the command object

command := cmd.NewDefaultKubectlCommand()

Code location: staging\src\k8s.io\kubectl\pkg\cmd\cmd.go

github.com/spf13/cobra

The main features provided by cobra

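* Simple subcommand-based CLIs, such as app server and app fetch

* Fully POSIX-compliant flags

* Nested subcommands

* Global, local, and cascading flags

* Easy generation of applications and commands with cobra-cli init and cobra-cli add cmdname

* Intelligent suggestions for mistyped commands: app srver reports that srver does not exist and asks whether app server was meant

* Automatically generated help for commands and flags

* Automatically generated detailed help, such as app help

* Automatic recognition of the -h and --help flags

* Automatically generated bash completion for the application

* Automatically generated man pages for the application

* Command aliases

* Custom help and usage messages

* Optional tight integration with [viper](http://github.com/spf13/viper) for apps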

Creating a cobra application

go install github.com/spf13/cobra-cli@latest

mkdir my_cobra

cd my_cobra

// Initialize the module, then generate the application skeleton with cobra-cli

go mod init github.com/spf13/my_cobra

cobra-cli init

// List the generated files and run the application

find

go run main.go

// Edit cmd/root.go: uncomment the Run field so the root command has an action

// Uncomment the following line if your bare application

// has an action associated with it:

// Run: func(cmd *cobra.Command, args []string) { },

Run: func(cmd *cobra.Command, args []string) {

fmt.Println("my_cobra")

},

// Run it again. The first attempt fails because fmt has not been imported in cmd/root.go; after adding the import it prints my_cobra

[root@k8s-worker02 my_cobra]# go run main.go

# github.com/spf13/my_cobra/cmd

cmd/root.go:29:10: undefined: fmt

[root@k8s-worker02 my_cobra]# go run main.go

my_cobra

// Besides init, cobra-cli add generates the source file for a subcommand. The commands below add the image and container subcommands:

cobra-cli add image

cobra-cli add container

[root@k8s-worker02 my_cobra]# find

.

./go.mod

./main.go

./cmd

./cmd/root.go

./cmd/image.go

./LICENSE

./go.sum

[root@k8s-worker02 my_cobra]# cobra-cli add container

container created at /home/gopath/src/my_cobra

[root@k8s-worker02 my_cobra]# go run main.go image

image called

[root@k8s-worker02 my_cobra]# go run main.go container

container called

Each invocation executes the Run function of the corresponding xxxCmd:

// containerCmd represents the container command

var containerCmd = &cobra.Command{

Use: "container",

Short: "A brief description of your command",

Long: `A longer description that spans multiple lines and likely contains examples

and usage of using your command. For example:

Cobra is a CLI library for Go that empowers applications.

This application is a tool to generate the needed files

to quickly create a Cobra application.`,

Run: func(cmd *cobra.Command, args []string) {

fmt.Println("container called")

},

}

Copy cmd/container.go to cmd/version.go and add the version information

package cmd

import (

"fmt"

"github.com/spf13/cobra"

)

// versionCmd represents the version command

var versionCmd = &cobra.Command{

Use: "version",

Short: "A brief description of your command",

Long: `A longer description that spans multiple lines and likely contains examples

and usage of using your command. For example:

Cobra is a CLI library for Go that empowers applications.

This application is a tool to generate the needed files

to quickly create a Cobra application.`,

Run: func(cmd *cobra.Command, args []string) {

fmt.Println("my_cobra version is v1.0")

},

}

func init() {

rootCmd.AddCommand(versionCmd)

}

Setting up a MinimumNArgs validation

Add a new file, cmd/times.go

package cmd

import (

"fmt"

"strings"

"github.com/spf13/cobra"

)

// timesCmd represents the times command; echoTimes holds the value of its --times flag

var echoTimes int

var timesCmd = &cobra.Command{

Use: "times [string to echo]",

Short: "Echo anything to the screen more times",

Long: `echo things multiple times back to the user by providing a count and a string`,

Args: cobra.MinimumNArgs(1),

Run: func(cmd *cobra.Command, args []string) {

for i := 0; i < echoTimes; i++ {

fmt.Println("Echo: " + strings.Join(args, " "))

}

},

}

func init() {

rootCmd.AddCommand(timesCmd)

timesCmd.Flags().IntVarP(&echoTimes, "times", "t", 1, "times to echo the input")

}

Because timesCmd sets Args: cobra.MinimumNArgs(1), the times subcommand requires at least one argument; without it the subcommand returns an error. With an argument it runs normally:

[root@k8s-worker02 my_cobra]# go run main.go times -t=4 k8s

Echo: k8s

Echo: k8s

Echo: k8s

Echo: k8s
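The effect of Args: cobra.MinimumNArgs(1) can be imitated without cobra. The following is a minimal sketch, assuming the hypothetical names positionalArgs, minimumNArgs and echo; none of them are cobra's API, they only mirror the idea of validating positional arguments before Run executes.

```go
package main

import (
	"fmt"
	"strings"
)

// positionalArgs validates positional arguments. It is a simplified,
// hypothetical stand-in for cobra.PositionalArgs (no *cobra.Command parameter).
type positionalArgs func(args []string) error

// minimumNArgs returns a validator that rejects fewer than n arguments.
func minimumNArgs(n int) positionalArgs {
	return func(args []string) error {
		if len(args) < n {
			return fmt.Errorf("requires at least %d arg(s), only received %d", n, len(args))
		}
		return nil
	}
}

// echo validates args first, then builds the lines the times command prints.
func echo(validate positionalArgs, times int, args []string) ([]string, error) {
	if err := validate(args); err != nil {
		return nil, err
	}
	var lines []string
	for i := 0; i < times; i++ {
		lines = append(lines, "Echo: "+strings.Join(args, " "))
	}
	return lines, nil
}

func main() {
	lines, err := echo(minimumNArgs(1), 4, []string{"k8s"})
	fmt.Println(lines, err)

	// Without a positional argument the validator stops the command early.
	_, err = echo(minimumNArgs(1), 4, nil)
	fmt.Println("without arguments:", err)
}
```

Running validation before the action keeps Run free of argument checks, which is the same separation the Args field gives a cobra command.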

Modify rootCmd to add the pre-run and post-run hooks

PersistentPreRun: func(cmd *cobra.Command, args []string) {

fmt.Printf("[step_1]PersistentPreRun with args: %v\n", args)

},

PreRun: func(cmd *cobra.Command, args []string) {

fmt.Printf("[step_2]PreRun with args: %v\n", args)

},

Run: func(cmd *cobra.Command, args []string) {

fmt.Printf("[step_3]my_cobra version is v1.0: %v\n", args)

},

PostRun: func(cmd *cobra.Command, args []string) {

fmt.Printf("[step_4]PostRun with args: %v\n", args)

},

PersistentPostRun: func(cmd *cobra.Command, args []string) {

fmt.Printf("[step_5]PersistentPostRun with args: %v\n", args)

},

[root@k8s-worker02 my_cobra]# go run main.go

[step_1]PersistentPreRun with args: []

[step_2]PreRun with args: []

[step_3]my_cobra version is v1.0: []

[step_4]PostRun with args: []

[step_5]PersistentPostRun with args: []
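The output above shows the order in which the five hooks fire. That ordering can be modelled with the standard library alone. This is a sketch under stated assumptions: the command type and its execute method are hypothetical and are not how cobra is implemented internally.

```go
package main

import "fmt"

// hook has the simplified shape of a cobra Run-style callback.
type hook func(args []string)

// command is a hypothetical, simplified stand-in for cobra.Command.
type command struct {
	PersistentPreRun  hook
	PreRun            hook
	Run               hook
	PostRun           hook
	PersistentPostRun hook
}

// execute calls every non-nil hook in the order shown in the output above
// and returns the names of the hooks that actually ran.
func (c *command) execute(args []string) []string {
	steps := []struct {
		name string
		fn   hook
	}{
		{"PersistentPreRun", c.PersistentPreRun},
		{"PreRun", c.PreRun},
		{"Run", c.Run},
		{"PostRun", c.PostRun},
		{"PersistentPostRun", c.PersistentPostRun},
	}
	var ran []string
	for _, s := range steps {
		if s.fn == nil {
			continue
		}
		s.fn(args)
		ran = append(ran, s.name)
	}
	return ran
}

func main() {
	logStep := func(step string) hook {
		return func(args []string) { fmt.Printf("[%s] args: %v\n", step, args) }
	}
	c := &command{
		PersistentPreRun:  logStep("step_1"),
		Run:               logStep("step_3"),
		PersistentPostRun: logStep("step_5"),
	}
	fmt.Println(c.execute(nil))
}
```

kubectl relies on exactly this bracketing: work that must wrap every subcommand goes into the persistent pre-run and post-run hooks of the root command.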

Configuring pprof in the kubectl command line to capture flame graphs

Entry point of the cmd call

Code location: staging\src\k8s.io\kubectl\pkg\cmd\cmd.go

// NewDefaultKubectlCommand creates the `kubectl` command with default arguments

func NewDefaultKubectlCommand() *cobra.Command {

return NewDefaultKubectlCommandWithArgs(KubectlOptions{

PluginHandler: NewDefaultPluginHandler(plugin.ValidPluginFilenamePrefixes),

Arguments: os.Args,

ConfigFlags: defaultConfigFlags,

IOStreams: genericclioptions.IOStreams{In: os.Stdin, Out: os.Stdout, ErrOut: os.Stderr},

})

}

Walking through the underlying function NewKubectlCommand

func NewKubectlCommand(o KubectlOptions) *cobra.Command {}

Create rootCmd with cobra

// Parent command to which all subcommands are added.

cmds := &cobra.Command{

Use: "kubectl",

Short: i18n.T("kubectl controls the Kubernetes cluster manager"),

Long: templates.LongDesc(`

kubectl controls the Kubernetes cluster manager.

Find more information at:

https://kubernetes.io/docs/reference/kubectl/overview/`),

Run: runHelp,

// Hook before and after Run initialize and write profiles to disk,

// respectively.

PersistentPreRunE: func(*cobra.Command, []string) error {

rest.SetDefaultWarningHandler(warningHandler)

return initProfiling()

},

PersistentPostRunE: func(*cobra.Command, []string) error {

if err := flushProfiling(); err != nil {

return err

}

if warningsAsErrors {

count := warningHandler.WarningCount()

switch count {

case 0:

// no warnings

case 1:

return fmt.Errorf("%d warning received", count)

default:

return fmt.Errorf("%d warnings received", count)

}

}

return nil

},

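}

Together with the later call to addProfilingFlags(flags), this adds the pprof flags

PersistentPreRunE sets up pprof collection

Code location

staging\src\k8s.io\kubectl\pkg\cmd\profiling.go

There are two options:

--profile selects which kind of profile pprof collects, for example cpu or block

--profile-output names the file the pprof result is written to

Code of addProfilingFlags and initProfiling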

func addProfilingFlags(flags *pflag.FlagSet) {

flags.StringVar(&profileName, "profile", "none", "Name of profile to capture. One of (none|cpu|heap|goroutine|threadcreate|block|mutex)")

flags.StringVar(&profileOutput, "profile-output", "profile.pprof", "Name of the file to write the profile to")

}

func initProfiling() error {

var (

f *os.File

err error

)

switch profileName {

case "none":

return nil

case "cpu":

f, err = os.Create(profileOutput)

if err != nil {

return err

}

err = pprof.StartCPUProfile(f)

if err != nil {

return err

}

// Block and mutex profiles need a call to Set{Block,Mutex}ProfileRate to

// output anything. We choose to sample all events.

case "block":

runtime.SetBlockProfileRate(1)

case "mutex":

runtime.SetMutexProfileFraction(1)

default:

// Check the profile name is valid.

if profile := pprof.Lookup(profileName); profile == nil {

return fmt.Errorf("unknown profile '%s'", profileName)

}

}

// If the command is interrupted before the end (ctrl-c), flush the

// profiling files

c := make(chan os.Signal, 1)

signal.Notify(c, os.Interrupt)

go func() {

<-c

f.Close()

flushProfiling()

os.Exit(0)

}()

return nil

}

PersistentPostRunE then writes the pprof result to disk

PersistentPostRunE: func(*cobra.Command, []string) error {

if err := flushProfiling(); err != nil {

return err

}

if warningsAsErrors {

count := warningHandler.WarningCount()

switch count {

case 0:

// no warnings

case 1:

return fmt.Errorf("%d warning received", count)

default:

return fmt.Errorf("%d warnings received", count)

}

}

return nil

},

The flushProfiling function it calls

func flushProfiling() error {

switch profileName {

case "none":

return nil

case "cpu":

pprof.StopCPUProfile()

case "heap":

runtime.GC()

fallthrough

default:

profile := pprof.Lookup(profileName)

if profile == nil {

return nil

}

f, err := os.Create(profileOutput)

if err != nil {

return err

}

defer f.Close()

profile.WriteTo(f, 0)

}

return nil

}

Running kubectl commands that capture pprof profiles

# Run the command

kubectl get node --profile=cpu --profile-output=cpu.pprof

# Check the result file

ll cpu.pprof

# Generate an SVG

go tool pprof -svg cpu.pprof > kubectl_get_node_cpu.svg

# Capture a goroutine profile and print it as text

kubectl get node --profile=goroutine --profile-output=goroutine.pprof

go tool pprof -text goroutine.pprof

SVG result of the CPU flame graph
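The start/flush pairing that kubectl spreads across PersistentPreRunE and PersistentPostRunE can be tried in isolation with runtime/pprof. The sketch below is an illustration only: profileCPU and busyWork are hypothetical helpers, and the profile is written to an in-memory buffer instead of the file named by --profile-output.

```go
package main

import (
	"bytes"
	"fmt"
	"runtime/pprof"
)

// busyWork burns some CPU so the profiler has something to sample.
func busyWork(n int) int {
	sum := 0
	for i := 0; i < n; i++ {
		sum += i % 7
	}
	return sum
}

// profileCPU mirrors the initProfiling/flushProfiling pair: start the CPU
// profile, run the workload, then stop so the data is flushed to the writer.
// It returns the number of profile bytes written.
func profileCPU(work func()) (int, error) {
	var buf bytes.Buffer
	if err := pprof.StartCPUProfile(&buf); err != nil {
		return 0, err
	}
	work()
	pprof.StopCPUProfile() // blocks until the profile has been written to buf
	return buf.Len(), nil
}

func main() {
	n, err := profileCPU(func() { busyWork(50000000) })
	if err != nil {
		fmt.Println("profiling failed:", err)
		return
	}
	fmt.Printf("captured %d bytes of CPU profile\n", n)
}
```

Writing the buffer to a file would give the same kind of input that go tool pprof reads in the commands above.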

2.4 The seven command groups of the kubectl command line

kubectl architecture diagram

The cmd factory f is used to create the seven command groups:

Basic Commands (Beginner)

Basic Commands (Intermediate)

Deploy Commands

Cluster Management Commands

Troubleshooting and Debugging Commands

Advanced Commands

Settings Commands

Setting flags and the flag-name replacement function

flags := cmds.PersistentFlags()

addProfilingFlags(flags)

flags.BoolVar(&warningsAsErrors, "warnings-as-errors", warningsAsErrors, "Treat warnings received from the server as errors and exit with a non-zero exit code")

Set the kubeconfig-related command-line flags

kubeConfigFlags := o.ConfigFlags

if kubeConfigFlags == nil {

kubeConfigFlags = defaultConfigFlags

}

kubeConfigFlags.AddFlags(flags)

matchVersionKubeConfigFlags := cmdutil.NewMatchVersionFlags(kubeConfigFlags)

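matchVersionKubeConfigFlags.AddFlags(flags)

Create the cmd factory f, which mainly wraps the clients used to talk to kube-apiserver

All later subcommands are created with this f

f := cmdutil.NewFactory(matchVersionKubeConfigFlags)

Create the proxy subcommand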

proxyCmd := proxy.NewCmdProxy(f, o.IOStreams)

proxyCmd.PreRun = func(cmd *cobra.Command, args []string) {

kubeConfigFlags.WrapConfigFn = nil

}创建7大分组命令

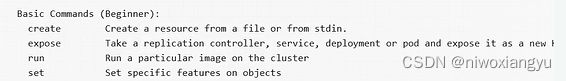

1.基础初级命令Basic Commands (Begginner)

代码

{

Message: "Basic Commands (Beginner):",

Commands: []*cobra.Command{

create.NewCmdCreate(f, o.IOStreams),

expose.NewCmdExposeService(f, o.IOStreams),

run.NewCmdRun(f, o.IOStreams),

set.NewCmdSet(f, o.IOStreams),

},

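},

Running kubectl on the command line

The corresponding output

Meaning

create: create a resource

expose: expose a resource as a Service

run: run an image

set: set specific features on objects

2. Basic Commands (Intermediate)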

{

Message: "Basic Commands (Intermediate):",

Commands: []*cobra.Command{

explain.NewCmdExplain("kubectl", f, o.IOStreams),

getCmd,

edit.NewCmdEdit(f, o.IOStreams),

delete.NewCmdDelete(f, o.IOStreams),

},

},

The printed help output

Meaning

explain: show documentation for a resource

get: display resources

edit: edit a resource

delete: delete resources

3. Deploy Commands

{

Message: "Deploy Commands:",

Commands: []*cobra.Command{

rollout.NewCmdRollout(f, o.IOStreams),

scale.NewCmdScale(f, o.IOStreams),

autoscale.NewCmdAutoscale(f, o.IOStreams),

},

},

Meaning

rollout: manage the rollout of a resource

scale: scale the number of replicas up or down

autoscale: scale automatically

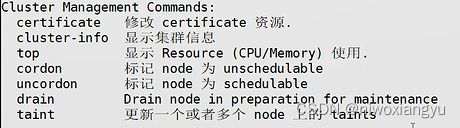

4. Cluster Management Commands

{

Message: "Cluster Management Commands:",

Commands: []*cobra.Command{

certificates.NewCmdCertificate(f, o.IOStreams),

clusterinfo.NewCmdClusterInfo(f, o.IOStreams),

top.NewCmdTop(f, o.IOStreams),

drain.NewCmdCordon(f, o.IOStreams),

drain.NewCmdUncordon(f, o.IOStreams),

drain.NewCmdDrain(f, o.IOStreams),

taint.NewCmdTaint(f, o.IOStreams),

},

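},

Meaning

certificate: manage certificates

cluster-info: display cluster information

top: display resource usage

cordon: mark a node as unschedulable

uncordon: mark a node as schedulable

drain: evict the pods from a node

taint: set taints on a node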

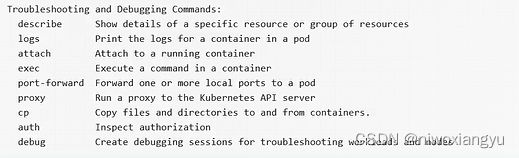

5. Troubleshooting and Debugging Commands

{

Message: "Troubleshooting and Debugging Commands:",

Commands: []*cobra.Command{

describe.NewCmdDescribe("kubectl", f, o.IOStreams),

logs.NewCmdLogs(f, o.IOStreams),

attach.NewCmdAttach(f, o.IOStreams),

cmdexec.NewCmdExec(f, o.IOStreams),

portforward.NewCmdPortForward(f, o.IOStreams),

proxyCmd,

cp.NewCmdCp(f, o.IOStreams),

auth.NewCmdAuth(f, o.IOStreams),

debug.NewCmdDebug(f, o.IOStreams),

},

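},

Output

Meaning

describe: show the details of a resource

logs: print the logs of a container in a pod

attach: attach to a running container

exec: execute a command in a container

port-forward: forward local ports to a pod

proxy: run a proxy to the Kubernetes API server

cp: copy files to and from containers

auth: inspect authorization

debug: create debugging sessions for troubleshooting workloads and nodes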

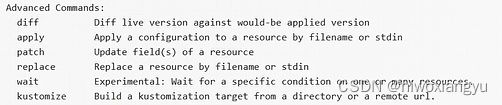

6. Advanced Commands

Code

{

Message: "Advanced Commands:",

Commands: []*cobra.Command{

diff.NewCmdDiff(f, o.IOStreams),

apply.NewCmdApply("kubectl", f, o.IOStreams),

patch.NewCmdPatch(f, o.IOStreams),

replace.NewCmdReplace(f, o.IOStreams),

wait.NewCmdWait(f, o.IOStreams),

kustomize.NewCmdKustomize(o.IOStreams),

},

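},

Output

Meaning

diff: diff the live version against the version that would be applied

apply: apply a change or configuration to a resource

patch: update fields of a resource

replace: replace a resource

wait: wait for a specific condition on resources

kustomize: build a kustomization target from a directory or a remote URL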

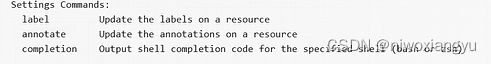

7. Settings Commands

Code

{

Message: "Settings Commands:",

Commands: []*cobra.Command{

label.NewCmdLabel(f, o.IOStreams),

annotate.NewCmdAnnotate("kubectl", f, o.IOStreams),

completion.NewCmdCompletion(o.IOStreams.Out, ""),

},

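},

Output

Meaning

label: update the labels on a resource

annotate: update the annotations on a resource

completion: output shell completion code

Key points of this section

The cmd factory f mainly wraps the clients used to talk to kube-apiserver

The seven command groups are created with the cmd factory f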

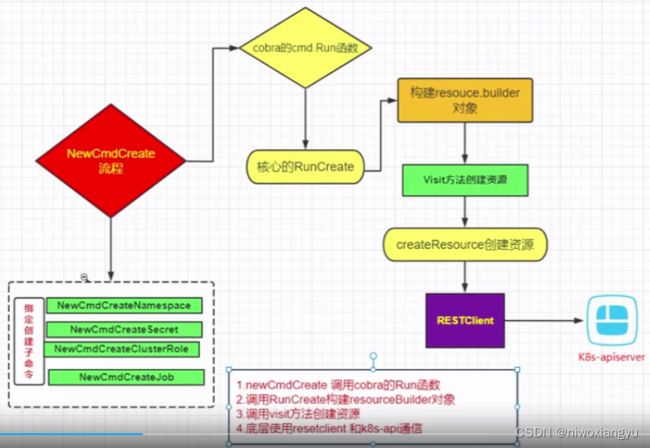

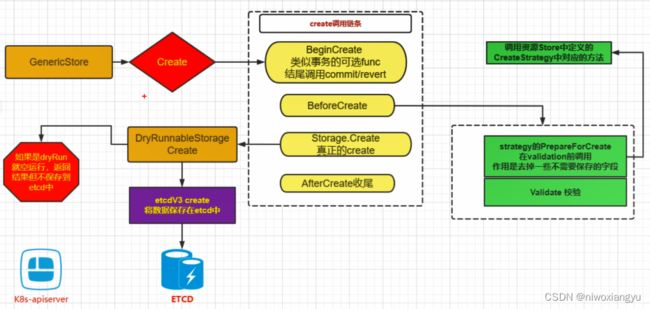

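2.5 Execution flow of the create command

kubectl create architecture diagram

The create flow

NewCmdCreate wires up the Run function of the cobra command

Run calls RunCreate, which constructs the resource Builder object

The Visit method is called to create the resource

Underneath, a RESTClient communicates with the Kubernetes API server

The create flow: NewCmdCreate

Code entry point: staging\src\k8s.io\kubectl\pkg\cmd\create\create.go

Create the CreateOptions object

o := NewCreateOptions(ioStreams)

Initialize cmd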

cmd := &cobra.Command{

Use: "create -f FILENAME",

DisableFlagsInUseLine: true,

Short: i18n.T("Create a resource from a file or from stdin"),

Long: createLong,

Example: createExample,

Run: func(cmd *cobra.Command, args []string) {

if cmdutil.IsFilenameSliceEmpty(o.FilenameOptions.Filenames, o.FilenameOptions.Kustomize) {

ioStreams.ErrOut.Write([]byte("Error: must specify one of -f and -k\n\n"))

defaultRunFunc := cmdutil.DefaultSubCommandRun(ioStreams.ErrOut)

defaultRunFunc(cmd, args)

return

}

cmdutil.CheckErr(o.Complete(f, cmd))

cmdutil.CheckErr(o.ValidateArgs(cmd, args))

cmdutil.CheckErr(o.RunCreate(f, cmd))

},

}

Set the flags

Each flag is bound to a field of o

// bind flag structs

o.RecordFlags.AddFlags(cmd)

usage := "to use to create the resource"

cmdutil.AddFilenameOptionFlags(cmd, &o.FilenameOptions, usage)

cmdutil.AddValidateFlags(cmd)

cmd.Flags().BoolVar(&o.EditBeforeCreate, "edit", o.EditBeforeCreate, "Edit the API resource before creating")

cmd.Flags().Bool("windows-line-endings", runtime.GOOS == "windows",

"Only relevant if --edit=true. Defaults to the line ending native to your platform.")

cmdutil.AddApplyAnnotationFlags(cmd)

cmdutil.AddDryRunFlag(cmd)

cmdutil.AddLabelSelectorFlagVar(cmd, &o.Selector)

cmd.Flags().StringVar(&o.Raw, "raw", o.Raw, "Raw URI to POST to the server. Uses the transport specified by the kubeconfig file.")

cmdutil.AddFieldManagerFlagVar(cmd, &o.fieldManager, "kubectl-create")

o.PrintFlags.AddFlags(cmd)

Register the create subcommands

// create subcommands

cmd.AddCommand(NewCmdCreateNamespace(f, ioStreams))

cmd.AddCommand(NewCmdCreateQuota(f, ioStreams))

cmd.AddCommand(NewCmdCreateSecret(f, ioStreams))

cmd.AddCommand(NewCmdCreateConfigMap(f, ioStreams))

cmd.AddCommand(NewCmdCreateServiceAccount(f, ioStreams))

cmd.AddCommand(NewCmdCreateService(f, ioStreams))

cmd.AddCommand(NewCmdCreateDeployment(f, ioStreams))

cmd.AddCommand(NewCmdCreateClusterRole(f, ioStreams))

cmd.AddCommand(NewCmdCreateClusterRoleBinding(f, ioStreams))

cmd.AddCommand(NewCmdCreateRole(f, ioStreams))

cmd.AddCommand(NewCmdCreateRoleBinding(f, ioStreams))

cmd.AddCommand(NewCmdCreatePodDisruptionBudget(f, ioStreams))

cmd.AddCommand(NewCmdCreatePriorityClass(f, ioStreams))

cmd.AddCommand(NewCmdCreateJob(f, ioStreams))

cmd.AddCommand(NewCmdCreateCronJob(f, ioStreams))

cmd.AddCommand(NewCmdCreateIngress(f, ioStreams))

cmd.AddCommand(NewCmdCreateToken(f, ioStreams))

The core cmd.Run function

Validate the filename arguments

if cmdutil.IsFilenameSliceEmpty(o.FilenameOptions.Filenames, o.FilenameOptions.Kustomize) {

ioStreams.ErrOut.Write([]byte("Error: must specify one of -f and -k\n\n"))

defaultRunFunc := cmdutil.DefaultSubCommandRun(ioStreams.ErrOut)

defaultRunFunc(cmd, args)

return

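}

Complete and fill in the required fields

cmdutil.CheckErr(o.Complete(f, cmd))

Validate the arguments

cmdutil.CheckErr(o.ValidateArgs(cmd, args))

The core RunCreate

cmdutil.CheckErr(o.RunCreate(f, cmd))

If a raw URI on the API server is configured with --raw, the request is sent directly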

if len(o.Raw) > 0 {

restClient, err := f.RESTClient()

if err != nil {

return err

}

return rawhttp.RawPost(restClient, o.IOStreams, o.Raw, o.FilenameOptions.Filenames[0])

}

If edit-before-create is configured, RunEditOnCreate is executed

if o.EditBeforeCreate {

return RunEditOnCreate(f, o.PrintFlags, o.RecordFlags, o.IOStreams, cmd, &o.FilenameOptions, o.fieldManager)

}

The validate setting decides whether validation is enabled

--validate=true: validate the input against a schema before sending it

cmdNamespace, enforceNamespace, err := f.ToRawKubeConfigLoader().Namespace()

if err != nil {

return err

}

Construct the builder object (builder pattern)

r := f.NewBuilder().

Unstructured().

Schema(schema).

ContinueOnError().

NamespaceParam(cmdNamespace).DefaultNamespace().

FilenameParam(enforceNamespace, &o.FilenameOptions).

LabelSelectorParam(o.Selector).

Flatten().

Do()

err = r.Err()

if err != nil {

return err

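}

FilenameParam reads the configuration files

Besides plain local files, it also supports standard input and files fetched over http/https; each source is stored as a Visitor

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\builder.go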

// FilenameParam groups input in two categories: URLs and files (files, directories, STDIN)

// If enforceNamespace is false, namespaces in the specs will be allowed to

// override the default namespace. If it is true, namespaces that don't match

// will cause an error.

// If ContinueOnError() is set prior to this method, objects on the path that are not

// recognized will be ignored (but logged at V(2)).

func (b *Builder) FilenameParam(enforceNamespace bool, filenameOptions *FilenameOptions) *Builder {

if errs := filenameOptions.validate(); len(errs) > 0 {

b.errs = append(b.errs, errs...)

return b

}

recursive := filenameOptions.Recursive

paths := filenameOptions.Filenames

for _, s := range paths {

switch {

case s == "-":

b.Stdin()

case strings.Index(s, "http://") == 0 || strings.Index(s, "https://") == 0:

url, err := url.Parse(s)

if err != nil {

b.errs = append(b.errs, fmt.Errorf("the URL passed to filename %q is not valid: %v", s, err))

continue

}

b.URL(defaultHttpGetAttempts, url)

default:

matches, err := expandIfFilePattern(s)

if err != nil {

b.errs = append(b.errs, err)

continue

}

if !recursive && len(matches) == 1 {

b.singleItemImplied = true

}

b.Path(recursive, matches...)

}

}

if filenameOptions.Kustomize != "" {

b.paths = append(

b.paths,

&KustomizeVisitor{

mapper: b.mapper,

dirPath: filenameOptions.Kustomize,

schema: b.schema,

fSys: filesys.MakeFsOnDisk(),

})

}

if enforceNamespace {

b.RequireNamespace()

}

return b

}

Call the Visit function to create the resource

err = r.Visit(func(info *resource.Info, err error) error {

if err != nil {

return err

}

if err := util.CreateOrUpdateAnnotation(cmdutil.GetFlagBool(cmd, cmdutil.ApplyAnnotationsFlag), info.Object, scheme.DefaultJSONEncoder()); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

if err := o.Recorder.Record(info.Object); err != nil {

klog.V(4).Infof("error recording current command: %v", err)

}

if o.DryRunStrategy != cmdutil.DryRunClient {

if o.DryRunStrategy == cmdutil.DryRunServer {

if err := o.DryRunVerifier.HasSupport(info.Mapping.GroupVersionKind); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

}

obj, err := resource.

NewHelper(info.Client, info.Mapping).

DryRun(o.DryRunStrategy == cmdutil.DryRunServer).

WithFieldManager(o.fieldManager).

WithFieldValidation(o.ValidationDirective).

Create(info.Namespace, true, info.Object)

if err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

info.Refresh(obj, true)

}

Tracing the Create function: underneath, it calls createResource to create the resource

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\helper.go

func (m *Helper) createResource(c RESTClient, resource, namespace string, obj runtime.Object, options *metav1.CreateOptions) (runtime.Object, error) {

return c.Post().

NamespaceIfScoped(namespace, m.NamespaceScoped).

Resource(resource).

VersionedParams(options, metav1.ParameterCodec).

Body(obj).

Do(context.TODO()).

Get()

}

Underneath, the Post method of the RESTClient is used

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\interfaces.go

// RESTClient is a client helper for dealing with RESTful resources

// in a generic way.

type RESTClient interface {

Get() *rest.Request

Post() *rest.Request

Patch(types.PatchType) *rest.Request

Delete() *rest.Request

Put() *rest.Request

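}

Key points of this section

1. NewCmdCreate wires up the Run function of the cobra command

2. Run calls RunCreate, which constructs the resource Builder object

3. The Visit method is called to create the resource

4. Underneath, a RESTClient communicates with the Kubernetes API server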
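The last step of the flow above ends in an HTTP POST to the API server. The sketch below shows that request shape with net/http only. It is not the client-go RESTClient: postPod is a hypothetical helper, and fakeAPIServer is a local stand-in that merely echoes the request path. Authentication, TLS and content negotiation are deliberately left out.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// postPod sends a manifest to <base>/api/v1/namespaces/<ns>/pods, the REST
// path the API server exposes for creating pods in a namespace.
func postPod(base, ns string, manifest map[string]interface{}) (int, string, error) {
	body, err := json.Marshal(manifest)
	if err != nil {
		return 0, "", err
	}
	url := fmt.Sprintf("%s/api/v1/namespaces/%s/pods", base, ns)
	resp, err := http.Post(url, "application/json", bytes.NewReader(body))
	if err != nil {
		return 0, "", err
	}
	defer resp.Body.Close()
	out, err := io.ReadAll(resp.Body)
	return resp.StatusCode, string(out), err
}

// fakeAPIServer is a hypothetical stand-in for kube-apiserver: it accepts
// POST requests and replies with 201 plus the path that was requested.
func fakeAPIServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		w.WriteHeader(http.StatusCreated)
		fmt.Fprint(w, r.URL.Path)
	}))
}

func main() {
	srv := fakeAPIServer()
	defer srv.Close()

	pod := map[string]interface{}{
		"apiVersion": "v1",
		"kind":       "Pod",
		"metadata":   map[string]interface{}{"name": "nginx"},
	}
	code, path, err := postPod(srv.URL, "default", pod)
	fmt.Println(code, path, err)
}
```

In kubectl the same path is assembled by the chained NamespaceIfScoped and Resource calls on the request, rather than by string formatting.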

2.6 The builder design pattern in createCmd

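Key points of this section

The builder design pattern

Advantages

Disadvantages

The builder pattern in kubectl

The builder design pattern

The Builder pattern separates the construction of a complex object from its representation,

so that the same construction process can create different objects.

It breaks a complex object down into several simple parts and builds it step by step.

It separates what varies from what stays the same: the set of parts that make up the product is fixed, but each part can be chosen flexibly.

It is mostly used for creating complex objects.

Advantages

Good encapsulation: construction and representation are separated.

Good extensibility: the concrete builders are independent of one another, which helps decouple the system.

The client does not need to know the internal composition of the product, and a builder can refine the construction process step by step without affecting other modules, which keeps the risk of detail changes under control.

Disadvantages

The products must share the same set of parts, which limits where the pattern can be used.

If the internals of the product change, the builders must change with them, so later maintenance is costly.

The builder pattern in kubectl

The Builder object in kubectl

Characteristic 1: it targets the creation of a complex object with a large number of fields

Characteristic 2: the opening method returns a pointer to the object being built

Characteristic 3: every method returns a pointer to the builder object

Characteristic 1: it targets the creation of a complex object with a large number of fields

The Builder object in kubectl has a very large number of fields.

A single Init-style constructor would need a very long parameter list,

and the parameters are not fixed: different objects are constructed depending on which arguments the user passes.

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\builder.go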

// Builder provides convenience functions for taking arguments and parameters

// from the command line and converting them to a list of resources to iterate

// over using the Visitor interface.

type Builder struct {

categoryExpanderFn CategoryExpanderFunc

// mapper is set explicitly by resource builders

mapper *mapper

// clientConfigFn is a function to produce a client, *if* you need one

clientConfigFn ClientConfigFunc

restMapperFn RESTMapperFunc

// objectTyper is statically determinant per-command invocation based on your internal or unstructured choice

// it does not ever need to rely upon discovery.

objectTyper runtime.ObjectTyper

// codecFactory describes which codecs you want to use

negotiatedSerializer runtime.NegotiatedSerializer

// local indicates that we cannot make server calls

local bool

errs []error

paths []Visitor

stream bool

stdinInUse bool

dir bool

labelSelector *string

fieldSelector *string

selectAll bool

limitChunks int64

requestTransforms []RequestTransform

resources []string

subresource string

namespace string

allNamespace bool

names []string

resourceTuples []resourceTuple

defaultNamespace bool

requireNamespace bool

flatten bool

latest bool

requireObject bool

singleResourceType bool

continueOnError bool

singleItemImplied bool

schema ContentValidator

// fakeClientFn is used for testing

fakeClientFn FakeClientFunc

}

Characteristic 2: the opening method returns a pointer to the object being built

func NewBuilder(restClientGetter RESTClientGetter) *Builder {

categoryExpanderFn := func() (restmapper.CategoryExpander, error) {

discoveryClient, err := restClientGetter.ToDiscoveryClient()

if err != nil {

return nil, err

}

return restmapper.NewDiscoveryCategoryExpander(discoveryClient), err

}

return newBuilder(

restClientGetter.ToRESTConfig,

restClientGetter.ToRESTMapper,

(&cachingCategoryExpanderFunc{delegate: categoryExpanderFn}).ToCategoryExpander,

)

}

Characteristic 3: every method returns a pointer to the builder object

Code location: staging\src\k8s.io\kubectl\pkg\cmd\create\create.go

r := f.NewBuilder().

Unstructured().

Schema(schema).

ContinueOnError().

NamespaceParam(cmdNamespace).DefaultNamespace().

FilenameParam(enforceNamespace, &o.FilenameOptions).

LabelSelectorParam(o.Selector).

Flatten().

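Do()

The call reads as a method chain: every method in the chain returns a pointer to the builder, except the final Do(), which returns the result

For example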

func (b *Builder) Schema(schema ContentValidator) *Builder {

b.schema = schema

return b

}

func (b *Builder) ContinueOnError() *Builder {

b.continueOnError = true

return b

}

Each method simply sets one attribute of the object under construction
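The three characteristics can be seen together in a much smaller builder. This is a sketch only: query and queryBuilder are hypothetical types invented for illustration, and the error accumulation imitates the errs slice of the Builder shown earlier rather than reproducing it.

```go
package main

import (
	"errors"
	"fmt"
)

// query is the product that the builder finally produces.
type query struct {
	namespace string
	files     []string
	selector  string
}

// queryBuilder is a hypothetical, much smaller cousin of resource.Builder.
type queryBuilder struct {
	q    query
	errs []error
}

// newQueryBuilder is the opening call of the chain.
func newQueryBuilder() *queryBuilder {
	return &queryBuilder{}
}

// Namespace records the namespace and returns the builder for chaining.
func (b *queryBuilder) Namespace(ns string) *queryBuilder {
	b.q.namespace = ns
	return b
}

// Filename records one input file; invalid input is remembered, not returned,
// so the chain is never interrupted.
func (b *queryBuilder) Filename(f string) *queryBuilder {
	if f == "" {
		b.errs = append(b.errs, errors.New("filename must not be empty"))
		return b
	}
	b.q.files = append(b.q.files, f)
	return b
}

// Selector records a label selector and returns the builder for chaining.
func (b *queryBuilder) Selector(s string) *queryBuilder {
	b.q.selector = s
	return b
}

// Do ends the chain: it returns the product, or the errors collected so far.
func (b *queryBuilder) Do() (query, error) {
	if len(b.errs) > 0 {
		return query{}, fmt.Errorf("%d invalid option(s): %v", len(b.errs), b.errs)
	}
	return b.q, nil
}

func main() {
	q, err := newQueryBuilder().
		Namespace("default").
		Filename("pod.yaml").
		Selector("app=nginx").
		Do()
	fmt.Printf("%+v %v\n", q, err)
}
```

Deferring errors to Do is what lets callers write the chain without an error check after every step.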

2.7 The visitor design pattern in createCmd


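Key points of this section:

Introduction to the visitor pattern

How kubectl applies the visitor pattern

Introduction to the visitor pattern

The Visitor pattern is a design pattern that separates a data structure from the operations performed on it.

It encapsulates the operations that act on the elements of a data structure,

so that new operations on those elements can be defined without changing the data structure.

It is a behavioral design pattern.

The visitor pattern in kubectl

In kubectl, multiple Visitors each access a different part of one data structure.

In this arrangement the data structure acts somewhat like a database, and each Visitor becomes a small application on top of it.

The visitor pattern mainly suits the following scenarios:

(1) The data structure is stable, but the operations applied to it change frequently.

(2) The data structure needs to be separated from the operations on it.

(3) Different element types need to be operated on without branching on the concrete type.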

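Advantages of the visitor pattern

(1) It decouples the data structure from the operations, so the set of operations can change independently.

(2) New operations on the data set can be added by adding visitors, which makes the program easier to extend.

(3) Visitors can operate on elements of more than one concrete type.

(4) The responsibilities of each role are separated, in line with the single responsibility principle.

Disadvantages of the visitor pattern

(1) Element types are hard to add: if the data structure changes often and new element types keep arriving, every visitor must add an operation for the new type, which violates the open/closed principle.

(2) Concrete elements are hard to change: adding or removing an attribute of an element forces the corresponding visitors to change, and with many visitors the change touches too much code.

(3) It violates the dependency inversion principle: to treat elements differently, visitors depend on concrete element types rather than on abstractions.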
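The pattern described above can be sketched in a few lines of Go. The names below (info, visitor, visitorFunc, listVisitor, defaultNamespace) are hypothetical and trimmed down for illustration; they follow the functional style that kubectl uses, in which a visitor wraps another visitor, but they are not the cli-runtime types.

```go
package main

import "fmt"

// info is a hypothetical, trimmed-down stand-in for resource.Info.
type info struct {
	Name      string
	Namespace string
}

// visitorFunc is the operation applied to every element.
type visitorFunc func(*info, error) error

// visitor walks some collection and applies fn to each element.
type visitor interface {
	Visit(fn visitorFunc) error
}

// listVisitor visits a fixed slice of infos in order.
type listVisitor []*info

func (l listVisitor) Visit(fn visitorFunc) error {
	for _, i := range l {
		if err := fn(i, nil); err != nil {
			return err
		}
	}
	return nil
}

// defaultNamespace decorates another visitor: it fills in a missing namespace
// before handing each element on, without changing the wrapped visitor.
type defaultNamespace struct {
	inner visitor
	ns    string
}

func (d defaultNamespace) Visit(fn visitorFunc) error {
	return d.inner.Visit(func(i *info, err error) error {
		if err != nil {
			return err
		}
		if i.Namespace == "" {
			i.Namespace = d.ns
		}
		return fn(i, nil)
	})
}

func main() {
	items := listVisitor{{Name: "nginx"}, {Name: "redis", Namespace: "cache"}}
	var v visitor = defaultNamespace{inner: items, ns: "default"}
	_ = v.Visit(func(i *info, _ error) error {
		fmt.Printf("%s/%s\n", i.Namespace, i.Name)
		return nil
	})
}
```

New behaviour is added by writing another wrapper around an existing visitor, which is why the data type itself never needs to change.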

The visitor pattern in the kubectl source code

Definition of the Visitor interface and VisitorFunc

Code location: kubernetes/staging/src/k8s.io/cli-runtime/pkg/resource/visitor.go

// Visitor lets clients walk a list of resources.

type Visitor interface {

Visit(VisitorFunc) error

}

// VisitorFunc implements the Visitor interface for a matching function.

// If there was a problem walking a list of resources, the incoming error

// will describe the problem and the function can decide how to handle that error.

// A nil returned indicates to accept an error to continue loops even when errors happen.

// This is useful for ignoring certain kinds of errors or aggregating errors in some way.

type VisitorFunc func(*Info, error) error

The Visit method of Result

func (r *Result) Visit(fn VisitorFunc) error {

if r.err != nil {

return r.err

}

err := r.visitor.Visit(fn)

return utilerrors.FilterOut(err, r.ignoreErrors...)

}

Concrete visitors define their own Visit method; each takes a VisitorFunc fn as its parameter

// Visit in a FileVisitor is just taking care of opening/closing files

func (v *FileVisitor) Visit(fn VisitorFunc) error {

var f *os.File

if v.Path == constSTDINstr {

f = os.Stdin

} else {

var err error

f, err = os.Open(v.Path)

if err != nil {

return err

}

defer f.Close()

}

// TODO: Consider adding a flag to force to UTF16, apparently some

// Windows tools don't write the BOM

utf16bom := unicode.BOMOverride(unicode.UTF8.NewDecoder())

v.StreamVisitor.Reader = transform.NewReader(f, utf16bom)

return v.StreamVisitor.Visit(fn)

}

How kubectl create builds visitors through the Builder and executes them

FilenameParam parses the -f file arguments and creates a visitor

Code location: kubernetes/staging/src/k8s.io/cli-runtime/pkg/resource/builder.go

validate checks the -f arguments

func (o *FilenameOptions) validate() []error {

var errs []error

if len(o.Filenames) > 0 && len(o.Kustomize) > 0 {

errs = append(errs, fmt.Errorf("only one of -f or -k can be specified"))

}

if len(o.Kustomize) > 0 && o.Recursive {

errs = append(errs, fmt.Errorf("the -k flag can't be used with -f or -R"))

}

return errs

}

-k means a Kustomize configuration is used

If both -f and -k are given, the error is: only one of -f or -k can be specified

kubectl create -f rule.yaml -k rule.yaml

error: only one of -f or -k can be specified

-k does not support recursion with -R

kubectl create -k rule.yaml -R

error: the -k flag can't be used with -f or -R

Parsing the files with Path

recursive := filenameOptions.Recursive

paths := filenameOptions.Filenames

for _, s := range paths {

switch {

case s == "-":

b.Stdin()

case strings.Index(s, "http://") == 0 || strings.Index(s, "https://") == 0:

url, err := url.Parse(s)

if err != nil {

b.errs = append(b.errs, fmt.Errorf("the URL passed to filename %q is not valid: %v", s, err))

continue

}

b.URL(defaultHttpGetAttempts, url)

default:

matches, err := expandIfFilePattern(s)

if err != nil {

b.errs = append(b.errs, err)

continue

}

if !recursive && len(matches) == 1 {

b.singleItemImplied = true

}

b.Path(recursive, matches...)

}

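}

Iterate over the paths passed with -f

If the path is -, the input is read from standard input

If the path starts with http:// or https://, it is fetched from the remote endpoint by calling b.URL

Otherwise it is treated as a file, and b.Path is called to parse it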
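The three-way decision listed above can be isolated into a small function. This is a sketch under stated assumptions: sourceKind and classify are hypothetical names, strings.HasPrefix is used in place of the strings.Index(...) == 0 check from the source, and nothing is actually read from stdin, the network or disk.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// sourceKind names where a -f argument will be read from.
type sourceKind string

const (
	fromStdin sourceKind = "stdin"
	fromURL   sourceKind = "url"
	fromPath  sourceKind = "path"
)

// classify applies the same three-way switch as FilenameParam to one argument.
func classify(s string) (sourceKind, error) {
	switch {
	case s == "-":
		return fromStdin, nil
	case strings.HasPrefix(s, "http://") || strings.HasPrefix(s, "https://"):
		if _, err := url.Parse(s); err != nil {
			return "", fmt.Errorf("the URL passed to filename %q is not valid: %v", s, err)
		}
		return fromURL, nil
	default:
		// Anything else is treated as a file, directory or glob pattern.
		return fromPath, nil
	}
}

func main() {
	for _, arg := range []string{"-", "https://example.com/pod.yaml", "./manifests"} {
		kind, err := classify(arg)
		fmt.Println(arg, kind, err)
	}
}
```

Each branch in the real code ends by appending a different Visitor to the builder, so the rest of the pipeline never needs to know where the bytes came from.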

b.Path calls ExpandPathsToFileVisitors to produce the visitors

// ExpandPathsToFileVisitors will return a slice of FileVisitors that will handle files from the provided path.

// After FileVisitors open the files, they will pass an io.Reader to a StreamVisitor to do the reading. (stdin

// is also taken care of). Paths argument also accepts a single file, and will return a single visitor

func ExpandPathsToFileVisitors(mapper *mapper, paths string, recursive bool, extensions []string, schema ContentValidator) ([]Visitor, error) {

var visitors []Visitor

err := filepath.Walk(paths, func(path string, fi os.FileInfo, err error) error {

if err != nil {

return err

}

if fi.IsDir() {

if path != paths && !recursive {

return filepath.SkipDir

}

return nil

}

// Don't check extension if the filepath was passed explicitly

if path != paths && ignoreFile(path, extensions) {

return nil

}

visitor := &FileVisitor{

Path: path,

StreamVisitor: NewStreamVisitor(nil, mapper, path, schema),

}

visitors = append(visitors, visitor)

return nil

})

if err != nil {

return nil, err

}

return visitors, nil

}

Underneath, StreamVisitor is used, and the supplied function is registered with the visitor

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\visitor.go

// Visit implements Visitor over a stream. StreamVisitor is able to distinct multiple resources in one stream.

func (v *StreamVisitor) Visit(fn VisitorFunc) error {

d := yaml.NewYAMLOrJSONDecoder(v.Reader, 4096)

for {

ext := runtime.RawExtension{}

if err := d.Decode(&ext); err != nil {

if err == io.EOF {

return nil

}

return fmt.Errorf("error parsing %s: %v", v.Source, err)

}

// TODO: This needs to be able to handle object in other encodings and schemas.

ext.Raw = bytes.TrimSpace(ext.Raw)

if len(ext.Raw) == 0 || bytes.Equal(ext.Raw, []byte("null")) {

continue

}

if err := ValidateSchema(ext.Raw, v.Schema); err != nil {

return fmt.Errorf("error validating %q: %v", v.Source, err)

}

info, err := v.infoForData(ext.Raw, v.Source)

if err != nil {

if fnErr := fn(info, err); fnErr != nil {

return fnErr

}

continue

}

if err := fn(info, nil); err != nil {

return err

}

}

}

The file is parsed with the YAML-or-JSON decoder
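The decode loop in StreamVisitor.Visit handles several documents in a single stream. The sketch below reproduces only that loop shape, and it swaps in encoding/json for the YAML-or-JSON decoder from apimachinery, so it accepts JSON documents only. visitStream and kindsIn are hypothetical helpers.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"io"
	"strings"
)

// visitStream decodes consecutive JSON documents from r and calls fn for each
// one, returning nil once the stream is exhausted.
func visitStream(r io.Reader, fn func(raw json.RawMessage) error) error {
	d := json.NewDecoder(r)
	for {
		var raw json.RawMessage
		if err := d.Decode(&raw); err != nil {
			if errors.Is(err, io.EOF) {
				return nil
			}
			return fmt.Errorf("error parsing stream: %v", err)
		}
		if len(raw) == 0 || string(raw) == "null" {
			continue // skip empty documents, as the original loop does
		}
		if err := fn(raw); err != nil {
			return err
		}
	}
}

// kindsIn returns the "kind" field of every document found in s.
func kindsIn(s string) ([]string, error) {
	var kinds []string
	err := visitStream(strings.NewReader(s), func(raw json.RawMessage) error {
		var meta struct {
			Kind string `json:"kind"`
		}
		if err := json.Unmarshal(raw, &meta); err != nil {
			return err
		}
		kinds = append(kinds, meta.Kind)
		return nil
	})
	return kinds, err
}

func main() {
	kinds, err := kindsIn(`{"kind":"Pod"} {"kind":"Service"}`)
	fmt.Println(kinds, err)
}
```

Because the callback runs once per document, a single file can hold several objects and each one is still created individually.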

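ValidateSchema validates the fields parsed from the file. For example, if spec is deliberately misspelled as aspec:

kubectl apply -f rule.yaml

error: error validating "rule.yaml": error validating data: [ValidationError(PrometheusRule): Unknown field "aspec" in

infoForData converts the parsed result into an Info object

It creates the Info; its Object field is the Kubernetes object

Code location: staging\src\k8s.io\cli-runtime\pkg\resource\mapper.go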

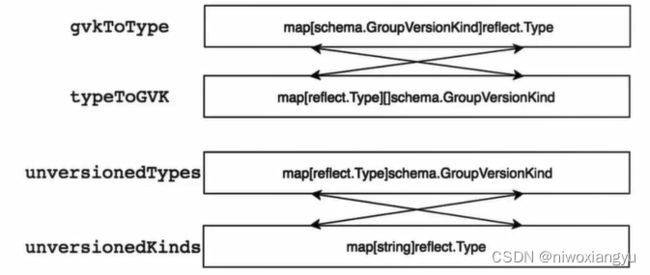

m.decoder.Decode parses out the object and the gvk

object is the Kubernetes object

gvk is short for Group/Version/Kind
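A manifest carries its Group/Version/Kind in the apiVersion and kind fields. The sketch below shows how the two fields map onto the three parts. The gvk type and parseGVK function are hypothetical simplifications and are not the apimachinery schema package.

```go
package main

import (
	"fmt"
	"strings"
)

// gvk is a hypothetical, simplified stand-in for schema.GroupVersionKind.
type gvk struct {
	Group   string
	Version string
	Kind    string
}

// parseGVK splits an apiVersion such as "apps/v1" into group and version.
// Core resources such as Pod use a bare version ("v1") and an empty group.
func parseGVK(apiVersion, kind string) (gvk, error) {
	if apiVersion == "" || kind == "" {
		return gvk{}, fmt.Errorf("apiVersion and kind must both be set")
	}
	switch parts := strings.Split(apiVersion, "/"); len(parts) {
	case 1:
		return gvk{Version: parts[0], Kind: kind}, nil
	case 2:
		return gvk{Group: parts[0], Version: parts[1], Kind: kind}, nil
	default:
		return gvk{}, fmt.Errorf("unexpected apiVersion %q", apiVersion)
	}
}

func main() {
	for _, in := range [][2]string{{"v1", "Pod"}, {"apps/v1", "Deployment"}} {
		g, err := parseGVK(in[0], in[1])
		fmt.Printf("%+v %v\n", g, err)
	}
}
```

The group and kind are what the RESTMapping lookup in infoForData receives, together with the version, to find the REST resource for the object.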

// InfoForData creates an Info object for the given data. An error is returned

// if any of the decoding or client lookup steps fail. Name and namespace will be

// set into Info if the mapping's MetadataAccessor can retrieve them.

func (m *mapper) infoForData(data []byte, source string) (*Info, error) {

obj, gvk, err := m.decoder.Decode(data, nil, nil)

if err != nil {

return nil, fmt.Errorf("unable to decode %q: %v", source, err)

}

name, _ := metadataAccessor.Name(obj)

namespace, _ := metadataAccessor.Namespace(obj)

resourceVersion, _ := metadataAccessor.ResourceVersion(obj)

ret := &Info{

Source: source,

Namespace: namespace,

Name: name,

ResourceVersion: resourceVersion,

Object: obj,

}

if m.localFn == nil || !m.localFn() {

restMapper, err := m.restMapperFn()

if err != nil {

return nil, err

}

mapping, err := restMapper.RESTMapping(gvk.GroupKind(), gvk.Version)

if err != nil {

if _, ok := err.(*meta.NoKindMatchError); ok {

return nil, fmt.Errorf("resource mapping not found for name: %q namespace: %q from %q: %v\nensure CRDs are installed first",

name, namespace, source, err)

}

return nil, fmt.Errorf("unable to recognize %q: %v", source, err)

}

ret.Mapping = mapping

client, err := m.clientFn(gvk.GroupVersion())

if err != nil {

return nil, fmt.Errorf("unable to connect to a server to handle %q: %v", mapping.Resource, err)

}

ret.Client = client

}

return ret, nil

}

The k8s Object explained

Object: the k8s object

Docs: https://kubernetes.io/zh/docs/concepts/overview/working-with-objects/kubernetes-objects/

Location: staging\src\k8s.io\apimachinery\pkg\runtime\interfaces.go

// Object interface must be supported by all API types registered with Scheme. Since objects in a scheme are

// expected to be serialized to the wire, the interface an Object must provide to the Scheme allows

// serializers to set the kind, version, and group the object is represented as. An Object may choose

// to return a no-op ObjectKindAccessor in cases where it is not expected to be serialized.

type Object interface {

GetObjectKind() schema.ObjectKind

DeepCopyObject() Object

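}

Purpose

Kubernetes objects are persistent entities.

Kubernetes uses these entities to represent the state of the whole cluster. Specifically, they describe:

Which containerized applications are running (and on which nodes)

The resources available to those applications

The policies that govern how those applications behave, such as restart, upgrade, and fault-tolerance policies

Creating, modifying, or deleting a Kubernetes object requires the Kubernetes API.

Desired state

A Kubernetes object is a "record of intent": once you create the object, the Kubernetes system works continuously to make sure the object exists.

By creating an object, you are in effect telling the Kubernetes system what you want the cluster's workload to look like. This is the cluster's desired state.

Object spec and status

Almost every Kubernetes object contains two nested object fields that govern its configuration: the object spec and the object status.

For objects that have a spec, you must set it when you create the object, describing the characteristics you want the object to have: its desired state.

The status describes the current state of the object, and it is set and updated by the Kubernetes system and its components. The Kubernetes control plane continually and actively manages each object's actual state so that it matches the desired state.

Required fields in the YAML

In the .yaml file for the Kubernetes object you want to create, set the following fields:

apiVersion - the version of the Kubernetes API used to create the object

kind - the kind of object you want to create

metadata - data that helps uniquely identify the object, including a name string, a UID, and an optional namespace

spec - the state you want the object to have

Do creates a batch of visitors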

// Do returns a Result object with a Visitor for the resources identified by the Builder.

// The visitor will respect the error behavior specified by ContinueOnError. Note that stream

// inputs are consumed by the first execution - use Infos() or Object() on the Result to capture a list

// for further iteration.

func (b *Builder) Do() *Result {

r := b.visitorResult()

r.mapper = b.Mapper()

if r.err != nil {

return r

}

if b.flatten {

r.visitor = NewFlattenListVisitor(r.visitor, b.objectTyper, b.mapper)

}

helpers := []VisitorFunc{}

if b.defaultNamespace {

helpers = append(helpers, SetNamespace(b.namespace))

}

if b.requireNamespace {

helpers = append(helpers, RequireNamespace(b.namespace))

}

helpers = append(helpers, FilterNamespace)

if b.requireObject {

helpers = append(helpers, RetrieveLazy)

}

if b.continueOnError {

r.visitor = ContinueOnErrorVisitor{Visitor: r.visitor}

}

r.visitor = NewDecoratedVisitor(r.visitor, helpers...)

return r

}

helpers is a list of VisitorFunc values.

One example is RequireNamespace, which validates the namespace:

// RequireNamespace will either set a namespace if none is provided on the

// Info object, or if the namespace is set and does not match the provided

// value, returns an error. This is intended to guard against administrators

// accidentally operating on resources outside their namespace.

func RequireNamespace(namespace string) VisitorFunc {

return func(info *Info, err error) error {

if err != nil {

return err

}

if !info.Namespaced() {

return nil

}

if len(info.Namespace) == 0 {

info.Namespace = namespace

UpdateObjectNamespace(info, nil)

return nil

}

if info.Namespace != namespace {

return fmt.Errorf("the namespace from the provided object %q does not match the namespace %q. You must pass '--namespace=%s' to perform this operation.", info.Namespace, namespace, info.Namespace)

}

return nil

}

}

Creating the decorated visitor, DecoratedVisitor

if b.continueOnError {

r.visitor = ContinueOnErrorVisitor{Visitor: r.visitor}

}

r.visitor = NewDecoratedVisitor(r.visitor, helpers...)

The corresponding Visit method

// Visit implements Visitor

func (v DecoratedVisitor) Visit(fn VisitorFunc) error {

return v.visitor.Visit(func(info *Info, err error) error {

if err != nil {

return err

}

for i := range v.decorators {

if err := v.decorators[i](info, nil); err != nil {

return err

}

}

return fn(info, nil)

})

}

Calling the visitor

Visitor call chain analysis

The outer layer calls the result.Visit method and passes in a func:

err = r.Visit(func(info *resource.Info, err error) error {

if err != nil {

return err

}

if err := util.CreateOrUpdateAnnotation(cmdutil.GetFlagBool(cmd, cmdutil.ApplyAnnotationsFlag), info.Object, scheme.DefaultJSONEncoder()); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

if err := o.Recorder.Record(info.Object); err != nil {

klog.V(4).Infof("error recording current command: %v", err)

}

if o.DryRunStrategy != cmdutil.DryRunClient {

if o.DryRunStrategy == cmdutil.DryRunServer {

if err := o.DryRunVerifier.HasSupport(info.Mapping.GroupVersionKind); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

}

obj, err := resource.

NewHelper(info.Client, info.Mapping).

DryRun(o.DryRunStrategy == cmdutil.DryRunServer).

WithFieldManager(o.fieldManager).

WithFieldValidation(o.ValidationDirective).

Create(info.Namespace, true, info.Object)

if err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

info.Refresh(obj, true)

}

count++

return o.PrintObj(info.Object)

})

The Visit method that Result implements for the Visitor interface

// Visit implements the Visitor interface on the items described in the Builder.

// Note that some visitor sources are not traversable more than once, or may

// return different results. If you wish to operate on the same set of resources

// multiple times, use the Infos() method.

func (r *Result) Visit(fn VisitorFunc) error {

if r.err != nil {

return r.err

}

err := r.visitor.Visit(fn)

return utilerrors.FilterOut(err, r.ignoreErrors...)

}

In the end, the call lands on the Visit method of each visitor registered earlier.
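The chain described above (a source visitor, wrapped by a decorator that runs helper functions, finally calling the caller's function) can be sketched as follows. The types are pared-down stand-ins for the ones in cli-runtime; listVisitor, setNamespace, and requireNamespace here are simplified, hypothetical versions written for this example.

```go
package main

import "fmt"

// Info is a pared-down stand-in for resource.Info.
type Info struct {
	Name      string
	Namespace string
}

// VisitorFunc is called once per visited Info.
type VisitorFunc func(info *Info, err error) error

// Visitor walks a set of Infos and calls fn for each one.
type Visitor interface {
	Visit(fn VisitorFunc) error
}

// listVisitor visits a fixed list of Infos (a hypothetical source).
type listVisitor []*Info

func (l listVisitor) Visit(fn VisitorFunc) error {
	for _, info := range l {
		if err := fn(info, nil); err != nil {
			return err
		}
	}
	return nil
}

// decoratedVisitor runs every decorator before the caller's function.
type decoratedVisitor struct {
	visitor    Visitor
	decorators []VisitorFunc
}

func (v decoratedVisitor) Visit(fn VisitorFunc) error {
	return v.visitor.Visit(func(info *Info, err error) error {
		if err != nil {
			return err
		}
		for _, decorate := range v.decorators {
			if err := decorate(info, nil); err != nil {
				return err
			}
		}
		return fn(info, nil)
	})
}

// setNamespace fills in a default namespace when none is set.
func setNamespace(ns string) VisitorFunc {
	return func(info *Info, err error) error {
		if err != nil {
			return err
		}
		if info.Namespace == "" {
			info.Namespace = ns
		}
		return nil
	}
}

// requireNamespace rejects objects whose namespace differs from ns.
func requireNamespace(ns string) VisitorFunc {
	return func(info *Info, err error) error {
		if err != nil {
			return err
		}
		if info.Namespace != ns {
			return fmt.Errorf("namespace %q does not match %q", info.Namespace, ns)
		}
		return nil
	}
}

func main() {
	v := decoratedVisitor{
		visitor:    listVisitor{{Name: "nginx"}, {Name: "redis", Namespace: "default"}},
		decorators: []VisitorFunc{setNamespace("default"), requireNamespace("default")},
	}
	err := v.Visit(func(info *Info, err error) error {
		fmt.Printf("%s/%s\n", info.Namespace, info.Name)
		return err
	})
	fmt.Println("err:", err)
}
```

Each layer only knows about the Visitor it wraps, which is why Builder.Do can stack helpers without the source visitors being aware of them.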

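Analysis of the outer VisitorFunc

If an error is passed in, return it.

DryRunStrategy is the dry-run strategy:

The default is None, meaning no dry run.

client means a client-side dry run: no request is sent to the server.

server means a server-side dry run: the request is sent, but changes to state are not persisted.

Finally, Create is called to create the resource, and o.PrintObj(info.Object) prints the result.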
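The branch on DryRunStrategy can be reduced to two questions: is a request sent at all, and does it carry the server-side dry-run option? A minimal sketch, with DryRunStrategy redeclared locally as a stand-in for the type in cmdutil and plan/describe as hypothetical helpers:

```go
package main

import "fmt"

// DryRunStrategy is a local stand-in for cmdutil.DryRunStrategy.
type DryRunStrategy int

const (
	DryRunNone DryRunStrategy = iota
	DryRunClient
	DryRunServer
)

// plan reports whether a request is sent to the API server and, if so,
// whether it carries the server-side dry-run option.
func plan(s DryRunStrategy) (sendRequest, serverDryRun bool) {
	if s == DryRunClient {
		return false, false // client dry run: nothing leaves the process
	}
	return true, s == DryRunServer
}

// describe renders the plan for a strategy as text.
func describe(s DryRunStrategy) string {
	send, dry := plan(s)
	return fmt.Sprintf("send=%t serverDryRun=%t", send, dry)
}

func main() {
	for _, s := range []DryRunStrategy{DryRunNone, DryRunClient, DryRunServer} {
		fmt.Println(describe(s))
	}
}
```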

func(info *resource.Info, err error) error {

if err != nil {

return err

}

if err := util.CreateOrUpdateAnnotation(cmdutil.GetFlagBool(cmd, cmdutil.ApplyAnnotationsFlag), info.Object, scheme.DefaultJSONEncoder()); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

if err := o.Recorder.Record(info.Object); err != nil {

klog.V(4).Infof("error recording current command: %v", err)

}

if o.DryRunStrategy != cmdutil.DryRunClient {

if o.DryRunStrategy == cmdutil.DryRunServer {

if err := o.DryRunVerifier.HasSupport(info.Mapping.GroupVersionKind); err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

}

obj, err := resource.

NewHelper(info.Client, info.Mapping).

DryRun(o.DryRunStrategy == cmdutil.DryRunServer).

WithFieldManager(o.fieldManager).

WithFieldValidation(o.ValidationDirective).

Create(info.Namespace, true, info.Object)

if err != nil {

return cmdutil.AddSourceToErr("creating", info.Source, err)

}

info.Refresh(obj, true)

}

count++

return o.PrintObj(info.Object)

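}

2.8 Summary of kubectl functionality and objects

Responsibilities of kubectl

Its main job is to process what the user submits (command-line arguments, yaml files, and so on),

organize that input into a data structure,

and send it to the API Server.

How the kubectl code works

cobra collects information from the command line and from yaml files.

The Builder pattern turns that information into a series of resources.

The Visitor pattern then iterates over these resources, parsing and validating each kind of resource object.

RESTClient sends the Object to kube-apiserver.

kubectl architecture diagram

create flow

Core objects in kubectl

RESTClient: the RESTful client that talks to the k8s API

Location: D:\Workspace\Go\kubernetes\staging\src\k8s.io\cli-runtime\pkg\resource\interfaces.go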

type RESTClientGetter interface {

ToRESTConfig() (*rest.Config, error)

ToDiscoveryClient() (discovery.CachedDiscoveryInterface, error)

ToRESTMapper() (meta.RESTMapper, error)

}

Object: the k8s object

Docs: https://kubernetes.io/zh/docs/concepts/overview/working-with-objects/kubernetes-objects/

staging\src\k8s.io\cli-runtime\pkg\resource\interfaces.go

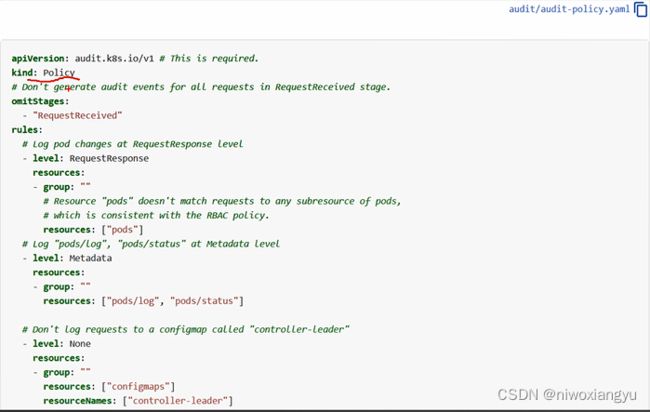

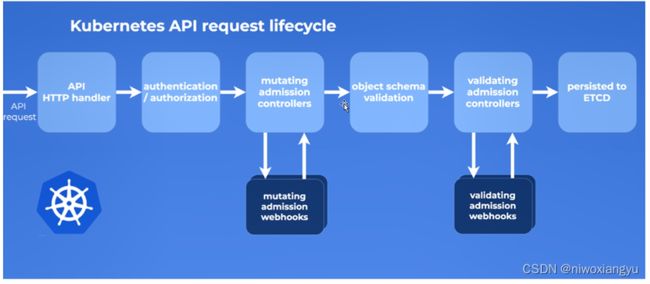

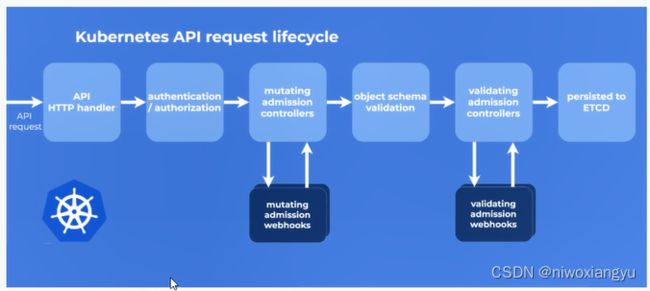

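Chapter 3 Permissions in the apiserver

3.1 The main apiserver startup flow

Key points of this section:

The apiserver startup flow

CreateServerChain creates 3 servers

CreateKubeAPIServer creates kubeAPIServer, the core API service, which covers the common Pod/Deployment/Service resources

createAPIExtensionsServer creates apiExtensionsServer, the API extension service, mainly for CRDs

createAggregatorServer creates aggregatorServer, the API aggregation service, which forwards requests for aggregated APIs such as metrics

The apiserver startup flow

Entry point

Location: D:\Workspace\Go\kubernetes\cmd\kube-apiserver\apiserver.go

Initialize the apiserver cmd and execute it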

func main() {

command := app.NewAPIServerCommand()

code := cli.Run(command)

os.Exit(code)

}

newCmd execution flow

Earlier we covered the order in which cobra executes its hook funcs:

// The *Run functions are executed in the following order:

// * PersistentPreRun()

// * PreRun()

// * Run()

// * PostRun()

// * PersistentPostRun()

// All functions get the same args, the arguments after the command name.

PersistentPreRunE: preparation

Sets the WarningHandler

PersistentPreRunE: func(*cobra.Command, []string) error {

// silence client-go warnings.

// kube-apiserver loopback clients should not log self-issued warnings.

rest.SetDefaultWarningHandler(rest.NoWarnings{})

return nil

},

RunE analysis: preparation

Print version information

verflag.PrintAndExitIfRequested()

...

// PrintAndExitIfRequested will check if the -version flag was passed

// and, if so, print the version and exit.

func PrintAndExitIfRequested() {

if *versionFlag == VersionRaw {

fmt.Printf("%#v\n", version.Get())

os.Exit(0)

} else if *versionFlag == VersionTrue {

fmt.Printf("%s %s\n", programName, version.Get())

os.Exit(0)

}

}

Print the command-line flags

// PrintFlags logs the flags in the flagset

func PrintFlags(flags *pflag.FlagSet) {

flags.VisitAll(func(flag *pflag.Flag) {

klog.V(1).Infof("FLAG: --%s=%q", flag.Name, flag.Value)

})

}

Check the insecure port

delete this check after insecure flags removed in v1.24

Complete sets the default values

// set default options

completedOptions, err := Complete(s)

if err != nil {

return err

}

Validate the command-line options

// validate options

if errs := completedOptions.Validate(); len(errs) != 0 {

return utilerrors.NewAggregate(errs)

}
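The pattern in the snippet above, where each option group returns a slice of errors and the caller aggregates them into a single error, can be sketched with the standard library alone. The options struct and the aggregate helper below are hypothetical and much smaller than ServerRunOptions and utilerrors.NewAggregate; only the shape of the pattern is the point.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// options is a hypothetical, much smaller stand-in for ServerRunOptions.
type options struct {
	APIServerCount int
	EtcdServers    []string
	EtcdOverrides  []string // each entry has the form group/resource#servers
}

// validate collects every problem instead of stopping at the first one.
func (o options) validate() []error {
	var errs []error
	if o.APIServerCount <= 0 {
		errs = append(errs, fmt.Errorf("--apiserver-count should be a positive number, but value '%d' provided", o.APIServerCount))
	}
	if len(o.EtcdServers) == 0 {
		errs = append(errs, errors.New("--etcd-servers must be specified"))
	}
	for _, override := range o.EtcdOverrides {
		tokens := strings.Split(override, "#")
		if len(tokens) != 2 || len(strings.Split(tokens[0], "/")) != 2 {
			errs = append(errs, fmt.Errorf("invalid --etcd-servers-overrides entry %q", override))
		}
	}
	return errs
}

// aggregate folds a slice of errors into one error, or nil when the slice is empty.
func aggregate(errs []error) error {
	if len(errs) == 0 {
		return nil
	}
	msgs := make([]string, 0, len(errs))
	for _, e := range errs {
		msgs = append(msgs, e.Error())
	}
	return errors.New(strings.Join(msgs, "; "))
}

// check validates the options and returns a single combined error.
func check(o options) error {
	return aggregate(o.validate())
}

func main() {
	fmt.Println(check(options{}))
	fmt.Println(check(options{APIServerCount: 1, EtcdServers: []string{"http://127.0.0.1:2379"}}))
}
```

Reporting all problems at once means an operator can fix every bad flag in a single restart instead of discovering them one by one.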

cmd\kube-apiserver\app\options\validation.go

// Validate checks ServerRunOptions and return a slice of found errs.

func (s *ServerRunOptions) Validate() []error {

var errs []error

if s.MasterCount <= 0 {

errs = append(errs, fmt.Errorf("--apiserver-count should be a positive number, but value '%d' provided", s.MasterCount))

}

errs = append(errs, s.Etcd.Validate()...)

errs = append(errs, validateClusterIPFlags(s)...)

errs = append(errs, validateServiceNodePort(s)...)

errs = append(errs, validateAPIPriorityAndFairness(s)...)

errs = append(errs, s.SecureServing.Validate()...)

errs = append(errs, s.Authentication.Validate()...)

errs = append(errs, s.Authorization.Validate()...)

errs = append(errs, s.Audit.Validate()...)

errs = append(errs, s.Admission.Validate()...)

errs = append(errs, s.APIEnablement.Validate(legacyscheme.Scheme, apiextensionsapiserver.Scheme, aggregatorscheme.Scheme)...)

errs = append(errs, validateTokenRequest(s)...)

errs = append(errs, s.Metrics.Validate()...)

errs = append(errs, validateAPIServerIdentity(s)...)

return errs

}

As an example, here is the etcd validation, in src\k8s.io\apiserver\pkg\server\options\etcd.go

func (s *EtcdOptions) Validate() []error {

if s == nil {

return nil

}

allErrors := []error{}

if len(s.StorageConfig.Transport.ServerList) == 0 {

allErrors = append(allErrors, fmt.Errorf("--etcd-servers must be specified"))

}

if s.StorageConfig.Type != storagebackend.StorageTypeUnset && !storageTypes.Has(s.StorageConfig.Type) {

allErrors = append(allErrors, fmt.Errorf("--storage-backend invalid, allowed values: %s. If not specified, it will default to 'etcd3'", strings.Join(storageTypes.List(), ", ")))

}

for _, override := range s.EtcdServersOverrides {

tokens := strings.Split(override, "#")

if len(tokens) != 2 {

allErrors = append(allErrors, fmt.Errorf("--etcd-servers-overrides invalid, must be of format: group/resource#servers, where servers are URLs, semicolon separated"))

continue

}

apiresource := strings.Split(tokens[0], "/")

if len(apiresource) != 2 {

allErrors = append(allErrors, fmt.Errorf("--etcd-servers-overrides invalid, must be of format: group/resource#servers, where servers are URLs, semicolon separated"))

continue

}

}

return allErrors

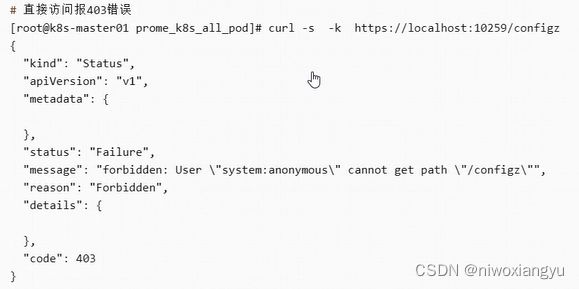

}kubectl get pod -n kube-system

ps -ef |grep apiserver

ps -ef |grep apiserver |grep etcd真正的Run函数

Run(completeOptions, genericapiserver.SetupSignalHandler())completedOptions代表ServerRunOptions

第二个参数解析stopCh

在底层的Run函数定义上可以看到第二个参数类型是一个只读的stop chan, stopCh <- chan struct{}

对应的genericapiserver.SetupSignalHandler()解析

var onlyOneSignalHandler = make(chan struct{})

var shutdownHandler chan os.Signal

// SetupSignalHandler registered for SIGTERM and SIGINT. A stop channel is returned

// which is closed on one of these signals. If a second signal is caught, the program

// is terminated with exit code 1.

// Only one of SetupSignalContext and SetupSignalHandler should be called, and only can

// be called once.

func SetupSignalHandler() <-chan struct{} {

return SetupSignalContext().Done()

}

// SetupSignalContext is same as SetupSignalHandler, but a context.Context is returned.

// Only one of SetupSignalContext and SetupSignalHandler should be called, and only can

// be called once.

func SetupSignalContext() context.Context {

close(onlyOneSignalHandler) // panics when called twice

shutdownHandler = make(chan os.Signal, 2)

ctx, cancel := context.WithCancel(context.Background())

signal.Notify(shutdownHandler, shutdownSignals...)

go func() {

<-shutdownHandler

cancel()

<-shutdownHandler

os.Exit(1) // second signal. Exit directly.

}()

return ctx

}

As shown above, the return value is the result of a context's Done method, which is a <-chan struct{}.
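The idea of turning OS signals into a stop channel can be sketched as follows. This is a simplified sketch, not the apiserver code: it leaves out the second-signal os.Exit(1) path, and notifyChannel, stopOnSignal, and simulateSignal are hypothetical helpers. The signal is simulated so the program terminates on its own.

```go
package main

import (
	"context"
	"os"
	"os/signal"
	"syscall"
)

// notifyChannel registers for SIGINT and SIGTERM. The buffer of 2 leaves room
// for a second signal, as in SetupSignalContext.
func notifyChannel() chan os.Signal {
	ch := make(chan os.Signal, 2)
	signal.Notify(ch, os.Interrupt, syscall.SIGTERM)
	return ch
}

// stopOnSignal returns a receive-only channel that is closed after the first
// value arrives on sigCh. It is the Done channel of a cancellable context.
func stopOnSignal(sigCh <-chan os.Signal) <-chan struct{} {
	ctx, cancel := context.WithCancel(context.Background())
	go func() {
		<-sigCh
		cancel()
	}()
	return ctx.Done()
}

// simulateSignal injects a fake SIGINT so the sketch finishes without a real signal.
func simulateSignal(sigCh chan<- os.Signal) {
	sigCh <- os.Interrupt
}

func main() {
	sigCh := notifyChannel()
	stopCh := stopOnSignal(sigCh)
	simulateSignal(sigCh)
	<-stopCh // unblocks once cancel() has closed the Done channel
	os.Stdout.WriteString("stop channel closed, shutting down\n")
}
```

Because the channel is closed rather than written to, any number of goroutines can wait on stopCh and all of them are released together.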

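CreateServerChain creates 3 servers

CreateKubeAPIServer creates kubeAPIServer, the core API service, which covers the common Pod/Deployment/Service resources

createAPIExtensionsServer creates apiExtensionsServer, the API extension service, mainly for CRDs

createAggregatorServer creates aggregatorServer, the API aggregation service, which forwards requests for aggregated APIs such as metrics

Then they are run

This section only walks through the flow briefly; the details come later.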

// Run runs the specified APIServer. This should never exit.

func Run(completeOptions completedServerRunOptions, stopCh <-chan struct{}) error {

// To help debugging, immediately log version

klog.Infof("Version: %+v", version.Get())

klog.InfoS("Golang settings", "GOGC", os.Getenv("GOGC"), "GOMAXPROCS", os.Getenv("GOMAXPROCS"), "GOTRACEBACK", os.Getenv("GOTRACEBACK"))

server, err := CreateServerChain(completeOptions, stopCh)

if err != nil {

return err

}

prepared, err := server.PrepareRun()

if err != nil {

return err

}

return prepared.Run(stopCh)

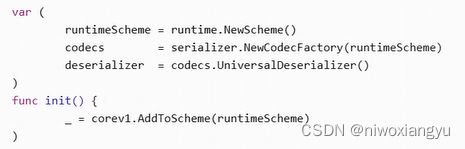

}3.2 API核心服务通用配置genericConifg的准备工作

本节重点总结

API核心服务需要的通用配置工作中的准备工作

创建和节点通信的结构体proxyTransport,使用缓存长连接来提高效率

创建clientset

初始化etcd存储

CreateKubeAPIServerConfig创建所需配置解析

D:\Workspace\Go\src\github.com\kubernetes\kubernetes\cmd\kube-apiserver\app\server.go

创建和节点通信的结构体proxyTransport,使用缓存长连接提高效率

proxyTransport := CreateProxyTransport()http.transport功能简介

transport的主要功能其实就是缓存了长连接

用于大量http请求场景下的连接复用

减少发送请求时TCP(TLS)连接建立的时间损耗

Create the generic configuration genericConfig

genericConfig, versionedInformers, serviceResolver, pluginInitializers, admissionPostStartHook, storageFactory, err := buildGenericConfig(s.ServerRunOptions, proxyTransport)

The many ApplyTo calls

There are many ApplyTo methods, and each has a matching AddFlags method that registers the command-line flags.

First, create genericConfig

genericConfig = genericapiserver.NewConfig(legacyscheme.Codecs)

Take the ApplyTo that checks the HTTPS configuration as an example

if lastErr = s.SecureServing.ApplyTo(&genericConfig.SecureServing, &genericConfig.LoopbackClientConfig); lastErr != nil {

return

}

This calls ApplyTo on SecureServingOptions, which has a matching AddFlags method that registers the command-line flags. It is located in

staging\src\k8s.io\apiserver\pkg\server\options\serving.go

func (s *SecureServingOptions) AddFlags(fs *pflag.FlagSet) {

if s == nil {

return

}

fs.IPVar(&s.BindAddress, "bind-address", s.BindAddress, ""+

"The IP address on which to listen for the --secure-port port. The "+

"associated interface(s) must be reachable by the rest of the cluster, and by CLI/web "+

"clients. If blank or an unspecified address (0.0.0.0 or ::), all interfaces will be used.")

desc := "The port on which to serve HTTPS with authentication and authorization."

if s.Required {

desc += " It cannot be switched off with 0."

} else {

desc += " If 0, don't serve HTTPS at all."

}

fs.IntVar(&s.BindPort, "secure-port", s.BindPort, desc)

Initialize the etcd storage

Create the storage factory configuration

storageFactoryConfig := kubeapiserver.NewStorageFactoryConfig()

storageFactoryConfig.APIResourceConfig = genericConfig.MergedResourceConfig

completedStorageFactoryConfig, err := storageFactoryConfig.Complete(s.Etcd)

if err != nil {

lastErr = err

return

}

Initialize the storage factory

storageFactory, lastErr = completedStorageFactoryConfig.New()

if lastErr != nil {

return

}

Apply the storage factory to the server config; later, handles for operating on etcd can be obtained through RESTOptionsGetter

if lastErr = s.Etcd.ApplyWithStorageFactoryTo(storageFactory, genericConfig); lastErr != nil {

return

}

func (s *EtcdOptions) ApplyWithStorageFactoryTo(factory serverstorage.StorageFactory, c *server.Config) error {

if err := s.addEtcdHealthEndpoint(c); err != nil {

return err

}

// use the StorageObjectCountTracker interface instance from server.Config

s.StorageConfig.StorageObjectCountTracker = c.StorageObjectCountTracker

c.RESTOptionsGetter = &StorageFactoryRestOptionsFactory{Options: *s, StorageFactory: factory}

return nil

}

addEtcdHealthEndpoint creates the etcd health check

func (s *EtcdOptions) addEtcdHealthEndpoint(c *server.Config) error {

healthCheck, err := storagefactory.CreateHealthCheck(s.StorageConfig)

if err != nil {

return err

}

c.AddHealthChecks(healthz.NamedCheck("etcd", func(r *http.Request) error {

return healthCheck()

}))

if s.EncryptionProviderConfigFilepath != "" {

kmsPluginHealthzChecks, err := encryptionconfig.GetKMSPluginHealthzCheckers(s.EncryptionProviderConfigFilepath)

if err != nil {

return err

}

c.AddHealthChecks(kmsPluginHealthzChecks...)

}

return nil

}

CreateHealthCheck shows that only the etcd v3 API is supported

// CreateHealthCheck creates a healthcheck function based on given config.

func CreateHealthCheck(c storagebackend.Config) (func() error, error) {

switch c.Type {

case storagebackend.StorageTypeETCD2:

return nil, fmt.Errorf("%s is no longer a supported storage backend", c.Type)

case storagebackend.StorageTypeUnset, storagebackend.StorageTypeETCD3:

return newETCD3HealthCheck(c)

default:

return nil, fmt.Errorf("unknown storage type: %s", c.Type)

}

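}

Use protobufs for internal communication and disable compression

The internal network is fast, so there is no point spending CPU on compressing and decompressing just to save bandwidth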

// Use protobufs for self-communication.

// Since not every generic apiserver has to support protobufs, we

// cannot default to it in generic apiserver and need to explicitly

// set it in kube-apiserver.

genericConfig.LoopbackClientConfig.ContentConfig.ContentType = "application/vnd.kubernetes.protobuf"

// Disable compression for self-communication, since we are going to be

// on a fast local network

genericConfig.LoopbackClientConfig.DisableCompression = true

Create the clientset

kubeClientConfig := genericConfig.LoopbackClientConfig

clientgoExternalClient, err := clientgoclientset.NewForConfig(kubeClientConfig)

if err != nil {

lastErr = fmt.Errorf("failed to create real external clientset: %v", err)

return

}

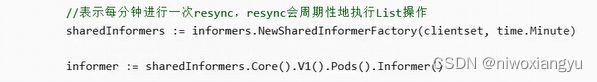

versionedInformers = clientgoinformers.NewSharedInformerFactory(clientgoExternalClient, 10*time.Minute)

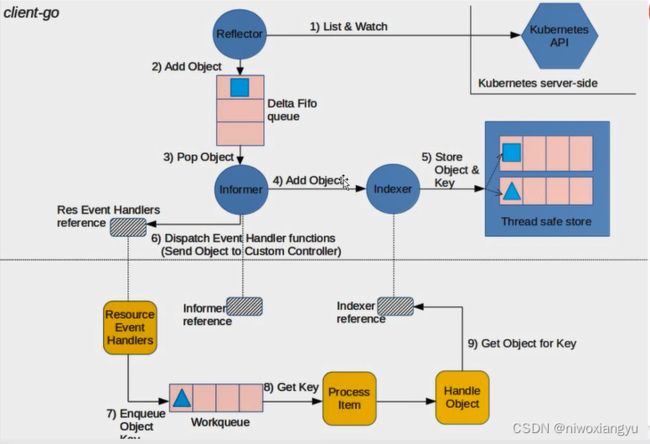

versionedInformers is the client-go informer factory, used to list and watch k8s objects

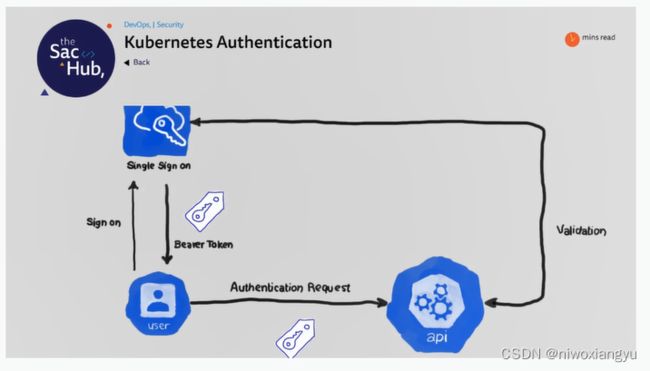

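3.3 Authentication in the core API service

The purpose of Authentication

Verify who you are: confirm that "you are really you".

There are several methods, such as Client Certificates, Password, Plain Tokens, Bootstrap Tokens, and JWT Tokens.

Kubernetes uses authentication plugins to authenticate API requests with the following strategies:

- Client certificates

- Bearer tokens

- An authenticating proxy

- HTTP basic auth

Rules of union authentication

- If an authenticator returns an error and FailOnError is set, return immediately: authentication failed. Otherwise the error is recorded and the next authenticator is tried.

- If an authenticator reports ok, authentication succeeded; return right away without running the remaining authenticators.

- If no authenticator reports ok, authentication failed.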


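Docs: https://kubernetes.io/zh/docs/reference/access-authn-authz/authentication/

All Kubernetes clusters have two categories of users: service accounts managed by Kubernetes, and normal users.

Authentication therefore revolves around these two categories.

Authentication strategies

Kubernetes uses authentication plugins to authenticate API requests through client certificates, bearer tokens, an authenticating proxy, or HTTP basic auth.

As HTTP requests are made to the API server, plugins attempt to associate the following attributes with the request:

- Username: a string that identifies the end user. Common values might be kube-admin or jane@example.com.

- UID: a string that identifies the end user and aims to be more consistent and unique than the username.

- Groups: a set of strings, each indicating that the user belongs to a named logical collection of users. Common values might be system:masters or devops-team.

- Extra fields: a map of strings to lists of strings that holds additional information authorizers may find useful.

You can enable several authentication methods at once, and you should usually use at least two:

- Service account tokens for service accounts

- At least one other method for user authentication

When multiple authenticator modules are enabled, the first module to successfully authenticate the request short-circuits evaluation. The API server does not guarantee the order in which authenticators run.

The system:authenticated group is added to the group list of every authenticated user.

Integration with other authentication protocols (LDAP, SAML, Kerberos, alternate x509 schemes, and so on) can be accomplished with an authenticating proxy or an authentication webhook.

Code walkthrough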

D:\Workspace\Go\src\github.com\kubernetes\kubernetes\cmd\kube-apiserver\app\server.go

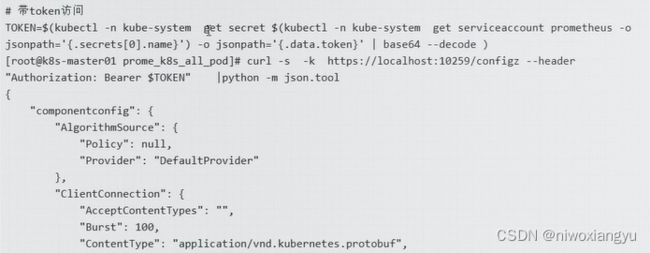

In buildGenericConfig, which generates the generic configuration before the server is built:

// Authentication.ApplyTo requires already applied OpenAPIConfig and EgressSelector if present

if lastErr = s.Authentication.ApplyTo(&genericConfig.Authentication, genericConfig.SecureServing, genericConfig.EgressSelector, genericConfig.OpenAPIConfig, genericConfig.OpenAPIV3Config, clientgoExternalClient, versionedInformers); lastErr != nil {

return

}

The actual Authentication initialization

D:\Workspace\Go\src\github.com\kubernetes\kubernetes\pkg\kubeapiserver\options\authentication.go

authInfo.Authenticator, openAPIConfig.SecurityDefinitions, err = authenticatorConfig.New()

The New code creates the authenticator instance. It supports several authentication methods: request-header authentication, auth-file authentication, CA certificate authentication, and bearer token authentication.

D:\Workspace\Go\src\github.com\kubernetes\kubernetes\pkg\kubeapiserver\authenticator\config.go

Core variable 1: tokenAuthenticators []authenticator.Token, the bearer token authenticators

// Token checks a string value against a backing authentication store and

// returns a Response or an error if the token could not be checked.

type Token interface {

AuthenticateToken(ctx context.Context, token string) (*Response, bool, error)

}

Authenticators keep being appended to the slice, a union object is created at the end, and requests ultimately go through unionAuthTokenHandler.AuthenticateToken

// Union the token authenticators

tokenAuth := tokenunion.New(tokenAuthenticators...)

// AuthenticateToken authenticates the token using a chain of authenticator.Token objects.

func (authHandler *unionAuthTokenHandler) AuthenticateToken(ctx context.Context, token string) (*authenticator.Response, bool, error) {

var errlist []error

for _, currAuthRequestHandler := range authHandler.Handlers {

info, ok, err := currAuthRequestHandler.AuthenticateToken(ctx, token)

if err != nil {

if authHandler.FailOnError {

return info, ok, err

}

errlist = append(errlist, err)

continue

}

if ok {

return info, ok, err

}

}

return nil, false, utilerrors.NewAggregate(errlist)

}

Core variable 2: authenticator.Request, the interface for authenticating a user request; AuthenticateRequest is the method that performs the authentication

// Request attempts to extract authentication information from a request and

// returns a Response or an error if the request could not be checked.

type Request interface {

AuthenticateRequest(req *http.Request) (*Response, bool, error)

}

Authenticators are then appended to the slice one after another, for example x509 authentication

// X509 methods

if config.ClientCAContentProvider != nil {

certAuth := x509.NewDynamic(config.ClientCAContentProvider.VerifyOptions, x509.CommonNameUserConversion)

authenticators = append(authenticators, certAuth)

}

The unionAuthTokenHandler from above is added to the chain as well

authenticators = append(authenticators, bearertoken.New(tokenAuth), websocket.NewProtocolAuthenticator(tokenAuth))

Finally, a union object, unionAuthRequestHandler, is created

authenticator := union.New(authenticators...)

In the end, unionAuthRequestHandler.AuthenticateRequest is called; it iterates over the authenticators

// AuthenticateRequest authenticates the request using a chain of authenticator.Request objects.

func (authHandler *unionAuthRequestHandler) AuthenticateRequest(req *http.Request) (*authenticator.Response, bool, error) {

var errlist []error

for _, currAuthRequestHandler := range authHandler.Handlers {

resp, ok, err := currAuthRequestHandler.AuthenticateRequest(req)

if err != nil {

if authHandler.FailOnError {

return resp, ok, err

}

errlist = append(errlist, err)

continue

}

if ok {

return resp, ok, err

}

}

return nil, false, utilerrors.NewAggregate(errlist)

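}

Code walkthrough:

- If an authenticator returns an error and FailOnError is set, return immediately: authentication failed. Otherwise the error is recorded and the next authenticator is tried.

- If an authenticator reports ok, authentication succeeded; return right away without running the remaining authenticators.

- If no authenticator reports ok, authentication failed.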
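The union rules can be tried out with a small, self-contained sketch. Request, Authenticator, union, staticToken, and broken are hypothetical, simplified stand-ins for the apiserver types; the control flow follows AuthenticateRequest shown above.

```go
package main

import (
	"errors"
	"fmt"
)

// Request is a hypothetical, simplified request that carries only a bearer token.
type Request struct{ Token string }

// Authenticator mirrors the shape of authenticator.Request: user, ok, err.
type Authenticator func(req Request) (user string, ok bool, err error)

// union tries each handler in order.
type union struct {
	handlers    []Authenticator
	failOnError bool
}

// Authenticate returns on the first handler that reports ok. An error stops
// the chain only when failOnError is set; otherwise it is collected.
func (u union) Authenticate(req Request) (string, bool, error) {
	var errs []error
	for _, handler := range u.handlers {
		user, ok, err := handler(req)
		if err != nil {
			if u.failOnError {
				return user, ok, err
			}
			errs = append(errs, err)
			continue
		}
		if ok {
			return user, true, nil
		}
	}
	if len(errs) == 0 {
		return "", false, nil
	}
	return "", false, fmt.Errorf("%d authenticator(s) failed: %v", len(errs), errs)
}

// staticToken accepts exactly one token and maps it to a user.
func staticToken(token, user string) Authenticator {
	return func(req Request) (string, bool, error) {
		if req.Token == token {
			return user, true, nil
		}
		return "", false, nil
	}
}

// broken always fails, standing in for an unreachable backend.
func broken(req Request) (string, bool, error) {
	return "", false, errors.New("backend unavailable")
}

func main() {
	u := union{handlers: []Authenticator{broken, staticToken("s3cr3t", "alice")}}
	fmt.Println(u.Authenticate(Request{Token: "s3cr3t"}))
	u.failOnError = true
	fmt.Println(u.Authenticate(Request{Token: "s3cr3t"}))
}
```

With failOnError unset, a failing authenticator does not block a later one from succeeding; with it set, the same request fails at the first error.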

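Key points of this section:

The purpose of Authentication

Kubernetes uses authentication plugins to authenticate API requests with the following strategies:

- Client certificates

- Bearer tokens

- An authenticating proxy

- HTTP basic auth

Rules of union authentication

- If an authenticator returns an error and FailOnError is set, return immediately: authentication failed. Otherwise the error is recorded and the next authenticator is tried.

- If an authenticator reports ok, authentication succeeded; return right away without running the remaining authenticators.

- If no authenticator reports ok, authentication failed.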

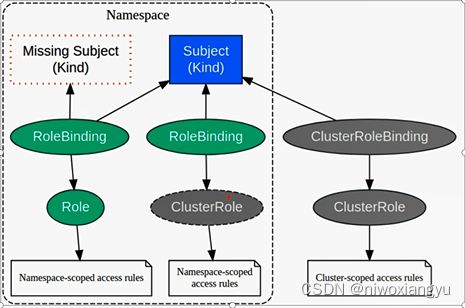

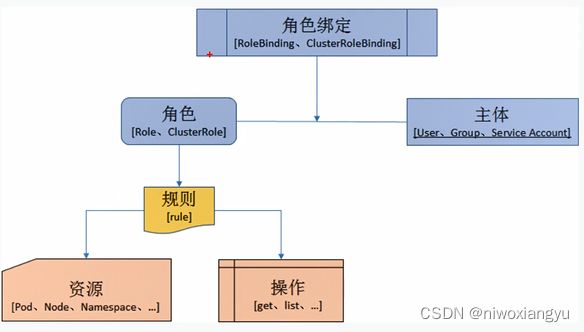

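3.4 Authorization in the core API service

Authorization

Confirm that "you are allowed to do this". Whether you are allowed is decided by the configured policies.

The 4 authorization modules

The authorization chain unionAuthzHandler

About Authorization

Kubernetes authorizes API requests using the API server.

It evaluates all of the request attributes against all policies and allows or denies the request.

All parts of an API request must be allowed by some policy in order to proceed. This means that permissions are denied by default.

When multiple authorization modules are configured, Kubernetes checks each module in order. If any module approves or denies the request, that decision is returned immediately and no other module is consulted. If all modules have no opinion on the request, the request is denied. A denied request returns HTTP status code 403.

Docs: https://kubernetes.io/zh/docs/reference/access-authn-authz/authorization/

The 4 authorization modules

Docs: https://kubernetes.io/zh/docs/reference/access-authn-authz/authorization/#authorization-modules

Node - a special-purpose authorizer that grants permissions to kubelets based on the pods they are scheduled to run. To learn more about the Node authorization mode, see Node Authorization.

ABAC - attribute-based access control (ABAC) defines an access control paradigm in which access rights are granted to users through policies that combine attributes. The policies can use any type of attribute (user attributes, resource attributes, object, environment attributes, and so on). To learn more about the ABAC mode, see ABAC Mode.

RBAC - role-based access control (RBAC) is a method of regulating access to computer or network resources based on the roles of individual users within an enterprise. In this context, access is the ability of an individual user to perform a specific task, such as viewing, creating, or modifying a file. To learn more about the RBAC mode, see RBAC Mode.

- When enabled, RBAC uses the rbac.authorization.k8s.io API group to drive authorization decisions, allowing admins to configure permission policies dynamically through the Kubernetes API.

- To enable RBAC, start the API server with --authorization-mode=RBAC.

Webhook - a webhook is an HTTP callback: an HTTP POST that occurs when something happens, a simple event notification via HTTP POST. A web application implementing webhooks POSTs a message to a URL when certain things happen. To learn more about the Webhook mode, see Webhook Mode.

Code analysis

The entry point is again in buildGenericConfig: D:\Workspace\Go\src\k8s.io\kubernetes\cmd\kube-apiserver\app\server.go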

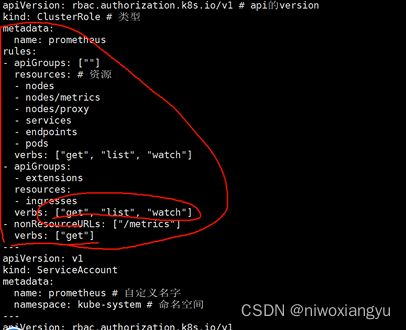

genericConfig.Authorization.Authorizer, genericConfig.RuleResolver, err = BuildAuthorizer(s, genericConfig.EgressSelector, versionedInformers)

It is again constructed through New. Location: D:\Workspace\Go\src\k8s.io\kubernetes\pkg\kubeapiserver\authorizer\config.go

authorizationConfig.New()

Analysis of the constructor New

Core variable 1: authorizers

D:\Workspace\Go\src\k8s.io\kubernetes\staging\src\k8s.io\apiserver\pkg\authorization\authorizer\interfaces.go

// Authorizer makes an authorization decision based on information gained by making

// zero or more calls to methods of the Attributes interface. It returns nil when an action is

// authorized, otherwise it returns an error.

type Authorizer interface {

Authorize(ctx context.Context, a Attributes) (authorized Decision, reason string, err error)

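}

This is the authorization interface. Its Authorize method performs the authorization check and returns the following:

Decision is the authorization result, one of:

- Deny: DecisionDeny

- Allow: DecisionAllow

- No opinion: DecisionNoOpinion

reason gives the reason for the decision, for example why a request was denied

Core variable 2: ruleResolvers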

D:\Workspace\Go\src\k8s.io\kubernetes\staging\src\k8s.io\apiserver\pkg\authorization\authorizer\interfaces.go

// RuleResolver provides a mechanism for resolving the list of rules that apply to a given user within a namespace.

type RuleResolver interface {

// RulesFor get the list of cluster wide rules, the list of rules in the specific namespace, incomplete status and errors.

RulesFor(user user.Info, namespace string) ([]ResourceRuleInfo, []NonResourceRuleInfo, bool, error)

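}

This is the interface for retrieving rules. Its RulesFor method fetches the rules and returns the following:

[]ResourceRuleInfo holds the resource rules

[]NonResourceRuleInfo holds the non-resource rules, such as nonResourceURLs: ["/metrics"]

Iterate over the configured authorization modes and append to the slices above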

for _, authorizationMode := range config.AuthorizationModes {

// Keep cases in sync with constant list in k8s.io/kubernetes/pkg/kubeapiserver/authorizer/modes/modes.go.

switch authorizationMode {

case modes.ModeNode:

node.RegisterMetrics()

graph := node.NewGraph()

node.AddGraphEventHandlers(

graph,

config.VersionedInformerFactory.Core().V1().Nodes(),

config.VersionedInformerFactory.Core().V1().Pods(),

config.VersionedInformerFactory.Core().V1().PersistentVolumes(),

config.VersionedInformerFactory.Storage().V1().VolumeAttachments(),

)

nodeAuthorizer := node.NewAuthorizer(graph, nodeidentifier.NewDefaultNodeIdentifier(), bootstrappolicy.NodeRules())

authorizers = append(authorizers, nodeAuthorizer)

ruleResolvers = append(ruleResolvers, nodeAuthorizer)

case modes.ModeAlwaysAllow:

alwaysAllowAuthorizer := authorizerfactory.NewAlwaysAllowAuthorizer()

authorizers = append(authorizers, alwaysAllowAuthorizer)

ruleResolvers = append(ruleResolvers, alwaysAllowAuthorizer)

case modes.ModeAlwaysDeny:

alwaysDenyAuthorizer := authorizerfactory.NewAlwaysDenyAuthorizer()

authorizers = append(authorizers, alwaysDenyAuthorizer)

ruleResolvers = append(ruleResolvers, alwaysDenyAuthorizer)

case modes.ModeABAC:

abacAuthorizer, err := abac.NewFromFile(config.PolicyFile)

if err != nil {

return nil, nil, err

}

authorizers = append(authorizers, abacAuthorizer)

ruleResolvers = append(ruleResolvers, abacAuthorizer)

case modes.ModeWebhook:

if config.WebhookRetryBackoff == nil {

return nil, nil, errors.New("retry backoff parameters for authorization webhook has not been specified")

}

clientConfig, err := webhookutil.LoadKubeconfig(config.WebhookConfigFile, config.CustomDial)

if err != nil {

return nil, nil, err

}

webhookAuthorizer, err := webhook.New(clientConfig,

config.WebhookVersion,

config.WebhookCacheAuthorizedTTL,

config.WebhookCacheUnauthorizedTTL,

*config.WebhookRetryBackoff,

)

if err != nil {

return nil, nil, err

}

authorizers = append(authorizers, webhookAuthorizer)

ruleResolvers = append(ruleResolvers, webhookAuthorizer)

case modes.ModeRBAC:

rbacAuthorizer := rbac.New(

&rbac.RoleGetter{Lister: config.VersionedInformerFactory.Rbac().V1().Roles().Lister()},

&rbac.RoleBindingLister{Lister: config.VersionedInformerFactory.Rbac().V1().RoleBindings().Lister()},

&rbac.ClusterRoleGetter{Lister: config.VersionedInformerFactory.Rbac().V1().ClusterRoles().Lister()},

&rbac.ClusterRoleBindingLister{Lister: config.VersionedInformerFactory.Rbac().V1().ClusterRoleBindings().Lister()},

)

authorizers = append(authorizers, rbacAuthorizer)

ruleResolvers = append(ruleResolvers, rbacAuthorizer)

default:

return nil, nil, fmt.Errorf("unknown authorization mode %s specified", authorizationMode)

}

}

Finally, return the union of each of the two slices, just as with authentication

return union.New(authorizers...), union.NewRuleResolvers(ruleResolvers...), nil

The union of the authorizers: unionAuthzHandler

Location: D:\Workspace\Go\src\k8s.io\kubernetes\staging\src\k8s.io\apiserver\pkg\authorization\union\union.go

// New returns an authorizer that authorizes against a chain of authorizer.Authorizer objects

func New(authorizationHandlers ...authorizer.Authorizer) authorizer.Authorizer {

return unionAuthzHandler(authorizationHandlers)

}

// Authorizes against a chain of authorizer.Authorizer objects and returns nil if successful and returns error if unsuccessful

func (authzHandler unionAuthzHandler) Authorize(ctx context.Context, a authorizer.Attributes) (authorizer.Decision, string, error) {

var (

errlist []error

reasonlist []string

)

for _, currAuthzHandler := range authzHandler {

decision, reason, err := currAuthzHandler.Authorize(ctx, a)

if err != nil {

errlist = append(errlist, err)

}

if len(reason) != 0 {

reasonlist = append(reasonlist, reason)

}

switch decision {

case authorizer.DecisionAllow, authorizer.DecisionDeny:

return decision, reason, err

case authorizer.DecisionNoOpinion:

// continue to the next authorizer

}

}

return authorizer.DecisionNoOpinion, strings.Join(reasonlist, "\n"), utilerrors.NewAggregate(errlist)

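}

Authorize, the method on unionAuthzHandler, likewise iterates over the inner authorizers and calls each one's Authorize.

If any authorizer's decision is allow or deny, it is returned immediately.

Otherwise the authorizer has no opinion, and the next Authorize method runs.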
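A compact way to see the chain in action is the sketch below. Decision mirrors the three values of authorizer.Decision, while Attributes, Authorizer, nodeOnly, and readOnly are hypothetical simplifications (the real interface also takes a context and returns an error).

```go
package main

import (
	"fmt"
	"strings"
)

// Decision mirrors the three outcomes of authorizer.Decision.
type Decision int

const (
	DecisionDeny Decision = iota
	DecisionAllow
	DecisionNoOpinion
)

// Attributes is a hypothetical, minimal description of a request.
type Attributes struct{ User, Verb, Resource string }

// Authorizer returns a decision and an optional reason.
type Authorizer func(a Attributes) (Decision, string)

// authorize walks the chain: the first Allow or Deny wins, NoOpinion moves on.
func authorize(chain []Authorizer, a Attributes) (Decision, string) {
	var reasons []string
	for _, authz := range chain {
		decision, reason := authz(a)
		if reason != "" {
			reasons = append(reasons, reason)
		}
		if decision == DecisionAllow || decision == DecisionDeny {
			return decision, reason
		}
	}
	return DecisionNoOpinion, strings.Join(reasons, "\n")
}

// nodeOnly has an opinion only about users with the "system:node:" prefix.
func nodeOnly(a Attributes) (Decision, string) {
	if !strings.HasPrefix(a.User, "system:node:") {
		return DecisionNoOpinion, ""
	}
	if a.Verb == "get" {
		return DecisionAllow, ""
	}
	return DecisionDeny, fmt.Sprintf("node may not %s %s", a.Verb, a.Resource)
}

// readOnly allows get and list for anyone and has no opinion otherwise.
func readOnly(a Attributes) (Decision, string) {
	if a.Verb == "get" || a.Verb == "list" {
		return DecisionAllow, ""
	}
	return DecisionNoOpinion, "readOnly: verb not covered"
}

func main() {
	chain := []Authorizer{nodeOnly, readOnly}
	for _, a := range []Attributes{
		{User: "system:node:n1", Verb: "delete", Resource: "pods"},
		{User: "alice", Verb: "list", Resource: "pods"},
		{User: "alice", Verb: "delete", Resource: "pods"},
	} {
		decision, reason := authorize(chain, a)
		fmt.Printf("%+v -> %d %q\n", a, decision, reason)
	}
}
```

A final NoOpinion is not an approval: as described earlier in this section, a request on which every module has no opinion is denied.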

The union of the ruleResolvers: unionAuthzRulesHandler

Location: D:\Workspace\Go\src\k8s.io\kubernetes\staging\src\k8s.io\apiserver\pkg\authorization\union\union.go

// unionAuthzRulesHandler authorizer against a chain of authorizer.RuleResolver

type unionAuthzRulesHandler []authorizer.RuleResolver

// NewRuleResolvers returns an authorizer that authorizes against a chain of authorizer.Authorizer objects

func NewRuleResolvers(authorizationHandlers ...authorizer.RuleResolver) authorizer.RuleResolver {

return unionAuthzRulesHandler(authorizationHandlers)

}

// RulesFor against a chain of authorizer.RuleResolver objects and returns nil if successful and returns error if unsuccessful

func (authzHandler unionAuthzRulesHandler) RulesFor(user user.Info, namespace string) ([]authorizer.ResourceRuleInfo, []authorizer.NonResourceRuleInfo, bool, error) {

var (

errList []error

resourceRulesList []authorizer.ResourceRuleInfo

nonResourceRulesList []authorizer.NonResourceRuleInfo

)

incompleteStatus := false

for _, currAuthzHandler := range authzHandler {

resourceRules, nonResourceRules, incomplete, err := currAuthzHandler.RulesFor(user, namespace)

if incomplete {

incompleteStatus = true

}

if err != nil {

errList = append(errList, err)

}

if len(resourceRules) > 0 {

resourceRulesList = append(resourceRulesList, resourceRules...)

}

if len(nonResourceRules) > 0 {

nonResourceRulesList = append(nonResourceRulesList, nonResourceRules...)

}

}

return resourceRulesList, nonResourceRulesList, incompleteStatus, utilerrors.NewAggregate(errList)

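}

The RulesFor method of unionAuthzRulesHandler iterates over its inner rule resolvers, calls each one's RulesFor method to obtain resourceRules and nonResourceRules, and appends them to resourceRulesList and nonResourceRulesList. Both lists are returned together with the incomplete flag and the aggregated errors.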
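Unlike Authorize, RulesFor never short-circuits: every resolver contributes. The following sketch shows that aggregation under simplifying assumptions. RuleResolver, staticResolver, unionResolver, joinErrors, and errBackend are hypothetical names introduced here; rules are plain strings rather than ResourceRuleInfo and NonResourceRuleInfo, and joinErrors is a small stand-in for utilerrors.NewAggregate.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// RuleResolver is a hypothetical, reduced form of authorizer.RuleResolver.
type RuleResolver interface {
	RulesFor(user, namespace string) (rules []string, incomplete bool, err error)
}

// staticResolver returns fixed values; it only exists to drive the example.
type staticResolver struct {
	rules      []string
	incomplete bool
	err        error
}

func (s staticResolver) RulesFor(user, namespace string) ([]string, bool, error) {
	return s.rules, s.incomplete, s.err
}

// errBackend simulates a resolver whose backend could not be reached.
var errBackend = errors.New("backend unavailable")

// joinErrors stands in for utilerrors.NewAggregate: nil when there are no errors.
func joinErrors(errs []error) error {
	if len(errs) == 0 {
		return nil
	}
	msgs := make([]string, 0, len(errs))
	for _, e := range errs {
		msgs = append(msgs, e.Error())
	}
	return errors.New(strings.Join(msgs, "; "))
}

// unionResolver merges the answers of all inner resolvers.
type unionResolver []RuleResolver

func (u unionResolver) RulesFor(user, namespace string) ([]string, bool, error) {
	var (
		all  []string
		errs []error
	)
	incomplete := false
	for _, r := range u {
		rules, inc, err := r.RulesFor(user, namespace)
		if inc {
			incomplete = true // one incomplete answer marks the whole result incomplete
		}
		if err != nil {
			errs = append(errs, err)
		}
		all = append(all, rules...)
	}
	return all, incomplete, joinErrors(errs)
}

func main() {
	u := unionResolver{
		staticResolver{rules: []string{"get pods"}},
		staticResolver{rules: []string{"list nodes"}, incomplete: true, err: errBackend},
	}
	rules, incomplete, err := u.RulesFor("alice", "default")
	fmt.Println(rules, incomplete, err)
}
```

A failing resolver does not discard the rules gathered from the others; the caller receives the partial list and can use the incomplete flag and the error to judge how much to trust it.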

Key points of this section:

The purpose of authorization

The four authorization modules

The authorization chain: unionAuthzHandler

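3.5 Node authorization

Authorization

Key points of this section:

Node authorization is a special-purpose authorization mode that specifically authorizes API requests made by kubelets.

The four rules, in brief:

- If the request does not come from a node, reject it

- If the nodeName cannot be determined, reject it

- If the request targets a configmap, pod, pv, pvc, or secret, extra checks apply:

  - If the verb is not a read (get; list and watch are also accepted for secrets and configmaps), reject it

  - If the requested resource is not related to the node, reject it

- For any other resource, match the request against the statically defined rules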

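Node authorization

Documentation: https://kubernetes.io/zh/docs/reference/access-authn-authz/node/

Node authorization is a special-purpose authorization mode that specifically authorizes API requests made by kubelets.

Overview

The Node authorizer allows a kubelet to perform API operations, including:

Read operations: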

services

endpoints

nodes

pods

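secrets, configmaps, persistent volume claims, and persistent volumes related to pods bound to the kubelet's node

Write operations:

nodes and node status (enable the NodeRestriction admission plugin to limit a kubelet to modifying its own node)

pods and pod status (enable the NodeRestriction admission plugin to limit a kubelet to modifying pods bound to itself)

Auth-related operations:

read/write access to the certificatesigningrequests API for TLS bootstrapping

the ability to create tokenreviews and subjectaccessreviews for delegated authentication/authorization checks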

Source code walkthrough

Location: D:\Workspace\Go\src\k8s.io\kubernetes\plugin\pkg\auth\authorizer\node\node_authorizer.go

func (r *NodeAuthorizer) Authorize(ctx context.Context, attrs authorizer.Attributes) (authorizer.Decision, string, error) {

nodeName, isNode := r.identifier.NodeIdentity(attrs.GetUser())

if !isNode {

// reject requests from non-nodes

return authorizer.DecisionNoOpinion, "", nil

}

if len(nodeName) == 0 {

// reject requests from unidentifiable nodes

klog.V(2).Infof("NODE DENY: unknown node for user %q", attrs.GetUser().GetName())

return authorizer.DecisionNoOpinion, fmt.Sprintf("unknown node for user %q", attrs.GetUser().GetName()), nil

}

// subdivide access to specific resources

if attrs.IsResourceRequest() {

requestResource := schema.GroupResource{Group: attrs.GetAPIGroup(), Resource: attrs.GetResource()}

switch requestResource {

case secretResource:

return r.authorizeReadNamespacedObject(nodeName, secretVertexType, attrs)

case configMapResource:

return r.authorizeReadNamespacedObject(nodeName, configMapVertexType, attrs)

case pvcResource:

if attrs.GetSubresource() == "status" {

return r.authorizeStatusUpdate(nodeName, pvcVertexType, attrs)

}

return r.authorizeGet(nodeName, pvcVertexType, attrs)

case pvResource:

return r.authorizeGet(nodeName, pvVertexType, attrs)

case vaResource:

return r.authorizeGet(nodeName, vaVertexType, attrs)

case svcAcctResource:

return r.authorizeCreateToken(nodeName, serviceAccountVertexType, attrs)

case leaseResource:

return r.authorizeLease(nodeName, attrs)

case csiNodeResource:

return r.authorizeCSINode(nodeName, attrs)

}

}

// Access to other resources is not subdivided, so just evaluate against the statically defined node rules

if rbac.RulesAllow(attrs, r.nodeRules...) {

return authorizer.DecisionAllow, "", nil

}

return authorizer.DecisionNoOpinion, "", nil

}

Rule walkthrough

// NodeAuthorizer authorizes requests from kubelets, with the following logic:

// 1. If a request is not from a node (NodeIdentity() returns isNode=false), reject

// 2. If a specific node cannot be identified (NodeIdentity() returns nodeName=""), reject

// 3. If a request is for a secret, configmap, persistent volume or persistent volume claim, reject unless the verb is get, and the requested object is related to the requesting node:

// node <- configmap

// node <- pod

// node <- pod <- secret

// node <- pod <- configmap

// node <- pod <- pvc

// node <- pod <- pvc <- pv

// node <- pod <- pvc <- pv <- secret

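// 4. For other resources, authorize all nodes uniformly using statically defined rules

The first two rules are straightforward. Note that in the code above "reject" is implemented by returning DecisionNoOpinion rather than DecisionDeny, so a later authorizer in the chain can still allow the request.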
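To make the first two rules concrete, here is a simplified sketch of how a node identity could be derived from a user. It is modeled on the convention in the Kubernetes documentation that a kubelet authenticates with the user name system:node:<nodeName> and belongs to the system:nodes group. The function name nodeIdentity and its signature are illustrative assumptions, not the real NodeIdentity method.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	nodeUserNamePrefix = "system:node:" // documented kubelet user name prefix
	nodesGroup         = "system:nodes" // documented kubelet group
)

// nodeIdentity (illustrative) returns the node name and whether the user is a node.
// A user counts as a node only if the name has the prefix and the group is present.
func nodeIdentity(userName string, groups []string) (nodeName string, isNode bool) {
	if !strings.HasPrefix(userName, nodeUserNamePrefix) {
		return "", false
	}
	for _, g := range groups {
		if g == nodesGroup {
			return strings.TrimPrefix(userName, nodeUserNamePrefix), true
		}
	}
	return "", false
}

func main() {
	cases := []struct {
		name   string
		groups []string
	}{
		{"system:node:worker-1", []string{"system:nodes"}}, // a proper kubelet identity
		{"alice", []string{"system:nodes"}},                // rule 1: not a node
		{"system:node:", []string{"system:nodes"}},         // rule 2: node with empty name
	}
	for _, c := range cases {
		nodeName, isNode := nodeIdentity(c.name, c.groups)
		fmt.Printf("user=%q isNode=%v nodeName=%q\n", c.name, isNode, nodeName)
	}
}
```

In this sketch the third case corresponds to rule 2: the user is recognized as a node, but the node name is empty, so a caller following the logic above would refuse to go further.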

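Rule 3 explained

Under the third rule, if the requested resource is a secret, configmap, persistent volume, or persistent volume claim, the authorizer has to verify that the verb is a read and that the object is related to the requesting node.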
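The "related to the requesting node" check can be thought of as a reachability question over the relationships listed in the comment (node <- pod <- secret and so on). The sketch below illustrates that idea with a plain depth-first search. The graph type, the addEdge and hasPath methods, and the string keys are all hypothetical; the real authorizer maintains its own graph structure, which is not reproduced here.

```go
package main

import "fmt"

// graph stores "is used by" edges, for example secret -> pod -> node.
type graph map[string][]string

// addEdge records that `from` is used by `to`.
func (g graph) addEdge(from, to string) { g[from] = append(g[from], to) }

// hasPath reports whether `to` can be reached from `from` (depth-first search).
func (g graph) hasPath(from, to string) bool {
	visited := map[string]bool{}
	var visit func(cur string) bool
	visit = func(cur string) bool {
		if cur == to {
			return true
		}
		if visited[cur] {
			return false
		}
		visited[cur] = true
		for _, next := range g[cur] {
			if visit(next) {
				return true
			}
		}
		return false
	}
	return visit(from)
}

func main() {
	g := graph{}
	// worker-1 runs pod "web", which mounts secret "db-password".
	g.addEdge("secret/default/db-password", "pod/default/web")
	g.addEdge("pod/default/web", "node/worker-1")
	// worker-2 runs pod "batch", which mounts secret "other".
	g.addEdge("secret/default/other", "pod/default/batch")
	g.addEdge("pod/default/batch", "node/worker-2")

	fmt.Println(g.hasPath("secret/default/db-password", "node/worker-1"))
	fmt.Println(g.hasPath("secret/default/other", "node/worker-1"))
}
```

With this model, a kubelet on worker-1 may read db-password because a path leads from the secret to its node, while the secret used only by a pod on worker-2 stays out of reach.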

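Taking secretResource as an example, the authorizeReadNamespacedObject method is called:

case secretResource:

return r.authorizeReadNamespacedObject(nodeName, secretVertexType, attrs)

authorizeReadNamespacedObject: validating namespaced reads

authorizeReadNamespacedObject is a wrapper method: it first checks that the verb is get, list, or watch, that no subresource is requested, and that the request is namespaced, and then calls the underlying authorize method.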

// authorizeReadNamespacedObject authorizes "get", "list" and "watch" requests to single objects of a

// specified types if they are related to the specified node.

func (r *NodeAuthorizer) authorizeReadNamespacedObject(nodeName string, startingType vertexType, attrs authorizer.Attributes) (authorizer.Decision, string, error) {

switch attrs.GetVerb() {

case "get", "list", "watch":

//ok

default:

klog.V(2).Infof("NODE DENY: '%s' %#v", nodeName, attrs)

return authorizer.DecisionNoOpinion, "can only read resources of this type", nil

}

if len(attrs.GetSubresource()) > 0 {

klog.V(2).Infof("NODE DENY: '%s' %#v", nodeName, attrs)

return authorizer.DecisionNoOpinion, "cannot read subresource", nil

}

if len(attrs.GetNamespace()) == 0 {

klog.V(2).Infof("NODE DENY: '%s' %#v", nodeName, attrs)

return authorizer.DecisionNoOpinion, "can only read namespaced object of this type", nil

}

return r.authorize(nodeName, startingType, attrs)

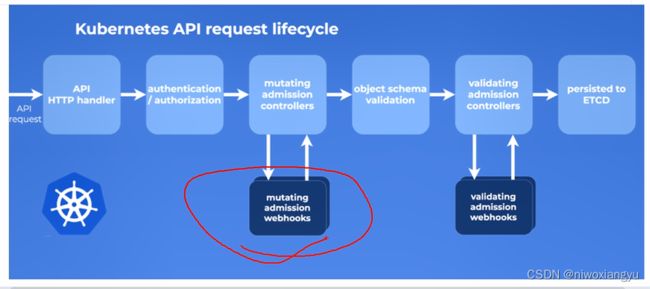

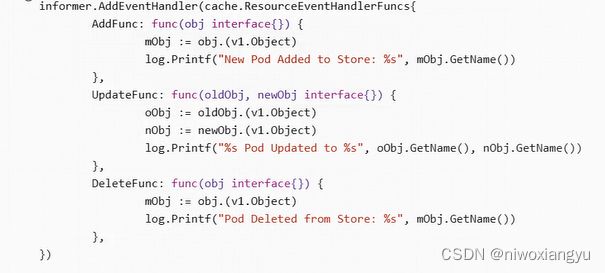

}解读