MPI collective communication

1. MPI call interface

(1) Broadcast: MPI_BCAST

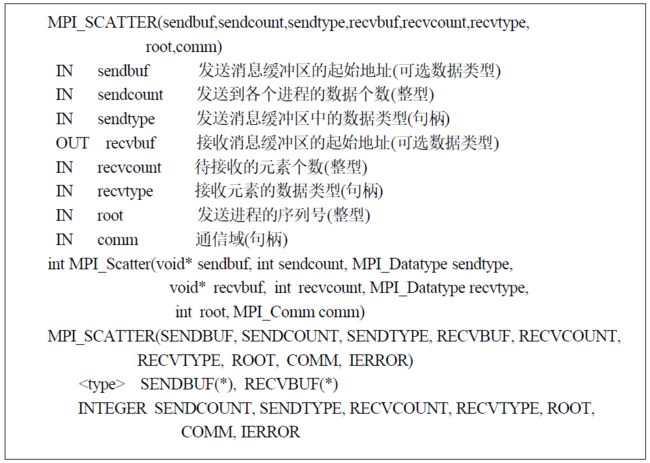

(2) Scatter: MPI_SCATTER

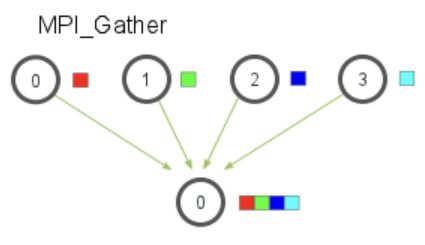

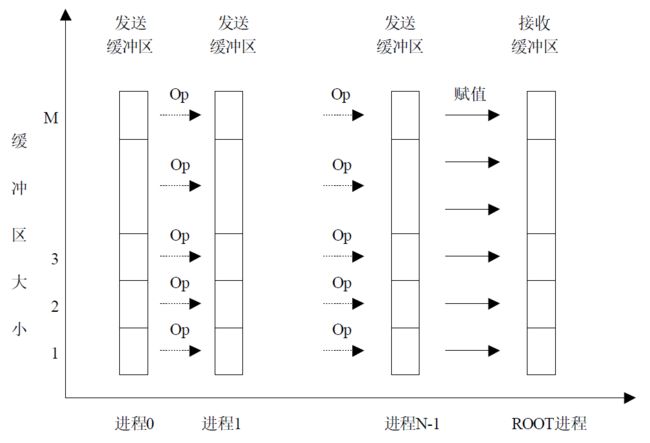

(3) Gather: MPI_GATHER

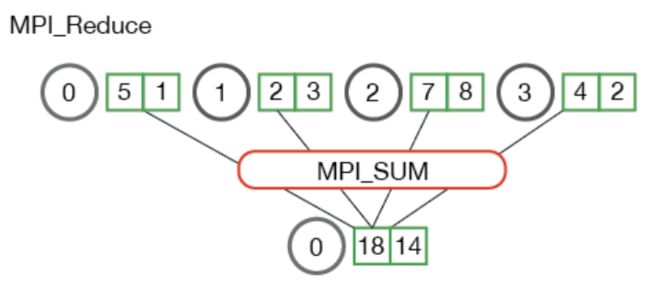

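(4) Reduce: MPI_REDUCE

MPI_REDUCE combines the data in the input buffer of every process in the group using the given operation op, and returns the result to the output buffer of the process whose rank is root.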

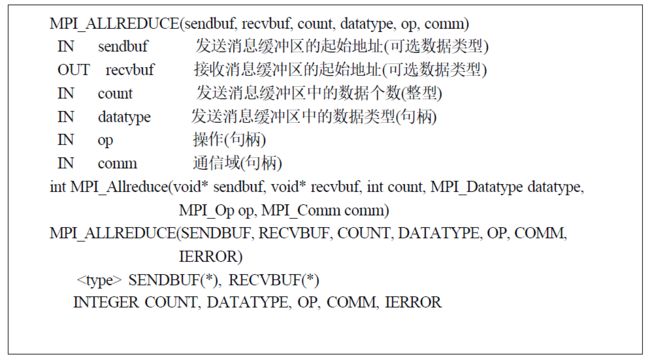

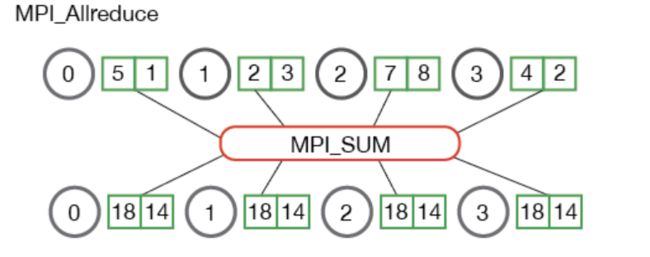

(5) MPI_ALLREDUCE

MPI_Allreduce works like MPI_Reduce, except that it takes no root process ID, because the result is delivered to every process.

MPI_Allreduce is equivalent to an MPI_Reduce followed by an MPI_Bcast.

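2. Examples

(1) Computing the average of 0, 1, 2, ..., 99 with MPI_Scatter and MPI_Gather

Approach:

- On the root process (process 0), generate the array 0, 1, 2, ..., 99.

- Use MPI_Scatter to distribute the numbers across 4 processes, so that each process receives 25 numbers.

- Each process computes the average of the numbers it received.

- The root process collects the per-process averages with MPI_Gather, then averages those 4 values to get the final result.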
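The last step is valid only because every process receives the same number of elements; with unequal chunk sizes the partial averages would have to be weighted. A serial sketch of the same arithmetic, with no MPI involved (the function names are illustrative):

```c
#include <assert.h>
#include <stddef.h>

// Mean of n floats.
float mean_of(const float *a, size_t n) {
    assert(n > 0);
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {
        sum += a[i];
    }
    return sum / (float)n;
}

// Split `a` (length chunks * chunk_len) into equal chunks, average each
// chunk, then average those partial means. This mirrors the steps
// scatter -> local average -> gather -> final average.
float mean_of_chunk_means(const float *a, size_t chunks, size_t chunk_len) {
    assert(chunks > 0);
    float sum_of_means = 0.0f;
    for (size_t c = 0; c < chunks; c++) {
        sum_of_means += mean_of(a + c * chunk_len, chunk_len);
    }
    return sum_of_means / (float)chunks;
}
```

For the array 0, 1, ..., 99 split into 4 chunks of 25, the partial means are 12, 37, 62 and 87, and their mean is 49.5, the same as the mean of the whole array.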

// Program that computes the average of an array of elements in parallel using

// MPI_Scatter and MPI_Gather

//

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <mpi.h>

// Creates an array of 0, 1, 2, ..., 99

float *create_array(int num_elements) {

float *array_nums = (float *)malloc(sizeof(float) * num_elements);

for (int i = 0; i < num_elements; i++) {

array_nums[i] = i;

}

return array_nums;

}

// Computes the average of an array of numbers

float compute_avg(float *array, int num_elements) {

float sum = 0.f;

int i;

for (i = 0; i < num_elements; i++) {

sum += array[i];

}

return sum / num_elements;

}

int main(int argc, char** argv) {

MPI_Init(NULL, NULL);

int world_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

int world_size;

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

assert(world_size == 4);

// Create the array 0, 1, ..., 99 on the root process. Its total

// size is the number of elements per process times the number

// of processes

float *array_nums = NULL;

if (world_rank == 0) {

array_nums = create_array(100);

}

// For each process, create a buffer that will hold a subset of the entire array

int num_elements_per_proc = 25;

float *sub_array_nums = (float *)malloc(sizeof(float) * num_elements_per_proc);

assert(sub_array_nums != NULL);

// Scatter the numbers from the root process to all processes in

// the MPI world

MPI_Scatter(array_nums, num_elements_per_proc, MPI_FLOAT, sub_array_nums,

num_elements_per_proc, MPI_FLOAT, 0, MPI_COMM_WORLD);

// Compute the average of your subset

float sub_avg = compute_avg(sub_array_nums, num_elements_per_proc);

// Gather all partial averages down to the root process

float *sub_avgs = NULL;

if (world_rank == 0) {

sub_avgs = (float *)malloc(sizeof(float) * world_size);

assert(sub_avgs != NULL);

}

MPI_Gather(&sub_avg, 1, MPI_FLOAT, sub_avgs, 1, MPI_FLOAT, 0, MPI_COMM_WORLD);

// Now that we have all of the partial averages on the root, compute the

// total average of all numbers. Since we are assuming each process computed

// an average across an equal amount of elements, this computation will

// produce the correct answer.

if (world_rank == 0) {

float avg = compute_avg(sub_avgs, world_size);

printf("Avg of all elements is %f\n", avg);

// Compute the average across the original data for comparison

float original_data_avg =

compute_avg(array_nums, num_elements_per_proc * world_size);

printf("Avg computed across original data is %f\n", original_data_avg);

}

// Clean up

if (world_rank == 0) {

free(array_nums);

free(sub_avgs);

}

free(sub_array_nums);

MPI_Barrier(MPI_COMM_WORLD);

MPI_Finalize();

}

Compile command:

mpicc -o avg avg.c

Run command:

mpirun -n 4 ./avg

Output:

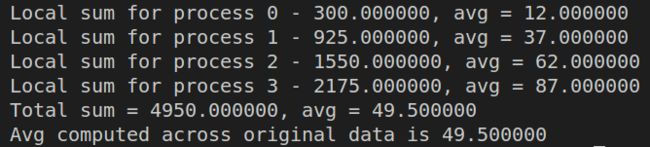

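(2) Computing the average of 0, 1, 2, ..., 99 with MPI_Reduce

MPI_Reduce simplifies the way the previous example combines the per-process results.

In the code below, each process sums the numbers it received from MPI_Scatter and stores the result in local_sum. MPI_Reduce with MPI_SUM then reduces the local_sum values to the root process, where the global average is global_sum / (world_size * num_elements_per_proc).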
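As a worked check of the formula global_sum / (world_size * num_elements_per_proc) with 4 processes and 25 elements each, the local sums and the global average are as follows. This is plain arithmetic, not captured program output.

```latex
\begin{aligned}
\text{rank 0:}\quad & 0 + 1 + \dots + 24 = 300 \\
\text{rank 1:}\quad & 25 + 26 + \dots + 49 = 925 \\
\text{rank 2:}\quad & 50 + 51 + \dots + 74 = 1550 \\
\text{rank 3:}\quad & 75 + 76 + \dots + 99 = 2175 \\
\text{global sum:}\quad & 300 + 925 + 1550 + 2175 = 4950 \\
\text{average:}\quad & \frac{4950}{4 \times 25} = 49.5
\end{aligned}
```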

// Program that computes the average of an array of elements in parallel using

// MPI_Scatter and MPI_Reduce

//

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <mpi.h>

// Creates an array of 0, 1, 2, ..., 99

float *create_array(int num_elements)

{

float *array_nums = (float *)malloc(sizeof(float) * num_elements);

for (int i = 0; i < num_elements; i++)

{

array_nums[i] = i;

}

return array_nums;

}

// Computes the average of an array of numbers

float compute_avg(float *array, int num_elements)

{

float sum = 0.f;

int i;

for (i = 0; i < num_elements; i++)

{

sum += array[i];

}

return sum / num_elements;

}

int main(int argc, char **argv)

{

MPI_Init(NULL, NULL);

int world_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

int world_size;

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

assert(world_size == 4);

// Create the array 0, 1, ..., 99 on the root process. Its total

// size is the number of elements per process times the number

// of processes

float *array_nums = NULL;

if (world_rank == 0)

{

array_nums = create_array(100);

}

// For each process, create a buffer that will hold a subset of the entire array

int num_elements_per_proc = 25;

float *sub_array_nums = (float *)malloc(sizeof(float) * num_elements_per_proc);

assert(sub_array_nums != NULL);

// Scatter the numbers from the root process to all processes in

// the MPI world

MPI_Scatter(array_nums, num_elements_per_proc, MPI_FLOAT, sub_array_nums,

num_elements_per_proc, MPI_FLOAT, 0, MPI_COMM_WORLD);

/*--------------- Use MPI_Reduce --------------- */

// Sum the numbers locally

float local_sum = 0;

int i;

for (i = 0; i < num_elements_per_proc; i++)

{

local_sum += sub_array_nums[i];

}

// Print the local sum and local average on each process

printf("Local sum for process %d - %f, avg = %f\n",

world_rank, local_sum, local_sum / num_elements_per_proc);

// Reduce all of the local sums into the global sum

float global_sum;

MPI_Reduce(&local_sum, &global_sum, 1, MPI_FLOAT, MPI_SUM, 0,

MPI_COMM_WORLD);

// Print the result

if (world_rank == 0)

{

printf("Total sum = %f, avg = %f\n", global_sum,

global_sum / (world_size * num_elements_per_proc));

// Compute the average across the original data for comparison

float original_data_avg =

compute_avg(array_nums, num_elements_per_proc * world_size);

printf("Avg computed across original data is %f\n", original_data_avg);

}

// Clean up

if (world_rank == 0)

{

free(array_nums);

}

free(sub_array_nums);

MPI_Barrier(MPI_COMM_WORLD);

MPI_Finalize();

}

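Output:

(3) Computing the standard deviation of 0, 1, 2, ..., 99

Definition of standard deviation: the square root of the mean of the squared deviations from the mean, $\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2}$, where $\mu$ is the mean of the $N$ numbers.

- First compute the mean of all the numbers with MPI_Allreduce, so that every process has it.

- Sum the squared deviation of each number from the mean with MPI_Reduce.

- Divide by the number of elements and take the square root.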
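A serial sketch of the same two passes, with no MPI involved, can serve as a reference value for the parallel program. The function name `population_variance` is illustrative; it returns the mean of the squared deviations, and the standard deviation is its square root.

```c
#include <assert.h>
#include <stddef.h>

// Population variance in two passes: the first pass computes the mean
// (the MPI_Allreduce step), the second pass sums the squared deviations
// from that mean (the MPI_Reduce step) and divides by n.
double population_variance(const float *a, size_t n) {
    assert(n > 0);
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
        sum += a[i];
    }
    double mean = sum / (double)n;

    double sq_diff = 0.0;
    for (size_t i = 0; i < n; i++) {
        double d = a[i] - mean;
        sq_diff += d * d;
    }
    return sq_diff / (double)n;
}
```

For 0, 1, ..., 99 the variance is 833.25, so the standard deviation is about 28.866.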

// Program that computes the standard deviation of an array of elements in parallel using

// MPI_Scatter, MPI_Allreduce and MPI_Reduce

//

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include <math.h>

#include <mpi.h>

// Creates an array of 0, 1, 2, ..., 99

float *create_array(int num_elements)

{

float *array_nums = (float *)malloc(sizeof(float) * num_elements);

for (int i = 0; i < num_elements; i++)

{

array_nums[i] = i;

}

return array_nums;

}

// Computes the average of an array of numbers

float compute_avg(float *array, int num_elements)

{

float sum = 0.f;

int i;

for (i = 0; i < num_elements; i++)

{

sum += array[i];

}

return sum / num_elements;

}

int main(int argc, char **argv)

{

MPI_Init(NULL, NULL);

int world_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

int world_size;

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

assert(world_size == 4);

// Create the array 0, 1, ..., 99 on the root process. Its total

// size is the number of elements per process times the number

// of processes

float *array_nums = NULL;

if (world_rank == 0)

{

array_nums = create_array(100);

}

// For each process, create a buffer that will hold a subset of the entire array

int num_elements_per_proc = 25;

float *sub_array_nums = (float *)malloc(sizeof(float) * num_elements_per_proc);

assert(sub_array_nums != NULL);

// Scatter the numbers from the root process to all processes in

// the MPI world

MPI_Scatter(array_nums, num_elements_per_proc, MPI_FLOAT, sub_array_nums,

num_elements_per_proc, MPI_FLOAT, 0, MPI_COMM_WORLD);

/*--------------- Use MPI_Allreduce --------------- */

float local_sum = 0;

for (int i = 0; i < num_elements_per_proc; ++i) {

local_sum += sub_array_nums[i];

}

// Reduce all of the local sums into the global sum in order to

// calculate the mean

float global_sum;

MPI_Allreduce(&local_sum, &global_sum, 1, MPI_FLOAT, MPI_SUM,

MPI_COMM_WORLD);

float mean = global_sum / (num_elements_per_proc * world_size);

// Compute the local sum of the squared differences from the mean

float local_sq_diff = 0;

for (int i = 0; i < num_elements_per_proc; i++)

{

local_sq_diff += (sub_array_nums[i] - mean) * (sub_array_nums[i] - mean);

}

// Reduce the local sums of the squared differences to the root

// process and print off the answer

float global_sq_diff;

MPI_Reduce(&local_sq_diff, &global_sq_diff, 1, MPI_FLOAT, MPI_SUM, 0,

MPI_COMM_WORLD);

// The standard deviation is the square root of the mean of the

// squared differences.

if (world_rank == 0)

{

float stddev = sqrt(global_sq_diff /

(num_elements_per_proc * world_size));

printf("Mean - %f, Standard deviation = %f\n", mean, stddev);

}

// Clean up

if (world_rank == 0)

{

free(array_nums);

}

free(sub_array_nums);

MPI_Barrier(MPI_COMM_WORLD);

MPI_Finalize();

}

Output: