[SpringCloud-Alibaba Tutorial Series] 12. Log Link Tracing

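Introducing the problem

Any code has bugs, so we still need logs to diagnose them. In a microservice system, though, one request passes through several services, so we need to trace the whole call chain; otherwise we have no idea where things went wrong. That is why we are going to implement log link tracing.

Let's get started

First, add the Sleuth dependency, which provides the link tracing.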

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-sleuth</artifactId>
    </dependency>

Then call the API endpoint we wrote earlier and look at what appears in the console.

http://localhost:7000/product-serv/product/1?token=admin

In the output of both services we can see a bracketed prefix that shares one identical string:

[service-product,a5670094401b487b,de21187ddc169043,true]

[api-gateway,a5670094401b487b,a5670094401b487b,true]

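Comparing the log lines, the first field is the service name. The second field is the same across the different services, as you can see:

a5670094401b487b identifies one call chain (the trace ID). The third field differs per service; it is the unique ID of that service's own step in the chain (the span ID).

The fourth field indicates whether the data is exported to a third-party platform. Here true means it is exported, because I have already configured export to the Zipkin UI.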
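To make the four fields concrete, here is a minimal Java sketch that splits the bracketed prefix into its parts. The class `SleuthLogPrefix` and its method names are hypothetical helpers written for this post, not part of Sleuth, and the sketch assumes the prefix always has exactly four comma-separated fields as in the log lines above.

```java
/** Illustrative parser for the bracketed prefix shown above; not a Sleuth API. */
final class SleuthLogPrefix {
    final String serviceName;
    final String traceId;
    final String spanId;
    final boolean exported;

    private SleuthLogPrefix(String serviceName, String traceId, String spanId, boolean exported) {
        this.serviceName = serviceName;
        this.traceId = traceId;
        this.spanId = spanId;
        this.exported = exported;
    }

    /** Parses a prefix such as "[api-gateway,a5670094401b487b,a5670094401b487b,true]". */
    static SleuthLogPrefix parse(String prefix) {
        String trimmed = prefix.trim();
        if (!trimmed.startsWith("[") || !trimmed.endsWith("]")) {
            throw new IllegalArgumentException("expected a prefix wrapped in [ ]: " + prefix);
        }
        String[] parts = trimmed.substring(1, trimmed.length() - 1).split(",", -1);
        if (parts.length != 4) {
            throw new IllegalArgumentException("expected 4 fields but got " + parts.length);
        }
        return new SleuthLogPrefix(
                parts[0].trim(), parts[1].trim(), parts[2].trim(),
                Boolean.parseBoolean(parts[3].trim()));
    }

    /** In the log above, the service that started the chain has span ID equal to trace ID. */
    boolean startsTrace() {
        return traceId.equals(spanId);
    }

    public static void main(String[] args) {
        SleuthLogPrefix gateway = parse("[api-gateway,a5670094401b487b,a5670094401b487b,true]");
        SleuthLogPrefix product = parse("[service-product,a5670094401b487b,de21187ddc169043,true]");
        // Both lines belong to the same call chain when the trace IDs match.
        System.out.println("same trace: " + gateway.traceId.equals(product.traceId));
        System.out.println("gateway starts trace: " + gateway.startsTrace());
        System.out.println("product starts trace: " + product.startsTrace());
    }
}
```

Grouping log lines from all services by the second field is what lets you read one request's journey end to end.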

Let's look at the configuration:

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-zipkin</artifactId>
    </dependency>

    spring:
      zipkin:
        base-url: http://127.0.0.1:9411
        discovery-client-enabled: false # treat base-url as a plain URL, not a service name to resolve through Nacos
        sender:
          type: web
      sleuth:
        web:
          client:
            enabled: true
        sampler:
          probability: 1.0 # sampling ratio between 0.0 and 1.0; 0.1 means 10%, 1.0 means collect everything
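To illustrate what the sampling probability means, here is a rough Java sketch of a sampler that keeps a fixed share of traces. This is only an illustration under my own assumptions, not Sleuth's actual implementation; the class name `ProbabilitySamplerSketch` and the bucket count of 10,000 are made up for the example.

```java
/** Illustrative probability sampler; a sketch, not Sleuth's real sampler. */
final class ProbabilitySamplerSketch {
    // Hypothetical resolution: probabilities are rounded to 1/10000 steps.
    private static final int BUCKETS = 10_000;
    private final long threshold;

    ProbabilitySamplerSketch(double probability) {
        if (probability < 0.0 || probability > 1.0) {
            throw new IllegalArgumentException("probability must be between 0.0 and 1.0");
        }
        this.threshold = Math.round(probability * BUCKETS);
    }

    /** Decides from the trace ID, so every span of one trace gets the same answer. */
    boolean isSampled(long traceId) {
        return Long.remainderUnsigned(traceId, BUCKETS) < threshold;
    }

    public static void main(String[] args) {
        ProbabilitySamplerSketch tenPercent = new ProbabilitySamplerSketch(0.1);
        int kept = 0;
        for (long traceId = 0; traceId < BUCKETS; traceId++) {
            if (tenPercent.isSampled(traceId)) {
                kept++;
            }
        }
        System.out.println("kept " + kept + " of " + BUCKETS + " traces");
    }
}
```

With 1.0 every trace is kept, which is convenient for a tutorial; in production a lower value is usually chosen to reduce the reporting overhead.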

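With this setup Zipkin keeps the data in memory, so it is gone after a restart. How do we persist it? There are two main options: MySQL and Elasticsearch (es).

Let's demonstrate MySQL first. All it takes is a change to the startup command.

For example, our original startup command on Windows was:

java -jar zipkin-server-2.23.16-exec.jar

To persist to a database, we first create the tables used for storage.

You can download the script directly from: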

https://github.com/openzipkin/zipkin/blob/master/zipkin-storage/mysql-v1/src/main/resources/mysql.sql

-- Copyright 2015-2019 The OpenZipkin Authors

--

-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except

-- in compliance with the License. You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software distributed under the License

-- is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

-- or implied. See the License for the specific language governing permissions and limitations under

-- the License.

--

CREATE TABLE IF NOT EXISTS zipkin_spans (

`trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',

`trace_id` BIGINT NOT NULL,

`id` BIGINT NOT NULL,

`name` VARCHAR(255) NOT NULL,

`remote_service_name` VARCHAR(255),

`parent_id` BIGINT,

`debug` BIT(1),

`start_ts` BIGINT COMMENT 'Span.timestamp(): epoch micros used for endTs query and to implement TTL',

`duration` BIGINT COMMENT 'Span.duration(): micros used for minDuration and maxDuration query',

PRIMARY KEY (`trace_id_high`, `trace_id`, `id`)

) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

ALTER TABLE zipkin_spans ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTracesByIds';

ALTER TABLE zipkin_spans ADD INDEX(`name`) COMMENT 'for getTraces and getSpanNames';

ALTER TABLE zipkin_spans ADD INDEX(`remote_service_name`) COMMENT 'for getTraces and getRemoteServiceNames';

ALTER TABLE zipkin_spans ADD INDEX(`start_ts`) COMMENT 'for getTraces ordering and range';

CREATE TABLE IF NOT EXISTS zipkin_annotations (

`trace_id_high` BIGINT NOT NULL DEFAULT 0 COMMENT 'If non zero, this means the trace uses 128 bit traceIds instead of 64 bit',

`trace_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.trace_id',

`span_id` BIGINT NOT NULL COMMENT 'coincides with zipkin_spans.id',

`a_key` VARCHAR(255) NOT NULL COMMENT 'BinaryAnnotation.key or Annotation.value if type == -1',

`a_value` BLOB COMMENT 'BinaryAnnotation.value(), which must be smaller than 64KB',

`a_type` INT NOT NULL COMMENT 'BinaryAnnotation.type() or -1 if Annotation',

`a_timestamp` BIGINT COMMENT 'Used to implement TTL; Annotation.timestamp or zipkin_spans.timestamp',

`endpoint_ipv4` INT COMMENT 'Null when Binary/Annotation.endpoint is null',

`endpoint_ipv6` BINARY(16) COMMENT 'Null when Binary/Annotation.endpoint is null, or no IPv6 address',

`endpoint_port` SMALLINT COMMENT 'Null when Binary/Annotation.endpoint is null',

`endpoint_service_name` VARCHAR(255) COMMENT 'Null when Binary/Annotation.endpoint is null'

) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

ALTER TABLE zipkin_annotations ADD UNIQUE KEY(`trace_id_high`, `trace_id`, `span_id`, `a_key`, `a_timestamp`) COMMENT 'Ignore insert on duplicate';

ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`, `span_id`) COMMENT 'for joining with zipkin_spans';

ALTER TABLE zipkin_annotations ADD INDEX(`trace_id_high`, `trace_id`) COMMENT 'for getTraces/ByIds';

ALTER TABLE zipkin_annotations ADD INDEX(`endpoint_service_name`) COMMENT 'for getTraces and getServiceNames';

ALTER TABLE zipkin_annotations ADD INDEX(`a_type`) COMMENT 'for getTraces and autocomplete values';

ALTER TABLE zipkin_annotations ADD INDEX(`a_key`) COMMENT 'for getTraces and autocomplete values';

ALTER TABLE zipkin_annotations ADD INDEX(`trace_id`, `span_id`, `a_key`) COMMENT 'for dependencies job';

CREATE TABLE IF NOT EXISTS zipkin_dependencies (

`day` DATE NOT NULL,

`parent` VARCHAR(255) NOT NULL,

`child` VARCHAR(255) NOT NULL,

`call_count` BIGINT,

`error_count` BIGINT,

PRIMARY KEY (`day`, `parent`, `child`)

) ENGINE=InnoDB ROW_FORMAT=COMPRESSED CHARACTER SET=utf8 COLLATE utf8_general_ci;

Then start Zipkin with the following command:

java -jar zipkin-server-2.23.16-exec.jar --STORAGE_TYPE=mysql --MYSQL_HOST=127.0.0.1 --MYSQL_TCP_PORT=3306 --MYSQL_DB=zipkin --MYSQL_USER=root --MYSQL_PASS=123456

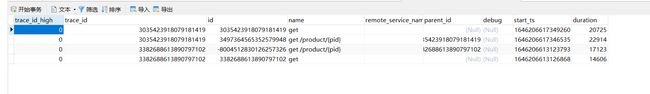

You can open the database and check the stored data.
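One thing to watch when looking up a trace in the tables: the schema above declares `trace_id` and `id` as BIGINT, while the logs print IDs as 16-character hex strings. As far as I understand, the BIGINT holds the same 64 bits read as a signed number, so the sketch below converts between the two forms. The class `TraceIdColumns` is a hypothetical helper for this post, and it assumes a 64-bit trace ID like the one in the log above (for 128-bit IDs the schema keeps the upper half in `trace_id_high`).

```java
/** Illustrative converter between hex IDs in logs and signed BIGINT columns. */
final class TraceIdColumns {
    private TraceIdColumns() {
    }

    /** Reads a 16-character hex ID as the signed 64-bit value a BIGINT column would hold. */
    static long toBigint(String hexId) {
        if (hexId.length() != 16) {
            throw new IllegalArgumentException("expected 16 hex characters: " + hexId);
        }
        return Long.parseUnsignedLong(hexId, 16);
    }

    /** Formats a BIGINT column value back to the 16-character lowercase hex form. */
    static String toHex(long columnValue) {
        return String.format("%016x", columnValue);
    }

    public static void main(String[] args) {
        long columnValue = toBigint("a5670094401b487b");
        // IDs whose first hex digit is 8 or higher show up as negative numbers.
        System.out.println("trace_id column value: " + columnValue);
        System.out.println("back to hex: " + toHex(columnValue));
    }
}
```

The printed number can then be used in a query such as `SELECT * FROM zipkin_spans WHERE trace_id = ...`.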

Now the traces remain visible no matter how many times Zipkin is restarted.

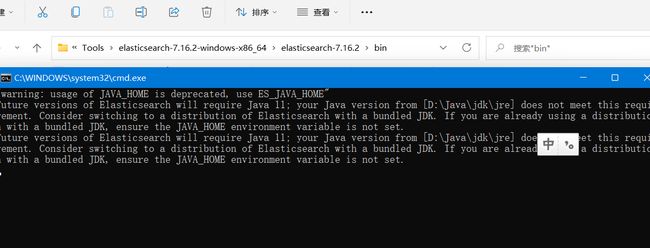

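The other option is es (full name Elasticsearch).

First download it from the official site: https://www.elastic.co/cn/downloads/elasticsearch

After downloading, unzip it and start Elasticsearch from the bin directory.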

java -jar zipkin-server-2.23.16-exec.jar --STORAGE_TYPE=elasticsearch --ES_HOSTS=http://localhost:9200

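Then restart Zipkin with the command above.

After further restarts the persisted data is still visible.

That completes persistence.

And with that, this chapter on log link tracing is done.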

I will keep adding to this project, so if you like it, please give it a star~

For the source code, see the following branch: 220301_xgc_Sleuth

Gitee:https://gitee.com/coderxgc/springcloud-alibaba

GitHub:https://github.com/coderxgc/springcloud-alibaba