IV. Single-Threaded I/O Multiplexing + Multi-Threaded Business Worker Pool

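Contents

- I. Preface
  - 1 Build Instructions
- II. Architecture: Single-Threaded I/O Multiplexing + Multi-Threaded Business Worker Pool
- III. Rewriting the `Client_Context` Class
- IV. Writing the `Server` Class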

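I. Preface

We have already covered single-threaded I/O multiplexing and a C++ thread pool for task scheduling and execution. Now we combine the two.

Because the project has grown, we split it into decoupled modules and build it with CMake. For details, see the article 现代CMake使用,使C++代码解耦 ("Using Modern CMake to Decouple C++ Code").

All code in this section can be found in the TinyWebServer repository.

1 Build Instructions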

```shell
# Run these from inside the Server directory
mkdir build
cd build
cmake ..
cmake --build .
```

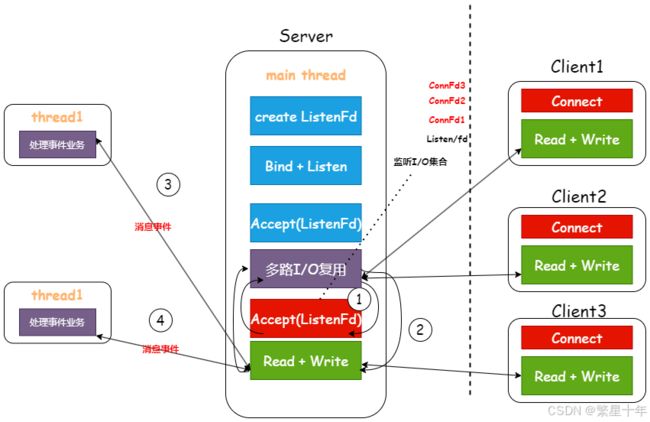

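II. Architecture: Single-Threaded I/O Multiplexing + Multi-Threaded Business Worker Pool

Put simply, a single thread runs the I/O multiplexing loop and waits for events, while the actual read and write tasks are handed off to the thread pool.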
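To make this division of labor concrete, here is a minimal sketch, assuming a Linux/POSIX environment. `poll()` stands in for whichever multiplexing call (select/poll/epoll) the earlier article used, and `WorkerPool` and `dispatchReads` are hypothetical names, not the repository's API. One thread waits for readiness; each ready descriptor's read is submitted to the pool, and the descriptor is dropped from the watch set so it is not dispatched twice.

```cpp
#include <poll.h>
#include <sys/socket.h>
#include <unistd.h>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <vector>

// Minimal fixed-size worker pool (a simplified stand-in for the earlier thread pool).
class WorkerPool {
public:
    explicit WorkerPool(std::size_t n) {
        for (std::size_t i = 0; i < n; ++i) {
            workers_.emplace_back([this] {
                for (;;) {
                    std::function<void()> task;
                    {
                        std::unique_lock<std::mutex> lock(mtx_);
                        cv_.wait(lock, [this] { return stop_ || !tasks_.empty(); });
                        if (stop_ && tasks_.empty()) return;  // drained and shutting down
                        task = std::move(tasks_.front());
                        tasks_.pop();
                    }
                    task();  // run the business logic outside the lock
                }
            });
        }
    }

    ~WorkerPool() {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            stop_ = true;
        }
        cv_.notify_all();
        for (std::thread &t : workers_) t.join();
    }

    void submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            assert(!stop_ && "submit() after shutdown");
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    std::vector<std::thread> workers_;
    std::queue<std::function<void()>> tasks_;
    std::mutex mtx_;
    std::condition_variable cv_;
    bool stop_ = false;
};

// The single I/O thread: poll() for readiness, then hand each ready fd to the pool.
std::vector<std::string> dispatchReads(const std::vector<int> &fds, WorkerPool &pool) {
    std::vector<pollfd> watch;
    for (int fd : fds) {
        pollfd p{};
        p.fd = fd;
        p.events = POLLIN;
        watch.push_back(p);
    }
    std::vector<std::future<std::string>> results;
    while (!watch.empty()) {
        if (poll(watch.data(), watch.size(), -1) < 0) break;  // block until some fd is ready
        for (std::size_t i = 0; i < watch.size();) {
            if (watch[i].revents & (POLLIN | POLLHUP | POLLERR)) {
                int fd = watch[i].fd;
                // packaged_task is move-only, so share it to fit into std::function.
                auto job = std::make_shared<std::packaged_task<std::string()>>([fd] {
                    char buf[256];
                    ssize_t n = read(fd, buf, sizeof buf);  // the read runs on a worker thread
                    return n > 0 ? std::string(buf, static_cast<std::size_t>(n)) : std::string();
                });
                results.push_back(job->get_future());
                pool.submit([job] { (*job)(); });
                // Stop watching this fd so it is not dispatched again while a worker owns it.
                watch.erase(watch.begin() + static_cast<std::ptrdiff_t>(i));
            } else {
                ++i;
            }
        }
    }
    std::vector<std::string> out;
    for (std::future<std::string> &f : results) out.push_back(f.get());
    return out;
}
```

For example, you can create a few `socketpair()` connections, write a message into one end of each, and pass the other ends to `dispatchReads()`; the messages come back as the workers' return values. A long-running server would re-arm a descriptor after its worker finishes instead of dropping it for good; with epoll, that is what `EPOLLONESHOT` followed by `EPOLL_CTL_MOD` is for.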

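III. Rewriting the Client_Context Class

The Client_Context class from the previous section was not thread-safe and could not track the state of a client. We therefore rewrite it as `ClientContext`: a mutex protects the send queue and the write-ready flag, and an atomic flag records whether the connection is still active.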

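```cpp
// client_context.h
#pragma once

#include <atomic>
#include <mutex>
#include <queue>
#include <string>

// Per-connection state shared between the I/O thread and the worker threads.
class ClientContext {
public:
    ClientContext() : active(true) {}

    void pushMessage(const std::string &msg);  // enqueue a message to send
    bool hasMessages() const;                  // is anything waiting to be sent?
    std::string popMessage();                  // dequeue the oldest message
    void setWriteReady(bool ready);            // mark the socket writable or not
    bool isWriteReady() const;
    bool isActive() const;                     // is the connection still alive?
    void deactivate();                         // mark the connection as closed

private:
    std::queue<std::string> send_queue;  // messages waiting to be sent
    bool write_ready = false;            // whether the socket is writable
    mutable std::mutex mtx;              // mutable so const methods can lock it
    std::atomic<bool> active;            // liveness flag, safe to read without the lock
};
```

```cpp
// client_context.cpp
#include "client_context.h"

#include <utility>

// ClientContext implementation

void ClientContext::pushMessage(const std::string &msg) {
    std::lock_guard<std::mutex> lock(mtx);
    send_queue.push(msg);
}

bool ClientContext::hasMessages() const {
    std::lock_guard<std::mutex> lock(mtx);
    return !send_queue.empty();
}

std::string ClientContext::popMessage() {
    std::lock_guard<std::mutex> lock(mtx);
    // Calling front() on an empty queue is undefined behavior, and another
    // thread may have drained the queue since hasMessages() returned true.
    if (send_queue.empty()) {
        return {};
    }
    std::string msg = std::move(send_queue.front());
    send_queue.pop();
    return msg;
}

void ClientContext::setWriteReady(bool ready) {
    std::lock_guard<std::mutex> lock(mtx);
    write_ready = ready;
}

bool ClientContext::isWriteReady() const {
    std::lock_guard<std::mutex> lock(mtx);
    return write_ready;
}

bool ClientContext::isActive() const { return active.load(); }

void ClientContext::deactivate() { active.store(false); }
```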
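One caveat with this interface: `hasMessages()` and `popMessage()` each take the lock separately, so a `hasMessages()`-then-`popMessage()` sequence is not atomic when more than one thread drains the same client. A common alternative is to perform the check and the pop under a single lock. The sketch below shows that pattern on a hypothetical `SendQueue` class with a `tryPop()` method and a `drainConcurrently()` helper; none of these names come from the repository.

```cpp
#include <cassert>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical send queue: the emptiness check and the pop share ONE critical section.
class SendQueue {
public:
    void push(std::string msg) {
        std::lock_guard<std::mutex> lock(mtx_);
        q_.push(std::move(msg));
    }

    // Moves the oldest message into `out` and returns true; returns false if empty.
    bool tryPop(std::string &out) {
        std::lock_guard<std::mutex> lock(mtx_);
        if (q_.empty()) return false;
        out = std::move(q_.front());
        q_.pop();
        return true;
    }

private:
    std::queue<std::string> q_;
    std::mutex mtx_;
};

// Drains `q` from several threads at once; each message is taken exactly once.
std::vector<std::string> drainConcurrently(SendQueue &q, int consumers) {
    assert(consumers > 0);
    std::mutex outMtx;
    std::vector<std::string> out;
    std::vector<std::thread> threads;
    for (int i = 0; i < consumers; ++i) {
        threads.emplace_back([&q, &outMtx, &out] {
            std::string msg;
            while (q.tryPop(msg)) {
                std::lock_guard<std::mutex> lock(outMtx);
                out.push_back(msg);
            }
        });
    }
    for (std::thread &t : threads) t.join();
    return out;
}
```

With `tryPop()`, a worker's send loop becomes `while (q.tryPop(msg)) { /* write msg */ }`, leaving no window between the check and the pop.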

IV. Writing the Server Class

We wrap the server logic in a class to hide the implementation details.

#pragma once

#include #include "server.h"

#include