A First Look at Chaos Engineering: Installing ChaosBlade on k8s

Chaos engineering documentation: https://chaosblade.io/docs/

helm: https://github.com/helm/helm/releases

chaosblade: https://github.com/chaosblade-io/chaosblade/releases

chaosblade-box: https://github.com/chaosblade-io/chaosblade-box/releases

metrics-server: https://github.com/kubernetes-sigs/metrics-server/releases

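What is ChaosBlade?

ChaosBlade is a cloud-native chaos engineering platform that supports a variety of environments, cluster types, and programming languages.

It consists of the chaos experiment tool chaosblade and the chaos engineering platform chaosblade-box, and aims to help enterprises solve the high-availability problems that arise as they move to cloud native.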

1. Install and Deploy Kubernetes

1.1 Set the hostnames

[root@VM-8-11-centos ~]# hostnamectl set-hostname master

[root@VM-16-10-centos ~]# hostnamectl set-hostname no1

vim /etc/hosts

# BEGIN content

10.0.8.11 master

10.0.16.10 no1

# END content

reboot

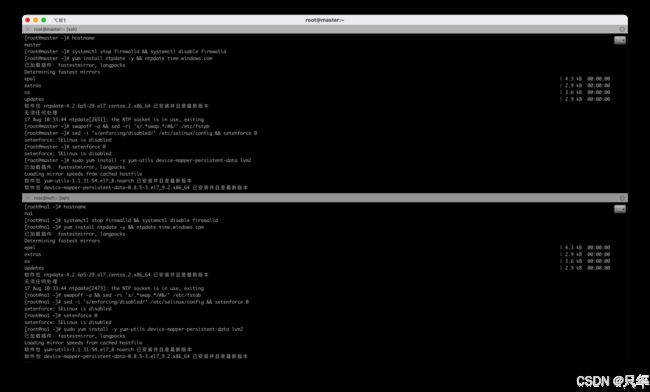
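Both nodes need the same two /etc/hosts entries, and hand edits drift easily. Here is a minimal sketch of an idempotent alternative. The helper name add_host_entry and the HOSTS_FILE variable are assumptions made for illustration, not part of the original setup, and the script writes to a scratch file unless HOSTS_FILE is set.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line. (Hypothetical helper, for illustration only.)
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v n="$name" '
         /^[[:space:]]*#/ { next }    # ignore comment lines
         { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Defaults to a scratch file so the sketch is safe to try;
# run with HOSTS_FILE=/etc/hosts as root to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry "$HOSTS_FILE" 10.0.8.11  master
add_host_entry "$HOSTS_FILE" 10.0.16.10 no1
add_host_entry "$HOSTS_FILE" 10.0.8.11  master   # repeated call adds nothing

cat "$HOSTS_FILE"
```

Because the helper checks before appending, the same script can be run on master and on no1 any number of times without duplicating lines.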

1.2 Disable the firewall, enable time synchronization, and related setup

# Disable the firewall (run on every node)

systemctl stop firewalld && systemctl disable firewalld

# Synchronize the clock (run on every node)

yum install ntpdate -y && ntpdate time.windows.com

# Disable swap (run on every node)

swapoff -a && sed -ri 's/.*swap.*/#&/' /etc/fstab

# Disable SELinux (run on every node)

# sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0

setenforce 0

# Install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2
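A note on the swap step above: the sed expression comments out every /etc/fstab line that contains "swap", including lines that are already commented, so running it twice leaves "##" prefixes. The following is a sketch of a rerun-safe variant, shown on a made-up sample file rather than the real /etc/fstab. The helper name comment_out_swap is hypothetical.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE
# Prefix '#' to every active (not yet commented) line that has "swap"
# as a whitespace-delimited field. Rerunning it does not add a second '#'.
comment_out_swap() {
  local file="$1" tmp
  tmp="$(mktemp)"
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file" > "$tmp" &&
    cat "$tmp" > "$file"     # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Demonstrate on a sample instead of the real /etc/fstab.
SAMPLE_FSTAB="$(mktemp)"
cat > "$SAMPLE_FSTAB" <<'EOF'
/dev/vda1 / ext4 defaults 1 1
/dev/vda2 swap swap defaults 0 0
EOF

comment_out_swap "$SAMPLE_FSTAB"
comment_out_swap "$SAMPLE_FSTAB"   # second run leaves the file unchanged
cat "$SAMPLE_FSTAB"
```

Only the fstab edit is covered here; swapoff -a is still needed to turn swap off for the running system.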

1.3 Install Docker 20.10.24

Run on every master and worker node (and on the Rancher host, if any)

# 1 Switch the package mirror

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# 2 List the docker-ce versions available from the current mirror

[root@master ~]# yum list docker-ce --showduplicates

# 3 Install a specific docker-ce version

# --setopt=obsoletes=0 is required; otherwise yum automatically installs a newer version

[root@master ~]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

Or (recommended): yum install -y --setopt=obsoletes=0 docker-ce-20.10.24-3.el8 docker-ce-cli-20.10.24-3.el8

# 4 Create the systemd unit file

vim /etc/systemd/system/docker.service

# BEGIN content

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

# END content

chmod 644 /etc/systemd/system/docker.service

# 5 Start Docker

# Reload the systemd unit files

systemctl daemon-reload

# Start Docker

systemctl start docker

# Enable Docker at boot

systemctl enable docker.service

# 6 Check the Docker status and version

[root@master ~]# docker version

# A registry mirror (accelerator) can be enabled by editing the daemon configuration file /etc/docker/daemon.json

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://mirror.ccs.tencentyun.com"
  ]
}
EOF

sudo systemctl daemon-reload && sudo systemctl restart docker
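The native.cgroupdriver=systemd setting has to agree with the --cgroup-driver=systemd that the kubelet is given later, and a daemon.json with a syntax error keeps Docker from starting. The sketch below checks both. The DAEMON_JSON variable is an assumption of this example and defaults to a scratch copy. The syntax check runs only if python3 is installed, and the last step only if docker is on the PATH.

```shell
#!/usr/bin/env bash
# DAEMON_JSON defaults to a scratch copy; point it at /etc/docker/daemon.json
# to check the real file. (The variable name is an assumption of this sketch.)
DAEMON_JSON="${DAEMON_JSON:-$(mktemp)}"
if [ ! -s "$DAEMON_JSON" ]; then
cat > "$DAEMON_JSON" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://mirror.ccs.tencentyun.com"]
}
EOF
fi

# 1. Syntax check, if python3 happens to be installed.
if command -v python3 >/dev/null 2>&1; then
  if python3 -m json.tool "$DAEMON_JSON" >/dev/null; then
    echo "daemon.json: valid JSON"
  else
    echo "daemon.json: syntax error" >&2
  fi
fi

# 2. The cgroup driver requested in the file.
if grep -q 'native.cgroupdriver=systemd' "$DAEMON_JSON"; then
  echo "daemon.json: requests the systemd cgroup driver"
fi

# 3. The driver the running daemon actually uses (after the restart).
if command -v docker >/dev/null 2>&1; then
  docker info --format '{{.CgroupDriver}}' 2>/dev/null || true
fi
```

On a configured node, step 3 should print systemd once Docker has been restarted.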

# 7 Configure IPVS

# 1 Install ipset and ipvsadm

[root@master ~]# yum install ipset ipvsadm -y

# 2 Write the modules to load into a script file

[root@master ~]# cat <<EOF > /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

# 3 Make the script executable

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

# 4 Run the script

[root@master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

# 5 Check that the modules were loaded

[root@master ~]# lsmod | grep -e ip_vs -e nf_conntrack
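The grep above only shows whatever happens to match. To see which of the five modules is missing, each name can be checked on its own. This is a sketch under two assumptions: the helper names module_loaded and report_modules are made up for this example, and the modules are built as loadable modules (anything compiled into the kernel does not appear in /proc/modules). The demo runs against a small sample file in /proc/modules format.

```shell
#!/usr/bin/env bash
# module_loaded NAME [MODULES_FILE]
# Succeed if NAME is the first field of some line in MODULES_FILE
# (default /proc/modules, the file lsmod reads).
module_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# report_modules MODULES_FILE NAME...
# Print one status line per module; return non-zero if any is missing.
report_modules() {
  local file="$1" mod missing=0
  shift
  for mod in "$@"; do
    if module_loaded "$mod" "$file"; then
      echo "loaded   $mod"
    else
      echo "MISSING  $mod"
      missing=1
    fi
  done
  return "$missing"
}

# Demo on a sample in /proc/modules format. On a real node use:
#   report_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
SAMPLE_MODULES="$(mktemp)"
printf '%s\n' \
  'ip_vs 172032 2 ip_vs_rr, Live 0x0' \
  'ip_vs_rr 16384 0 - Live 0x0' \
  'nf_conntrack 172032 1 ip_vs, Live 0x0' > "$SAMPLE_MODULES"

report_modules "$SAMPLE_MODULES" ip_vs ip_vs_rr ip_vs_sh nf_conntrack ||
  echo "at least one module is not loaded"
```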

1.4 Deploy a Kubernetes 1.20.11 cluster

Network configuration (iptables)

vim /etc/sysctl.d/k8s.conf

# BEGIN content

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

# END content

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
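The net.bridge.* keys exist only once br_netfilter is loaded, which is why modprobe comes before sysctl -p. To confirm that the kernel really holds the values from the file, each key can be compared with its entry under /proc/sys. This is a minimal sketch: the function name check_sysctl_conf and the CONF variable are assumptions, and it expects plain key=value lines with no spaces around "=".

```shell
#!/usr/bin/env bash
# check_sysctl_conf CONF [PROC_ROOT]
# Compare every key=value line in CONF with the live value under PROC_ROOT
# (default /proc/sys). Returns non-zero if any value differs or is absent.
check_sysctl_conf() {
  local conf="$1" root="${2:-/proc/sys}" key want path have rc=0
  while IFS='=' read -r key want || [ -n "$key" ]; do
    case "$key" in
      ''|'#'*) continue ;;            # skip blank lines and comments
    esac
    path="$root/${key//.//}"          # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ -r "$path" ]; then
      have="$(cat "$path")"
    else
      have="(not present)"
    fi
    if [ "$have" = "$want" ]; then
      echo "ok    $key = $have"
    else
      echo "FAIL  $key: file says $want, kernel has $have"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}

CONF="${CONF:-/etc/sysctl.d/k8s.conf}"
if [ -r "$CONF" ]; then
  check_sysctl_conf "$CONF" || echo "some settings are not applied yet"
fi
```

The optional second argument exists so the function can be tried against a directory of test files instead of the real /proc/sys.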

Configure the Kubernetes yum repository

vim /etc/yum.repos.d/kubernetes.repo

# BEGIN content

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# END content

# Clear the yum cache

yum clean all

# Download the repository's package metadata and cache it locally (makecache builds the cache)

yum makecache

Install kubelet, kubeadm, and kubectl

# Without an explicit version, yum installs the latest version available in the configured repository

yum install -y kubelet kubeadm kubectl

# A version can also be pinned; installing the kubelet, kubeadm, and kubectl versions below is recommended

yum install -y kubelet-1.20.11 kubeadm-1.20.11 kubectl-1.20.11

After installation, check the Kubernetes versions

# Check the kubelet version

[root@master ~]# kubelet --version

Kubernetes v1.20.11

# Check the kubeadm version

[root@master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.11", GitCommit:"27522a29febbcc4badac257763044d0d90c11abd", GitTreeState:"clean", BuildDate:"2021-09-15T19:20:34Z", GoVersion:"go1.15.15", Compiler:"gc", Platform:"linux/amd64"}
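Since kubeadm init is later called with --kubernetes-version=v1.20.11, it helps to confirm that the installed tools report that same version instead of comparing the output by eye. This is a small sketch: extract_version, check_version, and the EXPECTED variable are names invented for this example, and the checks run only if the tools are installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first "vX.Y.Z" token found on stdin.
extract_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

EXPECTED="${EXPECTED:-v1.20.11}"

# check_version LABEL VERSION_OUTPUT
# Compare the version found in VERSION_OUTPUT with $EXPECTED.
check_version() {
  local label="$1" got
  got="$(printf '%s\n' "$2" | extract_version)"
  if [ "$got" = "$EXPECTED" ]; then
    echo "ok        $label $got"
  else
    echo "MISMATCH  $label ${got:-unknown} (expected $EXPECTED)"
    return 1
  fi
}

if command -v kubelet >/dev/null 2>&1; then
  check_version kubelet "$(kubelet --version)" || true
fi
if command -v kubeadm >/dev/null 2>&1; then
  check_version kubeadm "$(kubeadm version -o short)" || true
fi
```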

# Configure the kubelet cgroup driver

# Edit /etc/sysconfig/kubelet and add the following settings

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

# Start kubelet and enable it at boot

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start kubelet

[root@master ~]# systemctl status kubelet

# If kubelet fails to start, ignore the error for now; the later `kubeadm init` will bring it up

[root@master ~]# systemctl enable kubelet

[root@master ~]# systemctl is-enabled kubelet

# Download the cni-plugins-linux-amd64-v1.2.0.tgz plugin archive

$ wget https://github.com/containernetworking/plugins/releases/download/v1.2.0/cni-plugins-linux-amd64-v1.2.0.tgz

# Extract cni-plugins-linux-amd64-v1.2.0.tgz into /opt/cni/bin/ (create the directory first if it does not exist)

mkdir -p /opt/cni/bin && tar -zxvf cni-plugins-linux-amd64-v1.2.0.tgz -C /opt/cni/bin/

Prepare the cluster images

# Before installing the Kubernetes cluster, the images it needs must be prepared in advance; list them with the command below

[root@master ~]# kubeadm config images list

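# Download the images

# These images live in the Kubernetes registry, which cannot be reached here because of network restrictions; the loop below is a workaround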

images=(

kube-apiserver:v1.20.11

kube-controller-manager:v1.20.11

kube-scheduler:v1.20.11

kube-proxy:v1.20.11

pause:3.2

etcd:3.4.13-0

coredns:1.7.0

)

for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done
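The loop above pulls each image from the Aliyun mirror, retags it under k8s.gcr.io so kubeadm finds it locally, and removes the mirror tag. The image list does not have to be typed by hand: it can be fed from kubeadm config images list. This is a sketch under stated assumptions: mirror_name, pull_via_mirror, and DRY_RUN are invented for this example, and the mapping assumes every image sits directly under the registry (as in the list above), so an image with a nested path would need separate handling.

```shell
#!/usr/bin/env bash
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# mirror_name IMAGE
# Map e.g. k8s.gcr.io/pause:3.2 to $MIRROR/pause:3.2 by keeping only the
# part after the last '/'. (Hypothetical helper.)
mirror_name() {
  printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# pull_via_mirror: read image names from stdin, one per line.
# With DRY_RUN=1 (the default here) the docker commands are only printed.
pull_via_mirror() {
  local image src run
  if [ "${DRY_RUN:-1}" = 1 ]; then run=echo; else run=; fi
  while read -r image; do
    [ -n "$image" ] || continue
    src="$(mirror_name "$image")"
    $run docker pull "$src"
    $run docker tag "$src" "$image"
    $run docker rmi "$src"
  done
}

# Typical use on the master:
#   kubeadm config images list --kubernetes-version v1.20.11 | DRY_RUN=0 pull_via_mirror
# Dry-run demo with two of the images:
printf '%s\n' k8s.gcr.io/kube-proxy:v1.20.11 k8s.gcr.io/pause:3.2 | pull_via_mirror
```

The dry-run default makes it easy to review the exact pull, tag, and rmi commands before anything touches the local image store.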

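Initialize the Kubernetes master

Note: run this step only on the server planned as the Kubernetes master (the host named master in this article)

# Run the init command to initialize the k8s cluster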

[root@master ~]# kubeadm init \
  --kubernetes-version=v1.20.11 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --apiserver-advertise-address=10.0.8.11 \
  --ignore-preflight-errors=all
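The --pod-network-cidr passed to kubeadm init has to match the "Network" value in the net-conf.json section of the flannel manifest, otherwise pods get addresses flannel does not route. The sketch below compares the two before the manifest is applied. The function name flannel_network and the POD_CIDR and MANIFEST variables are assumptions of this example; the check is skipped if the manifest file is not in the current directory.

```shell
#!/usr/bin/env bash
# flannel_network MANIFEST
# Print the "Network" value from the net-conf.json embedded in the manifest.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

POD_CIDR="${POD_CIDR:-10.244.0.0/16}"      # value given to --pod-network-cidr
MANIFEST="${MANIFEST:-kube-flannel.yml}"   # path to the flannel manifest

if [ -r "$MANIFEST" ]; then
  net="$(flannel_network "$MANIFEST")"
  if [ "$net" = "$POD_CIDR" ]; then
    echo "ok: flannel Network $net matches --pod-network-cidr"
  else
    echo "mismatch: flannel Network '$net' vs --pod-network-cidr $POD_CIDR" >&2
  fi
fi
```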

kube-flannel.yml

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.2.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.24.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.24.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: &