Audio/Video Development: Cutting Audio and Video with FFmpeg on Ubuntu, with a Code Implementation

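Contents

- FFmpeg command example

- FFmpeg cutting workflow

- FFmpeg implementation in C

- Initializing the input video

- Seeking to the cut start point

- Reading video frames into the output stream

- Complete code

- Build command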

FFmpeg command example

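Use -ss to set the point where the cut starts. On its own it cuts from that time to the end of the file; it can be combined with -t or -to:

- -t sets the duration of the cut.

- -to sets the time at which the cut ends.

For example, to start at 00:00:00 and cut 10 seconds: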

ffmpeg -i ss_test.mp4 -ss 00:00:00 -t 10 out_test.ts

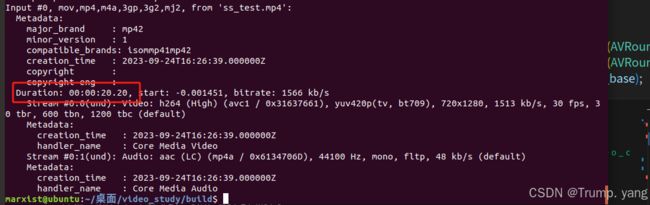

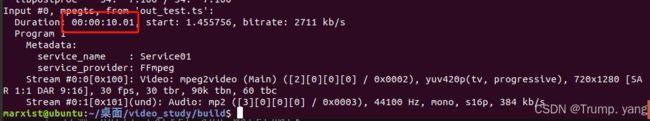

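Result: ffprobe can be used to inspect a video file's basic information, including its format, duration, and bitrate.

The source file before cutting is 20 s long.

The output file after cutting is 10 s long, which shows that the cut succeeded.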

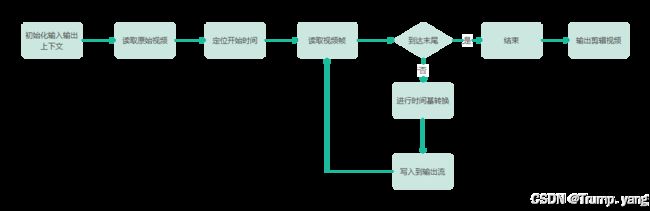

FFmpeg cutting workflow

FFmpeg implementation in C

Initializing the input video

    const char *input_filename = "ss_test.mp4";
    const char *output_filename = "video_cut_demo.ts";
    int64_t start_time = 0; // Start time in seconds
    int64_t duration = 10;  // Duration in seconds

    AVFormatContext *input_format_ctx = NULL;
    AVFormatContext *output_format_ctx = NULL;
    AVPacket packet;
    int ret;
    int video_stream_index = -1;

    #if LIBAVFORMAT_VERSION_INT < AV_VERSION_INT(58, 9, 100)
    // Only needed before FFmpeg 4.0; deprecated since then and removed in FFmpeg 5.0
    av_register_all();
    #endif

    // Open the video file and fill in the input context
    if ((ret = avformat_open_input(&input_format_ctx, input_filename, NULL, NULL)) < 0)
    {
        fprintf(stderr, "Could not open input file '%s'\n", input_filename);
        return ret;
    }

    if ((ret = avformat_find_stream_info(input_format_ctx, NULL)) < 0)
    {
        fprintf(stderr, "Could not find stream information\n");
        return ret;
    }

    // Find the first video stream (nb_streams is unsigned)
    for (unsigned int i = 0; i < input_format_ctx->nb_streams; i++)
    {
        if (input_format_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            video_stream_index = (int)i;
            break;
        }
    }

    if (video_stream_index == -1)
    {
        fprintf(stderr, "Could not find video stream in the input file\n");
        return -1;
    }

Seeking to the cut start point

    // Timestamp at which the cut starts, in the video stream's time base
    int64_t start_time_pts = av_rescale_q_rnd(start_time * AV_TIME_BASE, AV_TIME_BASE_Q,
                                              input_format_ctx->streams[video_stream_index]->time_base,
                                              (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

    ret = av_seek_frame(input_format_ctx, video_stream_index, start_time_pts, AVSEEK_FLAG_BACKWARD);
    if (ret < 0)
    {
        fprintf(stderr, "Error seeking to the specified timestamp.\n");
        return AVERROR_EXIT;
    }

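The first step is to seek to the position in the file that corresponds to the requested start_time, so that the cut begins from that point.

av_seek_frame explained: seeking to a given timestamp

    ret = av_seek_frame(input_format_ctx, video_stream_index, start_time_pts, AVSEEK_FLAG_BACKWARD);

Parameters:

- input_format_ctx: the format context of the input file.

- video_stream_index: the index of the video stream to seek in.

- start_time_pts: the target timestamp, expressed in the time base of the input video stream.

- AVSEEK_FLAG_BACKWARD: seek backward, to the nearest keyframe at or before the given timestamp.

av_seek_frame moves the demuxer's read position to the point corresponding to start_time_pts, so that the following av_read_frame calls return packets from there. This program does not decode anything; packets are copied to the output as they are.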

Reading video frames into the output stream

    // Work out where the cut ends
    int64_t end_time = start_time + duration;
    // End point in the video stream's time base; the loop below compares in
    // seconds instead, so the same check works for packets of every stream
    int64_t end_time_pts = av_rescale_q(end_time * AV_TIME_BASE, AV_TIME_BASE_Q,
                                        input_format_ctx->streams[video_stream_index]->time_base);

    // Read packets and write them to the output
    while (av_read_frame(input_format_ctx, &packet) >= 0)
    {
        AVStream *in_stream = input_format_ctx->streams[packet.stream_index];
        AVStream *out_stream = output_format_ctx->streams[packet.stream_index];

        // Stop once a packet lies past the end of the cut range
        if (av_q2d(in_stream->time_base) * packet.pts > end_time)
        {
            av_packet_unref(&packet);
            break;
        }

        // Convert timestamps from the input stream's time base to the output stream's
        packet.pts = av_rescale_q_rnd(packet.pts, in_stream->time_base, out_stream->time_base,
                                      (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        packet.dts = av_rescale_q_rnd(packet.dts, in_stream->time_base, out_stream->time_base,
                                      (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        packet.duration = av_rescale_q(packet.duration, in_stream->time_base, out_stream->time_base);
        packet.pos = -1;

        // Write the packet to the output file
        if ((ret = av_interleaved_write_frame(output_format_ctx, &packet)) < 0)
        {
            fprintf(stderr, "Error muxing packet\n");
            break;
        }

        av_packet_unref(&packet);
    }

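To keep audio and video in sync with the source file, each packet's timestamps are converted from the input stream's time base to the output stream's time base.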

Complete code

    extern "C"
    {
    #include <libavformat/avformat.h>
    #include <libavcodec/avcodec.h>
    #include <libavutil/avutil.h>
    }
    #include <cstdio>

    using namespace std;

    int main(int argc, char **argv)
    {
        // Input and output files
        const char *input_filename = "ss_test.mp4";
        const char *output_filename = "video_cut_demo.ts";
        int64_t start_time = 0; // Start time in seconds
        int64_t duration = 10;  // Duration in seconds

        AVFormatContext *input_format_ctx = NULL;
        AVFormatContext *output_format_ctx = NULL;
        AVPacket packet;
        int ret;
        int video_stream_index = -1;

    #if LIBAVFORMAT_VERSION_INT < AV_VERSION_INT(58, 9, 100)
        // Only needed before FFmpeg 4.0; deprecated since then and removed in FFmpeg 5.0
        av_register_all();
    #endif

        // Open the video file and fill in the input context
        if ((ret = avformat_open_input(&input_format_ctx, input_filename, NULL, NULL)) < 0)
        {
            fprintf(stderr, "Could not open input file '%s'\n", input_filename);
            return ret;
        }

        if ((ret = avformat_find_stream_info(input_format_ctx, NULL)) < 0)
        {
            fprintf(stderr, "Could not find stream information\n");
            return ret;
        }

        // Find the first video stream (nb_streams is unsigned)
        for (unsigned int i = 0; i < input_format_ctx->nb_streams; i++)
        {
            if (input_format_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
            {
                video_stream_index = (int)i;
                break;
            }
        }

        if (video_stream_index == -1)
        {
            fprintf(stderr, "Could not find video stream in the input file\n");
            return -1;
        }

        // Timestamp at which the cut starts, in the video stream's time base
        int64_t start_time_pts = av_rescale_q_rnd(start_time * AV_TIME_BASE, AV_TIME_BASE_Q,
                                                  input_format_ctx->streams[video_stream_index]->time_base,
                                                  (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

        ret = av_seek_frame(input_format_ctx, video_stream_index, start_time_pts, AVSEEK_FLAG_BACKWARD);
        if (ret < 0)
        {
            fprintf(stderr, "Error seeking to the specified timestamp.\n");
            return AVERROR_EXIT;
        }

        // Prepare the output context; the format is guessed from the file name
        avformat_alloc_output_context2(&output_format_ctx, NULL, NULL, output_filename);
        if (!output_format_ctx)
        {
            fprintf(stderr, "Could not create output context\n");
            return AVERROR_UNKNOWN;
        }

        // Create one output stream per input stream and copy its codec parameters
        for (unsigned int i = 0; i < input_format_ctx->nb_streams; i++)
        {
            AVStream *in_stream = input_format_ctx->streams[i];
            AVStream *out_stream = avformat_new_stream(output_format_ctx, NULL);
            if (!out_stream)
            {
                fprintf(stderr, "Failed allocating output stream\n");
                return AVERROR_UNKNOWN;
            }

            ret = avcodec_parameters_copy(out_stream->codecpar, in_stream->codecpar);
            if (ret < 0)
            {
                fprintf(stderr, "Failed copying parameters to output stream\n");
                return AVERROR_UNKNOWN;
            }
            // Let the muxer choose a codec tag that is valid for the output container
            out_stream->codecpar->codec_tag = 0;
        }

        // Open the output file for writing
        if (!(output_format_ctx->oformat->flags & AVFMT_NOFILE))
        {
            if ((ret = avio_open(&output_format_ctx->pb, output_filename, AVIO_FLAG_WRITE)) < 0)
            {
                fprintf(stderr, "Could not open output file '%s'\n", output_filename);
                return ret;
            }
        }

        // Write the stream header
        if ((ret = avformat_write_header(output_format_ctx, NULL)) < 0)
        {
            fprintf(stderr, "Error occurred when opening output file\n");
            return ret;
        }

        // Work out where the cut ends
        int64_t end_time = start_time + duration;
        // End point in the video stream's time base; the loop below compares in
        // seconds instead, so the same check works for packets of every stream
        int64_t end_time_pts = av_rescale_q(end_time * AV_TIME_BASE, AV_TIME_BASE_Q,
                                            input_format_ctx->streams[video_stream_index]->time_base);

        // Read packets and write them to the output
        while (av_read_frame(input_format_ctx, &packet) >= 0)
        {
            AVStream *in_stream = input_format_ctx->streams[packet.stream_index];
            AVStream *out_stream = output_format_ctx->streams[packet.stream_index];

            // Stop once a packet lies past the end of the cut range
            if (av_q2d(in_stream->time_base) * packet.pts > end_time)
            {
                av_packet_unref(&packet);
                break;
            }

            // Convert timestamps from the input stream's time base to the output stream's
            packet.pts = av_rescale_q_rnd(packet.pts, in_stream->time_base, out_stream->time_base,
                                          (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            packet.dts = av_rescale_q_rnd(packet.dts, in_stream->time_base, out_stream->time_base,
                                          (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            packet.duration = av_rescale_q(packet.duration, in_stream->time_base, out_stream->time_base);
            packet.pos = -1;

            // Write the packet to the output file
            if ((ret = av_interleaved_write_frame(output_format_ctx, &packet)) < 0)
            {
                fprintf(stderr, "Error muxing packet\n");
                break;
            }

            av_packet_unref(&packet);
        }

        // Write the file trailer
        av_write_trailer(output_format_ctx);

        // Release the input and output contexts
        avformat_close_input(&input_format_ctx);
        if (output_format_ctx && !(output_format_ctx->oformat->flags & AVFMT_NOFILE))
        {
            avio_closep(&output_format_ctx->pb);
        }
        avformat_free_context(output_format_ctx);

        return 0;
    }

Build command

The program has to be linked against the FFmpeg libraries. An example command:

g++ -o video_cut_demo video_cut_demo.cpp $(pkg-config --cflags --libs libavformat libavcodec libavutil)