Day10 【基于LSTM实现自回归语言模型文本续写任务】

基于LSTM实现文本续写任务

-

-

- 目标

- 数据准备

- 程序说明

-

- 定义模型结构

- 前向传播

- 构建词表

- 加载语料

- 构建训练样本

- 构建数据集

- 训练模型

- 文本续写

- 困惑度计算

- 训练过程展示

-

目标

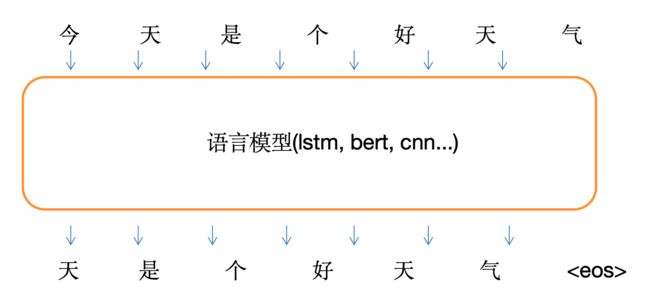

本文基于给定的词表,将输入的文本以字符分割为若干个词,然后基于词表将词初步序列化作为训练网络的输入序列,将词后面一个词在词表中的序号作为输入标签,取连续序列文本片段长度作为输入序列的长度。之后经过Embedding、LSTM等网络层。因为生成的词是词表中某个词,因此模型输出为已知词表上的多类别概率分布,从而实现一个简单文本的续写任务。

数据准备

词表文件vocab.txt

语料文件语料训练文件

程序说明

定义模型结构

class LanguageModel(nn.Module):

def __init__(self, input_dim, vocab):

super(LanguageModel, self).__init__()

self.embedding = nn.Embedding(len(vocab), input_dim)

self.layer = nn.LSTM(input_dim, input_dim, num_layers=1, batch_first=True)

self.classify = nn.Linear(input_dim, len(vocab))

self.dropout = nn.Dropout(0.1)

self.loss = nn.functional.cross_entropy

LanguageModel类继承自nn.Module,表示一个神经网络模型。self.embedding: 嵌入层,用于将每个单词映射到固定维度的向量空间。self.layer: LSTM 层,处理输入的序列数据。self.classify: 全连接层,用于将 LSTM 的输出映射到词表大小的输出空间,即词汇的预测概率分布。self.dropout: 丢弃层,避免过拟合。self.loss: 使用交叉熵损失函数来计算损失。

前向传播

def forward(self, x, y=None):

x = self.embedding(x)

x, _ = self.layer(x)

y_pred = self.classify(x)

if y is not None:

return self.loss(y_pred.view(-1, y_pred.shape[-1]), y.view(-1))

else:

return torch.softmax(y_pred, dim=-1)

- 输入

x是词的索引,首先通过嵌入层转换成向量表示。 x, _ = self.layer(x)通过 LSTM 层处理输入序列。- 然后通过

self.classify进行预测,得到每个词的概率分布。 - 如果有真实标签

y,则计算并返回损失;如果没有真实标签,则返回预测的概率分布。

构建词表

def build_vocab(vocab_path):

vocab = {"" : 0}

with open(vocab_path, encoding="utf8") as f:

for index, line in enumerate(f):

char = line[:-1]

vocab[char] = index + 1

return vocab

- 从给定的文件

vocab_path加载词表,词表是一个字典,每个字符对应一个唯一的索引。 - 词表中包含一个特殊的

0,用于填充。

加载语料

def load_corpus(path):

corpus = ""

with open(path, encoding="gbk") as f:

for line in f:

corpus += line.strip()

return corpus

- 从文件

path中加载语料,将每行的空格、换行符去掉,并将所有文本连接成一个长字符串。

构建训练样本

def build_sample(vocab, window_size, corpus):

start = random.randint(0, len(corpus) - 1 - window_size)

end = start + window_size

window = corpus[start:end]

target = corpus[start + 1:end + 1]

x = [vocab.get(word, vocab["" ]) for word in window]

y = [vocab.get(word, vocab["" ]) for word in target]

return x, y

- 从语料中随机选取一个窗口

window_size长度的子序列,并将其作为输入x和目标y(目标y是输入x向后移一位的序列)。 - 每个字符都被映射为词表中的索引。

构建数据集

def build_dataset(sample_length, vocab, window_size, corpus):

dataset_x = []

dataset_y = []

for i in range(sample_length):

x, y = build_sample(vocab, window_size, corpus)

dataset_x.append(x)

dataset_y.append(y)

return torch.LongTensor(dataset_x), torch.LongTensor(dataset_y)

- 根据需要的样本数量

sample_length生成训练数据集dataset_x和dataset_y。 - 每个样本是一个长度为

window_size的输入序列和一个对应的目标序列。

训练模型

def train(corpus_path, save_weight=True):

epoch_num = 10

batch_size = 64

train_sample = 50000

char_dim = 256

window_size = 10

vocab = build_vocab("vocab.txt")

corpus = load_corpus(corpus_path)

model = build_model(vocab, char_dim)

if torch.cuda.is_available():

model = model.cuda()

optim = torch.optim.Adam(model.parameters(), lr=0.01)

print("文本词表模型加载完毕,开始训练")

for epoch in range(epoch_num):

model.train()

watch_loss = []

for batch in range(int(train_sample / batch_size)):

x, y = build_dataset(batch_size, vocab, window_size, corpus)

if torch.cuda.is_available():

x, y = x.cuda(), y.cuda()

optim.zero_grad()

loss = model(x, y)

loss.backward()

optim.step()

watch_loss.append(loss.item())

print("=========\n第%d轮平均loss:%f" % (epoch + 1, np.mean(watch_loss)))

- 训练过程设置了训练轮数(

epoch_num)、批大小(batch_size)等超参数。 - 对于每个 epoch,通过

build_dataset生成批量的训练数据,并使用优化器optim和 LSTM 模型来计算并更新模型参数。

文本续写

def generate_sentence(openings, model, vocab, window_size):

reverse_vocab = dict((y, x) for x, y in vocab.items())

model.eval()

with torch.no_grad():

pred_char = ""

while pred_char != "\n" and len(openings) <= 30:

openings += pred_char

x = [vocab.get(char, vocab["" ]) for char in openings[-window_size:]]

x = torch.LongTensor([x])

if torch.cuda.is_available():

x = x.cuda()

y = model(x)[0][-1]

index = sampling_strategy(y)

pred_char = reverse_vocab[index]

return openings

- 通过给定的

openings(生成的文本前缀)和训练好的模型生成一段文本,直到生成换行符或达到最大长度(30个字符)。 sampling_strategy用于控制生成策略(贪心策略或随机采样)。

困惑度计算

def calc_perplexity(sentence, model, vocab, window_size):

prob = 0

model.eval()

with torch.no_grad():

for i in range(1, len(sentence)):

start = max(0, i - window_size)

window = sentence[start:i]

x = [vocab.get(char, vocab["" ]) for char in window]

x = torch.LongTensor([x])

target = sentence[i]

target_index = vocab.get(target, vocab["" ])

if torch.cuda.is_available():

x = x.cuda()

pred_prob_distribute = model(x)[0][-1]

target_prob = pred_prob_distribute[target_index]

prob += math.log(target_prob, 10)

return 2 ** (prob * ( -1 / len(sentence)))

困惑度是衡量语言模型预测性能的一个指标,通常用于评估模型在处理文本时的表现。困惑度是文本的预测概率的倒数的几何平均值。

- 困惑度低表示模型预测较准确,即交叉熵较低。

- 困惑度越低,表示模型的预测能力越强。

- 困惑度可以理解为模型对文本序列的平均不确定性。

其计算公式如下:

困惑度公式

PPL ( S ) = 2 H ( S ) \text{PPL}(S) = 2^{H(S)} PPL(S)=2H(S)

其中, H ( S ) H(S) H(S) 是文本序列 S S S 的 交叉熵(Cross-Entropy)。

交叉熵公式

对于给定的文本序列 S = ( w 1 , w 2 , . . . , w N ) S = (w_1, w_2, ..., w_N) S=(w1,w2,...,wN),如果模型的概率分布为 p ( w 1 , w 2 , . . . , w N ) p(w_1, w_2, ..., w_N) p(w1,w2,...,wN),交叉熵 H ( S ) H(S) H(S) 的计算公式为:

H ( S ) = − 1 N ∑ i = 1 N log 2 p ( w i ∣ w 1 , . . . , w i − 1 ) H(S) = - \frac{1}{N} \sum_{i=1}^{N} \log_2 p(w_i | w_1, ..., w_{i-1}) H(S)=−N1i=1∑Nlog2p(wi∣w1,...,wi−1)

- N N N:序列的长度。

- p ( w i ∣ w 1 , . . . , w i − 1 ) p(w_i | w_1, ..., w_{i-1}) p(wi∣w1,...,wi−1):在给定前

i-1个词的情况下,模型对第 i i i 个词 w i w_i wi 的预测概率。

全部代码

#coding:utf8

import torch

import torch.nn as nn

import numpy as np

import math

import random

import os

import re

"""

基于pytorch的LSTM语言模型

"""

class LanguageModel(nn.Module):

def __init__(self, input_dim, vocab):

super(LanguageModel, self).__init__()

self.embedding = nn.Embedding(len(vocab), input_dim)

self.layer = nn.LSTM(input_dim, input_dim, num_layers=1, batch_first=True)

self.classify = nn.Linear(input_dim, len(vocab))

self.dropout = nn.Dropout(0.1)

self.loss = nn.functional.cross_entropy

#当输入真实标签,返回loss值;无真实标签,返回预测值

def forward(self, x, y=None):

x = self.embedding(x) #output shape:(batch_size, sen_len, input_dim)

x, _ = self.layer(x) #output shape:(batch_size, sen_len, input_dim)

y_pred = self.classify(x) #output shape:(batch_size, sen_len,vocab_size)

if y is not None:

return self.loss(y_pred.view(-1, y_pred.shape[-1]), y.view(-1))

else:

return torch.softmax(y_pred, dim=-1)

#加载字表

def build_vocab(vocab_path):

vocab = {"" :0}

with open(vocab_path, encoding="utf8") as f:

for index, line in enumerate(f):

char = line[:-1] #去掉结尾换行符

vocab[char] = index + 1 #留出0位给pad token

return vocab

#加载语料

def load_corpus(path):

corpus = ""

# 把所有语料去掉空格、换行、制表符后合并为一行

with open(path, encoding="gbk") as f:

for line in f:

corpus += line.strip()

return corpus

#随机生成一个样本

#从文本中截取随机窗口,前n个字作为输入,最后一个字作为输出

def build_sample(vocab, window_size, corpus):

start = random.randint(0, len(corpus) - 1 - window_size)

end = start + window_size

window = corpus[start:end]

target = corpus[start + 1:end + 1] #输入输出错开一位

# print(window, target)

x = [vocab.get(word, vocab["" ]) for word in window] #将字转换成序号

y = [vocab.get(word, vocab["" ]) for word in target]

return x, y

#建立数据集

#sample_length 输入需要的样本数量。需要多少生成多少

#vocab 词表

#window_size 样本长度

#corpus 语料字符串

def build_dataset(sample_length, vocab, window_size, corpus):

dataset_x = []

dataset_y = []

for i in range(sample_length):

x, y = build_sample(vocab, window_size, corpus)

dataset_x.append(x)

dataset_y.append(y)

return torch.LongTensor(dataset_x), torch.LongTensor(dataset_y)

#建立模型

def build_model(vocab, char_dim):

model = LanguageModel(char_dim, vocab)

return model

#文本生成测试代码

def generate_sentence(openings, model, vocab, window_size):

reverse_vocab = dict((y, x) for x, y in vocab.items())

model.eval()

with torch.no_grad():

pred_char = ""

#生成了换行符,或生成文本超过30字则终止迭代

while pred_char != "\n" and len(openings) <= 30:

openings += pred_char

x = [vocab.get(char, vocab["" ]) for char in openings[-window_size:]]

x = torch.LongTensor([x]) # 以一个数据作为batch传进去

if torch.cuda.is_available():

x = x.cuda()

y = model(x)[0][-1]

index = sampling_strategy(y)

pred_char = reverse_vocab[index]

return openings

def sampling_strategy(prob_distribution):

if random.random() > 0.1:

strategy = "greedy"

else:

strategy = "sampling"

if strategy == "greedy":

return int(torch.argmax(prob_distribution))

elif strategy == "sampling":

prob_distribution = prob_distribution.cpu().numpy()

return np.random.choice(list(range(len(prob_distribution))), p=prob_distribution)

#计算文本ppl

"""困惑度是序列预测概率的倒数的几何平均值。

如果模型对词序列的预测越准确,困惑度就越低。

"""

def calc_perplexity(sentence, model, vocab, window_size):

prob = 0

model.eval()

with torch.no_grad():

for i in range(1, len(sentence)):

start = max(0, i - window_size)

window = sentence[start:i]

x = [vocab.get(char, vocab["" ]) for char in window]

x = torch.LongTensor([x])

target = sentence[i]

target_index = vocab.get(target, vocab["" ])

if torch.cuda.is_available():

x = x.cuda()

pred_prob_distribute = model(x)[0][-1]

target_prob = pred_prob_distribute[target_index]

prob += math.log(target_prob, 10)

return 2 ** (prob * ( -1 / len(sentence)))

def train(corpus_path, save_weight=True):

epoch_num = 10 #训练轮数

batch_size = 64 #每次训练样本个数

train_sample = 50000 #每轮训练总共训练的样本总数

char_dim = 256 #每个字的维度

window_size = 10 #样本文本长度

vocab = build_vocab("vocab.txt") #建立字表

corpus = load_corpus(corpus_path) #加载语料

model = build_model(vocab, char_dim) #建立模型

if torch.cuda.is_available():

model = model.cuda()

optim = torch.optim.Adam(model.parameters(), lr=0.01) #建立优化器

print("文本词表模型加载完毕,开始训练")

for epoch in range(epoch_num):

model.train()

watch_loss = []

for batch in range(int(train_sample / batch_size)):

x, y = build_dataset(batch_size, vocab, window_size, corpus) #构建一组训练样本

if torch.cuda.is_available():

x, y = x.cuda(), y.cuda()

optim.zero_grad() #梯度归零

loss = model(x, y) #计算loss

loss.backward() #计算梯度

optim.step() #更新权重

watch_loss.append(loss.item())

print("=========\n第%d轮平均loss:%f" % (epoch + 1, np.mean(watch_loss)))

sentence1 = generate_sentence("让他在半年之前,就不能做出", model, vocab, window_size)

print(sentence1, "ppl值:", calc_perplexity(sentence1, model, vocab, window_size))

sentence2 = generate_sentence("李慕站在山路上,深深的呼吸", model, vocab, window_size)

print(sentence2, "ppl值:", calc_perplexity(sentence2, model, vocab, window_size))

if not save_weight:

return

else:

base_name = os.path.basename(corpus_path).replace("txt", "pth")

model_path = os.path.join("model", base_name)

torch.save(model.state_dict(), model_path)

return

if __name__ == "__main__":

# build_vocab_from_corpus("corpus/all.txt")

train("corpus.txt", False)

训练过程展示

=========

第1轮平均loss:4.392599

让他在半年之前,就不能做出了一个人,他们的人影,说道:“你曾经 ppl值: 1.9966701716231285

李慕站在山路上,深深的呼吸,说道:“你们比我们的的人,你们怎么 ppl值: 2.191639233704626

=========

第2轮平均loss:4.020655

让他在半年之前,就不能做出一样。梅大人看着李慕,说道:“你们这 ppl值: 1.9009707069137618

李慕站在山路上,深深的呼吸口气,说道:“何事?”李慕道:“你们 ppl值: 1.8455512776830252

=========

第3轮平均loss:3.935250

让他在半年之前,就不能做出什么事情,就算是一个人,你们的话,你 ppl值: 2.001999826232699

李慕站在山路上,深深的呼吸的,说道:“你们的修为,你们的修为, ppl值: 1.9896320594914976

=========

第4轮平均loss:3.886380

让他在半年之前,就不能做出来,他们的修行者,你们的修行者,你们 ppl值: 1.9183123621654121

李慕站在山路上,深深的呼吸,说道:“你们的身体,我们的修行者, ppl值: 1.9155974694788018

=========

第5轮平均loss:3.869880

让他在半年之前,就不能做出火,李慕也不会有危险,李慕也不会有危 ppl值: 2.1028747398858734

李慕站在山路上,深深的呼吸了口气,说道:“我不是你们的身份,你 ppl值: 1.8643938342098927

=========

第6轮平均loss:3.834472

让他在半年之前,就不能做出来,他们相比,他们就是一个人迹,他们 ppl值: 2.185777249537648

李慕站在山路上,深深的呼吸,说道:“你们一样。”李慕看着李慕, ppl值: 1.8768523224392026

=========

第7轮平均loss:3.825364

让他在半年之前,就不能做出一个无数,李慕的身体,李慕的身影,说 ppl值: 1.9390962034912358

李慕站在山路上,深深的呼吸,说道:“你们的,还是我们的事情,我 ppl值: 1.904831412083304

=========

第8轮平均loss:3.808068

让他在半年之前,就不能做出了一个铜镜,李慕又走到,李慕又走到, ppl值: 1.9291944683376374

李慕站在山路上,深深的呼吸,说道:“你们的蛇妖,你们也不是,还 ppl值: 2.2240269549096894

=========

第9轮平均loss:3.808428

让他在半年之前,就不能做出了一个,打算是他犯下了,他们的修为, ppl值: 2.108446855075013

李慕站在山路上,深深的呼吸,说道:“你们,我们就是我们的,你们 ppl值: 2.0873199238763656

=========

第10轮平均loss:3.798536

让他在半年之前,就不能做出了一个,我们也不会到了,我们也不会来 ppl值: 2.0313443842526797

李慕站在山路上,深深的呼吸,说道:“你们一定要不是你们问你们的 ppl值: 2.105591155276767