@余凯_西二旗民工 [SVM for Beginners]: an SVM in 5 Steps

A #translation# into code of Prof. Yu Kai's (余凯) recipe.

An earlier post of mine: Binary SVM Classification, a MATLAB Implementation (二分类SVM方法Matlab实现)

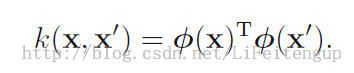

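I implemented it a few days ago. Although this is a Linear-SVM, it can also act as a kernel SVM whenever an explicit mapping function φ is available: map every sample x to φ(x) first, then train the linear SVM on the mapped features.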
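A minimal sketch of what "having a mapping function" means, assuming a hypothetical degree-2 polynomial map `phi` for 2-D inputs (it is not part of the original code). Each row [x1 x2] is expanded to [x1 x2 x1^2 x1*x2 x2^2]; a hyperplane in that 5-D space is a quadratic curve in the original plane. Note this is an explicit feature map, not the kernel trick: the features are materialized, so it only works when φ is small enough to compute directly.

```matlab
% Hypothetical explicit degree-2 feature map for 2-D inputs (illustration only).
% Each row [x1 x2] becomes [x1 x2 x1^2 x1*x2 x2^2].
phi = @(X) [X, X(:,1).^2, X(:,1).*X(:,2), X(:,2).^2];

X = [1 2; 3 4; -1 0.5];   % three 2-D points, one per row
Phi = phi(X);             % 3 x 5 matrix of mapped features
disp(Phi)

% Training would then be:  w = svm5step(Phi, y, lambda);
% and a new point x (row vector) is classified by sign([1, phi(x)] * w).
```

The rest of the pipeline is unchanged: `svm5step` only ever sees a data matrix, so the nonlinearity lives entirely in `phi`.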

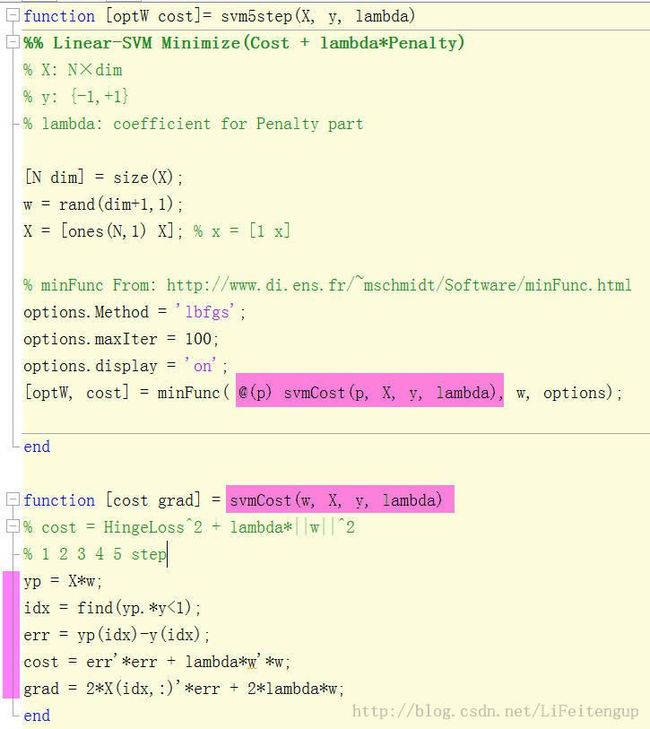

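```matlab
function [optW, cost] = svm5step(X, y, lambda)
%% Linear-SVM: minimize Cost + lambda*Penalty
% X: N x dim data matrix, one sample per row
% y: N x 1 label vector with entries in {-1, +1}
% lambda: coefficient of the penalty term
% By LiFeiteng

[N, dim] = size(X);
w = rand(dim+1, 1);       % random initial weights; w(1) is the bias
X = [ones(N,1) X];        % prepend a column of ones: x = [1 x]

% minFunc from: http://www.di.ens.fr/~mschmidt/Software/minFunc.html
options.Method = 'lbfgs';
options.maxIter = 100;
options.display = 'on';

[optW, cost] = minFunc(@(p) svmCost(p, X, y, lambda), w, options);
end

function [cost, grad] = svmCost(w, X, y, lambda)
% cost = sum of squared hinge losses + lambda*||w||^2
% (note: the bias w(1) is penalized as well)
yp = X*w;                             % step 1: predictions
idx = (yp.*y < 1);                    % step 2: samples inside the margin or misclassified
err = yp(idx) - y(idx);               % step 3: residuals; (1 - y*yp)^2 == (yp - y)^2 because y^2 == 1
cost = err'*err + lambda*(w'*w);      % step 4: squared hinge loss + penalty
grad = 2*X(idx,:)'*err + 2*lambda*w;  % step 5: gradient of the cost
end
```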
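Why step 3 can use the plain residual `yp - y` for a squared hinge loss: with labels $y_i \in \{-1,+1\}$ we have $y_i^2 = 1$, so on the active set (samples with $y_i \hat y_i < 1$) the hinge term equals a squared residual. Here $X$ already contains the leading column of ones, so $w_1$ is the bias and is included in the penalty, exactly as in `svmCost`:

$$
\begin{aligned}
J(w) &= \sum_{i=1}^{N} \max\bigl(0,\; 1 - y_i x_i^\top w\bigr)^2 + \lambda \lVert w \rVert^2 \\
\bigl(1 - y_i \hat y_i\bigr)^2 &= y_i^2 \bigl(y_i - \hat y_i\bigr)^2 = \bigl(\hat y_i - y_i\bigr)^2, \qquad \hat y_i = x_i^\top w \\
J(w) &= \sum_{i \in \mathcal{A}} \bigl(x_i^\top w - y_i\bigr)^2 + \lambda \lVert w \rVert^2, \qquad \mathcal{A} = \{\, i : y_i x_i^\top w < 1 \,\} \\
\nabla J(w) &= 2\, X_{\mathcal{A}}^\top \bigl(X_{\mathcal{A}} w - y_{\mathcal{A}}\bigr) + 2 \lambda w
\end{aligned}
$$

Because the squared hinge is continuously differentiable (each term and its derivative vanish at $y_i x_i^\top w = 1$), this gradient is valid everywhere, which is what makes a smooth quasi-Newton method such as L-BFGS a reasonable fit.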

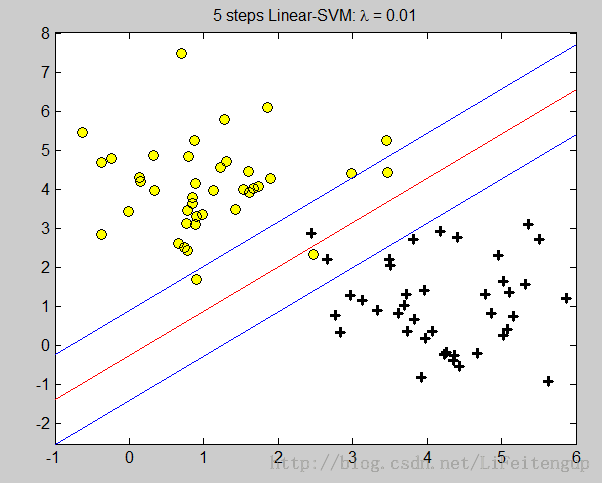
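To confirm that the analytic gradient in `svmCost` agrees with its cost, a central finite-difference check is a cheap sanity test. `svmCost` is a local function of `svm5step.m` and cannot be called from outside that file, so this sketch re-creates the same five steps as anonymous functions (`costFn`, `gradFn`, and the random test data are assumptions made for the check, not part of the original code).

```matlab
% Finite-difference check of the svmCost gradient (standalone re-creation).
rng(0);
N = 20; dim = 2; lambda = 0.01;
X = [ones(N,1), randn(N, dim)];        % bias column already prepended, as in svm5step
y = sign(randn(N, 1)); y(y == 0) = 1;  % labels in {-1, +1}
w = randn(dim + 1, 1);

active = @(w) (X*w).*y < 1;                                                      % steps 1-2
costFn = @(w) sum((X(active(w),:)*w - y(active(w))).^2) + lambda*(w'*w);          % steps 3-4
gradFn = @(w) 2*X(active(w),:)'*(X(active(w),:)*w - y(active(w))) + 2*lambda*w;   % step 5

% Central differences, one coordinate at a time.
h = 1e-6;
numGrad = zeros(size(w));
for j = 1:numel(w)
    e = zeros(size(w)); e(j) = h;
    numGrad(j) = (costFn(w + e) - costFn(w - e)) / (2*h);
end

relErr = norm(numGrad - gradFn(w)) / max(norm(numGrad), eps);
fprintf('relative gradient error: %g\n', relErr);
```

A relative error many orders of magnitude below 1 indicates the gradient is consistent with the cost; a bug such as a missing factor of 2 would show up as an error of order 1.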

Test case:

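```matlab
clear
close all

x0 = [1 4]';    % center of class -1
x1 = [4 1]';    % center of class +1
n = 40;         % samples per class

% Draw n samples per class from unit-variance isotropic Gaussians
% (x + randn is equivalent to normrnd(x, 1) without needing the Statistics Toolbox).
X0 = repmat(x0, 1, n) + randn(2, n);
X1 = repmat(x1, 1, n) + randn(2, n);

X = [X0 X1]';                                    % 2n x 2, one sample per row
y = [-ones(size(X0,2),1); ones(size(X1,2),1)];   % labels in {-1, +1}
save data X0 X1 X y

plot(X0(1,:), X0(2,:), 'ko', 'MarkerFaceColor', 'y', 'MarkerSize', 7);
hold on
plot(X1(1,:), X1(2,:), 'k+', 'LineWidth', 2, 'MarkerSize', 7);

lambda = 0.01;
w = svm5step(X, y, lambda)

% Decision boundary w(1) + w(2)*x1 + w(3)*x2 = 0  =>  x2 = k*x1 + b
k = -w(2)/w(3); b = -w(1)/w(3);
h = refline(k, b);   % draw a line with slope k and intercept b (Statistics and Machine Learning Toolbox)
set(h, 'Color', 'r')

% Margin lines: w(1) + w(2)*x1 + w(3)*x2 = -1 and = +1
b = -(w(1)+1)/w(3);
h = refline(k, b);
b = -(w(1)-1)/w(3);
h = refline(k, b);

title(['5 steps Linear-SVM: \lambda = ' num2str(lambda)])
```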
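The test above depends on minFunc. For readers without it, here is a sketch that swaps L-BFGS for plain fixed-step gradient descent on the same squared-hinge cost and then measures training accuracy with the sign of [1 x]·w. The step size `eta` and the iteration count are assumptions chosen for this synthetic data, not tuned values.

```matlab
% Fixed-step gradient descent on the same cost as svmCost (a stand-in for minFunc/L-BFGS).
rng(0);
n = 40;
X0 = repmat([1; 4], 1, n) + randn(2, n);   % class -1 around (1,4)
X1 = repmat([4; 1], 1, n) + randn(2, n);   % class +1 around (4,1)
X = [X0 X1]';
y = [-ones(n,1); ones(n,1)];
lambda = 0.01;

Xb = [ones(2*n,1), X];      % prepend the bias column, as svm5step does
w = zeros(3, 1);
eta = 2e-4;                 % assumed step size; must stay below ~2/(largest Hessian eigenvalue) or GD diverges
for it = 1:5000
    a = (Xb*w).*y < 1;                                   % active set (steps 1-2)
    grad = 2*Xb(a,:)'*(Xb(a,:)*w - y(a)) + 2*lambda*w;   % gradient (steps 3-5)
    w = w - eta*grad;
end

pred = sign(Xb*w);
acc = mean(pred == y);
fprintf('training accuracy: %.3f\n', acc);
```

Gradient descent needs a hand-picked step size and far more iterations than L-BFGS, which is a good reason to use minFunc as the original does; the sketch only trades speed for having no external dependency.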