ORACLE迁移GP实践

1 ORACLE_HOME /home/oracle/client_1 2 ORACLE_DSN dbi:Oracle:host=192.168.11.1;sid=orcl 3 ORACLE_USER manager 4 ORACLE_PWD tiger 5 SCHEMA test 6 TYPE TABLE VIEW PACKAGE COPY 7 PG_NUMERIC_TYPE 0 8 PG_INTEGER_TYPE 1 9 DEFAULT_NUMERIC float 10 SKIP fkeys pkeys ukeys indexes checks 11 NLS_LANG AMERICAN_AMERICA.UTF8 12 PG_DSN dbi:Pg:dbname=easyetl;host=127.0.0.1;port=5432 13 PG_USER easyetl 14 PG_PWD password 15 OUTPUT output.sql

1-4 配置源端Oracle的信息

5 oracle的schema取值

6 准备转化的数据类型,也包括导数据的copy命令

7-9 用来转化oracle的number(p,s)到PG的类型:

7表示是否使用PG内部的数据类型,0表示不使用,1表示使用

8表示在7设置为0时,如果8设置为1,则类型number(p)的定义变更为integer;如果8设置为0,则number(p)也转化为numeric(p)

9表示是8设置为1的时候,类型number转化为float,如果8设置为0,则9不起作用.

简单的设置,如果7,8均设置为0,那么number(p) --> numeric(p),number(p,s) --> numeric(p,s), number --> numeric

10 约束们是否需要创建

11 语言选择

12-14 配置目的端PG(GP亦可),如果这三行信息不配置,也没关系,可以生成oracle转化为PG的脚本

15 生成文件

迁移中出现的情况:

(1) 表可以完全迁移过去

(2) 视图里面如果没有起别名的话,也需要手动添加别名

(3) package需要手动修改.注:ver13版本的package生成需要把perform/decode屏蔽掉,因为这二点未做好,模块为PLSQL.pm.

当然package转化不仅仅只是这部分东西,主要的有:

a 别名需要显式写出

b 隐式转化要显式写出

c 函数的差异(GP官方有一套Oracle的函数实现,基本上够用)

d oracle里面非标准写法,如: a left join b写成 a,b where a.xx=b.xx(+)

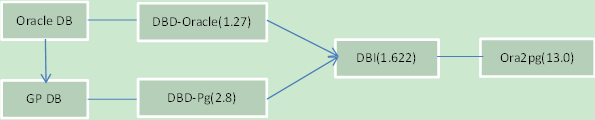

2. Oracle的数据迁移到GP的实现过程

#!/bin/bash if [ $# -lt 3 ];then echo 'Usage `basename $0` pipe tablename control' exit 1 fi pipename=$1 tablename=$2 control=$3 condition=$4 mknod $pipename p /root/software/sqluldr2 user=manager/tigerd@orcl query="select * from $tablename where $condition" field=0x7c file=$pipename

charset=utf8 text=CSV safe=yes persql="begin dbms_lock.sleep(2); end;" & gpload -f $control -l gpload.log rm -rf $pipename

ora2gp.sh --生成control文件,包括管道文件名称.然后调用上述进程实现载入过程.

#!/usr/bin/env python #-*- coding:utf-8 -*- import yaml import subprocess import sys import os # Script starts from here paramnum=len(sys.argv) datadt=20140820 condition="1=1" tplpath="/root/template/" pipepath="/tmp/pipe" batname="/root/script/dataload.sh" if (paramnum == 1): print 'Usage:'+ sys.argv[0]+' tablename ' sys.exit() elif(paramnum == 2): tablename=sys.argv[1] elif(paramnum == 3): tablename=sys.argv[1] datadt=sys.argv[2] elif(paramnum == 4): tablename=sys.argv[1] datadt=sys.argv[2] condition=sys.argv[3] else: print 'Usage:'+ sys.argv[0]+' tablename datadt condition. (datadt condition is optional)!' sys.exit() pid=os.getpid() pipename=pipepath+str(pid) f = open(tplpath+"gp_template_load.ctl") dataMap = yaml.load(f) f.close() dataMap['GPLOAD']['INPUT'][0]['SOURCE']['FILE'][0]=pipename dataMap['GPLOAD']['OUTPUT'][0]['TABLE']=tablename dataMap['GPLOAD']['INPUT'][6]['ERROR_TABLE']=tablename+'_err' filename=tplpath+tablename+'.ctl' f = open(filename,'w') yaml.dump(dataMap,f) f.close() handle=subprocess.Popen([batname,pipename,tablename,filename,condition]) handle.communicate()

VERSION: 1.0.0.1

DATABASE: dw

USER: manager

HOST: gp

PORT: 5432

GPLOAD:

INPUT:

- SOURCE:

LOCAL_HOSTNAME:

- gp

FILE:

- /tmp/mypipe

PORT_RANGE: [8001,9000]

- FORMAT: csv

- DELIMITER: ','

- QUOTE: '"'

- HEADER: true

- ERROR_LIMIT: 10000

- ERROR_TABLE: tablename_err

OUTPUT:

- TABLE: tablename

- MODE: INSERT

PRELOAD:

- TRUNCATE: true

后续操作:

上面的程序可以当作同步使用,但是真正的在生产使用就会有点不太让人放心.

原因有三:

(1)dataload.sh里面的sqluldr是放在后台处理的.当sqluldr出现异常,gpload可能会等待.当gpload出现异常的时候,sqluldr还是会载出文件.而且dataload.sh是fork出二个进程,当进程

出现异常,还需要手动寻找,kill掉.

(2)日常记录与处理.

(3)oracle与gp的表结构要严格一致才行.

基于此,写了可以统一处理fork的进程,增加了获取gp column list,加上日志处理这几部分.

oraconf文件格式:

#CONFNAME:USER^PASS^TNSNAME

gpconf文件格式:

#host:port:database:user:passwd

control文件看上面以及官方文档吧.

#!/bin/sh . greenplum_loaders_path.sh . setenv if [ $# -lt 4 ];then echo "Usage : `basename $0` confname etl_date mode src_tbname tgt_tbname " echo " confname : configuration at ${PWD}/conf/oraconf " echo " etl_date : YYYYMMDD " echo " mode : 1 truncate; 2 append" echo " src_tbname : oracle datasource tablename " echo " tgt_tbname(optional) : greenplum datasource tablename" exit 1 fi #trap the exception quit trap 'log_info "TERM/INTERRUPT(subprocess) close";close_subproc' INT TERM declare -a sublist function log_info() { DATETIME=`date +"%Y%m%d %H:%M:%S"` echo -e "S $DATETIME P[$$]: $*"| tee -a "$LOGPATH"/"$ETLDATE"/"$GPTABLE".log } function collect_subproc() { local index if [ ${#sublist} -eq 0 ];then index=0 else index=$[${#sublist}]+1 fi sublist[$index]=$1 } function close_subproc() { for subid in ${sublist[@]} do log_info "kill processid: $subid" kill $subid done } function parse_yaml() { local file=$1 local tablename=$2 local pipename=$3 local etldate=$4 sed -i -e "s/mypipe/"$pipename"/" -e "s/tablename_err/public."$tablename"_err/" -e "s/\<tablename\>/"$tablename"/" -e "s/etl_date/"$etldate"/" $file } if [ $(dirname $0) == '.' ];then PRIPATH=${PWD} else PRIPATH=$(dirname $0) fi TPLPATH="$PRIPATH"/template LOGPATH="$PRIPATH"/log CONFNAME=$1 ETLDATE=$2 MODE=$3 ORATABLE=$4 GPTABLE=$5 [ -z "$GPTABLE" ] && GPTABLE="$ORATABLE" [ ! -d "$LOGPATH"/"$ETLDATE" ] && mkdir -p "$LOGPATH"/"$ETLDATE" PIPENAME="P"$$"$GPTABLE" eval `grep "^$CONFNAME" "$PRIPATH"/conf/oraconf |awk -F':' '{print $2}'|awk -F'^' '{print "ORACLE_USER="$1";ORACLE_PASS="$2";ORACLE_SID="$3}'` eval $(eval `grep ^[^#] "$PRIPATH"/conf/gpconf |awk -F':' -v table=$GPTABLE '{printf("psql -h %s -p %d -U %s %s -tAc \047\\\d %s \047",$1,$2,$4,$3,table)}'`|awk -F"|" '{cmd=cmd$1","}END{print "collist="cmd}') collist=`echo $collist|sed "s/,$//g"` echo >> "$LOGPATH"/"$ETLDATE"/"$GPTABLE".log #create and modify template for gpload use log_info "create template "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl." cp "$TPLPATH"/gp_template_load_"$MODE".ctl "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl if [ $? -ne 0 ]; then log_info "create template "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl failed." exit 2 fi parse_yaml "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl $GPTABLE $PIPENAME $ETLDATE if [ $? -ne 0 ]; then log_info "modify template "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl failed." exit 2 fi #create pipename log_info "create pipe /tmp/"$PIPENAME"." mknod /tmp/"$PIPENAME" p if [ $? -ne 0 ];then log_info "create pipe failed!" exit 3 fi gpload -f "$LOGPATH"/"$ETLDATE"/"$GPTABLE".ctl -l "$LOGPATH"/"$ETLDATE"/"$GPTABLE".log & collect_subproc $! log_info "unload sql:select $collist from $ORATABLE" sqluldr2 user="$ORACLE_USER"/"$ORACLE_PASS"@"$ORACLE_SID" query="select $collist from $ORATABLE" head=Yes field=0x7c file=/tmp/"$PIPENAME" charset=gb18030 text=CSV safe=yes presql="begin dbms_lock.sleep(5); end;" log=+"$LOGPATH"/"$ETLDATE"/"$GPTABLE".log & collect_subproc $! wait if [ $? -ne 0 ];then log_info "$GPTABLE load failed!" else log_info "$GPTABLE load succ!" fi log_info "rm -rf /tmp/"$PIPENAME"" rm -rf /tmp/"$PIPENAME" if [ $? -ne 0 ];then log_info "rm /tmp/"$PIPENAME" failed." exit 4 fi