Building a Load-Balanced High-Availability Cluster with LVS + Keepalived

Use the following command to check whether the kernel already supports the LVS ipvs modules:

[root@www ~]# modprobe -l|grep ipvs

kernel/net/netfilter/ipvs/ip_vs.ko

kernel/net/netfilter/ipvs/ip_vs_rr.ko

kernel/net/netfilter/ipvs/ip_vs_wrr.ko

kernel/net/netfilter/ipvs/ip_vs_lc.ko

kernel/net/netfilter/ipvs/ip_vs_wlc.ko

kernel/net/netfilter/ipvs/ip_vs_lblc.ko

kernel/net/netfilter/ipvs/ip_vs_lblcr.ko

kernel/net/netfilter/ipvs/ip_vs_dh.ko

kernel/net/netfilter/ipvs/ip_vs_sh.ko

kernel/net/netfilter/ipvs/ip_vs_sed.ko

kernel/net/netfilter/ipvs/ip_vs_nq.ko

kernel/net/netfilter/ipvs/ip_vs_ftp.ko

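Entries like these (two of them were highlighted in blue in the original post) show that the kernel supports IPVS by default.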
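On newer systems `modprobe -l` may not be available, so here is a minimal sketch that looks for the same module files directly on disk. The function name `list_ipvs_modules` is made up for this example, and it assumes the modules live under /lib/modules/<kernel release>.

```shell
#!/bin/sh
# list_ipvs_modules DIR
# Print every IPVS module file (ip_vs*.ko, possibly compressed) found under DIR.
# Prints nothing if DIR does not exist or contains no IPVS modules.
list_ipvs_modules() {
    find "$1" -type f -name 'ip_vs*.ko*' 2>/dev/null | sort
}
```

A typical call would be `list_ipvs_modules "/lib/modules/$(uname -r)"`; empty output suggests the running kernel ships no IPVS modules.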

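Now we can install ipvsadm.

There are two ways to do this.

Option 1: install from the source package

make

make install

This step may fail because the build cannot find the matching kernel source tree.

Fix: ln -s /usr/src/kernels/2.6.32-279.el6.x86_64/ /usr/src/linux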
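The path in the fix is specific to one kernel release. As a hedged sketch, the link can be derived from the running kernel instead. The helper names `kernel_src_dir` and `link_kernel_src` are hypothetical, and the sketch assumes the kernel-devel package has placed the sources under /usr/src/kernels.

```shell
#!/bin/sh
# kernel_src_dir RELEASE
# Print the source directory expected for a given kernel release string.
kernel_src_dir() {
    printf '/usr/src/kernels/%s\n' "$1"
}

# link_kernel_src RELEASE [TARGET]
# Create the symlink (default target: /usr/src/linux) only if the source
# directory exists and nothing is already present at the target path.
link_kernel_src() {
    src=$(kernel_src_dir "$1")
    target=${2:-/usr/src/linux}
    if [ ! -d "$src" ]; then
        echo "missing $src (is kernel-devel installed?)" >&2
        return 1
    fi
    [ -e "$target" ] || ln -s "$src" "$target"
}
```

Run as root, `link_kernel_src "$(uname -r)"` would create the link for the running kernel.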

Option 2: install from the RPM package

Red Hat's LoadBalancer repository provides this package.

[root@www ~]# yum list |grep ipvs

ipvsadm.x86_64 1.25-10.el6 LoadBalancer

[root@www ~]# yum install ipvsadm.x86_64

Loaded plugins: product-id, subscription-manager

Updating certificate-based repositories.

Unable to read consumer identity

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package ipvsadm.x86_64 0:1.25-10.el6 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

=========================================================================================================================================

Package Arch Version Repository Size

=========================================================================================================================================

Installing:

ipvsadm x86_64 1.25-10.el6 LoadBalancer 41 k

Transaction Summary

=========================================================================================================================================

Install 1 Package(s)

Total download size: 41 k

Installed size: 74 k

Is this ok [y/N]: y

Downloading Packages:

ipvsadm-1.25-10.el6.x86_64.rpm | 41 kB 00:00

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Installed products updated.

Verifying: ipvsadm-1.25-10.el6.x86_64 1/1

Installed:

ipvsadm.x86_64 0:1.25-10.el6

Complete!

[root@www ~]# ipvsadm --help

ipvsadm v1.25 2008/5/15 (compiled with popt and IPVS v1.2.1)

Usage:

ipvsadm -A|E -t|u|f service-address [-s scheduler] [-p [timeout]] [-M netmask]

ipvsadm -D -t|u|f service-address

ipvsadm -C

ipvsadm -R

ipvsadm -S [-n]

ipvsadm -a|e -t|u|f service-address -r server-address [options]

ipvsadm -d -t|u|f service-address -r server-address

ipvsadm -L|l [options]

ipvsadm -Z [-t|u|f service-address]

ipvsadm --set tcp tcpfin udp

ipvsadm --start-daemon state [--mcast-interface interface] [--syncid sid]

ipvsadm --stop-daemon state

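If you see this usage message, ipvsadm has been installed successfully.

Next, configure LVS. There are two ways to do it:

with ipvsadm or with piranha.

Method 1: ipvsadm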

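ipvsadm -A -t 192.168.1.250:80 -s rr -p 600    # add a virtual service on the VIP with round-robin scheduling; -p 600 keeps each client on the same node for 10 minutes

ipvsadm -a -t 192.168.1.250:80 -r 192.168.1.200:80 -g    # add a real server; -g selects DR (direct routing) mode

ipvsadm -a -t 192.168.1.250:80 -r 192.168.1.201:80 -g    # add a real server; -g selects DR (direct routing) mode

ifconfig eth0:0 192.168.1.250 netmask 255.255.255.0 up    # bring up the virtual IP

route add -host 192.168.1.250 dev eth0:0    # add a host route for the virtual IP

echo "1" > /proc/sys/net/ipv4/ip_forward    # enable IP forwarding; not required for DR, required for NAT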

[root@www ~]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.250:http rr

-> www.ha1.com:http Route 1 0 0

-> www.ha2.com:http Route 1 0 0
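When the number of real servers grows, typing one `ipvsadm -a` line per server gets tedious. The sketch below only prints the commands so they can be reviewed before being run. The function name `gen_dr_rules` is an assumption of this example; the scheduler, persistence timeout, and DR flag are copied from the commands above.

```shell
#!/bin/sh
# gen_dr_rules VIP PORT RIP...
# Print (do not execute) the ipvsadm commands for one virtual service in
# DR mode with round-robin scheduling and 600 s persistence.
gen_dr_rules() {
    vip=$1
    port=$2
    shift 2
    printf 'ipvsadm -A -t %s:%s -s rr -p 600\n' "$vip" "$port"
    for rip in "$@"; do
        printf 'ipvsadm -a -t %s:%s -r %s:%s -g\n' "$vip" "$port" "$rip" "$port"
    done
}
```

For the setup in this article, `gen_dr_rules 192.168.1.250 80 192.168.1.200 192.168.1.201` prints the three rule commands; piping that output to `sh` as root would apply them.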

For easier management, you can wrap these steps in a start/stop script:

#!/bin/sh

# description: Start LVS of Director server

VIP=192.168.1.250

RIP1=192.168.1.200

RIP2=192.168.1.201

. /etc/rc.d/init.d/functions

case "$1" in

start)

echo " start LVS of Director Server"

# set the Virtual IP Address and sysctl parameter

/sbin/ifconfig eth0:0 $VIP broadcast $VIP netmask 255.255.255.255 up

echo "1" >/proc/sys/net/ipv4/ip_forward

#Clear IPVS table

/sbin/ipvsadm -C

#set LVS

/sbin/ipvsadm -A -t $VIP:80 -s rr -p 600

/sbin/ipvsadm -a -t $VIP:80 -r $RIP1:80 -g

/sbin/ipvsadm -a -t $VIP:80 -r $RIP2:80 -g

#Run LVS

/sbin/ipvsadm

;;

stop)

echo "close LVS Directorserver"

echo "0" >/proc/sys/net/ipv4/ip_forward

/sbin/ipvsadm -C

/sbin/ifconfig eth0:0 down

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

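Method 2: configure LVS with piranha. You can also do the configuration through the piranha web interface.

First, install piranha:

yum install piranha

Once piranha is installed, the file /etc/sysconfig/ha/lvs.cf is created.

Edit this file: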

[root@www ha]# vim /etc/sysconfig/ha/lvs.cf

service = lvs

primary = 192.168.1.202

backup = 0.0.0.0

backup_active = 0

keepalive = 6

deadtime = 10

debug_level = NONE

network = direct

virtual server1 {

address = 192.168.1.250 eth0:0

# vip_nmask = 255.255.255.255

active = 1

load_monitor = none

timeout = 5

reentry = 10

port = http

send = "GET / HTTP/1.0\r\n\r\n"

expect = "HTTP"

scheduler = rr

protocol = tcp

# sorry_server = 127.0.0.1

server Real1 {

address = 192.168.1.200

active = 1

weight = 1

}

server Real2 {

address = 192.168.1.201

active = 1

weight = 1

}

}

[root@www ha]# /etc/init.d/pulse status

pulse (pid 3211) is running...

[root@www ha]# cat /proc/sys/net/ipv4/ip_forward

1

[root@www ha]# route add -host 192.168.1.250 dev eth0:0
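To cross-check the real servers defined in lvs.cf against what `ipvsadm` later reports, a small parser helps. This is a rough sketch that assumes the file follows the layout shown above (`server NAME {` blocks containing an `address = ...` line); `list_real_servers` is a name invented for this example.

```shell
#!/bin/sh
# list_real_servers FILE
# Print "NAME ADDRESS" for every "server NAME { ... }" block in an lvs.cf
# style file. The address line of the virtual server itself is skipped
# because it does not sit inside a "server" block.
list_real_servers() {
    awk '
        $1 == "server"                { in_server = 1; name = $2 }
        in_server && $1 == "address"  { print name, $3 }
        $1 == "}"                     { in_server = 0 }
    ' "$1"
}
```

Calling `list_real_servers /etc/sysconfig/ha/lvs.cf` on the file above should list Real1 and Real2 with their addresses.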

Real server configuration

VIP=192.168.1.250

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p

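The arp_ignore and arp_announce settings keep the real servers from answering or announcing ARP for the VIP.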
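Note that the `echo` commands only change the running kernel, while `sysctl -p` loads values from /etc/sysctl.conf. To make the ARP settings survive a reboot they need to be written to that file. Here is a minimal sketch of an idempotent updater; `set_sysctl_line` is a hypothetical helper, and the file path is passed in so it can be tried on a copy first.

```shell
#!/bin/sh
# set_sysctl_line KEY VALUE FILE
# Ensure FILE contains exactly one "KEY = VALUE" line: any existing line
# for KEY is dropped, all other lines are kept, and the new line is appended.
set_sysctl_line() {
    key=$1
    value=$2
    file=$3
    [ -f "$file" ] || : > "$file"
    awk -F= -v k="$key" '{ name = $1; gsub(/[ \t]/, "", name); if (name != k) print }' \
        "$file" > "$file.tmp" &&
    printf '%s = %s\n' "$key" "$value" >> "$file.tmp" &&
    mv "$file.tmp" "$file"
}
```

For example, `set_sysctl_line net.ipv4.conf.all.arp_ignore 1 /etc/sysctl.conf` followed by `sysctl -p` would persist and apply one of the four settings; the other three follow the same pattern.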

Verification

[root@www ha]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.250:http rr

-> www.ha1.com:http Route 1 0 5

-> www.ha2.com:http Route 1 0 5

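When we stop the httpd process on one node,

the director removes that node from the pool.

From then on, only the ha1 site is served.

Load balancing is now in place.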
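Whether a node has been removed can also be checked by counting the real-server lines in the `ipvsadm` listing. The sketch below reads the listing from standard input; `count_real_servers` is a name chosen for this example, and it assumes the output layout shown above, where each real server line starts with `->`.

```shell
#!/bin/sh
# count_real_servers
# Read "ipvsadm -L -n" style output on stdin and print how many real
# servers are listed. The "-> RemoteAddress:Port" header line is skipped.
count_real_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { n++ } END { print n + 0 }'
}
```

On the director, `ipvsadm -L -n | count_real_servers` should drop from 2 to 1 once the failed node has been removed.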

What remains is to make the two LVS directors highly available with keepalived, that is, by using VRRP.

Install keepalived:

[root@www keepalived]# rpm -Uvh ~/keepalived-1.2.7-5.x86_64.rpm

Configure keepalived.conf:

vrrp_instance VI_1 {

state MASTER # master

interface eth0

lvs_sync_daemon_interface eth1

virtual_router_id 50 # unique per VRRP instance, but identical on master and backup

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass uplooking

}

virtual_ipaddress {

192.168.1.250 # vip

}

}

virtual_server 192.168.1.250 80 {

delay_loop 20

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

protocol TCP

real_server 192.168.1.200 80 {

weight 1

TCP_CHECK {

connect_timeout 3

}

}

real_server 192.168.1.201 80 {

weight 1

TCP_CHECK {

connect_timeout 3

}

}

}
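The configuration above is for the master node. The backup node normally uses the same file with `state BACKUP` and a lower priority. As a sketch, the backup copy can be derived with sed. The function name `make_backup_conf` and the priority value 100 are assumptions of this example (any value below the master's 150 would do).

```shell
#!/bin/sh
# make_backup_conf FILE
# Print a copy of a master keepalived.conf with the VRRP state switched to
# BACKUP and the priority lowered to 100. Everything else, including
# virtual_router_id and the virtual_server block, is left unchanged.
make_backup_conf() {
    sed -e 's/^\([[:space:]]*state[[:space:]][[:space:]]*\)MASTER/\1BACKUP/' \
        -e 's/# master$/# backup/' \
        -e 's/^\([[:space:]]*priority[[:space:]][[:space:]]*\)[0-9][0-9]*/\1100/' \
        "$1"
}
```

For instance, `make_backup_conf keepalived.conf > keepalived.backup.conf` writes the backup variant, which can then be reviewed and copied to the second director.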

Full verification:

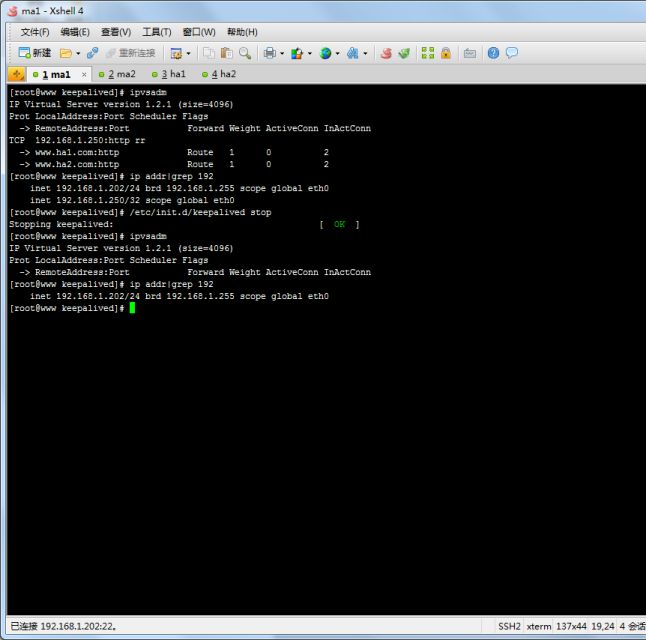

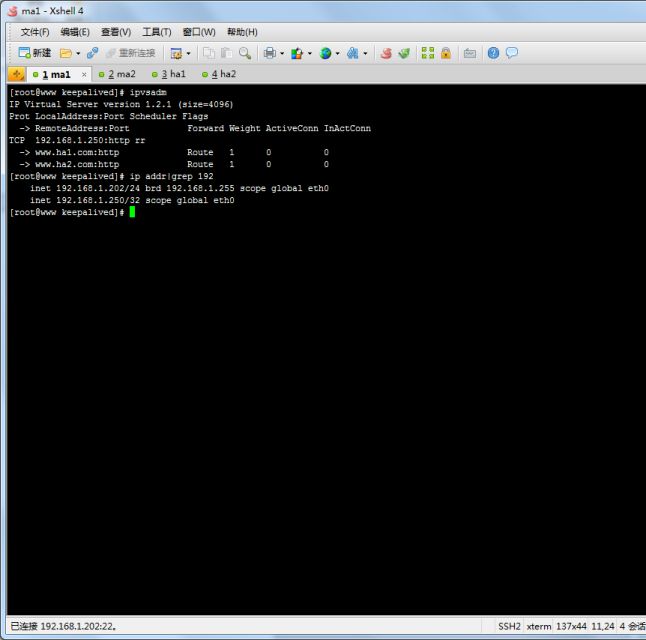

Step 1: take down one keepalived node

The screenshot above shows that the virtual IP is no longer on ma1.

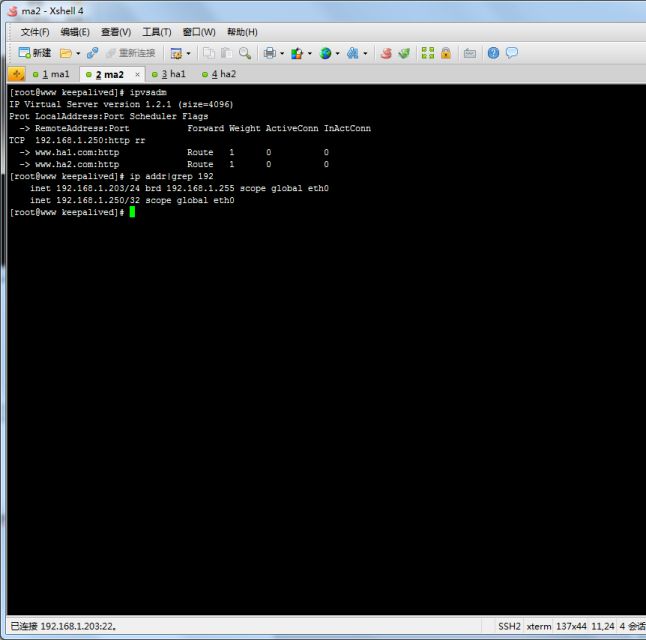

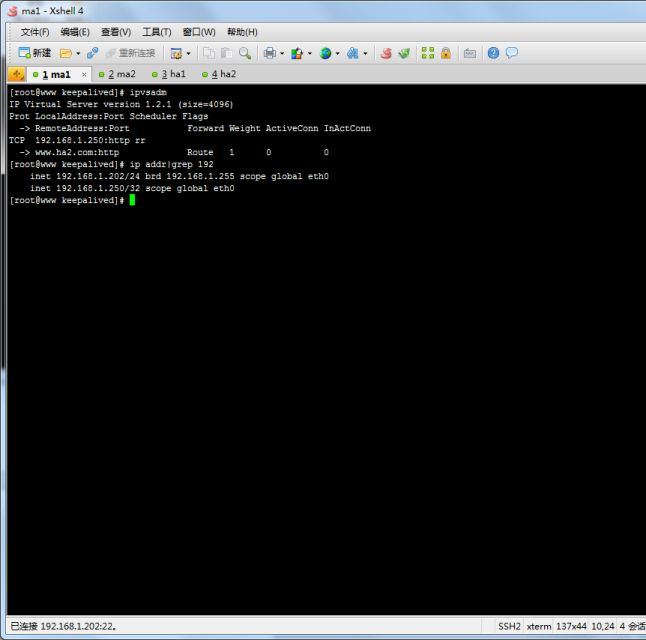

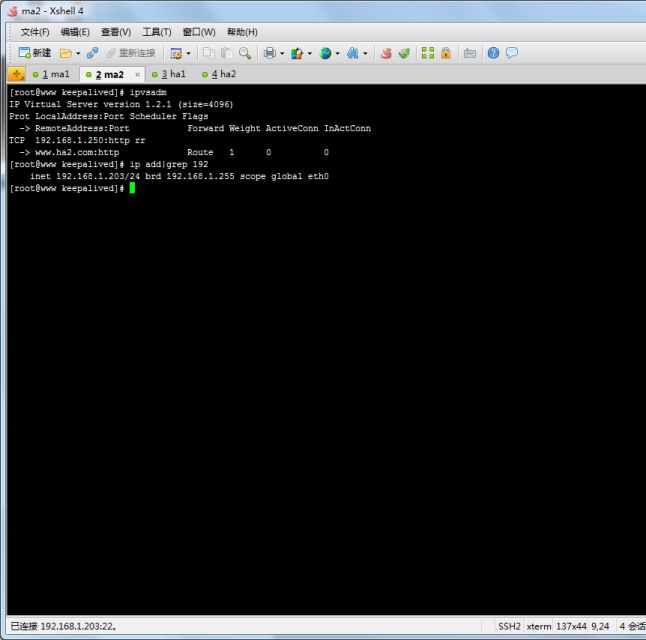

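Now let's look at ma2.

The screenshots above show that all processes on ma2 are running normally and that the virtual IP has migrated to it, which gives LVS high availability.

Load balancing still works as well.

Now that the fault has been handled, start keepalived on ma1 again.

The virtual IP moves back to ma1.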

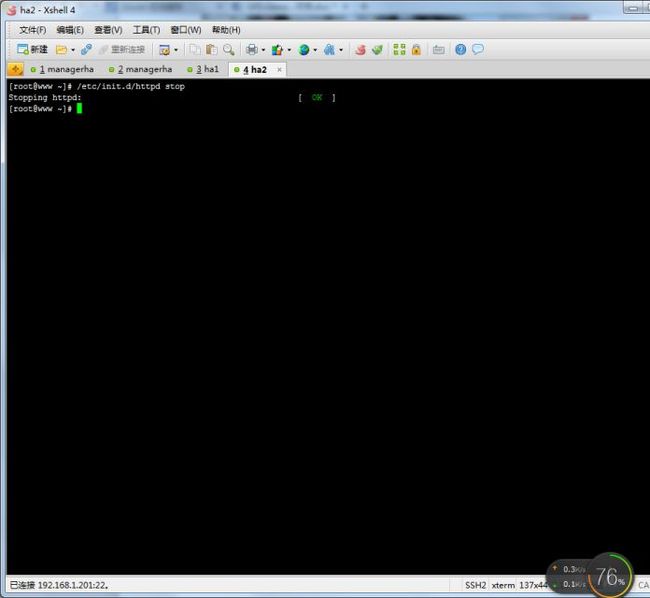

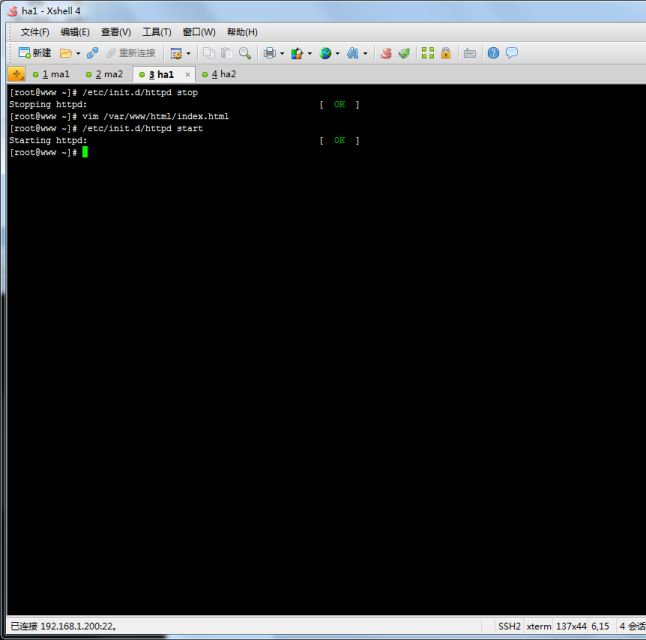
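The VIP movement can also be checked from a shell on each director instead of by eye. The following is a minimal sketch that reads `ip -4 addr show` output from standard input; `holds_vip` is a hypothetical helper name, and the address is compared exactly so that a prefix such as 192.168.1.25 does not match 192.168.1.250.

```shell
#!/bin/sh
# holds_vip VIP
# Read "ip -4 addr show" output on stdin. Exit status is 0 if VIP is
# configured on this host and 1 otherwise.
holds_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END          { exit !found }
    '
}
```

On ma1 or ma2, `ip -4 addr show | holds_vip 192.168.1.250 && echo "VIP is here"` reports which node currently owns the address.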

下面我们来看一下ha1,停掉ha1上httpd服务

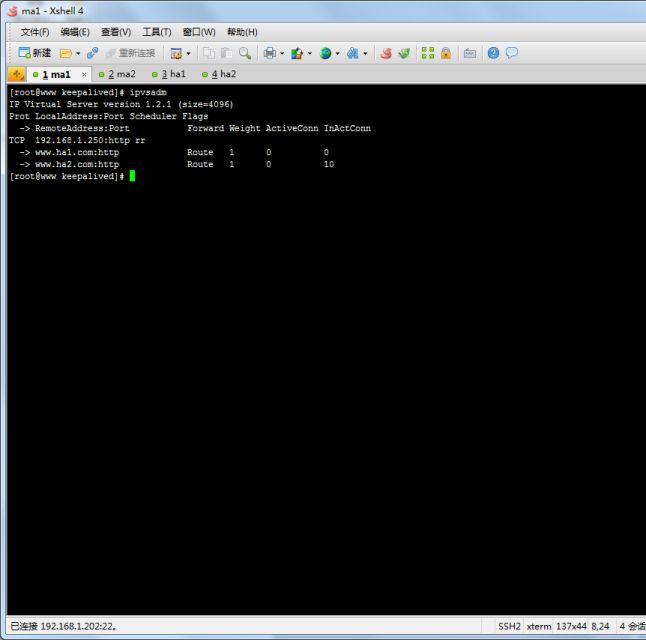

查看ma1

我们可以看到虚拟IP没有移动,但是在lvs中的ha1的路由表已经被踢出来了

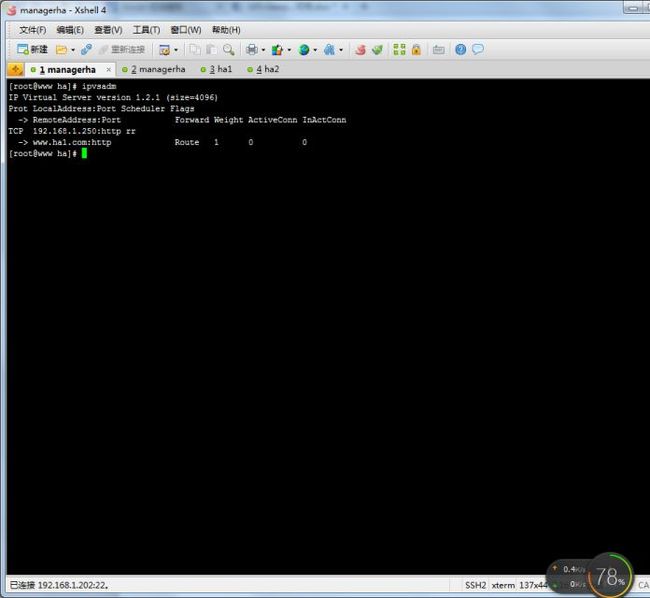

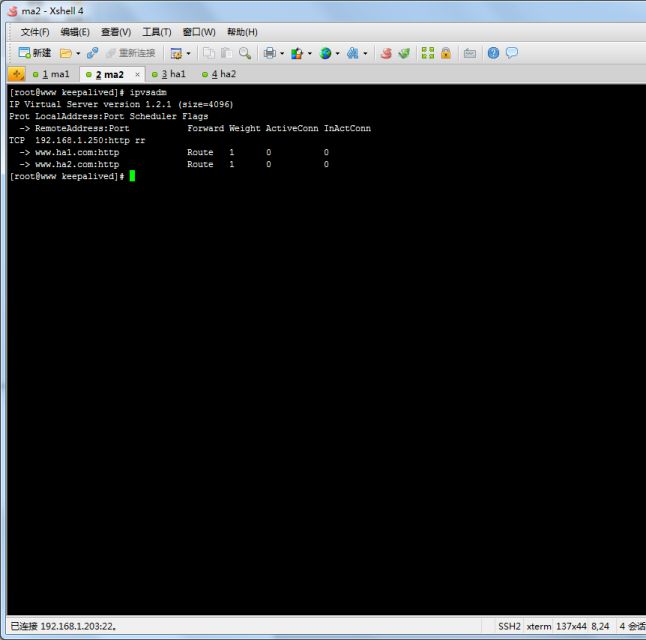

Now look at ma2:

Here too the virtual IP has not moved over, but ha1's entry has been removed from the LVS table.

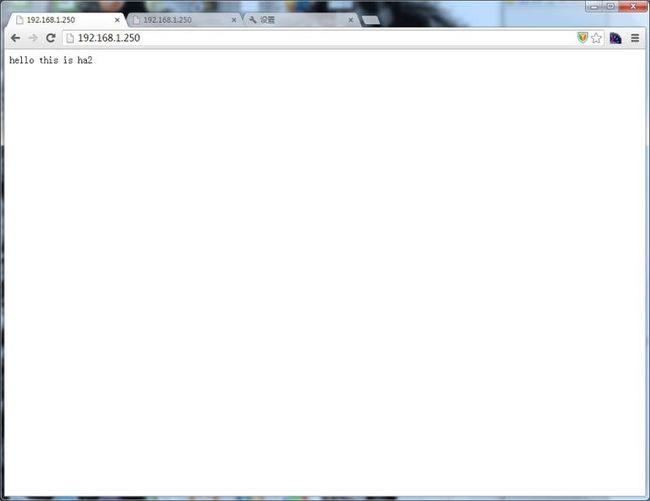

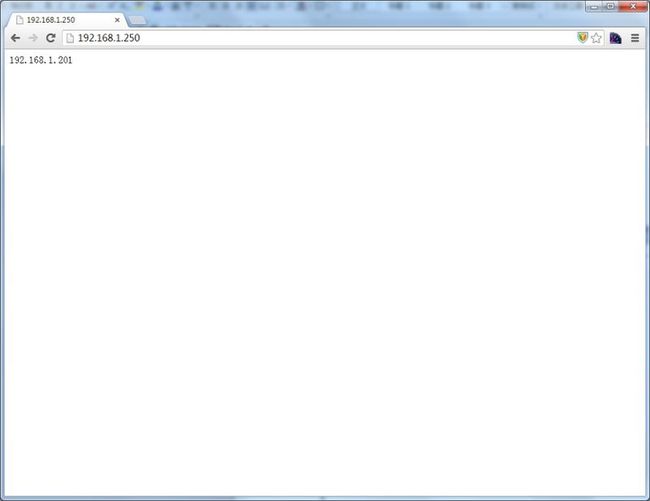

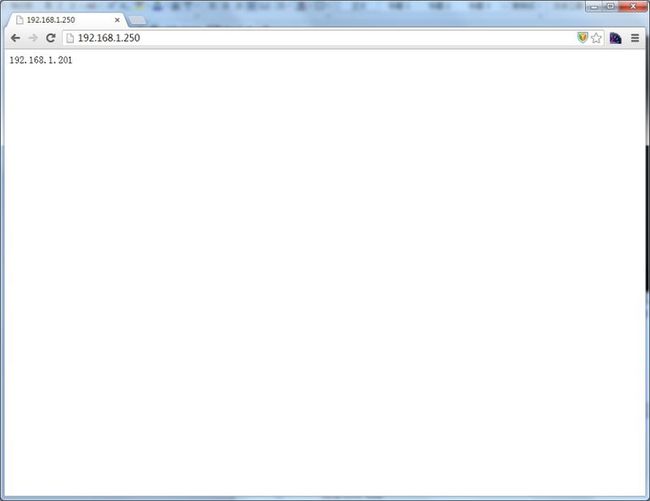

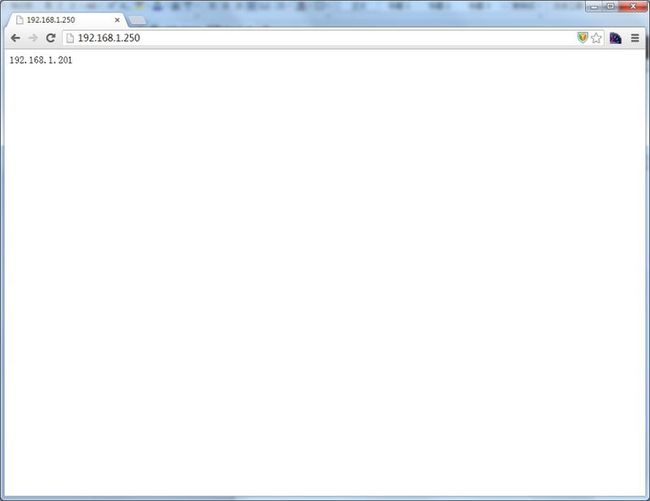

Now browse to the web site:

only the ha2 web page is served.

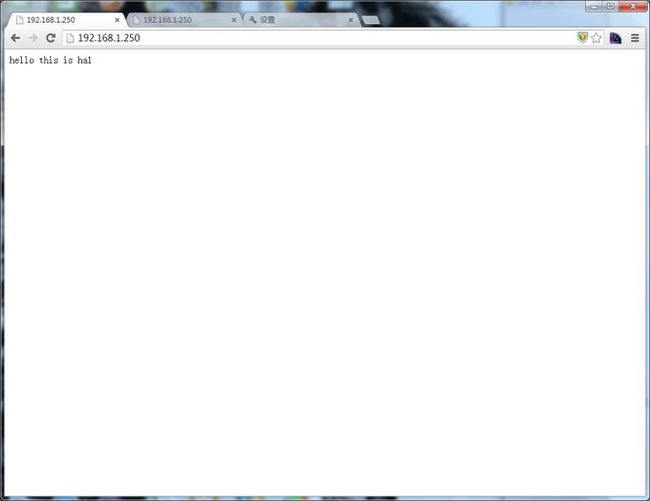

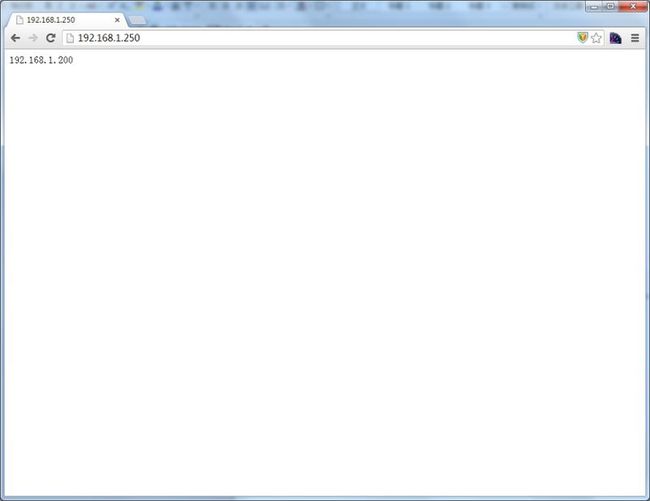

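Now start the web service on ha1 again.

On both ma1 and ma2, ha1's entry is automatically added back to the LVS table.

Browse to the web page again.

Verification complete.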

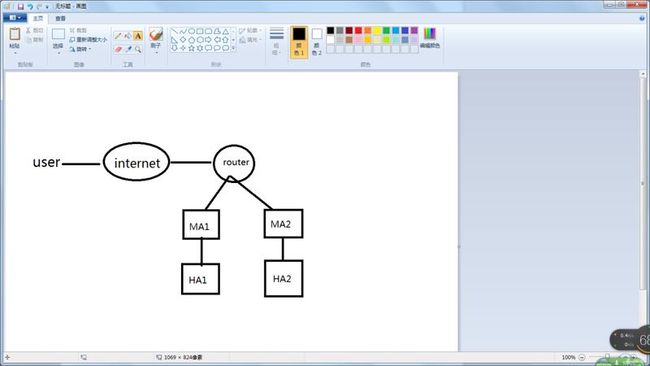
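The browser checks above can be repeated from the command line by tallying which backend answered each request. This sketch assumes each web server returns a single line naming itself (for example `ha1` or `ha2`); `tally_backends` is a name made up for this example. If persistence such as `-p 600` is in effect, one client may keep hitting the same server, so the tally would then show a single backend.

```shell
#!/bin/sh
# tally_backends
# Read one response per line on stdin and print "RESPONSE COUNT" for each
# distinct response, sorted by response text.
tally_backends() {
    sort | uniq -c | awk '{ print $2, $1 }'
}
```

A possible run would be `for i in 1 2 3 4; do curl -s http://192.168.1.250/; done | tally_backends`, executed from a client machine rather than from a director or real server.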

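Summary

Network structure

Cluster overview:

1. LVS provides load balancing and high availability for the web tier.

2. keepalived provides high availability for the LVS nodes.

Verification results:

1. When the keepalived process on either LVS node is stopped, the virtual IP migrates to the other node, while both web servers keep sharing the load. This gives the LVS nodes high availability.

2. When either web server is stopped, the virtual IP does not move, but LVS removes that server's entry from its table. This gives the web servers high availability.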