Getting Started with Hadoop, Part 10: Building a Distributed Hadoop Cluster

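1. Create the template system:

See the previous article. This example uses Ubuntu 10.10. The initial user is hadoop, the password is dg, and the hostname is hadoop-dg.

1) Unpack the JDK and Hadoop, and add their bin directories to the PATH environment variable: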

jdk1.7.0_17 hadoop-1.1.2
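As a rough sketch of what adding the bin directories to the environment might look like, the lines below could be appended to ~/.bashrc. The install locations are assumptions (both archives unpacked into the hadoop user's home directory), and `HADOOP_DIR` is a variable name chosen here for illustration only.

```shell
# Assumed install locations; adjust to wherever the archives were unpacked.
export JAVA_HOME="$HOME/jdk1.7.0_17"
HADOOP_DIR="$HOME/hadoop-1.1.2"   # hypothetical helper variable

# Put the JDK and Hadoop launchers on the command search path.
export PATH="$JAVA_HOME/bin:$HADOOP_DIR/bin:$PATH"
```

After opening a new shell, `which java` and `which hadoop` should point into those two directories if the paths are right.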

2) Configure %hadoop%/conf/hadoop-env.sh:

export JAVA_HOME=/path/to/jdk

3) Install OpenSSH; do not configure passwordless login yet:

openssh-client openssh-server openssh-all

4) Configure the NIC's static IP, the hostname, and hosts:

#Check the NIC name
ifconfig
#Configure the NIC parameters
sudo gedit /etc/network/interfaces

auto eth0
iface eth0 inet static
address 192.168.1.251
gateway 192.168.1.1
netmask 255.255.255.0

#Restart networking
sudo /etc/init.d/networking restart

#Change the hostname
sudo gedit /etc/hostname

hadoop-dg

#Change hosts
sudo gedit /etc/hosts

127.0.0.1 hadoop-dg
192.168.1.251 hadoop-dg
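The same static-IP stanza is typed again for each cloned machine later on, with only the interface name and address changing. A small helper could print it from parameters; this is only a sketch, and `render_static_iface` is a name made up for this example.

```shell
# Print a static-IP stanza for /etc/network/interfaces.
# Arguments: interface name, address, gateway, netmask.
render_static_iface() {
  local iface="$1" address="$2" gateway="$3" netmask="$4"
  printf 'auto %s\n' "$iface"
  printf 'iface %s inet static\n' "$iface"
  printf 'address %s\n' "$address"
  printf 'gateway %s\n' "$gateway"
  printf 'netmask %s\n' "$netmask"
}

# Example: the template machine's values from this section.
render_static_iface eth0 192.168.1.251 192.168.1.1 255.255.255.0
```

The output can be reviewed first and then pasted into /etc/network/interfaces. The interface name tends to differ on each clone, so it is worth checking ifconfig before choosing the first argument.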

5) Give the user write permission on the Hadoop installation directory:

sudo chown -hR hadoop hadoop-1.1.2

6) Disable the firewall:

sudo ufw disable
#Check the status
sudo ufw status
#Enable it again
#sudo ufw enable

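2. Clone the dg1, dg2, and dg3 virtual machines from the template system:

#On the Windows command line, change to the VirtualBox installation directory and run the command below; the last argument is the path to the .vdi file. It assigns a new UUID to the copied disk image, which otherwise keeps the UUID of the original.
%virtualbox%> VBoxManage internalcommands sethduuid %dg2.vdi%

1) Use dg1 to create the master (hosting the NameNode and JobTracker):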

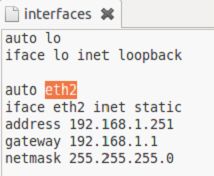

(1) Configure the NIC's static IP:

#Configure the NIC parameters
sudo gedit /etc/network/interfaces

auto eth2
iface eth2 inet static
address 192.168.1.251
gateway 192.168.1.1
netmask 255.255.255.0

#Restart networking
sudo /etc/init.d/networking restart

(2) Configure the hostname:

sudo gedit /etc/hostname

master

(3) Configure hosts:

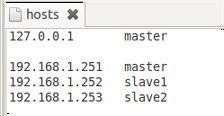

sudo gedit /etc/hosts

127.0.0.1 master
192.168.1.251 master
192.168.1.252 slave1
192.168.1.253 slave2

Reboot the system after configuring hostname and hosts.

(4) Configure Hadoop:

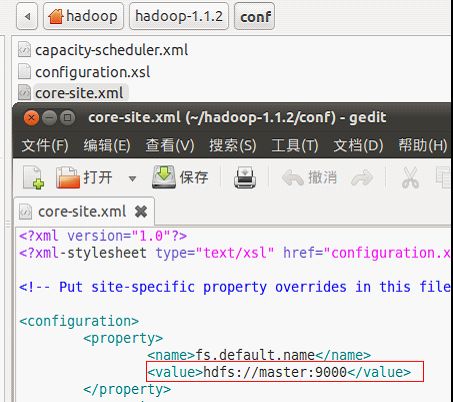

① %hadoop%/conf/core-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <!-- The current user needs read and write permission on this directory -->
    <value>/home/hadoop/hadoop-${user.name}</value>
  </property>
</configuration>

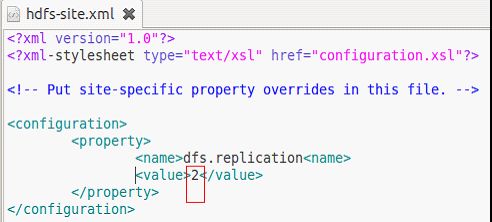

② %hadoop%/conf/hdfs-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>dfs.replication</name>
    <!-- There are currently two slaves -->
    <value>2</value>
  </property>
</configuration>

③ %hadoop%/conf/mapred-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>master:9001</value>
  </property>
</configuration>
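A mistyped tag in one of these files is easy to miss. As a quick sanity check, a small awk-based helper could print the value configured for a given property. This is a sketch rather than a real XML parser: it assumes every `<name>` and `<value>` element sits on its own line, and `get_property` is a name invented for this example.

```shell
# Print the <value> of a named property from a Hadoop *-site.xml file.
# Assumes each <name> and <value> element is on its own line.
get_property() {
  local file="$1" name="$2"
  awk -v want="$name" '
    /<name>/ {
      # Strip everything around the text between <name> and </name>.
      current = $0
      gsub(/.*<name>|<\/name>.*/, "", current)
    }
    /<value>/ {
      value = $0
      gsub(/.*<value>|<\/value>.*/, "", value)
      if (current == want) print value
    }
  ' "$file"
}
```

For example, `get_property ~/hadoop-1.1.2/conf/core-site.xml fs.default.name` prints the configured HDFS URI. An empty result suggests that the property is missing or that one of its tags is malformed.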

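④ %hadoop%/conf/masters:

master

⑤ %hadoop%/conf/slaves:

slave1
slave2

⑥ Run after the slaves have been created:

#Copy all configuration files to slave1
scp -rpv ~/hadoop-1.1.2/conf/* slave1:~/hadoop-1.1.2/conf/
#Copy all configuration files to slave2
scp -rpv ~/hadoop-1.1.2/conf/* slave2:~/hadoop-1.1.2/conf/

First: change the network configuration of the two slaves so that each can ping the other and the master:

Then: run the commands above on the master:

After that, the files are visible on slave1 and slave2: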

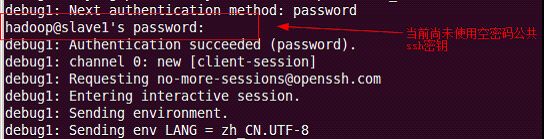
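The two scp commands in step ⑥ could also be driven by the conf/slaves file, so the host list lives in one place. The following is a sketch under that assumption; `push_conf` and the `HADOOP_DIR` variable are hypothetical names, and the remote path is the one used in this article.

```shell
# Copy the master's conf directory contents to every host in conf/slaves.
# HADOOP_DIR is a hypothetical override; it defaults to the path used here.
push_conf() {
  local hadoop_dir="${HADOOP_DIR:-$HOME/hadoop-1.1.2}"
  local host
  # Read one hostname per line, skipping blank lines and comments.
  while read -r host; do
    case "$host" in ''|'#'*) continue ;; esac
    # </dev/null keeps scp from consuming the rest of the slaves file.
    scp -rp "$hadoop_dir"/conf/* "$host:~/hadoop-1.1.2/conf/" </dev/null || return 1
  done < "$hadoop_dir/conf/slaves"
}
```

Running `push_conf` on the master copies the files to each slave in turn and stops at the first host where scp fails.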

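(5) Create a public key with an empty passphrase:

ssh-keygen

Append the key in id_rsa.pub to a new authorized_keys file (run in ~/.ssh):

sudo cat id_rsa.pub >> authorized_keys

First: create the ~/.ssh directory on each slave:

#Go to the user's home directory
cd ~/
#Create the .ssh directory
sudo mkdir .ssh
#Take ownership of the .ssh directory
sudo chown -hR hadoop .ssh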


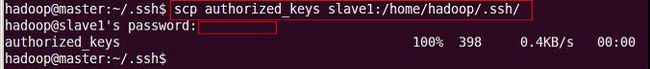

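Then: on the master, copy the public key to the slaves:

#Run after the slaves have been created
#Copy the master's empty-passphrase key to slave1
scp authorized_keys slave1:~/.ssh/
#Copy the master's empty-passphrase key to slave2
scp authorized_keys slave2:~/.ssh/

#Log in to slave1
ssh slave1
exit
#Log in to slave2
ssh slave2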

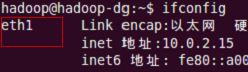

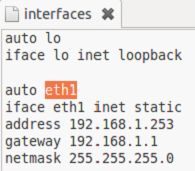

2) Use dg2 and dg3 to create the slaves (hosting the DataNode and TaskTracker):

(1) Configure the NIC's static IP:

#Check the NIC configuration
ifconfig

#Configure the NIC parameters
sudo gedit /etc/network/interfaces

On slave1:

auto eth1
iface eth1 inet static
address 192.168.1.252
gateway 192.168.1.1
netmask 255.255.255.0

On slave2:

auto eth1
iface eth1 inet static
address 192.168.1.253
gateway 192.168.1.1
netmask 255.255.255.0

#Restart networking
sudo /etc/init.d/networking restart

(2) Configure the hostname:

sudo gedit /etc/hostname

On slave1:

slave1

On slave2:

slave2

(3) Configure hosts:

sudo gedit /etc/hosts

On slave1:

127.0.0.1 slave1
192.168.1.251 master
192.168.1.252 slave1
192.168.1.253 slave2

On slave2:

127.0.0.1 slave2
192.168.1.251 master
192.168.1.252 slave1
192.168.1.253 slave2

Reboot the system after configuring hostname and hosts.

(4) Configure Hadoop:

Copy the Hadoop configuration files from the master:

scp -rpv ~/hadoop-1.1.2/conf/* slave1:~/hadoop-1.1.2/conf/
scp -rpv ~/hadoop-1.1.2/conf/* slave2:~/hadoop-1.1.2/conf/

(5) Copy the master's empty-passphrase public key:

First: create the ~/.ssh directory on each slave:

cd ~/
sudo mkdir .ssh
sudo chown -hR hadoop .ssh

Then: on the master, copy the public key to the slaves:

scp authorized_keys slave1:~/.ssh/
scp authorized_keys slave2:~/.ssh/

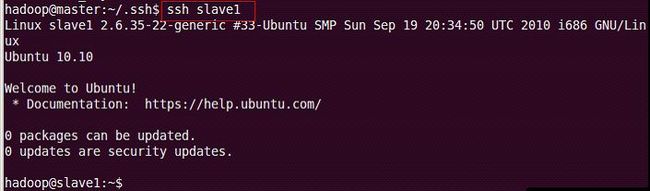

Log in to the slaves from the master to verify:

ssh slave1
ssh slave2
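The interactive logins above can be complemented by a non-interactive check. The sketch below relies on the OpenSSH `BatchMode` option, which makes ssh fail rather than prompt for a password; `check_passwordless` is a name chosen for this example. It assumes each host's key is already in known_hosts (for instance after the manual logins), because in batch mode ssh also cannot ask to accept an unknown host key.

```shell
# Report whether each host accepts key-based login without prompting.
# BatchMode=yes makes ssh fail instead of asking for a password.
check_passwordless() {
  local host failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage on the master would be `check_passwordless slave1 slave2`. If a host fails even though the key was copied, one common cause is that sshd rejects a ~/.ssh directory or authorized_keys file that is writable by group or others; `chmod 700 ~/.ssh` and `chmod 600 ~/.ssh/authorized_keys` on that host is the usual remedy.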

3. Start the cluster:

1) Format HDFS on the master:

hadoop namenode -format

2) Start Hadoop on the master:

start-all.sh
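Once start-all.sh returns, `jps` on each machine lists the running Java processes. With the configuration in this article, the master would be expected to run NameNode, SecondaryNameNode, and JobTracker, and each slave DataNode and TaskTracker. A small filter can point out what is missing; `missing_daemons` is a hypothetical helper written for this example, not part of Hadoop.

```shell
# Read `jps` output on stdin and print every expected daemon that is absent.
# Arguments: the daemon names to look for.
missing_daemons() {
  local listing daemon
  listing="$(cat)"
  for daemon in "$@"; do
    # jps prints "<pid> <name>" per line; compare the name column exactly
    # so that NameNode does not match SecondaryNameNode.
    printf '%s\n' "$listing" \
      | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$daemon"
  done
}
```

On the master this would be run as `jps | missing_daemons NameNode SecondaryNameNode JobTracker`, and on a slave as `jps | missing_daemons DataNode TaskTracker`. No output means every listed daemon is running.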

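3) Verification 1: check the hadoop.tmp.dir directory:

After Hadoop starts successfully, a dfs folder is created in the directory specified by hadoop.tmp.dir on master, and both a dfs folder and a mapred folder are created in the directory specified by hadoop.tmp.dir on each slave.

4) Verification 2: check the dfs report:

cd ~/hadoop-1.1.2/bin/
hadoop dfsadmin -report

Error:

The number of available datanodes is 0:

jps shows the expected processes:

Check the log in %hadoop%/logs/hadoop-hadoop-namenode-master.log: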

2013-05-22 17:08:27,164 ERROR org.apache.hadoop.security.UserGroupInformation: PriviledgedActionException as:hadoop cause:java.io.IOException: File /home/hadoop/hadoop-hadoop/mapred/system/jobtracker.info could only be replicated to 0 nodes, instead of 1
2013-05-22 17:08:27,164 INFO org.apache.hadoop.ipc.Server: IPC Server handler 6 on 9000, call addBlock(/home/hadoop/hadoop-hadoop/mapred/system/jobtracker.info, DFSClient_NONMAPREDUCE_592417942_1, null) from 127.0.0.1:47247: error: java.io.IOException: File /home/hadoop/hadoop-hadoop/mapred/system/jobtracker.info could only be replicated to 0 nodes, instead of 1
java.io.IOException: File /home/hadoop/hadoop-hadoop/mapred/system/jobtracker.info could only be replicated to 0 nodes, instead of 1
  at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:1639)
  at org.apache.hadoop.hdfs.server.namenode.NameNode.addBlock(NameNode.java:736)
  at sun.reflect.GeneratedMethodAccessor6.invoke(Unknown Source)
  at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
  at java.lang.reflect.Method.invoke(Method.java:601)
  at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:578)
  at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1393)
  at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1389)
  at java.security.AccessController.doPrivileged(Native Method)
  at javax.security.auth.Subject.doAs(Subject.java:415)
  at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1149)
  at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1387)

Solution 1:

Set hosts on the master and on both slaves to:

127.0.0.1 localhost.localdomain localhost
::1 localhost6.localdomain6 localhost6
192.168.1.251 master
192.168.1.252 slave1
192.168.1.253 slave2

Reboot the machines and retry. This had no effect.

Solution 2:

1. On slave1, run ssh-keygen and append the generated id_rsa.pub to the existing authorized_keys that master originally generated. The key file now holds the RSA public keys of two machines;

#Generate slave1's own id_rsa.pub key file
ssh-keygen
#Use >> to append it to the existing file
sudo cat id_rsa.pub >> authorized_keys

2. On slave2, delete the existing authorized_keys in the ~/.ssh directory;

3. On slave1, copy authorized_keys to slave2:

scp authorized_keys slave2:~/.ssh/

4. On slave2, run ssh-keygen and append the generated id_rsa.pub to authorized_keys. The key file now holds the RSA public keys of all three machines;

5. On slave2, copy authorized_keys to master and slave1, replacing the existing files;

6. On master, log in to slave1, slave2, and master;

7. On slave1, log in to slave1, slave2, and master;

8. On slave2, log in to slave1, slave2, and master;

9. Run hadoop dfsadmin -report

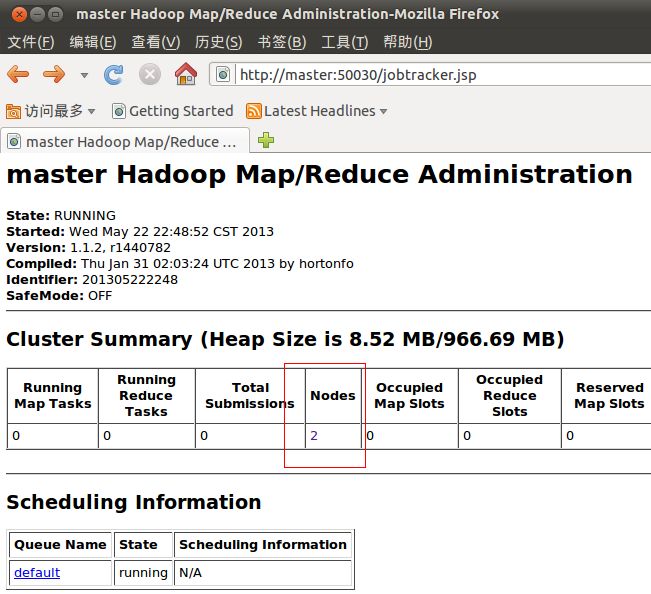

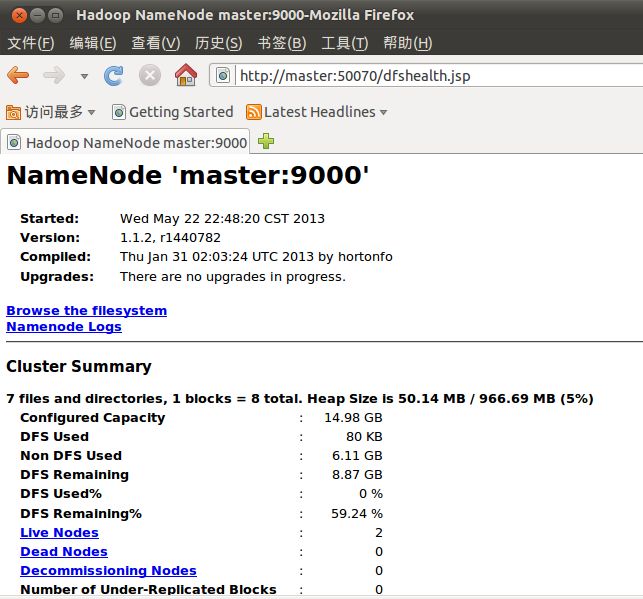

Success:

hadoop@master:~/hadoop-1.1.2/bin$ hadoop dfsadmin -report
Configured Capacity: 16082411520 (14.98 GB)
Present Capacity: 9527099422 (8.87 GB)
DFS Remaining: 9527042048 (8.87 GB)
DFS Used: 57374 (56.03 KB)
DFS Used%: 0%
Under replicated blocks: 0
Blocks with corrupt replicas: 0
Missing blocks: 0

-------------------------------------------------
Datanodes available: 2 (2 total, 0 dead)

Name: 192.168.1.252:50010
Decommission Status : Normal
Configured Capacity: 8041205760 (7.49 GB)
DFS Used: 28687 (28.01 KB)
Non DFS Used: 3277914097 (3.05 GB)
DFS Remaining: 4763262976(4.44 GB)
DFS Used%: 0%
DFS Remaining%: 59.24%
Last contact: Wed May 22 22:54:29 CST 2013

Name: 192.168.1.253:50010
Decommission Status : Normal
Configured Capacity: 8041205760 (7.49 GB)
DFS Used: 28687 (28.01 KB)
Non DFS Used: 3277398001 (3.05 GB)
DFS Remaining: 4763779072(4.44 GB)
DFS Used%: 0%
DFS Remaining%: 59.24%
Last contact: Wed May 22 22:54:30 CST 2013

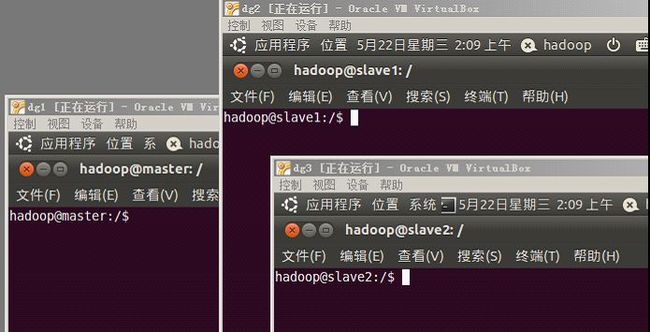
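For a scripted version of this check, the live datanode count can be pulled out of the report text. The sketch below keys on the "Datanodes available:" line as it appears in the output above; `live_datanodes` is a name invented for this example.

```shell
# Read `hadoop dfsadmin -report` output on stdin and print the number of
# live datanodes, taken from the "Datanodes available: N (...)" line.
# Returns non-zero if no such line is present.
live_datanodes() {
  awk '
    /^Datanodes available:/ { print $3; found = 1; exit }
    END { if (!found) exit 1 }
  '
}
```

Running `hadoop dfsadmin -report | live_datanodes` against the successful report above prints 2, which matches the two entries in conf/slaves.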

5) Verification 3: web monitoring:

http://192.168.1.251:50070

- end