Sqoop安装与配置

Sqoop的运行需要依赖于Hadoop,因此需要首先安装Hadoop2.5.2,并且Sqoop的启动前需要首先安装Hadoop。

本文使用如下版本

- sqoop-1.4.5.bin_hadoop-2.0.4-alpha版本,

- Hadoop使用2.5.2版本

Sqoop安装和配置的步骤

1. 从如下地址下载Sqoop

- http://mirror.bit.edu.cn/apache/sqoop/1.4.5/sqoop-1.4.5.bin__hadoop-2.0.4-alpha.tar.gz

注意安装包中hadoop前面是__(两个下划线) ,把其中的一个_去掉

2.解压sqoop,在系统变量里配置如下变量SQOOP_HOME和PATH

- sudo vim /etc/profile

-

- export SQOOP_HOME=/home/hadoop/software/sqoop-1.4.5.bin_hadoop-2.0.4-alpha

- export PATH=$SQOOP_HOME/bin:$PATH

-

- ###如下两行在sqoop-env.sh配置,在此处将其注释掉

- #export HADOOP_COMMON_HOME=/home/hadoop/software/hadoop-2.5.2

- #export HADOOP_MAPRED_HOME=/home/hadoop/software/hadoop-2.5.2

3. 修改配置文件

- cp sqoop-env-template.sh sqoop-env.sh

- cp sqoop-site-template.xml sqoop-site.xml

4. 修改sqoop-env.sh文件

- export HADOOP_COMMON_HOME=/home/hadoop/software/hadoop-2.5.2

-

- export HADOOP_MAPRED_HOME=/home/hadoop/software/hadoop-2.5.2

-

- export HIVE_HOME=/home/hadoop/software/apache-hive-0.14.0-bin

注意HADOOP_MAPRED_HOME需要配置成home/hadoop/software/hadoop-2.5.2,而不是/home/hadoop/software/hadoop-2.5.2/share/hadoop/mapreduce, 配置成后者在执行sqoop import的时候报Hadoop的类找不到

配置Sqoop的jar

1. 检查$SQOOP_HOME/lib下是否有sqoop-1.4.5.jar

2. 如果没有则下载到此目录

配置MySQL JDBC Driver

1. 检查检查$SQOOP_HOME/lib下是否有MySQL的JDBC驱动有mysql-connector-java-5.1.34.jar

2. 如果没有则下载到此目录、

启动Hadoop和MySQL

1. 首先运行Hadoop

2. 再次运行MySQL

使用Sqoop对HDFS的和MySQL双向导入导出

1. 查看Sqoop可用的命令参数

结果:

- Available commands:

- codegen Generate code to interact with database records

- create-hive-table Import a table definition into Hive

- eval Evaluate a SQL statement and display the results

- export Export an HDFS directory to a database table

- help List available commands

- import Import a table from a database to HDFS

- import-all-tables Import tables from a database to HDFS

- job Work with saved jobs

- list-databases List available databases on a server

- list-tables List available tables in a database

- merge Merge results of incremental imports

- metastore Run a standalone Sqoop metastore

- version Display version information

2. 获取所有的数据库(密码为空)

- ./sqoop list-databases --connect jdbc:mysql://192.168.26.135:3306 --username root

结果

- information_schema

- metadata_store

- mysql

- performance_schema

- sqoop

3. 列出指定数据所有的表

- ./sqoop list-tables --connect jdbc:mysql://192.168.26.135:3306/metadata_store --username root

结果:

- BUCKETING_COLS

- CDS

- COLUMNS_V2

- DATABASE_PARAMS

- DBS

- FUNCS

- FUNC_RU

- GLOBAL_PRIVS

- PARTITIONS

- PARTITION_KEYS

- PARTITION_KEY_VALS

- PARTITION_PARAMS

- PART_COL_STATS

- ROLES

- SDS

- SD_PARAMS

- SEQUENCE_TABLE

- SERDES

- SERDE_PARAMS

- SKEWED_COL_NAMES

- SKEWED_COL_VALUE_LOC_MAP

- SKEWED_STRING_LIST

- SKEWED_STRING_LIST_VALUES

- SKEWED_VALUES

- SORT_COLS

- TABLE_PARAMS

- TAB_COL_STATS

- TBLS

- VERSION

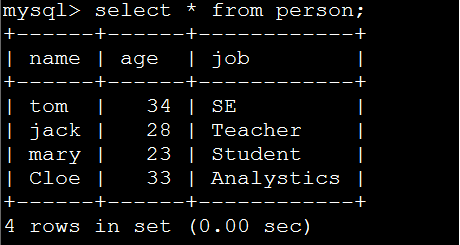

4. 在MySQL中创建测试数据库和表

4.1 创建表

- mysql> create table person(name varchar(40), age int, job varchar(40));

4.2 插入数据

- mysql> insert into person(name, age, job) values("jack",28,"Teacher");

- Query OK, 1 row affected (0.00 sec)

-

- mysql> insert into person(name, age, job) values("mary",23,"Student");

- Query OK, 1 row affected (0.00 sec)

-

- mysql> insert into person(name, age, job) values("Cloe",33,"Analystics");

- Query OK, 1 row affected (0.00 sec)

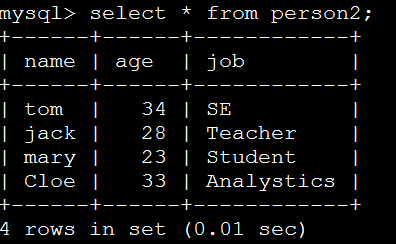

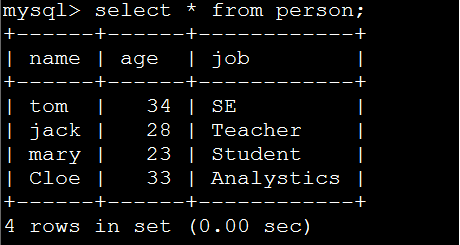

4.3 查询插入结果:

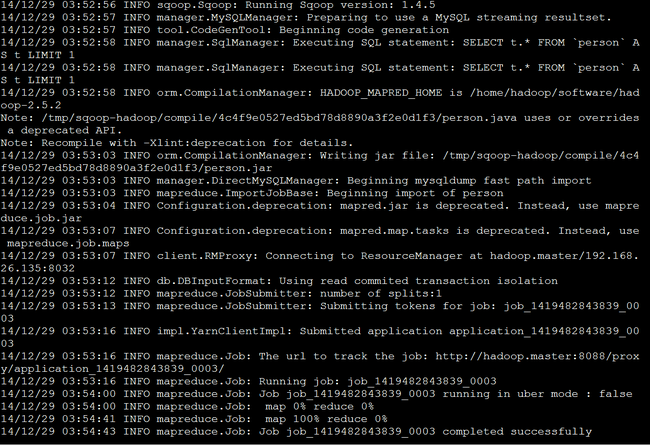

5. MySQL的数据导入到HDFS中

5.1 执行导入命令

- ./sqoop import --connect jdbc:mysql://192.168.26.135:3306/sqoop --username root --direct --table person --fields-terminated-by ':' --target-dir /user/hadoop/person -m1

参数说明:

--table 指定要导入到HDFS中的表名

--fields-terminated-by 指定保存到HDFS上的每一行使用什么分隔符来分隔MySQL数据行的列

--m1 表示MapperTask的个数为1

--target-dir 表示导入到HDFS中的目录

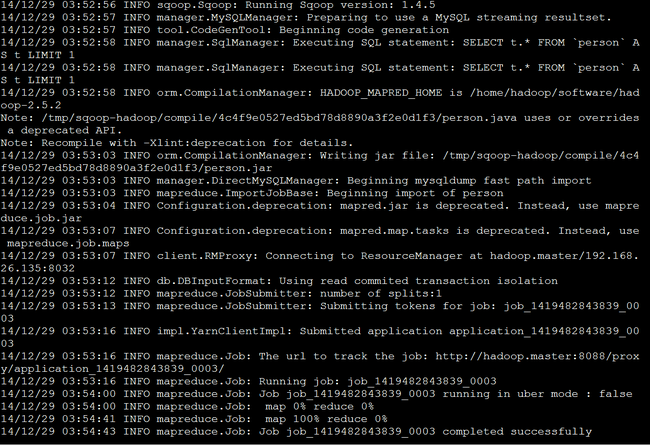

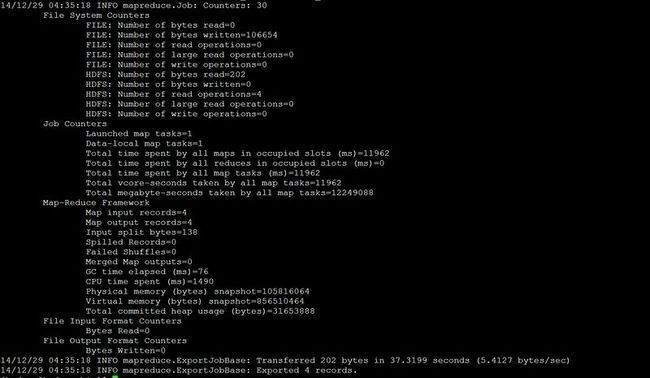

5.2 导入过程

5.3 查看HDFS中的导入状态

- [hadoop@hadoop bin]$ hdfs dfs -cat /user/hadoop/person/part-m-00000

- tom:34:SE

- jack:28:Teacher

- mary:23:Student

- Cloe:33:Analystics

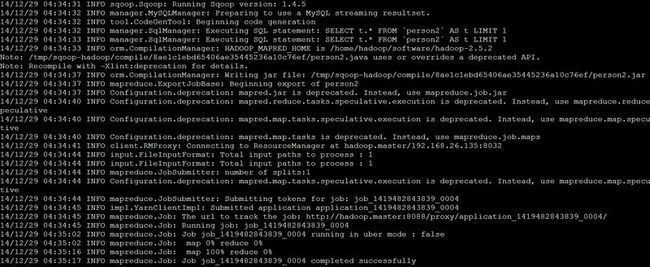

6. HDFS数据导出到MySQL中

6.1 首先在MySQL中创建表person2

- mysql> create table person2(name varchar(40), age int, job varchar(40));

6.2 执行sqoop导出操作

- ./sqoop export --connect jdbc:mysql://192.168.26.135:3306/sqoop --username root --table person2 --export-dir '/user/hadoop/person/part-m-00000' --fields-terminated-by ':' -m1

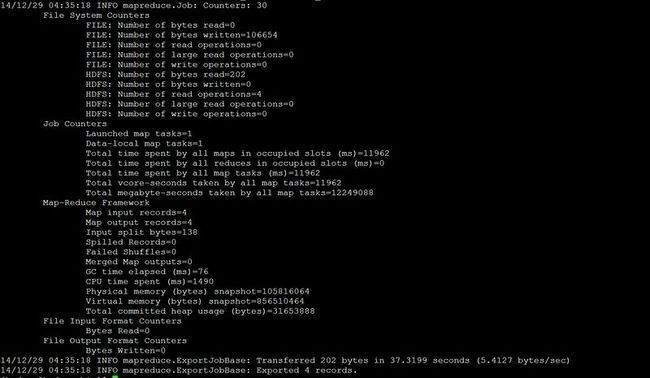

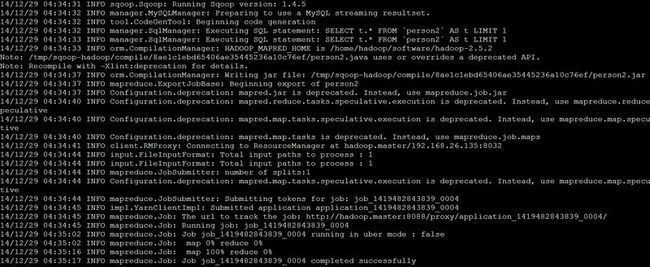

6.3 执行过程

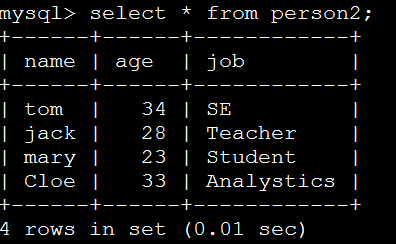

6.4 执行结果

错误处理

1

- java.net.ConnectException: Connection refused

- at java.net.PlainSocketImpl.socketConnect(Native Method)

- at java.net.PlainSocketImpl.doConnect(PlainSocketImpl.java:351)

- at java.net.PlainSocketImpl.connectToAddress(PlainSocketImpl.java:213)

- at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:200)

- at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:366)

- at java.net.Socket.connect(Socket.java:529)

- at java.net.Socket.connect(Socket.java:478)

- at java.net.Socket.<init>(Socket.java:375)

- at java.net.Socket.<init>(Socket.java:218)

- at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:173)

- at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:267)

- at com.mysql.jdbc.Connection.createNewIO(Connection.java:2739)

- at com.mysql.jdbc.Connection.<init>(Connection.java:1553)

- at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:266)

- at java.sql.DriverManager.getConnection(DriverManager.java:582)

- at java.sql.DriverManager.getConnection(DriverManager.java:185)

- at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:278)

- at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:187)

- at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:162)

- at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:62)

- at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:117)

- at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:723)

- at org.apache.hadoop.mapred.MapTask.run(MapTask.java:370)

- at org.apache.hadoop.mapred.Child$4.run(Child.java:255)

- at java.security.AccessController.doPrivileged(Native Method)

- at javax.security.auth.Subject.doAs(Subject.java:396)

- at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1121)

- at org.apache.hadoop.mapred.Child.main(Child.java:249)

这个错误是指定sqoop import时报的错,解决办法是把mysql的jdbc连接串,把localhost改为实际的IP或者域名

2. sqoop导入mysql数据出错

用sqoop导入mysql数据出现以下错误:

14/12/03 16:37:58 ERROR manager.SqlManager: Error reading from database: java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@54b0a583 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@54b0a583 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:930)

at com.mysql.jdbc.MysqlIO.checkForOutstandingStreamingData(MysqlIO.java:2694)

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:1868)

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2109)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2642)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2571)

at com.mysql.jdbc.StatementImpl.executeQuery(StatementImpl.java:1464)

at com.mysql.jdbc.ConnectionImpl.getMaxBytesPerChar(ConnectionImpl.java:3030)

at com.mysql.jdbc.Field.getMaxBytesPerCharacter(Field.java:592)

at com.mysql.jdbc.ResultSetMetaData.getPrecision(ResultSetMetaData.java:444)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:285)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:240)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:226)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:295)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1773)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1578)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:96)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:601)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

14/12/03 16:37:58 ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: No columns to generate for ClassWriter

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1584)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:96)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:601)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

这个是由于mysql-connector-java的bug造成的,出错时我用的是mysql-connector-java-5.1.10-bin.jar,更新成mysql-connector-java-5.1.32-bin.jar就可以了。mysql-connector-java-5.1.32-bin.jar的下载地址为http://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.32.tar.gz。下载完后解压,在解压的目录下可以找到mysql-connector-java-5.1.32-bin.jar。