android + red5 + rtmp

Background: implementing live video streaming from an Android client against an existing Red5 server environment.

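To implement live video on the client, you first need some understanding of the server side.

Red5 is a free, open-source streaming media server that speaks Adobe's RTMP protocol. It pairs naturally with Flash, but calling it from Java and Android clients turned out to be full of twists and turns.

Red5 is an open-source Flash streaming media server written in Java. It supports: streaming audio (MP3) and video (FLV); recording client streams (FLV only); shared objects; live stream publishing; and remote calls. Red5 uses RTMP as its streaming transport protocol, and its bundled demos show basic features such as online recording, Flash media playback, online chat, and video conferencing.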

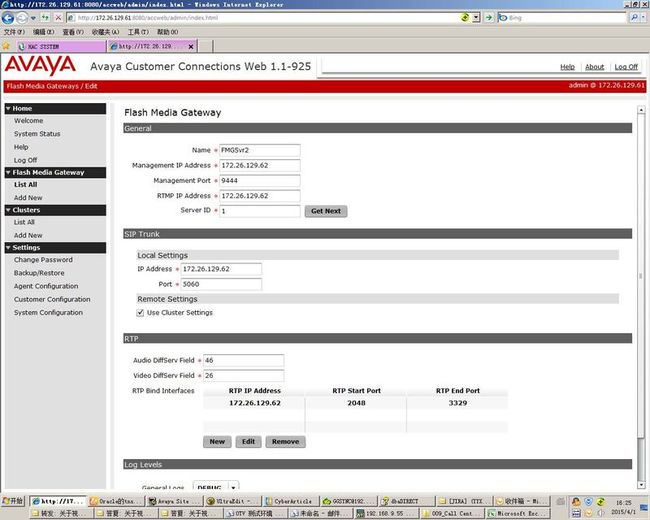

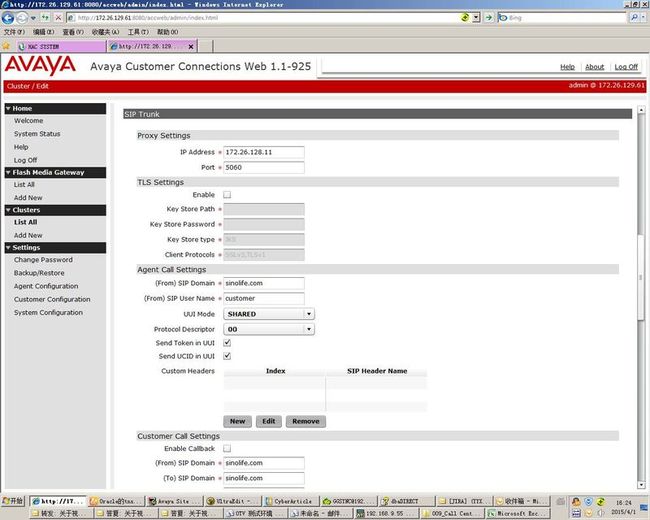

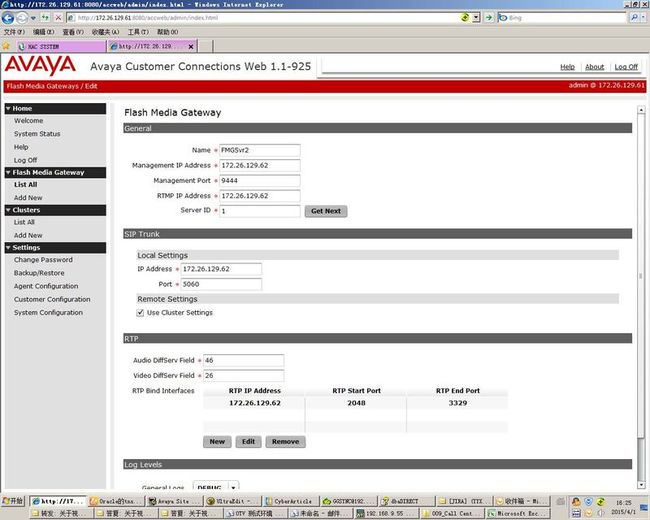

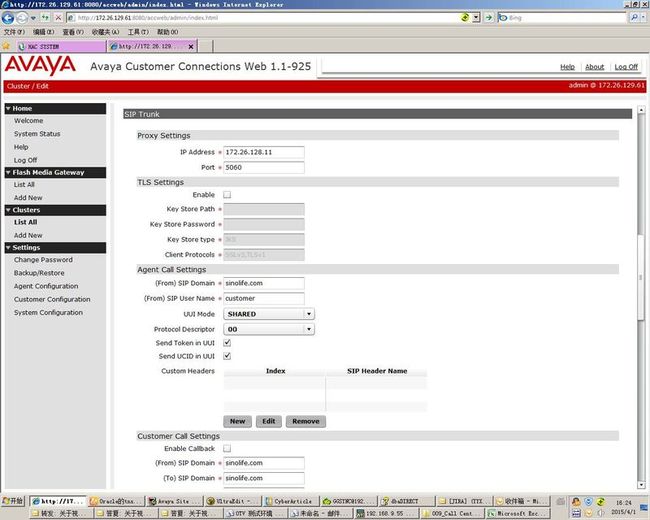

Server configuration

    <context-param>
        <param-name>webAppRootKey</param-name>
        <param-value>/FlashMediaGateway</param-value>
    </context-param>

    [root@lapaccweb WEB-INF]# more red5-web.properties
    webapp.contextPath=/FlashMediaGateway
    webapp.virtualHosts=*:1935,*:443
    recordPath=/opt/Avaya/AccWeb/data/SessionRecording/
    playbackPath=/opt/Avaya/AccWeb/data/SessionRecording/
    absolutePath=true
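The `red5_url` that the client code passes to `connect()` is not shown in this post. As a rough sketch, assuming the usual `rtmp://host:port/application` form with the application name taken from `webapp.contextPath`, it could be built from the properties above as follows. The class `Red5UrlSketch`, the host `red5.example.com`, and passing 1935 (the standard RTMP port) as a parameter are illustrative assumptions, not values read from the real server.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Sketch only: derives an RTMP URL from the text of red5-web.properties. */
final class Red5UrlSketch {

    /**
     * @param propertiesText contents of red5-web.properties
     * @param host           server host name (hypothetical, supplied by the caller)
     * @param port           RTMP port, 1935 unless the server is configured otherwise
     */
    static String rtmpUrl(String propertiesText, String host, int port) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            // Not expected for an in-memory reader; rethrown unchecked to keep the sketch small.
            throw new UncheckedIOException(e);
        }
        // Assumption: the RTMP application name equals the webapp context path.
        String contextPath = props.getProperty("webapp.contextPath", "/");
        if (!contextPath.startsWith("/")) {
            contextPath = "/" + contextPath;
        }
        return "rtmp://" + host + ":" + port + contextPath;
    }

    public static void main(String[] args) {
        String text = "webapp.contextPath=/FlashMediaGateway\n"
                + "webapp.virtualHosts=*:1935,*:443\n";
        System.out.println(rtmpUrl(text, "red5.example.com", 1935));
    }
}
```

If the server listens for RTMP on a different port, only the `port` argument changes.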

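The Red5 source code includes an RTMPClient class. It is not complicated to use, but I could not get a call to succeed. The logs showed that the connection was established, but the server dropped it as soon as the client started creating a stream. The only explanation I can think of is that the Red5 version our company currently runs may be incompatible with this RTMPClient.

There is also a commercial RTMP client from abroad, priced at 395 USD. I won't name the product or its website; anyone interested will find it. The library is powerful and easy to use: I registered a trial key and found that it connected to the Red5 server and communicated well. (A cracked version circulates in China.)

I had previously tried VLC and Vitamio, but neither could play the live stream; perhaps I was doing something wrong.

Possible reasons are discussed here:

http://blog.csdn.net/qiuchangyong/article/details/18862247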

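One approach captures the live video data through LocalServerSocket. Android's LocalServerSocket differs from Java's ServerSocket in that both its client and its server end must be on the same device. So when recording video with MediaRecorder, we can create a client-side LocalSocket that sends the data to the server end; the server end receives the data and calls juv-rtmp-client to package it as RTMP and send it to the streaming server.

The other approach records with Android's built-in Camera, mainly through the camera's preview callback interface, which delivers the captured video frames in real time. The frames arrive as YUV420SP, the rawest form of the data, which the server side cannot display as-is. Each frame is therefore converted from YUV420SP to RGB and then sent to the streaming server with juv-rtmp-client.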
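The conversion helper used later, `RemoteUtil.decodeYUV420SP2RGB`, is not listed in this post, so here is a minimal sketch of what a YUV420SP to RGB conversion can look like. It assumes the NV21 layout (a full-resolution Y plane followed by interleaved V,U pairs, one pair per 2x2 pixel block), even frame dimensions, and the common integer BT.601 video-range formula. The class and method names are mine, and the output byte order of the real helper may differ.

```java
/** Sketch only: converts one NV21 (YUV420SP) frame to packed 24-bit RGB. */
final class Yuv420spSketch {

    /**
     * @param yuv    width*height luma bytes followed by interleaved V,U pairs
     *               (NV21 ordering is an assumption)
     * @param width  frame width in pixels, assumed even
     * @param height frame height in pixels, assumed even
     * @return width*height*3 bytes in R,G,B order
     */
    static byte[] nv21ToRgb(byte[] yuv, int width, int height) {
        final int frameSize = width * height;
        final byte[] rgb = new byte[frameSize * 3];
        for (int row = 0; row < height; row++) {
            // Each chroma row is shared by two luma rows.
            final int chromaRow = frameSize + (row >> 1) * width;
            for (int col = 0; col < width; col++) {
                final int pixel = row * width + col;
                // Each V,U pair is shared by two neighbouring columns.
                final int chroma = chromaRow + (col & ~1);
                final int c = Math.max(0, (yuv[pixel] & 0xFF) - 16);
                final int e = (yuv[chroma] & 0xFF) - 128;     // V (Cr)
                final int d = (yuv[chroma + 1] & 0xFF) - 128; // U (Cb)
                // Integer BT.601 conversion, coefficients scaled by 256.
                rgb[pixel * 3] = clamp((298 * c + 409 * e + 128) >> 8);
                rgb[pixel * 3 + 1] = clamp((298 * c - 100 * d - 208 * e + 128) >> 8);
                rgb[pixel * 3 + 2] = clamp((298 * c + 516 * d + 128) >> 8);
            }
        }
        return rgb;
    }

    /** Limits a channel value to 0..255 and stores it as an unsigned byte. */
    private static byte clamp(int value) {
        return (byte) Math.max(0, Math.min(255, value));
    }
}
```

Because the four pixels of a 2x2 block share one chroma pair, a frame of `width * height` pixels occupies `width * height * 3 / 2` bytes on input and `width * height * 3` bytes on output.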

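The juv-rtmp-client jar wraps many methods for interacting with the streaming server, including the video data transport formats. It offers three publish modes for the streaming service: Record, Live, and Append.

juv-rtmp-client has a few main classes.

The most important ones are NetConnection, NetStream, and License. NetConnection and NetStream are responsible for creating the connection and handling callbacks from the server. License, as the name suggests, checks whether the library is licensed: according to the official instructions, License.setKey() must be called with the registered key before the library is used.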

CameraVideoActivity

    private void init() {
        aCamera = new AndroidCamera(CameraVideoActivity.this);
        hour = (TextView) findViewById(R.id.mediarecorder_TextView01);
        minute = (TextView) findViewById(R.id.mediarecorder_TextView03);
        second = (TextView) findViewById(R.id.mediarecorder_TextView05);
        mStart = (Button) findViewById(R.id.mediarecorder_VideoStartBtn);
        mStop = (Button) findViewById(R.id.mediarecorder_VideoStopBtn);
        mReturn = (Button) findViewById(R.id.mediarecorder_VideoReturnBtn);

        // Start recording
        mStart.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                if (!streaming) {
                    aCamera.start();
                }
                aCamera.startVideo();
                isTiming = true;
                handler.postDelayed(task, 1000);
                // Update button states
                mStart.setEnabled(false);
                mReturn.setEnabled(false);
                mStop.setEnabled(true);
            }
        });

        // Close the stream and leave the screen
        mReturn.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                if (RTMPConnectionUtil.netStream != null) {
                    RTMPConnectionUtil.netStream.close();
                    RTMPConnectionUtil.netStream = null;
                }
                aCamera = null;
                finish();
            }
        });

        // Stop recording
        mStop.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                aCamera.stop();
                // Update button states
                mStart.setEnabled(true);
                mReturn.setEnabled(true);
                mStop.setEnabled(false);
                isTiming = false;
            }
        });
    }

    public class AndroidCamera extends AbstractCamera implements
            SurfaceHolder.Callback, Camera.PreviewCallback {

        private SurfaceView surfaceView;
        private SurfaceHolder surfaceHolder;
        private Camera camera;
        private int width;
        private int height;
        private boolean init;
        int blockWidth;
        int blockHeight;
        int timeBetweenFrames; // 1000 / frameRate
        int frameCounter;
        byte[] previous;

        public AndroidCamera(Context context) {
            surfaceView = (SurfaceView) findViewById(R.id.mediarecorder_Surfaceview);
            surfaceView.setVisibility(View.VISIBLE);
            surfaceHolder = surfaceView.getHolder();
            surfaceHolder.addCallback(AndroidCamera.this);
            surfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);
            width = 352 / 2;
            height = 288 / 2;
            init = false;
            Log.d("DEBUG", "AndroidCamera()");
        }

        private void startVideo() {
            Log.d("DEBUG", "startVideo()");
            RTMPConnectionUtil.ConnectRed5(CameraVideoActivity.this);
            RTMPConnectionUtil.netStream = new UltraNetStream(
                    RTMPConnectionUtil.connection);
            RTMPConnectionUtil.netStream
                    .addEventListener(new NetStream.ListenerAdapter() {
                        @Override
                        public void onNetStatus(final INetStream source,
                                final Map<String, Object> info) {
                            Log.d("DEBUG", "Publisher#NetStream#onNetStatus: "
                                    + info);
                            final Object code = info.get("code");
                            if (NetStream.PUBLISH_START.equals(code)) {
                                if (CameraVideoActivity.aCamera != null) {
                                    RTMPConnectionUtil.netStream
                                            .attachCamera(aCamera, -1 /* snapshotMilliseconds */);
                                    Log.d("DEBUG", "aCamera.start()");
                                    aCamera.start();
                                } else {
                                    Log.d("DEBUG", "camera == null");
                                }
                            }
                        }
                    });
            RTMPConnectionUtil.netStream.publish("anystringdata", NetStream.LIVE);
        }

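        public void start() {
            camera.startPreview();
            streaming = true;
        }

        public void stop() {
            camera.stopPreview();
            streaming = false;
        }

        // Debug helper: logs a byte array as two-digit hex values.
        // The original listing built each hex string but never output it.
        public void printHexString(byte[] b) {
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < b.length; i++) {
                String hex = Integer.toHexString(b[i] & 0xFF);
                if (hex.length() == 1) {
                    hex = '0' + hex;
                }
                sb.append(hex);
            }
            Log.d("DEBUG", sb.toString());
        }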

        @Override
        public void onPreviewFrame(byte[] arg0, Camera arg1) {
            // if (!active) return;
            if (!init) {
                blockWidth = 32;
                blockHeight = 32;
                timeBetweenFrames = 100; // 1000 / frameRate
                frameCounter = 0;
                previous = null;
                init = true;
            }
            final long ctime = System.currentTimeMillis();

            // Convert the captured YUV420SP data to RGB
            byte[] current = RemoteUtil.decodeYUV420SP2RGB(arg0, width, height);
            try {
                // Encode the frame block by block and pass it on to the stream
                final byte[] packet = RemoteUtil.encode(current, previous,
                        blockWidth, blockHeight, width, height);
                fireOnVideoData(new MediaDataByteArray(timeBetweenFrames,
                        new ByteArray(packet)));
                previous = current;
                // Clear the reference every 10th frame so the next frame
                // is encoded without a previous frame
                if (++frameCounter % 10 == 0)
                    previous = null;
            } catch (Exception e) {
                e.printStackTrace();
            }

            // Sleep for the rest of the frame interval to hold the frame rate
            final int spent = (int) (System.currentTimeMillis() - ctime);
            try {
                Thread.sleep(Math.max(0, timeBetweenFrames - spent));
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }

        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width,
                int height) {
            // camera.startPreview();
            // camera.unlock();
            // Log.d("DEBUG", "surfaceChanged()");
            // startVideo();
        }

        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            camera = Camera.open();
            if (camera == null) {
                // Camera.open() returns null when there is no back-facing camera
                Log.d("DEBUG", "surfaceCreated(): no camera available");
                return;
            }
            try {
                camera.setPreviewDisplay(surfaceHolder);
                camera.setPreviewCallback(this);
                Camera.Parameters params = camera.getParameters();
                params.setPreviewSize(width, height);
                camera.setParameters(params);
            } catch (IOException e) {
                e.printStackTrace();
                camera.release();
                camera = null;
            }
            Log.d("DEBUG", "surfaceCreated()");
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
            if (camera != null) {
                // Detach the callback first so no frame arrives after release()
                camera.setPreviewCallback(null);
                camera.stopPreview();
                camera.release();
                camera = null;
            }
        }
    }

    /* Timer: updates the elapsed-time display once per second */
    private Handler handler = new Handler();
    int s, h, m, s1, m1, h1;

    private Runnable task = new Runnable() {
        public void run() {
            if (isTiming) {
                handler.postDelayed(this, 1000);
                s++;
                if (s < 60) {
                    second.setText(format(s));
                } else if (s < 3600) {
                    m = s / 60;
                    s1 = s % 60;
                    minute.setText(format(m));
                    second.setText(format(s1));
                } else {
                    h = s / 3600;
                    m1 = (s % 3600) / 60;
                    s1 = (s % 3600) % 60;
                    hour.setText(format(h));
                    minute.setText(format(m1));
                    second.setText(format(s1));
                }
            }
        }
    };
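The `format()` helper called by the timer is not included in the listing. A plausible version, assuming it simply zero-pads a value to two digits, is sketched here together with the same hour/minute/second split in one place. The class name `ElapsedTimeSketch` and the `split` method are mine.

```java
import java.util.Locale;

/** Sketch only: two-digit formatting and h/m/s splitting for an elapsed-time display. */
final class ElapsedTimeSketch {

    /** Zero-pads a non-negative value to at least two digits, e.g. 5 becomes "05". */
    static String format(int value) {
        return String.format(Locale.ROOT, "%02d", value);
    }

    /** Splits a second count into formatted hours, minutes, and seconds. */
    static String[] split(int totalSeconds) {
        int hours = totalSeconds / 3600;
        int minutes = (totalSeconds % 3600) / 60;
        int seconds = totalSeconds % 60;
        return new String[] {format(hours), format(minutes), format(seconds)};
    }

    public static void main(String[] args) {
        String[] parts = split(3725);
        System.out.println(parts[0] + ":" + parts[1] + ":" + parts[2]);
    }
}
```

Computing all three fields on every tick means the three TextViews can always be set together, with no separate branches for values under a minute or under an hour.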

RTMPConnectionUtil

    public static void ConnectRed5(Context context) {
        // License.setKey("63140-D023C-D7420-00B15-91FC7");
        connection = new UltraNetConnection();
        connection.configuration().put(UltraNetConnection.Configuration.INACTIVITY_TIMEOUT, -1);
        connection.configuration().put(UltraNetConnection.Configuration.RECEIVE_BUFFER_SIZE, 256 * 1024);
        connection.configuration().put(UltraNetConnection.Configuration.SEND_BUFFER_SIZE, 256 * 1024);
        //connection.client(new ClientHandler(context));
        connection.addEventListener(new NetConnectionListener());
        //Log.d("DEBUG", User.id + " - " + User.phone);
        connection.connect(red5_url);
    }

Attached: a tool for testing whether a Red5 server can be reached over RTMP.
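If the attached tool is not at hand, a very rough first check can be done in plain Java. This sketch is not that tool: it only tests whether a TCP connection to the RTMP port can be opened, and does not perform an RTMP handshake, so success only shows that something is listening. The class name `RtmpPortCheck`, the host `red5.example.com`, and the use of port 1935 are illustrative assumptions.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Sketch only: checks whether a TCP connection to an RTMP port can be opened. */
final class RtmpPortCheck {

    /**
     * @return true if a TCP connection was established within the timeout,
     *         false if it was refused, timed out, or the host could not be resolved
     */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical host; replace with the real Red5 server address.
        System.out.println(canConnect("red5.example.com", 1935, 3000));
    }
}
```

A failed check points to a network, firewall, or port problem rather than to the client library, which helps narrow things down before debugging the RTMP layer.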