Host machine:

Operating system: Microsoft Windows 7 Ultimate Service Pack 1 (build 7601), 32-bit

Processor: Intel(R) Core(TM)2 Duo CPU T5870 @ 2.00GHz, dual-core

Memory: 3.00 GB

Hard disk: HITACHI HTS543225L9SA00 (250 GB), power-on time: 5922 hours, temperature: 45℃

IP address: 192.168.0.17 (internal network)

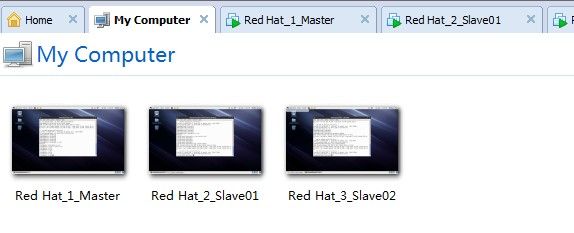

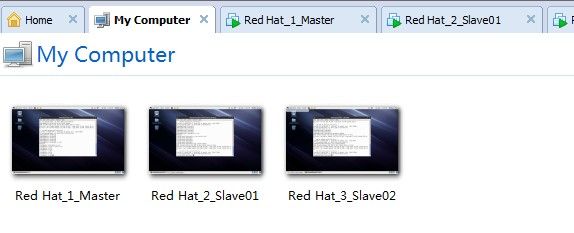

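I. Server Planning

Three virtual Linux servers, each allocated an 8 GB disk and 256 MB of memory. One acts as the master and the other two as slaves; a hadoop user is created on all of them.

The master machine takes the NameNode and JobTracker roles: it manages the distributed data and breaks jobs down into tasks for execution;

The 2 slave machines take the DataNode and TaskTracker roles: they store the distributed data and execute the tasks.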

1) master node:

VM name: Red Hat_1_Master

hostname: master.hadoop

IP: 192.168.70.101

2) slave01 node:

VM name: Red Hat_2_Slave01

hostname: slave01.hadoop

IP: 192.168.70.102

3) slave02 node:

VM name: Red Hat_3_Slave02

hostname: slave02.hadoop

IP: 192.168.70.103

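II. Installing the Virtual Machines

Virtualization software: VMware Workstation 9.0

Operating system: rhel-server-6.4-i386

The installation itself is skipped here. A note on cloning: once one Red Hat system is installed, the other 2 virtual systems can be created with the clone feature.

Steps:

Power off Red Hat_1_Master, then right-click it and choose Manage --> Clone

Click Next and select "Create a full clone"

Name the virtual machine and choose a directory

Finish the clone.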

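III. IP and hosts Configuration (as root)

Configure the IPs according to the plan in Section I.

Note: on the cloned systems the eth0 entry still carries the MAC address of the original machine, so fix the MAC on the 2 clones first:

vi /etc/udev/rules.d/70-persistent-net.rules

Delete the eth0 entry, rename eth1 to eth0, then save and exit;

vi /etc/sysconfig/network-scripts/ifcfg-eth0

Set HWADDR to the MAC of the eth0 entry as it now appears in the rules file. Done.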
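The two manual edits to the rules file can also be scripted. Below is a minimal sketch, assuming the file contains one `NAME="eth0"` line (the stale entry inherited from the original VM) and one `NAME="eth1"` line (the clone's real NIC). The function names `fix_udev_rules` and `current_mac` are made up for illustration.

```shell
# Hypothetical helper: drop the stale eth0 rule and rename eth1 to eth0.
# $1 is the rules file (normally /etc/udev/rules.d/70-persistent-net.rules).
fix_udev_rules() {
    rules_file=$1
    cp "$rules_file" "$rules_file.bak"      # keep a backup of the original
    sed -e '/NAME="eth0"/d' \
        -e 's/NAME="eth1"/NAME="eth0"/' \
        "$rules_file.bak" > "$rules_file"
}

# Hypothetical helper: print the MAC recorded for eth0 in the rules file,
# i.e. the value that belongs in HWADDR in ifcfg-eth0.
current_mac() {
    sed -n 's/.*ATTR{address}=="\([^"]*\)".*NAME="eth0".*/\1/p' "$1"
}
```

Run as root on each clone, `fix_udev_rules /etc/udev/rules.d/70-persistent-net.rules` followed by `current_mac` on the same file prints the MAC to copy into HWADDR.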

1. Edit the hosts file

vi /etc/hosts

In the hosts file on every node, add:

192.168.70.101 master.hadoop

192.168.70.102 slave01.hadoop

192.168.70.103 slave02.hadoop

Save and exit.
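Since the same three lines go into the hosts file on every node, a small script avoids typos. This is only a sketch: `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and an entry is skipped when the hostname is already listed, so the script is safe to run twice.

```shell
# Hypothetical helper: add "IP hostname" to a hosts file unless the
# hostname is already present. $1 = hosts file, $2 = IP, $3 = hostname.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    if ! grep -qwF -- "$name" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Apply the addressing plan from this article to the given hosts file.
add_cluster_hosts() {
    add_host_entry "$1" 192.168.70.101 master.hadoop
    add_host_entry "$1" 192.168.70.102 slave01.hadoop
    add_host_entry "$1" 192.168.70.103 slave02.hadoop
}
# Usage (as root, on each node): add_cluster_hosts /etc/hosts
```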

2. Set a static IP

vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE="eth0"

BOOTPROTO=static # static IP

HWADDR="00:0c:29:cd:32:a1"

IPADDR=192.168.70.102 # IP address

NETMASK=255.255.255.0 # subnet mask

GATEWAY=192.168.70.2 # default gateway

IPV6INIT="yes"

NM_CONTROLLED="yes"

ONBOOT="yes"

TYPE="Ethernet"

UUID="dd883cbe-f331-4f3a-8972-fd0e24e94704"

Save and exit; do the same on the other two machines, using each machine's own IPADDR and HWADDR.

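3. Set the hostname

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=slave01.hadoop # hostname

GATEWAY=192.168.70.2 # default gateway

Save and exit; make the same change on the other two machines, each with its own hostname.

Restart the network service, check the IPs, and make sure the host machine and the three virtual machines can all ping one another.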
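The ping round can be wrapped in a loop so that no node is forgotten. A rough sketch follows. `check_hosts` is a hypothetical helper, and the `PING_CMD` override is my own addition so the loop can be dry-run without a network.

```shell
# Hypothetical helper: ping each host and report whether it answered.
# PING_CMD may be overridden; by default each host gets 2 echo requests.
check_hosts() {
    for host in "$@"; do
        if ${PING_CMD:-ping -c 2} "$host" > /dev/null 2>&1; then
            echo "$host: reachable"
        else
            echo "$host: UNREACHABLE"
        fi
    done
}
# Usage: check_hosts master.hadoop slave01.hadoop slave02.hadoop
```

On RHEL 6 the network service is restarted with `service network restart`; running `check_hosts` afterwards on every virtual machine covers the VM-to-VM direction.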

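IV. Installing the JDK (jdk-6u45-linux-i586.bin)

Upload it from the host machine to the /usr directory on the master node via sftp

chmod +x jdk-6u45-linux-i586.bin

./jdk-6u45-linux-i586.bin

This unpacks a jdk1.6.0_45 directory in the current directory. Next, edit the system-wide environment variables (effective for all users)

vi /etc/profile

Append at the end: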

JAVA_HOME=/usr/jdk1.6.0_45

export JAVA_HOME

PATH=$PATH:$JAVA_HOME/bin

export PATH

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export CLASSPATH

Save and exit; apply the same configuration on the other two machines.

Load the environment variables:

source /etc/profile

Test:

java -version
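If `java -version` fails, the usual culprit is a wrong JAVA_HOME. The check below is a small sketch (`check_java_home` is a hypothetical name) that only verifies the variable is set and that it contains an executable `bin/java`.

```shell
# Hypothetical helper: verify that JAVA_HOME points at a usable JDK.
check_java_home() {
    if [ -z "${JAVA_HOME:-}" ]; then
        echo "JAVA_HOME is not set"
        return 1
    fi
    if [ ! -x "$JAVA_HOME/bin/java" ]; then
        echo "no executable java under $JAVA_HOME/bin"
        return 1
    fi
    echo "JAVA_HOME ok: $JAVA_HOME"
}
```

Running `check_java_home` after `source /etc/profile` on each of the three machines quickly shows which node was missed.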

V. Passwordless SSH Login

1. Enable key authentication (as root)

[root@master usr]# vi /etc/ssh/sshd_config

# Uncomment the following lines

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

#AuthorizedKeysCommand none

#AuthorizedKeysCommandRunAs nobody
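Uncommenting the three directives by hand is easy to get wrong across three machines, so here is a sed-based sketch. It assumes the directives are present in the file with a leading `#`, as in the stock RHEL 6 config; `enable_pubkey_auth` is a hypothetical helper name. The trailing whitespace in each pattern keeps `AuthorizedKeysCommand` untouched.

```shell
# Hypothetical helper: uncomment the three key-authentication directives.
# $1 is the config file (normally /etc/ssh/sshd_config).
enable_pubkey_auth() {
    conf=$1
    cp "$conf" "$conf.bak"                  # keep a backup of the original
    sed -e 's/^#[[:space:]]*\(RSAAuthentication[[:space:]]\)/\1/' \
        -e 's/^#[[:space:]]*\(PubkeyAuthentication[[:space:]]\)/\1/' \
        -e 's/^#[[:space:]]*\(AuthorizedKeysFile[[:space:]]\)/\1/' \
        "$conf.bak" > "$conf"
}
# Usage (as root): enable_pubkey_auth /etc/ssh/sshd_config
```

sshd only picks up the change after a restart, which on RHEL 6 is `service sshd restart`.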

2. Generate the public/private key pair (as the hadoop user)

[master@master ~]$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Generating public/private dsa key pair.

Your identification has been saved in /home/master/.ssh/id_dsa.

Your public key has been saved in /home/master/.ssh/id_dsa.pub.

The key fingerprint is:

f5:a6:3b:8f:cf:dd:e7:94:1f:64:37:f4:44:b1:71:e8 [email protected]

The key's randomart image is:

+--[ DSA 1024]----+

| ++|

| ..+|

| . . o.|

| . . E..|

| S o +o|

| o o +|

| . o.|

| .+ . o+|

| o++ ..=|

+-----------------+

[master@master ~]$

id_dsa.pub is the public key and id_dsa is the private key.

Append the public key to the authorized_keys file in the .ssh directory:

[master@master ~]# cd .ssh/

[master@master .ssh]# ls

id_dsa id_dsa.pub known_hosts

[master@master .ssh]#

[master@master .ssh]#

[master@master .ssh]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[master@master .ssh]#

[master@master .ssh]#

[master@master .ssh]$ ll

total 16

-rw-------. 1 master master 610 Oct 21 06:49 authorized_keys

-rw-------. 1 master master 668 Oct 21 06:48 id_dsa

-rw-r--r--. 1 master master 610 Oct 21 06:48 id_dsa.pub

-rw-r--r--. 1 master master 1181 Oct 21 06:50 known_hosts

3. Test the login

[hadoop@master .ssh]$ ssh localhost

The expected result is a login without a password prompt, but that is not what happened:

[hadoop@master .ssh]$ ssh localhost

hadoop@localhost's password:

Look for the cause:

[hadoop@master .ssh]$ ssh -v localhost

OpenSSH_5.3p1, OpenSSL 1.0.0-fips 29 Mar 2010

debug1: Reading configuration data /etc/ssh/ssh_config

debug1: Applying options for *

debug1: Connecting to localhost [::1] port 22.

debug1: Connection established.

debug1: identity file /home/hadoop/.ssh/identity type -1

debug1: identity file /home/hadoop/.ssh/id_rsa type -1

debug1: identity file /home/hadoop/.ssh/id_dsa type 2

...

...

debug1: No valid Key exchange context

debug1: Next authentication method: gssapi-with-mic

debug1: Unspecified GSS failure. Minor code may provide more information

Credentials cache file '/tmp/krb5cc_500' not found

debug1: Unspecified GSS failure. Minor code may provide more information

Credentials cache file '/tmp/krb5cc_500' not found

debug1: Unspecified GSS failure. Minor code may provide more information

debug1: Unspecified GSS failure. Minor code may provide more information

Credentials cache file '/tmp/krb5cc_500' not found

debug1: Next authentication method: publickey

debug1: Trying private key: /home/hadoop/.ssh/identity

debug1: Trying private key: /home/hadoop/.ssh/id_rsa

debug1: Offering public key: /home/hadoop/.ssh/id_dsa

debug1: Authentications that can continue: publickey,gssapi-keyex,gssapi-with-mic,password

debug1: Next authentication method: password

hadoop@localhost's password:

Switch to root and check the log:

[root@master ~]# tail -30 /var/log/secure

Sep 7 05:57:59 master sshd[3960]: Received signal 15; terminating.

Sep 7 05:57:59 master sshd[4266]: Server listening on 0.0.0.0 port 22.

Sep 7 05:57:59 master sshd[4266]: Server listening on :: port 22.

Sep 7 05:58:05 master sshd[4270]: Accepted password for root from ::1 port 44995 ssh2

Sep 7 05:58:06 master sshd[4270]: pam_unix(sshd:session): session opened for user root by (uid=0)

Sep 7 06:06:43 master su: pam_unix(su:session): session opened for user hadoop by root(uid=0)

Sep 7 06:07:09 master sshd[4395]: Authentication refused: bad ownership or modes for file /home/hadoop/.ssh/authorized_keys

Sep 7 06:07:09 master sshd[4395]: Authentication refused: bad ownership or modes for file /home/hadoop/.ssh/authorized_keys

sshd refuses the key file because its ownership or permissions are too loose. Restrict it to the owner:

chmod 600 ~/.ssh/authorized_keys
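The "bad ownership or modes" message can come from the key file or from one of its parent directories. As far as I know, with the default StrictModes setting sshd objects to any of them being writable by group or others. The sketch below lists the offenders; `find_loose_ssh_paths` is a hypothetical helper, and it checks modes only, not ownership.

```shell
# Hypothetical helper: list the paths that are writable by group or others.
# $1 is the user's home directory.
find_loose_ssh_paths() {
    for p in "$1" "$1/.ssh" "$1/.ssh/authorized_keys"; do
        [ -e "$p" ] || continue
        # -prune keeps find from descending; the path is printed only if
        # the group-write or other-write bit is set.
        if [ -n "$(find "$p" -prune \( -perm -020 -o -perm -002 \) -print)" ]; then
            echo "$p"
        fi
    done
}
# Usage: find_loose_ssh_paths /home/hadoop
```

An empty result means the modes are tight enough. Each path it prints needs a `chmod go-w` (or the conventional 700 for `.ssh` and 600 for `authorized_keys`).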

Test again: it passes.

[root@master .ssh]# ssh localhost

The authenticity of host 'localhost (::1)' can't be established.

RSA key fingerprint is 10:e8:ce:b5:2f:d8:e7:18:82:5a:92:06:6a:0d:6d:c2.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

[root@master ~]#

4. Distribute the public key to the slave nodes so that master can log in to them without a password

[hadoop@master .ssh]$ scp id_dsa.pub hadoop@slave01.hadoop:/home/hadoop/master.id_dsa.pub

[hadoop@master .ssh]$ scp id_dsa.pub hadoop@slave02.hadoop:/home/hadoop/master.id_dsa.pub

5. On each slave node, append master's public key to authorized_keys

[hadoop@slave02 ~]$ cat master.id_dsa.pub >> .ssh/authorized_keys
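On a fresh slave the `.ssh` directory may not exist yet, and a newly created authorized_keys can end up with permissions that sshd rejects. The following sketch handles both cases and skips a key that is already installed. `install_pubkey` is a hypothetical helper, not part of OpenSSH.

```shell
# Hypothetical helper: append a public key file to authorized_keys,
# creating .ssh if needed and skipping keys that are already present.
# $1 = public key file, $2 = home directory (defaults to $HOME).
install_pubkey() {
    pubkey=$1
    ssh_dir=${2:-$HOME}/.ssh
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # -x -F -f: treat each line of the key file as a fixed whole-line pattern
    if ! grep -qxF -f "$pubkey" "$ssh_dir/authorized_keys"; then
        cat "$pubkey" >> "$ssh_dir/authorized_keys"
    fi
    chmod 600 "$ssh_dir/authorized_keys"
}
# Usage (as hadoop, on each slave): install_pubkey ~/master.id_dsa.pub
```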

6. Log in to the slaves over ssh from the master host

[hadoop@master .ssh]$ ssh slave01.hadoop

Last login: Sat Sep 7 06:15:35 2013 from localhost

[hadoop@slave01 ~]$ exit

logout

Connection to slave01.hadoop closed.

[hadoop@master .ssh]$

[hadoop@master .ssh]$

[hadoop@master .ssh]$ ssh slave02.hadoop

Last login: Sat Sep 7 06:16:24 2013 from localhost

[hadoop@slave02 ~]$ exit

logout

Connection to slave02.hadoop closed.

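No password needed any more. Done.

Note: so far only master can log in to the slaves without a password. To make every node passwordless to every other node, collect the public keys of all nodes into one temporary file and overwrite each node's authorized_keys with its contents, which saves a lot of distributing and appending.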
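The merge step from the note could look roughly like this. It is a sketch under the assumption that every node's public key has already been copied to one machine. `merge_pubkeys` and the file names in the usage comment are illustrative only.

```shell
# Hypothetical helper: merge several public key files into a single file,
# dropping duplicate lines. $1 = output file, remaining args = key files.
merge_pubkeys() {
    out=$1
    shift
    sort -u "$@" > "$out"
    chmod 600 "$out"
}
# Usage sketch:
#   merge_pubkeys all_keys master.id_dsa.pub slave01.id_dsa.pub slave02.id_dsa.pub
#   then copy all_keys over ~/.ssh/authorized_keys on every node
```

Because the merged file replaces authorized_keys outright, any key that is not in the merged set loses access, so include master's key in the inputs.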