1. The Single-Source Shortest Path Problem

-- Input: Directed graph G = (V , E), edge lengths c_e for each e in E, source vertex s in V. [Can assume no parallel edges.]

-- Goal: For every destination v in V, compute the length (sum of edge costs) of a shortest s-v path.

2. Dijkstra's Algorithm

-- Good news: O(m log n) running time using heaps (n = number of vertices, m = number of edges)

-- Bad news:

(1) Not always correct with negative edge lengths

(2) Not very distributed (needs information about the whole graph)
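Here is a small Python sketch that makes bad news (1) concrete. The function name `dijkstra` and the edge format (a list of `(tail, head, cost)` triples over vertices 0..n-1) are illustrative assumptions, not part of these notes. On a three-vertex graph with one negative edge, the heap-based algorithm finalizes v before it scans the edge that would shorten v's path.

```python
import heapq
import math


def dijkstra(n, edges, s):
    """Heap-based Dijkstra: a vertex is finalized the first time it is popped,
    and its distance is never revised afterwards."""
    adj = [[] for _ in range(n)]  # adj[w] = list of (v, c_wv)
    for w, v, c in edges:
        adj[w].append((v, c))
    dist = [math.inf] * n
    dist[s] = 0
    done = [False] * n
    heap = [(0, s)]
    while heap:
        d, w = heapq.heappop(heap)
        if done[w]:
            continue  # stale heap entry for an already-finalized vertex
        done[w] = True
        for v, c in adj[w]:
            # Only vertices not yet finalized can have their distance lowered.
            if not done[v] and d + c < dist[v]:
                dist[v] = d + c
                heapq.heappush(heap, (dist[v], v))
    return dist


# Vertices: s = 0, v = 1, w = 2. True shortest s-v path: s -> w -> v, length 3 + (-2) = 1.
edges = [(0, 1, 2), (0, 2, 3), (2, 1, -2)]
print(dijkstra(3, edges, 0))  # [0, 2, 3]: v was finalized at 2 before edge (w, v) was scanned
```

If the edge (w , v) is given cost +1 instead of -2, the same code returns the correct distances, since all edge lengths are then nonnegative.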

3. Negative Cycles

-- Question: How to define shortest path when G has a negative cycle?

-- Solution #1: Compute the shortest s-v path, with cycles allowed.

Problem: Undefined. [If a negative cycle lies on some s-v walk, traversing it repeatedly makes the length arbitrarily negative.]

-- Solution #2: Compute shortest cycle-free s-v path.

Problem: NP-hard (no polynomial-time algorithm, unless P = NP)

-- Solution #3: (For now) Assume input graph has no negative cycles.

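Proposition: For every v, there is a shortest s-v path with at most n - 1 edges. [Reason: an s-v path with n or more edges visits some vertex twice and so contains a cycle; since no cycle is negative, removing it does not increase the length.]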

4. Optimal Substructure

-- Intuition: Exploit sequential nature of paths. Subpath of a shortest path should itself be shortest.

-- Issue: Not clear how to define "smaller" & "larger" subproblems.

-- Key idea: Artificially restrict the number of edges in a path.

Subproblem size <==> Number of permitted edges

5. Optimal Substructure Lemma

-- Lemma: Let G = (V , E) be a directed graph with edge lengths c_e and source vertex s.

[G might or might not have a negative cycle]

-- For every v in V, i in {1 , 2 , ... }, let P = shortest s-v path with at most i edges. (Cycles are permitted.)

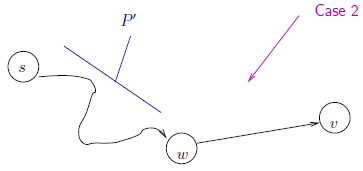

-- Case 1: If P has <= (i - 1) edges, it is a shortest s-v path with <= (i - 1) edges.

-- Case 2: If P has i edges with last hop (w , v), then P' (P with the last hop removed) is a shortest s-w path with at most (i - 1) edges.

6. The Recurrence

-- Notation: Let L(i , v) = minimum length of an s-v path with at most i edges.

-- With cycles allowed

-- Defined as +Infinity if there is no s-v path with at most i edges

-- Recurrence: For every v in V, i in {1 , 2 , ... }

L(i , v) = min { L(i-1 , v) [Case 1] , min(w,v) in E { L(i-1 , w) + c_wv } [Case 2] }
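A minimal Python sketch that evaluates the recurrence literally, top-down with memoization. The names `shortest_lengths` and `in_edges` and the `(w, v, c_wv)` edge-triple format are assumptions made for illustration. Since `math.inf + c` is still `math.inf` for any finite `c`, the +Infinity convention needs no special case.

```python
import math
from functools import lru_cache


def shortest_lengths(n, edges, s, i_max):
    """Return [L(i_max, v) for v in 0..n-1], evaluating the recurrence top-down."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))

    @lru_cache(maxsize=None)
    def L(i, v):
        if i == 0:
            return 0 if v == s else math.inf  # with 0 edges, only s is reachable
        best = L(i - 1, v)                    # Case 1: at most i - 1 edges suffice
        for w, c in in_edges[v]:              # Case 2: last hop is (w, v)
            best = min(best, L(i - 1, w) + c)
        return best

    return [L(i_max, v) for v in range(n)]
```

For example, with edges `[(0, 1, 2), (0, 2, 3), (2, 1, -2)]` and s = 0, `shortest_lengths(3, edges, 0, 1)` gives `[0, 2, 3]`, while `shortest_lengths(3, edges, 0, 2)` gives `[0, 1, 3]`: the cheaper 2-edge path to vertex 1 only becomes available once i = 2.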

7. The Bellman-Ford Algorithm

-- Let A = 2-D array (indexed by i and v)

-- Base case: A[0 , s] = 0; A[0 , v] = +Infinity for all v <> s.

-- For i = 1, 2, ... , n - 1

For each v in V

A[i , v] = min { A[i - 1 , v] , min(w,v) in E { A[i - 1 , w] + c_wv } }

-- If G has no negative cycle, then the algorithm is correct [with final answers = A[n - 1 , v]], since by the Proposition some shortest s-v path has at most n - 1 edges.

-- Total work is O( n Sum(v in V) { in-deg(v) } ) = O(mn)

n --> # iterations of outer loop (i.e. choices of i)

Sum(v in V) { in-deg(v) } --> work done in one iteration (every edge is examined once, as an in-edge of its head) = m.
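The table-filling algorithm can be sketched in Python as follows (a sketch, not a reference implementation; `bellman_ford_table` and the `(w, v, c_wv)` edge-triple format are assumed names). Grouping the edges by their head v up front means each round scans every edge exactly once, which is the Sum(v in V) { in-deg(v) } = m work per round counted above.

```python
import math


def bellman_ford_table(n, edges, s):
    """Fill A[i][v] for i = 0, ..., n - 1. If G has no negative cycle,
    A[n - 1][v] is the shortest s-v distance."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))
    A = [[math.inf] * n for _ in range(n)]
    A[0][s] = 0                          # base case
    for i in range(1, n):                # outer loop: n - 1 rounds
        for v in range(n):               # one round scans each edge once: m work
            best = A[i - 1][v]                         # Case 1
            for w, c in in_edges[v]:
                best = min(best, A[i - 1][w] + c)      # Case 2
            A[i][v] = best
    return A
```

On the hypothetical 4-vertex example `[(0, 1, 4), (0, 2, 2), (2, 1, -1), (1, 3, 2), (2, 3, 5)]` with s = 0, the last row `A[3]` is `[0, 1, 2, 3]`.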

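-- Stopping Early: Suppose for some j < n - 1, A[j , v] = A[j - 1 , v] for all vertices v. Then every later round recomputes exactly the same values (round j + 1 reads a row identical to the one round j read), so A[n - 1 , v] = A[j , v] for all v and the algorithm can stop after round j.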
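A sketch of the stopping rule in Python, under the same assumed edge format (`bellman_ford_early_stop` is a hypothetical name). It returns the final row together with the number of rounds actually executed.

```python
import math


def bellman_ford_early_stop(n, edges, s):
    """Bellman-Ford over rows A[0..n-1] that stops after the first round j
    in which A[j][v] == A[j-1][v] for every vertex v.
    Returns (distances, number of rounds actually run)."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))
    A = [[math.inf] * n]               # row 0 (base case)
    A[0][s] = 0
    for i in range(1, n):
        row = []
        for v in range(n):
            best = A[i - 1][v]
            for w, c in in_edges[v]:
                best = min(best, A[i - 1][w] + c)
            row.append(best)
        A.append(row)
        if row == A[i - 1]:
            # Round i + 1 would read an identical row, so it would reproduce it: stop.
            break
    return A[-1], len(A) - 1
```

For instance, a star with edges `(0, 1, 1)`, `(0, 2, 1)`, `(0, 3, 1)` and s = 0 stops after round 2 instead of running all 3 rounds.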

8. Checking for a Negative Cycle

-- Claim: G has no negative-cost cycle reachable from s <==> in the extended Bellman-Ford algorithm (run for one more round, i = n), A[n - 1 , v] = A[n , v] for all v in V.

-- Consequence: Can check for a negative cycle just by running Bellman-Ford for one extra iteration.

-- Proof.

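==> If no negative cycle is reachable from s, then (as in the Proposition) some shortest s-v path has at most n - 1 edges, so A[n - 1 , v] = A[n , v] = the true shortest-path distance. [Already proved in the correctness of Bellman-Ford.]

<== Assume A[n - 1 , v] = A[n , v] for all v in V. (Assume also that these are finite; vertices not reachable from s lie on no cycle reachable from s.)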

Let d(v) denote the common value of A[n - 1 , v] and A[n , v].

d(v) = A[n , v] = min { A[n - 1 , v] , min(w,v) in E { A[n - 1 , w] + c_wv } } = min { d(v) , min(w,v) in E { d(w) + c_wv } }

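Thus: d(v) <= d(w) + c_wv for all edges (w , v) in E

Equivalently: d(v) - d(w) <= c_wv

Consider an arbitrary cycle C reachable from s (so all its d-values are finite). Sum (w , v) in C { c_wv } >= Sum (w , v) in C { d(v) - d(w) } = 0, because the right-hand sum telescopes: each vertex of C appears once as v and once as w. Hence C has nonnegative cost. QED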
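The check from this section, sketched in Python (the function name `has_negative_cycle` and the edge-triple format are assumptions). It keeps only the latest row, runs the ordinary n - 1 rounds, and then compares one extra round against them. A negative cycle that is not reachable from s keeps its vertices at +Infinity in both rounds and is therefore, consistently with the claim, not reported.

```python
import math


def has_negative_cycle(n, edges, s):
    """Run Bellman-Ford for one extra round (i = n) and report whether any
    A[n, v] differs from A[n - 1, v], i.e. whether a negative-cost cycle
    is reachable from s."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))

    def next_round(prev):
        # One application of the recurrence: A[i, .] computed from A[i - 1, .].
        return [min([prev[v]] + [prev[w] + c for w, c in in_edges[v]]) for v in range(n)]

    prev = [math.inf] * n
    prev[s] = 0
    for _ in range(n - 1):  # ordinary rounds i = 1, ..., n - 1
        prev = next_round(prev)
    return next_round(prev) != prev  # extra round i = n
```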

9. Space Optimization

-- Only need the A[i - 1 , v]'s to compute the A[i , v]'s ==> only O(n) space is needed to remember the current and last rounds of subproblems [only O(1) per destination!]

-- Concern: Without a filled-in table, how do we reconstruct the actual shortest paths?

-- Predecessor pointers: Compute a second table B, where B[i , v] = 2nd-to-last vertex on a shortest s-v path with at most i edges (or NULL if no such path exists)

-- Reconstruction (assuming no negative cycle): Tracing back the predecessor pointers (the B[n - 1 , v]'s, i.e., the last hop of a shortest s-v path) from v to s yields a shortest s-v path.
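A Python sketch of the O(n)-space version with predecessor pointers (the names `bellman_ford_with_paths` and `path_to` are hypothetical, and the sketch assumes no negative cycle is reachable from s). Each round starts from Case 1, inheriting the previous A and B values, and switches to Case 2 only on a strict improvement, so ties keep the old pointer.

```python
import math


def bellman_ford_with_paths(n, edges, s):
    """Bellman-Ford keeping only the previous round's A and B values (O(n) space).
    Assumes no negative cycle is reachable from s."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))
    A = [math.inf] * n
    A[s] = 0
    B = [None] * n                       # base case: B[0, v] = NULL
    for _ in range(1, n):                # rounds i = 1, ..., n - 1
        new_A, new_B = A[:], B[:]        # Case 1 by default: keep A[i - 1, v], B[i - 1, v]
        for v in range(n):
            for w, c in in_edges[v]:
                if A[w] + c < new_A[v]:  # Case 2 wins: w is the new last hop
                    new_A[v], new_B[v] = A[w] + c, w
        A, B = new_A, new_B
    return A, B


def path_to(B, s, v):
    """Trace predecessor pointers from v back to s; None if v is unreachable."""
    if v != s and B[v] is None:
        return None
    path = [v]
    while path[-1] != s:
        path.append(B[path[-1]])
    return path[::-1]
```

On the hypothetical 4-vertex example `[(0, 1, 4), (0, 2, 2), (2, 1, -1), (1, 3, 2), (2, 3, 5)]` with s = 0, the pointers end up as `[None, 2, 0, 1]` and `path_to(B, 0, 3)` returns `[0, 2, 1, 3]`, a path of length 2 + (-1) + 2 = 3.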

10. Computing Predecessor Pointers

-- Recall: A[i , v] = min { (1) A[i - 1 , v] , (2) min(w,v) in E { A[i - 1 , w] + c_wv } }

-- Base case: B[0 , v] = NULL for all v in V

-- To compute B[i , v] with i > 0:

Case 1 (A[i - 1 , v] achieves the minimum): B[i , v] = B[i - 1 , v]

Case 2 (some A[i - 1 , w] + c_wv achieves the minimum): B[i , v] = the vertex w achieving it (i.e., the new last hop)

Correctness: Computing A[i , v] is a brute-force search through the (1 + in-degree(v)) candidate optimal solutions; B[i , v] just caches the last hop of the winner.

To reconstruct a negative-cost cycle: Use depth-first search to check for a cycle of predecessor pointers after each round (any such cycle must be a negative-cost cycle).
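A Python sketch of this idea (all names are hypothetical). Because every vertex has at most one predecessor pointer, the depth-first search here reduces to following pointers from each start vertex. The main loop returns None as soon as a round changes nothing (then no negative cycle is reachable from s). Otherwise it relies on the fact that, when a negative cycle is reachable from s, a pointer cycle eventually appears: the estimates on the negative cycle decrease without bound, while acyclic pointers would keep every estimate at least the length of some simple path.

```python
import math


def find_pred_cycle(B):
    """Return the vertices of a cycle of predecessor pointers in edge order,
    or None. Each vertex has at most one pointer, so the depth-first search
    reduces to following pointers from each start vertex."""
    n = len(B)
    state = [0] * n  # 0 = unvisited, 1 = on the current walk, 2 = finished
    for start in range(n):
        walk, v = [], start
        while v is not None and state[v] == 0:
            state[v] = 1
            walk.append(v)
            v = B[v]
        if v is not None and state[v] == 1:  # walked back into the current walk
            cycle = walk[walk.index(v):]     # v, B[v], B[B[v]], ...
            return cycle[::-1]               # edge order: (cycle[k], cycle[k+1]) is an edge
        for u in walk:
            state[u] = 2
    return None


def negative_cycle(n, edges, s):
    """Round-by-round Bellman-Ford with predecessor pointers, checking for a
    pointer cycle after every round. Returns a negative cycle or None."""
    in_edges = [[] for _ in range(n)]  # in_edges[v] = list of (w, c_wv)
    for w, v, c in edges:
        in_edges[v].append((w, c))
    A = [math.inf] * n
    A[s] = 0
    B = [None] * n
    while True:
        new_A, new_B = A[:], B[:]
        for v in range(n):
            for w, c in in_edges[v]:
                if A[w] + c < new_A[v]:
                    new_A[v], new_B[v] = A[w] + c, w
        if new_A == A:
            return None  # a round changed nothing: no reachable negative cycle
        A, B = new_A, new_B
        cycle = find_pred_cycle(B)
        if cycle is not None:
            return cycle
```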

11. From Bellman-Ford to Internet Routing

-- The Bellman-Ford algorithm is intuitively "distributed".

-- Switch from source-driven to destination-driven [just reverse all directions in the Bellman-Ford algorithm]. For every relevant destination t, each vertex v stores the shortest-path distance from v to t and the first hop of a shortest v-t path ("distance vector protocols").

-- Asynchrony: Can't assume all A[i - 1 , v]'s get computed before all A[i , v]'s. Switch from "pull-based" to "push-based": as soon as A[i , v] < A[i - 1 , v], v notifies all of its neighbors. The algorithm is guaranteed to converge eventually, assuming no negative cycles [Reason: each update strictly decreases the sum of the shortest-path estimates].
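A sequential Python simulation of the push-based, destination-driven version (a sketch under stated assumptions: `distance_vector`, `upstream`, `pending`, and `next_hop` are hypothetical names, a FIFO queue stands in for asynchronous message delivery, and the graph is assumed to have no negative cycles). Each vertex u keeps an estimate of its distance to t plus the first hop of the corresponding path; whenever v's estimate drops, v pushes it to every u with an edge (u , v).

```python
import math
from collections import deque


def distance_vector(n, edges, t):
    """Push-based, destination-driven Bellman-Ford toward destination t.
    Assumes no negative cycles. Returns (dist, next_hop)."""
    upstream = [[] for _ in range(n)]  # upstream[v] = list of (u, c_uv) for edges (u, v)
    for u, v, c in edges:
        upstream[v].append((u, c))
    dist = [math.inf] * n              # dist[u] = current estimate of the u-t distance
    next_hop = [None] * n              # first hop of u's current best path to t
    dist[t] = 0
    pending = deque([t])               # vertices whose estimate dropped and must notify
    while pending:
        v = pending.popleft()
        for u, c in upstream[v]:       # v pushes its estimate to each neighbor u
            if dist[v] + c < dist[u]:
                dist[u] = dist[v] + c
                next_hop[u] = v
                pending.append(u)      # u's estimate dropped, so u must notify in turn
    return dist, next_hop
```

For t = 3 on the hypothetical 4-vertex example `[(0, 1, 4), (0, 2, 2), (2, 1, -1), (1, 3, 2), (2, 3, 5)]`, the result is `dist == [3, 2, 1, 0]` and `next_hop == [2, 3, 1, None]`, so vertex 0 forwards to 2, then 1, then t.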

-- Handling Failures: Each vertex v maintains the entire shortest path to t, not just the next hop.

Con: More space required.

Pro#1: More robust to failures (avoids the "counting to infinity" problem).

Pro#2: Permits more sophisticated route selection (e.g., if you care about intermediate stops).