Digging more into the Molehill APIs

A few months ago, at MAX 2010 in Los Angeles, we announced the introduction of the Molehill APIs in the Adobe Flash runtimes on mobile and desktop. For more information, check the “Molehill” page on Adobe Labs. I wanted to give you guys more technical details about Molehill and how it is going to work from an ActionScript developer's standpoint.

So let's get started!

What is Molehill?

“Molehill” is the codename for the set of 3D GPU-accelerated APIs that will be exposed in ActionScript 3 in the Adobe Flash Player and Adobe AIR. This will enable high-end 3D rendering inside the Adobe Flash Platform. Molehill will rely on DirectX 9 on Windows and OpenGL 1.3 on MacOS and Linux. On mobile platforms like Android, Molehill will rely on OpenGL ES 2. Technically, the Molehill APIs are truly 3D, GPU-accelerated and based on programmable shaders. They will expose features that 3D developers have long been looking for in Flash, like programmable vertex and fragment shaders to enable things like vertex skinning on the GPU for bone animation, but also native z-buffering, stencil color buffer, cube textures and more.

In terms of performance, Adobe Flash Player 10.1 today renders thousands of non-z-buffered triangles at approximately 30 Hz. With the new 3D APIs, developers can expect hundreds of thousands of z-buffered triangles to be rendered at HD resolution in full screen at around 60 Hz. Molehill will make it possible to deliver sophisticated 3D experiences across almost every computer and device connected to the Internet. To get an idea of how Molehill performs and see a live demo, check this video.

The way it works

The existing Flash Player 2.5D APIs that we introduced in Flash Player 10 are not deprecated; the Molehill APIs will offer a solution for advanced 3D rendering that requires full GPU acceleration. Depending on the project you are working on, you will decide which APIs you want to use.

We recently introduced the concept of “Stage Video” in Flash Player 10.2, available as a beta on Adobe Labs.

Stage Video relies on the same design, enabling full GPU acceleration for video, from decoding to presentation. With this new rendering model, the Adobe Flash Player does not present the video frames or 3D buffer inside the display list but inside a texture sitting behind the stage, painted through the GPU. This allows the Adobe Flash Player to paint the content available in graphics card memory directly on screen. No read-back is required any more to retrieve the frames from the GPU and push them on screen through the display list on the CPU.

As a result, because the 3D content sits behind the Flash Player stage and is not part of the display list, the Context3D and Stage3D objects are not display objects. So remember that you cannot interact with them as you would with any DisplayObject: rotations, blend modes, filters and many other effects cannot be applied.

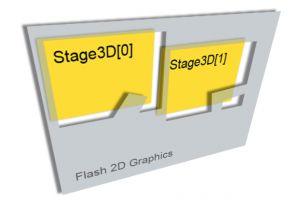

The following figure illustrates the idea:

Of course, as you can see, 2D content can overlay the 3D content with no problems, but the opposite is not possible. However, we will provide an API which will allow you to draw your 3D content to a BitmapData if required.

From an ActionScript API standpoint, as a developer you interact with two main objects, a Stage3D and a Context3D object. You request a 3D context from the Adobe Flash Player and a Context3D object will be created for you. So now you may wonder: what happens if the GPU driver is incompatible? Do I get a black screen, failing silently?

The Flash Player will still return a Context3D object to you, but it will use a software fallback internally, so you will still get all the Molehill features and the same API, but running on the CPU. To achieve this, we rely on a very fast CPU rasterizer from TransGaming Inc. called “SwiftShader”. The great news is that even when running in software, SwiftShader runs about 10 times faster than today’s vector rasterizer available in Flash Player 10.1, so you can expect some serious performance improvements even in software mode.

The beauty of the “Molehill” APIs is that you do not have to worry about what is happening internally. Am I running on DirectX, OpenGL or SwiftShader? Should I use a different API for OpenGL when on MacOS and Linux, or for OpenGL ES 2 when running on a mobile platform? No, everything is transparent for you as a developer: you program against one single API and the Adobe Flash Player will handle this for you internally and do the translation behind the scenes.

It is important to remember that the Molehill APIs do not use what is called a fixed-function pipeline but a programmable pipeline only, which means that you will have to work with vertex and fragment shaders to display anything on screen. For this, you will be able to upload your shaders to the graphics card as pure low-level AGAL (“Adobe Graphics Assembly Language”) bytecode in a ByteArray. As a developer you have two ways to do this: write your shaders at the assembly level, which requires an advanced understanding of how shaders work, or use a higher-level language like Pixel Bender 3D, which will expose a more natural way to program your shaders and compile the appropriate AGAL bytecode for you.

In order to represent your triangles, you will need to work with VertexBuffer3D and IndexBuffer3D objects by passing vertex coordinates and indices. Once your vertex and fragment shaders are ready, you can upload them to the graphics card through a Program3D object. Basically, a vertex shader deals with the position of the vertices used to draw your triangles, whereas a fragment shader handles the appearance of the pixels used to texture your triangles.
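To make the vertex/index split concrete, here is a small CPU-side sketch in Python (not ActionScript, and not the Molehill API itself). The quad data and the `assemble_triangles` helper are hypothetical names made up for illustration; the only point is that an index list lets several triangles share the same vertices.

```python
# Hypothetical data: four corner positions (x, y, z) of a quad.
vertices = [
    (-1.0, -1.0, 0.0),
    ( 1.0, -1.0, 0.0),
    ( 1.0,  1.0, 0.0),
    (-1.0,  1.0, 0.0),
]

# Six indices describe two triangles that share vertices 0 and 2.
indices = [0, 1, 2, 2, 3, 0]


def assemble_triangles(vertices, indices):
    """Group the index list in threes and look up each referenced vertex."""
    if len(indices) % 3 != 0:
        raise ValueError("index count must be a multiple of 3")
    return [
        tuple(vertices[i] for i in indices[n:n + 3])
        for n in range(0, len(indices), 3)
    ]


triangles = assemble_triangles(vertices, indices)
```

Four vertices and six indices are enough for two triangles here; without the index list, the two shared corners would have to be stored twice.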

The following figure illustrates the difference between the types of shaders:

As stated before, Molehill does not use a fixed function pipeline, hence developers will be free to create their own custom shaders and totally control the rendering pipeline. So let’s focus a little bit on the concept of vertex and fragment shaders with Molehill.

Digging into vertex and fragment shaders

To illustrate the idea, here is a simple example of low-level shading assembly you could write to display your triangles and work at the pixel level with Molehill. Let's get ready, because we are going to go very low-level and code shaders to the metal. Of course, if you hate this, do not worry: you will be able to use a higher-level shading language like Pixel Bender 3D.

To create the shader program that we will upload to the graphics card, we first need a vertex shader (Context3DProgramType.VERTEX), which should at least output a clip-space position. To do this, we multiply va0 (the position attribute of each vertex) by vc0 (vertex constant 0), where our projection matrix is stored, and output the result through op (standing for "output position" of the vertex shader):

Now you may wonder, what is this m44 thing? Where does it come from?

It is actually a 4x4 matrix transformation: it projects our vertices according to the projection matrix we defined. We could have written our shader as follows, by manually calculating the dot product on each attribute, but the m44 instruction (performing a 4x4 matrix transform on all attributes in one line) is way shorter:
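The equivalence between m44 and four dot products can be checked on the CPU. The following Python sketch is only an emulation of the arithmetic, not AGAL: the `dp4` and `m44` helper names mirror the opcodes, and I am assuming that each row of the matrix sits in its own constant register (vc0 to vc3). The translation matrix is a hypothetical stand-in for a real projection matrix.

```python
def dp4(a, b):
    """Four-component dot product, what a single dp4 instruction computes."""
    return sum(x * y for x, y in zip(a, b))


def m44(vector, matrix_rows):
    """Emulate m44: one dot product per matrix row (assumed layout:
    row 0 in vc0, row 1 in vc1, row 2 in vc2, row 3 in vc3)."""
    return [dp4(vector, row) for row in matrix_rows]


# Hypothetical matrix: translate by +2 on the x axis.
translate_x = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

position = [0.5, -0.5, 0.0, 1.0]  # plays the role of va0 (x, y, z, w)

# Long form: one dot product per output component.
long_form = [
    dp4(position, translate_x[0]),  # op.x
    dp4(position, translate_x[1]),  # op.y
    dp4(position, translate_x[2]),  # op.z
    dp4(position, translate_x[3]),  # op.w
]

# Short form: the whole transform in one call.
short_form = m44(position, translate_x)
```

Both forms produce the same output position; m44 simply saves three instructions.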

Remember that vc0 (vertex constant 0) is actually just our projection matrix stored at this index, passed earlier as a constant through the setProgramConstantsFromMatrix API on the Context3D object:

As with our matrix constant, va0 (vertex attributes 0), for the position, needs to be defined, and we did this through the setVertexBufferAt API on the Context3D object.

In our example, the vertex shader passes the vertex color (va1) to the fragment shader through v0, using the mov instruction, so that it can actually paint the pixels of our triangles. To do this, we could write the following:

And as you can imagine, va1 (vertex attributes 1), for the color, was defined through setVertexBufferAt to expose our pixel colors (float3) in the shaders.

Our vertex positions and colors are defined in our VertexBuffer3D object:
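To picture how one buffer can feed both va0 and va1, here is a Python sketch of an interleaved layout. It is an illustration only: the triangle data, the six-floats-per-vertex layout (x, y, z followed by r, g, b) and the `read_attribute` helper are assumptions, not the actual VertexBuffer3D contents.

```python
FLOATS_PER_VERTEX = 6  # assumed layout: x, y, z, r, g, b

# Hypothetical triangle: one red, one green and one blue corner.
vertex_data = [
    -1.0, -1.0, 0.0,   1.0, 0.0, 0.0,
     0.0,  1.0, 0.0,   0.0, 1.0, 0.0,
     1.0, -1.0, 0.0,   0.0, 0.0, 1.0,
]


def read_attribute(data, vertex_index, offset, size, stride=FLOATS_PER_VERTEX):
    """Return `size` floats starting `offset` floats into the given vertex."""
    start = vertex_index * stride + offset
    return data[start:start + size]


# Attribute 0 (position) starts at offset 0, attribute 1 (color) at offset 3.
positions = [read_attribute(vertex_data, i, 0, 3) for i in range(3)]
colors = [read_attribute(vertex_data, i, 3, 3) for i in range(3)]
```

Each attribute is described by where it starts inside a vertex and how many floats it spans, which is the kind of information an offset and a float3 format convey.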

Our vertex shader is defined; now we need to define and upload our fragment shader (Context3DProgramType.FRAGMENT). The idea is to retrieve each vertex color passed in (copied from va1 to v0) and output this color through oc (the output color):

As you can imagine, a fragment shader should always output a color. Then, we need to upload all this to the Context3D object.

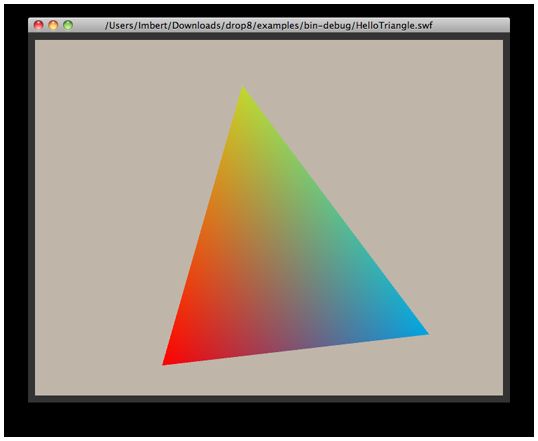

If we compile and run those shaders, we would get the following result:

Now, let’s say we need to invert the colors of each pixel. It would be really easy: as this operation is performed on the pixel colors only, we would just modify our fragment shader and use the sub opcode to subtract the color, as follows:

Here, we invert the color of each pixel by subtracting each pixel color from 1 (white). The white pixel we subtract from is stored in a fragment constant (fc1) that we passed by using the setProgramConstantsFromVector API:

The final pixel color is then stored in a fragment temporary register (ft0) and passed as the final output color.
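Here is what that subtraction amounts to, emulated in Python rather than AGAL. The `sub` and `invert_fragment` helpers are hypothetical names for illustration, and I only handle the three color channels here, leaving alpha aside.

```python
def sub(a, b):
    """Component-wise a - b, what the sub opcode computes."""
    return [x - y for x, y in zip(a, b)]


def invert_fragment(v0, fc1=(1.0, 1.0, 1.0)):
    """Emulate the inverting fragment shader on one pixel.

    v0  : interpolated color received from the vertex shader
    fc1 : the white constant we subtract from
    """
    ft0 = sub(fc1, v0)  # temporary register holds white minus the color
    return ft0          # this is what would be written to the output color


inverted = invert_fragment([1.0, 0.0, 0.25])
```

Pure red channels drop to 0, empty channels rise to 1, and applying the function twice gives back the original color.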

By using this modified fragment shader, we end up with the following result:

As another exercise, let's apply a sepia-tone filter.

To achieve this, we first need to convert the color to grayscale, then to sepia. We would use the following fragment shader for this:

As usual, we would have defined our constants using the setProgramConstantsFromVector API:
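The two steps (grayscale, then sepia) can be sketched on the CPU in Python. This is an emulation, not the shader itself, and the constants are my assumptions for the example: the grayscale weights are the common Rec. 601 luma weights and the sepia tint is an arbitrary warm multiplier, so the values in your own fragment constants may differ.

```python
LUMA_WEIGHTS = (0.299, 0.587, 0.114)  # assumed grayscale weights (Rec. 601)
SEPIA_TINT = (1.2, 1.0, 0.8)          # assumed warm tint, illustrative only


def dp3(a, b):
    """Three-component dot product, as a dp3 instruction would compute."""
    return sum(x * y for x, y in zip(a, b))


def sepia_fragment(rgb):
    """Convert one pixel to grayscale, then tint it toward sepia."""
    gray = dp3(rgb, LUMA_WEIGHTS)
    # Clamp to 1.0 so the result stays in the displayable range.
    return [min(1.0, gray * tint) for tint in SEPIA_TINT]


pixel = sepia_fragment([0.2, 0.4, 0.6])
```

Whatever the input color, the output always has red >= green >= blue, which is what gives the image its brownish tone.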

By using such a fragment shader, we would end up with the following result:

As you can imagine, this gives you a lot of power and will allow you to go much further in terms of shading, handling things like lighting through vertex or fragment shading, fog, animation through vertex skinning, and more.

OK, last one: let's now apply a texture to our triangle from a BitmapData. To do this, we need to pass uv values from our vertex shader to our fragment shader and then use those values to sample our texture in the fragment shader.

To pass the uv values, we would need to modify our vertex shader this way:

Our uv coordinates are now copied from va1 to v0, ready to be passed to the fragment shader. Notice that we do not pass any vertex color anymore to the fragment shader, just the uv coordinates.

As expected, we defined our uv values for each vertex (float2) through va1 with setVertexBufferAt:

Our vertex positions and uv values are defined in our VertexBuffer3D object:

Then we retrieve the uv values in our fragment shader and sample our texture:
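If you wonder what "sampling" a texture with uv values means, here is a CPU-side Python sketch of the simplest possible lookup. It is not how the GPU implements it: the 2x2 texture, the `sample_nearest` helper, the nearest-neighbour filtering and the clamping of out-of-range coordinates are all assumptions chosen to keep the example short.

```python
# Hypothetical 2x2 texture, stored as rows of (r, g, b) pixels.
texture = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def sample_nearest(texture, u, v):
    """Map uv in [0, 1] to the nearest texel (no filtering, clamped edges)."""
    height = len(texture)
    width = len(texture[0])
    # Clamp the coordinates so out-of-range uv values stay on the texture.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Scale to texel coordinates; u == 1.0 must still hit the last column.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


top_left = sample_nearest(texture, 0.0, 0.0)
top_right = sample_nearest(texture, 0.75, 0.25)
bottom_right = sample_nearest(texture, 1.0, 1.0)
```

The fragment shader does this kind of lookup once per pixel, using the uv values received through v0.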

To define our texture, we instantiate our BitmapData, upload it to a Texture object and upload it to the GPU:

And then to access it from fs1, we set it:

By using this modified shader program, we end up with this:

I will cover new effects in later tutorials too, like per-fragment fog or heat signature with texture lookup.

Of course, we just covered here how shaders work with Molehill. To control your pixels you need triangles and vertices and indices defining them in your scene. For this, you will need other objects like VertexBuffer3D and IndexBuffer3D, attached to your Context3D object.

The following figure illustrates the overall interaction of objects:

As you can see, the Molehill APIs are very low-level and expose features for advanced 3D developers who want to work at such a level with 3D. Of course, some developers will prefer working with higher-level frameworks, which expose ready-to-go APIs, and we took care of that.

Building mountains out of Molehill

We know that many ActionScript 3 developers would prefer having a light, a camera and a plane to work with rather than a vertex buffer and shader bytecode. So to make sure that everyone can enjoy the power of Molehill, we are actively working with existing 3D frameworks like Alternativa3D, Flare3D, Away3D, Sophie3D, Yogurt3D and more. Today most of these frameworks are already Molehill-enabled and will be available to you when Molehill ships in an upcoming version of the Adobe Flash runtimes.

Most of the developers from these frameworks were at Max this year to present sessions about how they leveraged Molehill in their respective framework. We expect developers to build their engine on top of Molehill, hence, advanced 3D developers and non-3D developers will be able to benefit from Molehill.

I hope you enjoyed this little deep dive into Molehill. Stay tuned for more Molehill stuff soon!