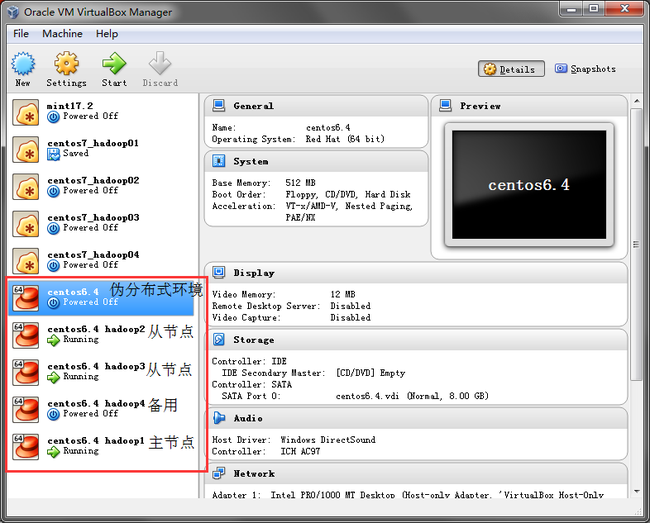

Pseudo-distributed environment setup: http://mvplee.iteye.com/blog/2212629

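Clone the pseudo-distributed environment previously built in VirtualBox to create three virtual machines.

Change the hostname of each machine to hadoop1, hadoop2, and hadoop3 respectively. Edit the two files below on every host, then reboot:

    /etc/sysconfig/network
    /etc/hosts
    reboot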
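Name resolution problems are a common cause of trouble in the later SSH and Hadoop steps, so it can help to confirm that every cluster name has an entry before going on. The following is a minimal sketch, not part of the original setup; the helper name `missing_hosts` is hypothetical, and it assumes the usual hosts-file layout of an IP address followed by one or more names.

```shell
#!/bin/sh
# Print every expected hostname that has no entry in the given hosts file.
# Usage: missing_hosts HOSTS_FILE NAME...
# (missing_hosts is a hypothetical helper written for this post.)
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name as a whole field after the IP column.
        if ! sed 's/#.*//' "$hosts_file" |
            awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Example: report which of the three cluster names still need an entry.
# missing_hosts /etc/hosts hadoop1 hadoop2 hadoop3
```

An empty result means all three names are present; any name that is printed still needs a line in /etc/hosts on that machine.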

Edit the following configuration files on hadoop1:

    core-site.xml
    mapred-site.xml
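The post does not show the edited files, so the sketch below is only a guess at what the relevant entries might look like once they point at hadoop1. `fs.default.name` and `mapred.job.tracker` are the Hadoop 1.x property names for the NameNode and JobTracker addresses; the ports 9000 and 9001, the helper name `write_site_conf`, and the scratch directory are assumptions. Any other properties already in the real files (for example a temporary directory setting) are left out here.

```shell
#!/bin/sh
# Write minimal Hadoop 1.x site files that point at the master host.
# Usage: write_site_conf OUT_DIR MASTER_HOST
# Ports 9000 and 9001 are assumed; keep whatever the existing files use.
write_site_conf() {
    out_dir=$1
    master=$2
    mkdir -p "$out_dir"

    cat > "$out_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://${master}:9000</value>
  </property>
</configuration>
EOF

    cat > "$out_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>${master}:9001</value>
  </property>
</configuration>
EOF
}

# Example: generate the files in a scratch directory and diff them against conf/.
# write_site_conf /tmp/hadoop-conf-sketch hadoop1
```

Writing to a scratch directory and comparing with the files under conf/ avoids overwriting settings carried over from the pseudo-distributed installation.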

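Configure SSH on each host. Generate a key pair and append the public key to authorized_keys (shown here on hadoop3):

    [root@hadoop3 local]# ssh-keygen -t rsa
    [root@hadoop3 local]# cd /root/.ssh
    [root@hadoop3 .ssh]# cat id_rsa.pub >> authorized_keys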

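On hadoop2 and hadoop3, copy each host's own public key to hadoop1, so that both hadoop2 and hadoop3 can log in to hadoop1 without a password. Then check the result on hadoop1:

    [root@hadoop2 .ssh]# ssh-copy-id -i hadoop1
    [root@hadoop3 .ssh]# ssh-copy-id -i hadoop1
    [root@hadoop1 .ssh]# more authorized_keys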

Copy authorized_keys from hadoop1 to hadoop2 and hadoop3, so that all three hosts hold identical authorized_keys files and can log in to one another without a password:

    [root@hadoop1 .ssh]# scp /root/.ssh/authorized_keys hadoop2:/root/.ssh/
    [root@hadoop1 .ssh]# scp /root/.ssh/authorized_keys hadoop3:/root/.ssh/
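One quick way to confirm that the merged authorized_keys file really contains a key from every host is to list the comment field of each entry. This is a small sketch under two assumptions: the keys were generated with the default `ssh-keygen` comment (user@host), and no entry carries an options prefix. The helper name `key_owners` is made up for illustration.

```shell
#!/bin/sh
# List the comment field (normally user@host) of every public key in an
# authorized_keys file, one per line, sorted and de-duplicated.
# Usage: key_owners AUTHORIZED_KEYS_FILE
# (key_owners is a hypothetical helper written for this post.)
key_owners() {
    awk '$1 ~ /^ssh-/ && NF >= 3 { print $3 }' "$1" | sort -u
}

# Example: this should list one entry per host on each machine.
# key_owners /root/.ssh/authorized_keys
```

If one of the three hosts is missing from the output, its public key was not appended before the file was distributed.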

Delete the logs and tmp directories inside the Hadoop directory on hadoop1:

    [root@hadoop1 hadoop-1.1.2]# rm -rf logs
    [root@hadoop1 hadoop-1.1.2]# rm -rf tmp

From hadoop1, copy the JDK directory and the Hadoop directory to hadoop2 and hadoop3:

    [root@hadoop1 local]# scp -r /usr/local/jdk1.6.0_24 hadoop2:/usr/local/jdk1.6.0_24
    [root@hadoop1 local]# scp -r /usr/local/jdk1.6.0_24 hadoop3:/usr/local/jdk1.6.0_24
    [root@hadoop1 local]# scp -r /usr/local/hadoop-1.1.2 hadoop2:/usr/local/hadoop-1.1.2
    [root@hadoop1 local]# scp -r /usr/local/hadoop-1.1.2 hadoop3:/usr/local/hadoop-1.1.2

From hadoop1, copy /etc/profile to hadoop2 and hadoop3, then reload it on each of them:

    [root@hadoop1 local]# scp /etc/profile hadoop2:/etc/
    [root@hadoop1 local]# scp /etc/profile hadoop3:/etc/
    [root@hadoop2 local]# source /etc/profile
    [root@hadoop3 local]# source /etc/profile
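The copy steps repeat the same `scp` command for every path and every host, which gets tedious as the cluster grows. A possible way to generate them in a loop is sketched below. It only prints the commands so they can be reviewed first; the helper name `push_commands` is hypothetical, and the sketch assumes each path should land at the same location on the remote host.

```shell
#!/bin/sh
# Print the scp commands needed to push a list of paths to a list of hosts.
# Nothing is executed; pipe the output to sh once it looks right.
# Usage: push_commands "HOST..." PATH...
# (push_commands is a hypothetical helper written for this post.)
push_commands() {
    hosts=$1
    shift
    for host in $hosts; do
        for src in "$@"; do
            # Copy each path to the same location on the remote host.
            echo "scp -r ${src} ${host}:${src}"
        done
    done
}

push_commands "hadoop2 hadoop3" /usr/local/jdk1.6.0_24 /usr/local/hadoop-1.1.2 /etc/profile
```

Running `source /etc/profile` on hadoop2 and hadoop3 is still needed afterwards, since that has to happen in a shell on each of those hosts.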

Configure the Hadoop master and slave nodes. On hadoop1, add hadoop2 and hadoop3 to the slaves file as slave nodes. The masters file holds the host that runs the SecondaryNameNode.

    [root@hadoop1 local]# more hadoop-1.1.2/conf/slaves
    #localhost
    hadoop2
    hadoop3

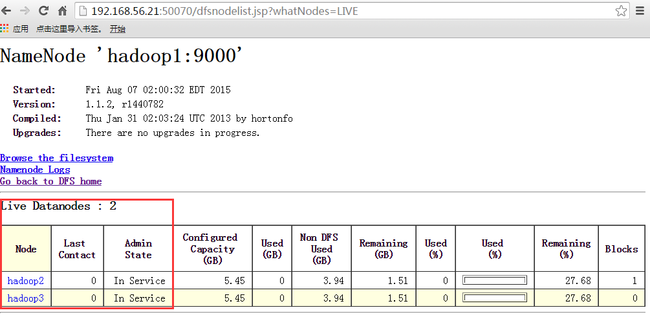

On hadoop1, format the NameNode and start Hadoop, then run jps on each host to check which daemons are running:

    [root@hadoop1 local]# hadoop namenode -format
    [root@hadoop1 local]# start-all.sh
    [root@hadoop1 local]# jps
    5740 JobTracker
    5659 SecondaryNameNode
    5492 NameNode
    5839 Jps
    [root@hadoop2 local]# jps
    3473 TaskTracker
    3568 Jps
    3365 DataNode
    [root@hadoop3 local]# jps
    2233 TaskTracker
    2310 Jps
    2142 DataNode
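Reading the jps listings by eye works for three hosts, but the same check can be scripted. The sketch below reads `jps` output on standard input and prints any expected daemon that is absent; the helper name `missing_daemons` is hypothetical, and it assumes the two-column "pid name" format shown in the listings.

```shell
#!/bin/sh
# Read `jps` output on stdin and print each expected daemon that is not listed.
# Usage: jps | missing_daemons NAME...
# (missing_daemons is a hypothetical helper written for this post.)
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <class name>"; match the second column exactly.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# On hadoop1:            jps | missing_daemons NameNode SecondaryNameNode JobTracker
# On hadoop2 / hadoop3:  jps | missing_daemons DataNode TaskTracker
```

No output means every expected daemon is up on that host. If a name is printed, the files in the logs directory on that host are the first place to look.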

Open the NameNode web interface in a browser at http://192.168.56.21:50070/