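This walkthrough sets up a pseudo-distributed Hadoop environment in VirtualBox. The guest Linux is CentOS 6.4 and the network mode is Host-only.

On the physical host, the VirtualBox host-only adapter is set to the 192.168.56.0/24 subnet.

Set the CentOS IP address. It must be on the same subnet as the host-only adapter. After configuring the address, restart the network service: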

[root@centos /]# service network restart

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface Wired_connection_1: Active connection state: activated

Active connection path: /org/freedesktop/NetworkManager/ActiveConnection/3

[ OK ]

[root@centos /]# ifconfig

eth2 Link encap:Ethernet HWaddr 08:00:27:B5:55:86

inet addr:192.168.56.101 Bcast:192.168.56.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:feb5:5586/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:115333 errors:0 dropped:0 overruns:0 frame:0

TX packets:65446 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:153775846 (146.6 MiB) TX bytes:5372883 (5.1 MiB)
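The same-subnet requirement can be checked with a small sketch like the one below. The helper name `same_subnet24` is hypothetical, and the host adapter address 192.168.56.1 is an assumption (substitute whatever address your host-only adapter actually has). The guest address is the one reported by ifconfig above. The check only handles /24 networks, where the first three octets must match.

```shell
# Return 0 when two IPv4 addresses share the same /24 prefix (first three octets).
same_subnet24() {
  [ "${1%.*}" = "${2%.*}" ]
}

host_adapter_ip=192.168.56.1   # assumed address of the host-only adapter on the physical host
guest_ip=192.168.56.101        # address reported by ifconfig in the CentOS guest

if same_subnet24 "$host_adapter_ip" "$guest_ip"; then
  echo "same /24 subnet"
else
  echo "different subnets"
fi
```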

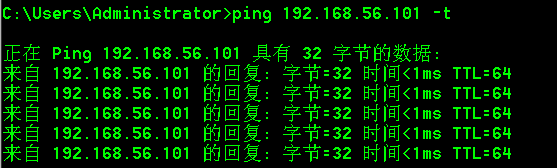

Ping the CentOS virtual machine's address from the physical host to confirm connectivity.

Turn off the CentOS firewall: stop the running service, then disable it at boot with chkconfig

[root@centos /]# service iptables stop

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

[root@centos /]# service iptables status

iptables: Firewall is not running.

[root@centos /]# chkconfig --list | grep iptables

iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off

[root@centos /]# chkconfig iptables off

[root@centos /]# chkconfig --list | grep iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off
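Reading seven runlevel flags by eye is easy to get wrong, so the check can be scripted. The following is a minimal sketch. The helper name `all_runlevels_off` is hypothetical, and the two sample lines are copied from the transcript above so the snippet runs without chkconfig installed. On the CentOS guest you could pass it the output of `chkconfig --list iptables` instead.

```shell
# Succeed only if a `chkconfig --list` line shows every runlevel as "off".
all_runlevels_off() {
  case "$1" in
    *:on*)  return 1 ;;   # at least one runlevel still starts the service
    *:off*) return 0 ;;   # runlevel flags present, none of them "on"
    *)      return 1 ;;   # not a chkconfig line at all
  esac
}

before='iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off'
after='iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off'

for line in "$before" "$after"; do
  if all_runlevels_off "$line"; then
    echo "disabled at boot: $line"
  else
    echo "still enabled:    $line"
  fi
done
```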

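Set up key-based (passwordless) SSH login

ssh-keygen -t rsa # generate the public/private key pair

cp ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys # copy the public key into authorized_keys

ssh centos # test the login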
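Note that `cp` replaces any authorized_keys file that already exists. On a fresh VM that is harmless, but a gentler variant appends the key only when it is missing. The sketch below is one way to do that. The function name `authorize_key` is hypothetical, and the permission settings reflect the fact that sshd, with its default StrictModes setting, refuses keys when these files are writable by other users.

```shell
# Append a public key to an authorized_keys file unless that exact line is already present.
authorize_key() {
  pub_file=$1
  auth_file=$2
  auth_dir=$(dirname "$auth_file")

  mkdir -p "$auth_dir"
  chmod 700 "$auth_dir"          # keep the .ssh directory private
  touch "$auth_file"

  key=$(cat "$pub_file")
  # -x: whole-line match, -F: fixed string, so the key is never treated as a regex
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
  chmod 600 "$auth_file"         # keep authorized_keys private
}

# Intended use on the CentOS guest, after running ssh-keygen:
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Running it twice leaves a single copy of the key in place, so it is safe to repeat.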

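Upload jdk-6u24-linux-i586.bin and hadoop-1.1.2.tar.gz to CentOS with WinSCP. I find transferring files between the physical host and the virtual machine with an FTP-style client like this very convenient, since no extra plugins need to be installed.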

Give jdk-6u24-linux-i586.bin execute permission. Once it is executable, Tab completion works when launching it as ./jdk-6u24-linux-i586.bin

[root@centos local]# chmod u+x jdk-6u24-linux-i586.bin

CentOS ships with its own OpenJDK

[root@centos local]# java -version

java version "1.7.0_09-icedtea"

OpenJDK Runtime Environment (rhel-2.3.4.1.el6_3-i386)

OpenJDK Client VM (build 23.2-b09, mixed mode)

Extract the HotSpot JDK

[root@centos local]# ./jdk-6u24-linux-i586.bin

[root@centos local]# ls -l

drwxr-xr-x. 10 root root 4096 May 19 10:18 jdk1.6.0_24

-rwxr--r--. 1 root root 84927175 May 4 11:48 jdk-6u24-linux-i586.bin

Edit the profile file to add JAVA_HOME and extend the PATH environment variable

[root@centos local]# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.6.0_24

export PATH=.:$JAVA_HOME/bin:$PATH

Reload the file and verify that the default Java has switched from OpenJDK to HotSpot

[root@centos local]# source /etc/profile

[root@centos local]# java -version

java version "1.6.0_24"

Java(TM) SE Runtime Environment (build 1.6.0_24-b07)

Java HotSpot(TM) Client VM (build 19.1-b02, mixed mode, sharing)

Extract hadoop-1.1.2.tar.gz

[root@centos local]# tar -xzvf hadoop-1.1.2.tar.gz

Edit the profile file again to add HADOOP_HOME and put its bin directory on PATH

export JAVA_HOME=/usr/local/jdk1.6.0_24

export HADOOP_HOME=/usr/local/hadoop-1.1.2

export PATH=.:$HADOOP_HOME/bin:$JAVA_HOME/bin:$PATH
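After reloading /etc/profile, it is worth confirming that `java` and `hadoop` really resolve to the directories just configured rather than to another copy earlier on PATH. The sketch below is one possible check. The helper name `resolves_under` is hypothetical, and it assumes JAVA_HOME and HADOOP_HOME are exported as shown above.

```shell
# Succeed when the first match for command $1 on PATH is exactly $2/bin/$1.
resolves_under() {
  [ "$(command -v "$1")" = "$2/bin/$1" ]
}

# ${VAR:-} keeps the loop safe even if a variable has not been exported yet.
for pair in "java:${JAVA_HOME:-}" "hadoop:${HADOOP_HOME:-}"; do
  cmd=${pair%%:*}    # text before the first colon
  home=${pair#*:}    # text after the first colon
  if resolves_under "$cmd" "$home"; then
    echo "$cmd resolves to $home/bin/$cmd"
  else
    echo "$cmd does not resolve under '$home'"
  fi
done
```

Because PATH here starts with `.`, a file named java or hadoop in the current directory would win the lookup, and this check would report it.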

Edit the four configuration files in Hadoop's conf directory

hadoop-env.sh

/usr/local/hadoop-1.1.2/conf/hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.6.0_24
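If you prefer not to edit hadoop-env.sh by hand, the change can be scripted. The following is a minimal sketch rather than anything Hadoop provides. The function name `set_java_home` is hypothetical, and it assumes the path contains no `|` or `&` characters, since those are special in the sed expression used here.

```shell
# Set JAVA_HOME in a shell-style env file: replace an existing uncommented
# export line, or append one if none exists. Commented lines are left alone.
set_java_home() {
  env_file=$1
  java_home=$2
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Rewrite through a temporary file so this does not depend on `sed -i`.
    sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file" > "$env_file.tmp" &&
      mv "$env_file.tmp" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Intended use with the paths from this walkthrough:
#   set_java_home /usr/local/hadoop-1.1.2/conf/hadoop-env.sh /usr/local/jdk1.6.0_24
```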

core-site.xml holds the properties of the Hadoop Common project, the basic framework settings for Hadoop 1.x

/usr/local/hadoop-1.1.2/conf/core-site.xml

<configuration>

<property>

<!-- NameNode host name and port -->

<name>fs.default.name</name>

<value>hdfs://centos:9000</value>

</property>

<property>

<!-- Hadoop temporary directory -->

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

</configuration>

hdfs-site.xml holds the HDFS-related properties