Benchmarking the Different Lock Types in Java

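Over the weekend I benchmarked the various kinds of locks available in Java. The tests vary two conditions: (1) the number of concurrent threads, at 10, 50, and 100; and (2) the read:write ratio, at roughly 1:1, 10:1, 100:1, and 1000:1. The method is to benchmark several lock-protected Map implementations, each wrapped behind a common MapWrapper interface, by exercising the Map's get and put methods.

The lock types tested are: (1) hashtable: Hashtable used directly; (2) synclock: HashMap with its methods declared synchronized (in theory this should perform about the same as Hashtable); (3) mutexlock: HashMap with an explicit Lock around each method; (4) rwlock: HashMap guarded by a read-write lock; (5) concurrent: ConcurrentHashMap used directly (the code prints this label as "concrrent"); (6) writelock: HashMap with no lock on reads and a Lock on writes.

For each test condition, every thread performs a fixed number of read/write operations (100,000 or 1,000,000), and the values written are randomly generated integers. Each condition is run three times, and the reported result is the total processing time averaged over those three runs.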

The benchmark code is as follows:

```java
// Each public type below goes in its own .java file.
// The imports listed here are the union needed across those files.
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;
import java.util.Random;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

/** Common interface so the benchmark drives every map variant the same way. */
public interface MapWrapper {
    void put(Object key, Object value);
    Object get(Object key);
    void clear();
    String getName();
}

/** hashtable: plain Hashtable, which synchronizes every method internally. */
public class HashTableMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;

    public HashTableMapWrapper() {
        map = new Hashtable<Object, Object>();
    }

    @Override
    public void clear() {
        map.clear();
    }

    @Override
    public Object get(final Object key) {
        return map.get(key);
    }

    @Override
    public void put(final Object key, final Object value) {
        map.put(key, value);
    }

    @Override
    public String getName() {
        return "hashtable";
    }
}

/** synclock: HashMap behind synchronized methods. */
public class SyncMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;

    public SyncMapWrapper() {
        map = new HashMap<Object, Object>();
    }

    @Override
    public synchronized void clear() {
        map.clear();
    }

    @Override
    public synchronized Object get(final Object key) {
        return map.get(key);
    }

    @Override
    public synchronized void put(final Object key, final Object value) {
        map.put(key, value);
    }

    @Override
    public String getName() {
        return "synclock";
    }
}

/** mutexlock: HashMap with one explicit ReentrantLock around every call. */
public class LockMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;
    private final Lock lock;

    public LockMapWrapper() {
        map = new HashMap<Object, Object>();
        lock = new ReentrantLock();
    }

    @Override
    public void clear() {
        lock.lock();
        try {
            map.clear();
        } finally {
            lock.unlock();
        }
    }

    @Override
    public Object get(final Object key) {
        lock.lock();
        try {
            return map.get(key);
        } finally {
            lock.unlock();
        }
    }

    @Override
    public void put(final Object key, final Object value) {
        lock.lock();
        try {
            map.put(key, value);
        } finally {
            lock.unlock();
        }
    }

    @Override
    public String getName() {
        return "mutexlock";
    }
}

/** rwlock: HashMap with a shared read lock and an exclusive write lock. */
public class RWLockMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;
    private final Lock readLock;
    private final Lock writeLock;

    public RWLockMapWrapper() {
        map = new HashMap<Object, Object>();
        final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        readLock = lock.readLock();
        writeLock = lock.writeLock();
    }

    @Override
    public void clear() {
        writeLock.lock();
        try {
            map.clear();
        } finally {
            writeLock.unlock();
        }
    }

    @Override
    public Object get(final Object key) {
        readLock.lock();
        try {
            return map.get(key);
        } finally {
            readLock.unlock();
        }
    }

    @Override
    public void put(final Object key, final Object value) {
        writeLock.lock();
        try {
            map.put(key, value);
        } finally {
            writeLock.unlock();
        }
    }

    @Override
    public String getName() {
        return "rwlock";
    }
}

/** concurrent: ConcurrentHashMap used directly. */
public class ConcurrentMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;

    public ConcurrentMapWrapper() {
        map = new ConcurrentHashMap<Object, Object>();
    }

    @Override
    public void clear() {
        map.clear();
    }

    @Override
    public Object get(final Object key) {
        return map.get(key);
    }

    @Override
    public void put(final Object key, final Object value) {
        map.put(key, value);
    }

    @Override
    public String getName() {
        return "concrrent";
    }
}

/**
 * writelock: HashMap with locked writes and unlocked reads.
 * Unlocked reads of a plain HashMap are not thread-safe; this variant
 * exists only to measure what the read-side lock costs.
 */
public class WriteLockMapWrapper implements MapWrapper {
    private final Map<Object, Object> map;
    private final Lock lock;

    public WriteLockMapWrapper() {
        map = new HashMap<Object, Object>();
        lock = new ReentrantLock();
    }

    @Override
    public void clear() {
        lock.lock();
        try {
            map.clear();
        } finally {
            lock.unlock();
        }
    }

    @Override
    public Object get(final Object key) {
        return map.get(key);
    }

    @Override
    public void put(final Object key, final Object value) {
        lock.lock();
        try {
            map.put(key, value);
        } finally {
            lock.unlock();
        }
    }

    @Override
    public String getName() {
        return "writelock";
    }
}

public class BenchMark {
    // Sum of every worker thread's elapsed milliseconds over all three rounds.
    // AtomicLong is used because "+=" on a volatile long is not atomic.
    private final AtomicLong totalTime = new AtomicLong();
    private CountDownLatch latch;
    private final int loop;
    private final int threads;
    private final float ratio;

    public BenchMark(final int loop, final int threads, final float ratio) {
        this.loop = loop;
        this.threads = threads;
        this.ratio = ratio;
    }

    class BenchMarkRunnable implements Runnable {
        private final MapWrapper mapWrapper;
        private final int size;

        public BenchMarkRunnable(final MapWrapper mapWrapper, final int size) {
            this.mapWrapper = mapWrapper;
            this.size = size;
        }

        // Every iteration reads a random key; a write follows only on a miss,
        // so the key-space size controls the read:write ratio.
        public void benchmarkRandomReadPut(final MapWrapper mapWrapper, final int loop) {
            final Random random = new Random();
            for (int i = 0; i < loop; i++) {
                final int n = random.nextInt(size);
                if (mapWrapper.get(n) == null) {
                    mapWrapper.put(n, n);
                }
            }
        }

        @Override
        public void run() {
            final long start = System.currentTimeMillis();
            benchmarkRandomReadPut(mapWrapper, loop);
            final long end = System.currentTimeMillis();
            totalTime.addAndGet(end - start);
            latch.countDown();
        }
    }

    public void benchmark(final MapWrapper mapWrapper) {
        final float size = loop * threads * ratio;
        totalTime.set(0);
        // Three rounds per condition; the printed figure is the per-round average.
        for (int k = 0; k < 3; k++) {
            latch = new CountDownLatch(threads);
            for (int i = 0; i < threads; i++) {
                new Thread(new BenchMarkRunnable(mapWrapper, (int) size)).start();
            }
            try {
                latch.await();
            } catch (final InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            // Reset the map and give the GC a chance to settle before the next round.
            mapWrapper.clear();
            Runtime.getRuntime().gc();
            Runtime.getRuntime().runFinalization();
            try {
                Thread.sleep(1000);
            } catch (final InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        final int rwratio = (int) (1.0 / ratio);
        System.out.println("[" + mapWrapper.getName() + "]threadnum[" + threads
                + "]ratio[" + rwratio + "]avgtime[" + totalTime.get() / 3 + "]");
    }

    public static void benchmark2(final int loop, final int threads, final float ratio) {
        final BenchMark benchMark = new BenchMark(loop, threads, ratio);
        final MapWrapper[] wrappers = new MapWrapper[] {
            new HashTableMapWrapper(),
            new SyncMapWrapper(),
            new LockMapWrapper(),
            new RWLockMapWrapper(),
            new ConcurrentMapWrapper(),
            new WriteLockMapWrapper(),
        };
        for (final MapWrapper wrapper : wrappers) {
            benchMark.benchmark(wrapper);
        }
    }

    public static void test() {
        // 10 threads, 1,000,000 operations per thread
        benchmark2(1000000, 10, 1);       // r:w 1:1
        benchmark2(1000000, 10, 0.1f);    // r:w 10:1
        benchmark2(1000000, 10, 0.01f);   // r:w 100:1
        benchmark2(1000000, 10, 0.001f);  // r:w 1000:1
        // 50 threads, 1,000,000 operations per thread (no 1:1 run here)
        benchmark2(1000000, 50, 0.1f);    // r:w 10:1
        benchmark2(1000000, 50, 0.01f);   // r:w 100:1
        benchmark2(1000000, 50, 0.001f);  // r:w 1000:1
        // 100 threads, 100,000 operations per thread
        benchmark2(100000, 100, 1f);      // r:w 1:1
        benchmark2(100000, 100, 0.1f);    // r:w 10:1
        benchmark2(100000, 100, 0.01f);   // r:w 100:1
        benchmark2(100000, 100, 0.001f);  // r:w 1000:1
    }

    public static void main(final String[] args) {
        test();
    }
}
```
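The read:write ratios are only approximate, because the benchmark never schedules writes directly: every iteration does a get on a random key and does a put only when that key is missing. The ratio parameter just sets the key-space size (loop × threads × ratio). The sketch below estimates how many writes that produces, assuming uniformly random keys and ignoring the rare case where two threads miss the same key at the same moment. `ReadWriteRatioEstimate` and its methods are hypothetical names introduced for illustration.

```java
class ReadWriteRatioEstimate {
    /**
     * Expected number of put calls: one per distinct key that gets drawn
     * at least once in `ops` uniform draws from a key space of ops * ratio.
     */
    static double expectedWrites(long ops, double ratio) {
        long keySpace = (long) (ops * ratio);
        // Probability that one particular key is never drawn: (1 - 1/keySpace)^ops.
        double neverDrawn = Math.exp(ops * Math.log1p(-1.0 / keySpace));
        return keySpace * (1.0 - neverDrawn);
    }

    /** Every operation performs a get, so reads equal ops. */
    static double readWriteRatio(long ops, double ratio) {
        return ops / expectedWrites(ops, ratio);
    }

    public static void main(String[] args) {
        long ops = 1_000_000L * 10; // loop * threads for the 10-thread runs
        for (double ratio : new double[] {1, 0.1, 0.01, 0.001}) {
            System.out.printf("ratio=%.3f -> about %.2f reads per write%n",
                    ratio, readWriteRatio(ops, ratio));
        }
    }
}
```

Under these assumptions the nominal 1:1 case works out to roughly 1.58 reads per write, since only about 63% of the keys are ever drawn, while the 10:1, 100:1, and 1000:1 cases land very close to their nominal values.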

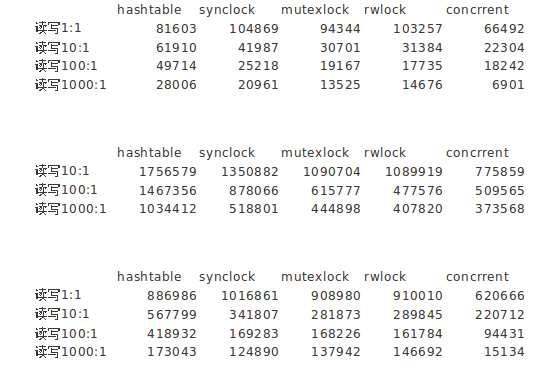

The tests ran on my local machine with these JVM options: -Xms2048M -Xmx2048M -XX:+UseParallelGC -XX:+AggressiveOpts -XX:+UseFastAccessorMethods -XX:+HeapDumpOnOutOfMemoryError. The data tables for 10, 50, and 100 concurrent threads, in that order, are as follows:

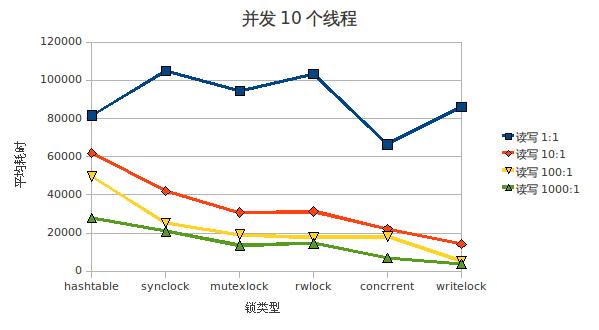

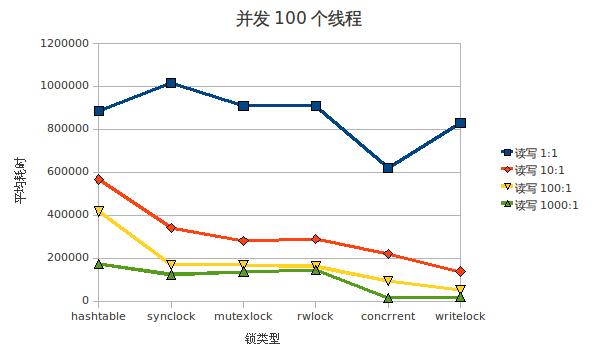

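The comparison charts below were produced in OpenOffice from the data above:

1. Average elapsed time with 10 concurrent threads:

2. Average elapsed time with 50 concurrent threads:

3. Average elapsed time with 100 concurrent threads: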

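Don't take the exact numbers too seriously: running the same case several times gives somewhat different figures each time, though the relative ordering of the variants should stay broadly the same. Two caveats apply to the results above. First, the 50-thread runs have no 1:1 data; that was my mistake, and I only noticed it while compiling the results. Second, for the 100-thread runs I reduced the per-thread loop count from 1,000,000 to 100,000, because at 1,000,000 iterations my machine's CPU was completely saturated, results took forever to appear, and the run triggered a large number of full GCs.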

From the test results, the following conclusions can be drawn:

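1. When reads and writes are roughly balanced (1:1), all the approaches run at about the same speed at every thread count; only ConcurrentHashMap does slightly better, thanks to its segmented locking.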

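2. Across all the thread counts and read:write ratios, hashtable performs poorly, even compared with synclock and mutexlock, which also take an explicit mutual-exclusion lock on every call.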

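3. The more concurrent threads there are, the more the choice of lock shows. If the locked methods took longer to execute (file operations, for example), the contrast would be even more pronounced.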

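4. In raw numbers, under the most extreme condition tested (100 threads, read:write 1000:1), the performance ratio between the worst performer, hashtable, and the best, concurrent, is 11:1; under every other condition the gap is smaller than that.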

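5. Comparing rwlock with writelock (rwlock takes a read-write lock; writelock takes no lock on reads and a mutex on writes) shows that a read-write lock still carries noticeable overhead: the gap is several-fold and varies with the read:write ratio. Since the only difference between concurrent and writelock is that concurrent locks per segment, the two perform about the same when the read:write ratio is high.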

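6. Overall, the concurrent approach of not locking reads and taking a per-segment lock on writes gives the best results (even though a read may occasionally return stale data).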
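To make the "segmented locking" mentioned in conclusions 1 and 6 more concrete, here is a minimal sketch of the idea: the key space is split across several independent HashMaps, each with its own lock, so threads touching different segments do not contend. `StripedMap`, `stripeFor`, and `StripedMapDemo` are hypothetical names, and this is not ConcurrentHashMap's actual implementation. In particular, this sketch also locks reads to stay simple and safe, whereas the conclusions above describe ConcurrentHashMap as reading without a lock.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

/** Illustrative striped map: one HashMap plus one lock per stripe. Null keys are not supported. */
class StripedMap<K, V> {
    private final Map<K, V>[] segments;
    private final ReentrantLock[] locks;

    @SuppressWarnings("unchecked")
    StripedMap(int stripes) {
        segments = (Map<K, V>[]) new Map[stripes];
        locks = new ReentrantLock[stripes];
        for (int i = 0; i < stripes; i++) {
            segments[i] = new HashMap<>();
            locks[i] = new ReentrantLock();
        }
    }

    /** Maps a key to a stripe index; the mask keeps the value non-negative. */
    private int stripeFor(Object key) {
        int h = key.hashCode();
        h ^= (h >>> 16);
        return (h & 0x7fffffff) % segments.length;
    }

    V put(K key, V value) {
        int s = stripeFor(key);
        locks[s].lock(); // only this stripe is blocked; others stay available
        try {
            return segments[s].put(key, value);
        } finally {
            locks[s].unlock();
        }
    }

    V get(Object key) {
        int s = stripeFor(key);
        locks[s].lock();
        try {
            return segments[s].get(key);
        } finally {
            locks[s].unlock();
        }
    }

    /** Sums stripe sizes one at a time; not an atomic snapshot under concurrent writes. */
    int size() {
        int total = 0;
        for (int s = 0; s < segments.length; s++) {
            locks[s].lock();
            try {
                total += segments[s].size();
            } finally {
                locks[s].unlock();
            }
        }
        return total;
    }
}

class StripedMapDemo {
    /** Writes disjoint key ranges from several threads and returns the final size. */
    static int run(int threads, int perThread) {
        final StripedMap<Integer, Integer> map = new StripedMap<>(16);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    map.put(base + i, base + i);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
        return map.size();
    }
}
```

With a single stripe this degenerates into the mutexlock variant; adding stripes is what lets writers to different parts of the map proceed in parallel.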

If you know a better, more precise way to run this kind of benchmark, please do share it.
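One possible refinement, offered as a sketch rather than a definitive harness: the benchmark code above starts its threads one after another and sums each thread's own elapsed milliseconds, so early threads run partly uncontended. A start gate built from CountDownLatch releases all workers together and measures a single wall-clock interval with System.nanoTime(). `GatedTimer` and `timeConcurrently` are hypothetical names, and the code assumes Java 8 or later for the lambda syntax.

```java
import java.util.concurrent.CountDownLatch;

class GatedTimer {
    /**
     * Runs `task` once on each of `threads` threads, releasing them all at
     * the same moment, and returns the wall-clock nanoseconds from release
     * until the last thread finishes.
     */
    static long timeConcurrently(int threads, Runnable task) {
        final CountDownLatch ready = new CountDownLatch(threads); // workers are parked at the gate
        final CountDownLatch start = new CountDownLatch(1);       // the gate itself
        final CountDownLatch done = new CountDownLatch(threads);  // workers have finished
        for (int i = 0; i < threads; i++) {
            new Thread(() -> {
                ready.countDown();
                try {
                    start.await();
                    task.run();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            }).start();
        }
        try {
            ready.await();                    // wait until every worker is ready
            long begin = System.nanoTime();
            start.countDown();                // open the gate
            done.await();                     // wait for the slowest worker
            return System.nanoTime() - begin;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while timing", e);
        }
    }
}
```

A call such as `GatedTimer.timeConcurrently(10, () -> { /* get/put loop */ })` would replace the per-thread timing in `run()`. This still does nothing about JIT warm-up or GC noise; a dedicated harness such as JMH is one way to address those.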


Original link: http://www.kafka0102.com/2010/08/298.html