CUDA 2.1 Released

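The 2.1 release came out a few days ago. It is somewhat more stable than the 2.1 beta and fixes a bug or two, but there are not many new features. The guide includes a figure showing the outlook, which is kind of interesting.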

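The following is reposted from the NVIDIA website; it is best to read it carefully before installing. Recently I have been answering a lot of people's questions, and I have noticed that many are too eager for quick results: they want to produce results before they have a clear grasp of the fundamentals. My friendly advice to them is to build a solid foundation first. Sharpening the axe does not delay the cutting of firewood, and nobody reaches the top in a single step. Learning is a process of accumulation, and accumulating bit by bit is what matters.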

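Some problems are simply caused by carelessness. If that is the case, I hope everyone will first analyze the problem carefully on their own.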

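When you run into a problem, first analyze it and locate it. Only then can you find its root cause and a solution. Don't just stare at the error message without digging into why it went wrong. A good tester matters more than a developer.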

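What we need is debugging, not bug makers. When you take up someone else's time by asking them to answer a question, remember that their time is valuable too :) Everyone's time is worth about the same.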

There is no need for me to lecture at length here.

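Learning CUDA requires a background in C or C++ and a deep understanding of parallel algorithms. To go further, you need a deep understanding of the device and of the chip architecture, built up bit by bit.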

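Let me just describe how I learned CUDA. Since I started with CUDA, I have read no fewer than 200 papers, nearly every one that is available. It is a process of accumulation: you only get output after a large investment.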

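To write high-performance programs you must understand all three of device + architecture + algorithm in depth. "Device" refers to the evolution of the hardware and "architecture" to the architecture of the chip; the three drive each other forward. The programming language itself is a basic skill you should already have, and C/C++ are fundamentals. When I learned C/C++ my method was simple: I took a data structures book and implemented every data structure in it myself.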

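When I graduated from university, I counted the code I had written during my studies: about 80,000 lines, still well short of the 100,000 lines Kai-Fu Lee recommends. I did not major in computer science; I imagine a computer science student could reach 100,000 lines.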

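I once watched a documentary about the life of Shaolin master Shi Dejian at Sanhuangzhai. Talking about how he trains his disciples, he said that for more than ten years he has had them practice only basic forms and fundamentals, without teaching them many fancy moves.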

Programming is the same: fundamentals are that important...

http://forums.nvidia.com/index.php?showtopic=84440

NVIDIA CUDA FAQ version 2.1

This document is intended to answer frequently asked questions seen on the CUDA forums.


General questions

- What is NVIDIA CUDA?

NVIDIA® CUDA™ is a general purpose parallel computing architecture that leverages the parallel compute engine in NVIDIA graphics processing units (GPUs) to solve many complex computational problems in a fraction of the time required on a CPU.

With over 100 million CUDA-enabled GPUs sold to date, thousands of software developers are already using the free CUDA software development tools to solve problems in a variety of professional and home applications – from video and audio processing and physics simulations, to oil and gas exploration, product design, medical imaging, and scientific research.

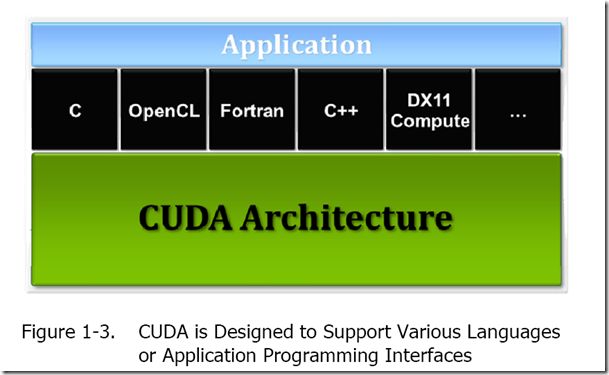

CUDA allows developers to program applications in high level languages like C and will support industry standard APIs such as Microsoft® DirectX® and OpenCL, to seamlessly integrate into the development environments of today and tomorrow.

- What's new in CUDA 2.1?

- TESLA devices are now supported on Windows Vista

- Support for using a GPU that is not driving a display on Vista (was already supported on Windows XP, OSX and Linux)

- Visual Studio 2008 support for Windows XP and Windows Vista

- Just-in-time (JIT) compilation, for applications that dynamically generate CUDA kernels

- New interoperability APIs for Direct3D 9 and Direct3D 10 accelerate communication with DirectX applications

- OpenGL interoperability improvements

- Debugger support using gdb for CUDA on RHEL 5.x (separate download)

- Linux Profiler 1.1 Beta (separate download)

- Support for recent releases of Linux including Fedora 9, OpenSUSE 11 and Ubuntu 8.04

- Numerous bug fixes

- What is NVIDIA Tesla™?

With the world's first teraflop many-core processor, NVIDIA® Tesla™ computing solutions enable the necessary transition to energy efficient parallel computing power. With 240 cores per processor and a standard C compiler that simplifies application development, Tesla scales to solve the world's most important computing challenges more quickly and accurately.

- What kind of performance increase can I expect using GPU Computing over CPU-only code?

This depends on how well the problem maps onto the architecture. For data parallel applications, we have seen speedups anywhere from 10x to 200x.

See the CUDA Zone web page for many examples of applications accelerated using CUDA:

http://www.nvidia.com/cuda

- What operating systems does CUDA support?

CUDA supports Windows XP, Windows Vista, Linux and Mac OS (including 32-bit and 64-bit versions).

CUDA 2.1 supports the following Linux distributions:

- Red Hat Enterprise Linux 4.3

- Red Hat Enterprise Linux 4.4

- Red Hat Enterprise Linux 4.5

- Red Hat Enterprise Linux 4.6

- Red Hat Enterprise Linux 4.7*

- Red Hat Enterprise Linux 5.0

- Red Hat Enterprise Linux 5.1

- Red Hat Enterprise Linux 5.2*

- SUSE Linux Enterprise Desktop 10.0 SP1

- SUSE Linux 10.3

- SUSE Linux 11.0*

- Fedora 8

- Fedora 9*

- Ubuntu 7.10

- Ubuntu 8.04*

* new support with CUDA v2.1

Users have reported that CUDA works on other Linux distributions such as Gentoo, but these are not officially supported.

- Can I run CUDA under DOS?

CUDA will not work in full-screen DOS mode since the display driver is not loaded.

- What versions of Microsoft Visual Studio does CUDA support?

CUDA 2.1 supports Microsoft Visual Studio 2005 (v.8) and 2008 (v.9). Visual Studio 2003 (v.7) is no longer supported.

- What GPUs does CUDA run on?

GPU Computing is a standard feature in all NVIDIA 8-Series and later GPUs. We recommend that the GPU have at least 256MB of graphics memory. System configurations with less than the recommended memory size may not have enough memory to properly support all CUDA programs.

For a full list of supported products see:

http://www.nvidia.com/object/cuda_learn_products.html

- What is the "compute capability"?

The compute capability indicates the version of the compute hardware included in the GPU.

Compute capability 1.0 corresponds to the original G80 architecture.

Compute capability 1.1 (introduced in later G8x parts) adds support for atomic operations on global memory. See "What are atomic operations?" in the programming section below.

Compute capability 1.2 (introduced in the GT200 architecture) adds the following new features:

- Support for atomic functions operating in shared memory and atomic functions operating on 64-bit words in global memory

- Support for warp vote functions

- The number of registers per multiprocessor is 16384

- The maximum number of active warps per multiprocessor is 32

- The maximum number of active threads per multiprocessor is 1024

Compute capability 1.3 adds support for double precision floating point numbers.
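A minimal host-side sketch of checking these feature levels at run time. It assumes the CUDA runtime calls `cudaGetDeviceCount` and `cudaGetDeviceProperties`. The helper names `ccAtLeast` and `printDeviceCapabilities` are hypothetical, and the thresholds only restate the list above.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: true if capability major.minor >= reqMajor.reqMinor.
bool ccAtLeast(int major, int minor, int reqMajor, int reqMinor)
{
    return major > reqMajor || (major == reqMajor && minor >= reqMinor);
}

// Hypothetical helper: list each CUDA device and the features implied by its
// compute capability (thresholds taken from the FAQ text). Returns the device count.
int printDeviceCapabilities()
{
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess)
        return 0;                                  // no usable driver or device
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);
        printf("Device %d: %s, compute capability %d.%d\n",
               dev, prop.name, prop.major, prop.minor);
        printf("  global-memory atomics (1.1): %s\n",
               ccAtLeast(prop.major, prop.minor, 1, 1) ? "yes" : "no");
        printf("  shared-memory atomics, warp vote (1.2): %s\n",
               ccAtLeast(prop.major, prop.minor, 1, 2) ? "yes" : "no");
        printf("  double precision (1.3): %s\n",
               ccAtLeast(prop.major, prop.minor, 1, 3) ? "yes" : "no");
    }
    return count;
}
```

An application could use the same check to fall back to a single-precision code path when `ccAtLeast(major, minor, 1, 3)` is false.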

See the CUDA Programming Guide for a full list of GPUs and their compute capabilities.

- What are the technical specifications of the NVIDIA Tesla C1060 Processor?

The Tesla C1060 consists of 30 multiprocessors, each of which is comprised of 8 scalar processor cores, for a total of 240 processors. There is 16KB of shared memory per multiprocessor.

Each processor has a floating point unit which is capable of performing a scalar multiply-add and a multiply, and a "superfunc" operation (such as rsqrt or sin/cos) per clock cycle.

The processors are clocked at 1.296 GHz. The peak computation rate accessible from CUDA is therefore around 933 GFLOPS (240 * 3 * 1.296). If you include the graphics functionality that is accessible from CUDA (such as texture interpolation), the FLOPs rate is much higher.

Each multiprocessor includes a single double precision multiply-add unit, so the double precision floating point performance is around 78 GFLOPS (30 * 2 * 1.296).
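Both peak figures follow from the unit counts and clock rate stated above. A multiply-add counts as 2 flops and the extra multiply as 1, giving 3 single-precision flops per core per clock. Each multiprocessor's one double-precision multiply-add unit gives 2 flops per clock. As a worked check:

```latex
\begin{aligned}
\text{single precision:}\quad & 240 \times 3 \times 1.296\ \text{GHz} = 933.12\ \text{GFLOPS} \\
\text{double precision:}\quad & 30 \times 2 \times 1.296\ \text{GHz} = 77.76\ \text{GFLOPS}
\end{aligned}
```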

The card includes 4 GB of device memory. The maximum observed bandwidth between system and device memory is about 6GB/second with a PCI-Express 2.0 compatible motherboard.

Other GPUs in the Tesla series have the same basic architecture, but vary in the number of multiprocessors, clock speed, memory bus width and amount of memory.

See the programming guide for more details.

- Does CUDA support multiple graphics cards in one system?

Yes. Applications can distribute work across multiple GPUs. This is not done automatically, however, so the application has complete control.

The Tesla S1070 Computing System supports 4 GPUs in an external enclosure:

http://www.nvidia.com/object/tesla_computing_solutions.html

There are also motherboards available that support up to 4 PCI-Express cards.

It is also possible to buy pre-built Tesla "Personal SuperComputer" systems off the shelf:

http://www.nvidia.com/object/personal_supercomputing.html

See the "multiGPU" example in the CUDA SDK for an example of programming multiple GPUs.

- Where can I find a good introduction to parallel programming?

The course "ECE 498: Programming Massively Parallel Processors" at the University of Illinois, co-taught by Dr. David Kirk and Dr. Wen-mei Hwu is a good introduction:

http://courses.ece.uiuc.edu/ece498/al/Syllabus.html

The book "An Introduction to Parallel Computing: Design and Analysis of Algorithms" by Grama, Karypis et al. isn't bad either (despite the reviews):

http://www.amazon.com/Introduction-Paralle...2044533-7984021

Although not directly applicable to CUDA, the book "Parallel Programming in C with MPI and OpenMP" by M.J.Quinn is also worth reading:

http://www.amazon.com/Parallel-Programming...3109&sr=1-1

For a full list of educational material see:

http://www.nvidia.com/object/cuda_education.html

- Where can I find more information on NVIDIA GPU architecture?

- J. Nickolls et al. "Scalable Programming with CUDA," ACM Queue, vol. 6, no. 2, Mar./Apr. 2008, pp. 40-53

http://www.acmqueue.org/modules.php?name=C...age&pid=532

- E. Lindholm et al. "NVIDIA Tesla: A Unified Graphics and Computing Architecture," IEEE Micro, vol. 28, no. 2, Mar./Apr. 2008, pp. 39-55


- How does CUDA structure computation?

CUDA broadly follows the data-parallel model of computation. Typically each thread executes the same operation on different elements of the data in parallel.

The data is split up into a 1D or 2D grid of blocks. Each block can be 1D, 2D or 3D in shape, and can consist of up to 512 threads on current hardware. Threads within a thread block can cooperate via shared memory.
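A minimal sketch of this structure for a 1D problem. Each thread computes its global index from `blockIdx`, `blockDim` and `threadIdx`, and the host rounds the block count up so the grid covers all n elements. The kernel and the wrapper names `blocksFor` and `addOnDevice` are hypothetical, and error handling is reduced to returning the status of the final copy.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// One thread per element; the global index combines block and thread indices.
__global__ void vecAdd(const float* a, const float* b, float* c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)                 // the last block may be only partly used
        c[i] = a[i] + b[i];
}

// Hypothetical helper: number of blocks needed to cover n elements (ceiling division).
int blocksFor(int n, int threadsPerBlock)
{
    return (n + threadsPerBlock - 1) / threadsPerBlock;
}

// Hypothetical wrapper: copy inputs, launch a 1D grid of 1D blocks, copy back.
cudaError_t addOnDevice(const float* hA, const float* hB, float* hC, int n)
{
    assert(n > 0);
    const int threads = 256;   // must not exceed 512 on current hardware
    size_t bytes = n * sizeof(float);
    float *dA = 0, *dB = 0, *dC = 0;
    cudaMalloc((void**)&dA, bytes);
    cudaMalloc((void**)&dB, bytes);
    cudaMalloc((void**)&dC, bytes);
    cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice);
    vecAdd<<<blocksFor(n, threads), threads>>>(dA, dB, dC, n);
    // This copy waits for the kernel and reports any earlier error.
    cudaError_t err = cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return err;
}
```

With 256 threads per block, 1000 elements need 4 blocks. The `i < n` guard stops the 24 surplus threads of the last block from writing out of bounds.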

Thread blocks are executed as smaller groups of threads known as "warps". The warp size is 32 threads on current hardware.

- Can I run CUDA remotely?

Under Linux it is possible to run CUDA programs via remote login. We currently recommend running with an X-server.

CUDA does not work with Windows Remote Desktop, although it does work with VNC.

- How do I pronounce CUDA?

koo-duh.

Programming questions

- I think I've found a bug in CUDA, how do I report it?

First of all, please verify that your code works correctly in emulation mode and that the problem is not caused by faulty hardware.

If you are reasonably sure it is a bug, you can either post a message on the forums or send a private message to one of the forum moderators.

If you are a researcher or commercial application developer, please sign up as a registered developer to get additional support and the ability to file your own bugs directly:

http://developer.nvidia.com/page/registere...er_program.html

Your bug report should include a simple, self-contained piece of code that demonstrates the bug, along with a description of the bug and the expected behaviour.

Please include the following information with your bug report:

- Machine configuration (CPU, motherboard, memory, etc.)

- Operating system

- CUDA Toolkit version

- Display driver version

- For Linux users, please attach an nvidia-bug-report.log, which is generated by running "nvidia-bug-report.sh".

- What are the advantages of CUDA vs. graphics-based GPGPU?

CUDA is designed from the ground-up for efficient general purpose computation on GPUs. Developers can compile C for CUDA to avoid the tedious work of remapping their algorithms to graphics concepts.

CUDA exposes several hardware features that are not available via graphics APIs. The most significant of these is shared memory, which is a small (currently 16KB per multiprocessor) area of on-chip memory which can be accessed in parallel by blocks of threads. This allows caching of frequently used data and can provide large speedups over using textures to access data. Combined with a thread synchronization primitive, this allows cooperative parallel processing of on-chip data, greatly reducing the expensive off-chip bandwidth requirements of many parallel algorithms. This benefits a number of common applications such as linear algebra, Fast Fourier Transforms, and image processing filters.

Whereas fragment programs in graphics APIs are limited to outputting 32 floats (RGBA * 8 render targets) at a pre-specified location, CUDA supports scattered writes, i.e. an unlimited number of stores to any address. This enables many new algorithms that were not possible using graphics APIs to perform efficiently using CUDA.

Graphics APIs force developers to store data in textures, which requires packing long arrays into 2D textures. This is cumbersome and imposes extra addressing math. CUDA can perform loads from any address.

CUDA also offers highly optimized data transfers to and from the GPU.

- Does CUDA support C++?

Full C++ is supported for host code. However, only the C subset of C++ is fully supported for device code. Some features of C++ (such as templates, operator overloading) work in CUDA device code, but are not officially supported.

- Can the CPU and GPU run in parallel?

Kernel invocation in CUDA is asynchronous, so the driver will return control to the application as soon as it has launched the kernel.

The "cudaThreadSynchronize()" API call should be used when measuring performance to ensure that all device operations have completed before stopping the timer.
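A minimal sketch of timing a kernel with a host timer. Because the launch returns immediately, `cudaThreadSynchronize()` is called before the timer stops. Without it, the measurement would cover only the launch overhead. The kernel `busyKernel` and the helper `timeKernelMs` are hypothetical, and `std::chrono` assumes a C++11 host compiler. `cudaThreadSynchronize()` was later deprecated in favour of `cudaDeviceSynchronize()`.

```cuda
#include <chrono>
#include <cuda_runtime.h>

// Hypothetical kernel that does some arithmetic per element so the run is measurable.
__global__ void busyKernel(float* x, int n, int iters)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float v = x[i];
        for (int k = 0; k < iters; ++k)
            v = v * 0.999f + 0.001f;
        x[i] = v;
    }
}

// Hypothetical helper: wall-clock time of one launch, in milliseconds.
double timeKernelMs(float* dX, int n, int iters)
{
    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    auto start = std::chrono::high_resolution_clock::now();
    busyKernel<<<blocks, threads>>>(dX, n, iters);   // returns immediately
    cudaThreadSynchronize();                          // wait for the kernel to finish
    auto stop = std::chrono::high_resolution_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```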

CUDA functions that perform memory copies and that control graphics interoperability are synchronous, and implicitly wait for all kernels to complete.

- Can I transfer data and run a kernel in parallel (for streaming applications)?

Yes, CUDA 2.1 supports overlapping GPU computation and data transfers using streams. See the programming guide for more details.

- Is it possible to DMA directly into GPU memory from another PCI-E device?

Not currently, but we are investigating ways to enable this.

- Is it possible to write the results from a kernel directly to texture (for multi-pass algorithms)?

Not currently, but you can copy from global memory back to the array (texture). Device to device memory copies are fast.

- Can I write directly to the framebuffer?

No. In OpenGL you have to write to a mapped pixel buffer object (PBO), and then render from this. The copies are in video memory and fast, however. See the "postProcessGL" sample in the SDK for more details.

- Can I read directly from textures created in OpenGL/Direct3D?

You cannot read directly from OpenGL textures in CUDA. You can copy the texture data to a pixel buffer object (PBO) and then map this buffer object for reading in CUDA.

In Direct3D it is possible to map D3D resources and read them in CUDA. This may involve an internal copy from the texture format to linear format.

- Does graphics interoperability work with multiple GPUs?

Yes, although for best performance the GPU doing the rendering should be the same as the one doing the computation.

- Does CUDA support graphics interoperability with Direct3D 10?

Yes, this is supported in CUDA 2.1.

- How do I get the best performance when transferring data to and from OpenGL pixel buffer objects (PBOs)?

For optimal performance when copying data to and from PBOs, make sure that the format of the source data is compatible with the format of the destination. This ensures that the driver doesn't have to do any format conversion on the CPU and can do a direct copy in video memory. When copying 8-bit color data from the framebuffer using glReadPixels, we recommend using the GL_BGRA format and ensuring that the framebuffer has an alpha channel (e.g. glutInitDisplayMode(GLUT_RGBA | GLUT_ALPHA) if you're using GLUT).

- What texture features does CUDA support?

CUDA supports 1D, 2D and 3D textures, which can be accessed with normalized (0..1) or integer coordinates. Textures can also be bound to linear memory and accessed with the "tex1Dfetch" function.

Cube maps, texture arrays, compressed textures and mip-maps are not currently supported.

The hardware only supports 1, 2 and 4-component textures, not 3-component textures.

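The hardware supports linear interpolation of texture data, but only for textures that return floating-point values: either the texture element type is float, or an integer texture is read with "cudaReadModeNormalizedFloat" as the "ReadMode".

- What are the maximum texture sizes supported?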

1D textures are limited to 8K elements. 1D "buffer" textures bound to linear memory are limited to 2^27 elements.

The maximum size for a 2D texture is 64K by 32K pixels, assuming sufficient device memory is available.

The maximum size of a 3D texture is 2048 x 2048 x 2048.

- What is the size of the texture cache?

The texture cache has an effective 16KB working set size per multiprocessor and is optimized for 2D locality. Fetches from linear memory bound as texture also benefit from the texture cache.

- Can I index into an array of textures?

No. Current hardware can't index into an array of texture references (samplers). If all your textures are the same size, an alternative might be to load them into the slices of a 3D texture. You can also use a switch statement to select between different texture lookups.

- Are graphics operations such as z-buffering and alpha blending supported in CUDA?

No. Access to video memory in CUDA is done via the load/store mechanism, and doesn't go through the normal graphics raster operations like blending. We don't have any plans to expose blending or any other raster ops in CUDA.

- What are the peak transfer rates between the CPU and GPU?

The performance of memory transfers depends on many factors, including the size of the transfer and type of system motherboard used.

We recommend NVIDIA nForce motherboards for best transfer performance. On PCI-Express 2.0 systems we have measured up to 6.0 GB/sec transfer rates.

You can measure the bandwidth on your system using the bandwidthTest sample from the SDK.
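A rough sketch in the spirit of that measurement, assuming CUDA events for timing and `cudaMallocHost` for page-locked memory. It times a single host-to-device copy, whereas bandwidthTest averages several. The helper name `hostToDeviceGBs` is hypothetical. Comparing `pinned = true` and `pinned = false` on the same system shows the effect of page-locked memory described next.

```cuda
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: host-to-device bandwidth in GB/s for one copy of `bytes`,
// from page-locked (pinned) or ordinary pageable host memory.
double hostToDeviceGBs(size_t bytes, bool pinned)
{
    void* h = 0;
    void* d = 0;
    if (pinned)
        cudaMallocHost(&h, bytes);      // page-locked: the GPU can DMA from it directly
    else
        h = malloc(bytes);              // pageable: the driver stages the copy
    cudaMalloc(&d, bytes);

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);
    cudaEventRecord(start, 0);
    cudaMemcpy(d, h, bytes, cudaMemcpyHostToDevice);
    cudaEventRecord(stop, 0);
    cudaEventSynchronize(stop);         // wait until the stop event has occurred
    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, stop);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d);
    if (pinned) cudaFreeHost(h); else free(h);
    return (bytes / 1e9) / (ms / 1e3);
}
```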

Transfers from page-locked memory are faster because the GPU can DMA directly from this memory. However, allocating too much page-locked memory can significantly affect the overall performance of the system, so allocate it with care.

- What is the precision of mathematical operations in CUDA?

All compute-capable NVIDIA GPUs support 32-bit integer and single precision floating point arithmetic. They follow the IEEE-754 standard for single-precision binary floating-point arithmetic, with some minor differences - notably that denormalized numbers are not supported.

Later GPUs (for example, the Tesla C1060) also include double precision floating point. See the programming guide for more details.

- Why are the results of my GPU computation slightly different from the CPU results?

There are many possible reasons. Floating point computations are not guaranteed to give identical results across any set of processor architectures. The order of operations will often be different when implementing algorithms in a data parallel way on the GPU.
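A host-only illustration of the order-of-operations point. The same four single-precision values are summed left to right, as a simple CPU loop would, and as a pairwise tree, the order a typical parallel reduction uses. The two results differ. The values are chosen so that 2^24 + 1 is not representable as a float. The function names are hypothetical, and the results assume IEEE single precision with round-to-nearest-even and no extended-precision intermediates (as on x86-64 with SSE).

```cuda
// Left-to-right accumulation, as in a straightforward CPU loop.
float sequentialSum(const float* x, int n)
{
    float s = 0.0f;
    for (int i = 0; i < n; ++i)
        s += x[i];
    return s;
}

// Pairwise (tree) accumulation, the order a parallel reduction typically uses.
float pairwiseSum(const float* x, int n)
{
    if (n <= 0) return 0.0f;
    if (n == 1) return x[0];
    int half = n / 2;
    return pairwiseSum(x, half) + pairwiseSum(x + half, n - half);
}
```

For {16777216, 1, 1, 1}, `sequentialSum` returns 16777216, because each 2^24 + 1 rounds back down to 2^24. `pairwiseSum` returns 16777218, because the two trailing ones are combined first. The exact sum is 16777219, so neither order is "the right answer"; they are simply different roundings.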

This is a very good reference on floating point arithmetic:

What Every Computer Scientist Should Know About Floating-Point Arithmetic

The GPU also has several deviations from the IEEE-754 standard for binary floating point arithmetic. These are documented in the CUDA Programming Guide, section A.2.

- Does CUDA support double precision arithmetic?

Yes. GPUs with compute capability 1.3 (those based on the GT200 architecture, such as the Tesla C1060 and later) support double precision floating point in hardware.

- How do I get double precision floating point to work in my kernel?

You need to add the switch "-arch sm_13" to your nvcc command line, otherwise doubles will be silently demoted to floats. See the "Mandelbrot" sample in the CUDA SDK for an example of how to switch between different kernels based on the compute capability of the GPU.

You should also be careful to suffix all floating point literals with "f" (for example, "1.0f") otherwise they will be interpreted as doubles by the compiler.

- Can I read double precision floats from texture?

The hardware doesn't support double precision float as a texture format, but it is possible to use int2 and cast it to double as long as you don't need interpolation:

    texture<int2> my_texture;

    // Reinterpret one int2 texel as a double (no interpolation possible).
    static __inline__ __device__ double fetch_double(texture<int2> t, int i)
    {
        int2 v = tex1Dfetch(t, i);
        return __hiloint2double(v.y, v.x);   // high 32 bits in .y, low 32 bits in .x
    }

- Does CUDA support long integers?

Current GPUs are essentially 32-bit machines, but they do support 64 bit integers (long longs). Operations on these types compile to multiple instruction sequences.

- When should I use the __mul24 and __umul24 functions?

G8x hardware supports integer multiply with only 24-bit precision natively (add, subtract and logical operations are supported with 32 bit precision natively). 32-bit integer multiplies compile to multiple instruction sequences and take around 16 clock cycles.

You can use the __mul24 and __umul24 built-in functions to perform fast multiplies with 24-bit precision.
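A sketch of the macro approach recommended in this answer, so that the multiply can be switched in one place when targeting different hardware. The macro name `UMUL`, the `USE_MUL24` switch and the kernel and wrapper names are hypothetical. `__umul24` is the unsigned variant, matching the unsigned `blockIdx.x` and `blockDim.x`.

```cuda
#include <cuda_runtime.h>

// Define USE_MUL24 when building for hardware where 24-bit multiplies are faster.
#ifdef USE_MUL24
#define UMUL(a, b) __umul24((a), (b))
#else
#define UMUL(a, b) ((a) * (b))
#endif

__global__ void writeIndex(int* out, int n)
{
    // blockIdx.x and blockDim.x stay far below 2^24 here, so the 24-bit product is exact.
    int i = UMUL(blockIdx.x, blockDim.x) + threadIdx.x;
    if (i < n)
        out[i] = i;
}

// Hypothetical wrapper used to check the kernel from the host.
cudaError_t fillIndices(int* hOut, int n)
{
    int* d = 0;
    cudaMalloc((void**)&d, n * sizeof(int));
    writeIndex<<<(n + 255) / 256, 256>>>(d, n);
    cudaError_t err = cudaMemcpy(hOut, d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return err;
}
```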

Be aware that future hardware may switch to 32-bit native integers, in which case __mul24 and __umul24 may actually be slower. For this reason we recommend using a macro so that the implementation can be switched easily.

- Does CUDA support 16-bit (half) floats?

All floating point computation is performed with 32 or 64 bits.

The driver API supports textures that contain 16-bit floats through the CU_AD_FORMAT_HALF array format. The values are automatically promoted to 32-bit during the texture read.

16-bit float textures are planned for a future release of CUDART.

Other support for 16-bit floats, such as enabling kernels to convert between 16- and 32-bit floats (to read/write float16 while processing float32), is also planned for a future release.

- Where can I find documentation on the PTX assembly language?

This is included in the CUDA Toolkit documentation ("ptx_isa_1.3.pdf").

- How can I see the PTX code generated by my program?

Add "-keep" to the nvcc command line (or custom build setup in Visual Studio) to keep the intermediate compilation files. Then look at the ".ptx" file. The ".cubin" file also includes useful information including the actual number of hardware registers used by the kernel.

- How can I find out how many registers / how much shared/constant memory my kernel is using?

Add the option "--ptxas-options=-v" to the nvcc command line. When compiling, this information will be output to the console.

- Is it possible to see PTX assembly interleaved with C code?

Yes! Add the option "--opencc-options -LIST:source=on" to the nvcc command line.

- What is CUTIL?

CUTIL is a simple utility library used in the CUDA SDK samples.

It provides functions for:

- parsing command line arguments

- reading and writing binary files and PPM format images

- comparing arrays of data (typically used for comparing GPU results with CPU)

- timers

- macros for checking error codes
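A sketch of the kind of error-checking macro meant here. It is written from scratch, not CUTIL's actual API, and the names `checkCuda`, `CHECK_CUDA` and `CHECK_LAUNCH` are hypothetical. It relies only on `cudaGetErrorString` and `cudaGetLastError` from the runtime API. Kernel launches do not return a status, so the launch is checked afterwards with `cudaGetLastError()`. Errors that occur while the kernel runs show up in a later synchronizing call.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: report a failing CUDA call with its file and line.
bool checkCuda(cudaError_t err, const char* file, int line)
{
    if (err != cudaSuccess) {
        fprintf(stderr, "%s:%d: CUDA error: %s\n", file, line, cudaGetErrorString(err));
        return false;
    }
    return true;
}

// Wrap any runtime call: CHECK_CUDA(cudaMemcpy(...));
#define CHECK_CUDA(call) checkCuda((call), __FILE__, __LINE__)

// Use right after a launch: kernel<<<grid, block>>>(...); CHECK_LAUNCH();
#define CHECK_LAUNCH() checkCuda(cudaGetLastError(), __FILE__, __LINE__)
```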

Note that CUTIL is not part of the CUDA Toolkit and is not supported by NVIDIA. It exists only for the convenience of writing concise and platform-independent example code.

- Does CUDA support operations on vector types?

CUDA defines vector types such as float4, but doesn't include any operators on them by default. However, you can define your own operators using standard C++. The CUDA SDK includes a header "cutil_math.h" that defines some common operations on the vector types.
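A minimal sketch of defining such operators yourself with standard C++ overloading. These definitions are hypothetical and only similar in spirit to those in cutil_math.h. Marking them `__host__ __device__` lets the same code run on both the CPU and the GPU. `make_float4` is the constructor function provided by the CUDA headers.

```cuda
#include <cuda_runtime.h>

// Component-wise addition of two float4 values.
inline __host__ __device__ float4 operator+(float4 a, float4 b)
{
    return make_float4(a.x + b.x, a.y + b.y, a.z + b.z, a.w + b.w);
}

// Scale every component by s.
inline __host__ __device__ float4 operator*(float4 a, float s)
{
    return make_float4(a.x * s, a.y * s, a.z * s, a.w * s);
}

// Four-component dot product.
inline __host__ __device__ float dot(float4 a, float4 b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
}
```

Each operator still compiles to four scalar operations on the GPU, which is why the answer notes there is no inherent speed advantage in using vector types for arithmetic.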

Note that since the GPU hardware uses a scalar architecture there is no inherent performance advantage to using vector types for calculation.

- Does CUDA support swizzling?

CUDA does not support swizzling (e.g. "vector.wzyx", as used in the Cg/HLSL shading languages), but you can access the individual components of vector types.

- Is it possible to run multiple CUDA applications and graphics applications at the same time?

CUDA is a client of the GPU in the same way as the OpenGL and Direct3D drivers are - it shares the GPU via time slicing. It is possible to run multiple graphics and CUDA applications at the same time, although currently CUDA only switches at the boundaries between kernel executions.

The cost of context switching between CUDA and graphics APIs is roughly the same as switching graphics contexts. This isn't something you'd want to do more than a few times each frame, but is certainly fast enough to make it practical for use in real time graphics applications like games.

- Can CUDA survive a mode switch?

If the display resolution is increased while a CUDA application is running, the CUDA application is not guaranteed to survive the mode switch. The driver may have to reclaim some of the memory owned by CUDA for the display.

- Is it possible to execute multiple kernels at the same time?

No, CUDA only executes a single kernel at a time on each GPU in the system. In some cases it is possible to have a single kernel perform multiple tasks by branching in the kernel based on the thread or block id.

- What is the maximum length of a CUDA kernel?

The maximum kernel size is 2 million PTX instructions.

- How can I debug my CUDA code?

Use device emulation for debugging (breakpoints, stepping, printfs), but keep checking that your program also runs correctly on the device as you add more code. This catches problems that are hard or impossible to detect in device emulation mode before they become buried in too much code. The two most frequent ones are:

- Dereferencing device pointers in host code or host pointers in device code

- Missing __syncthreads().

See the section "Debugging using the Device Emulation Mode" in the programming guide for more details.

- How can I optimize my CUDA code?

Here are some basic tips:

- Make sure global memory reads and writes are coalesced where possible (see programming guide section 6.1.2.1).

- If your memory reads are hard to coalesce, try using 1D texture fetches (tex1Dfetch) instead.

- Make as much use of shared memory as possible (it is much faster than global memory).

- Avoid large-scale bank conflicts in shared memory.

- Use types like float4 to load 128 bits in a single load.

- Avoid divergent branches within a warp where possible.
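A simplified shared-memory sum reduction that applies several of these tips. It is a sketch, not the tuned SDK "reduction" sample. Each block loads its slice with coalesced reads and keeps partial sums in shared memory. The sequential-addressing loop (`tid < s`) keeps active threads contiguous, which avoids bank conflicts and confines warp divergence to the last few iterations. It assumes a power-of-two block size, and the per-block partial sums are added on the host. The names `reduceSum` and `sumOnDevice` are hypothetical.

```cuda
#include <vector>
#include <cuda_runtime.h>

__global__ void reduceSum(const float* in, float* partial, int n)
{
    extern __shared__ float sdata[];               // blockDim.x floats
    unsigned int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    sdata[tid] = (i < n) ? in[i] : 0.0f;           // coalesced load, zero-pad the tail
    __syncthreads();
    for (unsigned int s = blockDim.x / 2; s > 0; s >>= 1) {
        if (tid < s)
            sdata[tid] += sdata[tid + s];          // neighbouring threads hit neighbouring banks
        __syncthreads();                           // reached by every thread in the block
    }
    if (tid == 0)
        partial[blockIdx.x] = sdata[0];
}

// Hypothetical wrapper: one kernel pass, then the partial sums on the CPU.
float sumOnDevice(const float* hIn, int n)
{
    const int threads = 256;                       // power of two
    int blocks = (n + threads - 1) / threads;
    float *dIn = 0, *dPartial = 0;
    cudaMalloc((void**)&dIn, n * sizeof(float));
    cudaMalloc((void**)&dPartial, blocks * sizeof(float));
    cudaMemcpy(dIn, hIn, n * sizeof(float), cudaMemcpyHostToDevice);
    reduceSum<<<blocks, threads, threads * sizeof(float)>>>(dIn, dPartial, n);
    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), dPartial, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dPartial);
    float total = 0.0f;
    for (int b = 0; b < blocks; ++b)
        total += partial[b];
    return total;
}
```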

We recommend using the CUDA Visual Profiler to profile your application.

- How do I choose the optimal number of threads per block?

For maximum utilization of the GPU you should carefully balance the number of threads per thread block, the amount of shared memory per block, and the number of registers used by the kernel.

You can use the CUDA occupancy calculator tool to compute the multiprocessor occupancy of a GPU by a given CUDA kernel:

http://www.nvidia.com/object/cuda_programming_tools.html

- What is the maximum kernel execution time?

On Windows, individual GPU program launches have a maximum run time of around 5 seconds. Exceeding this time limit usually will cause a launch failure reported through the CUDA driver or the CUDA runtime, but in some cases can hang the entire machine, requiring a hard reset.

This is caused by the Windows "watchdog" timer that causes programs using the primary graphics adapter to time out if they run longer than the maximum allowed time.

For this reason it is recommended that CUDA is run on a GPU that is NOT attached to a display and does not have the Windows desktop extended onto it. In this case, the system must contain at least one NVIDIA GPU that serves as the primary graphics adapter.

- Why do I get the error message: "The application failed to initialize properly"?

This problem is associated with improper permissions on the DLLs (shared libraries) that are linked with your CUDA executables. All DLLs must be executable. The most likely problem is that you unzipped the CUDA distribution with cygwin's "unzip", which sets all permissions to non-executable. Make sure all DLLs are set to executable, particularly those in the CUDA_BIN directory, by running the cygwin command "chmod +x *.dll" in the CUDA_BIN directory. Alternatively, right-click on each DLL in the CUDA_BIN directory, select Properties, then the Security tab, and make sure "read & execute" is set. For more information see:

http://www.cygwin.com/ml/cygwin/2002-12/msg00686.html

- When compiling with Microsoft Visual Studio 2005, why do I get the error "C:/Program Files/Microsoft Visual Studio 8/VC/INCLUDE/xutility(870): internal error: assertion failed at: "D:/Bld/rel/gpgpu/toolkit/r2.0/compiler/edg/EDG_3.9/src/lower_name.c"?

You need to install Visual Studio 2005 Service Pack 1:

http://www.microsoft.com/downloads/details...;displaylang=en

- I get the CUDA error "invalid argument" when executing my kernel

You might be exceeding the maximum size of the arguments for the kernel. Parameters to __global__ functions are currently passed to the device via shared memory and the total size is limited to 256 bytes.
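A sketch of the constant-memory workaround mentioned in this answer, under stated assumptions. A hypothetical `FilterParams` struct is too large to pass by value (its weight array alone is 256 bytes, 260 bytes in total). It is copied once with `cudaMemcpyToSymbol` into a `__constant__` variable, and the kernel reads it from there. The struct, the 64-weight size, and the kernel and wrapper names are illustrative only.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical parameter block: 64 weights (256 bytes) plus a count.
struct FilterParams {
    float weights[64];
    int   count;
};

__constant__ FilterParams c_params;   // lives in constant memory, visible to all threads

// out[i] = sum over k of weights[k] * in[i + k], ignoring samples past the end.
__global__ void applyFilter(const float* in, float* out, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float acc = 0.0f;
        for (int k = 0; k < c_params.count; ++k) {
            int j = i + k;
            if (j < n)
                acc += c_params.weights[k] * in[j];
        }
        out[i] = acc;
    }
}

// Hypothetical wrapper: upload the parameters to the symbol, then launch.
cudaError_t runFilter(const FilterParams& p, const float* hIn, float* hOut, int n)
{
    assert(p.count >= 0 && p.count <= 64);
    size_t bytes = n * sizeof(float);
    float *dIn = 0, *dOut = 0;
    cudaMemcpyToSymbol(c_params, &p, sizeof(FilterParams));
    cudaMalloc((void**)&dIn, bytes);
    cudaMalloc((void**)&dOut, bytes);
    cudaMemcpy(dIn, hIn, bytes, cudaMemcpyHostToDevice);
    applyFilter<<<(n + 255) / 256, 256>>>(dIn, dOut, n);
    cudaError_t err = cudaMemcpy(hOut, dOut, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dOut);
    return err;
}
```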

As a workaround you can pass arguments using constant memory.

- What are atomic operations?

Atomic operations allow multiple threads to perform concurrent read-modify-write operations in memory without conflicts. The hardware serializes accesses to the same address so that the behaviour is always deterministic. The functions are atomic in the sense that they are guaranteed to be performed without interruption from other threads. Atomic operations must be associative (i.e. order independent).

Atomic operations are useful for sorting, reduction operations and building data structures in parallel.

Devices with compute capability 1.1 support atomic operations on 32-bit integers in global memory. This includes logical operations (and, or, xor), increment and decrement, min and max, exchange and compare and swap (CAS). To compile code using atomics you must add the option "-arch sm_11" to the nvcc command line.

Compute capability 1.2 devices also support atomic operations in shared memory.

Atomic operations are not currently supported on floating point values.
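A minimal histogram sketch using integer `atomicAdd` on global memory, which needs compute capability 1.1 (compile with "-arch sm_11" or later, as noted above). Many threads may increment the same bin, and the hardware serializes those updates so no counts are lost. The kernel and wrapper names are hypothetical, and binning by `value % numBins` is an arbitrary choice for illustration.

```cuda
#include <cuda_runtime.h>

__global__ void histogram(const unsigned int* data, int n,
                          unsigned int* bins, unsigned int numBins)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        atomicAdd(&bins[data[i] % numBins], 1u);   // colliding increments are serialized
}

// Hypothetical wrapper: counts must start at zero, so the bins are cleared first.
cudaError_t histogramOnDevice(const unsigned int* hData, int n,
                              unsigned int* hBins, unsigned int numBins)
{
    unsigned int *dData = 0, *dBins = 0;
    cudaMalloc((void**)&dData, n * sizeof(unsigned int));
    cudaMalloc((void**)&dBins, numBins * sizeof(unsigned int));
    cudaMemcpy(dData, hData, n * sizeof(unsigned int), cudaMemcpyHostToDevice);
    cudaMemset(dBins, 0, numBins * sizeof(unsigned int));
    histogram<<<(n + 255) / 256, 256>>>(dData, n, dBins, numBins);
    cudaError_t err = cudaMemcpy(hBins, dBins, numBins * sizeof(unsigned int),
                                 cudaMemcpyDeviceToHost);
    cudaFree(dData);
    cudaFree(dBins);
    return err;
}
```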

There is no radiation risk from atomic operations.

- Does CUDA support function pointers?

No, current hardware does not support function pointers (jumping to a computed address). You can use a switch to select between different functions. If you don't need to switch between functions at runtime, you can sometimes use C++ templates or macros to compile different versions of your kernels that can be switched between in the host code.

- How do I compute the sum of an array of numbers on the GPU?

This is known as a parallel reduction operation. See the "reduction" sample in the CUDA SDK for more details.

- How do I output a variable amount of data from each thread?

This can be achieved using a parallel prefix sum (also known as "scan") operation. The CUDA Data Parallel Primitives library (CUDPP) includes highly optimized scan functions:

http://www.gpgpu.org/developer/cudpp/
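A minimal single-block sketch of the idea. Each thread has a count of outputs, and an exclusive scan of the counts gives each thread the offset where it should start writing. This uses a simple double-buffered (Hillis-Steele style) scan in shared memory, not the work-efficient algorithm CUDPP provides. It assumes n is at most one block of threads (512 on current hardware). Kernel and wrapper names are hypothetical.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// out[i] = in[0] + ... + in[i-1]; launched as one block with blockDim.x >= n.
__global__ void exclusiveScanBlock(const unsigned int* in, unsigned int* out, int n)
{
    extern __shared__ unsigned int temp[];         // 2 * blockDim.x elements
    int tid = threadIdx.x;
    int pout = 0, pin = 1;
    // Shift right by one so the inclusive scan below yields an exclusive one.
    temp[tid] = (tid > 0 && tid < n) ? in[tid - 1] : 0;
    __syncthreads();
    for (int offset = 1; offset < (int)blockDim.x; offset *= 2) {
        pout = 1 - pout;                           // swap input and output buffers
        pin = 1 - pout;
        unsigned int v = temp[pin * blockDim.x + tid];
        if (tid >= offset)
            v += temp[pin * blockDim.x + tid - offset];
        temp[pout * blockDim.x + tid] = v;
        __syncthreads();
    }
    if (tid < n)
        out[tid] = temp[pout * blockDim.x + tid];
}

// Hypothetical wrapper: hOffsets[i] is where thread i starts writing its
// hCounts[i] outputs. Returns the total number of outputs.
unsigned int scanOffsets(const unsigned int* hCounts, unsigned int* hOffsets, int n)
{
    assert(n > 0 && n <= 512);                     // one block on current hardware
    size_t bytes = n * sizeof(unsigned int);
    unsigned int *dIn = 0, *dOut = 0;
    cudaMalloc((void**)&dIn, bytes);
    cudaMalloc((void**)&dOut, bytes);
    cudaMemcpy(dIn, hCounts, bytes, cudaMemcpyHostToDevice);
    exclusiveScanBlock<<<1, n, 2 * bytes>>>(dIn, dOut, n);
    cudaMemcpy(hOffsets, dOut, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dOut);
    return hOffsets[n - 1] + hCounts[n - 1];
}
```

For counts {3, 0, 2, 5, 1} the offsets are {0, 3, 3, 5, 10} and the total is 11. Thread 3 would write its five outputs to positions 5 to 9.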

The "marchingCubes" sample in the CUDA SDK demonstrates the use of scan for variable output per thread.

- How do I sort an array on the GPU?

The "particles" sample in the CUDA SDK includes a fast parallel radix sort.

To sort an array of values within a block, you can use a parallel bitonic sort. See the "bitonic" sample in the SDK.
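A minimal sketch of a bitonic sort within one thread block, in the spirit of the SDK "bitonic" sample but not copied from it. Each thread owns one element in shared memory, and the compare-exchange network runs with a `__syncthreads()` after every step. It assumes the element count is a power of two no larger than 512, launched as one block of that many threads. The kernel and wrapper names are hypothetical.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// blockDim.x must be a power of two and equal to the number of elements.
__global__ void bitonicSortBlock(int* data)
{
    extern __shared__ int s[];
    unsigned int tid = threadIdx.x;
    unsigned int n = blockDim.x;
    s[tid] = data[tid];
    __syncthreads();
    for (unsigned int k = 2; k <= n; k <<= 1) {            // size of bitonic sequences
        for (unsigned int j = k >> 1; j > 0; j >>= 1) {    // compare distance
            unsigned int partner = tid ^ j;
            if (partner > tid) {                           // lower index does the exchange
                bool ascending = (tid & k) == 0;
                int a = s[tid], b = s[partner];
                if ((a > b) == ascending) {                // out of order for this direction
                    s[tid] = b;
                    s[partner] = a;
                }
            }
            __syncthreads();
        }
    }
    data[tid] = s[tid];
}

// Hypothetical wrapper: sorts n ints in place (ascending).
cudaError_t sortSmall(int* h, int n)
{
    assert(n >= 1 && n <= 512 && (n & (n - 1)) == 0);
    int* d = 0;
    cudaMalloc((void**)&d, n * sizeof(int));
    cudaMemcpy(d, h, n * sizeof(int), cudaMemcpyHostToDevice);
    bitonicSortBlock<<<1, n, n * sizeof(int)>>>(d);
    cudaError_t err = cudaMemcpy(h, d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return err;
}
```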

The CUDPP library also includes sort functions.

- What do I need to distribute my CUDA application?

Applications that use the driver API only need the CUDA driver library ("nvcuda.dll" under Windows), which is included as part of the standard NVIDIA driver install.

Applications that use the runtime API also require the runtime library ("cudart.dll" under Windows), which is included in the CUDA Toolkit. It is permissible to distribute this library with your application under the terms of the End User License Agreement included with the CUDA Toolkit.

CUFFT

- What is CUFFT?

CUFFT is a Fast Fourier Transform (FFT) library for CUDA. See the CUFFT documentation for more information.

- What types of transforms does CUFFT support?

The current release supports complex to complex (C2C), real to complex (R2C) and complex to real (C2R).

- What is the maximum transform size?

For 1D transforms, the maximum transform size is 16M elements in the 1.0 release.
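A minimal sketch of a 1D complex-to-complex forward transform. It assumes the `cufftPlan1d` / `cufftExecC2C` / `cufftDestroy` calls from the CUFFT library; link with -lcufft. The wrapper name is hypothetical and error handling is minimal. CUFFT transforms are unnormalized, so a forward transform followed by an inverse one scales the data by n.

```cuda
#include <cufft.h>
#include <cuda_runtime.h>

// Hypothetical wrapper: in-place forward FFT of n complex points held on the host.
cufftResult forwardFFT(cufftComplex* hData, int n)
{
    cufftComplex* d = 0;
    cudaMalloc((void**)&d, n * sizeof(cufftComplex));
    cudaMemcpy(d, hData, n * sizeof(cufftComplex), cudaMemcpyHostToDevice);

    cufftHandle plan;
    cufftResult r = cufftPlan1d(&plan, n, CUFFT_C2C, 1);   // one transform (batch = 1)
    if (r == CUFFT_SUCCESS) {
        r = cufftExecC2C(plan, d, d, CUFFT_FORWARD);        // input and output share d
        cufftDestroy(plan);
    }

    // This copy also waits for the transform to finish.
    cudaMemcpy(hData, d, n * sizeof(cufftComplex), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return r;
}
```

For example, the forward transform of a unit impulse (1 at index 0, 0 elsewhere) should give 1 + 0i in every output bin, up to rounding.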

CUBLAS

- What is CUBLAS?

CUBLAS is an implementation of BLAS (Basic Linear Algebra Subprograms) on top of the CUDA driver. It allows access to the computational resources of NVIDIA GPUs. The library is self contained at the API level, that is, no direct interaction with the CUDA driver is necessary.

See the documentation for more details.