MongoDB (AutoSharding + Replica Sets Stability Test)

Design with a single replica set:

As shown in the figure, 10.7.3.228 runs only two services: mongos and the config server.

^_^[root@:/usr/local/mongodb/bin]#cat runServerConfig.sh

./mongod --configsvr --dbpath=../data/config --logpath=../data/config.log --fork

^_^[root@:/usr/local/mongodb/bin]#cat runServerMongos.sh

./mongos --configdb 10.7.3.228:27019 --logpath=../data/mongos.log --logappend --fork

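Note: the IP and port passed to mongos via --configdb are those of the config server.

First, configure AutoSharding.

The shard server (shardsvr) on 163 is already running, so only the AutoSharding service on server 165 still needs to be started.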

[root@localhost bin]# cat runServerShard.sh

./mongod --shardsvr --dbpath=../data/mongodb --logpath=../data/shardsvr_logs.txt --fork

Configure replication between 163 and 164:

[root@localhost bin]# ./mongo 10.10.21.163:27018

MongoDB shell version: 1.8.2

connecting to: 10.10.21.163:27018/test

> cfg={_id:"set163164",members:[

... {_id:0,host:"10.10.21.163:27018"},

... {_id:1,host:"10.10.21.164:27017"}

... ]}

{

"_id" : "set163164",

"members" : [

{

"_id" : 0,

"host" : "10.10.21.163:27018"

},

{

"_id" : 1,

"host" : "10.10.21.164:27017"

}

]

}

> rs.initiate(cfg)

{

"info" : "Config now saved locally. Should come online in about a minute.",

"ok" : 1

}

> rs.conf()

{

"_id" : "set163164",

"version" : 1,

"members" : [

{

"_id" : 0,

"host" : "10.10.21.163:27018"

},

{

"_id" : 1,

"host" : "10.10.21.164:27017"

}

]

}

set163164:PRIMARY>

set163164:PRIMARY>

set163164:PRIMARY> show dbs

admin (empty)

local 14.1962890625GB

set163164:PRIMARY> use local

switched to db local

set163164:PRIMARY> show collections

oplog.rs

system.replset

set163164:PRIMARY> db.system.replset.find()

{ "_id" : "set163164", "version" : 1, "members" : [

{

"_id" : 0,

"host" : "10.10.21.163:27018"

},

{

"_id" : 1,

"host" : "10.10.21.164:27017"

}

] }

set163164:PRIMARY> rs.isMaster()

{

"setName" : "set163164",

"ismaster" : true,

"secondary" : false,

"hosts" : [

"10.10.21.163:27018",

"10.10.21.164:27017"

],

"maxBsonObjectSize" : 16777216,

"ok" : 1

}

At this point the replica set is configured successfully!

Next, do the corresponding sharding configuration on server 228:

use admin

> db.runCommand({addshard:"set163164/10.10.21.163:27018,10.10.21.165:27018"});

{ "shardAdded" : "set163164", "ok" : 1 }

> db.runCommand({enableSharding:"test"})

{ "ok" : 1 }

> db.runCommand({shardcollection:"test.users",key:{_id:1}})

{ "collectionsharded" : "test.users", "ok" : 1 }

Then start the replica set service on 163 and on 164; 163 must additionally be started as a shard server.

163:

[root@localhost bin]# cat runServerShard.sh

./mongod --shardsvr --dbpath=../data/mongodb --logpath=../data/shardsvr_logs.txt --fork --replSet set163164

164:

[root@localhost bin]# cat runServerShard.sh

./mongod --dbpath=../data --logpath=../data/shardsvr_logs.txt --fork --replSet set163164

At this point AutoSharding + replication is configured successfully. Next comes the stability test.

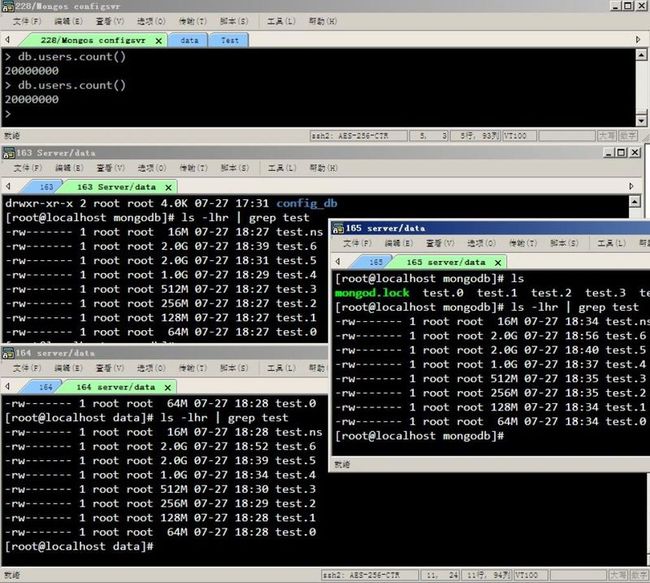

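First, look at the results:

As shown, 20 million documents were inserted in total. 163 and 164 hold the same amount of data, while 165 holds its own share of the data as a separate shard.

Now I run the stability test:

Shut down server 163.

Then run a query through mongos: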

> db.users.find()

error: { "$err" : "error querying server: 10.10.21.163:27018", "code" : 13633 }

> db.users.find()

error: {

"$err" : "DBClientBase::findOne: transport error: 10.10.21.163:27018 query: { setShardVersion: \"test.users\", configdb: \"10.7.3.228:27019\", version: Timestamp 11000|1, serverID: ObjectId('4e2f64af98dd90fed26585a4'), shard: \"shard0000\", shardHost: \"10.10.21.163:27018\" }",

"code" : 10276

}

> db.users.find()

error: { "$err" : "socket exception", "code" : 11002 }

The query fails outright!

Next, try adding server 164 manually:

> db.runCommand({addshard:"10.10.21.164:27017"});

{

"ok" : 0,

"errmsg" : "host is part of set: set163164 use replica set url format <setname>/<server1>,<server2>,...."

}

It still fails!
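The error message asks for the replica set URL format, `<setname>/<server1>,<server2>,....`. A minimal sketch of building such a string is shown here in JavaScript, the language of the mongo shell. The helper name `replicaSetShardUrl` is made up for illustration and is not part of MongoDB. The sketch only illustrates the format; it does not by itself resolve the failure described here.

```javascript
// Hypothetical helper for illustration only; not part of MongoDB.
// Builds the "<setname>/<server1>,<server2>,..." string named in the error message.
function replicaSetShardUrl(setName, hosts) {
  if (!setName || hosts.length === 0) {
    throw new Error("a set name and at least one host are required");
  }
  return setName + "/" + hosts.join(",");
}

const url = replicaSetShardUrl("set163164", [
  "10.10.21.163:27018",
  "10.10.21.164:27017",
]);
console.log(url);
// In a mongo shell connected to mongos, the string would be passed as:
// db.runCommand({ addshard: url })
```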

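Clearly, this configuration has a problem!

After some thought and repeated testing, I began to suspect that the problem lay in the voting.

The official documentation contains the following passage: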

Consensus Vote

For a node to be elected primary, it must receive a majority of votes. This is a majority of all votes in the set: if you have a 5-member set and 4 members are down, a majority of the set is still 3 members (floor(5/2)+1). Each member of the set receives a single vote and knows the total number of available votes.

If no node can reach a majority, then no primary can be elected and no data can be written to that replica set (although reads to secondaries are still possible).

So could voting be the problem when there are only 2 servers? What happens if one more is added?
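The arithmetic behind this question can be sketched in a few lines of JavaScript. The helper names `majority` and `canElectPrimary` are made up for illustration and are not part of MongoDB. The sketch assumes each member carries exactly one vote, as the quoted passage states.

```javascript
// Hypothetical helpers for illustration only; not part of MongoDB.
// Assumes every member of the set has exactly one vote.

// Votes needed to elect a primary: floor(n/2) + 1 of ALL votes in the set.
function majority(totalMembers) {
  return Math.floor(totalMembers / 2) + 1;
}

// True if the members that are still reachable can form a majority.
function canElectPrimary(totalMembers, membersUp) {
  return membersUp >= majority(totalMembers);
}

console.log(canElectPrimary(2, 1)); // 2-member set, one down: false
console.log(canElectPrimary(3, 2)); // 3-member set, one down: true
```

With two members, losing either one leaves a single vote where two are required, which is consistent with the errors seen after 163 was shut down. With three members, any single failure still leaves two votes.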

Here, 164 can also serve as an arbiter:

use admin

var cfg={_id:"set162163164", members:[{_id:0,host:"10.10.21.162:27018"}, {_id:1,host:"10.10.21.163:27017"}, {_id:2,host:"10.10.21.164:27017",arbiterOnly:true} ]}

rs.initiate(cfg)

rs.conf()

228:

use admin

// db.runCommand({addshard:"set162163164/10.10.21.162:27018,10.10.21.163:27017,10.10.21.164:27017"}) // normal case: add all 3 servers

db.runCommand({addshard:"set162163164/10.10.21.162:27018,10.10.21.163:27017"}) // when 164 is an arbiter

db.runCommand({addshard:"10.10.21.165:27018"})

db.runCommand({enableSharding:"test"})

db.runCommand({shardcollection:"test.users",key:{_id:1}})

Test results:

Stability has improved: when any one of 162, 163, or 164 is shut down, mongos automatically reconnects to whichever remaining member has been voted primary.
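This outcome can be checked on paper with a small model of the three-member configuration. The following is only a sketch: it does not connect to MongoDB, the function name `primaryCandidates` is hypothetical, and it assumes one vote per member and that an arbiter votes but can never become primary.

```javascript
// Hypothetical model for illustration only; it does not talk to MongoDB.
// Members mirror the cfg above; each member is assumed to have one vote.
const members = [
  { host: "10.10.21.162:27018", arbiterOnly: false },
  { host: "10.10.21.163:27017", arbiterOnly: false },
  { host: "10.10.21.164:27017", arbiterOnly: true },
];

// Returns the hosts that could become primary when `downHost` is unreachable.
function primaryCandidates(allMembers, downHost) {
  const up = allMembers.filter((m) => m.host !== downHost);
  const votesNeeded = Math.floor(allMembers.length / 2) + 1;
  if (up.length < votesNeeded) {
    return []; // no majority, so no primary can be elected
  }
  // Arbiters vote but hold no data, so they are excluded as candidates.
  return up.filter((m) => !m.arbiterOnly).map((m) => m.host);
}

for (const m of members) {
  console.log(m.host, "down ->", primaryCandidates(members, m.host));
}
```

In this model every single-server failure leaves at least one data-bearing member that can still collect two of the three votes.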

Final design diagram:

Original link: http://blog.csdn.net/crazyjixiang/article/details/6636671