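keepalived basics and implementing high availability

Contents

1. Introduction to keepalived

2. Installing keepalived and implementing VRRP

3. Summary

1. Introduction to keepalived

keepalived is routing software written in C. It began as an extension project for IPVS and adds high availability (failover) to it, implemented with the VRRP protocol. keepalived also health-checks the real servers in a load-balancing pool. When a real server becomes unavailable, keepalived removes it from the pool, isolating the failure on its own. This makes up for IPVS's inability to health-check real servers, and it is the scenario in which keepalived is most widely used. keepalived is not limited to making IPVS highly available, however.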

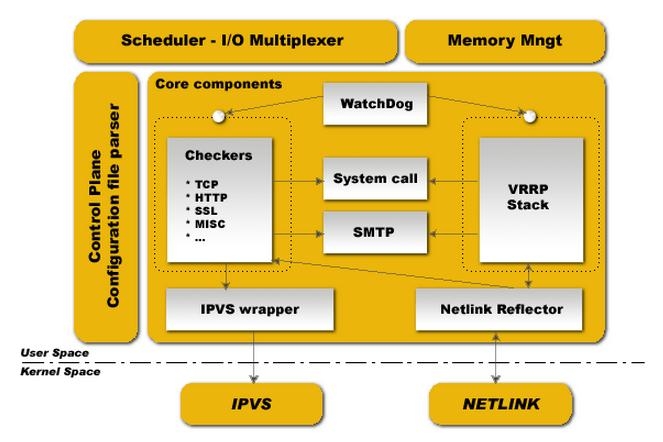

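1.1 keepalived software architecture

Image source: "http://www.keepalived.org/documentation.html"

The lower part is kernel space, made up of IPVS and NETLINK: IPVS provides the IP Virtual Server, and NETLINK provides advanced routing and other related networking functions. The upper part is user space, where the following components do the actual work. The core components are:

1. WatchDog: monitors the healthchecking and VRRP child processes. If a child process exits abnormally, the parent process restarts it.

2. Checkers: health-check the real servers. This is keepalived's main feature: it tests the real servers continuously, decides whether each one is alive, and adds or removes the corresponding LVS rules accordingly. Checks can be performed at layer 4, 5 or 7 of the OSI model. Health checking runs as a separate process monitored by the parent process.

3. VRRP Stack: handles failover between load balancers. It also runs as a separate process monitored by the parent process.

4. IPVS wrapper: sends the configured rules to the IPVS code in the kernel.

5. Netlink Reflector: sets the VRRP virtual IP (VIP) addresses and similar network settings.

For robustness and stability, keepalived runs three daemon processes once started: one parent and two children. The parent monitors the two children; one child is the VRRP child and the other is the healthchecking child.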

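2. Installing keepalived and implementing VRRP

VRRP (Virtual Router Redundancy Protocol) is not covered in depth here; you can look it up yourself. In short, it lets a group of devices running VRRP serve clients through a single virtual IP address. The virtual IP is bound to the MASTER node of the group, and when that node fails, one of the BACKUP nodes takes over from it, so the service is not interrupted. VRRP usually runs on dedicated routing equipment, such as routers from Huawei, Ruijie or Cisco. keepalived implements VRRP on Linux hosts, giving them the same high-availability capability. Below we look at how keepalived implements VRRP, or more precisely, VRRP version 2.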

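2.1 Installing keepalived

On current mainstream CentOS releases, keepalived is included in the yum repositories and can be installed directly with yum, but the packaged version is not the latest stable release. This section therefore builds from source, using the latest stable release at the time of writing, "keepalived-1.2.16.tar.gz".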

```
[root@nod1 software]# tar xf keepalived-1.2.16.tar.gz
[root@nod1 software]# cd keepalived-1.2.16
[root@nod1 keepalived-1.2.16]# ls
AUTHOR ChangeLog configure.in COPYING genhash install-sh keepalived.spec.in Makefile.in TODO
bin configure CONTRIBUTORS doc INSTALL keepalived lib README VERSION
```

The build may fail on missing dependencies; usually the following packages need to be installed first: libnl-devel and openssl-devel.

```
[root@nod1 keepalived-1.2.16]# ./configure --prefix=/usr/local/keepalived
```

When configure finishes, it prints a summary like this:

```
Keepalived configuration
------------------------
Keepalived version : 1.2.16
Compiler : gcc
Compiler flags : -g -O2 -DFALLBACK_LIBNL1
Extra Lib : -lssl -lcrypto -lcrypt -lnl
Use IPVS Framework : Yes
IPVS sync daemon support : Yes
IPVS use libnl : Yes
fwmark socket support : Yes
Use VRRP Framework : Yes
Use VRRP VMAC : Yes
SNMP support : No
SHA1 support : No
Use Debug flags : No
```

```
[root@nod1 keepalived-1.2.16]# make && make install
[root@nod1 keepalived]# pwd
/usr/local/keepalived
[root@nod1 keepalived]# ll
total 16
drwxr-xr-x 2 root root 4096 May 26 08:32 bin
drwxr-xr-x 5 root root 4096 May 26 08:32 etc
drwxr-xr-x 2 root root 4096 May 26 08:32 sbin
drwxr-xr-x 3 root root 4096 May 26 08:32 share
[root@nod1 keepalived-1.2.16]# /usr/local/keepalived/sbin/keepalived -v
Keepalived v1.2.16 (05/26,2015)
```

Once it is installed, first check that keepalived starts correctly:

```
[root@nod1 keepalived]# /usr/local/keepalived/sbin/keepalived -D
[root@nod1 keepalived]# ps aux | grep keepalived
root 8130 0.0 0.2 44844 1032 ? Ss 08:43 0:00 /usr/local/keepalived/sbin/keepalived -D
root 8131 0.1 0.4 47072 2384 ? S 08:43 0:00 /usr/local/keepalived/sbin/keepalived -D
root 8132 2.3 0.3 46948 1560 ? S 08:43 0:00 /usr/local/keepalived/sbin/keepalived -D
root 8143 0.0 0.1 103236 860 pts/0 S+ 08:43 0:00 grep keepalived
# Three daemon processes are running; the following command shows how they are related:
[root@nod1 keepalived]# pstree | grep keepalived
|-keepalived---2*[keepalived]
```

2.2 Implementing VRRP

```
[root@nod1 keepalived]# pwd
/usr/local/keepalived
[root@nod1 keepalived]# ls
bin etc sbin share
[root@nod1 keepalived]# tree etc -L 3
etc
├── keepalived
│   ├── keepalived.conf
│   └── samples
│       ├── client.pem
│       ├── dh1024.pem
│       ├── keepalived.conf.fwmark
│       ├── keepalived.conf.HTTP_GET.port
│       ├── keepalived.conf.inhibit
│       ├── keepalived.conf.IPv6
│       ├── keepalived.conf.misc_check
│       ├── keepalived.conf.misc_check_arg
│       ├── keepalived.conf.quorum
│       ├── keepalived.conf.sample
│       ├── keepalived.conf.SMTP_CHECK
│       ├── keepalived.conf.SSL_GET
│       ├── keepalived.conf.status_code
│       ├── keepalived.conf.track_interface
│       ├── keepalived.conf.virtualhost
│       ├── keepalived.conf.virtual_server_group
│       ├── keepalived.conf.vrrp
│       ├── keepalived.conf.vrrp.localcheck
│       ├── keepalived.conf.vrrp.lvs_syncd
│       ├── keepalived.conf.vrrp.routes
│       ├── keepalived.conf.vrrp.scripts
│       ├── keepalived.conf.vrrp.static_ipaddress
│       ├── keepalived.conf.vrrp.sync
│       ├── root.pem
│       └── sample.misccheck.smbcheck.sh
├── rc.d
│   └── init.d
│       └── keepalived
└── sysconfig
    └── keepalived
```

The output shows a keepalived.conf configuration file under etc/keepalived, a set of sample configurations in the samples directory, and an init script under etc/rc.d/init.d/.
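The init script and sysconfig file only take effect once they sit in the system locations. A common practice (an assumption here; the article itself keeps everything under /usr/local/keepalived and passes -f) is to copy them, together with the binary and the config, into the standard paths. The function name install_layout is hypothetical, and its second argument lets you rehearse the copy against a scratch directory before touching /.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the files installed under PREFIX by "make install"
# into the standard system locations used by the init script.
#   $1 = install prefix (default /usr/local/keepalived)
#   $2 = destination root (default /; use a scratch dir to try it safely)
install_layout() {
  local prefix=${1:-/usr/local/keepalived} dest=${2:-/}
  mkdir -p "$dest/etc/keepalived" "$dest/etc/rc.d/init.d" \
           "$dest/etc/sysconfig" "$dest/usr/sbin"
  # Default config path read by keepalived when -f is not given
  cp "$prefix/etc/keepalived/keepalived.conf" "$dest/etc/keepalived/"
  # SysV init script and its options file
  cp "$prefix/etc/rc.d/init.d/keepalived" "$dest/etc/rc.d/init.d/"
  cp "$prefix/etc/sysconfig/keepalived" "$dest/etc/sysconfig/"
  # Put the daemon on the default PATH
  cp "$prefix/sbin/keepalived" "$dest/usr/sbin/"
  chmod +x "$dest/etc/rc.d/init.d/keepalived"
}
```

After `install_layout /usr/local/keepalived /`, the daemon can be managed with `service keepalived start` on CentOS, and -f is no longer needed because the config is in the default location.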

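Now let's test keepalived's VRRP implementation. I have two nodes, one at 192.168.0.200 and the other at 192.168.0.201, and keepalived has been compiled and installed on both.

A complete keepalived.conf usually has three configuration blocks: the global definitions block, the VRRP instance block, and the virtual server block. Each block can contain sub-blocks, and some blocks are optional. If you only want VRRP, the configuration file on the 192.168.0.200 node needs only the following: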

```
global_defs {
    router_id LVS_VRRP_1
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 123
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.222
    }
}
```

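Notes:

In the global definitions block, "router_id" is a unique identifier for the host running VRRP. In the VRRP instance block, "state MASTER" means the host starts in the MASTER role.

"virtual_router_id 123" is the virtual router ID (VRID) that identifies the "VI_1" instance. It must be the same on the master and the backup nodes, and it must be unique among the virtual routers on the same network segment. Valid values are 1-255.

"priority 150" is the priority of this node within the VRRP instance: the larger the number, the higher the priority, and the node with the highest priority becomes MASTER.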
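To make the priority rule concrete, here is a simplified bash sketch of how VRRPv2 (RFC 3768) decides which node is master: the highest priority wins, and on a tie the node with the higher primary IP address wins. This illustrates the protocol rule only; it is not keepalived's code, the helper names are hypothetical, and the special priorities 0 (master releasing) and 255 (address owner) are not modeled.

```shell
#!/usr/bin/env bash
# Simplified illustration of the VRRPv2 (RFC 3768) master election rule.
# Not keepalived's implementation; helper names are hypothetical.

# Convert a dotted IPv4 address to an integer so addresses can be compared.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Read "IP PRIORITY" pairs on stdin and print the IP that becomes MASTER:
# the highest priority wins; on a tie, the higher primary IP address wins.
elect_master() {
  local best_ip="" best_prio=-1 ip prio
  while read -r ip prio; do
    [ -z "$ip" ] && continue
    if (( prio > best_prio )) ||
       { (( prio == best_prio )) && (( $(ip_to_int "$ip") > $(ip_to_int "$best_ip") )); }; then
      best_ip=$ip
      best_prio=$prio
    fi
  done
  echo "$best_ip"
}
```

With the priorities used in this article, `printf '192.168.0.200 150\n192.168.0.201 140\n' | elect_master` prints 192.168.0.200, which matches nod1 becoming MASTER in the logs.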

The configuration on the backup node (192.168.0.201) is as follows:

```
[root@nod2 ~]# vim /usr/local/keepalived/etc/keepalived/keepalived.conf
```

```
global_defs {
    router_id LVS_VRRP_2      ! differs from the master
}

vrrp_instance VI_1 {
    state BACKUP              ! differs from the master
    interface eth0
    virtual_router_id 123
    priority 140              ! lower than the master's priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.222
    }
}
```

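The backup node's configuration usually differs from the master's in three places: router_id, state (BACKUP instead of MASTER), and priority (lower than the master's).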
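Because only those three settings change, the backup configuration can be derived from the master's mechanically. The sed-based function below is a hypothetical convenience sketch, not part of keepalived; it assumes one setting per line as in the listings above, and the new router_id must not contain "/" or "&", which sed would interpret.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a master keepalived.conf (on stdin) into the
# backup's version by changing router_id, state and priority only.
#   $1 = router_id for the backup, $2 = priority for the backup
make_backup_conf() {
  sed -e "s/^\([[:space:]]*router_id[[:space:]][[:space:]]*\).*/\1$1/" \
      -e "s/^\([[:space:]]*state[[:space:]][[:space:]]*\)MASTER/\1BACKUP/" \
      -e "s/^\([[:space:]]*priority[[:space:]][[:space:]]*\)[0-9][0-9]*/\1$2/"
}
```

For example, `make_backup_conf LVS_VRRP_2 140 < keepalived.conf > keepalived.conf.nod2` produces a file to copy to the backup node; lines such as virtual_router_id are left untouched because the patterns are anchored at the start of the line.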

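Once the configuration files on both nodes are ready, start keepalived:

```
[root@nod2 ~]# /usr/local/keepalived/sbin/keepalived -D -f /usr/local/keepalived/etc/keepalived/keepalived.conf
```

Option "-D" (--log-detail): log detailed messages. keepalived logs through syslog, which on CentOS writes to /var/log/messages.

Option "-f" (--use-file): the configuration file to read. By default keepalived reads /etc/keepalived/keepalived.conf.

```
[root@nod1 ~]# /usr/local/keepalived/sbin/keepalived -D -f /usr/local/keepalived/etc/keepalived/keepalived.conf
```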

Next, verify that keepalived is working. Since it was started with "-D", its detailed messages are in /var/log/messages.

Here is the log output on nod1:

```
May 26 09:06:15 nod1 Keepalived[8229]: Starting Keepalived v1.2.16 (05/26,2015)
May 26 09:06:15 nod1 Keepalived[8230]: Starting Healthcheck child process, pid=8231
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Netlink reflector reports IP 192.168.0.200 added
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Netlink reflector reports IP fe80::20c:29ff:fe92:a73d added
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Netlink reflector reports IP fe80::20c:29ff:fe92:a747 added
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Registering Kernel netlink reflector
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Registering Kernel netlink command channel
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Registering gratuitous ARP shared channel
May 26 09:06:15 nod1 Keepalived[8230]: Starting VRRP child process, pid=8232
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Opening file '/usr/local/keepalived/etc/keepalived/keepalived.conf'.
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Configuration is using : 61889 Bytes
May 26 09:06:15 nod1 Keepalived_vrrp[8232]: Using LinkWatch kernel netlink reflector...
May 26 09:06:16 nod1 Keepalived_vrrp[8232]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
May 26 09:06:16 nod1 Keepalived_vrrp[8232]: VRRP_Instance(VI_1) Transition to MASTER STATE
May 26 09:06:16 nod1 Keepalived_vrrp[8232]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election
May 26 09:06:17 nod1 Keepalived_vrrp[8232]: VRRP_Instance(VI_1) Entering MASTER STATE
.....
May 26 09:06:17 nod1 Keepalived_vrrp[8232]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.222
```

The log shows that nod1 has become MASTER and has taken over the virtual address 192.168.0.222 on eth0.

Here is the log output on nod2:

```
May 26 09:05:32 nod2 Keepalived[2819]: Starting Keepalived v1.2.16 (05/26,2015)
May 26 09:05:32 nod2 Keepalived[2820]: Starting Healthcheck child process, pid=2821
May 26 09:05:32 nod2 Keepalived[2820]: Starting VRRP child process, pid=2822
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Netlink reflector reports IP 192.168.0.201 added
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Netlink reflector reports IP fe80::20c:29ff:fec6:f77a added
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Registering Kernel netlink reflector
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Registering Kernel netlink command channel
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Netlink reflector reports IP 192.168.0.201 added
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Netlink reflector reports IP fe80::20c:29ff:fec6:f77a added
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Registering Kernel netlink reflector
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Registering Kernel netlink command channel
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Registering gratuitous ARP shared channel
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Opening file '/usr/local/keepalived/etc/keepalived/keepalived.conf'.
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Configuration is using : 6120 Bytes
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Opening file '/usr/local/keepalived/etc/keepalived/keepalived.conf'.
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Configuration is using : 61761 Bytes
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: Using LinkWatch kernel netlink reflector...
May 26 09:05:32 nod2 Keepalived_healthcheckers[2821]: Using LinkWatch kernel netlink reflector...
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Entering BACKUP STATE
May 26 09:05:32 nod2 Keepalived_vrrp[2822]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
May 26 09:05:36 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Transition to MASTER STATE
May 26 09:05:37 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Entering MASTER STATE
May 26 09:05:37 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) setting protocol VIPs.
May 26 09:05:37 nod2 Keepalived_healthcheckers[2821]: Netlink reflector reports IP 192.168.0.222 added
May 26 09:05:37 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.222
May 26 09:05:39 nod2 ntpd[1086]: Listen normally on 7 eth0 192.168.0.222 UDP 123
May 26 09:05:39 nod2 ntpd[1086]: peers refreshed
May 26 09:05:42 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.222
May 26 09:06:41 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Received higher prio advert
May 26 09:06:41 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) Entering BACKUP STATE
May 26 09:06:41 nod2 Keepalived_vrrp[2822]: VRRP_Instance(VI_1) removing protocol VIPs.
```

Because I started nod2 before nod1, nod2's log shows that it first made itself MASTER. After nod1 started, nod2 received an advertisement with a higher priority than its own and demoted itself to BACKUP.
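When a log is long, the state history of an instance can be pulled out with grep. The function below is a small sketch (its name is hypothetical) that relies only on the "VRRP_Instance(NAME) Entering XXX STATE" message format seen in the logs above; other keepalived versions may word these messages differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print, one per line, the states a VRRP instance
# entered, in log order.
#   $1 = instance name (e.g. VI_1), $2 = log file (e.g. /var/log/messages)
vrrp_transitions() {
  local instance=$1 logfile=$2
  # In a basic regex the parentheses are literal characters
  grep -o "VRRP_Instance($instance) Entering [A-Z]* STATE" "$logfile" \
    | awk '{ print $3 }'
}
```

Run on nod2 as `vrrp_transitions VI_1 /var/log/messages`, it would list BACKUP, MASTER, BACKUP for the log shown above, which is exactly the sequence just described.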

Next, check on nod1 whether the virtual IP is configured on the network interface. Note that the virtual IP added by keepalived cannot be seen with ifconfig, because it is added as a secondary address without an alias label such as eth0:0; use the ip command instead:

```
[root@nod1 keepalived]# ip add list
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    link/ether 00:0c:29:92:a7:3d brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.200/24 brd 192.168.0.255 scope global eth0
    inet 192.168.0.222/32 scope global eth0
    inet6 fe80::20c:29ff:fe92:a73d/64 scope link
       valid_lft forever preferred_lft forever
```
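For scripted checks, the same test can be done by parsing the `ip addr` output instead of reading it by eye. The function name has_vip is hypothetical; it reads the output on stdin so it works with any interface or node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the given VIP appears as an "inet" address
# in `ip addr` output read from stdin, exit 1 otherwise.
has_vip() {
  local vip=$1
  awk -v vip="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }'
}
```

For example, `ip -4 addr show dev eth0 | has_vip 192.168.0.222 && echo "this node holds the VIP"` prints the message on nod1 while it is MASTER.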

Finally, verify that VRRP provides high availability by stopping keepalived on nod1:

```
[root@nod1 keepalived]# killall keepalived
[root@nod1 keepalived]# ip add list    # the virtual IP has been released
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    link/ether 00:0c:29:92:a7:3d brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.200/24 brd 192.168.0.255 scope global eth0
    inet6 fe80::20c:29ff:fe92:a73d/64 scope link
       valid_lft forever preferred_lft forever
[root@nod2 ~]# ip add list    # the virtual IP is now configured on nod2
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    link/ether 00:0c:29:c6:f7:7a brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.201/24 brd 192.168.0.255 scope global eth0
    inet 192.168.0.222/32 scope global eth0
    inet6 fe80::20c:29ff:fec6:f77a/64 scope link
       valid_lft forever preferred_lft forever
```

This test shows that keepalived provides high availability through VRRP.

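2.3 keepalived.conf sections in detail

As mentioned above, keepalived.conf usually consists of a global section, VRRP instance sections, and virtual server sections. Sections can contain nested sections, and some sections are optional. The virtual server section is designed specifically for LVS: if you are not making LVS highly available, you can leave it out. If you want keepalived to e-mail the administrator on every state change (a transition between MASTER and BACKUP), add the mail notification settings. In short, keepalived.conf is very flexible; the commonly used options are explained below.

Here is an example: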

```
! global section
global_defs {
    notification_email {
        [email protected]
    }
    notification_email_from [email protected]
    # SMTP server used to send the notification mail
    smtp_server smtp.163.com
    smtp_connect_timeout 30
    # identifier of this VRRP router; must be unique on every host running VRRP
    router_id NGINX_NUM1
}
```

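```
! VRRP script check section, referenced later by the VRRP instance
vrrp_script chk_nginx {
    script "killall -0 nginx"   # check whether an nginx process exists
    interval 1                  # seconds between checks
    weight -5                   # if nginx is not running, lower the instance's priority by 5
    fall 2                      # nginx is considered down after 2 consecutive failed checks
    rise 1                      # nginx is considered up again after 1 successful check
}
```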
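The effect of weight is simple arithmetic. In the instance below the master's priority is 100, so once chk_nginx has failed twice in a row (fall 2) it advertises 100 + (-5) = 95. A backup takes over only if its own priority is higher than 95, assuming keepalived's default preemption is in effect; the backup priority of 98 in the test is a hypothetical value, since the article does not show the backup's configuration. The sketch below models both signs of weight (a positive weight is added while the check succeeds) but not weight 0, which in keepalived puts the instance into FAULT state instead.

```shell
#!/usr/bin/env bash
# Sketch of how a tracked script's weight changes the advertised priority.
#   $1 = configured priority, $2 = weight, $3 = "yes" if the check passes
effective_priority() {
  local base=$1 weight=$2 check_ok=$3
  if (( weight > 0 )); then
    # Positive weight: bonus is added while the check succeeds
    if [ "$check_ok" = yes ]; then echo $(( base + weight )); else echo "$base"; fi
  else
    # Negative weight: penalty is applied while the check fails
    if [ "$check_ok" = yes ]; then echo "$base"; else echo $(( base + weight )); fi
  fi
}

# A preempting backup takes over when its priority exceeds the master's.
#   $1 = backup priority, $2 = master's effective priority
backup_takes_over() {
  (( $1 > $2 ))
}
```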

```
! VRRP instance section
vrrp_instance VI_1 {
    state MASTER              # MASTER or BACKUP, must be uppercase
    interface eth0
    virtual_router_id 10
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.0.222
    }
    track_script {
        chk_nginx             # use the chk_nginx check defined above
    }
    # action when this instance becomes MASTER: run a script that e-mails the administrator
    notify_master "/bin/sh /etc/keepalived/scripts/notify.sh master"
    # action when this instance becomes BACKUP
    notify_backup "/bin/sh /etc/keepalived/scripts/notify.sh backup"
    # action when this instance enters the FAULT state
    notify_fault "/bin/sh /etc/keepalived/scripts/notify.sh fault"
}
```
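The article only says that notify.sh e-mails the administrator. A minimal sketch of such a script follows, under these assumptions: the `mail` command (from mailx) is installed, CONTACT is a placeholder recipient you must replace, and the instance name VI_1 is hard-coded from the example. If `mail` is not available it just prints the message.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of /etc/keepalived/scripts/notify.sh.
# keepalived calls it as: notify.sh master|backup|fault
CONTACT=${CONTACT:-root@localhost}   # placeholder recipient
INSTANCE=VI_1                        # assumption: instance name from the example

# Print a one-line message for the given state, or fail on an unknown state.
build_message() {
  local state=$1
  case $state in
    master|backup|fault) ;;
    *) echo "usage: notify.sh {master|backup|fault}" >&2; return 1 ;;
  esac
  printf '%s: %s changed to %s at %s\n' \
    "$HOSTNAME" "$INSTANCE" "${state^^}" "$(date '+%F %T')"
}

# Mail the message if `mail` is available, otherwise print it.
notify() {
  local msg
  msg=$(build_message "$1") || return 1
  if command -v mail >/dev/null 2>&1; then
    printf '%s\n' "$msg" | mail -s "keepalived $INSTANCE on $HOSTNAME: ${1^^}" "$CONTACT"
  else
    printf '%s\n' "$msg"
  fi
}

# Act only when a state is passed, so the file can also be sourced for testing.
if [ "$#" -gt 0 ]; then
  notify "$1"
fi
```

Since keepalived runs it with /bin/sh in the config above, make sure /bin/sh is bash on the target system (it is on CentOS), or change the notify_* lines to call /bin/bash.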

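```
! LVS virtual server section
virtual_server 192.168.0.222 80 {
    delay_loop 6                  # seconds between health checks
    lb_algo rr                    # scheduling algorithm
    lb_kind DR                    # LVS forwarding mode
    nat_mask 255.255.255.0
    persistence_timeout 50        # seconds a client stays on the same real server
    protocol TCP
    sorry_server 127.0.0.1 80     # used when all real servers are down
    # a real server
    real_server 192.168.0.202 80 {
        weight 1
        # health check for the real server: HTTP_GET is a layer-7 check;
        # if the real server does not run an HTTP service, use TCP_CHECK instead
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3     # timeout of a health check, in seconds
            nb_get_retry 3        # number of retries
            delay_before_retry 3  # after a failed check, wait this long before retrying
        }
    }
    real_server 192.168.0.203 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```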
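When a real server keeps being removed from the pool, it helps to run roughly the same request by hand. The function below is a manual approximation of the HTTP_GET block using curl, not how keepalived performs the check internally; the name rs_http_ok is hypothetical, and only the path, expected status code and connect timeout are mirrored from the config (retries are not).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if GET http://IP:PORT/PATH returns the
# expected status code, roughly like the HTTP_GET check above.
#   $1 = real server IP, $2 = port (80), $3 = path (/), $4 = expected code (200)
rs_http_ok() {
  local ip=$1 port=${2:-80} path=${3:-/} expect=${4:-200} code
  # --connect-timeout mirrors connect_timeout 3; -w prints only the status code
  # (curl prints 000 when no HTTP response is received)
  code=$(curl -s -o /dev/null --connect-timeout 3 -w '%{http_code}' \
    "http://$ip:$port$path")
  [ "$code" = "$expect" ]
}
```

For example, `for rs in 192.168.0.202 192.168.0.203; do rs_http_ok "$rs" && echo "$rs OK" || echo "$rs FAILED"; done` run on the director shows which real servers would pass the check.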

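This configuration file also shows that keepalived can provide high availability not only for LVS but also for nginx. The difference is that when combined with LVS, keepalived brings its own health-checking mechanism, which is the reason the healthcheck child process exists, whereas for services other than LVS you need the vrrp_script section and a corresponding check script.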

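3. Summary

This post has briefly covered keepalived's architecture, its installation, and the structure of its configuration file. keepalived is the best choice when paired with LVS, because its built-in real-server health checks make up for what LVS lacks. keepalived is not limited to LVS, though; it can be used wherever high availability is needed. Overall, it is best suited to making load-balancing schedulers highly available, for example nginx as a reverse proxy, or haproxy. The next posts will use two examples to show how to make nginx and LVS highly available with keepalived.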