lvs+heartbeat+ldirectord

-----------------------------------

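I. Introduction

II. Environment

III. Configuration

1. NAS server configuration

2. Web server (web1 and web2) configuration

3. Node server (node1 and node2) configuration

IV. Startup and Testing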

-----------------------------------

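I. Introduction

Heartbeat is a component of the Linux-HA project and implements a high-availability cluster system. The heartbeat service and cluster communication are the two key components of an HA cluster, and in the Heartbeat project both are implemented by the heartbeat module. The core of heartbeat has two parts: heartbeat monitoring and resource takeover. Heartbeat monitoring can run over network links and serial ports and supports redundant links. The nodes send each other messages reporting their current state. If no message arrives from the peer within a specified time, the peer is considered failed, and the resource takeover module is started to take over the resources or services that were running on the peer.

A high-availability cluster is a group of independent computers connected by hardware and software that appear to users as a single system. When one or more nodes in the group stop working, services are switched from the failed node to a healthy node, so the service remains available. It follows from this definition that the cluster must detect when nodes and services fail and when they become available again. This task is usually performed by a body of code known as the "heartbeat". In Linux-HA it is performed by a program called heartbeat.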
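The failure-detection rule described above can be sketched in a few lines of shell. This only illustrates the timeout logic and is not how heartbeat itself is implemented; the function name `peer_is_dead` and the 30-second dead time are assumptions chosen for the example.

```shell
#!/bin/sh
# Illustrative sketch only: heartbeat itself is not implemented this way.
# peer_is_dead LAST_SEEN NOW DEADTIME
#   LAST_SEEN: epoch seconds when the last heartbeat message arrived
#   NOW:       current epoch seconds
#   DEADTIME:  seconds of silence after which the peer is declared failed
# Returns 0 (true) when the peer should be considered failed.
peer_is_dead() {
    last_seen=$1
    now=$2
    deadtime=$3
    [ $((now - last_seen)) -gt "$deadtime" ]
}

# Example: last message at t=100, checked at t=140, dead time 30 s (hypothetical).
if peer_is_dead 100 140 30; then
    echo "peer silent for too long: start resource takeover"
else
    echo "peer alive"
fi
```

In a real cluster the dead time is a trade-off: a short value gives faster failover, a long value avoids false takeovers on a briefly congested link.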

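To fail the LVS load-balancing resource over from the primary Director to the backup Director, and to remove failed nodes from the cluster automatically, we also need the ldirectord program. When it starts, it builds the IPVS table automatically, then monitors the health of the cluster nodes (the real servers) and removes any failed node from the IPVS table.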
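The kind of check ldirectord performs can be approximated as follows: request a known page from a real server and compare the response with an expected string. This is a rough sketch, not ldirectord's actual code. The function names are hypothetical, `curl` is assumed to be installed, and the page `.test.html` with the content `ok` matches the probe page configured later in this article.

```shell
#!/bin/sh
# Sketch of a "negotiate"-style health check. Function names are hypothetical.

# body_matches EXPECTED: succeed if stdin contains the fixed string EXPECTED.
body_matches() {
    grep -qF -- "$1"
}

# fetch_page HOST PORT PAGE: print the page body (assumes curl is available).
fetch_page() {
    curl -s --max-time 3 "http://$1:$2/$3"
}

# decide VIP REAL: print the action a monitor would take for real server REAL.
decide() {
    vip=$1
    real=$2
    if fetch_page "$real" 80 ".test.html" | body_matches "ok"; then
        echo "keep $real in IPVS table for $vip"
    else
        echo "remove $real from IPVS table for $vip"
    fi
}
```

Calling `decide 192.168.2.200 192.168.2.50` on a director would report whether web1 currently passes the check. A timeout or an unexpected body both count as a failure.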

II. Environment

1. System: CentOS 6.4, 32-bit

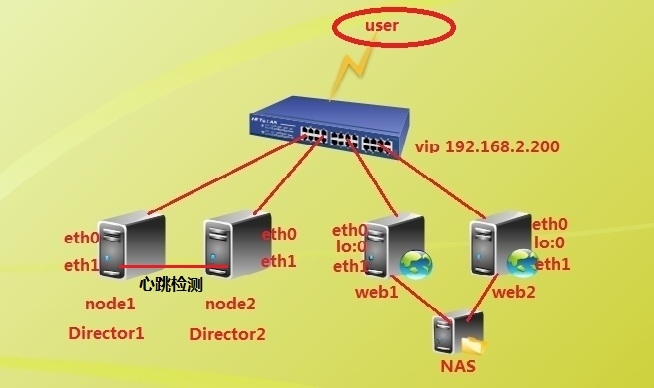

2. Topology diagram

3. Address plan

node1

eth0 192.168.2.10/24

eth1 192.168.3.10/24

node2

eth0 192.168.2.20/24

eth1 192.168.3.20/24

web1

eth0 192.168.2.50/24

eth1 192.168.4.10/24

lo:0 192.168.2.200/32

web2

eth0 192.168.2.60/24

eth1 192.168.4.20/24

lo:0 192.168.2.200/32

NAS

eth0 192.168.4.30

III. Configuration

1. NAS server configuration

    # yum install rpcbind nfs-utils
    # service rpcbind start
    Starting rpcbind: [ OK ]
    # service nfs start
    Starting NFS services: [ OK ]
    Starting NFS quotas: [ OK ]
    Starting NFS mountd: [ OK ]
    Starting NFS daemon: [ OK ]
    # vim /etc/exports    //edit the export list
    /webroot *(rw,sync)
    # exportfs -rv
    exporting *:/webroot
    # echo "web" >/webroot/index.html

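2. Web server (web1 and web2) configuration. web1 and web2 are configured identically.

    # setenforce 0
    # vim /etc/sysctl.conf    //add these two lines
    net.ipv4.conf.all.arp_announce = 2
    net.ipv4.conf.all.arp_ignore = 1
    # sysctl -p
    # yum install httpd    //install httpd
    # echo "ok" >/var/www/html/.test.html    //create a probe page
    # showmount -e 192.168.4.30
    Export list for 192.168.4.30:
    /webroot *
    # mount 192.168.4.30:/webroot /var/www/html/
    # mount
    192.168.4.30:/webroot on /var/www/html type nfs (rw,vers=4,addr=192.168.4.30,clientaddr=192.168.4.10)
    # cat /var/www/html/index.html
    web

Note that mounting the export over /var/www/html hides any file created in that directory beforehand, so the probe page .test.html must also exist in /webroot on the NAS for the health check to find it.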
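The address plan lists `lo:0 192.168.2.200/32` on both web servers, but the commands that create it are not shown above. A common approach for LVS direct-routing real servers is sketched below. It prints the commands by default so they can be reviewed first. The `DRY_RUN` variable and the function names are assumptions made for this example, and `ifconfig` and `route` are assumed to be available (net-tools).

```shell
#!/bin/sh
# Sketch: bind the VIP to lo:0 on a real server (LVS direct routing).
# The VIP comes from the address plan; DRY_RUN and the function names
# are assumptions made for this example.
VIP=192.168.2.200
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"    # requires root
    fi
}

setup_vip() {
    # /32 netmask: the real server accepts traffic for the VIP only
    run ifconfig lo:0 "$VIP" netmask 255.255.255.255 broadcast "$VIP" up
    # host route for the VIP through lo:0, as commonly used in LVS-DR setups
    run route add -host "$VIP" dev lo:0
}

setup_vip
```

Run it with `DRY_RUN=0` as root on web1 and web2 to apply the settings. Together with the `arp_ignore` and `arp_announce` values above, this keeps the real servers from answering ARP requests for the VIP.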

3. Node server (node1 and node2) configuration. node1 is shown as the example; node2 is configured the same way.

    # hostname node1.a.com    //node2.a.com on node2
    # vim /etc/sysconfig/network
    HOSTNAME=node1.a.com
    # vim /etc/hosts
    192.168.2.10 node1.a.com
    192.168.2.20 node2.a.com
    # wget ftp://mirror.switch.ch/pool/1/mirror/scientificlinux/6rolling/i386/os/Packages/epel-release-6-5.noarch.rpm
    # rpm -ivh epel-release-6-5.noarch.rpm
    # cd /etc/yum.repos.d/
    # ls
    CentOS-Base.repo       CentOS-Media.repo  epel.repo
    CentOS-Debuginfo.repo  CentOS-Vault.repo  epel-testing.repo
    # yum install cluster-glue heartbeat    //installed from the Internet
    # vim clusterlabs.repo
    [clusterlabs]
    name=High Availability/Clustering server technologies (epel-5)
    baseurl=http://www.clusterlabs.org/rpm/epel-5
    type=rpm-md
    gpgcheck=0
    enabled=1
    # yum install ldirectord    //installed from the Internet
    # rpm -ql ldirectord
    # cd /usr/share/doc/ldirectord-1.0.4/
    # vim ldirectord.cf
    virtual=192.168.2.200:80
            real=192.168.2.50:80 gate
            real=192.168.2.60:80 gate
            fallback=127.0.0.1:80 gate
            service=http
            scheduler=rr
            #persistent=600
            #netmask=255.255.255.255
            protocol=tcp
            checktype=negotiate
            checkport=80
            request=".test.html"
            receive="ok"
    # cp ldirectord.cf /etc/ha.d/
    # rpm -ql heartbeat |less
    # cd /usr/share/doc/heartbeat-3.0.4/
    # cp authkeys ha.cf haresources /etc/ha.d/
    # cd /etc/ha.d/
    # vim ha.cf
    bcast eth1 # Solaris    //defines the heartbeat NIC
    node node1.a.com    //declare all nodes
    node node2.a.com
    # dd if=/dev/urandom bs=512 count=1 |openssl md5
    1+0 records in
    1+0 records out
    512 bytes (512 B) copied, 0.000216749 s, 2.4 MB/s
    (stdin)= 5fb04bb9534a5c7c8c7c030d0390f34c
    # vim authkeys
    auth 3
    #1 crc
    #2 sha1 HI!
    3 md5 5fb04bb9534a5c7c8c7c030d0390f34c    //the key must be identical on node1 and node2
    # chmod 600 authkeys
    # vim haresources
    node1.a.com IPaddr::192.168.2.200/24/eth0 ldirectord::ldirectord.cf    //node1 is the primary node; node2 uses the same line

The virtual IP is placed on eth0 because 192.168.2.200 belongs to the 192.168.2.0/24 network, and the `ip addr` output in section IV shows it on eth0. eth1 carries only the heartbeat traffic.
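The manual key generation and authkeys edit above can also be scripted, which makes it easier to produce an identical file for both nodes. The sketch below is one possible way to do it. `gen_key` and `write_authkeys` are hypothetical helper names, and `openssl` is assumed to be installed as in the transcript above.

```shell
#!/bin/sh
# Sketch: generate a random md5 key and write a heartbeat authkeys file.
# gen_key and write_authkeys are hypothetical helper names.

# gen_key: print a random hex digest, produced the same way as above.
gen_key() {
    dd if=/dev/urandom bs=512 count=1 2>/dev/null | openssl md5 | awk '{print $NF}'
}

# write_authkeys FILE KEY: write an authkeys file that selects md5 (method 3).
write_authkeys() {
    file=$1
    key=$2
    # the subshell keeps the restrictive umask local to this write
    ( umask 077; printf 'auth 3\n3 md5 %s\n' "$key" > "$file" )
    # heartbeat expects authkeys to be readable by root only (mode 600)
    chmod 600 "$file"
}
```

A typical use would be `write_authkeys /etc/ha.d/authkeys "$(gen_key)"` on node1, followed by copying the resulting file unchanged to node2, since both nodes must share the same key.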

IV. Startup and Testing

    # ip addr    //node1
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:59:e7:04 brd ff:ff:ff:ff:ff:ff
        inet 192.168.2.10/24 brd 192.168.2.255 scope global eth0
        inet 192.168.2.200/24 brd 192.168.2.255 scope global secondary eth0
        inet6 fe80::20c:29ff:fe59:e704/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:59:e7:0e brd ff:ff:ff:ff:ff:ff
        inet 192.168.3.10/24 brd 192.168.3.255 scope global eth1
        inet6 fe80::20c:29ff:fe59:e70e/64 scope link
           valid_lft forever preferred_lft forever
    # service heartbeat status    //node1
    heartbeat OK [pid 6721 et al] is running on node1.a.com [node1.a.com]...
    # service heartbeat status    //node2
    heartbeat OK [pid 8684 et al] is running on node2.a.com [node2.a.com]...
    # service httpd status    //web1
    httpd (pid 3457) is running...
    # service httpd status    //web2
    httpd (pid 2424) is running...
    # ipvsadm -l    //node1
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.2.200:http rr
      -> 192.168.2.50:http            Route   1      0          0
      -> 192.168.2.60:http            Route   1      0          0
    # ipvsadm    //node2
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
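For reference, the IPVS table that ldirectord built on node1 corresponds roughly to the manual `ipvsadm` commands printed by the sketch below. It only prints the commands and does not modify the kernel table. The variable and function names are assumptions for the example, and the addresses are the ones used in this article.

```shell
#!/bin/sh
# Sketch: print ipvsadm commands equivalent to the table shown above.
# Nothing is executed; variable and function names are hypothetical.
VIP=192.168.2.200:80
REALS="192.168.2.50:80 192.168.2.60:80"

ipvs_commands() {
    # -A: add a virtual service, -t: TCP service, -s rr: round-robin scheduler
    echo "ipvsadm -A -t $VIP -s rr"
    for real in $REALS; do
        # -a: add a real server, -r: its address, -g: direct routing ("Route")
        echo "ipvsadm -a -t $VIP -r $real -g"
    done
}

ipvs_commands
```

With ldirectord running there is no need to issue these by hand. They are mainly useful for understanding the `ipvsadm -l` output or for testing LVS without ldirectord.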

1. Open the virtual address http://192.168.2.200 in a browser.

2. Simulate a failure of node1.

    [root@node1 ha.d]# cd /usr/share/heartbeat/
    [root@node1 heartbeat]# ./hb_standby
    Going standby [all].
    [root@node1 heartbeat]# ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    [root@node2 ha.d]# ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.2.200:http rr
      -> 192.168.2.50:http            Route   1      0          0
      -> 192.168.2.60:http            Route   1      0          0

3. Test again: the page is still served normally.

4. node1 takes the service back.

    [root@node1 heartbeat]# ./hb_takeover
    [root@node1 heartbeat]# ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.2.200:http rr
      -> 192.168.2.50:http            Route   1      0          0
      -> 192.168.2.60:http            Route   1      0          0
    [root@node2 ha.d]# ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
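Comparing the two `ipvsadm` listings by eye works for a quick test. For scripted checks, a small filter can tell whether a node currently holds the virtual service. The sketch below is one possible approach; `holds_service` is a hypothetical name, and it expects numeric output (`ipvsadm -ln`), where the port is shown as `80` rather than `http`.

```shell
#!/bin/sh
# holds_service VIP_PORT: read `ipvsadm -ln` output on stdin and succeed
# if a TCP virtual service for VIP_PORT is listed (hypothetical helper).
holds_service() {
    awk -v vs="$1" '
        $1 == "TCP" && $2 == vs { found = 1 }
        END { exit !found }
    '
}
```

On a director, `ipvsadm -ln | holds_service 192.168.2.200:80 && echo active` would print `active` only on the node that currently owns the service, which should be node2 after `hb_standby` and node1 after `hb_takeover`.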

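PS: The NAS here is only a simple test setup. The mount can also be made permanent and automatic; see my other blog post, "NFS简单配置" (Simple NFS Configuration).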
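As a rough illustration of a permanent mount, an entry can be added to /etc/fstab on web1 and web2. The sketch below appends the entry only if it is not already present. The mount options (`defaults,_netdev`) and the helper name `add_nfs_mount` are assumptions, not taken from the referenced post.

```shell
#!/bin/sh
# Sketch: add a persistent NFS mount for the web root (hypothetical helper).
# _netdev delays the mount until the network is available.
ENTRY='192.168.4.30:/webroot /var/www/html nfs defaults,_netdev 0 0'

# add_nfs_mount FSTAB: append ENTRY to FSTAB unless the exact line exists.
add_nfs_mount() {
    fstab=$1
    grep -qxF -- "$ENTRY" "$fstab" 2>/dev/null || echo "$ENTRY" >> "$fstab"
}
```

Running `add_nfs_mount /etc/fstab` as root followed by `mount -a` would mount the share immediately and again on every boot. Calling the function a second time leaves the file unchanged.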