HA Cluster: High-Availability LAMP with Corosync + NFS

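This experiment uses Corosync and NFS to make a LAMP stack highly available: when one node goes down, the other takes over.

Much of the underlying theory is covered in "HA Cluster: configuring heartbeat v2 with crm", so it is not repeated here; we go straight to the configuration.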

I. Prepare the environment

| Server | IP | Hostname |
| --- | --- | --- |
| httpd+php+mysql | 192.168.0.111 | node1.soul.com |
| httpd+php+mysql | 192.168.0.112 | node2.soul.com |
| NFS | 192.168.0.113 | nfs.soul.com |
| VIP | 192.168.0.222 | |
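The later steps address the peers by name (for example `node2` in the scp and ssh commands), so each machine needs to resolve these hostnames. A minimal sketch of one way to do that through a hosts file follows; the short aliases `node1`, `node2` and `nfs` and the helper names are assumptions for illustration, not something the original setup shows.

```shell
# Print hosts-file entries for the three machines in the table above.
# The short aliases are an assumption, inferred from the bare "node2"
# used in later scp/ssh commands.
print_hosts_entries() {
    cat <<'EOF'
192.168.0.111 node1.soul.com node1
192.168.0.112 node2.soul.com node2
192.168.0.113 nfs.soul.com nfs
EOF
}

# Append to the given hosts file only the entries it does not contain yet,
# so the function can be run repeatedly without creating duplicates.
add_missing_hosts() {
    hosts_file="$1"
    print_hosts_entries | while read -r line; do
        grep -qxF "$line" "$hosts_file" || echo "$line" >> "$hosts_file"
    done
}
```

On each machine this would be called as `add_missing_hosts /etc/hosts`.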

Synchronize the time

# For convenience a separate ansible machine is used here; it is not required for the experiment

[root@ansible ~]# ansible nodes -a "date"

node1.soul.com | success | rc=0 >>

Wed Apr 23 09:36:53 CST 2014

node2.soul.com | success | rc=0 >>

Wed Apr 23 09:36:53 CST 2014

nfs.soul.com | success | rc=0 >>

Wed Apr 23 09:36:53 CST 2014
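Comparing the `date` lines by eye works for three hosts. As a rough sketch of an automated check, the helper below (a hypothetical name, not part of the original setup) takes one epoch timestamp per host and reports the largest difference in seconds.

```shell
# max_skew: read one epoch timestamp per line on stdin and print the
# difference between the largest and the smallest value, in seconds.
max_skew() {
    sort -n | awk 'NR == 1 { min = $1 } { max = $1 } END { print max - min }'
}
```

A possible use is `for h in node1 node2 nfs; do ssh "$h" date +%s; done | max_skew`. Because the hosts are queried one after another, the ssh latency is included in the result, so a value of a second or two does not necessarily mean the clocks disagree.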

Install the software on the corresponding machines

[root@node1 ~]# rpm -q httpd php

httpd-2.2.15-29.el6.centos.x86_64

php-5.3.3-26.el6.x86_64

[root@node2 ~]# rpm -q httpd php

httpd-2.2.15-29.el6.centos.x86_64

php-5.3.3-26.el6.x86_64

# Required on both machines

[root@node1 ~]# chkconfig httpd off

[root@node1 ~]# chkconfig --list httpd

httpd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

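# Install mysql on each of the two machines

# Note: if the database is initialized on node1, do not initialize it again on node2

# The database data directory must be on the NFS share, and the share must be mounted before initializing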

[root@node1 ~]# mount

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

192.168.0.113:/webstore on /share type nfs (rw,vers=4,addr=192.168.0.113,clientaddr=192.168.0.111)

# As shown above; the same applies to both machines
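Since the database must only be initialized while the share is mounted, a small guard can make that precondition explicit. The sketch below parses `mount`-style output; the function name is hypothetical.

```shell
# is_nfs_mounted DIR: succeed if the `mount`-style text on stdin shows DIR
# mounted with an NFS filesystem type (nfs or nfs4).
is_nfs_mounted() {
    awk -v dir="$1" '
        $2 == "on" && $3 == dir && $4 == "type" && $5 ~ /^nfs4?$/ { found = 1 }
        END { exit !found }
    '
}
```

It could be combined with the initialization step as `mount | is_nfs_mounted /share && mysql_install_db --user=mysql --datadir=/share/data`, where `/share/data` is an assumed data directory; the original does not state the exact path.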

Install NFS

[root@nfs ~]# service nfs start

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

[root@nfs ~]#

[root@nfs ~]# exportfs -v

/webstore 192.168.0.111(rw,wdelay,no_root_squash,no_subtree_check)

/webstore 192.168.0.112(rw,wdelay,no_root_squash,no_subtree_check)
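The export list above is what `exportfs -v` reports; the `/etc/exports` file behind it is not shown. A sketch of a line that would plausibly produce it is generated below, assuming only `rw` and `no_root_squash` were set explicitly and the remaining options are defaults filled in by the NFS server. The helper name is hypothetical.

```shell
# exports_line DIR CLIENT...: print one /etc/exports line that shares DIR
# read-write with each CLIENT and without root squashing.
exports_line() {
    line="$1"
    shift
    for client in "$@"; do
        line="$line ${client}(rw,no_root_squash)"
    done
    echo "$line"
}

# Example call:
#   exports_line /webstore 192.168.0.111 192.168.0.112
```

The printed line would go into `/etc/exports` on the NFS server, followed by `exportfs -ra` to re-export.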

# Check from the httpd servers

[root@node1 ~]# showmount -e 192.168.0.113

Export list for 192.168.0.113:

/webstore 192.168.0.112,192.168.0.111

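# Once all services have been set up and tested, stop them and disable them at boot (httpd and mysqld on the two nodes)

# The NFS service on the NFS server must start at boot; otherwise the share cannot be mounted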

II. Install and configure corosync and pacemaker

# The steps below are performed on node1

[root@node1 ~]# rpm -q corosync pacemaker

corosync-1.4.1-17.el6.x86_64

pacemaker-1.1.10-14.el6.x86_64

# Configure corosync

[root@node1 ~]# cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

[root@node1 ~]# vim /etc/corosync/corosync.conf

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

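version: 2 # configuration version

secauth: on # enable authentication

threads: 0 # number of parallel threads used for authentication

interface {

ringnumber: 0 # ring number

bindnetaddr: 192.168.0.0 # network address to bind to

mcastaddr: 226.94.40.1 # multicast address

mcastport: 5405 # multicast port

ttl: 1 # time-to-live (hop limit) of the multicast packets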

}

}

logging {

fileline: off

to_stderr: no # log to standard error

to_logfile: yes # log to a file

to_syslog: no # send to syslog

logfile: /var/log/cluster/corosync.log # log file path

debug: off # whether to enable debug output

timestamp: on # whether to add timestamps

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}

service {

ver: 0 # version

name: pacemaker # start pacemaker automatically with corosync

}

aisexec {

user: root # user to run as

group: root

}
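Note that `bindnetaddr` is the network address, not the node's own IP, which is why it reads 192.168.0.0 for nodes at 192.168.0.111 and 192.168.0.112. The sketch below derives it from an address and a netmask; the 255.255.255.0 netmask is an assumption, since the original does not state it, and the function name is hypothetical.

```shell
# network_addr IP NETMASK: print the network address, i.e. the bitwise AND
# of the two dotted-quad values, octet by octet.
network_addr() {
    IFS=. read -r i1 i2 i3 i4 <<EOF
$1
EOF
    IFS=. read -r m1 m2 m3 m4 <<EOF
$2
EOF
    echo "$((i1 & m1)).$((i2 & m2)).$((i3 & m3)).$((i4 & m4))"
}
```

For example, `network_addr 192.168.0.111 255.255.255.0` gives the value used for `bindnetaddr` above.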

Generate the authentication key

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

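# /dev/random may run short of entropy, in which case you need to type on the keyboard; you can do that or use a pseudo-random source instead

# Typing is recommended when you have the time: it keeps the key secure and gives you a little exercise

# Copy authkey and corosync.conf to node2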

[root@node1 corosync]# ls

authkey corosync.conf.example service.d

corosync.conf corosync.conf.example.udpu uidgid.d

#

[root@node1 corosync]# scp -p authkey corosync.conf node2:/etc/corosync/

authkey 100% 128 0.1KB/s 00:00

corosync.conf 100% 520 0.5KB/s 00:00

[root@node1 corosync]#

# Mind the file permissions
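The permission note can be turned into a quick check. The sketch below assumes the key should be readable by its owner only (mode 0400, which is what `corosync-keygen` normally produces); the function name is hypothetical, and `stat -c` is the GNU coreutils form.

```shell
# check_authkey FILE: succeed only if FILE exists and its mode is exactly 0400.
check_authkey() {
    mode=$(stat -c '%a' "$1" 2>/dev/null) || return 1
    [ "$mode" = "400" ]
}
```

Running `check_authkey /etc/corosync/authkey` on both nodes after the copy confirms that `scp -p` preserved the mode.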

Start and test

[root@node1 ~]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 ~]# ssh node2 'service corosync start'

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 ~]#

# Verify that corosync started correctly

[root@node1 ~]# grep -e "Corosync Cluster Engine" -e "configuration file" /var/log/cluster/corosync.log

Apr 23 11:48:40 corosync [MAIN ] Corosync Cluster Engine ('1.4.1'): started and ready to provide service.

Apr 23 11:48:40 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

# Verify that the initial membership notifications were sent correctly

[root@node1 ~]# grep TOTEM /var/log/cluster/corosync.log

Apr 23 11:48:40 corosync [TOTEM ] Initializing transport (UDP/IP Multicast).

Apr 23 11:48:40 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Apr 23 11:48:40 corosync [TOTEM ] The network interface [192.168.0.111] is now up.

Apr 23 11:48:41 corosync [TOTEM ] Process pause detected for 879 ms, flushing membership messages.

Apr 23 11:48:41 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

Apr 23 11:48:53 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

# Check whether pacemaker started correctly

[root@node1 ~]# grep pcmk_startup /var/log/cluster/corosync.log

Apr 23 11:48:40 corosync [pcmk ] info: pcmk_startup: CRM: Initialized

Apr 23 11:48:40 corosync [pcmk ] Logging: Initialized pcmk_startup

Apr 23 11:48:40 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615

Apr 23 11:48:40 corosync [pcmk ] info: pcmk_startup: Service: 9

Apr 23 11:48:40 corosync [pcmk ] info: pcmk_startup: Local hostname: node1.soul.com

[root@node1 ~]#
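The three grep checks above can be bundled into one pass over the log. This is only a sketch: the marker strings are taken from the log lines shown above, and the function name is hypothetical.

```shell
# check_corosync_log FILE: report which startup markers appear in FILE and
# return non-zero if any of them is missing.
check_corosync_log() {
    log="$1"
    status=0
    for pattern in \
        "Corosync Cluster Engine" \
        "Successfully read main configuration file" \
        "Initializing transport" \
        "pcmk_startup: CRM: Initialized"
    do
        if grep -qF -- "$pattern" "$log"; then
            echo "ok: $pattern"
        else
            echo "MISSING: $pattern"
            status=1
        fi
    done
    return "$status"
}
```

On either node it would be run as `check_corosync_log /var/log/cluster/corosync.log`.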

Install the crmsh and pssh packages

[root@node1 ~]# scp -p pssh-2.3.1-2.el6.x86_64.rpm crmsh-1.2.6-4.el6.x86_64.rpm node2:/root

pssh-2.3.1-2.el6.x86_64.rpm 100% 49KB 48.8KB/s 00:00

crmsh-1.2.6-4.el6.x86_64.rpm 100% 484KB 483.7KB/s 00:00

[root@node1 ~]#

[root@node1 ~]# yum -y install crmsh-1.2.6-4.el6.x86_64.rpm pssh-2.3.1-2.el6.x86_64.rpm

[root@node2 ~]# yum -y install crmsh-1.2.6-4.el6.x86_64.rpm pssh-2.3.1-2.el6.x86_64.rpm

# Once installed, the crm command can be used to check the cluster

[root@node1 ~]# crm status

Last updated: Wed Apr 23 11:57:29 2014

Last change: Wed Apr 23 11:49:04 2014 via crmd on node2.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Online: [ node1.soul.com node2.soul.com ]

# Usage of crm:

[root@node1 ~]# crm

crm(live)# help

# This is what it looks like; it cannot be summed up in one sentence and takes time to explore

This is crm shell, a Pacemaker command line interface.

Available commands:

cib manage shadow CIBs

resource resources management

configure CRM cluster configuration

node nodes management

options user preferences

history CRM cluster history

site Geo-cluster support

ra resource agents information center

status show cluster status

help,? show help (help topics for list of topics)

end,cd,up go back one level

quit,bye,exit exit the program

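With everything in place, the next step is configuring the resources, which is also the most tedious part.

III. Configure the high-availability cluster resources

First, a simple plan of which resources to configure; their order matters later when they are started:

1. Configure the VIP

2. Configure the NFS shared storage

3. Configure the httpd service

4. Configure the mysql service

5. Configure a resource group and add the resources above to it

The commands and resource agents needed are roughly as follows:

# Resource classes: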

crm(live)# ra

crm(live)ra# classes

lsb

ocf / heartbeat pacemaker

service

stonith

crm(live)ra#

# Resource agents; the other classes can be listed the same way

crm(live)ra# list lsb

NetworkManager abrt-ccpp abrt-oops abrtd acpid

atd auditd autofs blk-availability bluetooth

corosync corosync-notifyd cpuspeed crond cups

dnsmasq firstboot haldaemon halt htcacheclean

httpd ip6tables iptables irqbalance kdump

killall libvirt-guests lvm2-lvmetad lvm2-monitor mdmonitor

messagebus mysqld netconsole netfs network

nfs nfslock ntpd ntpdate pacemaker

php-fpm portreserve postfix psacct quota_nld

rdisc restorecond rngd rpcbind rpcgssd

rpcidmapd rpcsvcgssd rsyslog sandbox saslauthd

single smartd spice-vdagentd sshd svnserve

sysstat udev-post wdaemon winbind wpa_supplicant

# Detailed information

crm(live)ra# info lsb:nfs

lsb:nfs

NFS is a popular protocol for file sharing across networks.

This service provides NFS server functionality, which is \

configured via the /etc/exports file.

Operations' defaults (advisory minimum):

start timeout=15

stop timeout=15

status timeout=15

restart timeout=15

force-reload timeout=15

monitor timeout=15 interval=15

With that overview, add the resources:

# First set a few global properties

# Disable stonith, because no stonith device is available here

crm(live)configure# property stonith-enabled=false

crm(live)configure# verify # validate the change

# Ignore the loss of quorum (take no action when the quorum requirement is not met)

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# verify

crm(live)configure# commit # commit once verified

# View the configuration

crm(live)configure# show

node node1.soul.com

node node2.soul.com

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"
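The same two properties can also be set without entering the interactive shell, because `crm` accepts a full command line in one shot. The sketch below wraps that in a dry-run helper so the commands can be previewed first; `run`, `set_cluster_properties` and the `DRY_RUN` variable are hypothetical names introduced for illustration.

```shell
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Apply the two global properties used in this walkthrough.
set_cluster_properties() {
    run crm configure property stonith-enabled=false
    run crm configure property no-quorum-policy=ignore
}
```

`DRY_RUN=1 set_cluster_properties` only prints the two commands; without `DRY_RUN` they are executed against the live cluster.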

Configure the VIP

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=192.168.0.222 op monitor interval=30s timeout=30s on-fail=restart

# The parameters can be looked up with help

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# show

node node1.soul.com

node node2.soul.com

primitive webip ocf:heartbeat:IPaddr \

params ip="192.168.0.222" \

op monitor interval="30s" timeout="30s" on-fail="restart"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

# After the commit, the status can be checked

crm(live)# status

Last updated: Wed Apr 23 12:26:20 2014

Last change: Wed Apr 23 12:25:22 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

1 Resources configured

Online: [ node1.soul.com node2.soul.com ]

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

Configure the NFS shared storage

crm(live)configure# primitive webstore ocf:heartbeat:Filesystem \

params device="192.168.0.113:/webstore" \

directory="/share" fstype="nfs" \

op monitor interval=40s timeout=40s \

op start timeout=60s op stop timeout=60s

crm(live)configure# verify

crm(live)configure# show

node node1.soul.com

node node2.soul.com

primitive webip ocf:heartbeat:IPaddr \

params ip="192.168.0.222" \

op monitor interval="30s" timeout="30s" on-fail="restart"

primitive webstore ocf:heartbeat:Filesystem \

params device="192.168.0.113:/webstore" directory="/share" fstype="nfs" \

op monitor interval="40s" timeout="40s" \

op start timeout="60s" interval="0" \

op stop timeout="60s" interval="0"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

Configure the httpd service

crm(live)configure# primitive webserver lsb:httpd op monitor interval=30s timeout=30s on-fail=restart

crm(live)configure# verify

crm(live)configure# commit

crm(live)# status

Last updated: Wed Apr 23 13:20:54 2014

Last change: Wed Apr 23 13:20:46 2014 via crmd on node2.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

3 Resources configured

Online: [ node1.soul.com node2.soul.com ]

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webstore (ocf::heartbeat:Filesystem): Started node2.soul.com

webserver (lsb:httpd): Started node1.soul.com

Configure the mysql service

crm(live)configure# primitive webdb lsb:mysqld op monitor interval=30s timeout=30s on-fail=restart

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# cd

crm(live)# status

Last updated: Wed Apr 23 13:25:38 2014

Last change: Wed Apr 23 13:25:17 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1.soul.com node2.soul.com ]

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webstore (ocf::heartbeat:Filesystem): Started node2.soul.com

webserver (lsb:httpd): Started node1.soul.com

webdb (lsb:mysqld): Started node2.soul.com

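Configure a resource group and add the resources above to it

# The status above shows that the resources are spread across the two machines automatically

# so they all need to be placed in one group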

crm(live)configure# group webcluster webip webstore webserver webdb

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# cd

crm(live)# status

Last updated: Wed Apr 23 13:30:25 2014

Last change: Wed Apr 23 13:29:55 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1.soul.com node2.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webstore (ocf::heartbeat:Filesystem): Started node1.soul.com

webserver (lsb:httpd): Started node1.soul.com

webdb (lsb:mysqld): Started node1.soul.com

# Once the group is added, the resources move to the same node automatically
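Whether all resources ended up together can also be checked mechanically from the `crm status` text. The sketch below relies only on the `Started <node>` suffix of the resource lines shown above; both function names are hypothetical.

```shell
# resource_nodes: read `crm status` text on stdin and print each distinct
# node that has at least one resource reported as Started.
resource_nodes() {
    awk 'NF >= 2 && $(NF-1) == "Started" { print $NF }' | sort -u
}

# all_on_one_node: succeed if every started resource is on the same node.
all_on_one_node() {
    [ "$(resource_nodes | wc -l)" -eq 1 ]
}
```

For instance, `crm status | all_on_one_node && echo "resources are co-located"` would print nothing for the status output taken before the group was created and print the message afterwards.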

Now define an order constraint so that the resources start and stop in the specified order

crm(live)configure# help order

Usage:

...............

order <id> {kind|<score>}: <rsc>[:<action>] <rsc>[:<action>] ...

[symmetrical=<bool>]

kind :: Mandatory | Optional | Serialize

crm(live)configure# order ip_store_http_db Mandatory: webip webstore webserver webdb

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# show xml

<?xml version="1.0" ?>

<cib num_updates="4" dc-uuid="node2.soul.com" update-origin="node1.soul.com" crm_feature_set="3.0.7" validate-with="pacemaker-1.2" update-client="cibadmin" epoch="14" admin_epoch="0" cib-last-written="Wed Apr 23 13:37:27 2014" have-quorum="1">

<configuration>

<crm_config>

<cluster_property_set id="cib-bootstrap-options">

<nvpair id="cib-bootstrap-options-dc-version" name="dc-version" value="1.1.10-14.el6-368c726"/>

<nvpair id="cib-bootstrap-options-cluster-infrastructure" name="cluster-infrastructure" value="classic openais (with plugin)"/>

<nvpair id="cib-bootstrap-options-expected-quorum-votes" name="expected-quorum-votes" value="2"/>

<nvpair name="stonith-enabled" value="false" id="cib-bootstrap-options-stonith-enabled"/>

<nvpair name="no-quorum-policy" value="ignore" id="cib-bootstrap-options-no-quorum-policy"/>

<nvpair id="cib-bootstrap-options-last-lrm-refresh" name="last-lrm-refresh" value="1398230446"/>

</cluster_property_set>

</crm_config>

<nodes>

<node id="node2.soul.com" uname="node2.soul.com"/>

<node id="node1.soul.com" uname="node1.soul.com"/>

</nodes>

<resources>

<group id="webcluster">

<primitive id="webip" class="ocf" provider="heartbeat" type="IPaddr">

<instance_attributes id="webip-instance_attributes">

<nvpair name="ip" value="192.168.0.222" id="webip-instance_attributes-ip"/>

</instance_attributes>

<operations>

<op name="monitor" interval="30s" timeout="30s" on-fail="restart" id="webip-monitor-30s"/>

</operations>

</primitive>

<primitive id="webstore" class="ocf" provider="heartbeat" type="Filesystem">

<instance_attributes id="webstore-instance_attributes">

<nvpair name="device" value="192.168.0.113:/webstore" id="webstore-instance_attributes-device"/>

<nvpair name="directory" value="/share" id="webstore-instance_attributes-directory"/>

<nvpair name="fstype" value="nfs" id="webstore-instance_attributes-fstype"/>

</instance_attributes>

<operations>

<op name="monitor" interval="40s" timeout="40s" id="webstore-monitor-40s"/>

<op name="start" timeout="60s" interval="0" id="webstore-start-0"/>

<op name="stop" timeout="60s" interval="0" id="webstore-stop-0"/>

</operations>

</primitive>

<primitive id="webserver" class="lsb" type="httpd">

<operations>

<op name="monitor" interval="30s" timeout="30s" on-fail="restart" id="webserver-monitor-30s"/>

</operations>

</primitive>

<primitive id="webdb" class="lsb" type="mysqld">

<operations>

<op name="monitor" interval="30s" timeout="30s" on-fail="restart" id="webdb-monitor-30s"/>

</operations>

</primitive>

</group>

</resources>

<constraints>

<rsc_order id="ip_store_http_db" kind="Mandatory">

<resource_set id="ip_store_http_db-0">

<resource_ref id="webip"/>

<resource_ref id="webstore"/>

<resource_ref id="webserver"/>

<resource_ref id="webdb"/>

</resource_set>

</rsc_order>

</constraints>

</configuration>

</cib>

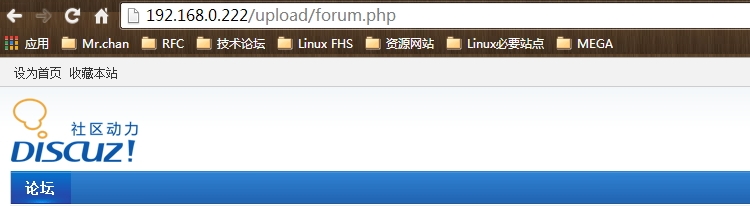

IV. Install a forum to test

# First check which node the resources are currently running on

[root@node1 ~]# crm status

Last updated: Wed Apr 23 13:52:07 2014

Last change: Wed Apr 23 13:37:27 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1.soul.com node2.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webstore (ocf::heartbeat:Filesystem): Started node1.soul.com

webserver (lsb:httpd): Started node1.soul.com

webdb (lsb:mysqld): Started node1.soul.com

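# They are all running on node1; now edit the httpd configuration file on node1

# Change the web document directory to /share/www on the NFS share

# Then create a file in /share/www for testing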

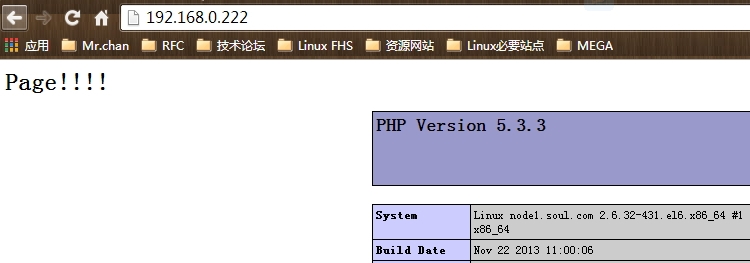

[root@node1 www]# vim /share/www/index.php

<h1>Page!!!!</h1>

<?php

phpinfo();

?>

# Save and test

Access works as expected. A forum can now be installed for testing.

Test resource failover:

[root@node1 ~]# crm status

Last updated: Wed Apr 23 15:15:01 2014

Last change: Wed Apr 23 15:14:42 2014 via crm_attribute on node2.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Online: [ node1.soul.com node2.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webstore (ocf::heartbeat:Filesystem): Started node1.soul.com

webserver (lsb:httpd): Started node1.soul.com

webdb (lsb:mysqld): Started node1.soul.com

# The resources are currently running on node1; now put node1 into standby:

[root@node1 ~]# crm node standby node1.soul.com

[root@node1 ~]# crm status

Last updated: Wed Apr 23 15:20:51 2014

Last change: Wed Apr 23 15:20:41 2014 via crm_attribute on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

4 Resources configured

Node node1.soul.com: standby

Online: [ node2.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node2.soul.com

webstore (ocf::heartbeat:Filesystem): Started node2.soul.com

webserver (lsb:httpd): Started node2.soul.com

webdb (lsb:mysqld): Started node2.soul.com

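# Everything has moved to node2

# Refresh the web page to check

Everything tests fine, which completes the high-availability LAMP configuration. If you have questions, leave a comment to discuss.

Corrections are welcome if you spot any errors.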
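The standby test can be scripted as one cycle. The sketch below also brings the node back online at the end with `crm node online`, a step the walkthrough above does not show. The function name and the `CRM` and `WAIT` variables are hypothetical; `CRM` exists only so that the command can be swapped out for a dry run.

```shell
# failover_cycle NODE: put NODE into standby, wait for the resources to
# move, show the cluster status, then bring NODE back online.
# CRM  - command to invoke (default: crm)
# WAIT - seconds to wait after standby (default: 30)
failover_cycle() {
    node="$1"
    crm_cmd="${CRM:-crm}"
    pause="${WAIT:-30}"
    "$crm_cmd" node standby "$node"
    sleep "$pause"
    "$crm_cmd" status
    "$crm_cmd" node online "$node"
}
```

`CRM=echo WAIT=0 failover_cycle node1.soul.com` prints the three crm subcommands without touching the cluster; dropping the two overrides runs the cycle for real.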